Types of AI Models: Your Ultimate Guide

From virtual assistants like Alexa and large language models such as Llama or GPT to self-driving cars, artificial intelligence is reshaping our daily lives. As AI models gain prominence across nearly every industry, their transformative impact is increasingly evident.

But what exactly makes AI so powerful? What processes enable machines to think, learn, and even outperform humans at certain tasks?

This article explores the various types of AI models that can benefit your business and shows how to build an LLM pipeline that uses these models.

TL;DR: Types of AI Models at a Glance

- AI models are programs that learn from data to perform tasks autonomously, powering applications from virtual assistants to self-driving cars

- Main categories include supervised learning (labeled data), unsupervised learning (pattern discovery), and reinforcement learning (learning via rewards). Deep learning is a specialized subset of machine learning that uses complex neural networks.

- Natural Language Processing models like transformers enable human-computer communication through text understanding and generation

- Advanced reasoning models now demonstrate sophisticated problem-solving capabilities, though these do not yet closely mirror human cognitive processes

- Modern deployment requires optimization techniques like parameter-efficient fine-tuning and comprehensive monitoring for production reliability

What Is an AI Model?

An AI (Artificial Intelligence) model is a program that performs specific tasks autonomously, without manual intervention. Rather than following hand-written rules, it learns to solve problems and make predictions by analyzing large datasets with mathematical techniques and algorithms.

For example, to teach an AI model to distinguish between pictures of phones and laptops, you would train it on many labeled images of each. The model learns patterns—size, keyboard, materials, screen design—and uses them to predict whether a new, unseen image is a phone or a laptop.

Model accuracy generally improves with more training data of higher quality. Beyond image recognition, artificial intelligence models power workflows such as natural language processing (NLP), anomaly detection, predictive modeling, forecasting, and robotics. Modern AI architectures have evolved to include foundation models that serve as versatile starting points for various tasks through transfer learning, significantly reducing the data and computational resources needed for specialized applications.

How Do You Create an AI Model?

1. Identify the Problem and Goals

Define the business problem—classification, regression, recommendation—and outline objectives and potential challenges.

2. Data Preparation and Gathering

Collect datasets that reflect real-world scenarios. Clean, preprocess, and label the data. Modern approaches increasingly leverage federated learning techniques that enable collaborative model training across organizations without sharing raw data, particularly valuable in privacy-sensitive domains.

3. Design the Model Architecture

Choose algorithms (rule-based, deep learning, NLP, etc.) appropriate to the problem and experiment with configurations. Consider foundation models as starting points that can be fine-tuned for specific tasks, reducing development time and computational requirements.

4. Split Data for Training, Validation, and Testing

Why split the data?

- Training set (70%) - teaches the model patterns

- Validation set (15%) - tunes hyperparameters and helps detect overfitting

- Testing set (15%) - evaluates final performance on unseen data

Key point: Keep the test set completely separate until final evaluation to ensure unbiased performance assessment.
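A minimal sketch of the 70/15/15 split in plain Python, assuming the examples fit in memory and can be shuffled independently (time-series data would usually be split chronologically instead). The function name `train_val_test_split` is illustrative, not a specific library's API.

```python
import random

def train_val_test_split(examples, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle once, then cut into train / validation / test partitions.
    Whatever remains after the first two slices becomes the test set."""
    shuffled = list(examples)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)      # fixed seed makes the split reproducible
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]          # held back until the final evaluation
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Because the three slices come from one shuffled list, no example can land in more than one partition.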

5. Model Training

Feed training data into the model, use back-propagation to compute the gradient of the loss with respect to each parameter, and update the parameters with an optimizer such as gradient descent. Modern training approaches include self-supervised learning techniques that generate implicit labels from the structure of the data, reducing manual annotation requirements while maintaining high accuracy.
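For a single-layer model such as logistic regression, back-propagation reduces to one application of the chain rule, so the full training loop fits in a few lines. This is a toy sketch under stated assumptions (one numeric feature, binary labels, batch gradient descent with a fixed learning rate); frameworks such as PyTorch or TensorFlow compute these gradients automatically for multi-layer networks.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.5, epochs=500):
    """Fit p(y=1 | x) = sigmoid(w*x + b) with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(w * x + b)   # forward pass
            err = p - y              # dLoss/dz for binary cross-entropy
            grad_w += err * x        # chain rule: dz/dw = x
            grad_b += err            # chain rule: dz/db = 1
        w -= lr * grad_w / n         # gradient-descent parameter update
        b -= lr * grad_b / n
    return w, b

xs = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]   # toy feature values
ys = [0, 0, 0, 1, 1, 1]                  # toy labels
w, b = train_logistic_regression(xs, ys)
predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in xs]
print(predictions)  # [0, 0, 0, 1, 1, 1]
```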

6. Hyperparameter Tuning

Balance underfitting and overfitting by adjusting batch size, learning rate, regularization, and other hyperparameters. Parameter-efficient fine-tuning methods like Low-Rank Adaptation (LoRA) now enable customization of large models by updating only a small fraction of parameters, dramatically reducing computational costs.
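A minimal illustration of hyperparameter tuning, assuming exhaustive grid search (random or Bayesian search are common alternatives): every combination is trained, scored on the validation set, and the best one is kept, while the test set stays untouched. The toy model is a hypothetical one-weight ridge regression whose only hyperparameter, `lam`, is the regularization strength.

```python
from itertools import product

def grid_search(grid, train_fn, val_loss_fn):
    """Train one model per hyperparameter combination and keep the
    combination with the lowest validation loss."""
    best_params, best_loss = None, float("inf")
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        model = train_fn(**params)
        loss = val_loss_fn(model)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

# Toy data for a 1-D ridge regression through the origin: w = sum(xy) / (sum(x^2) + lam)
train_x, train_y = [1, 2, 3, 4], [1.1, 1.9, 3.2, 3.9]
val_x, val_y = [5, 6], [5.0, 6.0]

def train_ridge(lam):
    sxy = sum(x * y for x, y in zip(train_x, train_y))
    sxx = sum(x * x for x in train_x)
    return sxy / (sxx + lam)   # the "model" is just the learned weight

def val_mse(w):
    return sum((w * x - y) ** 2 for x, y in zip(val_x, val_y)) / len(val_x)

best, loss = grid_search({"lam": [0.0, 0.1, 1.0, 10.0]}, train_ridge, val_mse)
print(best)  # {'lam': 0.1}
```

Adding more keys to the grid (for example a learning rate) multiplies the number of training runs, which is why random search is often preferred for large search spaces.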

7. Model Assessment

Evaluate with validation data using metrics like accuracy, precision, recall, and F1-score. Include interpretability assessments using techniques like SHAP values or attention visualization to help make model decisions explainable and auditable.
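The four metrics named above can be computed directly from confusion-matrix counts. A plain-Python sketch for binary labels; the helper name is illustrative, and libraries such as scikit-learn provide equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0   # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0      # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```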

8. Testing and Deployment

Test on unseen data, then deploy if performance meets requirements. Follow frameworks such as the NIST AI Risk Management Framework (AI RMF). Implement monitoring systems for concept drift detection and automated retraining triggers to maintain model performance over time.

9. Ongoing Evaluation and Enhancement

Monitor, gather feedback, and update the model to adapt to changing data patterns. Establish continuous learning pipelines that can incorporate new data while preventing catastrophic forgetting of previously learned knowledge.

What Are the Main Categories of Machine Learning Models?

Machine Learning (ML) focuses on models that learn from data to recognize patterns and make predictions. This foundational category encompasses various approaches that enable computers to improve their performance on specific tasks through experience.

Key Algorithms

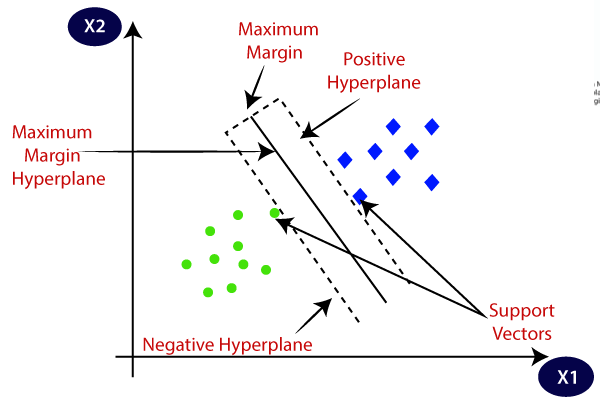

- Support Vector Machines (SVM) – find the hyperplane that separates data classes with the widest possible margin.

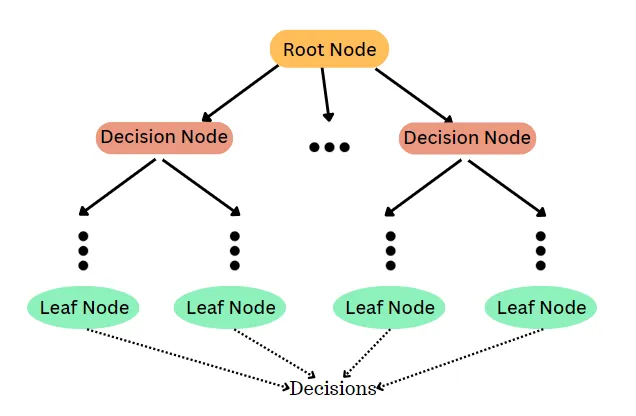

- Decision Trees – tree-structured models that split data by feature decisions.
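To make the decision-tree idea concrete, the sketch below finds a single split: it tries each threshold on one numeric feature and keeps the one that minimizes weighted Gini impurity, one common split criterion (entropy is another). A full tree applies this recursively to each child node. The screen-size data is made up to echo the phone-versus-laptop example earlier.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, ys):
    """Return (threshold, weighted impurity) for the best 'x <= threshold' split."""
    best_threshold, best_impurity = None, float("inf")
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if weighted < best_impurity:
            best_threshold, best_impurity = threshold, weighted
    return best_threshold, best_impurity

# Toy data: screen size in inches -> device type
sizes = [5.8, 6.1, 6.7, 13.3, 14.0, 15.6]
labels = ["phone", "phone", "phone", "laptop", "laptop", "laptop"]
print(best_split(sizes, labels))  # (6.7, 0.0)
```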

Use Cases

- Finance: fraud detection, algorithmic trading.

- E-commerce: product or content recommendations.

- Healthcare: diagnostic assistance, drug discovery acceleration.

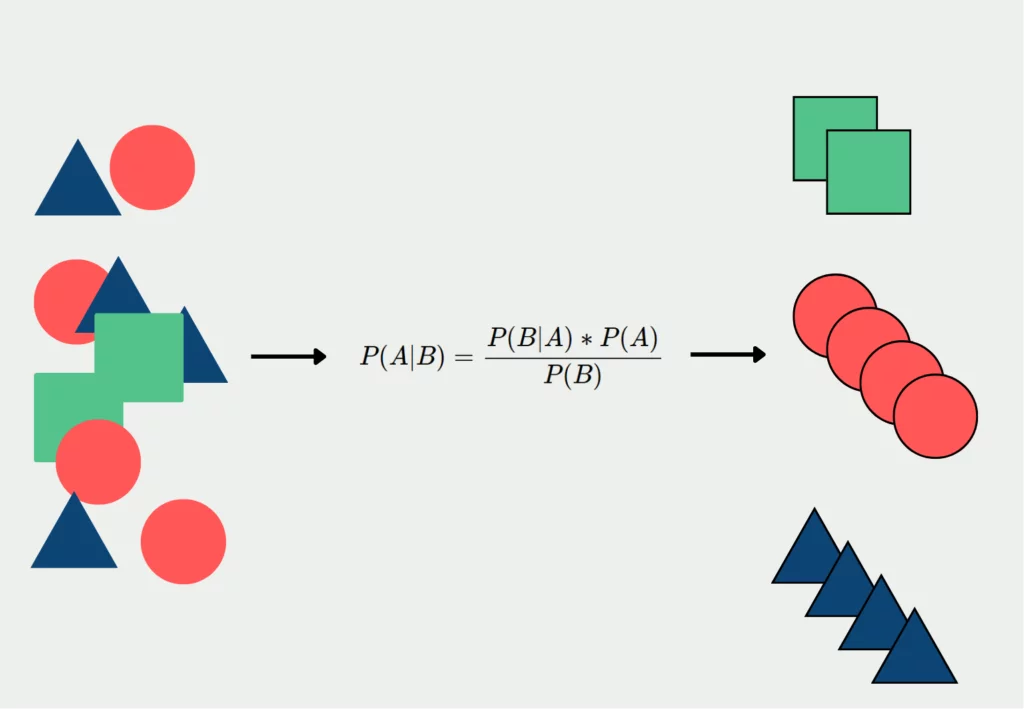

How Do Supervised Learning Models Work with Labeled Data?

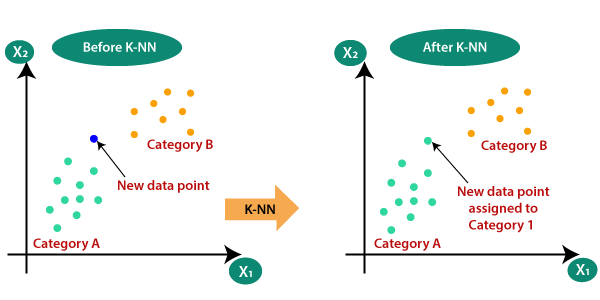

Models are trained on labeled data (input–output pairs), making supervised learning ideal for scenarios where you have examples of correct answers and want the system to learn the underlying patterns.

Key Algorithms

- K-Nearest Neighbors (k-NN) – predicts based on similarity to nearest data points.

- Naive Bayes – probabilistic classifier assuming feature independence.
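A minimal k-NN classifier in plain Python, assuming numeric features and Euclidean distance (other distance metrics are common, and real implementations usually scale features first). The toy points and labels are invented for illustration.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    by_distance = sorted(
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(points, labels, (2.0, 2.0)))  # A
print(knn_predict(points, labels, (8.0, 9.0)))  # B
```

k-NN does no training at all: every prediction scans the stored examples, which is why large deployments rely on approximate nearest-neighbor indexes.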

Use Cases

- Classification: image recognition, sentiment analysis, medical diagnosis.

- Regression: house-price prediction, sales forecasting, risk assessment.

- Time series prediction: stock market analysis, demand forecasting.

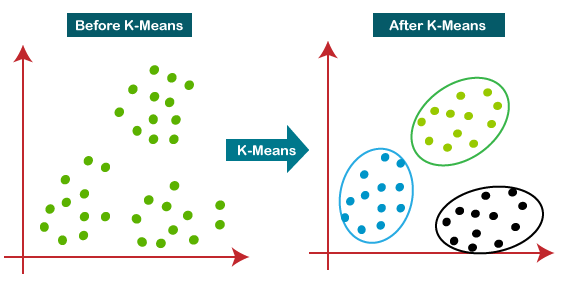

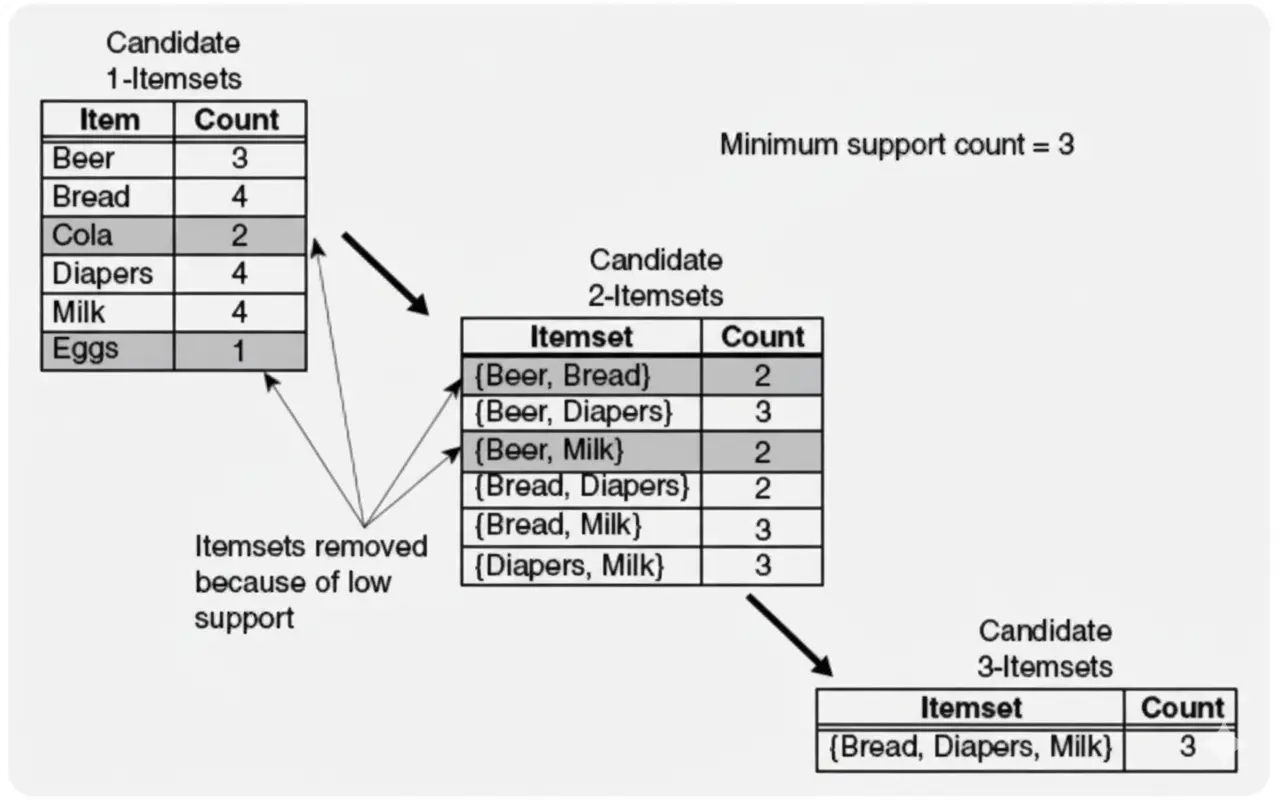

What Makes Unsupervised Learning Models Unique?

Models discover patterns in unlabeled data, making them particularly valuable for exploratory data analysis and discovering hidden structures that humans might miss.

Key Algorithms

- K-Means Clustering – groups data into K clusters.

- Apriori Algorithm – mines frequent itemsets and association rules.
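The K-Means loop can be sketched in a few lines using Lloyd's algorithm. This sketch assumes a naive initialization (the first K points); libraries usually use smarter seeding such as k-means++ plus several restarts, because results depend on the starting centroids.

```python
import math

def kmeans(points, k, iterations=100):
    """Lloyd's algorithm: assign each point to its nearest centroid, then
    move each centroid to the mean of its assigned points, until stable."""
    centroids = [tuple(p) for p in points[:k]]   # naive init: the first k points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            (tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
             if cluster else centroids[i])       # keep an empty cluster's old centroid
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:           # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, clusters

points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (2.0, 1.5), (9.0, 8.0)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
```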

Use Cases

- Customer segmentation for targeted marketing campaigns.

- Dimensionality reduction for complex datasets and visualization.

- Anomaly detection in cybersecurity and fraud prevention.

- Feature learning for extracting meaningful representations from raw data.

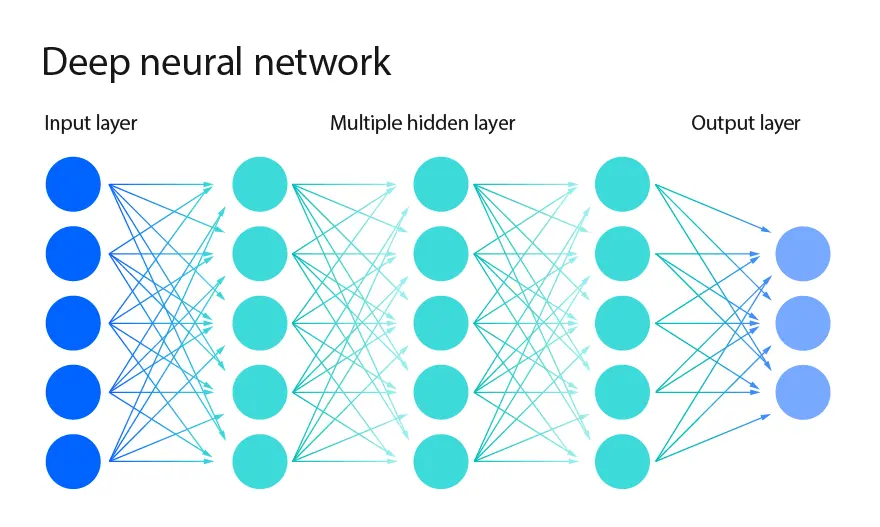

How Do Deep Learning Neural Networks Model Complex Patterns?

Neural networks with many layers model complex patterns in large datasets, delivering major performance gains on tasks such as image and speech recognition, where traditional algorithms struggled.

Key Architectures

- Convolutional Neural Networks (CNNs) – excel at image processing and computer vision tasks.

- Recurrent Neural Networks (RNNs) – handle sequential data and temporal dependencies.
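The core CNN operation is sliding a small filter over an image and taking a dot product at each position. The sketch below implements "valid" 2-D cross-correlation, which is what deep learning libraries call convolution; the hand-picked edge-detection kernel is an assumption for illustration, since a trained CNN learns its kernel weights from data.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: the kernel never hangs off the image edge,
    so the output shrinks by (kernel size - 1) in each dimension."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 image that is dark on the left and bright on the right
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Vertical-edge filter: responds where brightness increases from left to right
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The nonzero middle column marks the edge; stacking many such learned filters, with nonlinearities in between, is what lets CNNs build up from edges to shapes to objects.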

Use Cases

- Speech recognition (voice-to-text, digital assistants).

- Autonomous vehicles (object detection, path planning).

- Drug discovery and protein structure prediction.

- Creative content generation, including art, music, and writing.

What Role Do Natural Language Processing Models Play in AI?

NLP enables machines to analyze, understand, and generate human language, bridging the gap between human communication and machine processing capabilities.

Key Techniques

- Transformers – self-attention models such as BERT and GPT that revolutionized language understanding.

- Word embeddings – dense vector representations such as Word2Vec and GloVe that capture semantic relationships between words.

- Large Language Models (LLMs) – foundation models trained on massive text corpora for general language understanding.
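Self-attention, the mechanism behind transformers, scores every token against every other token and uses those scores to mix their value vectors. Below is a toy sketch of scaled dot-product attention in plain Python. It assumes the queries, keys, and values are the raw token vectors; real transformers first apply learned projection matrices and use multiple attention heads.

```python
import math

def softmax(xs):
    m = max(xs)                                  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted average of the
    value vectors, with weights softmax(q . k / sqrt(d)) over all keys."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three tokens with 2-dimensional embeddings (toy numbers, not from a real model)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(tokens, tokens, tokens)          # self-attention: Q, K, V from the same tokens
for row in out:
    print([round(v, 3) for v in row])
```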

Use Cases

- Machine translation (e.g., Google Translate), with quality approaching human level for some language pairs.

- Named-entity recognition (NER) for information extraction from documents.

- Content generation for marketing, documentation, and creative writing.

- Natural language shopping, where a conversational AI agent can sell, support, and engage customers with human-like interactions.

- Code generation and software development assistance.

What Are Advanced Reasoning and Agentic Artificial Intelligence Models?

The latest generation of artificial intelligence models demonstrates stronger multi-step reasoning, enabling more autonomous decision-making and complex problem-solving across multiple domains, although their reasoning does not closely mirror human cognitive processes.

Reasoning Architecture Innovations

Modern reasoning models like OpenAI's o1 and Microsoft's Phi-4-reasoning series use chain-of-thought processing that decomposes complex queries into logical substeps before generating solutions. Some are trained with "explanation tuning," in which smaller models imitate the reasoning traces of larger teacher models, achieving strong performance on challenging benchmarks while using significantly fewer computational resources.

The technical foundation includes iterative reasoning frameworks in which a model chooses an action, observes feedback from its environment, and adjusts its next steps accordingly. This methodical, step-by-step progression suits applications such as legal contract analysis, medical diagnosis, and scientific research.

Agentic System Capabilities

Agentic AI systems autonomously orchestrate multi-step workflows by analyzing conditions, simulating outcomes, and adjusting tactics in real time based on performance metrics. Unlike traditional chatbots that only respond to queries, customer-service agents at companies like Amazon can resolve issues on their own, for example by cross-referencing purchase histories, initiating refunds, and rerouting shipments without human intervention.

These systems share common infrastructure patterns, such as a cloud-based orchestration layer that coordinates specialized sub-agents, each handling a discrete part of the workflow. The "uber agent" architecture proposed by leading vendors places these specialist sub-agents under a coordinating agent with centralized governance protocols, enabling what industry leaders call the "digital workforce."
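The orchestrator and sub-agent pattern can be sketched as plain functions. Everything here is hypothetical: the sub-agents, the order data, and the refund limit standing in for a governance rule. In a production agent, a language model would usually decide which tool or sub-agent to call; fixed rules replace that decision so the example stays deterministic.

```python
from dataclasses import dataclass, field

REFUND_LIMIT = 100.0  # hypothetical governance rule enforced by the orchestrator

@dataclass
class Ticket:
    order_id: str
    issue: str
    log: list = field(default_factory=list)

# Hypothetical specialist sub-agents. In a real system each might wrap a
# language model plus the tools (order database, payments, shipping) it may use.
def order_history_agent(ticket, orders):
    order = orders[ticket.order_id]
    ticket.log.append(f"looked up order {ticket.order_id}: {order['status']}")
    return order

def refund_agent(ticket, order):
    ticket.log.append(f"refunded {order['amount']:.2f}")

def shipping_agent(ticket, order):
    ticket.log.append(f"rerouted shipment to {order['new_address']}")

def orchestrator(ticket, orders):
    """Toy 'uber agent': gathers context, routes the ticket to a specialist,
    and escalates to a human when a governance rule would be broken."""
    order = order_history_agent(ticket, orders)
    if ticket.issue == "damaged":
        if order["amount"] > REFUND_LIMIT:
            ticket.log.append("escalated to human: refund exceeds limit")
        else:
            refund_agent(ticket, order)
    elif ticket.issue == "wrong_address":
        shipping_agent(ticket, order)
    else:
        ticket.log.append("escalated to human: unrecognized issue")
    return ticket.log

orders = {
    "A1": {"status": "delivered", "amount": 40.0, "new_address": None},
    "B2": {"status": "in_transit", "amount": 250.0, "new_address": "12 Main St"},
}
print(orchestrator(Ticket("A1", "damaged"), orders))
# ['looked up order A1: delivered', 'refunded 40.00']
print(orchestrator(Ticket("B2", "damaged"), orders))
# ['looked up order B2: in_transit', 'escalated to human: refund exceeds limit']
```

Keeping the governance check in the orchestrator, rather than inside each sub-agent, is one way to make policy changes in a single place.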

How Do Model Optimization and Deployment Best Practices Enhance AI Implementation?

Successful deployment of AI models requires optimization techniques and robust operational frameworks that keep performance reliable while managing computational costs and maintaining security standards throughout the model lifecycle.

Efficiency Optimization Strategies

Small Language Models (SLMs) challenge the traditional assumption that larger models always perform better, achieving comparable results on some tasks with a much smaller computational footprint. Microsoft reports that its Phi-4-mini-reasoning model outperforms considerably larger models on math-reasoning benchmarks, using synthetic curriculum training and parameter-efficient fine-tuning techniques.

Parameter-efficient methods like Low-Rank Adaptation (LoRA) enable enterprise customization by training only a small set of added low-rank weights while the original model weights stay frozen, reducing training costs while largely maintaining performance. These techniques can allow organizations like Mayo Clinic to deploy specialized diagnostic assistants in hours rather than weeks, using far fewer GPU resources than full fine-tuning.
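LoRA's core idea fits in one formula: the frozen weight matrix W is augmented with a trainable low-rank product, W + (alpha / r) * B A. The sketch below uses plain Python with toy matrices to show that update and the parameter savings. The function names are illustrative; real implementations (for example, Hugging Face's PEFT library) apply this inside specific layers of a network.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_merged_weight(W, A, B, alpha):
    """Return W + (alpha / r) * (B @ A).

    W (d x k) is the frozen pretrained weight; B (d x r) and A (r x k) are the
    only trainable matrices, with rank r much smaller than d and k.
    """
    r = len(A)
    delta = matmul(B, A)
    scale = alpha / r
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
            for i in range(len(W))]

def lora_trainable_fraction(d, k, r):
    """Trainable parameters with LoRA, r * (d + k), relative to the d * k
    parameters updated by full fine-tuning of the same matrix."""
    return r * (d + k) / (d * k)

W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 2.0]]            # rank r = 1
B_init = [[0.0], [0.0]]     # LoRA starts B at zero, so the model is unchanged at first
print(lora_merged_weight(W, A, B_init, alpha=1.0))   # [[1.0, 0.0], [0.0, 1.0]]
print(lora_trainable_fraction(4096, 4096, 8))        # 0.00390625
```

For a 4096 x 4096 layer with rank 8, only about 0.39% as many parameters are trained, and the merged weight can be computed once after training so inference costs nothing extra.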

Production Deployment Frameworks

Modern deployment strategies embrace progressive delivery patterns that mitigate risk while optimizing performance. In shadow deployment, a new model version receives a copy of live traffic alongside the existing system, but its predictions are not served to users, so teams can validate accuracy and detect drift before full rollout. Multi-armed bandit frameworks dynamically allocate traffic among model versions based on real-time performance metrics, shifting traffic toward the best performer as data patterns evolve.
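A multi-armed bandit router can be sketched with the epsilon-greedy strategy, one of the simplest bandit algorithms (Thompson sampling and UCB are common alternatives). The model names and their success rates are simulated assumptions; in production the reward would come from a real-time metric such as click-through or task success.

```python
import random

def epsilon_greedy_router(reward_fns, n_requests=10_000, epsilon=0.1, seed=0):
    """Route each request to one model version. With probability epsilon
    (or until every version has been tried) explore a random version;
    otherwise exploit the version with the best average reward so far."""
    rng = random.Random(seed)
    names = list(reward_fns)
    counts = {name: 0 for name in names}
    totals = {name: 0.0 for name in names}
    for _ in range(n_requests):
        if rng.random() < epsilon or not all(counts.values()):
            choice = rng.choice(names)                                 # explore
        else:
            choice = max(names, key=lambda n: totals[n] / counts[n])  # exploit
        reward = reward_fns[choice](rng)   # simulated: 1.0 on success, 0.0 otherwise
        counts[choice] += 1
        totals[choice] += reward
    return counts

# Hypothetical versions with success rates the router does not know in advance
versions = {
    "model_v1": lambda rng: 1.0 if rng.random() < 0.50 else 0.0,
    "model_v2": lambda rng: 1.0 if rng.random() < 0.60 else 0.0,
}
traffic = epsilon_greedy_router(versions)
print(traffic)  # most requests should end up on model_v2
```

The constant epsilon keeps a small share of traffic on every version, so the router can notice if a previously weaker model starts performing better as data shifts.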

Containerized deployment architectures using Kubernetes provide auto-scaling capabilities that handle demand spikes while maintaining service level agreements. This infrastructure supports hybrid serving approaches where smaller quantized models handle edge computations while cloud-based APIs serve complex queries, balancing latency and cost considerations.
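Quantization shrinks a model by storing weights as low-precision integers plus a scale factor. A minimal sketch of symmetric per-tensor int8 quantization, assuming a flat list of float weights; production toolchains typically quantize per channel and calibrate activations as well.

```python
def quantize_int8(weights):
    """Map floats in [-max|w|, max|w|] onto integers in [-127, 127]
    using a single scale factor for the whole tensor."""
    scale = max(abs(w) for w in weights) / 127 or 1.0   # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.003, 0.5, -0.25]
q, scale = quantize_int8(weights)
print(q)  # [82, -127, 0, 50, -25]
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four (for float32), at the cost of a rounding error of at most half the scale per weight, which is the trade-off that makes quantized models attractive at the edge.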

Monitoring and Lifecycle Management

Multi-layered monitoring systems track infrastructure metrics, model performance indicators, and business impact measurements simultaneously. Automated drift detection triggers retraining pipelines when input feature or target distributions deviate beyond set thresholds, while concept drift safeguards include fallback models that activate when confidence scores drop unexpectedly.
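One common way to detect distribution drift is the Population Stability Index (PSI), which compares binned proportions of a feature (or of the target or model scores) between a reference sample and live data. The sketch assumes equal-width bins taken from the reference range and an alert threshold of 0.2; rules of thumb used in practice vary, roughly between 0.1 and 0.25.

```python
import math

def population_stability_index(reference, live, bins=10):
    """PSI = sum over bins of (live% - ref%) * ln(live% / ref%), using
    equal-width bins over the reference sample's range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0          # guard against a constant feature

    def bin_shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1   # clamp out-of-range values into edge bins
        # floor at a tiny share so empty bins do not produce log(0)
        return [max(c / len(sample), 1e-6) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

DRIFT_THRESHOLD = 0.2  # assumed alert threshold

reference = [i / 100 for i in range(1000)]            # feature values seen in training
live_stable = [i / 100 + 0.005 for i in range(1000)]   # nearly identical distribution
live_shifted = [i / 100 + 4.0 for i in range(1000)]    # distribution moved upward

for name, live in [("stable", live_stable), ("shifted", live_shifted)]:
    psi = population_stability_index(reference, live)
    action = "trigger retraining" if psi > DRIFT_THRESHOLD else "no action"
    print(f"{name}: PSI={psi:.3f} -> {action}")
```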

Model registries maintain comprehensive tracking of training dataset versions, hyperparameters, evaluation metrics across segments, and deployment history with rollback capabilities. This governance framework enables reproducible experiments and regulatory compliance while supporting continuous improvement cycles.

Security and Compliance Integration

Enterprise AI deployment incorporates security as a core architectural concern rather than an afterthought. Runtime application self-protection agents help block adversarial inputs such as prompt injection attacks, while model watermarking, for example through embedded cryptographic signatures, makes it possible to trace the source of a leaked model.

Compliance automation integrates legal requirements into MLOps workflows through data lineage tracing from model outputs back to source datasets, comprehensive audit trails recording inference requests and user interactions, and role-based access controls limiting production model modifications to authorized personnel.

Scalability and Cost Management

Cloud-native architectures automatically scale with workload demands while Kubernetes orchestration provides high availability and disaster recovery capabilities. Organizations processing petabytes of data daily implement resource optimization techniques that reduce per-query costs through intelligent caching and batch processing strategies.

Streaming SQL engines enable real-time feature calculation without batch latency, supporting dynamic model ensembles that vote on predictions based on current data patterns. These technical foundations enable sustainable AI operations that scale with business growth while controlling operational expenses.

How Can Airbyte Help Build an LLM Pipeline that Leverages AI Models?

Airbyte is a comprehensive data integration platform with over 600 pre-built connectors that can ingest structured, semi-structured, and unstructured data into warehouses or vector databases (Pinecone, Weaviate, Milvus) for LLM frameworks like LangChain. This extensive connector ecosystem reduces development overhead and enables rapid deployment of AI-ready data pipelines.

Key AI-Focused Features

- Advanced GenAI workflow support includes RAG-specific chunking and embedding transformations that preserve contextual relationships between data elements.

- Airbyte's metadata synchronization feature maintains referential understanding by linking structured records with unstructured files during transfer, giving foundation models comprehensive context about data relationships.

- Custom connectors built with the Connector Development Kit enable rapid integration with specialized data sources without extensive development cycles.

- Change Data Capture (CDC) keeps LLM training data current by incrementally replicating inserts, updates, and deletes from source databases, which is crucial for maintaining model relevance in dynamic business environments.

- PyAirbyte – Airbyte's Python library – allows data scientists to embed data integration directly into ML workflows, bridging the gap between data ingestion and AI frameworks through programmatic interfaces.

Vector Database Integration

Airbyte provides pre-built connectors to leading vector databases, transforming raw unstructured data into AI-ready embeddings during pipeline execution. Users can define chunking strategies, embedding models from providers like OpenAI or Cohere, and metadata preservation rules that link vectors back to source documents for enhanced retrievability.

This integration enables direct loading of processed data into vector stores for Retrieval-Augmented Generation (RAG) applications, eliminating intermediate processing steps and reducing time-to-deployment for AI systems.
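Chunking splits long documents into pieces small enough to embed, usually with some overlap so that context is not lost at chunk boundaries. The sketch below uses fixed-size character windows and attaches metadata linking each chunk to its source document. It illustrates the idea only and is not Airbyte's own implementation; many splitters count tokens or split on separators instead of characters, and the field names here are hypothetical.

```python
def chunk_document(doc_id, text, chunk_size=200, overlap=50):
    """Split text into overlapping fixed-size character windows, attaching
    metadata that links each chunk back to its source document."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    # A window starting in the last `overlap` characters would only repeat text
    # the previous window already covers, so the range stops before it.
    for index, start in enumerate(range(0, max(len(text) - overlap, 1), step)):
        chunks.append({
            "text": text[start:start + chunk_size],
            "metadata": {"source_id": doc_id, "chunk_index": index, "start_char": start},
        })
    return chunks

document = "abcdefghij" * 50      # 500 characters of placeholder text
chunks = chunk_document("doc-42", document)
print(len(chunks), [c["metadata"]["start_char"] for c in chunks])  # 3 [0, 150, 300]
```

Storing `source_id` and `start_char` next to each vector is what lets a RAG application cite or re-fetch the original passage after retrieval.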

Enterprise AI Deployment Support

Airbyte's flexible deployment options support AI initiatives across different organizational requirements. Self-managed enterprise deployments provide complete data sovereignty for regulated AI applications, while cloud-hosted options offer rapid scaling for development and testing workflows.

The platform's direct loading capabilities to major cloud data warehouses bypass intermediate staging, reducing AI pipeline costs while accelerating data velocity crucial for continuous learning systems. Incremental synchronization modes sustain model relevance through efficient change detection and selective updates.
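Incremental synchronization is commonly driven by a cursor field: each run reads only records newer than the last saved cursor value and then persists the new maximum. A minimal sketch, assuming a hypothetical `updated_at` cursor holding ISO-8601 timestamps (which sort correctly as strings); it is a conceptual illustration, not Airbyte's connector code. A strict greater-than comparison can skip late-arriving rows that share the saved cursor value, so real connectors need a strategy for ties.

```python
def incremental_sync(source_rows, state, cursor_field="updated_at"):
    """Return rows whose cursor value is newer than the saved state, plus the
    updated state to persist for the next run."""
    last_cursor = state.get(cursor_field)
    new_rows = [
        row for row in source_rows
        if last_cursor is None or row[cursor_field] > last_cursor
    ]
    if new_rows:
        state = {**state, cursor_field: max(row[cursor_field] for row in new_rows)}
    return new_rows, state

# Hypothetical source table
rows = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00Z"},
]
batch1, state = incremental_sync(rows, {})       # first run: full read
rows.append({"id": 3, "updated_at": "2024-01-03T00:00:00Z"})
batch2, state = incremental_sync(rows, state)    # later run: only the new row
print([r["id"] for r in batch1], [r["id"] for r in batch2])  # [1, 2] [3]
```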

With the data infrastructure properly configured through Airbyte, organizations can focus on model development and business logic using TensorFlow, PyTorch, or other ML libraries, confident that their data pipeline provides reliable, high-quality inputs for artificial intelligence models.

Conclusion

AI models have transformed from simple algorithms to sophisticated systems that power everything from virtual assistants to autonomous vehicles. By leveraging data integration platforms like Airbyte, organizations can build robust AI pipelines that connect diverse data sources to power their machine learning applications.

Frequently Asked Questions

1. What is an AI model, and how does it work?

An AI model is a software program trained to perform specific tasks by learning from large datasets instead of being manually programmed. It uses algorithms that identify patterns, make predictions, and adapt as the model is retrained on new data. For example, a model trained on thousands of labeled images can learn to distinguish between phones and laptops, and its accuracy generally improves as it is trained on more high-quality data.

2. What are the main types of machine learning models?

The main machine learning model types are supervised learning (trained on labeled data for predictions), unsupervised learning (discovers patterns in unlabeled data), and reinforcement learning (learns through trial and error in dynamic environments).

3. What makes deep learning and NLP models different from traditional AI?

Deep learning models, such as multi-layer neural networks, are designed to handle complex patterns in large datasets by stacking multiple layers of processing. They often outperform traditional machine learning methods in tasks like image recognition and speech processing. Natural Language Processing (NLP) models focus specifically on understanding and generating human language, using architectures like transformers (e.g., GPT and BERT) that power applications such as chatbots, translation tools, and virtual assistants.

4. How can businesses build and deploy AI models effectively?

Building effective AI models starts with defining the problem, gathering and preparing quality data, and selecting the right architecture. Tools like Airbyte streamline data integration for AI pipelines, while techniques like parameter-efficient fine-tuning reduce compute costs. For deployment, businesses should adopt best practices such as containerization, shadow testing, automated monitoring, and model retraining to ensure scalable, reliable, and secure AI operations.
