What Is ELT: Process, Tools, & Architecture



Data professionals spend too much time preparing data instead of generating insights, while organizations battle persistent data quality issues. Extract, Load, Transform (ELT) addresses this by loading raw data directly into a cloud data warehouse and transforming it there, which enables faster insights and more flexible processing. This approach uses cloud compute to handle growing datasets and keeps analytics adaptable as business needs evolve.

This article explores why data engineers are adopting ELT, its key differences from ETL, core architecture, essential tools, and practical use cases across industries.

What Is ELT and Why Does It Matter for Modern Data Teams?

ELT, which stands for Extract, Load, Transform, is a data-integration process that prioritizes speed and flexibility. It extracts data from multiple sources and loads it directly into a destination such as a data warehouse or data lake without transforming it first. Transformations are applied later, as needed, either inside the target environment or through external tools that run against it.

The ELT approach has become essential for modern data teams dealing with massive datasets, diverse data sources, and demanding analytics requirements. Unlike traditional ETL, which can bottleneck on a separate transformation server, ELT uses the computational power of modern cloud data warehouses to run transformations at scale.

How Does the ELT Process Work in Practice?

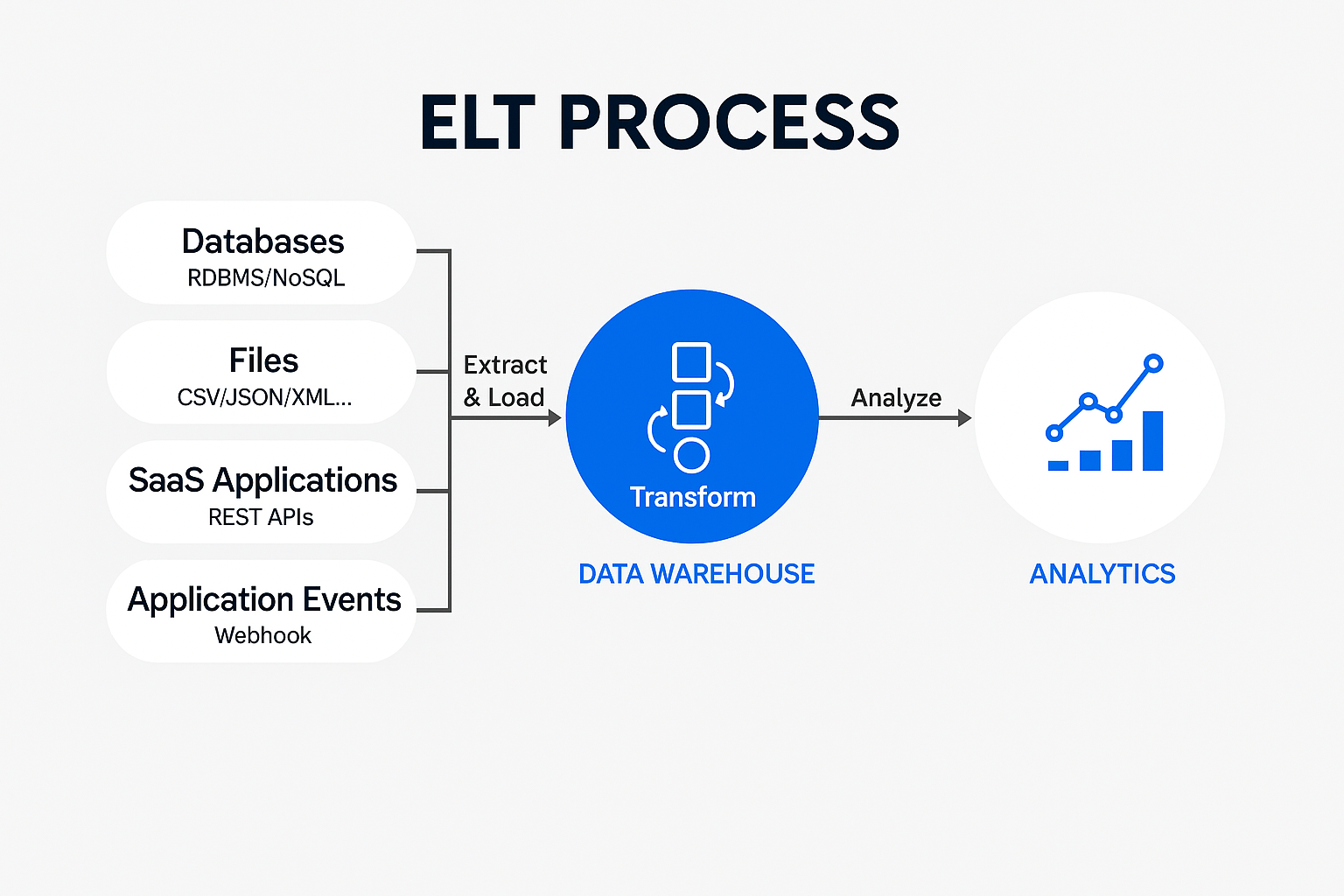

- Extract – Gather raw data from multiple sources, such as databases, files, SaaS applications, application events, and more.

- Load – Load the extracted data, as-is, into a target system, usually a cloud data warehouse or data lake.

- Transform – Once the data is in the target system, apply transformations as required (mapping, normalization, cleaning, formatting, etc.).

This sequence lets data teams maintain access to original, unprocessed data while applying transformations as business requirements evolve.
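The three steps can be sketched end to end in a few lines. This is a minimal illustration, assuming Python's built-in `sqlite3` as a stand-in for a cloud warehouse and a hypothetical CSV export (`SOURCE_CSV`) as the source; the table names `raw_orders` and `orders` are also illustrative. The point is the ordering: raw rows land untouched, and cleaning happens afterwards in SQL inside the target.

```python
import csv
import io
import sqlite3

# Hypothetical source export; in practice this could be a database,
# a SaaS API, or an event stream.
SOURCE_CSV = """order_id,customer,amount,currency
1, Alice ,19.99,usd
2,Bob,5.00,USD
3,alice,,usd
"""

def extract(text: str) -> list[dict]:
    """Extract: read raw records without altering them."""
    return list(csv.DictReader(io.StringIO(text)))

def load(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """Load: land the records as-is (all TEXT) in a raw table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders "
        "(order_id TEXT, customer TEXT, amount TEXT, currency TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount, :currency)",
        rows,
    )

def transform(conn: sqlite3.Connection) -> None:
    """Transform: clean and type the data inside the target using SQL."""
    conn.execute("DROP TABLE IF EXISTS orders")
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(customer))     AS customer,
               CAST(amount AS REAL)      AS amount,
               UPPER(currency)           AS currency
        FROM raw_orders
        WHERE amount <> ''
        """
    )

conn = sqlite3.connect(":memory:")
load(conn, extract(SOURCE_CSV))
transform(conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# → [(1, 'alice', 19.99, 'USD'), (2, 'bob', 5.0, 'USD')]
```

Note that `raw_orders` still holds all three original rows, including the one with a missing amount, so the cleaning rule can be revisited later without re-extracting.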

How Is ELT Different From Traditional ETL?

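The core difference is the order of operations: ETL transforms data on a separate processing layer before loading it into the destination, while ELT loads raw data first and transforms it inside the target system. Read more in our detailed comparison: ETL vs ELT.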

Why Are Data Engineers Moving to ELT Solutions?

High flexibility allows teams to load raw data first and transform later based on evolving requirements. This approach provides access to original data for lineage tracking and audit purposes while enabling faster time-to-insights through quick ingestion and in-warehouse transformations.
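To make the "transform later" benefit concrete, here is a small sketch, again assuming `sqlite3` as a stand-in warehouse and a hypothetical `raw_events` table. When a new requirement arrives, the model is redefined over the retained raw rows; nothing is re-extracted from the source.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Raw layer: loaded once and kept unchanged.
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, ts TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [("u1", "signup", "2024-01-01"), ("u1", "purchase", "2024-01-03"),
     ("u2", "signup", "2024-01-02"), ("u2", "refund", "2024-01-05")],
)

# Version 1 of the business logic: count purchases per user.
conn.execute("""
    CREATE VIEW user_activity AS
    SELECT user_id, SUM(event = 'purchase') AS purchases
    FROM raw_events GROUP BY user_id
""")

# Requirements change: refunds must now be tracked too. Because the raw rows
# were retained, the view is simply redefined over the same data.
conn.execute("DROP VIEW user_activity")
conn.execute("""
    CREATE VIEW user_activity AS
    SELECT user_id,
           SUM(event = 'purchase') AS purchases,
           SUM(event = 'refund')   AS refunds
    FROM raw_events GROUP BY user_id
""")
print(conn.execute("SELECT * FROM user_activity ORDER BY user_id").fetchall())
# → [('u1', 1, 0), ('u2', 0, 1)]
```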

Cloud-native optimization makes fuller use of the elastic compute in modern warehouses than approaches that transform data on separate servers. Costs can fall because teams no longer run dedicated transformation servers and instead use warehouse infrastructure they already have.

Scalability is easier to manage because data volumes can grow without a proportional increase in infrastructure the team operates itself. This makes ELT particularly attractive for organizations experiencing rapid data growth or unpredictable workload patterns.

How Does Real-Time Streaming ELT Transform Data Processing?

Real-time streaming ELT processes data continuously instead of on a schedule, providing immediate availability for analytics and operational intelligence. This approach enables organizations to respond to business events as they occur rather than waiting for batch processing cycles.

1. Event-Driven Architecture

Message brokers such as Apache Kafka and Amazon Kinesis manage high-velocity streams and provide fault tolerance through replication. They handle variable data loads while keeping performance consistent by spreading each stream across multiple partitions (Kafka) or shards (Kinesis).

Loosely coupled components respond to events dynamically, enabling systems to adapt to changing data patterns without manual intervention. This architecture supports both predictable and unpredictable data flows while maintaining system reliability.
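The loose coupling described above can be illustrated with a toy publish/subscribe broker. This is a sketch only: `InMemoryBroker` is a hypothetical stand-in, not the Kafka or Kinesis API, and real brokers add persistence, partitioning, and replication. Two independent consumers react to the same stream, one loading raw events ELT-style and one raising alerts, and the producer knows about neither.

```python
import sqlite3
from collections import defaultdict
from typing import Callable

class InMemoryBroker:
    """Hypothetical toy broker; real brokers persist and replicate events."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Each subscriber reacts independently; the producer doesn't know who listens.
        for handler in self._subscribers[topic]:
            handler(event)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_clicks (user_id TEXT, page TEXT)")

def load_raw(event: dict) -> None:
    # ELT loader: land each event as it arrives, untransformed.
    conn.execute("INSERT INTO raw_clicks VALUES (?, ?)", (event["user_id"], event["page"]))

alerts: list[str] = []

def watch_checkout(event: dict) -> None:
    # A second, independent consumer of the same stream.
    if event["page"] == "/checkout":
        alerts.append(event["user_id"])

broker = InMemoryBroker()
broker.subscribe("clicks", load_raw)
broker.subscribe("clicks", watch_checkout)

for e in [{"user_id": "u1", "page": "/home"}, {"user_id": "u2", "page": "/checkout"}]:
    broker.publish("clicks", e)

print(conn.execute("SELECT COUNT(*) FROM raw_clicks").fetchone()[0], alerts)
# → 2 ['u2']
```

Adding a third consumer is one more `subscribe` call; neither the producer loop nor the existing handlers change.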

2. Performance and Scalability

Streaming systems scale horizontally, adding or removing resources on demand to handle variable data velocity while keeping latency low. Auto-scaling keeps resource utilization efficient and performance steady during both peak and low-demand periods.

Load balancing distributes processing across multiple nodes to prevent bottlenecks and ensure consistent throughput. This distributed approach enables systems to handle growing data volumes without degrading user experience.
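Horizontal scaling in streaming systems typically rests on key-based partitioning: each key is hashed to a partition, and partitions are spread across workers. The sketch below is an illustration under simplifying assumptions: it uses CRC32 (Kafka's default partitioner uses murmur2) and a simple round-robin assignment; `partition_for` and `assign_partitions` are hypothetical helpers.

```python
import zlib
from collections import Counter

def partition_for(key: str, num_partitions: int) -> int:
    # Deterministic hash: the same key always maps to the same partition,
    # which preserves per-key ordering. CRC32 is used here for simplicity.
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def assign_partitions(num_partitions: int, workers: list[str]) -> dict[str, list[int]]:
    # Round-robin partitions across workers; adding a worker spreads the load.
    assignment: dict[str, list[int]] = {w: [] for w in workers}
    for p in range(num_partitions):
        assignment[workers[p % len(workers)]].append(p)
    return assignment

keys = [f"user-{i}" for i in range(1000)]
load = Counter(partition_for(k, 6) for k in keys)
print(sorted(load.items()))  # roughly even counts per partition

print(assign_partitions(6, ["worker-a", "worker-b"]))
# → {'worker-a': [0, 2, 4], 'worker-b': [1, 3, 5]}
print(assign_partitions(6, ["worker-a", "worker-b", "worker-c"]))
# → {'worker-a': [0, 3], 'worker-b': [1, 4], 'worker-c': [2, 5]}
```

Scaling out from two workers to three halves nothing in the data model; only the partition-to-worker mapping changes.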

What Role Do AI and Machine Learning Play in Modern ELT?

AI and ML add intelligence and self-optimization to pipelines, transforming reactive data operations into proactive, self-managing systems. These technologies enable pipelines to learn from patterns and optimize performance without manual intervention.

1. Intelligent Data Observability

Advanced monitoring tracks throughput, latency, model accuracy, schema drift, and transformation quality across the entire data pipeline. These systems provide real-time visibility into pipeline health and performance metrics that matter for business operations.

Predictive analytics identify potential issues before they impact business operations. Automated alerting systems notify teams about anomalies while providing context about root causes and recommended remediation steps.
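Two of the signals mentioned above, volume anomalies and schema drift, can be checked with very little code. The sketch below uses a plain z-score rule as a simple statistical stand-in for the ML-based detection the text describes; the function names and the threshold of 3 standard deviations are assumptions for illustration.

```python
import statistics

def row_count_anomaly(history: list[int], latest: int, threshold: float = 3.0) -> bool:
    """Flag the latest load if it deviates more than `threshold` standard
    deviations from the historical mean (a simple z-score rule)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > threshold

def schema_drift(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Report columns that were added, removed, or changed type."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in expected.keys() & observed.keys()
                          if expected[c] != observed[c]),
    }

daily_rows = [10_120, 9_980, 10_050, 10_200, 9_940]
print(row_count_anomaly(daily_rows, 10_010))  # → False
print(row_count_anomaly(daily_rows, 2_300))   # → True
print(schema_drift({"id": "INTEGER", "email": "TEXT"},
                   {"id": "TEXT", "email": "TEXT", "phone": "TEXT"}))
# → {'added': ['phone'], 'removed': [], 'retyped': ['id']}
```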

2. Automated Optimization and Self-Healing

Pipelines learn from historical patterns to predict optimal resource allocation and performance requirements. Machine learning algorithms analyze past performance to automatically adjust configurations for improved efficiency and reliability.

Automatic recovery from common failures reduces manual intervention and improves system uptime. Self-healing capabilities enable pipelines to adapt to temporary issues while maintaining data flow continuity for critical business processes.
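The simplest building block of automatic recovery is retrying transient failures with exponential backoff. The sketch below is a conventional retry rule, not a learned policy; `TransientError`, `with_retries`, and the delay parameters are hypothetical names and values chosen for illustration.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, throttling)."""

def with_retries(task: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 0.1, max_delay: float = 5.0) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the failure to alerting
            # Exponential backoff with full jitter to avoid synchronized retries.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            time.sleep(random.uniform(0, delay))
    raise RuntimeError("unreachable")

# Usage: a flaky load step that fails twice, then succeeds.
calls = {"n": 0}

def flaky_load() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("warehouse timeout")
    return "loaded"

print(with_retries(flaky_load, base_delay=0.01), calls["n"])  # → loaded 3
```

Only errors classified as transient are retried; anything else propagates immediately, which keeps genuine bugs from being masked by retries.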

What Are the Leading ELT Tools Available Today?

1. Airbyte

Airbyte provides 600+ connectors with an open-source foundation that reduces vendor lock-in while offering enterprise-grade security and governance. The platform includes dbt integration for transformation workflows.

PyAirbyte enables programmatic data integration for Python developers building data-enabled applications. Enterprise deployment options support on-premises, cloud, and hybrid architectures while maintaining consistent functionality across environments.

2. Hevo Data

Hevo Data offers no-code pipelines with 150+ connectors and automatic schema management capabilities. The platform features drag-and-drop transformations and near real-time sync for business users who need quick data integration without technical expertise.

Built-in monitoring and alerting help teams maintain pipeline reliability while automated error handling reduces manual intervention requirements. The platform focuses on ease of use for organizations with limited technical resources.

3. Stitch Data

Stitch Data provides managed ELT with 140+ sources, automatic scaling, and flexible scheduling options. The platform emphasizes simplicity while maintaining enterprise capabilities for organizations transitioning from traditional ETL approaches.

Integration setup requires minimal configuration while automated monitoring ensures reliable data delivery. The platform handles scaling automatically based on data volume and processing requirements.

What Are the Most Effective ELT Use Cases Across Industries?

Healthcare: Organizations process electronic health record (EHR) and IoT patient data in real time for predictive analytics, improving patient outcomes and operational efficiency.

Use cases: Patient readmission prediction, resource allocation, treatment optimization.

Manufacturing: Companies integrate sensor, quality control, and supply-chain data for predictive maintenance, preventing downtime and optimizing production.

Use cases: Equipment failure prediction, quality defect detection, inventory optimization.

Financial Services: Institutions leverage continuous data processing for real-time fraud detection and regulatory compliance, identifying transaction anomalies while meeting industry standards.

Use cases: Credit risk assessment, anti-money laundering, algorithmic trading.

Technology and Software Companies: Tech firms combine telemetry, customer, and business metrics for comprehensive product analytics. Development teams process application logs and system metrics to rapidly address performance issues and enhance user experiences.

Use cases: A/B testing, feature usage analysis, performance monitoring.

Retail and E-commerce: Retailers unify POS, inventory, and customer data for personalized experiences and demand forecasting. E-commerce platforms process transaction data and website interactions to optimize conversion rates, with real-time inventory updates preventing overselling and enabling dynamic pricing.

Use cases: Customer segmentation, churn prediction, dynamic pricing, recommendation engines.

What Are the Key Limitations of ELT Implementation?

Data quality challenges emerge when loading raw data without initial validation or cleansing processes. Organizations must implement downstream quality controls and monitoring to ensure analytical accuracy and business reliability.
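One common form of downstream quality control is a set of SQL assertions run after each load, where each query counts offending rows. This is similar in spirit to dbt's `not_null` and `unique` tests; the sketch below assumes `sqlite3` as the warehouse and a hypothetical `raw_customers` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (id TEXT, email TEXT)")
conn.executemany("INSERT INTO raw_customers VALUES (?, ?)",
                 [("1", "a@example.com"), ("2", None), ("2", "b@example.com")])

# Each check returns the number of offending rows; 0 means the check passes.
CHECKS = {
    "id_not_null": "SELECT COUNT(*) FROM raw_customers WHERE id IS NULL",
    "email_not_null": "SELECT COUNT(*) FROM raw_customers WHERE email IS NULL",
    "id_unique": """SELECT COUNT(*) FROM (
                        SELECT id FROM raw_customers GROUP BY id HAVING COUNT(*) > 1)""",
}

def run_checks(conn: sqlite3.Connection) -> dict[str, int]:
    return {name: conn.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}

failures = {k: v for k, v in run_checks(conn).items() if v > 0}
print(failures)  # → {'email_not_null': 1, 'id_unique': 1}
```

A pipeline would typically block downstream transformations, or at least alert, when `failures` is non-empty.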

Complex governance and access controls require careful planning to manage who can access raw data versus transformed datasets. Security considerations become more complex when sensitive data loads directly into target systems.

High storage consumption for raw datasets increases infrastructure costs, especially for organizations with large data volumes or long retention requirements. Storage optimization strategies become essential for cost management.
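A basic storage optimization is a retention policy that prunes raw rows older than a cutoff. The sketch below assumes a hypothetical 90-day policy and a `loaded_on` column stored as ISO-8601 text in `sqlite3`. Where available, warehouse-native features such as partition expiration or object-storage lifecycle rules are usually a better fit than hand-written deletes.

```python
import sqlite3
from datetime import date, timedelta

RETENTION_DAYS = 90  # hypothetical policy; set according to compliance needs

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_events (id INTEGER, loaded_on TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?)",
    [(1, "2024-01-15"), (2, "2024-04-10"), (3, "2024-06-29")],
)

def prune_raw(conn: sqlite3.Connection, today: date, retention_days: int) -> int:
    cutoff = (today - timedelta(days=retention_days)).isoformat()
    # ISO-8601 date strings sort chronologically, so a text comparison works.
    cur = conn.execute("DELETE FROM raw_events WHERE loaded_on < ?", (cutoff,))
    return cur.rowcount

print(prune_raw(conn, date(2024, 6, 30), RETENTION_DAYS))  # → 1
```

The trade-off is explicit: pruned raw data can no longer be re-transformed, so the retention window should cover how far back models may need to be rebuilt.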

Technical and Operational Challenges

Because sensitive data lands in the target system before it is transformed, it can be exposed in the window between load and transformation, so additional security measures are needed during both phases. Data masking and encryption strategies must account for this ELT processing sequence.
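One way to close that exposure window is to pseudonymize sensitive fields before they are loaded. The sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library; `PSEUDONYMIZATION_KEY`, `pseudonymize`, and `mask_before_load` are hypothetical names, and a real key would come from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager.
PSEUDONYMIZATION_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    # Keyed hash: stable, so joins on the column still work, but not
    # reversible without the key. Normalizing first makes case variants match.
    return hmac.new(PSEUDONYMIZATION_KEY, value.strip().lower().encode("utf-8"),
                    hashlib.sha256).hexdigest()

def mask_before_load(record: dict, sensitive: set[str]) -> dict:
    # Masking at extract time means cleartext never lands in the raw layer.
    return {k: pseudonymize(v) if k in sensitive and v is not None else v
            for k, v in record.items()}

row = {"order_id": 7, "email": "Alice@Example.com", "amount": 19.99}
masked = mask_before_load(row, {"email"})
print(masked["order_id"], masked["email"][:12], masked["amount"])
```

This deliberately gives up part of ELT's "keep the raw data" benefit for the masked columns: the original values cannot be recovered from the warehouse, which is usually the intent for fields like email addresses.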

Platform-specific performance tuning becomes necessary to optimize transformation performance within target systems. Different data warehouses require different optimization approaches for efficient processing.

Dependence on destination system capabilities limits transformation complexity and processing options. Organizations must ensure their target platforms can handle required transformation logic and performance requirements.

Summary

ELT represents a modern, cloud-aligned approach to data integration that loads data first and transforms later for improved speed and flexibility. It uses warehouse compute to minimize infrastructure overhead while scaling to handle large and streaming datasets. ELT can also serve as a foundation for AI-driven, self-optimizing pipelines that adapt to changing business requirements. With careful attention to data quality, governance, and security, ELT accelerates insight generation and supports the evolving needs of data-driven organizations.

FAQs

What Is the Main Difference Between ETL and ELT?

The key distinction lies in the order of operations. ETL transforms data before loading it into the destination system, while ELT loads raw data first and applies transformations later within the warehouse or lake. This often makes ELT faster and more scalable for large datasets.

Why Should Organizations Consider ELT Over ETL?

ELT offers greater flexibility, faster time-to-insight, and better scalability by using the compute power of modern cloud warehouses. It can also reduce infrastructure costs by removing the need for dedicated transformation servers.

What Types of Businesses Benefit Most From ELT?

Organizations dealing with large, diverse, or rapidly growing data sources—such as healthcare, financial services, manufacturing, and e-commerce—benefit most. These industries require real-time insights, predictive analytics, and scalable processing that ELT supports effectively.

What Are the Biggest Challenges of Implementing ELT?

Common challenges include maintaining data quality when loading raw data, managing governance and access controls, and handling high storage consumption for raw datasets. Companies must also ensure their warehouse platform supports the required transformation complexity.

How Does AI Improve ELT Pipelines?

AI and machine learning enhance ELT by automating monitoring, anomaly detection, optimization, and self-healing. This reduces manual intervention, improves reliability, and helps teams adapt pipelines to changing workloads with minimal effort.
