10 Best Data Mapping Tools to Save Time & Effort (2026)


Imagine you are assigned a task where you have to combine customer data from two sources: an online store and a loyalty program. The online store data contains customers' names, email addresses, and purchase histories in CSV format, whereas the loyalty program data contains customers' names, points, and tier levels in JSON format. Manually mapping these different fields would be tedious and error-prone.

This is where data mapping tools come into the picture! Many of them provide a visual interface where you can drag and drop data elements from each source, such as the customer name fields in both datasets. The tool then matches corresponding fields and lets you define any necessary transformations, like converting loyalty points into dollar values. With a few clicks, you can build a bridge between your data silos and analyze customer behavior and preferences across both.
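The scenario above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool works internally: the field names (`name`, `email`, `total_spent`, `customer_name`, `points`, `tier`) and the rate of one cent per loyalty point are hypothetical assumptions.

```python
import csv
import io
import json

# Hypothetical sample data: the online store exports CSV, the loyalty program exports JSON.
STORE_CSV = """name,email,total_spent
Ada Lovelace,ada@example.com,120.50
Alan Turing,alan@example.com,75.00
"""

LOYALTY_JSON = """[
  {"customer_name": "Ada Lovelace", "points": 1500, "tier": "Gold"},
  {"customer_name": "Grace Hopper", "points": 300, "tier": "Silver"}
]"""

# Assumed conversion rate: 1 loyalty point is worth $0.01 (illustrative only).
DOLLARS_PER_POINT = 0.01


def merge_customers(store_csv: str, loyalty_json: str) -> list[dict]:
    """Join store and loyalty records on customer name, converting points to dollars."""
    store_rows = list(csv.DictReader(io.StringIO(store_csv)))
    # Index loyalty records by the field that maps to the store's "name" column.
    loyalty_by_name = {rec["customer_name"]: rec for rec in json.loads(loyalty_json)}

    merged = []
    for row in store_rows:
        loyalty = loyalty_by_name.get(row["name"])
        merged.append({
            "name": row["name"],
            "email": row["email"],
            "total_spent": float(row["total_spent"]),
            # Customers without a loyalty record get no tier and zero rewards.
            "tier": loyalty["tier"] if loyalty else None,
            "rewards_value_usd": round(loyalty["points"] * DOLLARS_PER_POINT, 2) if loyalty else 0.0,
        })
    return merged


if __name__ == "__main__":
    for customer in merge_customers(STORE_CSV, LOYALTY_JSON):
        print(customer)
```

Because the loop starts from the store records, this behaves like a left join: Grace Hopper, who appears only in the loyalty data, is dropped, and Alan Turing gets no tier. A real pipeline would also need a more reliable join key than a display name.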

In this article, you will learn more about data mapping, its types, and what tools you can use to map your data elements according to your requirements.

What is Data Mapping?

Data mapping is the process of defining explicit relationships between data elements in two different data sources. These sources can be databases, applications, files, or any other system. Data mapping aims to accurately transform data between these sources while maintaining its meaning and integrity. Here’s a breakdown of its key aspects:

- Data mapping plays a crucial role in standardizing data formats. Different systems might represent the same data point (like a state) in different ways (e.g., “Illinois” vs. “IL”). Mapping bridges these discrepancies, making the data readily understandable and usable across various applications.

- It serves as the groundwork for data integration, which involves combining data from multiple sources into a unified system. Data mapping facilitates smooth and accurate integration by establishing clear connections between elements.

- Data mapping allows data transformations to be applied during the transfer process. These range from simple operations like renaming fields to more complex transformations like converting data types, filtering, and aggregating.
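As a minimal sketch of the standardization point, assuming a hypothetical lookup table that covers only a couple of states, a mapping step can normalize different spellings of the same value to one canonical code and flag anything it cannot map:

```python
from typing import Optional

# Hypothetical lookup table; a real one would cover every state and common variants.
STATE_CODES = {
    "illinois": "IL",
    "il": "IL",
    "new york": "NY",
    "ny": "NY",
}


def standardize_state(raw: str) -> Optional[str]:
    """Return the two-letter code for a state value, or None if it cannot be mapped."""
    key = raw.strip().lower()  # ignore surrounding whitespace and letter case
    return STATE_CODES.get(key)


records = [{"state": "Illinois"}, {"state": " IL "}, {"state": "New York"}, {"state": "Ilinois"}]
standardized = [standardize_state(r["state"]) for r in records]
# Values the table does not recognize are surfaced instead of silently passed through.
unmapped = [r["state"] for r, code in zip(records, standardized) if code is None]
print(standardized)  # ['IL', 'IL', 'NY', None]
print(unmapped)      # ['Ilinois']
```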

What are data mapping tools?

Data mapping tools are software applications that map data fields from one source, such as a database, file, or application, to another. They transform data into the format the destination system requires, so that data integration, migration, or transformation can be completed effectively and accurately.

Top 10 Data Mapping Tools

In this section, you will discover the top data mapping tools available and find the right fit to transform your data into a symphony of insights:

1. CloverDX

CloverDX is a comprehensive data integration platform that offers robust data mapping capabilities. It provides a user-friendly interface for building and deploying workflows that connect and transform data from various sources, including databases, files, and APIs.

Here are the features of CloverDX:

- It features a visual designer interface where you can drag and drop data elements between various sources and targets. This simplifies the process of mapping corresponding fields across different data formats.

- CloverDX also allows you to define custom transformations using its CloverDX Transformation Language (CTL). This lets you manipulate and convert data during mapping, handling complex data structures and your specific business needs.

- For repetitive mapping tasks, CloverDX offers pre-built templates, minimizing the risk of human error.

2. Altova

Altova offers a powerful mapping tool, Altova MapForce, designed to streamline the process of mapping and transforming data between different formats and structures. It provides a graphical drag-and-drop interface that allows you to visually design data mappings between various data formats, including XML, JSON, flat files, Excel, and Web Services. This makes it a versatile tool for handling diverse mapping scenarios.

Key features of Altova MapForce include:

- Altova MapForce supports automated data mapping execution, enabling you to schedule and run data transformations, saving time and effort.

- It also helps you to define filters, functions, and custom logic to manipulate and convert data as needed.

3. Pimcore

Pimcore is an open-source platform that acts as a central hub for managing various aspects of your digital presence. It offers customizable workflows for mapping and transforming data between different systems. You can define mapping rules, transformations, and validation processes to ensure data integrity.

Here’s a breakdown of Pimcore’s key areas.

- It offers easy data imports from formats such as CSV, XLSX, JSON, and XML, and you can map the imported data without writing any code. This makes it a strong option for businesses that need to manage large amounts of data.

- With Pimcore, you can also integrate with other platforms, such as eCommerce storefronts and social media channels. This allows you to exchange data between business applications and streamline workflows.

4. Boomi

Boomi (formerly Dell Boomi) is an integration platform as a service (iPaaS) that connects and integrates various applications. It provides a platform for designing, deploying, and managing data integrations between on-premises and cloud-based applications, and its visual designer lets you easily map data between two platforms and integrate them.

Key aspects of Boomi are:

- Boomi's embedded AI capabilities suggest data mappings based on historical data and usage patterns, and they can help detect and resolve data mapping errors, improving data quality.

- It is built entirely in the cloud, offering the scalability and elasticity to adapt easily to changing data volumes and integration needs.

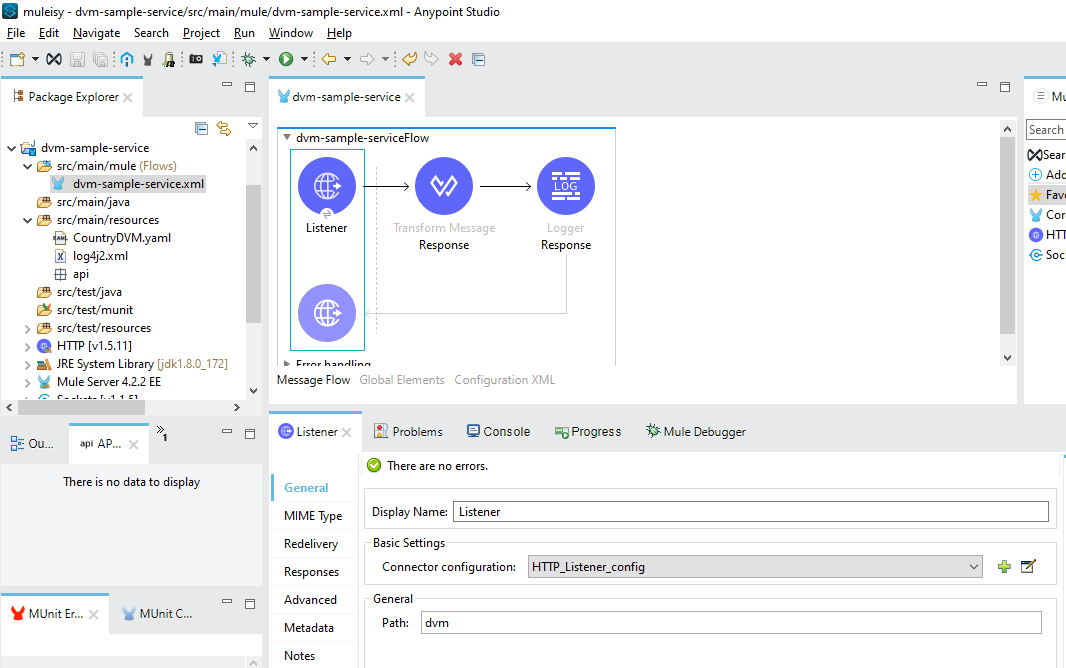

5. MuleSoft

MuleSoft is an integration platform that enables you to connect multiple applications, data sources, and devices. Its flagship product, MuleSoft Anypoint Platform, offers robust data mapping features as a part of its comprehensive set of tools and services for data integration and API management. With the Anypoint Platform, you can seamlessly map, transform, and manipulate data as it moves between different applications.

Here are the key aspects of MuleSoft’s Anypoint Platform:

- It provides a visual interface for designing data mapping configurations, allowing you to easily define transformation logic using a drag-and-drop interface. This graphical interface simplifies the process of mapping data between source and target structures, reducing the need for manual coding.

- MuleSoft’s programming language, DataWeave, allows you to read and modify data as it flows through the Mule application. The Mule runtime engine incorporates DataWeave in several essential components, like Transform and Set Payload. This enables you to execute DataWeave scripts and expressions in your Mule application.

6. Skyvia

Skyvia is a multi-functional cloud data integration platform whose rich data mapping functionality sets it apart in the data integration market. Its user-friendly drag-and-drop interface makes it simple to map data between a variety of sources and destinations, including flat files, databases, and cloud apps.

Key Features of Skyvia:

- Visual Data Mapping: Skyvia's drag-and-drop visual interface allows any user to map fields between sources and destinations, making it easy to align and transform data without extensive coding knowledge.

- Data Transformation Capabilities: The platform enables complex data transformations using expressions in the mapping process. This flexibility is critical in dealing with intricate cases of data integration and custom business logic.

- Pre-built Connectors: Skyvia offers a large number of pre-built connectors to popular cloud applications, databases, file storage services, and more. This broad connectivity makes it easier to integrate seamlessly with many different systems.

7. Talend

Talend, which gained prominence through its open-source Talend Open Studio, is among the most influential data integration tools and helped pioneer data mapping and transformation. It offers an end-to-end package of tools for developing, deploying, and managing data integration workflows.

Features of Talend:

- Graphical Development Environment: Talend provides a drag-and-drop graphical environment in which users design data mappings, making it easy to create complex data flows and transformations.

- Library of Components: Talend has a rich library of prebuilt components and connectors covering hundreds of data sources and targets. This helps users speed up integration setup and automate data workflows easily.

- Advanced Data Transformation: Talend supports a wide set of advanced data transformation capabilities, including filtering, sorting, aggregating, and joining data from multiple sources. Users can also build custom transformations by writing their own code (Talend Studio jobs are based on Java).

8. IBM InfoSphere DataStage

IBM InfoSphere DataStage is a powerful data integration tool with built-in data mapping and transformation capabilities. It lets users visually design their data flows and integrate data across sources and targets such as databases, enterprise applications, and cloud services.

Features of IBM InfoSphere DataStage:

- Graphical Job Design: You drag components from a palette onto the DataStage Designer workspace, where you map and transform data between source and destination systems through an easy graphical interface.

- Parallel Processing: DataStage's parallel processing capabilities help you build high-volume data integrations with excellent performance and scalability.

- Large Library of Transformations: DataStage includes a wide variety of built-in transformation functions and also lets you add user-defined transformations through scripts or custom stages.

9. Jitterbit

Jitterbit is an all-around data integration platform with strong data mapping features for connecting and transforming data between different systems, applications, and databases. Its user-friendly interface and heavy-duty features make it a solid choice for businesses.

Features of Jitterbit:

- Visual Mapping: Jitterbit's drag-and-drop visual data mapping makes it easy to define how data elements correspond between different sources and targets.

- Pre-built Connectors: Jitterbit's pre-built connectors for many popular systems and applications reduce integration setup time and effort.

- Custom Transformation: Jitterbit supports custom transformations through scripting, allowing you to tailor data transformations to your specific business requirements.

- Automation and Scheduling: Jitterbit facilitates the automation and scheduling of data integration tasks. This ensures that data is processed on time and efficiently without manual intervention.

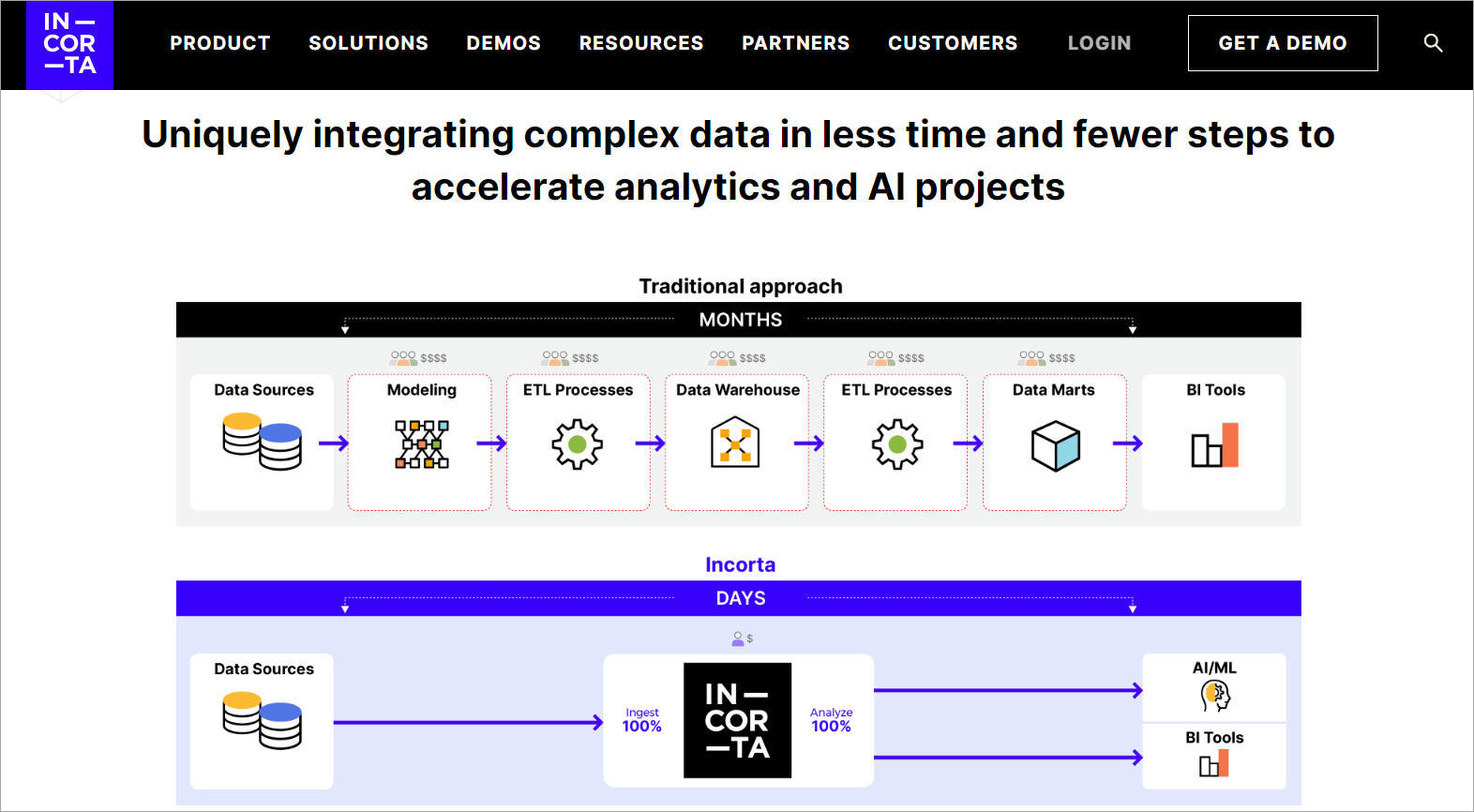

10. Incorta

Incorta is a new-generation data analytics platform that offers effective data mapping and integration capabilities. It simplifies data transformation by letting users rapidly connect to data sources, map fields, and deliver analytics in real time.

Features of Incorta:

- Direct Data Mapping: Incorta allows users to map data directly from source systems to analytics without lengthy data modeling and transformation cycles.

- Real-Time Analytics: Incorta supports real-time data integration and analytics, so decisions can be based on current information.

- Schema-Free Data Integration: Incorta's schema-free approach enables fast data integration without extensive data preparation, allowing users to respond quickly to changing data requirements.

- Advanced Security: Incorta provides robust security alongside its data integration and analytic processing.

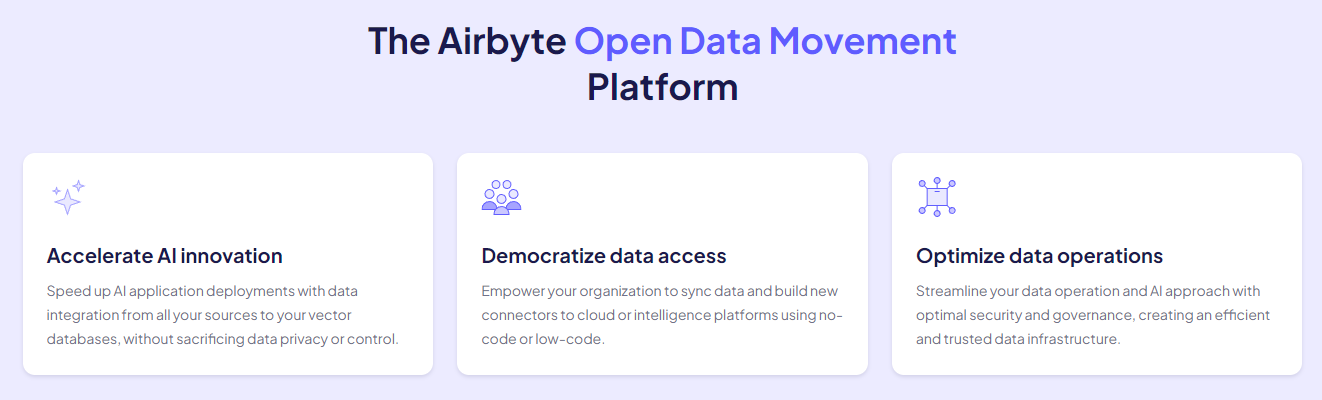

Streamline Your Analytics Journey with Airbyte Today!

Managing and mapping multiple data connections across different tools can add complexity to your analytics journey. Moreover, data quality across various destinations requires ongoing vigilance.

This is where Airbyte comes to your rescue! As a leading data integration platform, it helps you streamline data workflows. With 550+ pre-built connectors and support for custom connectors, it automates the flow of information between systems. If the platform you need is not among the pre-built options, you can create your own connector using the AI-powered Connector Builder. Its AI assistant automatically fills out most fields in the UI by reading through your chosen platform’s API documentation.

Additionally, Airbyte supports incremental sync, which extracts only the data that has changed since the last successful sync. This avoids re-syncing the entire dataset every time you run a sync, saving time and resources.
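The general idea behind cursor-based incremental sync can be illustrated in plain Python. This is not Airbyte's implementation: it assumes each record carries a hypothetical `updated_at` timestamp that serves as the cursor, and that the pipeline persists the highest cursor value seen in the last successful sync.

```python
from datetime import datetime


def incremental_extract(records, last_cursor):
    """Return records changed after last_cursor, plus the new cursor to persist."""
    changed = [r for r in records if r["updated_at"] > last_cursor]
    # Advance the cursor to the newest change; keep the old one if nothing changed.
    new_cursor = max((r["updated_at"] for r in changed), default=last_cursor)
    return changed, new_cursor


source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]

batch, cursor = incremental_extract(source, last_cursor=datetime(2024, 1, 3))
print([r["id"] for r in batch], cursor)  # [2, 3] 2024-01-09 00:00:00
```

The strict `>` comparison is a simplifying choice: records that share the exact cursor timestamp but arrive later would be missed, so real systems often compare with `>=` and deduplicate on a primary key instead.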

Here are the key features of Airbyte:

- No-Code Data Integration: It simplifies data connection and transfer between systems without requiring coding skills, enabling you to design and implement data workflows using its intuitive visual interface.

- Streamline GenAI Workflows: Airbyte allows you to handle semi-structured and unstructured data by transforming it into vector embeddings using RAG techniques like chunking, embedding, and indexing. You can store these vectors in popular vector databases supported by Airbyte, including Pinecone and Weaviate, which facilitates GenAI application development.

- dbt Transformation: Airbyte enables you to seamlessly integrate with dbt, a robust data transformation tool. This integration empowers you to efficiently manipulate and process your data.

- PyAirbyte: PyAirbyte is an open-source Python library that empowers you to handle your data pipelines with ease. It allows you to extract data using Airbyte connectors. You can further transform data with Python libraries and load it into a supported destination.

- Self-Managed Enterprise: To support large data volumes for enterprise-level workflows, Airbyte offers a Self-Managed Enterprise edition. This version extends Airbyte's capabilities by providing features like multitenancy, enterprise support with SLAs, and PII masking.

Types of Data Mapping

Data mapping isn’t a one-size-fits-all solution. Several methods exist, each with its own strengths and weaknesses, and your choice depends on factors like the task at hand, available resources, and data complexity. Here are the main techniques to weigh against your specific needs and objectives.

1. Manual Data Mapping

Manual data mapping refers to the process of manually connecting and defining the relationships between data fields in different sources. This process requires careful attention to detail and understanding of the data models involved. Manual data mapping is commonly used in scenarios where the volume of data is manageable or custom transformations are required.
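In code, a manual mapping often boils down to an explicit, hand-written table of source-to-target field correspondences plus any hand-written transformation rules. The field names and the `full_name` split below are hypothetical examples:

```python
# Hand-written mapping: source field -> target field.
FIELD_MAP = {
    "cust_email": "email",
    "pts": "loyalty_points",
    "lvl": "tier",
}


def apply_manual_mapping(source_record: dict) -> dict:
    """Rename mapped fields, drop unmapped ones, and apply a hand-defined transformation."""
    target = {FIELD_MAP[key]: value for key, value in source_record.items() if key in FIELD_MAP}
    # Custom transformation defined by hand: split one source field into two target fields.
    first, _, last = source_record.get("full_name", "").partition(" ")
    target["first_name"] = first
    target["last_name"] = last
    return target


mapped_record = apply_manual_mapping(
    {"full_name": "Ada Lovelace", "cust_email": "ada@example.com", "pts": 1500, "lvl": "Gold"}
)
print(mapped_record)
```

Every correspondence is explicit, which makes the mapping easy to audit but means someone has to write and maintain each entry by hand.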

2. Automated Data Mapping

Automated data mapping involves software or algorithms automatically identifying and matching data elements between different systems or data sources. This process relies on predefined rules, heuristics, or machine learning algorithms to recognize patterns, relationships, and similarities within the data. Automated data mapping is often employed to streamline data integration, migration, or synchronization across diverse platforms without much human intervention. This approach is generally faster than manual mapping and is less prone to human-made errors.
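A toy version of heuristic automated matching can be built on string similarity. The sketch below uses Python's standard `difflib.SequenceMatcher` as its only heuristic; that choice and the field names are assumptions for illustration, and commercial tools may combine many more signals, such as data types, sample values, or usage history.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase and drop separators so 'Cust_Email' and 'customer_email' compare fairly."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def auto_map(source_fields, target_fields):
    """Pair each source field with its most similar target field and a similarity score."""
    mapping = {}
    for src in source_fields:
        best = max(target_fields, key=lambda tgt: similarity(src, tgt))
        mapping[src] = (best, round(similarity(src, best), 2))
    return mapping


result = auto_map(
    ["Cust_Email", "FirstName", "zip"],
    ["email_address", "first_name", "postal_code", "customer_email"],
)
print(result)
```

Note that a field with no good counterpart, such as `zip` here, still receives a best guess with a low score, which is why the score should be kept alongside each match.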

3. Semi-Automated Data Mapping

This approach combines manual and automated processes in the data mapping and integration workflow. Humans are involved to a certain extent, typically to define rules, handle exceptions, or perform validation checks, while automated tools match fields or attributes between source and target datasets based on predefined criteria and take care of repetitive tasks. By combining machine efficiency with human judgment, the semi-automated technique can deal with complex and ambiguous mappings.
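A semi-automated workflow can be sketched by letting the machine propose matches and routing uncertain ones to a person. The 0.8 threshold, the `difflib` string-similarity heuristic, and the `overrides` parameter for reviewer decisions are all assumptions chosen for illustration:

```python
from difflib import SequenceMatcher

AUTO_ACCEPT_THRESHOLD = 0.8  # assumed cut-off; tune for your data


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, a.lower().replace("_", ""), b.lower().replace("_", "")).ratio()


def propose_mappings(source_fields, target_fields, overrides=None):
    """Auto-accept confident matches and queue the rest for human review.

    `overrides` holds mappings a reviewer has already decided on; they always win.
    """
    overrides = overrides or {}
    accepted, review_queue = {}, []
    for src in source_fields:
        if src in overrides:
            accepted[src] = overrides[src]
            continue
        best = max(target_fields, key=lambda tgt: similarity(src, tgt))
        if similarity(src, best) >= AUTO_ACCEPT_THRESHOLD:
            accepted[src] = best
        else:
            review_queue.append((src, best))  # low-confidence suggestion for a person to confirm
    return accepted, review_queue


targets = ["first_name", "customer_email", "postal_code"]
accepted, queue = propose_mappings(["FirstName", "cust_email", "zip"], targets)
print(accepted)
print(queue)

# After review, a person supplies the correct target for "zip".
reviewed, remaining = propose_mappings(
    ["FirstName", "cust_email", "zip"], targets, overrides={"zip": "postal_code"}
)
```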

4. Schema Mapping

Schema mapping defines the relationships and correspondences between the structures (schemas) of two different databases or sources. It involves mapping attributes, tables, or entities from one schema to another, ensuring a coherent and accurate data transfer during migration. Additionally, schema mapping connects data types, handles naming conventions, and addresses differences in structure or hierarchy between the source and target schemas.
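A schema mapping can be written down as data: for each target column, where the value lives in the source (possibly nested) and what type it must become. The schemas, the dotted-path convention, and the column names below are hypothetical:

```python
from datetime import date

# Target column -> (dotted path in the nested source record, converter to the target type).
SCHEMA_MAP = {
    "customer_id": ("id", int),
    "city": ("address.city", str),
    "signup_date": ("meta.created", date.fromisoformat),
    "lifetime_value": ("stats.ltv", float),
}


def get_path(record: dict, dotted_path: str):
    """Walk a nested dict using a dotted path such as 'address.city'."""
    value = record
    for key in dotted_path.split("."):
        value = value[key]
    return value


def map_to_target_schema(record: dict) -> dict:
    """Flatten the nested source record into the target's column names and types."""
    return {column: convert(get_path(record, path)) for column, (path, convert) in SCHEMA_MAP.items()}


source_record = {
    "id": "42",
    "address": {"city": "Chicago", "state": "IL"},
    "meta": {"created": "2024-03-15"},
    "stats": {"ltv": "310.75"},
}
target_row = map_to_target_schema(source_record)
print(target_row)
```

This one table handles renaming (`id` to `customer_id`), type conversion (strings to `int`, `date`, and `float`), and a difference in hierarchy (nested objects flattened into columns).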

Benefits of using data mapping tools

1. Improved Data Integration

Data mapping tools streamline data integration, making it easy to combine data from different sources into a single, meaningful dataset. They are essential for any business that wants to integrate data from various systems, including customer relationship management (CRM), enterprise resource planning (ERP), and other enterprise applications.

2. Time and Cost Efficiency

Data mapping tools reduce the time and resources spent on manually transforming data. This efficiency shortens project timelines, reduces operational costs, and frees IT teams to work on other strategic activities.

3. Scalability

Because they are designed to work with large volumes of data, data mapping tools suit organizations with broad data integration requirements. They scale to accommodate growing datasets, so businesses can maintain high performance and reliability as they grow.

4. Simplified Data Migration

Data mapping tools ease data migration from legacy systems to modern platforms. Because data is mapped and transformed correctly, the risk of data loss or corruption during migration is reduced.

5. Real-time Data Processing

Advanced data mapping tools support real-time processing, in which data is integrated and analyzed as it is generated. This is critical in applications that need current information, such as real-time analytics and monitoring systems.

Key features to look out for in data mapping tools

While selecting a data mapping tool, there are important features you need to consider to ensure that the selected tool best fits your business needs.

1. User-Friendly Interface

A user-friendly interface makes data mapping easier. Look for tools that provide a drag-and-drop interface so that mappings are easy to build, especially for non-technical users.

2. Extensive Integration

Data mapping tools with good integration capabilities can pull data from various sources, including databases, cloud services, and applications. Many of these tools offer pre-built connectors for popular data sources, simplifying the integration process.

3. Flexible Schema Support

Data mapping tools should offer flexible schema support so that you can manipulate data and transform it into a usable form regardless of the source’s schema. Because data arrives in various formats and sizes, this flexibility lets you get the data into the form you want.

4. Advanced Data Transformation

Look for tools that support complex data transformations, that is, converting data into the required structure and format. They should also include functions for data cleansing, enrichment, aggregation, and validation to ensure that transferred data is accurate and of high quality.
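As a small illustration of cleansing, validation, and aggregation working together, assuming hypothetical purchase records with inconsistently formatted emails, the sketch below normalizes the key, skips rows that fail a basic check, and totals spend per customer:

```python
from collections import defaultdict

purchases = [
    {"email": " Ada@Example.com ", "amount": "19.99"},
    {"email": "ada@example.com", "amount": "5.01"},
    {"email": "alan@example.com", "amount": "42.00"},
    {"email": "", "amount": "10.00"},  # missing key: cannot be attributed to a customer
]


def clean_and_aggregate(rows):
    totals = defaultdict(float)
    for row in rows:
        email = row["email"].strip().lower()  # cleansing: trim whitespace, normalize case
        if "@" not in email:  # validation: skip rows without a usable email
            continue
        totals[email] += float(row["amount"])  # aggregation: sum spend per customer
    # Round once at the end to hide floating-point noise in the report.
    return {email: round(total, 2) for email, total in totals.items()}


spend_by_customer = clean_and_aggregate(purchases)
print(spend_by_customer)
```

Without the cleansing step, the two spellings of Ada's address would be aggregated as two different customers.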

5. Error Handling

Look for tools with advanced error-handling features that identify and report data mismatches and, in some cases, correct inaccurate data, helping to ensure smooth data integration and reduce downtime.
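Error handling in a mapping step can be as simple as collecting, rather than crashing on, the records that do not fit the target. The validation rules below (required `email` and `points` fields, with `points` parseable as an integer) are hypothetical:

```python
REQUIRED_FIELDS = ("email", "points")


def map_with_error_report(records):
    """Map valid records and collect a human-readable reason for each rejected one."""
    mapped, errors = [], []
    for index, record in enumerate(records):
        missing = [field for field in REQUIRED_FIELDS if record.get(field) in (None, "")]
        if missing:
            errors.append((index, f"missing fields: {', '.join(missing)}"))
            continue
        try:
            points = int(record["points"])
        except (ValueError, TypeError):
            errors.append((index, f"points is not an integer: {record['points']!r}"))
            continue
        mapped.append({"email": record["email"].lower(), "loyalty_points": points})
    return mapped, errors


rows = [
    {"email": "Ada@example.com", "points": "1500"},
    {"email": "alan@example.com", "points": "lots"},
    {"points": "300"},
]
mapped, errors = map_with_error_report(rows)
print(mapped)
for index, reason in errors:
    print(f"row {index}: {reason}")
```

The valid row still flows through, while the two bad rows are reported with their position and reason so they can be fixed at the source.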

Conclusion

With accurate data transfer and transformation, you can perform better analysis and make full use of your data. By leveraging these data mapping tools and techniques, you can overcome common data mapping challenges and unlock valuable insights from your data.
