Top ETL Tools for Elasticsearch Integration to follow


Elasticsearch holds valuable data, but moving it efficiently to other destinations is often a headache for data engineers. Whether you're consolidating data warehouses, creating analytics pipelines, or building data lakes, you need reliable ETL tools to extract and load Elasticsearch data. This guide walks through battle-tested Elasticsearch ETL tools that make data migration straightforward, helping you pick the right one for your specific needs.

What is Elasticsearch?

Elasticsearch is a turbocharged search system that stores data in JSON format and provides a RESTful API for quick data operations, making it popular for search functionality, log analytics, and real-time data processing. Think of it as a powerful, scalable database that excels at searching and analyzing text data, which is why many companies use it for everything from product search to application monitoring.

What is Elasticsearch ETL?

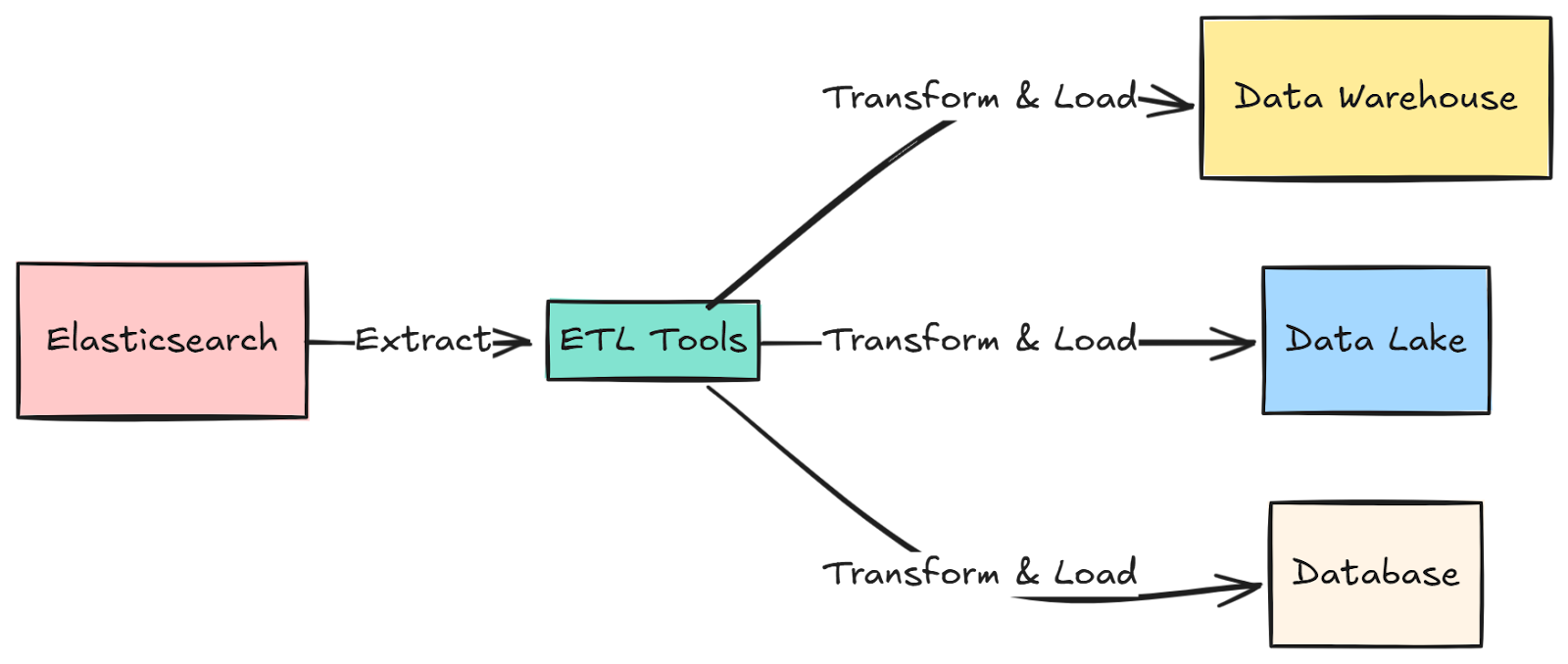

Elasticsearch ETL is the process of extracting data from Elasticsearch and loading it into different target systems like data warehouses, lakes, or databases. This process helps organizations centralize their data from Elasticsearch for analytics, reporting, or data consolidation purposes.
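As a rough illustration of the extract step, here is a minimal sketch that pages through an index with Elasticsearch's `search_after` parameter. The `event_id` sort field, the `extract_documents` helper, and the in-memory `fake_search` stand-in are assumptions made for this example; a real pipeline would send the same request body to the `_search` endpoint of a cluster.

```python
from typing import Callable, Iterator


def extract_documents(search: Callable[[dict], dict], page_size: int = 2) -> Iterator[dict]:
    """Yield the _source of every document, paging with search_after."""
    # A stable sort is required so that search_after can resume where it stopped.
    body = {"size": page_size, "sort": [{"event_id": "asc"}]}
    while True:
        hits = search(body)["hits"]["hits"]
        if not hits:
            return
        for hit in hits:
            yield hit["_source"]
        # Resume after the sort values of the last hit on this page.
        body = {**body, "search_after": hits[-1]["sort"]}


def fake_search(body: dict) -> dict:
    """In-memory stand-in for POST /<index>/_search (illustration only)."""
    docs = [{"event_id": i, "message": f"event {i}"} for i in range(1, 6)]
    after = body.get("search_after", [0])[0]
    page = [d for d in docs if d["event_id"] > after][: body["size"]]
    return {"hits": {"hits": [{"_source": d, "sort": [d["event_id"]]} for d in page]}}


rows = list(extract_documents(fake_search))
print(len(rows))  # 5
```

Passing the search function in as an argument keeps the paging logic independent of any particular HTTP client, which also makes it easy to test without a running cluster.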

Common Use Cases for Elasticsearch ETL Tools

Data Warehouse Integration

Your application logs and search data in Elasticsearch contain valuable business insights, but they're isolated from other data sources. ETL tools help consolidate this data into your data warehouse, enabling unified analytics and reporting alongside data from other systems.

Business Intelligence & Analytics

Many BI tools don't directly connect to Elasticsearch or have limited query capabilities. ETL tools extract and transform Elasticsearch data into formats optimized for your BI platforms, making it easier to create dashboards and generate reports.
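One transformation that often comes up here is flattening nested JSON documents into flat rows that a BI tool can read as table columns. The sketch below is only one possible approach; the `flatten` helper, the underscore naming scheme, and the sample document are assumptions for illustration.

```python
import json


def flatten(doc: dict, prefix: str = "") -> dict:
    """Turn a nested document into a single-level row of column -> value."""
    row = {}
    for key, value in doc.items():
        column = f"{prefix}{key}"
        if isinstance(value, dict):
            # Nested objects become prefixed columns, e.g. user.id -> user_id.
            row.update(flatten(value, prefix=f"{column}_"))
        elif isinstance(value, list):
            # Arrays are kept as a JSON string so the row stays flat.
            row[column] = json.dumps(value)
        else:
            row[column] = value
    return row


doc = {"user": {"id": 42, "geo": {"country": "FR"}}, "tags": ["a", "b"], "status": 200}
row = flatten(doc)
print(row)
```

Serializing arrays as JSON strings is a deliberate simplification; depending on the destination, you might instead load them into a child table or a native array column.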

Data Lake Population

Organizations often need historical Elasticsearch data for machine learning or long-term analysis. ETL tools can efficiently move this data to your data lake, where it's stored cost-effectively and can be processed using various big data tools.

Criteria for selecting the right Elasticsearch ETL solution

As a company, you don't want to use one separate data integration tool for every data source you want to pull data from. So you need to have a clear integration strategy and some well-defined evaluation criteria to choose your Elasticsearch ETL solution.

Here is our recommendation for the criteria to consider:

- Connector coverage: does the ETL tool extract data from all the systems you need, whether cloud apps, REST APIs, relational databases, NoSQL databases, or CSV files? Does it support the destinations you need to export data to, such as data warehouses, databases, or data lakes?

- Connector extensibility: for all those connectors, are you able to edit them easily in order to add a potentially missing endpoint, or to fix an issue on it if needed?

- Ability to build new connectors: all data integration solutions support a limited number of data sources, so check how easily you can build a connector for a source that is missing.

- Support of change data capture: this is especially important for your databases.

- Data integration features and automations: including schema change migration, re-syncing of historical data when needed, and scheduling.

- Efficiency: how easy to use is the interface (including the graphical interface, API, and CLI if you need them)?

- Integration with the stack: does the tool integrate well with the other tools you might need, such as dbt, Airflow, Dagster, or Prefect?

- Data transformation: does the tool make it easy to transform data, including complex data transformations? Possibly through an integration with dbt.

- Level of support and high availability: how responsive and helpful is the support, and what is the average percentage of successful syncs for the connectors you need? The whole point of using ETL solutions is to give time back to your data team.

- Data reliability and scalability: do they have recognizable brands using them? It also shows how scalable and reliable they might be for high-volume data replication.

- Security and trust: there is nothing worse than a data leak for your company. The fine can be astronomical, and the broken trust with your customers can have an even greater impact. So checking the tools' certifications (SOC 2, ISO) is paramount. If you might expand to Europe, you would need them to be GDPR-compliant too.

Top 10 Elasticsearch ETL tools

Here are the top Elasticsearch ETL tools based on their popularity and the criteria listed above:

1. Airbyte

A modern, open-source data integration platform that connects Elasticsearch to 300+ destinations like Snowflake, BigQuery, and Redshift. It feels like the Swiss Army knife of data integration - it handles everything from simple CSV exports to complex real-time syncs.

Deployment options: Both cloud and self-hosted

Platform type: Open-source data integration platform

Key features:

- Pre-built Elasticsearch connector

- CDC support to track real-time changes

- Built-in data normalization and transformation capabilities

2. Fivetran

Fivetran is a closed-source, managed ELT service that was created in 2012. Fivetran has about 300 data connectors and over 5,000 customers.

Fivetran offers some ability to edit current connectors and create new ones with Fivetran Functions, but doesn't offer as much flexibility as an open-source tool would.

What's unique about Fivetran?

As the first ELT solution on the market, Fivetran is considered a proven and reliable choice. However, Fivetran charges based on monthly active rows (in other words, the number of rows that have been edited or added in a given month) and is often considered very expensive.
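To make the monthly active rows idea concrete, here is a small worked sketch. It assumes that a row touched several times in the same month is counted once for that month, and that rows are identified by a table name and primary key; the `monthly_active_rows` helper and the sample change log are hypothetical and do not reproduce any vendor's actual billing logic.

```python
from collections import defaultdict


def monthly_active_rows(changes):
    """Count distinct (table, primary_key) pairs changed in each month.

    `changes` is an iterable of (table, primary_key, "YYYY-MM-DD") tuples.
    """
    active = defaultdict(set)
    for table, primary_key, day in changes:
        month = day[:7]  # "YYYY-MM"
        active[month].add((table, primary_key))
    return {month: len(rows) for month, rows in active.items()}


changes = [
    ("orders", 1, "2024-01-03"),
    ("orders", 1, "2024-01-15"),  # same row edited again in January
    ("orders", 2, "2024-01-20"),
    ("orders", 1, "2024-02-01"),  # counted again because it is a new month
]
mar = monthly_active_rows(changes)
print(mar)  # {'2024-01': 2, '2024-02': 1}
```

Under this assumption, a small table that changes constantly can cost less than a large table where every row is touched once a month.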

Here are more critical insights on the key differentiations between Airbyte and Fivetran.

3. Stitch Data

Stitch is a cloud-based platform for ETL that was initially built on top of the open-source ETL tool Singer.io. More than 3,000 companies use it.

Stitch was acquired by Talend, which was acquired by the private equity firm Thoma Bravo, and then by Qlik. These successive acquisitions decreased market interest in the Singer.io open-source community, making most of their open-source data connectors obsolete. Only their top 30 connectors continue to be maintained by the open-source community.

What's unique about Stitch?

Given the lack of quality and reliability in their connectors, and poor support, Stitch has adopted a low-cost approach.

Here are more insights on the differentiations between Airbyte and Stitch, and between Fivetran and Stitch.

4. Matillion

Matillion is a self-hosted ELT solution, created in 2011. It supports about 100 connectors and provides all extract, load and transform features. Matillion is used by 500+ companies across 40 countries.

What's unique about Matillion?

Being self-hosted means that Matillion ensures your data doesn’t leave your infrastructure and stays on premise. However, you might have to pay for several Matillion instances if you’re multi-cloud. Matillion has also verticalized its offering to cover all of ELT and more, so it doesn't integrate with other tools such as dbt or Airflow.

Here are more insights on the differentiations between Airbyte and Matillion.

5. Airflow

Apache Airflow is an open-source workflow management tool. Airflow is not an ETL solution, but you can use Airflow operators for data integration jobs. Airflow started in 2014 at Airbnb as a solution to manage the company's workflows. Airflow allows you to author, schedule, and monitor workflows as DAGs (directed acyclic graphs) written in Python.

What's unique about Airflow?

Airflow requires you to build data pipelines on top of its orchestration tool. You can leverage Airbyte for the data pipelines and orchestrate them with Airflow, significantly lowering the burden on your data engineering team.

Here are more insights on the differentiations between Airbyte and Airflow.

6. Pentaho

Pentaho is an ETL and business analytics software that offers a comprehensive platform for data integration, data mining, and business intelligence. It offers ETL rather than ELT, so it does not provide the benefits of ELT.

What is unique about Pentaho?

What sets Pentaho data integration apart is its original open-source architecture, which allows for easy customization and integration with other systems and platforms. Additionally, Pentaho provides advanced data analytics and reporting tools, including machine learning and predictive analytics capabilities, to help businesses gain insights and make data-driven decisions.

However, Pentaho is also an enterprise product with no self-serve option, which makes it hard to implement.

7. Singer

Singer is also worth mentioning as the first open-source JSON-based ETL framework. It was introduced in 2017 by Stitch (which was acquired by Talend in 2018) as a way to offer extensibility to the connectors they had pre-built. Talend has unfortunately stopped investing in Singer’s community and maintaining Singer’s taps and targets, which are increasingly outdated, as mentioned above.
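To show what "JSON-based" means in practice, the sketch below builds the three message types a Singer tap writes to standard output, one JSON object per line: `SCHEMA`, `RECORD`, and `STATE`. The `singer_messages` helper, the `logs` stream, and the layout of the state value are assumptions for illustration; the state payload in particular is free-form.

```python
import json


def singer_messages(stream, schema, key_properties, records, bookmark):
    """Yield Singer-style messages for one stream, in the order a tap emits them."""
    # SCHEMA describes the shape of the records that follow.
    yield {"type": "SCHEMA", "stream": stream, "schema": schema, "key_properties": key_properties}
    # One RECORD message per row of data.
    for record in records:
        yield {"type": "RECORD", "stream": stream, "record": record}
    # STATE lets the next run resume from where this one stopped.
    yield {"type": "STATE", "value": {"bookmarks": {stream: bookmark}}}


messages = list(
    singer_messages(
        stream="logs",
        schema={"type": "object", "properties": {"id": {"type": "integer"}, "level": {"type": "string"}}},
        key_properties=["id"],
        records=[{"id": 1, "level": "info"}, {"id": 2, "level": "error"}],
        bookmark={"id": 2},
    )
)
lines = [json.dumps(message) for message in messages]
print("\n".join(lines))
```

A Singer target reads these lines from standard input, which is what allows any tap to be piped into any target.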

8. Rivery

Rivery is another cloud-based ELT solution. Founded in 2018, it presents a verticalized solution by providing built-in data transformation, orchestration, and activation capabilities. Rivery offers 150+ connectors, far fewer than Airbyte. Its pricing is usage-based, with Rivery pricing units that serve as a proxy for platform usage. The number of pricing units consumed depends on the connectors you sync from, which makes costs hard to estimate.

9. HevoData

HevoData is another cloud-based ELT solution. Although it was founded in 2017, it only supports 150 integrations, far fewer than Airbyte. HevoData provides built-in data transformation capabilities, allowing users to apply transformations, mappings, and enrichments to the data before it reaches the destination. Hevo also provides data activation capabilities by syncing data back to the APIs.

10. Meltano

Meltano is an open-source orchestrator dedicated to data integration, spun off from GitLab and built on top of Singer’s taps and targets. Since 2019, they have been iterating on several approaches. Meltano distinguishes itself with its focus on DataOps and its CLI. They offer an SDK to build connectors, but it requires engineering skills and more time to build with than Airbyte’s CDK. Meltano doesn’t invest in maintaining the connectors and leaves that to the Singer community, and thus doesn’t provide a support package with any SLA.

None of these ETL tools is specific to Elasticsearch, and you might also find other data loaders built specifically for Elasticsearch data. But you will most likely not want to load data from Elasticsearch alone into your data stores.

Why Not Use Native Elasticsearch ETL Tools like Logstash?

While Logstash is part of the Elastic Stack, it's primarily designed for log processing and requires significant development effort for modern data integration needs. Airbyte, on the other hand, provides ready-to-use connectors for popular destinations like Snowflake and BigQuery, along with automated schema handling and data normalization. The platform's point-and-click interface eliminates weeks of custom coding that Logstash would require. For data teams focused on rapid integration and scalability, Airbyte provides a more efficient, purpose-built solution than Logstash.

Which data can you extract from Elasticsearch?

Elasticsearch's API provides access to a wide range of data types, including:

1. Textual data: Elasticsearch can index and search through large volumes of textual data, including documents, emails, and web pages.

2. Numeric data: Elasticsearch can store and search through numeric data, including integers, floats, and dates.

3. Geospatial data: Elasticsearch can store and search through geospatial data, including latitude and longitude coordinates.

4. Structured data: Elasticsearch can store and search through structured data, including data that originates from JSON, XML, and CSV files and is indexed as JSON documents.

5. Unstructured data: Elasticsearch can store and search through unstructured data, including text and metadata extracted from images, videos, and audio files.

6. Log data: Elasticsearch can store and search through log data, including server logs, application logs, and system logs.

7. Metrics data: Elasticsearch can store and search through metrics data, including performance metrics, network metrics, and system metrics.

8. Machine learning data: Elasticsearch can store and search through machine learning data, including training data, model data, and prediction data.

Overall, Elasticsearch's API provides access to a wide range of data types, making it a powerful tool for data analysis and search.

How to start pulling data in minutes from Elasticsearch

If you decide to test Airbyte, you can start analyzing your Elasticsearch data within minutes in three easy steps:

Step 1: Set up Elasticsearch as a source connector

1. Open the Airbyte UI and navigate to the "Sources" tab.

2. Click on the "Create Connection" button and select "Elasticsearch" as the source.

3. Enter the required information such as the name of the connection and the Elasticsearch URL.

4. Provide the Elasticsearch credentials such as the username and password.

5. Specify the index or indices that you want to replicate.

6. Choose the replication mode, either full or incremental.

7. Set the replication schedule according to your needs.

8. Test the connection to ensure that the Elasticsearch source connector is working correctly.

9. Save the connection and start the replication process.
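Before entering the URL and credentials in the UI, it can help to confirm that they work outside of Airbyte. The sketch below only builds an authenticated request to Elasticsearch's `_cat/indices` endpoint, which lists the indices visible to the user. The `build_index_list_request` helper, the localhost URL, and the credentials are placeholder assumptions, and basic authentication is assumed.

```python
import base64
import urllib.request


def build_index_list_request(base_url: str, username: str, password: str) -> urllib.request.Request:
    """Build a GET request that lists indices, using HTTP basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/_cat/indices?format=json",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Placeholder values: replace with your own cluster URL and credentials.
request = build_index_list_request("https://localhost:9200/", "elastic", "changeme")
print(request.get_method(), request.full_url)
```

Sending it with `urllib.request.urlopen(request)` against a reachable cluster should return a JSON array of indices; an HTTP 401 response would point to a credentials problem rather than an Airbyte configuration issue.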

It is important to note that the Elasticsearch source connector on Airbyte.com requires a valid Elasticsearch URL and credentials to establish a connection. The connector also allows you to specify the index or indices that you want to replicate and choose the replication mode and schedule. Once the connection is established, Airbyte will replicate the data from Elasticsearch to your destination of choice.
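To illustrate the difference between the two replication modes, here is a sketch of one common way to implement incremental extraction in general: keep a cursor value from the previous run and only request documents beyond it. This is not a description of how Airbyte's connector works internally. The `updated_at` cursor field and both helper functions are assumptions for the example, and timestamps are assumed to be ISO 8601 strings in a single format so that string comparison matches chronological order.

```python
def incremental_query(cursor_field, last_cursor=None, page_size=1000):
    """Build a search body: everything on the first run, only newer documents afterwards."""
    if last_cursor is None:
        query = {"match_all": {}}  # full sync: no cursor saved yet
    else:
        query = {"range": {cursor_field: {"gt": last_cursor}}}
    return {"size": page_size, "sort": [{cursor_field: "asc"}], "query": query}


def next_cursor(docs, cursor_field, last_cursor=None):
    """Return the highest cursor value seen so far, to be saved for the next run."""
    values = [doc[cursor_field] for doc in docs]
    if last_cursor is not None:
        values.append(last_cursor)
    return max(values) if values else None


first_run = incremental_query("updated_at")
synced = [{"id": 1, "updated_at": "2024-05-01T10:00:00Z"}, {"id": 2, "updated_at": "2024-05-02T08:30:00Z"}]
cursor = next_cursor(synced, "updated_at")
second_run = incremental_query("updated_at", last_cursor=cursor)
print(second_run["query"])
```

A full sync re-reads every document on each run, while the incremental variant only reads what changed, at the cost of requiring a reliable cursor field in the index.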

Step 2: Set up a destination for your extracted Elasticsearch data

Choose from one of 50+ destinations where you want to import data from your Elasticsearch source. This can be a cloud data warehouse, data lake, database, cloud storage, or any other supported Airbyte destination.

Step 3: Configure the Elasticsearch data pipeline in Airbyte

Once you've set up both the source and destination, you need to configure the connection. This includes selecting the data you want to extract (streams and columns, all of which are selected by default), the sync frequency, and where in the destination the data should be loaded, among other options.

And that's it! The process is the same whether you use Airbyte Open Source, which you can deploy within 5 minutes, or Airbyte Cloud, which you can try free for 14 days.

Conclusion

This article outlined the criteria that you should consider when choosing a data integration solution for Elasticsearch ETL/ELT. Based on your requirements, you can select from any of the top 10 ETL/ELT tools listed above. We hope this article helped you understand why you should consider doing Elasticsearch ETL and how to best do it.
