Learn how to build an end-to-end Retrieval-Augmented Generation (RAG) pipeline. We will extract data from Google Drive using Airbyte Cloud and load it into Snowflake Cortex.


Cryptocurrency investing has been around for a while, yet many people still invest blindly, without solid knowledge of the topic. With a sound understanding of the underlying principles, market dynamics, and the potential risks and rewards of cryptocurrencies, investors can make more informed decisions. This guide uses analytical data on Bitcoin to explore its potential as an investment, the factors influencing its value, and strategies for managing and mitigating risk.

In this tutorial, we'll walk you through the process of using Airbyte to pass a document titled "Is Bitcoin a Good Investment" to Snowflake Cortex for processing. This process, called retrieval-augmented generation, leverages Snowflake's LLM functions to consume and analyze the data. By the end, you'll understand how to extract valuable insights from this document and use them to better inform your cryptocurrency investment decisions.

We will be moving Airbyte data into Snowflake Cortex, which allows us to perform a cosine similarity search over it. Finally, we can get some insights from our document.

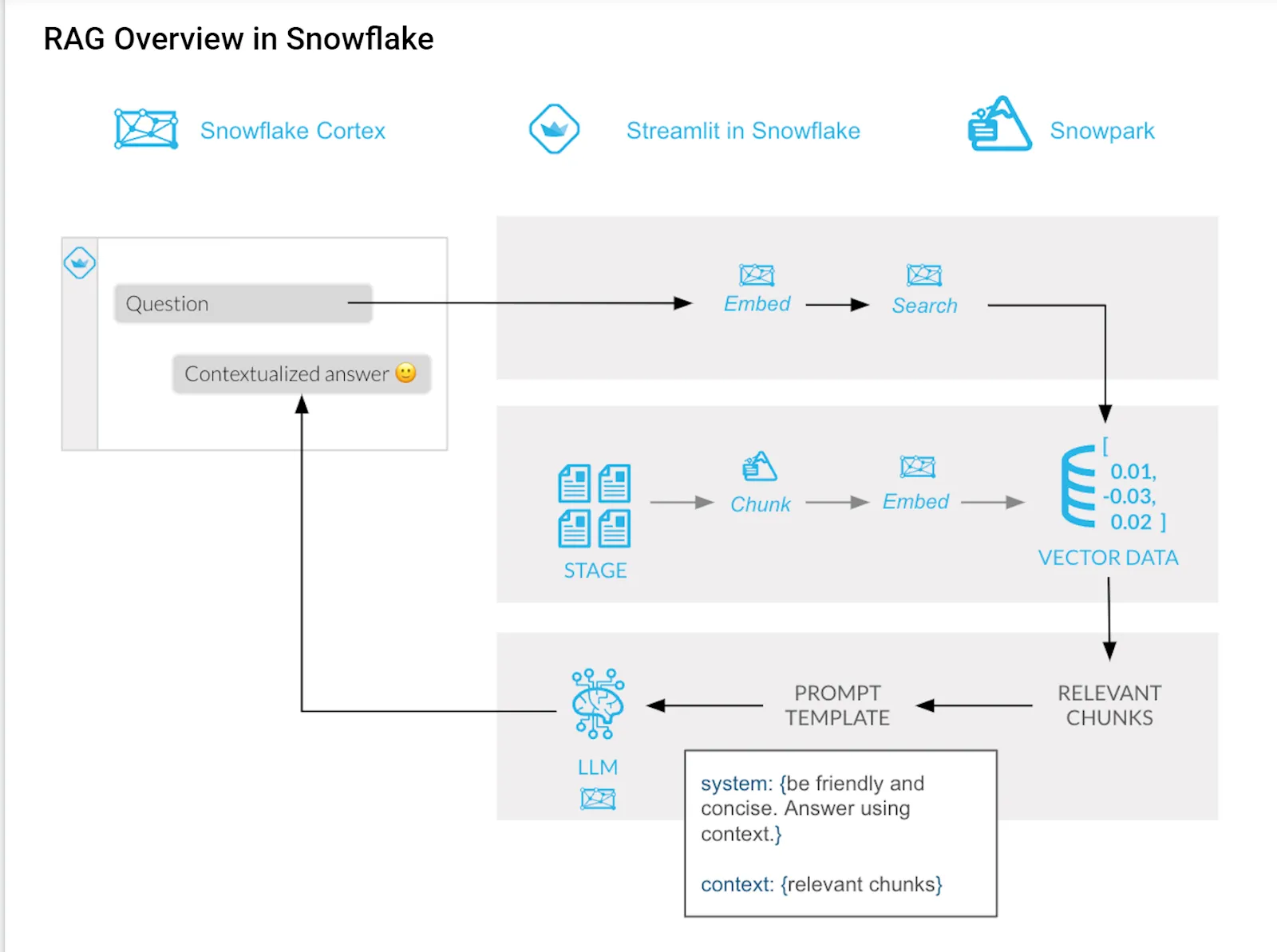

LLMs are quite helpful when you are interested in general information. Unfortunately, the same cannot be said for domain-specific information, which is where they start to hallucinate. By providing LLMs with up-to-date information from a data source of your choice, you address this limitation, since the LLM can now use that data when answering. This process is called Retrieval-Augmented Generation (RAG).

I provided my document download link but feel free to use your own custom source.

1. Data source: In this tutorial we use a Google Drive folder.

2. Airbyte Cloud account: Log in here

3. Snowflake account: Ensure Cortex functions are enabled. Log in here

4. OpenAI API key: Ensure your key has not hit its rate limit before you continue. Access yours here

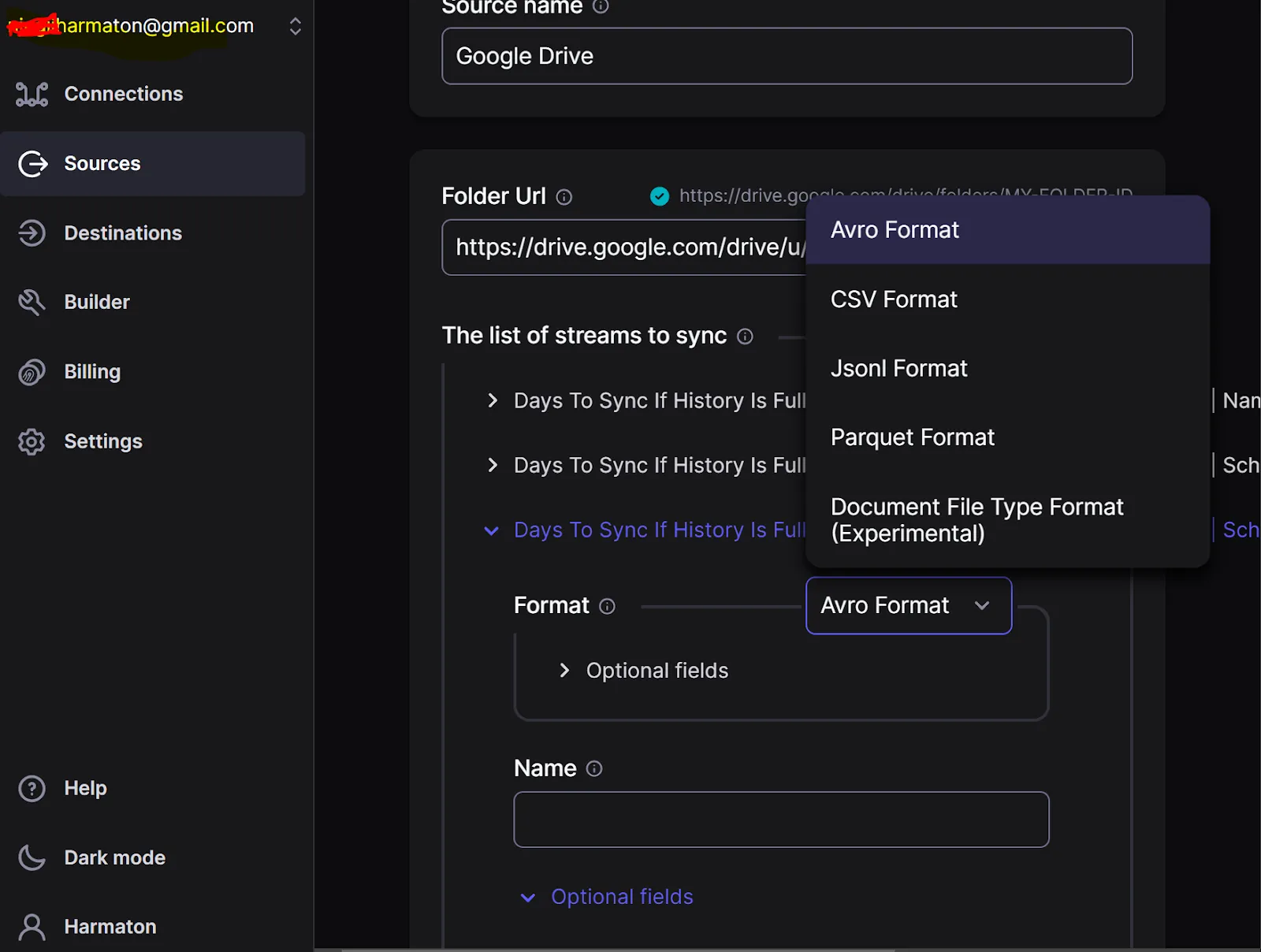

In the Airbyte Cloud source connectors, select the Google Drive connector as your source, paste your folder URL (mandatory), and create a stream with the Document File Type Format (Experimental). Finally, test it to ensure it's set up correctly.

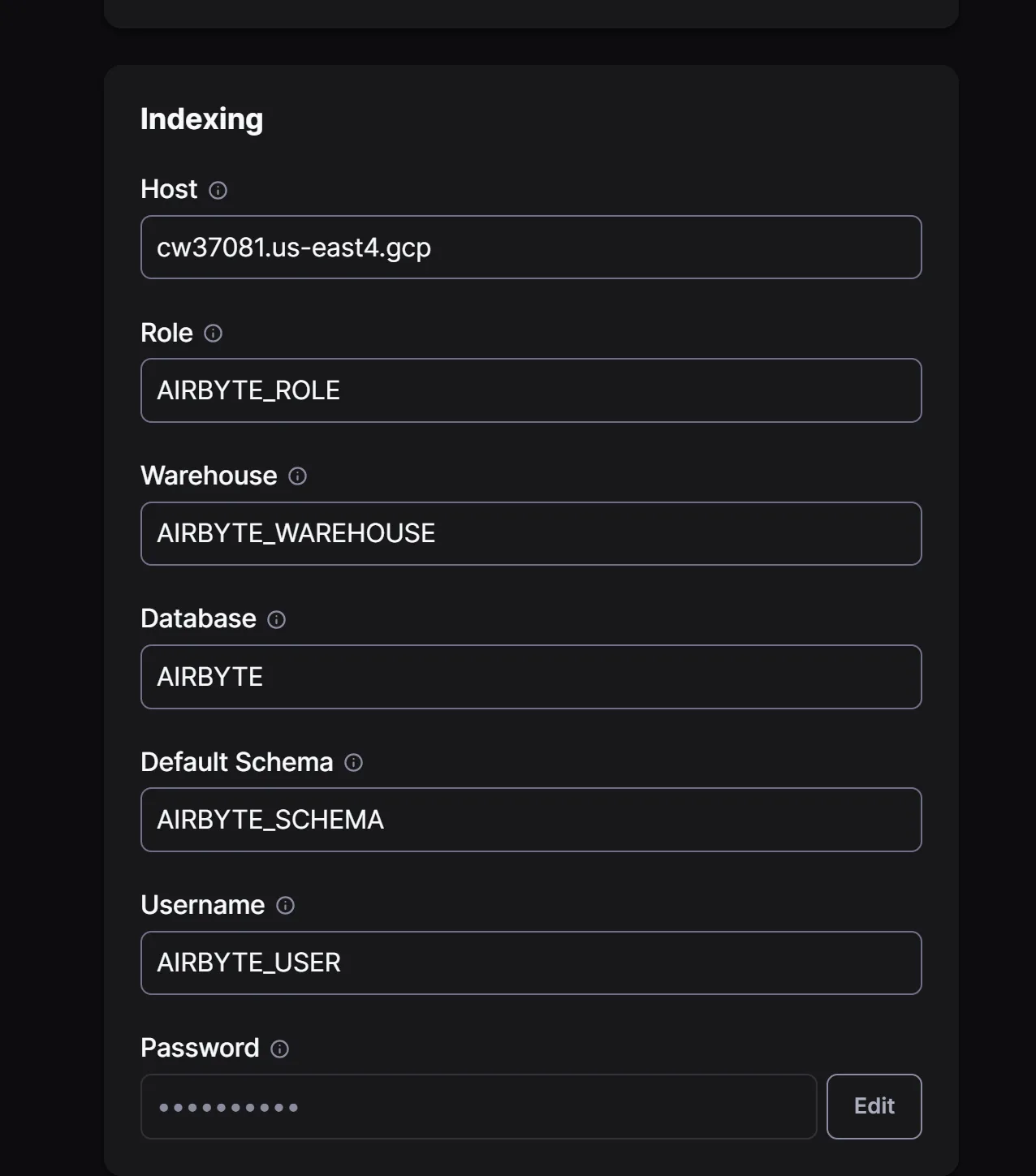

To set up a Snowflake instance, you need to create the required entities (warehouse, database, schema, user, and role) in the Snowflake console, as explained in this documentation.

Basically, run the following worksheet in the Snowflake console (ensure that you run all statements).

This will spin up a database, ready to store your data. Move back to the Airbyte Cloud destination connectors and set up Snowflake Cortex. Make sure to set up your credentials in the following format, based on the script above. Finally, test the destination to make sure it's working as expected.

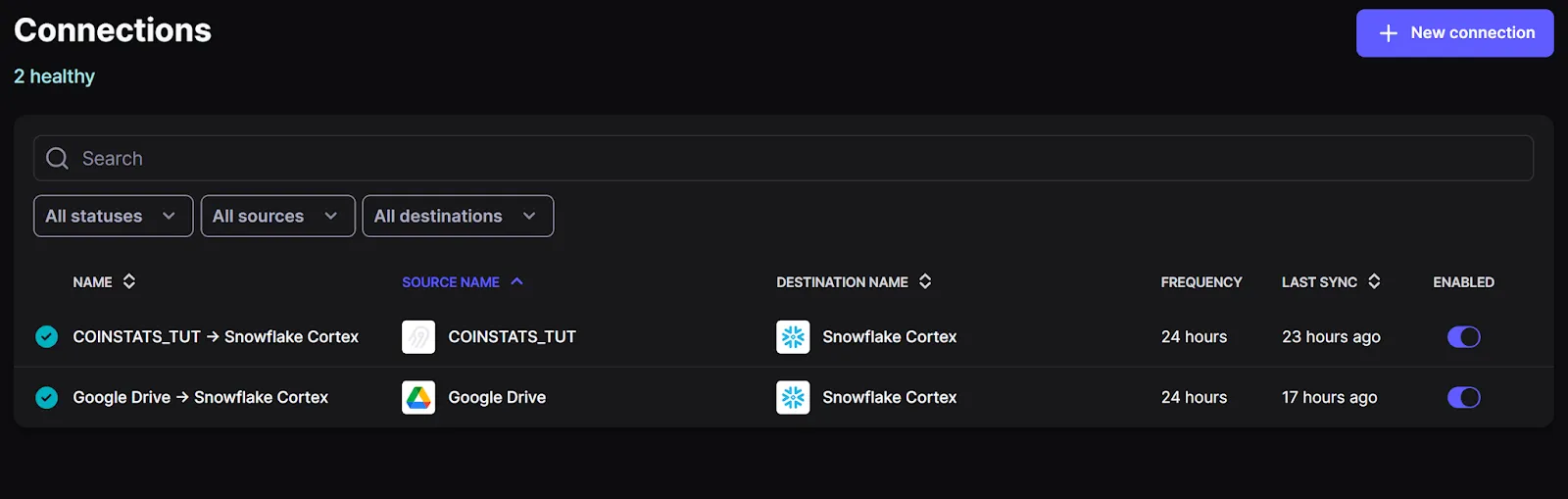

Next, we create a connection and sync the data so that we can access it in the Snowflake instance. Here is an example of successful connections after a sync:

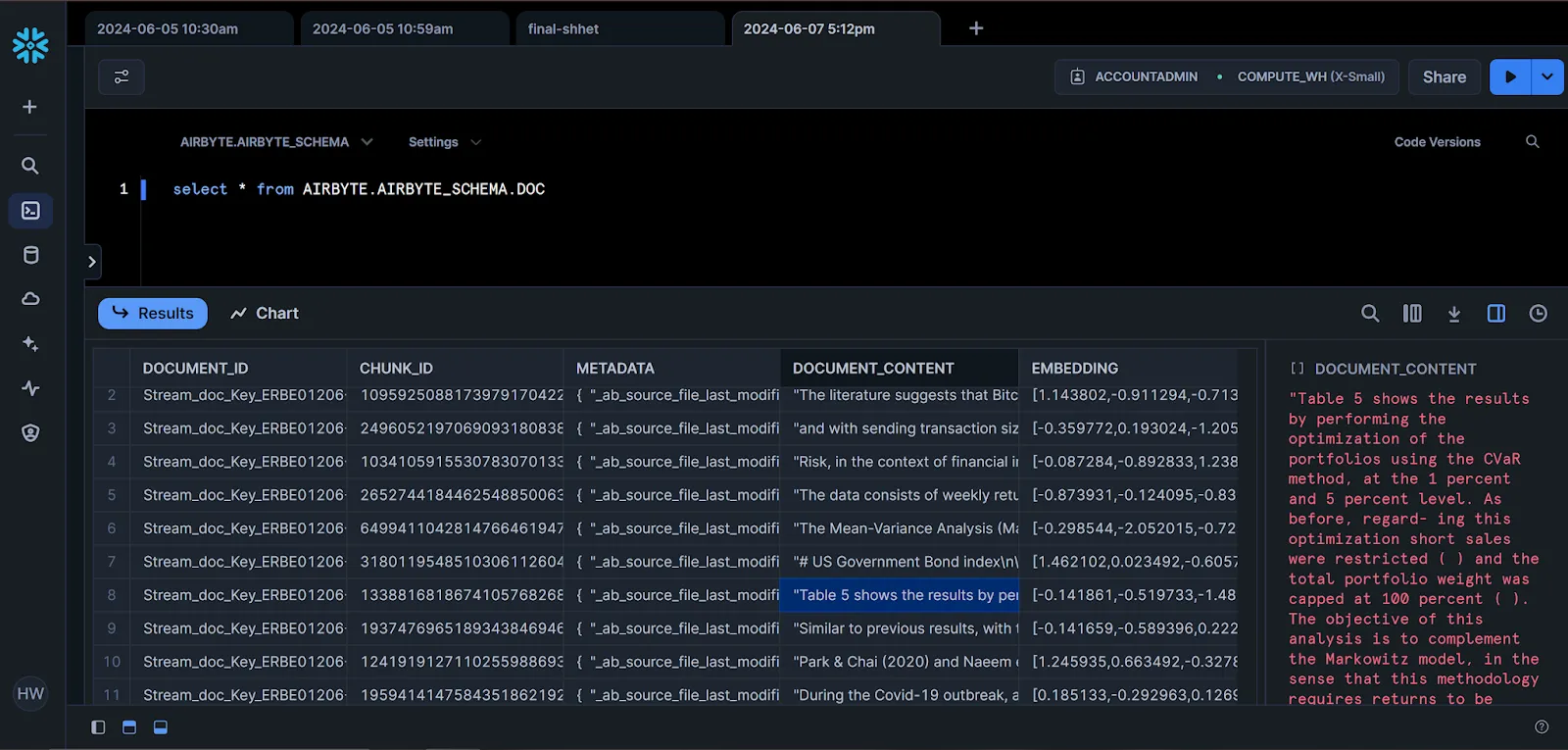

At this point you should be able to see the data in Snowflake. Each table in your database will have the following columns:

Here is a snippet of how one of my results appears.

RAG heavily relies on semantic comparison techniques. The measurement of similarity between vectors is a fundamental operation in semantic comparison. This operation is used to find the top N closest vectors to a query vector, which can be used for semantic search. Vector search also enables developers to improve the accuracy of their generative AI responses by providing related documents to a large language model.
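To make the "top N closest vectors" idea concrete, here is a minimal pure-Python sketch of the operation. The helper names (`cosine_similarity`, `top_n`), the sample chunks, and the 3-dimensional vectors are all illustrative assumptions; in the actual pipeline the embeddings come from an embedding model and the search runs inside Snowflake.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_n(query_vec, chunks, n=2):
    """Return the n (text, score) pairs most similar to query_vec."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in chunks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:n]

# Toy 3-dimensional "embeddings" (hypothetical values);
# real embedding models produce hundreds of dimensions.
chunks = [
    ("Bitcoin price volatility", [0.9, 0.1, 0.0]),
    ("Storing coins in a wallet", [0.1, 0.9, 0.1]),
    ("Weather forecast", [0.0, 0.1, 0.9]),
]

# Stands in for an embedded question about price risk.
query = [0.8, 0.2, 0.0]

results = top_n(query, chunks, n=2)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

The chunks returned by a search like this are what gets handed to the LLM as context, which is why retrieval quality directly affects answer quality.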

The key elements in the RAG process are:

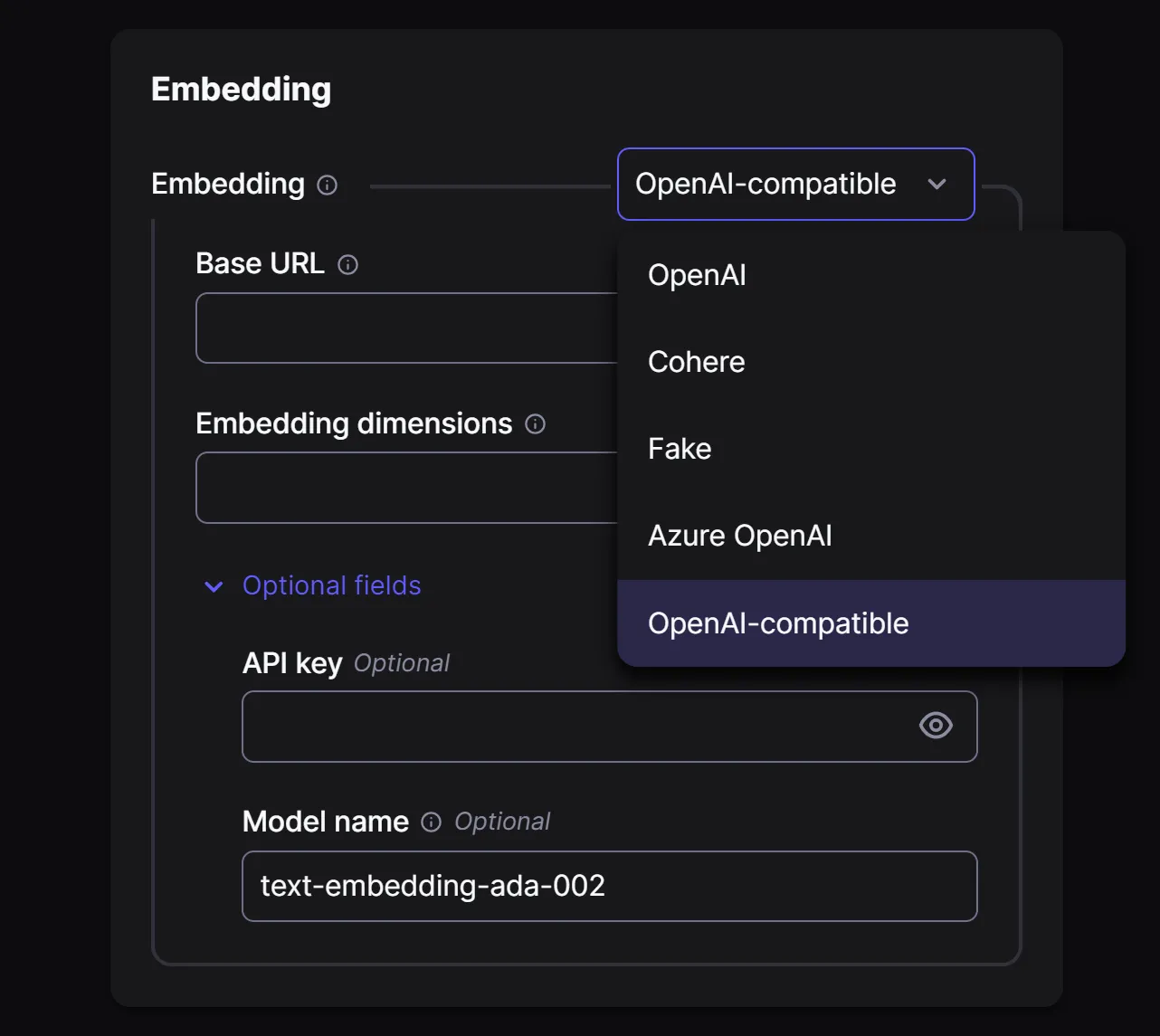

You can embed the data using OpenAI, Cohere, an OpenAI-compatible model, or Fake (selected in the Airbyte Cloud UI). You then have to embed the question using the method that matches the model you chose, since each model has its own.

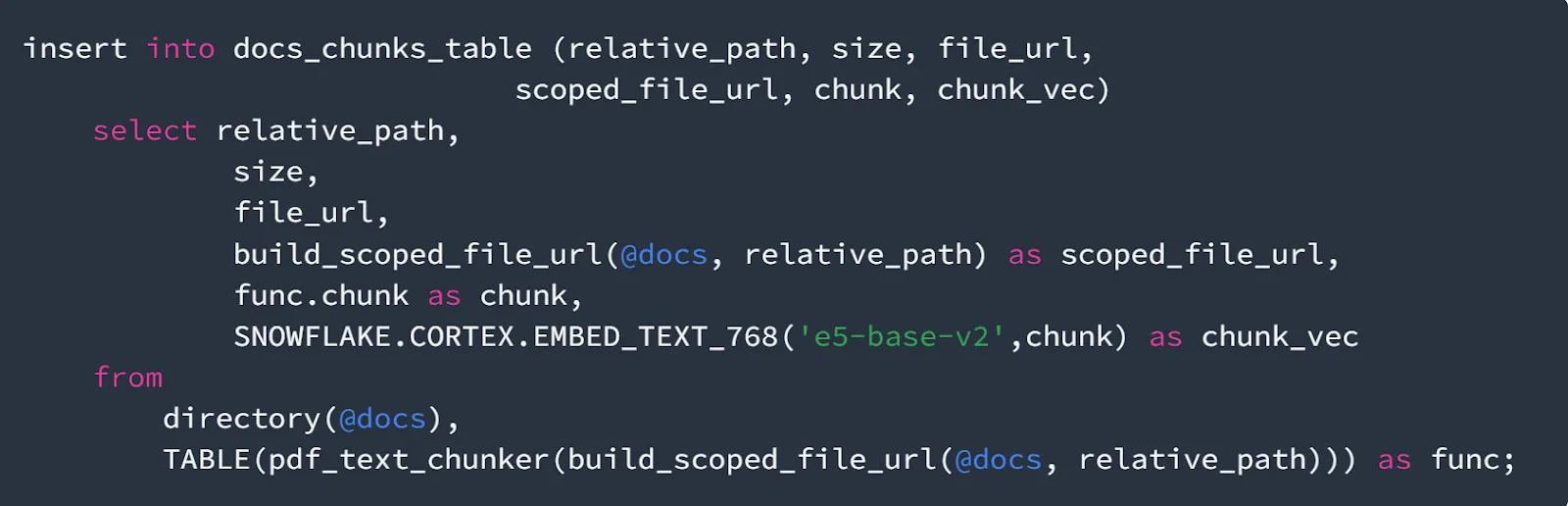

If you use Fake to embed the data, you will need to replace the fake embedding in Snowflake with the Snowflake Cortex embedding.

You can use the following functions to embed data instantly on Snowflake Cortex:

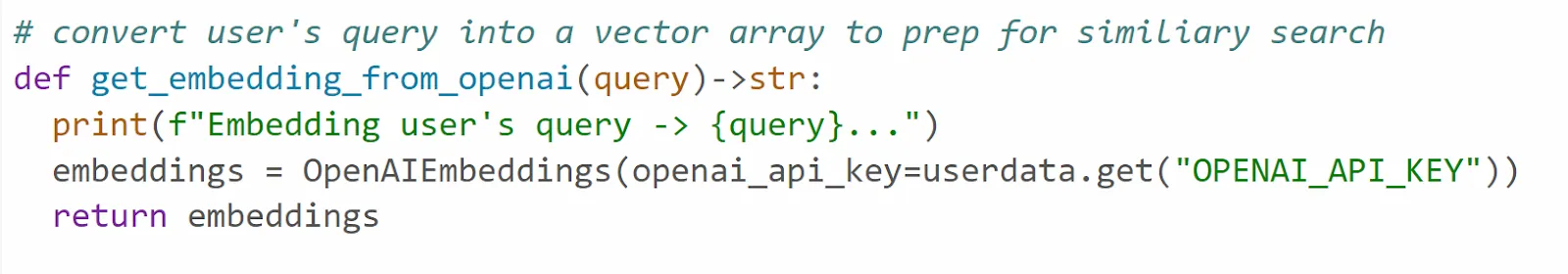

If you use OpenAI as the data embedding model, you will generate the embeddings using the OpenAI embedding function.

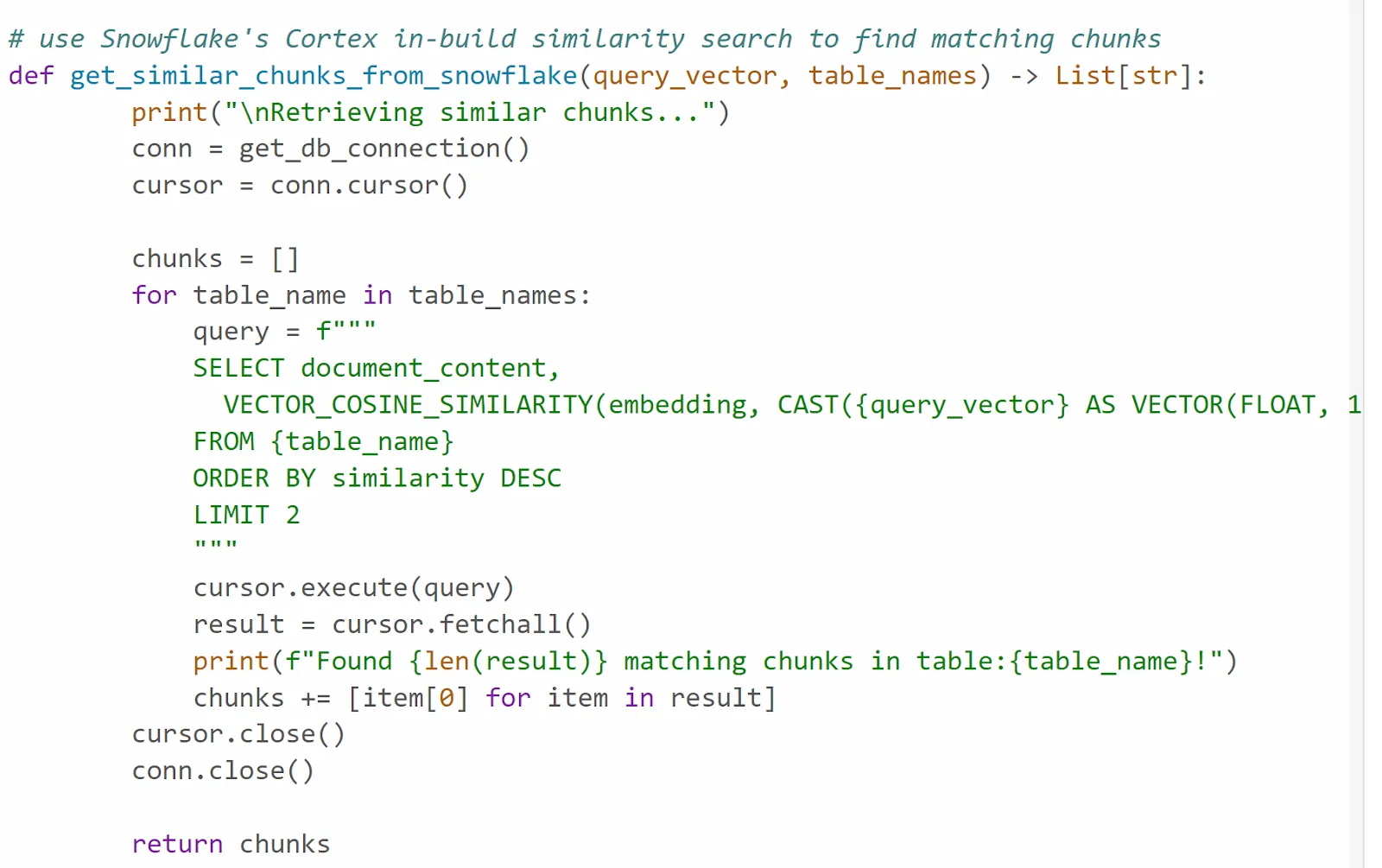

Snowflake Cortex provides three vector similarity functions:

VECTOR_INNER_PRODUCT

VECTOR_L2_DISTANCE

VECTOR_COSINE_SIMILARITY: We will use this function in our demo.
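To see how cosine similarity differs from the other two measures Snowflake offers (inner product and L2 distance), here is a small Python sketch that computes all three on the same pair of vectors. The function names and sample vectors are illustrative assumptions and only mirror the underlying math, not Snowflake's implementation.

```python
import math

def inner_product(a, b):
    """Sum of element-wise products; grows with vector magnitude."""
    return sum(x * y for x, y in zip(a, b))

def l2_distance(a, b):
    """Euclidean distance; 0 means the vectors are identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Inner product of the normalized vectors; depends on direction only."""
    return inner_product(a, b) / (
        math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    )

query = [1.0, 2.0]
doc = [2.0, 4.0]  # same direction as query, twice the length

ip = inner_product(query, doc)
l2 = l2_distance(query, doc)
cos = cosine_similarity(query, doc)

print(f"inner product:     {ip:.3f}")
print(f"L2 distance:       {l2:.3f}")
print(f"cosine similarity: {cos:.3f}")
```

Because `doc` points in the same direction as `query`, the cosine similarity is at its maximum even though the L2 distance is not zero. Ignoring magnitude in this way is a common reason to prefer cosine similarity when comparing text embeddings.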

Learn how to manage privileges in Snowflake so that you can use Cortex functions such as COMPLETE here.

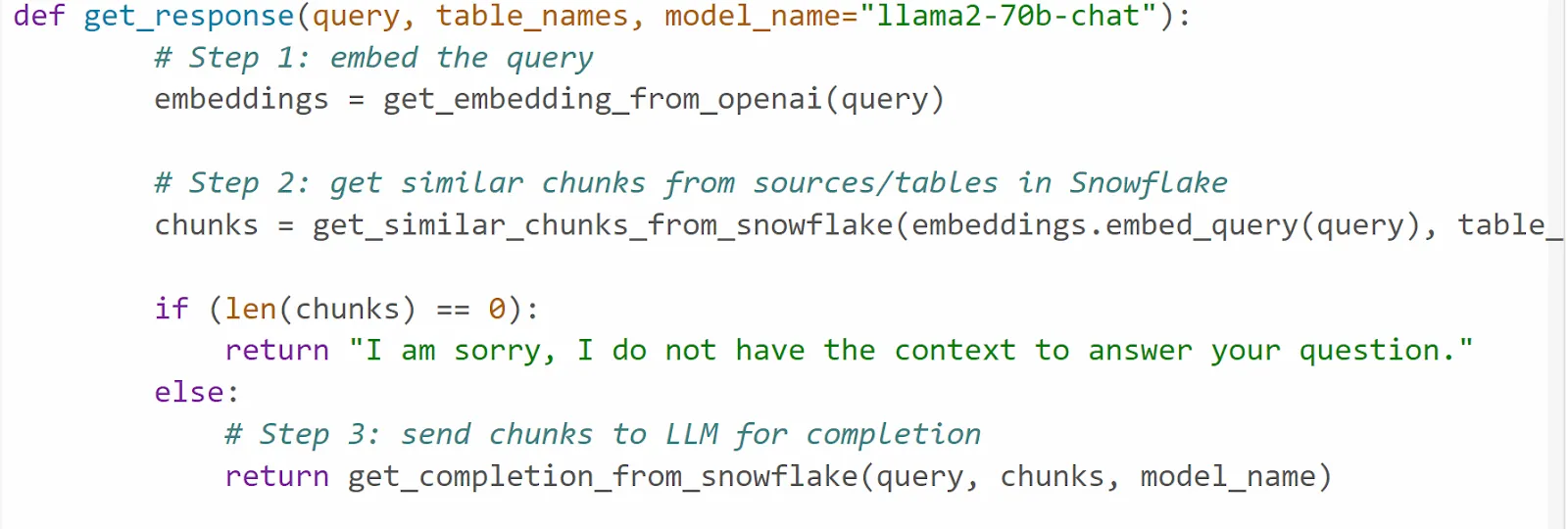

Finally, get the response.
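Getting a response means combining the retrieved chunks with the question into one prompt and sending that prompt to the LLM (for example, through the Cortex COMPLETE function mentioned above). The sketch below shows only the prompt-assembly step; `build_prompt`, the prompt wording, and the sample chunks are hypothetical and are not taken from the original notebook.

```python
def build_prompt(question, context_chunks):
    """Combine retrieved chunks and the user's question into a single prompt."""
    # Number each chunk so the model (and the reader) can tell them apart.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical chunks, standing in for the top results of the similarity search.
retrieved = ["Bitcoin is volatile.", "Diversify holdings."]
prompt = build_prompt("Is Bitcoin risky?", retrieved)
print(prompt)
```

Instructing the model to rely only on the supplied context is one common way to reduce hallucination, which is the problem RAG is meant to address.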

To gain a better understanding of the logic, see the following resources: the Google Colab notebook, which uses OpenAI embeddings, and the codelab, which uses a fake model.

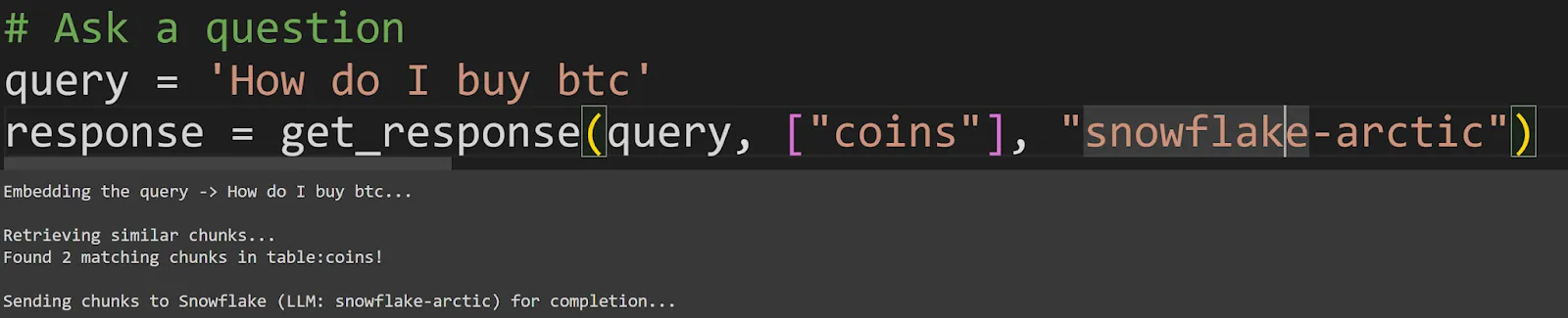

Demo of our crypto advisor RAG

This tutorial provides a step-by-step guide to performing RAG on Airbyte data loaded into Snowflake, using Snowflake Cortex and an LLM. As we saw in our demo, measuring the similarity between vectors is a fundamental operation in semantic comparison. By following the tutorial, you can put your own data to work and gain high-quality insights.
