
Learn how to build an end-to-end RAG pipeline: extract data from Microsoft SharePoint using Airbyte Cloud, load it into Milvus (Zilliz), and then use LangChain to perform RAG on the stored data.


LLMs tend to hallucinate when they lack sufficient data. Retrieval-augmented generation (RAG) addresses this by supplying the LLM with additional context and more recent data. In this tutorial, I will demonstrate step by step how to pull data from Microsoft SharePoint, build a pipeline from scratch with Airbyte Cloud, and load the data into Zilliz, a vector database. On top of this database, we will build a RAG agent using LangChain, an official Zilliz partner, and an OpenAI LLM. The agent answers questions using the content of the document we loaded into Microsoft SharePoint.

Before setting up SharePoint as a source in Airbyte, you need to load a document into a folder of your choice in your SharePoint account. In your SharePoint drive, create a folder and add a document to it. Then note the tenant ID of your app, the drive name, and the folder path; you will need them to configure the connection in Airbyte.

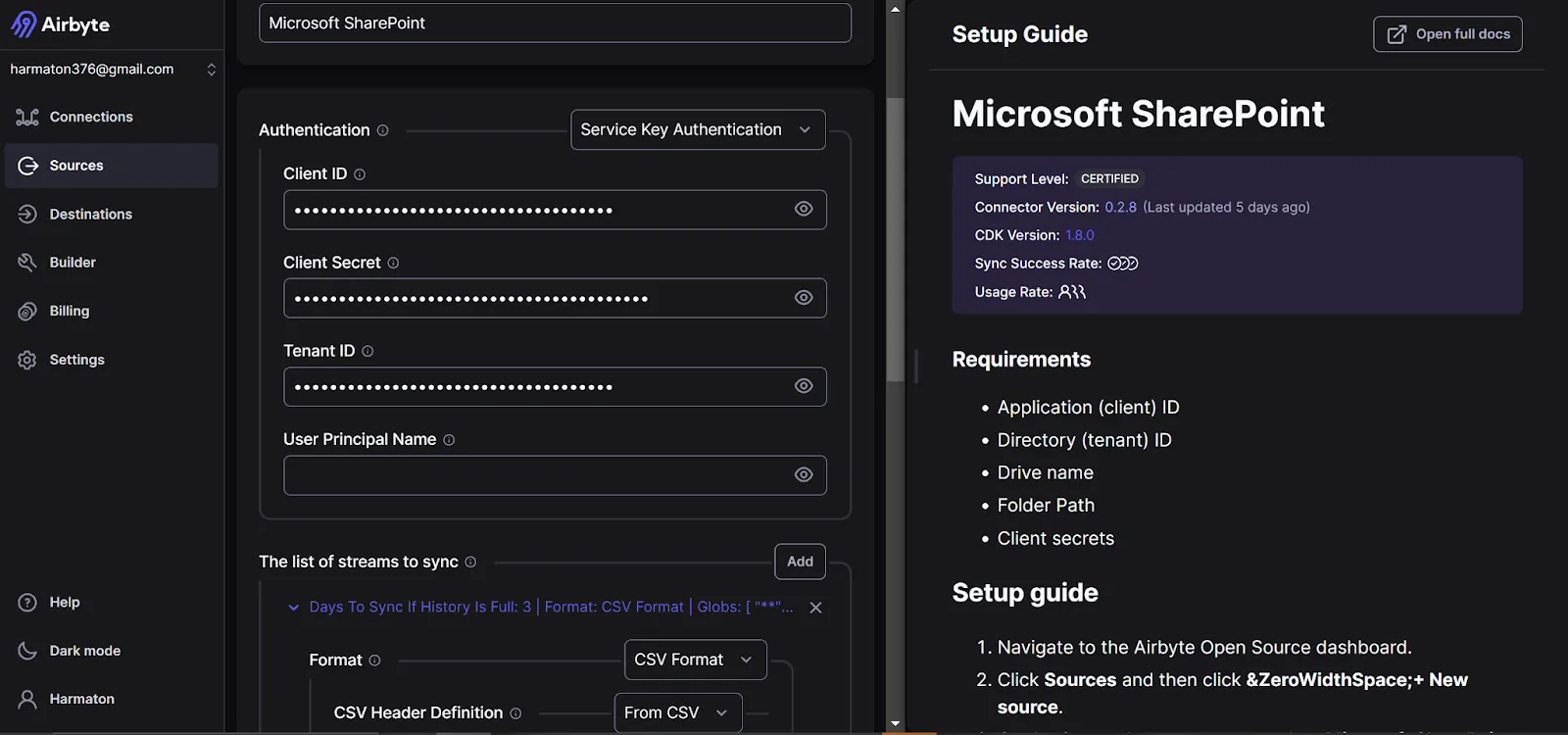

Navigate to the Airbyte Cloud dashboard, click Sources, then select Microsoft SharePoint from the source options. You will need the tenant ID, the drive name, and the folder path of your SharePoint folder, along with your app credentials.

For this tutorial, we are using a CSV test document, so we will create a stream with the CSV format, as shown below. Feel free to set up a format of your choice.

Alternatively, you can use OAuth, which authenticates you directly from your PC without requiring you to set up the credentials manually.

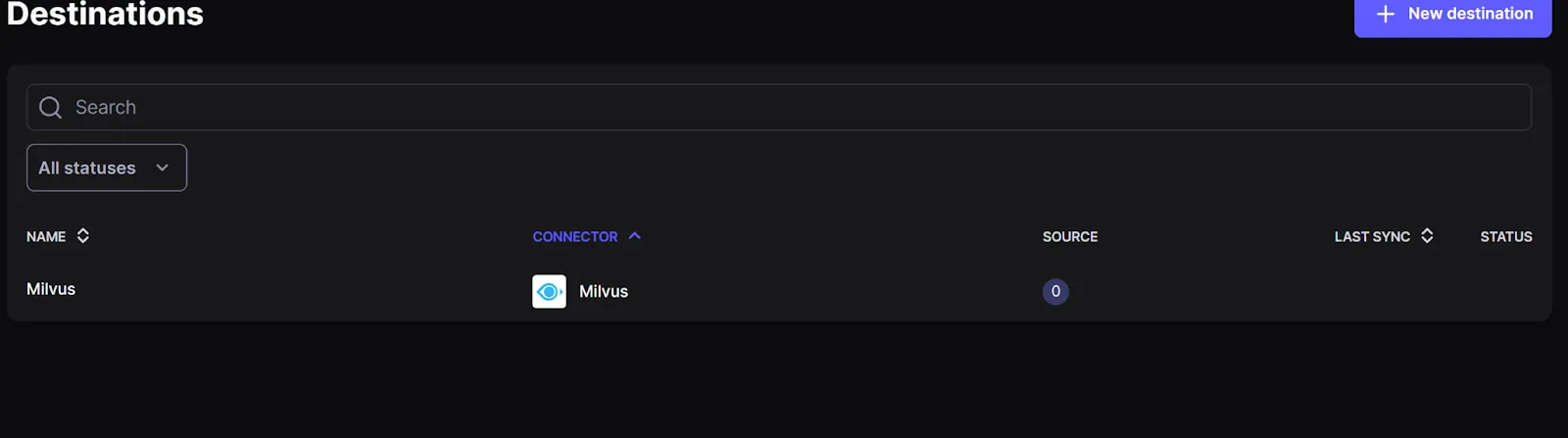

Once the source is successfully connected, go ahead and set up the Milvus destination connector.

To get started with Zilliz, create a new cluster and a collection within it. Then navigate to the API Keys page and copy the API key to paste into Airbyte.


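Configuring the destination involves three steps: processing, embedding, and indexing. For processing, set the chunk size to 512. For embedding, this tutorial uses OpenAI's text-embedding-ada-002 model, which requires an OpenAI API token. For indexing, you need your Zilliz API token, your instance endpoint URL, and the name of the collection to load data into.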
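To make the chunk size setting concrete, here is a small illustration of what splitting a document into chunks of at most 512 tokens looks like. Airbyte performs the chunking for you during the sync; this sketch is not Airbyte's implementation, and it assumes whitespace-separated words as a stand-in for the embedding model's real tokenizer. The function name `chunk_tokens` is hypothetical.

```python
def chunk_tokens(text: str, chunk_size: int = 512) -> list[str]:
    """Split text into chunks of at most `chunk_size` whitespace-separated tokens.

    Assumption: words approximate tokens. A real pipeline would count tokens
    with the embedding model's tokenizer instead.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    tokens = text.split()
    return [
        " ".join(tokens[start:start + chunk_size])
        for start in range(0, len(tokens), chunk_size)
    ]
```

Each chunk is embedded and stored as its own vector, so a smaller chunk size gives more precise matches at the cost of less context per retrieved passage.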

After testing the connection, navigate to Connections in the Airbyte dashboard and sync the connection from the Microsoft SharePoint source to the Zilliz vector database.

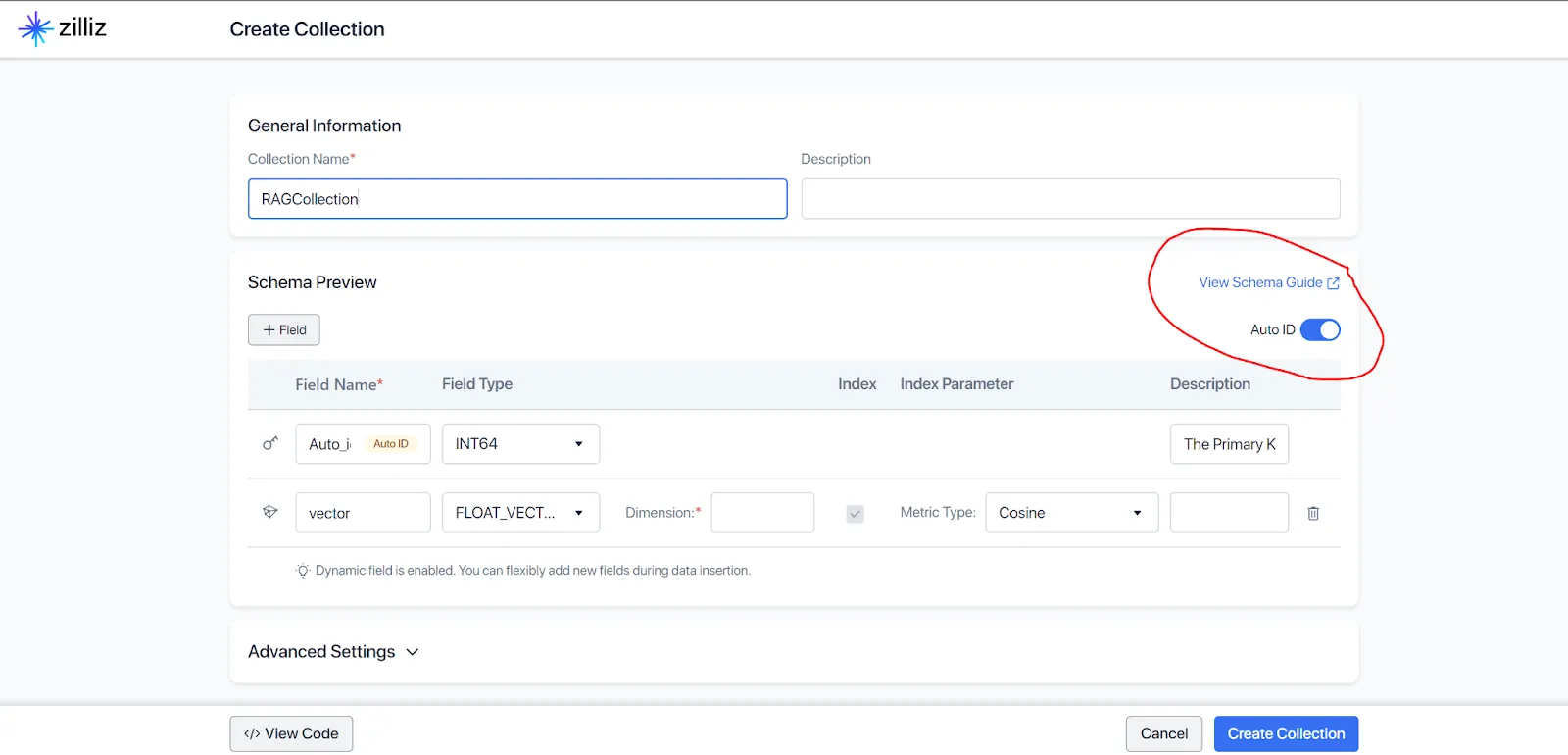

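Make sure that the collection's primary key has auto_id enabled.

Set the metric type to Cosine and the dimension to 1536, which is the length of the vectors produced by text-embedding-ada-002. The collection dimension must match the embedding length, otherwise the vectors cannot be inserted.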
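The same collection settings can also be applied programmatically instead of through the Zilliz console. The following is a minimal sketch using the `pymilvus` client, not the method used in this tutorial. The helper names, the `EMBEDDING_DIMENSIONS` table, and the collection name in the usage note are hypothetical; 1536 is the output length of text-embedding-ada-002.

```python
# Output length of each embedding model; the collection dimension must match it.
EMBEDDING_DIMENSIONS = {"text-embedding-ada-002": 1536}


def collection_settings(name: str, embedding_model: str = "text-embedding-ada-002") -> dict:
    """Build the keyword arguments for MilvusClient.create_collection."""
    return {
        "collection_name": name,
        "dimension": EMBEDDING_DIMENSIONS[embedding_model],
        "metric_type": "COSINE",  # cosine similarity, as chosen in the console
        "auto_id": True,          # let the database generate primary keys
    }


def create_collection(uri: str, token: str, name: str) -> None:
    """Create the collection on the cluster at `uri` using the given API key."""
    # Imported lazily so collection_settings works without pymilvus installed.
    from pymilvus import MilvusClient

    client = MilvusClient(uri=uri, token=token)
    client.create_collection(**collection_settings(name))
```

You would call it as `create_collection("<your instance endpoint URL>", "<your Zilliz API key>", "sharepoint_docs")`, replacing the placeholders with your own values.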

Once the destination is set up successfully, we can sync the connection from the Microsoft SharePoint source.

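To initialize a LangChain RAG implementation on top of the indexed data, we point a LangChain vector store at the Zilliz collection, retrieve the chunks most similar to each question, and pass them to the OpenAI LLM as context.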
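A minimal sketch of this setup follows, assuming the `langchain-milvus` and `langchain-openai` packages are installed and `OPENAI_API_KEY` is set. Several names here are assumptions rather than details from this tutorial: the environment variables `ZILLIZ_ENDPOINT`, `ZILLIZ_API_KEY`, and `ZILLIZ_COLLECTION`, the field names `text` and `vector` (check your destination's indexing settings), the chat model `gpt-4o-mini`, and the helper names `build_prompt` and `answer`.

```python
import os

PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say so.\n\n"
    "Context:\n{context}\n\nQuestion: {question}"
)


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n\n".join(passages)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def answer(question: str, k: int = 4) -> str:
    """Retrieve the k most similar chunks from Zilliz and ask the LLM."""
    # Third-party imports are local so build_prompt stays usable on its own.
    from langchain_milvus import Milvus
    from langchain_openai import ChatOpenAI, OpenAIEmbeddings

    # Use the same embedding model as the Airbyte destination so that query
    # vectors are comparable with the stored vectors.
    embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")
    vector_store = Milvus(
        embedding_function=embeddings,
        collection_name=os.environ["ZILLIZ_COLLECTION"],
        connection_args={
            "uri": os.environ["ZILLIZ_ENDPOINT"],
            "token": os.environ["ZILLIZ_API_KEY"],
        },
        text_field="text",      # assumed name of the field holding chunk text
        vector_field="vector",  # assumed name of the field holding embeddings
    )
    retriever = vector_store.as_retriever(search_kwargs={"k": k})
    documents = retriever.invoke(question)
    prompt = build_prompt(question, [doc.page_content for doc in documents])
    llm = ChatOpenAI(model="gpt-4o-mini")  # assumed model; any chat model works
    return llm.invoke(prompt).content
```

Calling `answer("...")` with a question about your CSV document should return a response grounded in the rows that were synced from SharePoint. Restricting the prompt to the retrieved context is a design choice that keeps the model from answering out of its own training data.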

This tutorial walked you step by step through loading data into Microsoft SharePoint and streaming it into the Zilliz vector database, which stores the indexed data for on-the-fly similarity search. We then used LangChain to build a retrieval-augmented generation workflow on top of that indexed data.
