SQL vs. Python: A Comparative Analysis for Data

Summarize with Perplexity

Data professionals spend 90% of their time cleaning dirty data instead of driving business insights, while 64% cite technical debt as their primary frustration and 92% of executives struggle to scale AI initiatives. This isn't just inefficiency—it represents a fundamental disconnect between the analytical tools we use and the complex, AI-driven workflows modern organizations demand. The traditional SQL versus Python debate has evolved beyond simple performance comparisons into a strategic decision about how to build scalable, intelligent data infrastructure that can handle everything from real-time IoT streams to vector-based semantic search.

Rather than forcing you to choose sides in an outdated binary debate, this analysis examines how SQL and Python have transformed to address these modern challenges, when each language delivers optimal results, and how emerging hybrid approaches are reshaping data analysis workflows for the AI era.

Data Processing Evolution: From Warehouses to Lakes & Back

A decade ago, the data industry struggled with scaling data warehouses. The solution that emerged was to take data out of the warehouse and transform it in memory with programming languages like Python and Java, using tools such as Hadoop, Spark, and Dask. This shift was accompanied by a move from data warehouses to data lakes.

Modern data warehouses have largely removed the scalability problem, and we're now seeing a reverse trend: transforming data within warehouses again. This change has been driven by dbt (data build tool), which fixes several limitations of SQL by enabling modular SQL, enforcing Git-based version control, and adding model testing.

The traditional arguments against SQL in the SQL-vs-Python debate are fading. The clean division of labor—SQL for querying and consolidation, Python for complex transformations—is blurring. For example, dask-sql lets you mix SQL operations and Python code.

Cloud-native implementations have fundamentally changed performance characteristics, with distributed SQL engines now handling petabyte-scale analytics while maintaining sub-second response times. This infrastructure evolution enables real-time decision making across IoT networks, financial trading systems, and customer-facing applications where latency directly impacts business outcomes.

What Are the Core Strengths That Make SQL Essential for Modern Data Operations?

Structured Query Language (SQL) is a cornerstone in data management, renowned for its efficiency in querying and manipulating structured data stored in relational databases. SQL excels at data retrieval, filtering, aggregation, and joining, making it indispensable for handling large datasets with complex relationships.

Declarative Syntax and Performance Optimization

SQL's declarative nature simplifies data-manipulation tasks, making it accessible to both novices and experts while enabling sophisticated query optimization. Modern SQL engines leverage cost-based optimizers that automatically restructure complex joins and aggregations, often delivering performance improvements that manual optimization cannot match. Database systems can execute SQL queries directly where data resides, eliminating network overhead and memory limitations that plague alternative approaches.

Cloud data warehouses have enhanced SQL's capabilities through automatic scaling, columnar storage optimization, and intelligent caching mechanisms. These improvements enable SQL to process billion-row datasets with response times measured in seconds rather than minutes, supporting real-time analytics requirements across industries from financial trading to supply chain management.

Enterprise-Grade Governance and Security

SQL databases provide built-in access controls, audit trails, and data lineage tracking that enterprise organizations require for compliance and security. Role-based permissions, column-level security, and automated encryption ensure that sensitive data remains protected while enabling self-service analytics. This governance framework becomes critical when dealing with regulated data across healthcare, financial services, and government sectors.

The declarative nature of SQL also enables automated policy enforcement and consistent data definitions across organizational boundaries. Data catalogs can parse SQL queries to understand data dependencies, while governance tools can automatically apply data quality rules and privacy protections without requiring manual intervention from data engineering teams.

Structured Data Processing Excellence

SQL remains unmatched for operations involving structured data relationships, complex joins across multiple tables, and analytical functions like window operations and hierarchical queries. The language's mathematical foundation in relational algebra enables query engines to apply sophisticated optimizations that dramatically improve performance for typical business intelligence and reporting workloads.

Modern SQL implementations support advanced analytical functions including recursive CTEs for hierarchical data, JSON processing capabilities for semi-structured content, and statistical functions that rival specialized analytics tools. These features enable comprehensive data analysis within the database environment without requiring data movement to external processing systems.

SQL Limitations in Contemporary Data Workflows

SQL focuses on structured data operations, so advanced analytics like machine learning model training, statistical modeling, and custom algorithmic development often require external tools or specialized extensions. While SQL can handle many analytical tasks, complex iterative processes and custom business logic implementation remain challenging within the constraints of declarative query syntax.

The rigid structure of traditional SQL can make working with semi-structured or unstructured data cumbersome, often necessitating extensive preprocessing or schema evolution processes. Although modern SQL dialects support JSON and XML processing, the development experience for complex data transformations lacks the flexibility that procedural programming languages provide for custom business logic implementation.

How Does Python Enable Advanced Analytics and Flexible Data Processing?

Python, a general-purpose programming language, has exploded in popularity within the data-science community thanks to its rich ecosystem—libraries such as Pandas, NumPy, Matplotlib, scikit-learn, and more.

Comprehensive Library Ecosystem for Data Science

Python's ecosystem spans data manipulation through Pandas and Polars, statistical computing via NumPy and SciPy, machine learning with scikit-learn and TensorFlow, and visualization through Matplotlib and Plotly. This breadth enables end-to-end analytical workflows within a single programming environment, from data ingestion through model deployment and monitoring.

Recent developments in the Python data ecosystem have addressed historical performance limitations through libraries like Polars, which utilizes Rust-based parallel processing to handle billion-row datasets efficiently. DuckDB integration enables SQL-like performance for analytical operations while maintaining Python's flexibility for custom transformations and business logic implementation.

Advanced Analytics and Machine Learning Capabilities

Python supports predictive modeling, clustering, natural language processing, computer vision, and other sophisticated techniques that extend far beyond SQL's analytical scope. The language provides direct integration with cloud-based AI services, enabling developers to incorporate large language models, image recognition, and speech processing into analytical workflows without complex API management.

Machine learning model development, training, and deployment workflows are deeply integrated into Python's ecosystem through MLOps tools like MLflow, Weights & Biases, and Kubeflow. These capabilities enable data scientists to build production-ready analytical systems that continuously learn and adapt to changing business conditions.

Flexibility for Custom Business Logic

Python's procedural nature enables complex data transformations, iterative analysis, and custom business rule implementation that would be verbose or impossible to express in SQL. The language supports object-oriented programming for building reusable analytical components, functional programming for data pipeline construction, and integration with external systems through comprehensive API libraries.

This flexibility proves essential when dealing with complex data quality rules, custom aggregation logic, or business-specific calculations that require conditional processing, external data enrichment, or real-time decision making based on multiple data sources and business rules.

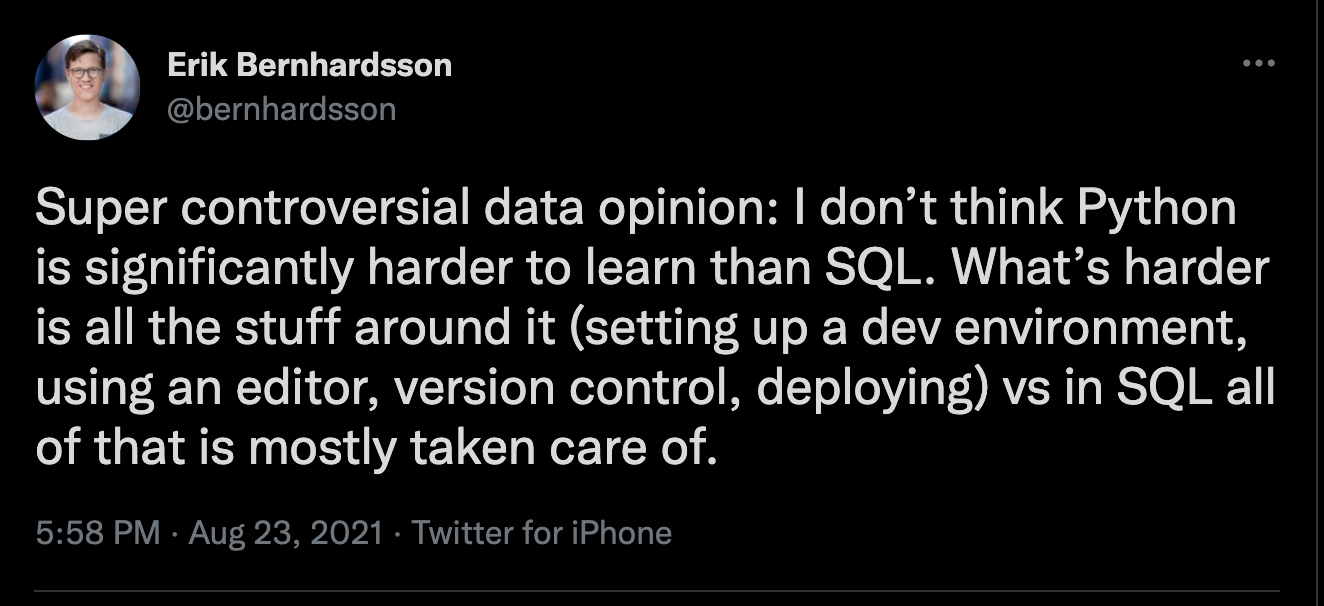

Python's Performance and Learning Considerations

Users transitioning from SQL or other languages must invest time learning Python syntax, library-specific APIs, and data science best practices. The learning curve extends beyond basic programming to understanding statistical concepts, machine learning principles, and software engineering practices for building maintainable analytical systems.

Some Python operations incur higher computational overhead than in-database SQL processing, particularly when large datasets must be moved into local memory for processing. However, distributed computing frameworks like Dask and cloud-based solutions increasingly mitigate these performance concerns while preserving Python's flexibility advantages.

What Are the Key Performance and Functionality Differences Between Python vs SQL?

The main difference between SQL and Python for data analysis is that SQL is a domain-specific language for querying and managing structured data in databases, whereas Python is a general-purpose language offering extensive libraries for advanced analytics, machine learning, and automation.

Performance Characteristics Across Different Workloads

Running SQL directly in a data warehouse usually outperforms Python for basic retrieval and aggregation because computation happens next to the data, eliminating network transfer overhead and leveraging database-specific optimizations. SQL engines utilize columnar storage, predicate pushdown, and parallel processing to deliver sub-second response times for complex aggregations across billion-row datasets.

Performance varies significantly with the SQL engine and the Python technology stack. Modern distributed computing solutions like Dask, Ray, and Polars can achieve comparable or superior performance for certain workloads by leveraging parallel processing and memory-efficient algorithms. Cloud-optimized SQL solutions maintain advantages for structured data aggregation, while Python excels in iterative processing and custom transformation logic.

The performance equation changes dramatically with workload characteristics. SQL dominates for set-based operations, complex joins, and analytical functions, while Python provides superior performance for iterative algorithms, machine learning model training, and custom business logic that requires procedural processing patterns.

See the Databricks and Snowflake benchmarking discussions here and here for context.

Functional Capabilities and Analytical Scope

While SQL can be faster for basic operations, its declarative nature becomes limiting for complex analytical workflows. Writing iterative exploratory analysis, machine learning pipelines, or custom business logic purely in SQL quickly becomes verbose and difficult to maintain, especially when requirements involve conditional processing or external system integration.

Python's functional advantages extend beyond analytics to include web scraping, API integration, automated reporting, and real-time data processing. The language supports end-to-end analytical workflows from data collection through model deployment, enabling data scientists to build comprehensive solutions within a single development environment.

The SQL ecosystem lacks comprehensive package management compared to Python's PyPI repository containing hundreds of thousands of specialized libraries. While dbt has introduced modular SQL development practices, the ecosystem remains limited compared to Python's extensive library ecosystem for specialized analytical tasks, API integrations, and custom business logic implementation.

Developer Experience and Productivity Factors

Testing and Quality Assurance

Python supports comprehensive unit testing frameworks that enable fine-grained validation across entire analytical pipelines. Libraries like chispa for PySpark and beavis for Dask provide specialized testing capabilities for distributed data processing, while pytest enables sophisticated test fixture management and automated quality assurance workflows.

# Example: testing PySpark transformations with chispa

from chispa.column_comparer import assert_column_equality

import pyspark.sql.functions as F

def remove_non_word_characters(col):

return F.regexp_replace(col, r"[^\w\s]+", "")

def test_remove_non_word_characters():

data = [("matt7", "matt"), ("bill&", "bill"), ("isabela*", "isabela"), (None, None)]

df = (spark.createDataFrame(data, ["name", "expected"])

.withColumn("clean", remove_non_word_characters(F.col("name"))))

assert_column_equality(df, "clean", "expected")

Traditional SQL environments provide limited testing capabilities beyond dbt's model-level validation. While dbt has improved SQL testing practices significantly, the granularity and sophistication of testing frameworks remain limited compared to Python's comprehensive testing ecosystem and continuous integration capabilities.

Debugging and Development Workflow

Python development environments support interactive debugging through breakpoints, variable inspection, and step-by-step code execution. Jupyter notebooks enable iterative exploration with immediate feedback, while IDEs provide sophisticated code completion, error detection, and refactoring capabilities that accelerate development productivity.

SQL debugging typically relies on rewriting queries, adding Common Table Expressions for intermediate result inspection, or using database-specific profiling tools. While modern SQL IDEs have improved the development experience, the debugging capabilities remain limited compared to full-featured programming language environments.

Version Control and Collaboration

Python's modular structure through functions, classes, and modules creates cleaner version control histories with meaningful commit diffs. The language's ecosystem includes sophisticated dependency management, environment isolation, and collaborative development practices that support team-based analytical projects.

While dbt has brought SQL development into modern version control practices, large nested queries still generate noisy diffs that complicate code review and collaboration processes. Python's natural modularity better supports collaborative development and code reuse across analytical projects.

How Can AI-Integrated Database Architectures Transform Python vs SQL Workflows?

Modern database systems are evolving beyond traditional structured data processing to incorporate artificial intelligence capabilities directly within database engines. This transformation fundamentally changes how organizations approach the python vs sql decision by enabling sophisticated AI operations through familiar SQL interfaces while maintaining Python's flexibility for custom model development and deployment.

Vector Processing and Semantic Search Integration

SQL Server 2025 introduces native vector data types and DiskANN indexing, enabling high-dimensional similarity searches directly within relational databases without requiring external vector databases. This advancement allows semantic querying of unstructured content like images, documents, and media using cosine distance calculations while maintaining ACID compliance and familiar SQL syntax.

Organizations can now implement Retrieval Augmented Generation architectures entirely within database environments, combining structured business data with semantic document search through T-SQL queries. This approach reduces latency by eliminating data movement between systems while providing governance controls that traditional vector databases lack.

The integration extends to cross-modal retrieval scenarios where SQL queries can match textual descriptions to product images, find similar customer behavior patterns through embedding comparisons, or identify relevant documents based on semantic similarity rather than keyword matching. These capabilities transform databases from simple storage systems into intelligent information retrieval engines.

AI Model Management Within Database Systems

SQL Server 2025's REST API integration enables direct T-SQL interaction with AI models through stored procedures, allowing dynamic switching between embedding models, version-controlled model deployment, and secure isolation of runtime environments from database engines. This architectural approach eliminates traditional ETL overhead while maintaining enterprise governance requirements.

Data professionals can implement complete machine learning pipelines using familiar SQL syntax, from text chunking and embedding generation through model inference and result storage. The approach supports popular AI services including Azure OpenAI, local Ollama deployments, and open-source models while providing consistent interfaces across different AI providers.

The integration enables real-time AI inference within transactional systems, allowing businesses to incorporate recommendations, sentiment analysis, and predictive insights directly into operational workflows without building separate analytical infrastructure or managing complex data synchronization processes.

Hybrid SQL-Python AI Development Patterns

Modern AI development increasingly combines SQL's data management strengths with Python's model development capabilities through integrated execution environments. Snowpark's Python API allows deployment of Hugging Face models as stored procedures within Snowflake, creating closed-loop systems where data transformation, model inference, and result storage occur within unified environments.

This hybrid approach enables data scientists to develop sophisticated models using Python's rich ecosystem while deploying them through SQL interfaces that business analysts and operational teams can easily consume. The pattern reduces deployment complexity while maintaining model performance and enabling real-time inference within existing business processes.

Development workflows now support iterative model refinement where SQL queries provide training data and feature engineering capabilities while Python handles model development and validation, with results automatically materialized back into database environments for consumption by downstream analytical and operational systems.

What Role Do Next-Generation Performance Libraries Play in Modern Python vs SQL Decisions?

The performance landscape for both SQL and Python has been transformed by next-generation libraries and execution engines that address historical limitations while introducing new capabilities for large-scale data processing and real-time analytics.

Rust-Based Python Performance Revolution

Polars has emerged as a game-changing DataFrame library that utilizes Apache Arrow's columnar format and Rust-based parallel processing to handle billion-row datasets with performance characteristics previously only available through distributed computing frameworks. Organizations report analysis time reductions from 45 minutes to under 5 minutes for complex market trend detection and customer behavior analysis.

The library implements lazy evaluation patterns that optimize entire analytical pipelines before execution, dramatically reducing memory usage while maximizing CPU utilization across all available cores. This approach enables local processing of datasets that previously required cloud-based distributed computing infrastructure, reducing both costs and operational complexity.

DuckDB complements these capabilities by providing an in-memory analytical database that processes 120-million-row datasets in seconds while consuming 20% less resources than cloud alternatives. The combination enables rapid prototyping and feature engineering workflows that previously required expensive cloud infrastructure or time-consuming data sampling strategies.

Hybrid Execution and Query Optimization

Modern platforms now enable intelligent routing between Python and SQL execution backends based on workload characteristics and performance optimization algorithms. Snowpark's hybrid execution model automatically determines whether operations should execute as SQL queries or Python procedures, delivering significant performance improvements while maintaining familiar development interfaces.

These systems optimize query plans across language boundaries, enabling SQL aggregations to feed directly into Python machine learning pipelines without data serialization overhead. The approach eliminates traditional trade-offs between SQL performance and Python flexibility by automatically selecting optimal execution paths based on data characteristics and computational requirements.

Databricks' unified analytics engine exemplifies this trend by providing SQL warehouses that seamlessly integrate with Python notebooks, enabling data engineers to leverage SQL's performance advantages for data preparation while data scientists focus on model development using Python's extensive machine learning ecosystem.

Stream Processing and Real-Time Analytics

Next-generation libraries enable real-time processing capabilities that blur traditional distinctions between batch and streaming analytics. SQL Server 2025's transaction log streaming provides microsecond-latency event publishing to Kafka and Event Hubs, enabling real-time inventory dashboards, fraud detection systems, and dynamic pricing engines that react immediately to business events.

Python libraries like Polars and DuckDB support streaming data processing patterns that enable continuous analytics on live data streams without requiring complex distributed streaming infrastructure. This capability democratizes real-time analytics by making it accessible to organizations that cannot invest in traditional streaming platforms.

The combination enables sophisticated analytical workflows where SQL handles high-throughput data ingestion and initial aggregation while Python provides real-time model inference and custom business logic implementation, creating responsive systems that adapt to changing business conditions without manual intervention.

How Can Organizations Bridge SQL and Python for Optimal Data Workflows?

In practice, many projects blend both languages: SQL for efficient data extraction/aggregation; Python for deeper analysis, modeling, and visualization. Modern tools are making this integration seamless while preserving each language's strengths.

Cloud-Native Integration Platforms

Query data lakes with SQL via AWS Athena, Google BigQuery, or Azure Synapse while maintaining Python connectivity through standardized interfaces. Data warehouses like Snowflake expose DataFrame-style APIs through Snowpark, enabling Python developers to work with familiar syntax while leveraging warehouse-scale performance.

Libraries such as dask-snowflake support distributed reads and writes from Python environments, enabling seamless data movement between local development environments and production data warehouses without compromising performance or security requirements.

import dask_snowflake

import snowflake.connector

with snowflake.connector.connect(**creds) as conn:

ddf = dask_snowflake.from_snowflake(

query="""

SELECT *

FROM TableA

JOIN TableB USING (id)

""",

conn=conn,

)

A full notebook example is available here.

Unified Development and Deployment Workflows

Modern data platforms support unified development experiences where SQL queries and Python code coexist within the same analytical workflows through tools like dbt Core's Python models, which create hybrid DAGs where SQL handles initial data extraction and Python applies custom business logic and machine learning inference.

These platforms maintain consistent version control, testing frameworks, and deployment pipelines regardless of whether individual components use SQL or Python, enabling teams to optimize each analytical step with the most appropriate technology while maintaining operational consistency across the entire data pipeline.

Git-based workflows now support both SQL scripts and Python notebooks within unified repositories, enabling comprehensive code review processes and collaborative development practices that span different technical skill sets and organizational roles.

Choosing the Right Tool for Your Projects

- Use SQL for simple, performance-critical queries that run inside the data warehouse, especially for structured data aggregation, reporting, and business intelligence workflows that require sub-second response times.

- Use dbt to organize more complex SQL models and manage them with Git-based version control, particularly for data transformation workflows that require testing, documentation, and collaborative development practices.

- Use Python (and distributed libraries like Dask or Polars) for exploratory analysis, machine learning model development, custom business logic implementation, and analytical workflows requiring robust testing and external system integration.

The decision increasingly depends on specific use case requirements rather than broad technology preferences. Financial services organizations might use SQL for regulatory reporting while employing Python for risk modeling, while e-commerce companies might leverage SQL for inventory management and Python for recommendation systems.

Conclusion

Rather than a stand-off, SQL and Python excel at different stages of the data-processing pipeline. The gap between these technologies continues to narrow through tools like dbt, Snowpark, DuckDB, and Polars, which enable teams to leverage optimal performance characteristics without sacrificing development productivity or analytical capabilities.

The emergence of AI-integrated database architectures and next-generation performance libraries transforms the traditional python vs sql debate from a binary choice into a strategic orchestration decision. Organizations that embrace hybrid approaches gain competitive advantages by matching each analytical challenge with the most appropriate technological solution while maintaining consistent governance and operational practices.

Future success in data analysis requires fluency across both technologies rather than specialization in either SQL or Python alone. The most effective analytical strategies combine SQL's efficiency for structured data operations with Python's flexibility for advanced analytics, creating responsive systems that adapt to evolving business requirements while maintaining the performance and governance standards that enterprise organizations demand.

Frequently Asked Questions

Is SQL still relevant in the age of AI and advanced analytics?

Yes. SQL remains foundational for structured data operations, especially when performance, governance, and scalability are critical. With innovations like dbt, distributed SQL engines, and cloud-native warehouses, SQL continues to evolve and power enterprise-grade analytics at scale.

When should I choose Python over SQL?

Python is ideal for advanced analytics, machine learning, and workflows that require custom business logic or iterative processing. If your project involves predictive modeling, natural language processing, or integrating multiple data sources, Python’s ecosystem provides greater flexibility than SQL.

Can SQL and Python be used together effectively?

Absolutely. Many modern workflows combine SQL for data extraction and aggregation with Python for deeper analysis and modeling. Platforms like Snowflake (with Snowpark), dbt Core, and Dask make it seamless to bridge the two, allowing teams to leverage each language’s strengths in a unified pipeline.

How do AI-integrated databases impact the SQL vs Python debate?

AI-enabled databases blur the lines by embedding vector search, semantic querying, and model execution directly into SQL environments. This reduces the need to move data into Python for every AI task while still allowing Python to drive custom model development and deployment.

.png)

.png)