Analytics Maturity Model: A Complete Enterprise Assessment Guide

✨ AI Generated Summary

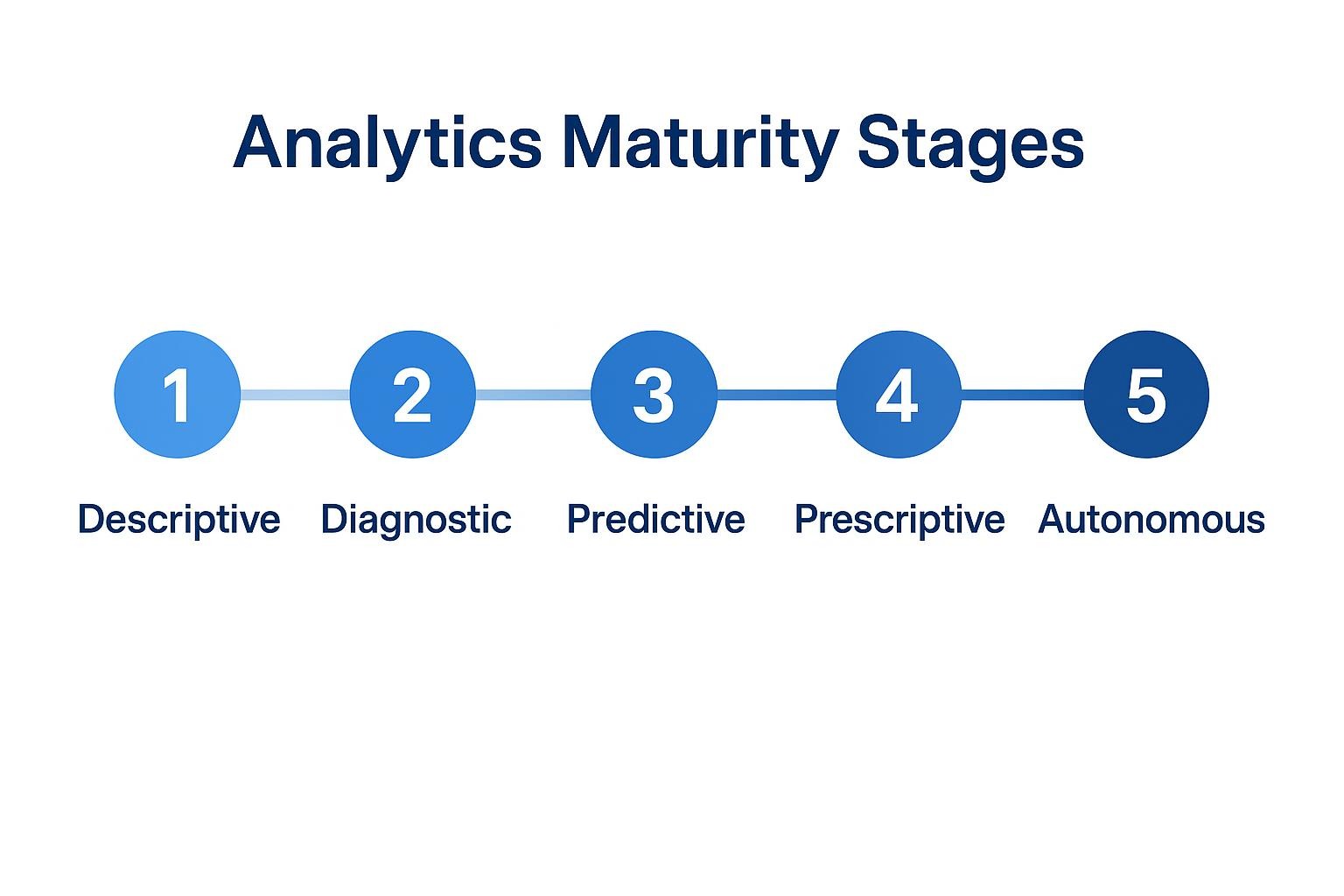

Advanced analytics maturity accelerates decision-making and competitive advantage by progressing through five stages: descriptive, diagnostic, predictive, prescriptive, and autonomous. Organizations must assess maturity across five dimensions—data infrastructure, analytical capabilities, people and skills, culture and strategy, and operationalization—to identify gaps and prioritize improvements.

- Common barriers include data silos, legacy infrastructure, low data literacy, lack of executive sponsorship, and unclear governance.

- Unified data architectures eliminate silos, automate pipelines, and enable real-time insights, speeding maturity progression.

- Advancement requires continuous improvement via consolidating data, automating reports, assigning data owners, training users, and tracking KPIs like time-to-decision, automation rates, model accuracy, and business impact.

- Successful maturity growth demands synchronized progress in technology, culture, governance, and skills, with executive support and clear ROI measurement.

Advanced analytics maturity translates directly to speed: the faster you move from data collection to confident action, the quicker you outpace competitors. A structured maturity model shows exactly how ready or vulnerable your organization is across data infrastructure, skills, governance, and culture. This lets you focus investment where competitive gaps hit hardest.

The stakes are clear. Data teams tell us that most organizations admit their data capabilities still lag behind what they need to stay competitive, and that measurement remains inconsistent. Companies that climb the maturity curve report faster product iterations, lower operating costs, and stronger customer retention.

What Is the Analytics Maturity Model and Why Does It Matter?

Most data teams waste months building dashboards that executives never open. A data maturity framework prevents this by showing you exactly where your organization stands, from basic reporting to AI-driven decision systems. Frameworks like TDWI provide clear benchmarks so you know which gaps to address first.

These assessments reveal what raw metrics miss. A low people-and-skills score explains why your expensive BI tools sit unused. A strong infrastructure rating means you're ready for machine learning pilots. Public benchmarks let you compare your scores with industry peers and build the business case for targeted investments.

Effective maturity frameworks measure five core dimensions. Treat them as a multidimensional map rather than a single linear progression:

- Data infrastructure covering scalability, integration, and governance

- Analytical capabilities spanning tools, methods, and automation depth

- People and skills encompassing data literacy and cross-team alignment

- Culture and strategy reflecting executive sponsorship and data-driven mindset

- Operationalization measuring how well insights embed into workflows

Your scores across these dimensions rarely rise together. You might excel technically while struggling culturally. Self-assessment tools help convert your current position into a prioritized roadmap: fix weak areas, build on strengths, and fund initiatives that advance you toward predictive, prescriptive, and eventually autonomous capabilities.

What Are the Five Stages of Analytics Maturity?

Data maturity follows a broadly similar pattern across major frameworks: organizations start with basic reporting and progress toward more advanced analytics, sometimes ending with systems that can make decisions on their own. Specifics such as the number of stages and their definitions differ between assessment guides.

Stage 1: Descriptive

You answer "what happened?" with spreadsheets and basic dashboards. Data lives in silos; governance happens through email threads. Teams export CSV files manually and spend more time formatting than analyzing.

Stage 2: Diagnostic

You start asking "why did it happen?" Self-service BI tools let business users drill down into root causes, but your team still spends nights reconciling data from different systems. For example, connecting plant-floor sensors to central dashboards reveals which machines cause downtime.

Stage 3: Predictive

Statistical models and machine learning forecast "what will happen?" Marketing teams use propensity scoring; supply chain managers run demand scenarios. Combining time-series forecasts with external data sources like weather patterns exemplifies the cross-system insight that defines predictive maturity.

Stage 4: Prescriptive

Your systems recommend "what should we do?" Finance teams test capital allocation scenarios in minutes instead of weeks. Model governance becomes formal: you track bias, document assumptions, and require stakeholder sign-offs before deployment. This stage requires significant process discipline to maintain decision quality.

Stage 5: Autonomous

Decisions execute automatically, with human oversight. Real-time rules engines reroute shipments; AI systems adjust ad spend based on performance. Few organizations operate here consistently. Those that do couple automated controls with continuous model monitoring to ensure reliable outcomes.

Progress isn't just about better technology. Each stage demands matching advances in skills, processes, and organizational culture. Predictive models fail without clean data. Executive sponsorship without proper funding leads to stalled initiatives. Move to the next stage only when your people, governance, and infrastructure can handle the increased complexity.

How Can Enterprises Assess Their Analytics Maturity Level?

The assessment process follows a clear sequence:

1. Define the Scope

Decide whether you're evaluating a single business unit or the whole enterprise. Clarity here prevents ambiguous scores later.

2. Collect Evidence

Distribute a survey in which respondents rate statements on a 1-to-5 scale, then hold stakeholder interviews to probe the gaps the numbers surface.

3. Analyze Dependencies

Plot scores for each dimension on a radar chart. Asymmetry makes weak spots obvious and sparks targeted discussion.

4. Benchmark Externally

Compare your composite score to industry averages to calibrate ambition.

5. Draft a Target-State Roadmap

Translate gaps into sequenced initiatives with clear owners and deadlines. This includes upskilling, new tooling, and governance changes.

Turn the assessment into a baseline metric by re-running the same survey quarterly and tracking how quickly lagging dimensions close the gap.
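The survey-and-score steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed scoring method: the response data, the dimension keys, and the target score of 4.0 are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey responses: (dimension, rating on the 1-to-5 scale).
RESPONSES = [
    ("data_infrastructure", 4), ("data_infrastructure", 3),
    ("analytical_capabilities", 3), ("analytical_capabilities", 3),
    ("people_and_skills", 2), ("people_and_skills", 1),
    ("culture_and_strategy", 2), ("culture_and_strategy", 3),
    ("operationalization", 2), ("operationalization", 2),
]


def dimension_scores(responses):
    """Average the 1-to-5 ratings for each dimension."""
    by_dimension = defaultdict(list)
    for dimension, rating in responses:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating out of range: {rating}")
        by_dimension[dimension].append(rating)
    return {dim: mean(ratings) for dim, ratings in by_dimension.items()}


def rank_gaps(scores, target=4.0):
    """Return (dimension, gap) pairs, largest gap to the target first."""
    gaps = {dim: max(0.0, target - score) for dim, score in scores.items()}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


scores = dimension_scores(RESPONSES)
composite = mean(scores.values())   # single number for external benchmarking
priorities = rank_gaps(scores)      # ordered input for the roadmap

for dimension, gap in priorities:
    print(f"{dimension}: score {scores[dimension]}, gap {gap}")
```

Running the same functions on each quarter's responses gives the baseline comparison described above, and the per-dimension scores are the values you would plot on the radar chart.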

What Barriers Commonly Slow Analytics Maturity Growth?

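Progress stalls when a few persistent hurdles keep data teams stuck in reactive mode and prevent the organizational advancement that creates competitive advantage. The most common are data silos, legacy infrastructure, low data literacy, lack of executive sponsorship, and unclear governance.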

Organizations counter these obstacles by building unified data architectures that eliminate silos, replacing batch pipelines with scalable platforms, and embedding data literacy programs alongside clear governance frameworks. Companies that have adopted unified data platforms for cross-team access report faster time-to-insight and renewed executive confidence.

How Can a Unified Data Architecture Accelerate Analytics Maturity?

Most teams spend their time wrestling with data silos instead of building predictive models. They're stuck copying data between systems, debugging broken joins, and explaining why yesterday's dashboard shows different numbers than today's report.

A unified data architecture collapses these silos into a single, governed source of truth. You move from "collecting data" to "acting on insights" without the latency that drags programs behind their competitors.

Key components that accelerate maturity:

- Unified integration layer: Connects operational databases, SaaS applications, and files across cloud and on-premises environments, ending copy-paste ETL work that consumes engineering time.

- Centralized governance and metadata management: Tracks lineage and enforces role-based access in one place, providing the data quality foundation required for predictive and prescriptive analytics.

- Low-latency streaming and change data capture (CDC) pipelines: Feed dashboards and models with fresh events instead of yesterday's snapshots, enabling diagnostic and predictive capabilities.

- External secrets management: Keeps credentials outside data pipelines, reducing audit scope while maintaining security for compliance-heavy environments.

- Modular control and data planes: Scales storage and compute independently while meeting regional compliance requirements through hybrid control planes that keep raw data, PII, and CDC logs inside your own environment.

Organizations report fewer internal data requests after adopting unified platforms, with consistent data quality gains that eliminate the "can I trust this number?" debate. Your team spends their time building forecasts instead of fixing joins.
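To make the difference between fresh events and yesterday's snapshots concrete, here is a minimal sketch of how a change data capture (CDC) feed keeps a downstream copy current. The event shape (`op`, `key`, `row`) and the sample inventory rows are assumptions for illustration, not the format of any particular tool.

```python
# Hypothetical change events in the order the source system emitted them.
EVENTS = [
    {"op": "insert", "key": 1, "row": {"sku": "A-100", "stock": 40}},
    {"op": "insert", "key": 2, "row": {"sku": "B-200", "stock": 15}},
    {"op": "update", "key": 1, "row": {"sku": "A-100", "stock": 37}},
    {"op": "delete", "key": 2, "row": None},
]


def apply_changes(table, events):
    """Apply insert/update/delete events, in order, to a keyed copy of a table."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            table[key] = event["row"]   # upsert the latest version of the row
        elif op == "delete":
            table.pop(key, None)        # tolerate deletes for unseen keys
        else:
            raise ValueError(f"unknown operation: {op}")
    return table


replica = apply_changes({}, EVENTS)
print(replica)
```

Because each event is applied as it arrives, the downstream copy reflects the source after every change instead of waiting for the next full export.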

How to Advance to the Next Stage of Analytics Maturity?

Moving up the maturity curve isn't a one-time project. It's quarterly improvements that compound over time. Start by identifying where your team wastes the most time today, then fix those bottlenecks first.

The advancement process follows six critical steps:

1. Consolidate Data Sources

Your team spends significant time chasing down data from different systems. Replace manual extracts with automated pipelines that pull from every source and keep data fresh. Teams reclaim substantial time when they eliminate spreadsheet gymnastics.

2. Automate Recurring Reports

If someone manually updates the same dashboard every Monday, you're wasting valuable time. Self-service BI tools free your staff from report factories so they can focus on finding insights instead of formatting charts.

3. Start with One Predictive Model

Pick a forecasting use case with a clear business owner who will act on the results. Inventory planning and demand forecasting usually show immediate ROI.

4. Assign Data Owners

Data quality problems multiply when no one owns the data. Assign specific people to own data products, set quality SLAs, and enforce role-based access before building complex models on shaky foundations.

5. Train Your Users, Not Just Your Tools

Rolling out new platforms without training guarantees low adoption. Teams that understand basic statistics actually use the tools you deploy.

6. Measure What Matters

Track time from data capture to decision, user adoption rates, and model accuracy. Use radar charts to visualize which capabilities still lag behind and review progress every quarter.

Each cycle teaches you where the next bottleneck lives. Focus on removing friction, not adding features.
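One way to make the quality SLA from step 4 concrete is a completeness check that a data owner can run against their data product. The sketch below rests on assumptions: the field names, the sample rows, the owner label, and the 95% threshold are all hypothetical.

```python
REQUIRED_FIELDS = ("customer_id", "email")

# Hypothetical sample of a data product; one required value is missing.
ROWS = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": "d@example.com"},
]


def completeness(rows, required_fields):
    """Share of required cells that are populated (not None or empty string)."""
    total = len(rows) * len(required_fields)
    if total == 0:
        raise ValueError("nothing to score")
    filled = sum(
        1
        for row in rows
        for field in required_fields
        if row.get(field) not in (None, "")
    )
    return filled / total


def check_sla(owner, rows, required_fields, threshold=0.95):
    """Compare completeness with the SLA threshold and name the accountable owner."""
    score = completeness(rows, required_fields)
    return {"owner": owner, "score": score, "meets_sla": score >= threshold}


result = check_sla("crm-team", ROWS, REQUIRED_FIELDS)
print(result)
```

A check like this gives the owner a number to defend each quarter, and it flags shaky foundations before a model is built on them.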

What KPIs Should You Track to Measure Analytics Maturity Progress?

You can't steer your program if you don't know whether you're moving faster, getting smarter, or generating real business impact. Maturity frameworks recommend turning progress into numbers you revisit regularly.

- Time from data collection to decision-making: Reducing latency can enable progress from descriptive toward diagnostic maturity, though this is not the sole indicator of transition. Track this as a supporting metric.

- Percentage of automated versus manual reports: Growing automation means staff spend more cycles on modeling and less on slide decks, a hallmark of predictive stage teams.

- Predictive model accuracy: Measure through lift, recall, or mean absolute error (MAE). Consistent improvement shows your models are learning and being retrained rather than left to decay.

- Data quality scores on critical tables: High completeness and consistency indicate governance strong enough for prescriptive capabilities.

- User adoption rate of your tools: Growing monthly active users proves data culture is spreading beyond the core team.

- Dollar value created by initiatives: Attach revenue, cost savings, or risk reduction to every project to justify continued investment.

Set incremental targets that match where you are today: if your dashboards still refresh overnight, aim to cut that window in half before chasing real-time. Reassess each KPI quarterly, visualize the results in a simple radar chart, and benchmark your scores against peer data from assessment portals and maturity guides.

You'll know you've reached the next stage when your weakest metric finally catches up with the rest.
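Several of the KPIs in this section reduce to simple arithmetic. The sketch below computes three of them: median time from data capture to decision, the share of automated reports, and mean absolute error for a forecast. All timestamps, report counts, and forecast values are made-up sample data, and using the median for time-to-decision is an assumption rather than something the frameworks mandate.

```python
from datetime import datetime
from statistics import mean, median

# Hypothetical (captured_at, decided_at) pairs for three decisions.
DECISIONS = [
    (datetime(2024, 1, 1, 8), datetime(2024, 1, 2, 8)),
    (datetime(2024, 1, 3, 9), datetime(2024, 1, 3, 21)),
    (datetime(2024, 1, 5, 10), datetime(2024, 1, 6, 22)),
]


def hours_to_decision(captured_at, decided_at):
    """Elapsed hours between data capture and the decision it informed."""
    return (decided_at - captured_at).total_seconds() / 3600


def automation_rate(automated, manual):
    """Percentage of recurring reports that run without manual work."""
    return 100 * automated / (automated + manual)


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return mean([abs(a - p) for a, p in zip(actual, predicted)])


median_hours = median(hours_to_decision(c, d) for c, d in DECISIONS)
automated_share = automation_rate(automated=18, manual=6)
forecast_mae = mean_absolute_error([100, 120, 90], [110, 114, 92])

print(median_hours, automated_share, forecast_mae)
```

Recomputing the same figures each quarter turns the KPI list into a trend you can review alongside the maturity scores.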

How to Build Analytics Maturity as a Competitive Advantage?

Data maturity models provide the roadmap your team needs to move from reactive reporting to predictive capabilities. Companies operating at advanced maturity levels consistently outperform competitors still stuck in descriptive work, creating sustainable competitive advantages through faster decision-making and deeper business insights.

Assess your current state and plan your next advancement to transform raw data into market differentiation. Airbyte Enterprise Flex delivers hybrid deployment that enables compliance without capability trade-offs, keeping data in your infrastructure while providing the 600+ connectors and modern pipelines that accelerate maturity progression. Talk to Sales about unified data architecture for your analytics maturity roadmap.

Frequently Asked Questions

How long does it take to move from one analytics maturity stage to the next?

Timelines vary significantly with starting infrastructure, executive sponsorship, and resource allocation. Teams that consolidate data sources and automate basic reporting can reach diagnostic maturity faster. Reaching predictive capabilities from a descriptive baseline typically takes longer because it requires clean historical data, trained models, and organizational buy-in. Prescriptive and autonomous stages demand sustained investment in governance, model operations, and change management.

Can an organization skip maturity stages or advance multiple levels simultaneously?

Skipping stages rarely works in practice. Each maturity level builds on capabilities from previous stages. Predictive models require the clean, consolidated data that diagnostic maturity provides. Prescriptive systems need the forecasting accuracy developed during predictive stages. Organizations that attempt to jump ahead typically encounter data quality issues, governance gaps, or adoption failures that force them back to foundational work. However, different business units can operate at different maturity levels, allowing advanced teams to pilot higher-stage capabilities while others catch up.

What are the most common failure points when trying to advance analytics maturity?

Data teams report three primary failure modes. First, attempting technology upgrades without addressing organizational culture results in expensive tools that sit unused. Second, building advanced capabilities on poor data quality creates unreliable outputs that erode stakeholder trust. Third, lacking executive sponsorship means initiatives die when they need budget or cross-functional cooperation. Successful maturity advancement requires synchronized progress across technology, people, process, and governance rather than focusing solely on analytics tools.

How do you measure ROI on analytics maturity investments?

Measure both efficiency gains and business impact. Track time saved through automated reporting, reduced data request queues, and faster time-to-insight as operational metrics. Calculate business value through specific use cases: revenue from better pricing models, cost reduction from optimized operations, or risk avoidance from fraud detection. Early-stage maturity investments show ROI through efficiency (staff time recovered), while advanced stages demonstrate impact through business outcomes (revenue growth, margin improvement). Establish baseline metrics before starting maturity initiatives, then track quarterly progress against those benchmarks.
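The two kinds of return described above can be combined in a standard ROI calculation, net benefit divided by investment. The sketch below is illustrative only: the hours saved, hourly rate, business value, and investment figures are invented, and the function names are hypothetical.

```python
def efficiency_value(hours_saved, hourly_rate):
    """Monetary value of staff time recovered through automation."""
    return hours_saved * hourly_rate


def roi(efficiency, business_value, investment):
    """Return on investment as a fraction: (total benefit - cost) / cost."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (efficiency + business_value - investment) / investment


# Hypothetical annual figures for one maturity initiative.
saved = efficiency_value(hours_saved=1200, hourly_rate=50)   # 60,000
impact = 90_000       # e.g. revenue attributed to a better pricing model
cost = 100_000        # tooling, training, and staff time invested

initiative_roi = roi(saved, impact, cost)
print(f"ROI: {initiative_roi:.0%}")
```

Keeping the efficiency and business-value terms separate makes it easy to show how the mix shifts from time recovered to business outcomes as maturity advances.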
