5 Reasons Why You Should Automate Data Ingestion

✨ AI Generated Summary

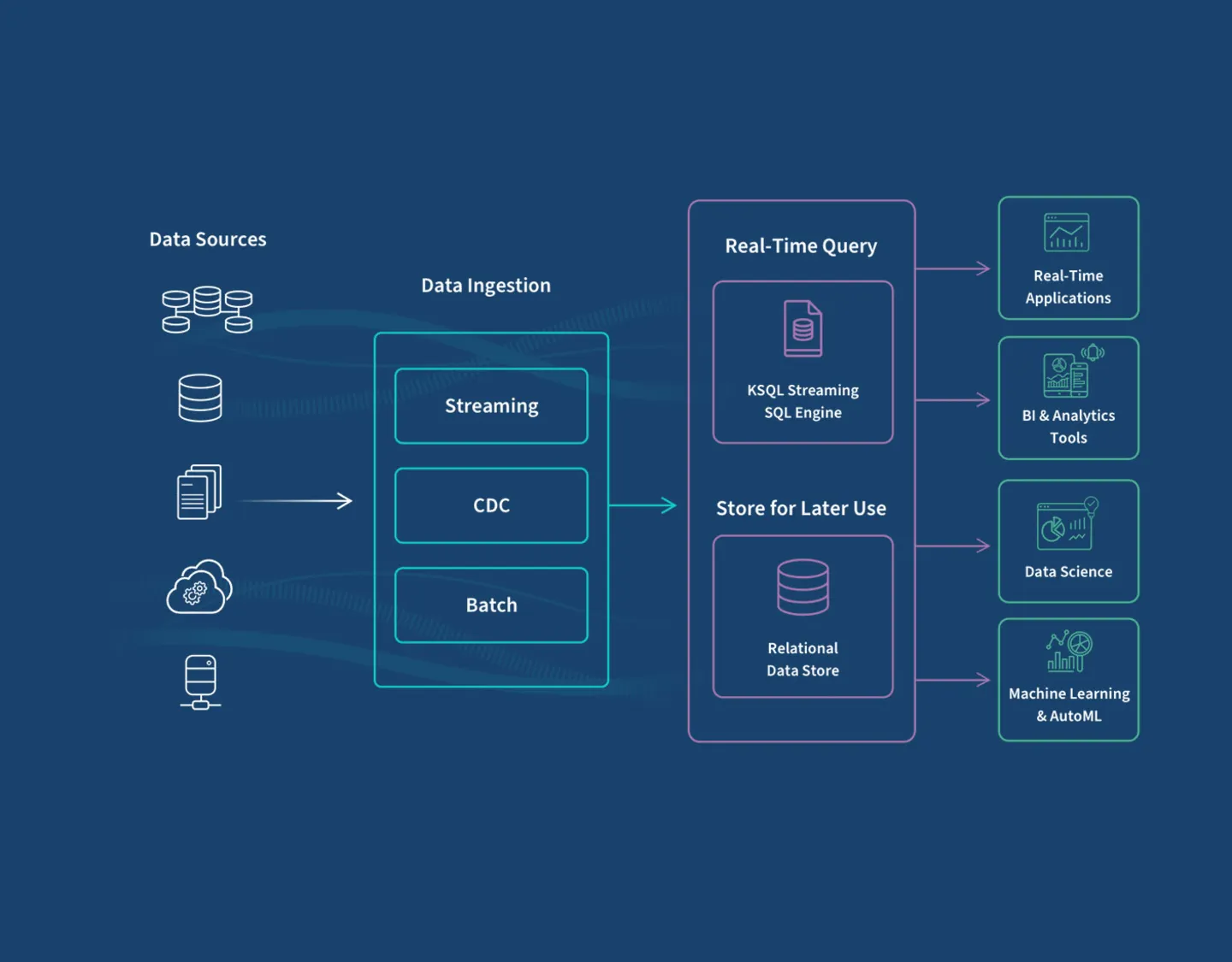

Automated data ingestion uses tools to efficiently collect, process, and store large volumes of data with minimal human intervention, improving accuracy, accessibility, scalability, cost-efficiency, and employee productivity. Key steps include identifying data sources, choosing batch or real-time ingestion, selecting appropriate tools (e.g., Airbyte, Apache Kafka, Apache NiFi), implementing data validation, ensuring security, and managing data destinations. Modern AI-powered platforms enhance data quality and enable real-time analytics, supporting better business decisions and scalable enterprise operations.

You need to use data for different enterprise operations including sales, CRM, marketing, and supply-chain management to gain a competitive edge and increase profitability. Over the years, data production has increased significantly across organizations worldwide. You therefore need a robust roadmap to manage such massive amounts of data.

While working with high-volume data, having a good data-ingestion strategy is essential to avoid data loss and reduce latency. Automation can play a crucial role in achieving this. By leveraging automated data ingestion, you can collect, store, and use data effectively to make well-informed business decisions.

Let's understand what automated data ingestion is and why you should opt for it for efficient data management and downstream enterprise operations.

What Is Automated Data Ingestion?

Automated data ingestion is the process of using automation tools and techniques to collect, process, and store data from various sources into a suitable data-storage system. Unlike manual procedures, automated data ingestion involves solutions that require minimal to no human intervention. By using such tools, you can improve the speed and efficiency of data ingestion and overall data-based workflows in your organization.

Data ingestion is a preliminary step for data integration, but the two processes are distinct. Data ingestion involves collecting raw data, while data integration is the process of transforming and consolidating that data.

Automating data ingestion can facilitate faster data integration, ensuring data availability for subsequent tasks like data analysis. Modern AI data ingestion solutions leverage machine learning capabilities to optimize data collection patterns and improve processing efficiency across diverse data sources.

Why Should You Automate Data Ingestion?

Improves Data Accuracy

Manual data ingestion is prone to human errors including typos, misconfigured data mappings, and inconsistent formatting, which can compromise data integrity. Automated solutions include built-in error-handling and validation mechanisms that allow you to detect and resolve inconsistencies quickly. These systems prevent incorrect data from entering your workflow and maintain data quality standards across all ingestion processes.

Advanced AI data ingestion platforms can automatically identify data anomalies and apply corrective measures without manual intervention. This reduces the risk of downstream analytics errors that could impact business decisions.

Enhances Data Accessibility

Automated platforms reduce latency in collecting and processing data, ensuring information is available when required. Cloud-based ingestion tools facilitate data extraction from several sources simultaneously, creating a unified view of organizational data. Streaming solutions allow continuous retrieval for real-time analytics such as fraud detection, IoT monitoring, and customer behavior analysis.

Real-time data accessibility enables faster decision-making and improves competitive positioning. Organizations can respond to market changes and customer needs more effectively when data flows seamlessly into analytical systems.

Achieves Scalability

Manual processes cannot keep up with growing data requirements as organizations expand their digital footprint. Automated platforms enable batch ingestion for large volumes and leverage streaming technologies like Apache Kafka to handle high-velocity data. This allows you to build scalable pipelines that grow with your business needs.

Scalable automation ensures consistent performance regardless of data volume increases. Modern platforms automatically adjust resource allocation based on workload demands, preventing bottlenecks during peak processing periods.

Optimizes Costs

Many automated tools are cloud-hosted and fully managed, lowering infrastructure-maintenance costs and reducing operational failures that would otherwise consume additional resources. Automated systems eliminate the need for dedicated personnel to monitor and manage routine data transfers. This frees up technical resources for higher-value strategic initiatives.

Cloud-based automation also provides predictable pricing models that scale with usage rather than requiring upfront infrastructure investments. Organizations can optimize costs by paying only for the processing power and storage they actually use.

Increases Employee Productivity

By off-loading repetitive collection and transfer tasks to automation, teams can focus on higher-value activities including research, innovation, and strategic analysis. Data engineers can concentrate on designing better architectures and solving complex integration challenges. Business analysts can spend more time interpreting data rather than waiting for it to become available.

Automation reduces the manual effort required for routine maintenance tasks like monitoring data quality and troubleshooting pipeline failures. This allows teams to be more proactive in their approach to data management and business intelligence.

How Do You Automate Data Ingestion?

1. Identify Data Sources

Evaluate the sources including databases, flat files, APIs, IoT devices, and cloud applications from which you want to ingest data. Document the data formats, update frequencies, and access requirements for each source. Understanding source characteristics helps determine the most appropriate ingestion approach and tool selection.

Consider data sensitivity and compliance requirements when cataloging sources. Some data may require special handling for privacy regulations or security protocols.
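As a minimal sketch of what such a source catalog could look like, the snippet below records the format, update frequency, and sensitivity of each source. The field names and the example entries are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str
    kind: str              # e.g. "database", "flat_file", "api", "iot", "cloud_app"
    data_format: str       # e.g. "rows", "csv", "json"
    update_frequency: str  # e.g. "continuous", "hourly", "weekly"
    contains_pii: bool     # flags sources that need special privacy handling


# Hypothetical example entries, not real systems
CATALOG = [
    DataSource("orders_db", "database", "rows", "continuous", contains_pii=True),
    DataSource("weekly_export", "flat_file", "csv", "weekly", contains_pii=False),
    DataSource("sensor_feed", "iot", "json", "continuous", contains_pii=False),
]


def sensitive_sources(catalog):
    """Return the names of sources that need a privacy or compliance review."""
    return [source.name for source in catalog if source.contains_pii]


print(sensitive_sources(CATALOG))
```

Keeping the catalog in code or configuration makes it easy to review alongside the pipelines that depend on it.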

2. Choose the Right Data-Ingestion Approach

Decide between batch, real-time, or hybrid ingestion based on volume, latency requirements, and resource availability. Batch processing works well for large volumes of historical data that can be processed at scheduled intervals. Real-time streaming is essential for applications requiring immediate data availability such as fraud detection or live dashboards.

Hybrid approaches combine both methods to balance performance with resource utilization. Many organizations start with batch processing and gradually implement streaming for time-sensitive data sources.
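The decision can be expressed as a simple rule of thumb. The sketch below is illustrative only: the function name and the 60-second latency threshold are assumptions, and a real decision would also weigh volume and resource availability.

```python
def choose_ingestion_mode(max_latency_seconds: float, has_historical_backfill: bool) -> str:
    """Suggest an ingestion mode from a latency requirement.

    The 60-second threshold is an illustrative assumption, not a standard.
    """
    needs_streaming = max_latency_seconds < 60
    if needs_streaming and has_historical_backfill:
        return "hybrid"      # stream fresh data, batch-load the history
    if needs_streaming:
        return "real-time"
    return "batch"


print(choose_ingestion_mode(5, has_historical_backfill=True))
```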

3. Select Automated Data-Ingestion Tools

Consider platforms such as Airbyte, Apache Kafka, Apache NiFi, Amazon Kinesis, or Apache Flume based on your specific requirements. A comparison of popular data-ingestion tools can help you decide which platform best fits your use case. Evaluate factors like connector availability, scalability, ease of use, and total cost of ownership.

Look for tools that offer both cloud-hosted and on-premises deployment options. This flexibility ensures you can meet data sovereignty requirements while maintaining operational efficiency.

4. Implement Data Transformation and Validation

Standardize data through cleaning and transformation processes including deduplication, handling null values, and data type conversions. Some platforms provide AI and ML features for automated data validation and quality monitoring. Implement data profiling to understand patterns and identify potential quality issues before they impact downstream processes.
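To make the cleaning steps concrete, here is a minimal sketch in plain Python that deduplicates records by key, fills null values, and converts types. The record layout (`id`, `quantity`, `price`) and the choice to default a null quantity to 0 are assumptions made for the example.

```python
def clean_records(records):
    """Deduplicate by id, replace null quantities with 0, and cast types."""
    seen = set()
    cleaned = []
    for rec in records:
        if rec.get("id") is None:
            continue  # drop rows that have no key
        rec_id = int(rec["id"])
        if rec_id in seen:
            continue  # drop repeated keys, keeping the first occurrence
        seen.add(rec_id)
        quantity = rec.get("quantity")
        cleaned.append({
            "id": rec_id,
            "quantity": int(quantity) if quantity is not None else 0,
            "price": float(rec["price"]),
        })
    return cleaned


raw = [
    {"id": "1", "quantity": "2", "price": "9.50"},
    {"id": "1", "quantity": "2", "price": "9.50"},  # duplicate row
    {"id": "2", "quantity": None, "price": "4"},    # null quantity
]
print(clean_records(raw))
```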

Establish clear data quality rules and thresholds that trigger alerts when violations occur. This proactive approach prevents poor-quality data from affecting business intelligence and analytics workflows.
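A quality rule with a threshold can be as simple as a maximum null rate per field. The sketch below assumes hypothetical field names and limits; in practice the returned messages would be routed to whatever alerting channel you use.

```python
def null_rate(records, field):
    """Fraction of records where the field is missing or None."""
    if not records:
        return 0.0
    missing = sum(1 for rec in records if rec.get(field) is None)
    return missing / len(records)


def check_quality(records, max_null_rate):
    """Return an alert message for each field whose null rate exceeds its limit."""
    alerts = []
    for field, limit in max_null_rate.items():
        rate = null_rate(records, field)
        if rate > limit:
            alerts.append(f"{field}: null rate {rate:.0%} exceeds {limit:.0%}")
    return alerts


batch = [
    {"email": "a@example.com", "age": 30},
    {"email": None, "age": 41},
    {"email": None, "age": None},
    {"email": "d@example.com", "age": 25},
]
print(check_quality(batch, {"email": 0.25, "age": 0.25}))
```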

5. Set Up Data Destination

Store ingested data in data warehouses, data lakes, databases, or streaming platforms as appropriate for your analytical needs. Consider factors like query performance, storage costs, and integration with existing analytics tools when selecting destinations. Modern cloud platforms offer multiple storage options that can be optimized for different use cases.

Plan for data lifecycle management including archiving and deletion policies. This ensures storage costs remain manageable as data volumes grow over time.
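An age-based lifecycle policy can be sketched as follows. The 90-day and 365-day defaults are placeholder assumptions; the right values depend on your retention and compliance requirements.

```python
from datetime import date, timedelta


def lifecycle_action(last_modified: date, today: date,
                     archive_after_days: int = 90,
                     delete_after_days: int = 365) -> str:
    """Classify a dataset as 'keep', 'archive', or 'delete' based on its age."""
    age_days = (today - last_modified).days
    if age_days >= delete_after_days:
        return "delete"
    if age_days >= archive_after_days:
        return "archive"
    return "keep"


today = date(2024, 1, 1)
print(lifecycle_action(today - timedelta(days=100), today))
```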

6. Ensure Data Security

Deploy encryption for data in transit and at rest, role-based access control, and multi-factor authentication to protect sensitive information. Verify that chosen tools comply with regulations like GDPR, HIPAA, or industry-specific requirements. Implement audit logging to track data access and modifications for compliance reporting.

Regular security assessments help identify potential vulnerabilities in your data ingestion pipeline. Update security measures as new threats emerge and regulations evolve.
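The snippet below sketches how role-based access control and audit logging fit together: every access attempt is checked against a role's permissions and recorded. The roles, permissions, and logger name are hypothetical, and a production system would rely on the access controls of your platform rather than an in-memory mapping.

```python
import logging

audit_log = logging.getLogger("ingestion.audit")

# Hypothetical role-to-permission mapping
ROLE_PERMISSIONS = {
    "engineer": {"read", "write"},
    "analyst": {"read"},
}


def is_allowed(user: str, role: str, action: str, dataset: str) -> bool:
    """Check a role-based permission and record the attempt for auditing."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.info("user=%s role=%s action=%s dataset=%s allowed=%s",
                   user, role, action, dataset, allowed)
    return allowed


print(is_allowed("ana", "analyst", "write", "orders"))
```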

Which Tools Can Automate Data Ingestion?

1. Airbyte

Airbyte is a powerful data-movement platform with an extensive library of 600+ pre-built connectors covering databases, APIs, SaaS applications, and cloud services. If a connector doesn't exist for your specific data source, you can create one via the Connector Builder, the Low-Code CDK, or the Python/Java CDKs. This flexibility ensures you can connect to virtually any data source without being limited by pre-built options.

The platform offers multiple deployment options including Airbyte Cloud for fully-managed operations, Airbyte Open Source for maximum control, and Airbyte Self-Managed Enterprise for organizations requiring on-premises deployment with advanced governance features.

Key capabilities include:

- Change Data Capture (CDC) for incremental replication and real-time sync

- Vector-store destinations (Pinecone, Milvus, Weaviate, Chroma) for semi-structured or unstructured data

- Integration with dbt for post-load transformations and data modeling

- AI-powered Connector Builder with an AI assistant for rapid connector development

- Python SDK and the PyAirbyte package (formerly airbyte-lib) for programmatic pipelines and custom workflows

- Multiple sync modes, including full refresh (overwrite or append), incremental append, and incremental append with deduplication
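To illustrate the general idea behind incremental replication with deduplication, here is a simplified sketch in plain Python. It is not Airbyte's implementation: it assumes each row carries an `updated_at` cursor value and an `id` primary key, whereas log-based CDC reads changes from the database's transaction log instead.

```python
def incremental_sync(source_rows, destination, cursor):
    """Copy rows updated after `cursor` and upsert them by primary key.

    `destination` maps id -> row. Returns the new cursor value.
    """
    new_cursor = cursor
    for row in source_rows:
        if row["updated_at"] > cursor:
            destination[row["id"]] = row  # upsert keeps one row per id
            new_cursor = max(new_cursor, row["updated_at"])
    return new_cursor


source = [
    {"id": 1, "name": "a", "updated_at": 10},
    {"id": 2, "name": "b", "updated_at": 20},
]
dest = {}
cursor = incremental_sync(source, dest, cursor=0)      # first run copies both rows
source[0] = {"id": 1, "name": "a2", "updated_at": 30}  # row 1 changes at the source
cursor = incremental_sync(source, dest, cursor)        # second run copies only row 1
```

Because only rows newer than the stored cursor are read, each run moves far less data than a full refresh.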

2. Apache Kafka

Apache Kafka is an open-source event-streaming platform designed for publishing, subscribing to, processing, and storing streams of data in real time. It excels at handling high-throughput, low-latency data streams, making it ideal for applications requiring immediate data processing and analysis. Kafka's distributed architecture ensures fault tolerance and horizontal scalability across multiple servers.

The platform serves as the backbone for many real-time analytics and monitoring systems. Organizations use Kafka to build event-driven architectures that can process millions of messages per second while maintaining data durability and consistency.

Key highlights include:

- Distributed, scalable architecture that allows adding brokers to handle higher loads

- Partitions for parallel processing of large topics and improved throughput

- Kafka Connect framework to exchange data between Kafka and external systems

- Built-in replication for fault tolerance and high availability
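The role of partitions can be shown with a simplified sketch of key-based partitioning. This is not Kafka's partitioner: it uses `zlib.crc32` as a stand-in stable hash, while Kafka clients use their own hash functions. The principle is the same: records with the same key land in the same partition, so per-key order is preserved while different partitions are processed in parallel.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition using a stable hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(records, num_partitions):
    """Group (key, value) records by partition, preserving arrival order."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


events = [("user-1", "login"), ("user-2", "login"), ("user-1", "purchase")]
by_partition = assign(events, num_partitions=3)
```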

3. Apache NiFi

Apache NiFi is an open-source data-movement platform that enables you to design, control, and monitor dataflows through an intuitive web-based user interface. The platform emphasizes ease of use with drag-and-drop functionality for building complex data pipelines without extensive coding requirements. NiFi provides comprehensive data provenance tracking, allowing you to see exactly how data moves through your system.

The platform excels at handling diverse data formats and protocols, making it suitable for organizations with heterogeneous data environments. Its visual approach to pipeline design makes it accessible to both technical and non-technical users.

Notable features include:

- Reliable data flow with write-ahead logs and content repositories for guaranteed delivery

- Data buffering and back-pressure controls to manage queued data during peak loads

- Extensive processor library for data transformation and routing operations

- Built-in security features including SSL, user authentication, and authorization controls
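Back-pressure is easiest to understand with a bounded buffer. The sketch below is a generic illustration in plain Python, not NiFi's implementation; the class and method names are hypothetical. When the buffer is full, the producer is told to slow down instead of the queue growing without limit.

```python
import queue


class BoundedBuffer:
    """A queue that signals back-pressure once it reaches capacity."""

    def __init__(self, max_items: int):
        self._queue = queue.Queue(maxsize=max_items)

    def offer(self, item) -> bool:
        """Try to enqueue; return False so the producer can slow down."""
        try:
            self._queue.put_nowait(item)
            return True
        except queue.Full:
            return False

    def take(self):
        """Remove and return the oldest queued item."""
        return self._queue.get_nowait()


buffer = BoundedBuffer(max_items=2)
accepted = [buffer.offer(item) for item in ("a", "b", "c")]
```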

Conclusion

Data-ingestion automation is critical for managing high-volume data successfully across modern enterprises. This article has provided an overview of automated data ingestion and the key reasons to adopt it, including improved accuracy, enhanced accessibility, scalability, cost optimization, and increased productivity. By selecting the right tool, whether Airbyte, Kafka, NiFi, or another platform, you can ingest data efficiently and leverage it for diverse real-world applications. Modern AI data ingestion solutions continue to evolve, offering more sophisticated capabilities for handling complex data environments and enabling organizations to extract maximum value from their data assets.

Frequently Asked Questions

What is the difference between batch and real-time data ingestion?

Batch ingestion handles large data at scheduled intervals for historical analysis, while real-time ingestion streams data continuously for immediate insights like fraud detection or live dashboards. Organizations often combine both approaches based on use case needs.

How do I choose the right automated data ingestion tool?

To choose the right automated data ingestion tool, you must first define your specific business needs, data environment, and team expertise. Then, evaluate potential tools based on core factors like integration capabilities, processing methods, scalability, and cost.

What security considerations are important for automated data ingestion?

Important security measures for automated data ingestion involve strong access controls, end-to-end encryption (for data at rest and in transit), source-level data validation and cleansing, regulatory compliance, detailed audit logging for traceability, and embedding security best practices within the data governance framework.

Can automated data ingestion handle unstructured data?

Yes, modern automated ingestion tools handle structured, semi-structured, and unstructured data from documents, images, videos, and social media. AI, machine learning, vector databases, and specialized connectors help extract, transform, and analyze complex unstructured content effectively.

How do I measure the success of automated data ingestion implementation?

Evaluate the success of automated data ingestion using metrics across performance (throughput, latency, resource usage), data quality (accuracy, completeness, consistency), reliability (error rate, uptime), business impact (cost savings, user adoption), and efficiency (faster insights, reduced manual effort). Continuous monitoring, data observability, automated validation, and feedback are essential for sustained improvement.
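Two of these metrics, throughput and error rate, can be computed from counters that most pipelines already collect. The function and its parameter names below are hypothetical and shown only as a sketch.

```python
def ingestion_metrics(records_ok: int, records_failed: int, elapsed_seconds: float) -> dict:
    """Compute throughput (records per second) and error rate for one pipeline run."""
    total = records_ok + records_failed
    return {
        "throughput_per_sec": total / elapsed_seconds if elapsed_seconds > 0 else 0.0,
        "error_rate": records_failed / total if total else 0.0,
    }


metrics = ingestion_metrics(records_ok=990, records_failed=10, elapsed_seconds=20.0)
```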
