Automated Data Processing: What It Is, How It Works, & Tools

✨ AI Generated Summary

Automated data processing (ADP) streamlines data collection, cleaning, transformation, and storage with minimal human intervention, significantly boosting efficiency, scalability, security, and real-time decision-making. Modern ADP systems leverage AI and machine learning for intelligent pipeline orchestration, anomaly detection, and predictive resource management, transforming data workflows across enterprises.

- Key benefits: increased efficiency, scalability, faster decisions, improved security, and cost savings

- Types of automation: batch, stream, multiprocessing, distributed, and unified batch-stream processing

- Popular tools: Airbyte, Azure Data Factory, IBM DataStage

- Data observability ensures data quality, lineage, anomaly detection, and proactive health monitoring

Data teams at growing enterprises often face a difficult choice: expensive, inflexible legacy ETL platforms that can require 30-50 engineers for basic pipeline maintenance, or complex custom integrations that consume resources without delivering business value. This structural challenge underscores the need for automated data processing solutions that can handle modern data-management demands while supporting AI-driven initiatives and real-time decision-making.

What Is Automated Data Processing?

Automated data processing (ADP) refers to the use of technology to process, organize, and manage data automatically with minimal human intervention. It enables fast, accurate processing of large data volumes, so results reach the people who need them sooner and with fewer mistakes. Systems that implement ADP are designed to streamline data-related tasks, reduce manual effort, and minimize the risk of errors, which improves overall productivity.

ADP encompasses various aspects, from data collection and validation to transformation and storage. It represents a holistic data-management approach that automates each step necessary to ensure your data is complete, structured, aggregated, and ready for analysis and reporting.

Modern automated data-processing systems increasingly incorporate artificial intelligence and machine-learning capabilities to make intelligent decisions about data routing, quality assessment, and transformation optimization. These systems now support decentralized architectures like Data Mesh and unified platforms through Data Fabric implementations that address scalability challenges across heterogeneous environments.

What Are the Key Benefits of Automated Data Processing?

ADP offers numerous advantages to your business:

Increases Efficiency

Automating data processing speeds up tasks that would take hours or days to complete manually. This allows you to focus on strategic activities instead of getting bogged down in repetitive tasks. For example, an automated system can process thousands of customer orders simultaneously, ensuring timely fulfillment and freeing up staff for higher-value work.

Scalability

As your business grows, manually managing data becomes increasingly complicated and resource-intensive. Automated solutions can handle increased workloads without compromising performance, allowing you to scale your business while keeping workflows smooth and efficient.

Faster Decision-Making

ADP solutions enable you to make decisions faster by providing real-time access to accurate, up-to-date information. Automated systems ensure the quality and timeliness of data used for analysis and insights, helping you respond quickly to changing market conditions or internal challenges.

Improves Data Security

When sensitive data is handled manually, it becomes more prone to breaches, theft, or accidental exposure. Automated systems use advanced encryption, controlled access, and secure storage to protect data from unauthorized access or leaks. They also maintain detailed logs of all data activities, providing transparency and accountability.

Cost Savings

ADP helps reduce operational costs by streamlining tasks that typically require significant time investments. This results in fewer resources needed to manage data, leading to substantial savings. Additionally, automating processes reduces expenses related to errors.

What Are the Different Types of Data Processing Automation?

Understanding the following approaches helps you choose the right automation strategy for your specific business needs.

Batch Processing

Batch processing involves collecting and processing data in large groups or batches at scheduled intervals. This approach is useful for tasks that don't require immediate results, such as payroll processing or historical reporting.
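As a rough illustration of the batch idea, the sketch below groups records into fixed-size batches and processes each batch as a unit. The `batched` helper, the `process_batch` job, and the timesheet fields are hypothetical names chosen for this example; scheduling (for instance via cron or an orchestrator) is assumed to happen outside the snippet.

```python
from itertools import islice
from typing import Iterable, Iterator


def batched(records: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Yield consecutive lists of at most `size` records."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk


def process_batch(batch: list[dict]) -> float:
    """Hypothetical payroll job: total gross pay for one batch."""
    return sum(r["hours"] * r["rate"] for r in batch)


# Five illustrative timesheet rows, processed two at a time.
timesheets = [{"hours": 40, "rate": 25.0} for _ in range(5)]
batch_totals = [process_batch(b) for b in batched(timesheets, size=2)]
```

Because each batch is self-contained, a failed batch can be retried without reprocessing the others, which is one reason this pattern suits jobs that tolerate delay.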

Stream Processing

Stream processing, also known as real-time data processing, continuously handles data as it is generated. This proves critical for applications requiring instant insights, such as system monitoring or IoT analytics.
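A minimal sketch of the streaming pattern, assuming a monitoring scenario: each reading is handled as it arrives, and only a small sliding window is kept in memory. The function name `rolling_alerts`, the window size, and the threshold are illustrative assumptions, not part of any specific product.

```python
from collections import deque
from typing import Iterable, Iterator


def rolling_alerts(
    readings: Iterable[float], window: int, limit: float
) -> Iterator[tuple[int, float]]:
    """Yield (index, rolling_mean) whenever the mean of the last
    `window` readings exceeds `limit`."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        recent.append(value)          # the oldest reading drops out automatically
        if len(recent) == window:
            mean = sum(recent) / window
            if mean > limit:
                yield i, mean


# CPU load in percent; in production this would be an unbounded feed.
cpu_load = iter([20, 30, 90, 95, 40])
alerts = list(rolling_alerts(cpu_load, window=2, limit=80))
```

The generator never needs the full dataset, so the same code works whether the source ends after five readings or never ends at all.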

Multiprocessing

Multiprocessing utilizes multiple processors or cores within a single system to perform tasks simultaneously, significantly reducing processing time for compute-intensive workloads.
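One way to sketch this in Python is with the standard library's `concurrent.futures.ProcessPoolExecutor`, which distributes calls across worker processes on a single machine. The `count_primes` task is a hypothetical stand-in for any CPU-bound step, and the default of four workers is an arbitrary assumption.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound stand-in task: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_parallel(limits: list[int], workers: int = 4) -> list[int]:
    """Run count_primes for each limit in a pool of worker processes.
    Results come back in the same order as `limits`."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))
```

When calling `run_parallel` from a script, place the call under an `if __name__ == "__main__":` guard so that worker processes can import the module safely on platforms that start workers by spawning a fresh interpreter.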

Distributed Processing

Distributed processing spreads data-processing tasks across multiple interconnected computers or servers, enhancing efficiency and reliability, especially for large datasets.

Unified Batch-Stream Processing

Modern architectures increasingly remove the traditional separation between batch and stream processing through unified execution engines, which let you apply identical transformation logic to historical and real-time data.
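The core idea can be sketched without any particular engine: write the transformation once against a generic iterable, then feed it either a bounded dataset or a live source. The `enrich` function, the `live_feed` generator, and the field names are hypothetical and exist only for this illustration.

```python
from typing import Iterable, Iterator


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Single transformation shared by historical and live data."""
    for e in events:
        yield {**e, "amount_usd": round(e["amount_cents"] / 100, 2)}


def live_feed() -> Iterator[dict]:
    """Hypothetical stand-in for an unbounded real-time source."""
    yield {"id": 3, "amount_cents": 999}


historical = [{"id": 1, "amount_cents": 1250}, {"id": 2, "amount_cents": 300}]
batch_result = list(enrich(historical))     # bounded input
stream_result = list(enrich(live_feed()))   # same logic, lazily produced input
```

Keeping one code path avoids the drift that can appear when batch and streaming versions of the same business rule are maintained separately.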

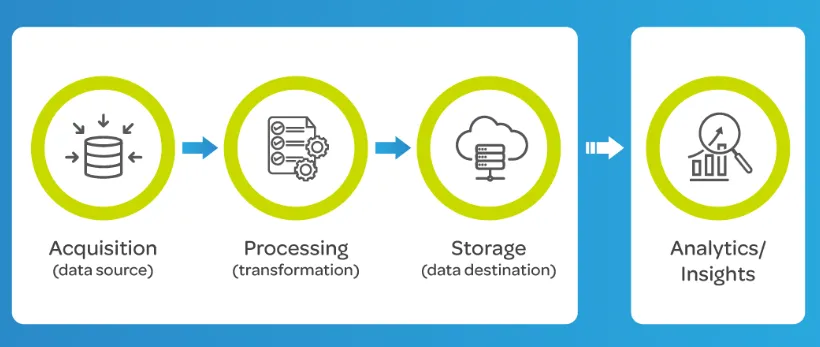

How Does Automated Data Processing Work?

- Data Collection: Automated systems pull data from sensors, databases, user input, and external APIs, creating a single source of truth for further processing.

- Data Cleaning: Once collected, data is cleaned to remove duplicates, fill missing values, and correct invalid entries.

- Data Transformation: Clean data is transformed (aggregated, normalized, and enriched) so it's ready for analysis. Automated ETL tools streamline this step.

- Data Storage: Finally, processed data is stored in databases, data warehouses, or data lakes, ensuring it remains accessible, secure, and ready for downstream applications.
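The four stages above can be sketched end to end with the Python standard library. Everything here is an assumption made for illustration: the `collect` source returns hard-coded rows instead of calling real systems, the cleaning rules (a default temperature and a plausible range) are arbitrary, invalid readings are simply discarded rather than corrected, and an in-memory SQLite database stands in for a warehouse.

```python
import sqlite3


def collect() -> list[dict]:
    """Hypothetical source; a real system would query APIs, sensors, or databases."""
    return [
        {"id": 1, "city": " berlin ", "temp_c": 21.5},
        {"id": 1, "city": " berlin ", "temp_c": 21.5},  # duplicate
        {"id": 2, "city": "Paris", "temp_c": None},      # missing value
        {"id": 3, "city": "ROME", "temp_c": 999.0},      # invalid reading
    ]


def clean(rows: list[dict], default_temp: float = 20.0) -> list[dict]:
    """Drop duplicate ids, fill missing temperatures, discard out-of-range values."""
    seen, out = set(), []
    for row in rows:
        if row["id"] in seen:
            continue
        seen.add(row["id"])
        temp = default_temp if row["temp_c"] is None else row["temp_c"]
        if -90.0 <= temp <= 60.0:
            out.append({**row, "temp_c": temp})
    return out


def transform(rows: list[dict]) -> list[dict]:
    """Normalize city names and enrich each row with a Fahrenheit column."""
    return [
        {"id": r["id"], "city": r["city"].strip().title(),
         "temp_c": r["temp_c"], "temp_f": r["temp_c"] * 9 / 5 + 32}
        for r in rows
    ]


def store(rows: list[dict], conn: sqlite3.Connection) -> None:
    """Persist processed rows to a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS weather "
        "(id INTEGER PRIMARY KEY, city TEXT, temp_c REAL, temp_f REAL)"
    )
    conn.executemany(
        "INSERT INTO weather VALUES (:id, :city, :temp_c, :temp_f)", rows
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(transform(clean(collect())), conn)
stored = conn.execute(
    "SELECT id, city, temp_c, temp_f FROM weather ORDER BY id"
).fetchall()
```

Each stage takes plain data in and returns plain data out, so any one of them can be tested or replaced without touching the others.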

What Is Data Observability and Why Is It Critical?

Data observability provides comprehensive visibility into data health, pipeline performance, and system behavior.

The Five Pillars of Data Observability

- Data Quality Monitoring: Validates accuracy, completeness, and consistency in real time.

- Lineage and Traceability: Documents every data movement and transformation.

- Anomaly Detection and Predictive Analytics: Baselines normal behavior and flags deviations.

- Metadata Correlation: Links pipeline events to business metrics for rapid root-cause analysis.

- Proactive Health Scoring: Synthesizes observability metrics into actionable grades.
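To make the quality-monitoring and health-scoring pillars more concrete, here is a small sketch that computes two metrics and rolls them into a letter grade. The metric definitions, the function names, and the grade thresholds are all assumptions picked for illustration; real observability platforms define their own.

```python
def completeness(rows: list[dict], required: list[str]) -> float:
    """Fraction of rows in which every required field is present and not None."""
    if not rows:
        return 0.0
    ok = sum(all(r.get(f) is not None for f in required) for r in rows)
    return ok / len(rows)


def freshness(age_minutes: float, max_age_minutes: float) -> float:
    """1.0 for brand-new data, falling linearly to 0.0 at the maximum allowed age."""
    return max(0.0, 1.0 - age_minutes / max_age_minutes)


def health_grade(scores: dict[str, float]) -> str:
    """Average the metric scores and map the result to a grade (arbitrary cutoffs)."""
    avg = sum(scores.values()) / len(scores)
    return "A" if avg >= 0.9 else "B" if avg >= 0.75 else "C" if avg >= 0.5 else "F"


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},              # incomplete row
    {"id": 3, "email": "c@example.com"},
    {"id": 4, "email": "d@example.com"},
]
scores = {
    "completeness": completeness(rows, ["id", "email"]),
    "freshness": freshness(age_minutes=15, max_age_minutes=60),
}
grade = health_grade(scores)
```

A single grade is easy to alert on, while the underlying `scores` dictionary preserves the detail needed for root-cause analysis.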

How Are AI and Machine Learning Transforming ADP?

The integration of artificial intelligence and machine learning into automated data processing is one of the most significant trends reshaping the field, including in data-heavy areas such as personal lending.

Intelligent Data Pipeline Orchestration

AI creates self-optimizing pipelines that adjust processing parameters based on workload characteristics and historical performance.

Automated Data Quality Assessment

Machine-learning models detect anomalies and quality issues across diverse data sources without predefined rules.
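A trained model is beyond the scope of a short example, so the sketch below substitutes a simple statistical baseline: the modified z-score based on the median absolute deviation (MAD). It shares the key property described above, in that the notion of "normal" is learned from the data rather than hard-coded, but it is not a machine-learning model. The 3.5 cutoff is a commonly used convention, and the row counts are made up.

```python
from statistics import median


def mad_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indices whose modified z-score exceeds `threshold`."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread in the data, so nothing can be ranked as unusual
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Rows loaded per day; the sixth day shows a sharp drop.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 120, 995]
flagged = mad_outliers(daily_row_counts)
```

Using the median instead of the mean keeps the baseline stable even when the outlier itself is extreme.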

Predictive Data Processing

ML models anticipate future processing needs, enabling proactive resource scaling and capacity planning.
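As a deliberately simple stand-in for a forecasting model, the sketch below predicts the next hour's volume with a moving average and converts that forecast into a worker count. The function names, the per-worker throughput, and the 20% headroom are hypothetical values chosen for illustration.

```python
import math


def forecast_next(history: list[float], window: int = 3) -> float:
    """Naive forecast: mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def workers_needed(
    expected_rows: float, rows_per_worker: int, headroom: float = 0.2
) -> int:
    """Workers required to handle the forecast plus a safety margin."""
    return max(1, math.ceil(expected_rows * (1 + headroom) / rows_per_worker))


hourly_rows = [80_000, 90_000, 100_000, 110_000]
expected = forecast_next(hourly_rows)       # mean of the last three hours
workers = workers_needed(expected, rows_per_worker=25_000)
```

A production system would likely replace `forecast_next` with a model that accounts for trend and seasonality, while the scaling step could stay the same.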

Self-Learning Data Pipelines

Reinforcement-learning agents experiment with alternative execution plans and autonomously implement the most efficient workflows.
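One of the simplest forms of this idea is an epsilon-greedy multi-armed bandit: mostly run the plan that has performed best so far, but occasionally try another to keep the estimates current. The sketch below is an illustration under stated assumptions and not how any particular product works; the plan names and their simulated runtimes are hypothetical, and `trials` is assumed to be at least the number of plans.

```python
import random
from typing import Callable


def choose_plan(
    plans: dict[str, Callable[[], float]],
    trials: int = 50,
    epsilon: float = 0.1,
    seed: int = 0,
) -> str:
    """Epsilon-greedy search for the plan with the lowest average observed cost."""
    rng = random.Random(seed)
    totals = {name: 0.0 for name in plans}
    counts = {name: 0 for name in plans}

    def mean_cost(name: str) -> float:
        return totals[name] / counts[name]

    for _ in range(trials):
        untried = [n for n in plans if counts[n] == 0]
        if untried:
            name = untried[0]                    # try every plan once first
        elif rng.random() < epsilon:
            name = rng.choice(list(plans))       # explore a random plan
        else:
            name = min(plans, key=mean_cost)     # exploit the best plan so far
        totals[name] += plans[name]()            # run the plan, record its cost
        counts[name] += 1
    return min(plans, key=mean_cost)


# Hypothetical execution plans; each returns a simulated runtime in seconds.
plans = {
    "full_scan": lambda: 12.0,
    "partition_pruned": lambda: 4.0,
    "cached_join": lambda: 6.5,
}
best = choose_plan(plans)
```

With real workloads the observed costs would be noisy, which is why the occasional exploration step matters: it lets the agent notice when a previously slow plan becomes the faster option.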

Which Tools Can Help You Build Automated Data Processing Workflows?

1. Airbyte

Airbyte is an open-source data-integration platform with 600+ connectors, a no-code UI, and an AI-powered Connector Builder.

2. Azure Data Factory

Azure Data Factory offers 90+ built-in connectors, a visual drag-and-drop interface, and native integration with Azure services.

3. IBM DataStage

IBM DataStage provides a graphical designer, parallel-processing engine, and metadata-driven governance features for enterprise ETL/ELT workloads.

Conclusion

Automated data processing transforms how enterprises handle data by eliminating manual tasks and enabling real-time insights. Modern ADP systems incorporate AI capabilities that optimize data pipelines while ensuring quality, security, and compliance across diverse environments. By implementing the right automation strategy, organizations can focus on building business value rather than maintaining data infrastructure, ultimately turning data bottlenecks into competitive advantages.

Frequently Asked Questions

What is automated data processing (ADP)?

ADP uses technology to collect, clean, transform, and store data automatically with minimal human intervention.

Why should businesses use automated data processing?

It improves efficiency, scales easily, enhances security, supports real-time decision-making, and lowers costs.

Which tools can help automate data processing workflows?

Popular options include Airbyte, Azure Data Factory, and IBM DataStage.

How does AI enhance automated data processing?

AI introduces intelligent orchestration, adaptive transformation, real-time anomaly detection, and predictive scaling, all of which increase speed and accuracy without manual intervention.
