Real-Time Data Processing: Architecture, Tools & Examples

Real-time data processing transforms raw data within milliseconds, enabling rapid decision-making and enhanced customer experiences across industries like finance, retail, healthcare, and manufacturing. Key architectures include Lambda, Kappa, and Delta, each balancing batch and streaming workloads, while challenges involve scalability, data quality, complexity, security, and cost. Emerging trends such as Zero-ETL and AI-native infrastructures reduce latency and operational overhead, with tools like Apache Kafka, Flink, and Airbyte facilitating robust, scalable real-time pipelines.

Data processing transforms raw data into a usable format for real-world applications. Among its many approaches, real-time processing offers the lowest latency, enabling rapid insight generation and decision-making. Organizations increasingly recognize that the ability to process and act on data in real time is a critical differentiator: it lets them respond more quickly to market changes, improve operational efficiency, and deliver better customer experiences.

This article explores the architecture, benefits, challenges, tooling, and use cases of real-time data processing, along with emerging trends that are reshaping the landscape.

What Is Real-Time Data Processing and How Does It Work?

Real-time data processing ingests, transforms, stores, and analyzes data as it is produced, typically within milliseconds. This approach has evolved from a niche technical capability into a foundational requirement for modern business operations, and demand for fresh, reliable data continues to grow alongside the rise of AI-driven applications.

A common example is an e-commerce recommendation engine where customer actions like browsing, clicks, and purchases are captured immediately, and streaming analytics generates personalized recommendations in real time.

The shift toward real-time processing is more than a technological upgrade. It changes how organizations think about and use their data assets. Traditional architectures that rely heavily on batch operations and periodic updates are proving inadequate for workloads that demand immediate responsiveness and contextual relevance.

The real-time processing workflow consists of four critical stages that work in concert to deliver immediate insights. Data collection serves as the entry point, ingesting information from diverse sources such as server logs, IoT devices, social media feeds, and transactional systems via tools like Apache Kafka or Amazon Kinesis.

Data processing involves aggregating, cleaning, transforming, and enriching streams using sophisticated algorithms and business logic. Data storage persists results in databases, streaming platforms, or in-memory stores optimized for rapid access and retrieval.

Finally, data distribution exposes processed data to downstream systems for analytics, operational decision-making, or feeding other real-time applications.
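The four stages above can be sketched as a chain of Python generators. This is a minimal illustration only: the event fields, the `RAW_EVENTS` list, and the in-memory `purchase_counts` table are hypothetical stand-ins for a real broker (such as Apache Kafka or Amazon Kinesis) and a real data store.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator

# Hypothetical click events; in production these would arrive from a broker
# rather than from an in-memory list.
RAW_EVENTS = [
    {"user": "u1", "action": "view", "item": "shoes"},
    {"user": "u2", "action": "purchase", "item": "hat"},
    {"user": "u1", "action": "purchase", "item": "shoes"},
    {"user": None, "action": "view", "item": "hat"},  # malformed: no user
]


def collect(source: Iterable[dict]) -> Iterator[dict]:
    """Stage 1 (collection): ingest events one at a time as they are produced."""
    for event in source:
        yield event


def process(events: Iterable[dict]) -> Iterator[dict]:
    """Stage 2 (processing): drop malformed events and enrich the rest."""
    for event in events:
        if not event.get("user"):
            continue
        yield {**event, "is_purchase": event["action"] == "purchase"}


def store(events: Iterable[dict], table: Dict[str, int]) -> Iterator[dict]:
    """Stage 3 (storage): persist a running purchase count per item."""
    for event in events:
        if event["is_purchase"]:
            table[event["item"]] += 1
        yield event


def distribute(events: Iterable[dict]) -> list:
    """Stage 4 (distribution): hand processed events to downstream consumers."""
    return list(events)


purchase_counts: Dict[str, int] = defaultdict(int)
delivered = distribute(store(process(collect(RAW_EVENTS)), purchase_counts))
```

Because each stage is a generator, an event flows through all four stages before the next one is read, which mirrors the per-event nature of stream processing.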

Modern real-time processing systems emphasize scalability, adaptability, and integration with machine learning frameworks. They prioritize efficient handling of unstructured data and compatibility with training and inference pipelines. This evolution is particularly evident in the adoption of storage-compute separation, where systems use cloud storage as the primary storage for stream processing, a significant departure from traditional approaches that relied on local storage and memory.

What Are the Key Benefits of Real-Time Data Processing?

Faster Decision-Making

Real-time processing surfaces opportunities and threats as they emerge, changing how organizations respond to dynamic market conditions. For example, predictive maintenance programs can analyze sensor data in real time, identifying likely failures before they occur and scheduling maintenance to minimize production disruptions.

Enhanced Data Quality

Anomalies appear near the source in real-time systems, simplifying root-cause analysis and correction while data context remains fresh and actionable.

Elevated Customer Experience

Rapid feedback loops improve personalization and engagement by enabling immediate response to customer behavior and preferences.

Increased Data Security

Continuous monitoring detects fraud and security breaches in real time, enabling immediate intervention before significant damage occurs.

How Does Real-Time Processing Compare to Batch and Near Real-Time Approaches?

Organizations increasingly adopt hybrid processing models that combine real-time and batch processing capabilities, optimizing resource utilization while maintaining responsiveness for critical operations.

What Are the Main Architectural Approaches for Real-Time Processing?

1. Lambda Architecture

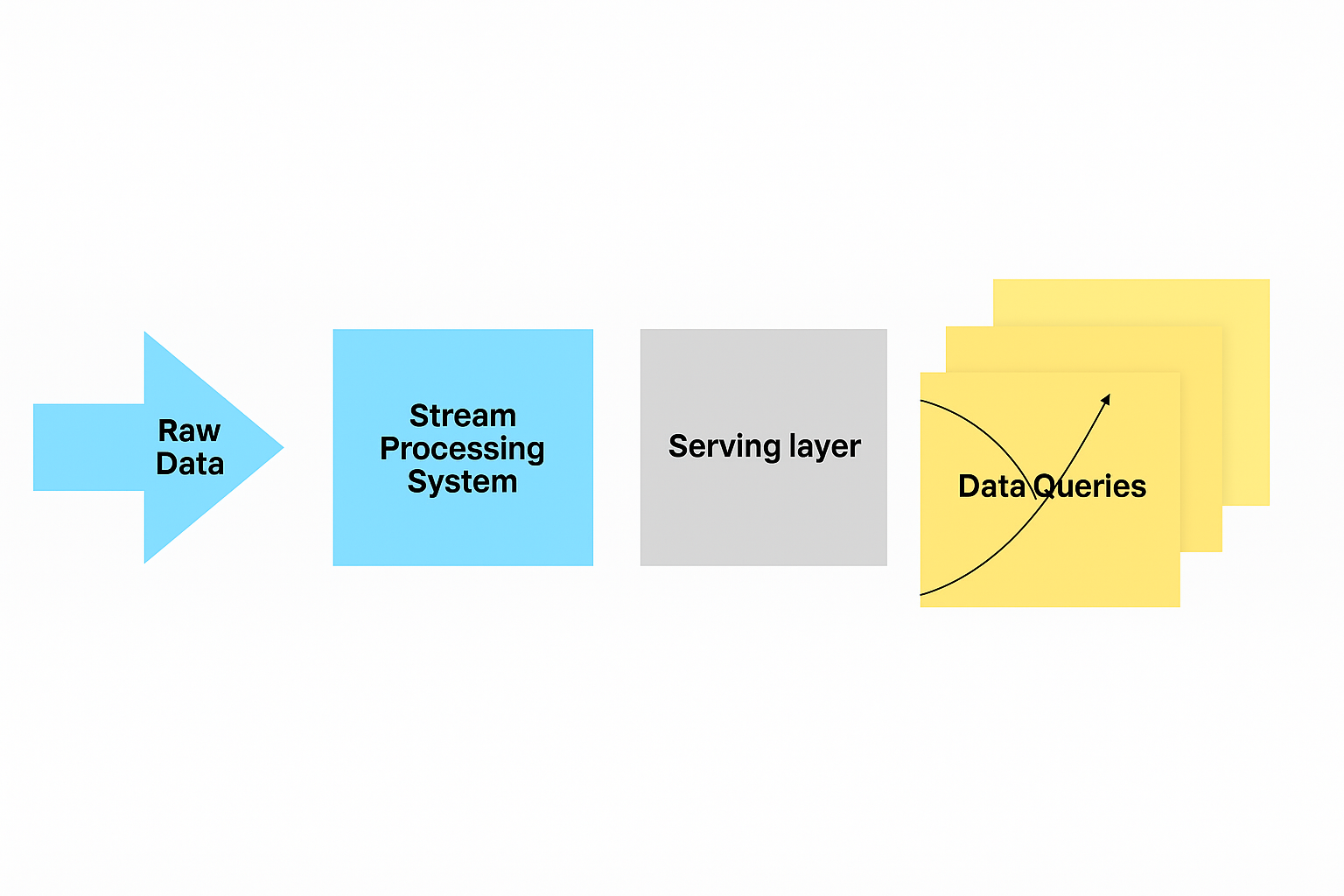

Lambda architecture employs three distinct layers (batch, speed, and serving) that merge historical and live data to provide comprehensive analytics capabilities. The batch layer processes large volumes of historical data to generate accurate, complete views, while the speed layer handles recent data with lower latency but potentially reduced accuracy. The serving layer combines results from both layers to present unified views to applications and users. This architecture provides fault tolerance and covers both historical analysis and real-time processing, though it requires maintaining two separate processing systems.
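A toy sketch of the three layers may help make the division of labor concrete. The page-view events and function names below are hypothetical, and each "layer" is a plain function rather than a separate system, which is the main simplification here.

```python
from collections import Counter


def batch_view(historical_events):
    """Batch layer: recompute complete counts from all historical events."""
    return Counter(event["page"] for event in historical_events)


def speed_view(recent_events):
    """Speed layer: count only events the batch layer has not absorbed yet."""
    return Counter(event["page"] for event in recent_events)


def serve(batch, speed):
    """Serving layer: merge both views into a single answer for queries."""
    return batch + speed


# Hypothetical data: events already covered by the last batch run, plus
# events that arrived after it.
historical = [{"page": "/home"}, {"page": "/home"}, {"page": "/cart"}]
recent = [{"page": "/home"}, {"page": "/checkout"}]

page_views = serve(batch_view(historical), speed_view(recent))
```

Note that the counting logic appears twice, once per layer. In a real deployment those would be two codebases on two systems, which is the maintenance cost the paragraph above refers to.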

2. Kappa Architecture

Kappa architecture simplifies operations by using a single streaming layer that handles both replays and live data, eliminating the complexity of maintaining separate batch and streaming systems. This approach treats all data as streams, processing both historical and real-time data through the same streaming infrastructure. Kappa architecture reduces operational complexity and development overhead by maintaining a single codebase for data processing logic, making it easier to ensure consistency between historical and real-time processing results.

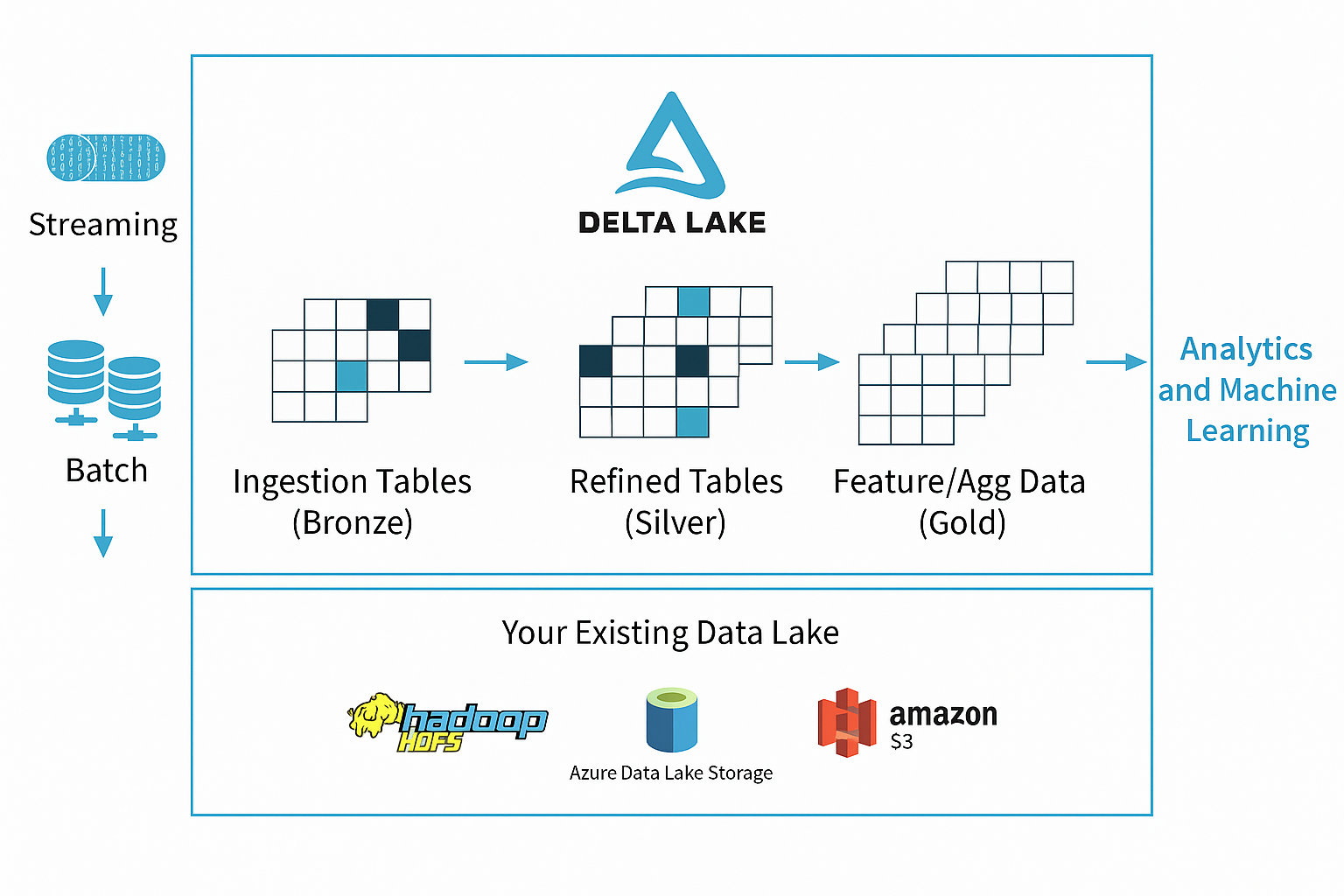
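The single-layer idea behind Kappa can be sketched with an append-only log and one processing function that serves both live reads and replays. `EventLog` is a hypothetical in-memory stand-in for a replayable stream, not the API of any particular broker.

```python
class EventLog:
    """Append-only log standing in for a replayable stream (hypothetical)."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset=0):
        """Return an iterator over events starting at the given offset."""
        return iter(self._events[offset:])


def total_by_account(events):
    """The one processing job: identical code for replays and live data."""
    totals = {}
    for event in events:
        totals[event["account"]] = totals.get(event["account"], 0) + event["amount"]
    return totals


log = EventLog()
for event in [{"account": "a", "amount": 10}, {"account": "b", "amount": 5}]:
    log.append(event)

live_totals = total_by_account(log.read_from(0))

# A new event arrives; reprocessing means replaying the log from offset 0
# through the same function instead of running a separate batch job.
log.append({"account": "a", "amount": 7})
replayed_totals = total_by_account(log.read_from(0))
```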

3. Delta Architecture

Delta architecture employs micro-batching to unify streaming and batch workloads in modern data lakes, providing a balanced approach that combines the benefits of both processing paradigms. This architecture processes data in small, frequent batches that provide near real-time capabilities while maintaining the reliability and correctness guarantees associated with batch processing. Delta architecture leverages modern data lake technologies and formats that support both streaming and batch access patterns, enabling organizations to implement unified data processing strategies without sacrificing functionality or performance.
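Micro-batching itself is simple to illustrate. The sketch below groups a stream into fixed-size batches and aggregates each one. Grouping by record count is an assumption made for brevity; production engines more commonly trigger batches on a time interval. The `readings` list is hypothetical.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield successive lists of up to batch_size records from the stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical sensor readings arriving as a stream.
readings = [3, 5, 8, 1, 9, 2, 7]

# Each micro-batch is processed with ordinary batch logic (here, a sum).
batch_sums = [sum(batch) for batch in micro_batches(readings, batch_size=3)]
```

Smaller batches lower latency at the cost of more per-batch overhead, which is the trade-off this architecture tunes.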

What Are the Primary Challenges in Real-Time Data Processing?

Scalability presents the first major challenge as systems must handle surges in data volume without overload. Organizations need infrastructure that can dynamically scale to meet peak demand while maintaining consistent performance levels.

Data quality issues emerge from diverse formats, occasional data loss, and limited validation time. Real-time environments provide little opportunity for extensive data cleansing and validation compared to batch processing scenarios.

Complexity increases significantly when coordinating ingestion, transformation, and storage processes in parallel. Multiple components must work seamlessly together while maintaining fault tolerance and data consistency.

Security requirements demand robust controls without adding latency to processing pipelines. Traditional security measures may introduce delays that compromise real-time performance requirements.

Cost considerations include specialized infrastructure, expert personnel, and operational overhead. Real-time systems typically require more expensive hardware and skilled resources compared to traditional batch processing environments.

How Are Zero-ETL Architectures Transforming Real-Time Data Processing?

Zero-ETL architectures eliminate traditional extract-transform-load pipelines, enabling direct, real-time data integration between operational systems and analytical environments. They rely on data virtualization, schema-on-read, and advanced query engines, dramatically reducing latency and operational complexity while cutting costs.

This approach represents a fundamental shift from traditional data processing models. Instead of moving and transforming data through multiple stages, zero-ETL enables direct querying and analysis of data where it resides.

Organizations benefit from reduced infrastructure complexity and faster time-to-insights. The elimination of intermediate data movement steps reduces both latency and potential failure points in data pipelines.

How Is Artificial Intelligence Revolutionizing Real-Time Data Processing?

AI-native data infrastructure emphasizes efficient handling of unstructured data, real-time processing, and seamless integration with machine learning frameworks. This integration enables organizations to deploy intelligent systems that can learn and adapt continuously.

Real-time AI enables continuous model updates that improve accuracy over time. Models can incorporate new data immediately rather than waiting for batch retraining cycles.
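One way to picture continuous model updates is online learning, where the model takes a small gradient step for every record instead of waiting for a retraining job. The sketch below uses a one-feature linear model trained with stochastic gradient descent. The class name, learning rate, and synthetic stream are all illustrative assumptions, not a description of any specific product.

```python
class OnlineLinearModel:
    """One-feature linear regression updated one example at a time (SGD)."""

    def __init__(self, learning_rate=0.05):
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.weight * x + self.bias

    def update(self, x, y):
        """Take one gradient step on the squared error for a single example."""
        error = self.predict(x) - y
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error
        return error


model = OnlineLinearModel()

# Synthetic stream following y = 2x + 1, repeated to simulate ongoing traffic.
stream = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)] * 500

for x, y in stream:
    model.update(x, y)  # the model improves as each record arrives
```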

On-demand predictions embedded in pipelines allow for immediate decision-making based on current data patterns. This capability transforms how organizations respond to changing conditions and opportunities.

Retrieval-augmented generation (RAG) for LLMs provides contextually relevant responses by combining real-time data with pre-trained language models. Edge AI deployments enable autonomous local decisions without requiring cloud connectivity, reducing latency and improving reliability in distributed environments.

What Are the Most Common Use Cases for Real-Time Data Processing?

Financial services leverage real-time processing for algorithmic trading and fraud detection. High-frequency trading platforms require microsecond response times to capitalize on market opportunities and execute trades effectively.

E-commerce and retail organizations implement real-time recommendations and dynamic pricing systems. These applications personalize customer experiences while optimizing revenue through responsive pricing strategies.

Healthcare systems deploy continuous patient monitoring and predictive analytics to improve patient outcomes. Real-time analysis of vital signs and medical data enables early intervention and preventive care.

Manufacturing and industrial applications focus on predictive maintenance and quality control. Sensor data analysis prevents equipment failures and maintains product quality standards through immediate corrective actions.

Transportation and logistics companies optimize ride-hailing algorithms and fleet management systems. Real-time location data and traffic patterns enable efficient routing and resource allocation.

What Are Specific Examples of Real-Time Processing Applications?

Stream processing powers inventory and pricing optimization in retail environments. Systems analyze purchase patterns and demand signals to adjust pricing and stock levels dynamically.

Financial fraud detection systems operate at sub-second latency to identify suspicious transactions before completion. Machine learning models analyze transaction patterns and flag anomalies immediately.
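As a simplified illustration of in-stream flagging, the sketch below applies a rule-based velocity check over a sliding time window. This is a deliberately simpler technique than the machine learning models mentioned above. The thresholds, card identifier, and timestamps are hypothetical.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag a card that exceeds max_txns transactions within window_seconds."""

    def __init__(self, max_txns, window_seconds):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # card_id -> recent timestamps

    def is_suspicious(self, card_id, timestamp):
        window = self._recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_txns


check = VelocityCheck(max_txns=3, window_seconds=60)

# Four transactions within 30 seconds, then one much later.
timestamps = [0, 10, 20, 30, 200]
flags = [check.is_suspicious("card-1", t) for t in timestamps]
```

The decision is made per transaction using only bounded, in-memory state, which is what keeps this kind of check compatible with sub-second latency.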

IoT sensor analytics enable predictive maintenance in manufacturing facilities. Continuous monitoring of equipment performance identifies potential failures before they cause production disruptions.

Real-time ETL with change data capture (CDC) keeps analytical systems synchronized with operational databases. Changes propagate immediately to maintain data consistency across systems.
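The core of CDC-based synchronization is applying an ordered stream of change events to a replica. The sketch below assumes a hypothetical event shape with `op`, `key`, and `row` fields; real CDC tools each define their own event format.

```python
def apply_change(replica, change):
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        replica[key] = change["row"]
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


# Hypothetical change events, in the order they were committed at the source.
changes = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "plan": "pro"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in changes:
    apply_change(replica, change)
```

Applying events in commit order matters: swapping the update and the first insert for key 1 would leave the replica with stale data.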

Network traffic monitoring systems detect threats and implement auto-rerouting to maintain service availability. Security systems analyze patterns in real time to identify and respond to potential attacks.

Location-based routing optimizes ride-sharing and delivery services by analyzing real-time traffic data and demand patterns. Predictive maintenance systems for power grids analyze sensor data to maintain reliability and prevent outages.

What Tools and Technologies Enable Real-Time Data Processing?

Ingestion tools include Apache Kafka, Apache NiFi, and Amazon Kinesis for collecting and streaming data from various sources. These platforms provide reliable, scalable data ingestion with built-in fault tolerance.

Stream processing frameworks such as Apache Flink, Apache Spark Streaming, Apache Storm, and Apache Samza handle real-time data transformation and analysis. Each offers different trade-offs between latency, throughput, and complexity.

Storage solutions include Apache Cassandra, Amazon DynamoDB, Firebase, and MongoDB for persisting processed data with low-latency access. These databases are optimized for high-volume, real-time workloads.

Analytics engines like Google Cloud Dataflow, Azure Stream Analytics, StreamSQL, and IBM Streams provide managed services for real-time data analysis and insights generation.

What Are the Trade-offs Between Cloud and On-Premise Deployments?

Hybrid models combine benefits of both approaches, allowing organizations to balance control, cost, and operational requirements.

How Do You Build an Effective Real-Time Data Pipeline?

1. Define Clear Objectives

Start by establishing business requirements, latency expectations, throughput needs, and success metrics. Clear objectives guide technology selection and architecture decisions throughout the implementation process.

2. Choose Appropriate Data Sources

Identify relevant data sources including logs, IoT devices, APIs, and transactional systems. Consider data volume, velocity, and variety requirements when selecting sources for real-time processing.

3. Select Robust Ingestion Technology

Choose low-latency, fault-tolerant streaming platforms that can handle expected data volumes reliably. Consider factors like message ordering, delivery guarantees, and integration capabilities.

4. Implement Processing Logic

Design validation, transformation, enrichment, and machine learning inference capabilities. Ensure processing logic can handle data quality issues and maintain performance under varying loads.

5. Pick Appropriate Storage Solutions

Select scalable, performant storage systems that fit your access patterns and comply with regulatory requirements. Consider factors like consistency requirements, query patterns, and retention policies.

6. Deploy and Monitor Continuously

Implement comprehensive observability, alerting, and continuous optimization practices. Monitor system performance, data quality, and business metrics to ensure ongoing success.
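The delivery guarantees mentioned in step 3 shape the processing logic in step 4. Under at-least-once delivery, a message may arrive more than once, so consumers are often written to be idempotent. The sketch below deduplicates by message ID. The message shape and the in-memory `seen_ids` set are assumptions; a production system would persist that state.

```python
class IdempotentConsumer:
    """Apply each message at most once, even if the broker redelivers it."""

    def __init__(self):
        self.seen_ids = set()  # assumption: kept in memory for illustration
        self.balance = 0

    def handle(self, message):
        """Return True if the message was applied, False if it was a duplicate."""
        if message["id"] in self.seen_ids:
            return False
        self.seen_ids.add(message["id"])
        self.balance += message["amount"]
        return True


# Hypothetical deliveries: message m1 is redelivered after a retry.
deliveries = [
    {"id": "m1", "amount": 50},
    {"id": "m2", "amount": -20},
    {"id": "m1", "amount": 50},
]

consumer = IdempotentConsumer()
applied = [consumer.handle(message) for message in deliveries]
```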

How Does Airbyte Enable Real-Time Data Processing?

Airbyte offers comprehensive real-time data processing capabilities through its modern integration platform. The platform provides more than 600 connectors that enable organizations to build robust real-time data pipelines without extensive custom development.

Real-time CDC connectors for major databases enable immediate data synchronization between operational systems and analytical environments. These connectors capture changes as they occur, ensuring downstream systems receive the most current data.

AI Connector Builder generates connectors from natural-language prompts, dramatically reducing development time for custom integrations. This capability enables organizations to quickly connect new data sources without traditional connector development overhead.

Flexible deployment options include fully-managed cloud services and self-hosted enterprise solutions. Organizations can choose deployment models that meet their security, compliance, and operational requirements.

Capacity-based pricing provides predictable costs independent of data volume, enabling organizations to scale their real-time processing capabilities without unexpected cost increases. This pricing model supports growth while maintaining budget predictability.

Native integration with orchestration tools and vector databases enables seamless incorporation into existing data workflows. The platform supports both structured and unstructured data movement, providing unified capabilities for diverse data processing requirements.

Frequently Asked Questions

What is the difference between real-time and streaming data processing?

All real-time processing is streaming, but not all streaming achieves the millisecond-level latency required for true real-time scenarios. Streaming refers to the processing paradigm that handles continuous data flows, while real-time refers specifically to processing under strict latency constraints.

How do you handle data quality issues in real-time processing?

Implement automated validation, anomaly detection, and remediation workflows that operate in-stream without adding significant latency. Use schema validation, statistical outlier detection, and automated data correction rules to maintain quality standards while preserving processing speed.
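A minimal sketch of in-stream validation might combine a schema check with a running z-score test. The field names, threshold, and sample records below are hypothetical. The running mean and variance use Welford's algorithm, so each record is checked in constant time without buffering the stream.

```python
import math


class StreamValidator:
    """Schema check plus z-score outlier detection on a running baseline."""

    def __init__(self, required_fields, z_threshold=3.0, min_samples=5):
        self.required_fields = required_fields  # field name -> expected type
        self.z_threshold = z_threshold
        self.min_samples = min_samples
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations (Welford)

    def check(self, record):
        for name, expected_type in self.required_fields.items():
            if not isinstance(record.get(name), expected_type):
                return "invalid_schema"
        value = record["value"]
        if self.count >= self.min_samples:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.z_threshold:
                return "outlier"  # outliers are not added to the baseline
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return "ok"


validator = StreamValidator({"sensor": str, "value": float})
records = [{"sensor": "s1", "value": v} for v in (10.0, 11.0, 9.0, 10.5, 9.5)]
records += [
    {"sensor": "s1", "value": "oops"},  # wrong type
    {"sensor": "s1", "value": 50.0},    # far from the running mean
    {"sensor": "s1", "value": 10.2},
]
results = [validator.check(record) for record in records]
```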

What are the key performance metrics for real-time data systems?

Processing latency, throughput, error rates, availability, and data quality scores represent core technical metrics. Domain-specific business metrics such as fraud detection accuracy, recommendation click-through rates, and customer satisfaction scores measure business value delivery.
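The core technical metrics can be computed from per-event timestamps. The sketch below uses hypothetical `(produced_ms, processed_ms, ok)` tuples and a nearest-rank percentile; monitoring systems typically compute these over rolling windows instead of a fixed list.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical events: (produced_ms, processed_ms, processed_ok).
events = [
    (0, 12, True),
    (100, 108, True),
    (200, 230, True),
    (300, 309, False),
    (400, 415, True),
]

latencies_ms = [processed - produced for produced, processed, _ in events]
p50_latency_ms = percentile(latencies_ms, 50)
p95_latency_ms = percentile(latencies_ms, 95)

# Error rate: share of events that failed processing.
error_rate = sum(1 for *_, ok in events if not ok) / len(events)

# Throughput: events per second over the observed window.
window_seconds = (events[-1][1] - events[0][0]) / 1000
throughput_per_second = len(events) / window_seconds
```

Tail percentiles such as p95 are usually more informative than the average, because a few slow events can breach a latency target while the mean still looks healthy.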

How do you ensure security in real-time environments?

Encrypt data in transit and at rest using industry-standard protocols and apply fine-grained access controls to limit data exposure. Use continuous threat monitoring tools optimized for streaming workloads to detect and respond to security incidents without compromising processing performance.

Conclusion

Real-time data processing has evolved from a specialized capability to a foundational requirement for modern enterprises. Advances in streaming technology, AI integration, and Zero-ETL approaches now enable organizations of all sizes to deploy low-latency, high-value data solutions. Success hinges on selecting the right architecture, balancing cost and complexity, and leveraging platforms that remove traditional barriers to real-time adoption. Organizations that embrace real-time processing capabilities position themselves to respond quickly to market changes and deliver superior customer experiences.
