Resource Pooling vs Agent Sprawl: Modern Hybrid Deployment Model

✨ AI Generated Summary

Agent sprawl in hybrid deployments leads to scattered, resource-heavy, and insecure processes that complicate management and increase costs. Resource pooling, managed via a centralized control plane, consolidates compute and connectivity, improving scalability, security, governance, and operational efficiency.

- Agent sprawl causes coverage gaps, security risks, resource drain, and operational overhead.

- Resource pooling enables elastic capacity, unified policy enforcement, centralized secrets, and streamlined updates.

- Modern hybrid models split control and data planes to ensure data sovereignty, outbound-only connectivity, and zero host installations.

- Best practices include consolidating orchestration, deploying shared data planes, automating scaling, and centralizing logging.

- Agents remain useful only in edge cases like air-gapped sites or legacy systems but should be phased out in favor of pooled infrastructure.

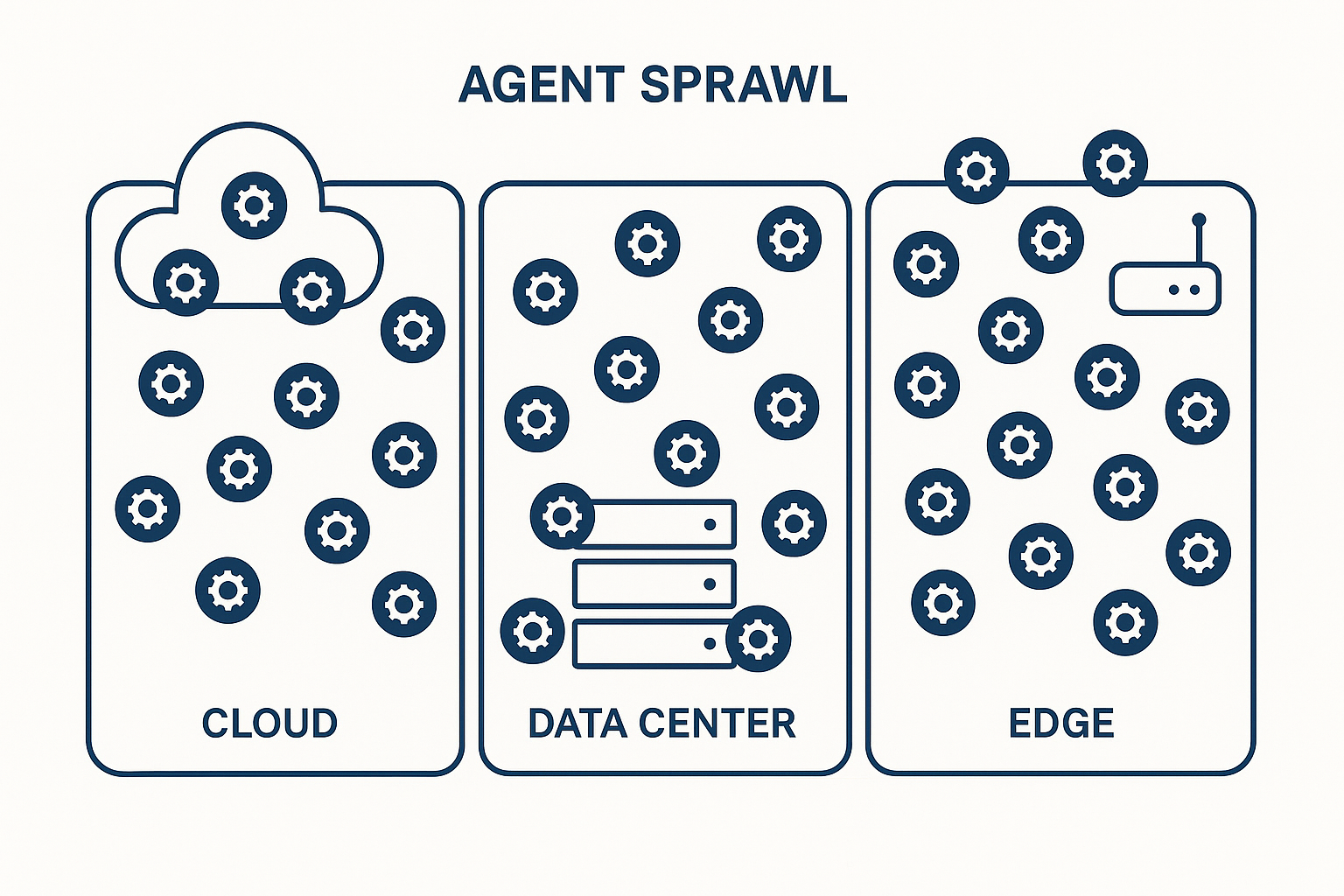

You manage workloads across data centers, cloud environments, and edge locations. Centralizing everything promises operational efficiency until teams report slow deployment cycles; decentralizing creates management complexity as agents multiply across every host. When that happens, monitoring dashboards show incomplete coverage, and the processes you do manage consume CPU, create security vulnerabilities, and fall behind on patches.

The real problem isn't choosing between cloud and on-premises, but achieving control, scale, and security without agent sprawl.

Modern hybrid deployment models solve this challenge through resource pooling and a single control plane that coordinates local execution. Let's examine why this proliferation happens and how resource pooling fixes it.

What Is Agent Sprawl in Hybrid Deployments?

Agent sprawl happens when every team in your hybrid stack installs its own monitoring or sync agent, until you can't keep track of which processes live where. Instead of one centrally managed runner, you end up with dozens, sometimes hundreds, scattered across clouds, data centers, and edge nodes.

Sprawl typically starts when siloed teams add their own processes to unblock projects without waiting for shared infrastructure. Without a unified control plane, each environment needs a separate scheduler and runner. Cloud-only tooling often demands on-premises "shims" to reach local data, multiplying installs across your infrastructure. What begins as a temporary fix turns permanent because nobody circles back to decommission it.

The costs compound quickly:

- Coverage gaps emerge because these distributed processes are hard to deploy everywhere

- Security risks multiply as elevated-privilege processes expand your attack surface and create patching races

- Resource drain occurs as each process consumes CPU, memory, and I/O you'd rather assign to workloads

- Version drift results from manual updates, leading to fragile pipelines

- Credential sprawl happens when credentials sit in config files that nobody tracks

- Operational burden grows as engineers spend weekends patching instead of building features

- Troubleshooting delays occur when logs are spread across machines, making root-cause analysis take hours

A global retailer ran more than 200 processes across its VPCs and on-prem clusters; when a breach hit, the team couldn't even tell which were still active, let alone whether they were patched. That's sprawl in action.
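The two questions that retailer couldn't answer (which processes are still active, and which are patched) reduce to a simple inventory check once heartbeats and versions are recorded in one place. The sketch below is illustrative only: `AgentRecord`, `audit`, the 24-hour silence threshold, and the host names are all assumptions, not part of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AgentRecord:
    host: str
    version: tuple[int, int, int]   # (major, minor, patch)
    last_heartbeat: datetime


def audit(agents, min_version, now, max_silence=timedelta(hours=24)):
    """Split an inventory into stale (silent) and outdated (unpatched) hosts."""
    stale = [a.host for a in agents if now - a.last_heartbeat > max_silence]
    outdated = [a.host for a in agents if a.version < min_version]
    return stale, outdated


# Hypothetical inventory for illustration.
now = datetime(2024, 1, 10, 12, 0)
agents = [
    AgentRecord("vpc-a-01", (2, 4, 1), now - timedelta(minutes=5)),
    AgentRecord("onprem-07", (2, 1, 0), now - timedelta(days=9)),
    AgentRecord("edge-12", (1, 9, 3), now - timedelta(hours=2)),
]
stale, outdated = audit(agents, min_version=(2, 4, 0), now=now)
```

With sprawl, the hard part is not this check but assembling a trustworthy inventory to run it against.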

What Is Resource Pooling and Why Does It Matter?

Resource pooling transforms hybrid operations by placing shared compute, storage, or connector pools behind a single control plane that you govern centrally. Instead of deploying dozens of processes across every host, you draw capacity from centralized pools only when workloads require it. Virtualization and dynamic allocation allow the same hardware to serve one job, then sit idle or pick up a different task seconds later.

The operational benefits are immediate. A unified control plane reduces moving parts, cutting patch management and troubleshooting overhead. On-demand capacity allocation increases utilization while reducing expensive over-provisioning. Governance improves because every job, secret, and audit trail exists in one place, not scattered across 200 processes that may not be current with security updates.
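To make on-demand allocation concrete, here is a minimal sketch of a pool that lends CPU cores to jobs and takes them back when the jobs finish. It is a toy model under stated assumptions: `ResourcePool`, the job names, and the 16-core size are hypothetical, and a real pool would also track memory, I/O, and queueing.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """A toy shared pool of CPU cores that jobs borrow and return."""
    total_cores: int
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def free_cores(self) -> int:
        return self.total_cores - sum(self.allocations.values())

    def acquire(self, job_id: str, cores: int) -> bool:
        """Reserve cores for a job; return False if the pool cannot fit it."""
        if cores <= 0 or job_id in self.allocations or cores > self.free_cores:
            return False
        self.allocations[job_id] = cores
        return True

    def release(self, job_id: str) -> None:
        """Return a job's cores to the pool so other work can use them."""
        self.allocations.pop(job_id, None)

    def utilization(self) -> float:
        return 1 - self.free_cores / self.total_cores


pool = ResourcePool(total_cores=16)
pool.acquire("cdc-sync", 8)
pool.acquire("batch-report", 6)
rejected = not pool.acquire("iot-ingest", 4)  # only 2 cores free, so this must wait
pool.release("cdc-sync")                       # capacity returns to the pool
accepted = pool.acquire("iot-ingest", 4)       # the same hardware now serves a new job
```

The point of the sketch is the last three lines: capacity freed by one job is immediately available to another, which is what per-host processes cannot offer.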

Here's how pooling compares with unchecked sprawl across key operational dimensions:

Whether you're reconciling trades in financial services or syncing IoT telemetry in manufacturing, shared pools deliver the control, scale, and auditability hybrid deployments require without drowning teams in individual process management.

How Does the Modern Hybrid Deployment Model Solve This Trade-Off?

A modern hybrid stack splits responsibilities between a cloud-hosted control plane and one or more regional data planes you run inside your own network. The control plane handles workload scheduling, policy enforcement, and upgrades. Your data planes execute jobs next to the data, keeping performance high and administrative access local.
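One way to picture the split is as a placement decision: the control plane chooses where a job runs but never handles the data itself. The following is a rough sketch, not a description of any vendor's scheduler; `Job`, `ControlPlane`, the `sensitive` flag, and the cluster names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    region: str             # jurisdiction where the data must stay
    sensitive: bool = True


class ControlPlane:
    """Decides where jobs run; it never touches the data itself."""

    def __init__(self, data_planes: dict[str, str], burst_plane: str):
        self.data_planes = data_planes   # region -> data plane cluster name
        self.burst_plane = burst_plane   # public-cloud pool for non-sensitive work

    def place(self, job: Job) -> str:
        if job.sensitive:
            # Sensitive jobs must run on the data plane inside their own region.
            if job.region not in self.data_planes:
                raise LookupError(f"no data plane registered for region {job.region!r}")
            return self.data_planes[job.region]
        # Non-sensitive jobs prefer a local plane but may burst to public cloud.
        return self.data_planes.get(job.region, self.burst_plane)


control = ControlPlane({"eu": "dp-eu", "us": "dp-us"}, burst_plane="cloud-burst")
payroll_target = control.place(Job("payroll", region="eu"))
clicks_target = control.place(Job("clickstream", region="apac", sensitive=False))
```

Refusing to place a sensitive job when no regional data plane exists, rather than falling back to the cloud, is the design choice that keeps sovereignty rules from being bypassed silently.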

This architecture delivers several key benefits:

- Unified governance through a single control plane gives you uniform RBAC, logging, and audit trails without juggling dozens of vendor dashboards

- Consistent policy enforcement lets governance teams apply one policy set that gets enforced everywhere, on-premises or in the cloud

- Complete visibility shows you exactly which pipelines are running, where, and for how long

- Data sovereignty protects sensitive workloads inside regulated regions through local data planes

- Placement flexibility enables bursty analytics jobs to run in public cloud when you need extra capacity

- Enhanced security through outbound-only connectivity keeps firewalls closed, removing a large attack surface compared with inbound callbacks

- Zero host installations eliminate the need to install a process on every host by orchestrating over APIs and container schedulers

- Namespace isolation ensures each data plane runs separately, so one team's upgrade never breaks another's pipeline

Airbyte Enterprise Flex follows this architecture. Its 600+ connectors run inside Kubernetes clusters you control, yet the hybrid control plane in Airbyte Cloud schedules, scales, and monitors them centrally with no per-source processes, no hidden drift, and no trade-off between control and convenience.

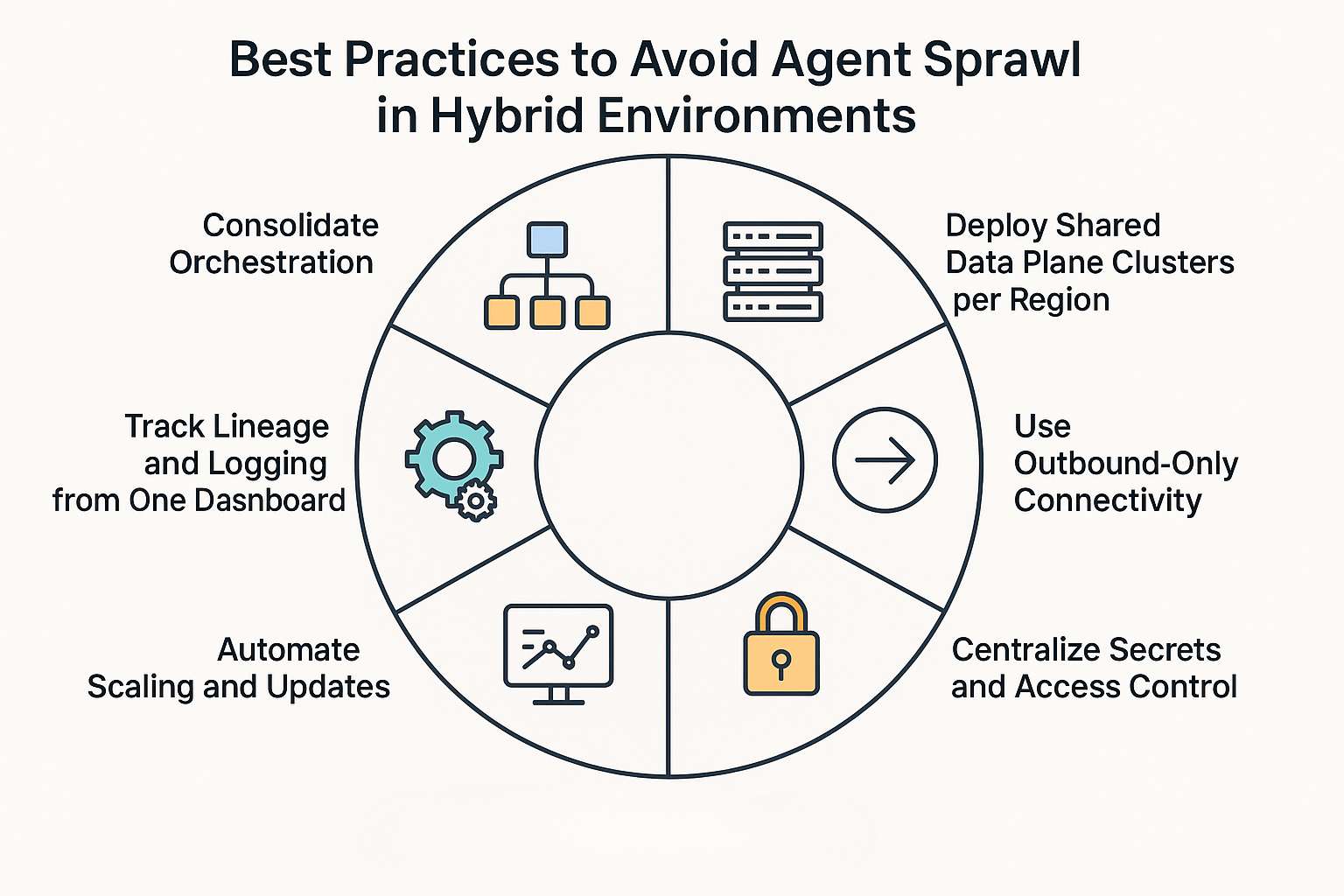

What Are the Best Practices to Avoid Agent Sprawl in Hybrid Environments?

Sprawl happens when every team solves connectivity problems by installing yet another local process. You end up supporting hundreds of daemons that drain CPU, drift out of date, and still cover less than half of your cloud assets. Stop this by adopting six habits that treat connectivity as shared infrastructure rather than per-host software.

1. Consolidate Orchestration

Give all pipelines a single control plane. Central orchestration tools handle scheduling, upgrades, and policy enforcement across clouds and on-prem. This removes the need for one-off installations on every server. A unified layer also shows you exactly which workloads are running where, eliminating governance gaps that sprawl creates.

2. Deploy Shared Data Plane Clusters per Region

Run a pooled execution cluster inside every jurisdiction that matters to you instead of spinning up a process for each source. This pattern respects data-sovereignty rules: workloads stay local while the cluster reports status back to the cloud control plane. Sizing is straightforward: allocate baseline capacity for steady workloads, then let the cluster burst when demand spikes.

3. Use Outbound-Only Connectivity

Processes that wait for inbound calls expose open ports and privileged sockets. Flip that flow. With an outbound-only model the cluster initiates all traffic. This fits behind existing firewalls and shrinks the attack surface that traditional installations create.
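The flipped flow can be sketched as a poll loop in which the data plane starts every exchange. This is a simplified illustration: `ControlPlaneStub` is an in-memory stand-in for what would normally be an outbound HTTPS call, and `poll_once`, the job names, and the status strings are hypothetical.

```python
from collections import deque
from typing import Callable, Optional


class ControlPlaneStub:
    """In-memory stand-in for a remote control plane API (hypothetical)."""

    def __init__(self):
        self.queue = deque()
        self.results = {}

    def next_job(self, plane_id: str) -> Optional[str]:
        return self.queue.popleft() if self.queue else None

    def report(self, plane_id: str, job: str, status: str) -> None:
        self.results[job] = (plane_id, status)


def poll_once(plane_id: str, control: ControlPlaneStub,
              run: Callable[[str], None]) -> bool:
    """One outbound poll: ask for work, run it locally, report the outcome.

    Returns False when no job was waiting. The data plane starts every
    exchange, so it never needs a listening port.
    """
    job = control.next_job(plane_id)
    if job is None:
        return False
    try:
        run(job)
        control.report(plane_id, job, "succeeded")
    except Exception:
        control.report(plane_id, job, "failed")
    return True
```

In a real deployment you would call something like `poll_once` on a timer or replace it with a long-lived outbound connection; either way, no inbound firewall rule is required.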

4. Centralize Secrets and Access Control

Hard-coded credentials living inside scattered processes invite breaches. Replace them with references to an enterprise vault or IAM system. Central secret stores rotate keys automatically and provide audit trails, a requirement for regulated industries.
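As a rough illustration of "references instead of credentials", the sketch below keeps only a pointer in the pipeline config and resolves it at run time. Everything named here is an assumption: the `secret://` reference syntax is invented for the example, and `InMemoryVault` stands in for whatever enterprise secret manager you actually use.

```python
import re

SECRET_REF = re.compile(r"^secret://(?P<path>[\w/.-]+)$")  # hypothetical reference syntax


class InMemoryVault:
    """Stand-in for an enterprise secret manager; records every read."""

    def __init__(self, secrets: dict[str, str]):
        self._secrets = secrets
        self.audit_log: list[str] = []

    def read(self, path: str) -> str:
        self.audit_log.append(path)
        return self._secrets[path]


def resolve_config(config: dict[str, str], vault: InMemoryVault) -> dict[str, str]:
    """Return a copy of config with secret references swapped for vault values."""
    resolved = {}
    for key, value in config.items():
        match = SECRET_REF.match(value)
        resolved[key] = vault.read(match["path"]) if match else value
    return resolved


vault = InMemoryVault({"prod/postgres/password": "s3cr3t"})
stored_config = {"host": "db.internal", "password": "secret://prod/postgres/password"}
runtime_config = resolve_config(stored_config, vault)
```

The stored config never contains the password, so rotating the key in the vault requires no change to any pipeline definition, and every read leaves an audit entry.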

5. Automate Scaling and Updates

Treat each regional cluster as reusable infrastructure. Auto-scaling frameworks grow capacity during peak loads and shrink when idle. This eliminates over-provisioning. Rolling updates keep every node on the same version, preventing the configuration drift that manual patching causes.
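A baseline-plus-burst scaling rule can be expressed in a few lines. This is a minimal sketch, assuming queue depth is the scaling signal; `desired_workers` and its parameters are hypothetical, and production autoscalers typically add smoothing and cooldown periods.

```python
import math


def desired_workers(queued_jobs: int, jobs_per_worker: int,
                    baseline: int, max_workers: int) -> int:
    """Workers needed for the current queue, clamped to [baseline, max_workers]."""
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    needed = math.ceil(queued_jobs / jobs_per_worker)
    return max(baseline, min(needed, max_workers))


idle = desired_workers(queued_jobs=0, jobs_per_worker=4, baseline=2, max_workers=10)
busy = desired_workers(queued_jobs=17, jobs_per_worker=4, baseline=2, max_workers=10)
peak = desired_workers(queued_jobs=100, jobs_per_worker=4, baseline=2, max_workers=10)
```

The baseline keeps steady workloads responsive, while the upper bound caps spend during spikes.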

6. Track Lineage and Logging from One Dashboard

Wire every cluster into a central observability stack. End-to-end lineage and real-time logs in one place cut mean-time-to-resolution and satisfy auditors who need provable data paths. When issues arise, you debug the pipeline, not a farm of opaque processes scattered across VPCs.
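As one possible shape for this wiring, the sketch below tags every log record with its originating cluster and sends all of them to a single sink, using Python's standard `logging` module. `ListHandler` is an in-memory stand-in for a real log backend, and `cluster_logger` and the cluster names are hypothetical.

```python
import json
import logging


class ListHandler(logging.Handler):
    """Collects JSON log lines in memory; a stand-in for a central log sink."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(json.dumps({
            "cluster": getattr(record, "cluster", "unknown"),
            "level": record.levelname,
            "message": record.getMessage(),
        }))


def cluster_logger(cluster: str, sink: logging.Handler) -> logging.LoggerAdapter:
    """Logger whose records all carry the originating cluster name."""
    logger = logging.getLogger(f"dataplane.{cluster}")
    logger.setLevel(logging.INFO)
    logger.propagate = False          # keep records out of the root logger
    if sink not in logger.handlers:
        logger.addHandler(sink)
    return logging.LoggerAdapter(logger, {"cluster": cluster})


sink = ListHandler()
eu = cluster_logger("eu-west", sink)
us = cluster_logger("us-east", sink)
eu.info("sync %s finished", "orders")
us.warning("retrying connection")
```

Because every line carries a `cluster` field, you can filter one stream by region instead of logging in to each environment.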

These six habits replace connectivity sprawl with a governed, elastic service you can trust.

When Should You Still Use Agents?

Agents aren't completely obsolete. In specific situations, installing a lightweight daemon on the host remains the most practical, or only, option:

- Edge sites with spotty links present a clear use case. When a factory floor or remote branch loses WAN access for hours, a local process can buffer data until connectivity returns.

- Fully air-gapped environments, such as defense or healthcare workloads that can't reach the internet at all, require an on-box collector to maintain visibility while respecting strict sovereignty controls.

- Short-lived proofs of concept sometimes warrant the expedient approach. For a two-week pilot, dropping a single process is faster than standing up a whole data plane.

- Stubborn legacy systems like older hypervisors and bespoke appliances that block API calls may still require an in-guest collector to close visibility gaps.

Treat these installs as transitional. Keep them outbound-only, pin them to the least-privileged role, and automate patches so you're not babysitting version drift. As connectivity, compliance, or throughput needs grow, migrate the workload into a shared resource pool and retire the individual process. The same data plane architecture you adopt elsewhere will absorb it without a rewrite.
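For the edge case with spotty links, the buffering behavior might look like the sketch below: records accumulate locally and are flushed, in order, once an outbound upload succeeds. `EdgeBuffer`, the `send` callback, and the 10,000-record cap are assumptions for illustration; a real agent would usually persist the buffer to disk.

```python
from collections import deque
from typing import Callable


class EdgeBuffer:
    """Bounded local buffer that holds records until an upload succeeds."""

    def __init__(self, send: Callable[[dict], None], max_records: int = 10_000):
        self._send = send                          # hypothetical outbound upload function
        self._pending = deque(maxlen=max_records)  # oldest records drop first when full

    def record(self, item: dict) -> None:
        self._pending.append(item)

    def flush(self) -> int:
        """Try to send buffered records in order; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break                # link is still down; keep the rest for later
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

A record is removed only after its upload returns without error, so an outage mid-flush loses nothing that was still in the buffer. The cap bounds memory use at the cost of dropping the oldest data during very long outages.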

Why Does Resource Pooling Define the Modern Hybrid Deployment Model?

Resource pooling creates a shared compute and connectivity layer that every pipeline can access, replacing the need for hundreds of host-level processes scattered across your infrastructure. A cloud control plane handles scheduling, while a unified pool of CPU, memory, and network resources inside your environment executes the work.

Key advantages of this approach:

- Eliminates configuration drift by launching each task from the same image under one policy engine, enforcing governance automatically

- Cuts costs through real-time allocation as idle capacity returns to the pool immediately, increasing utilization and eliminating over-provisioning

- Reduces infrastructure waste from under-utilized servers and licenses, especially in hybrid environments where costs span cloud and on-premises infrastructure

- Scales across regions without compromising data sovereignty by keeping data planes inside regulated VPCs while the control plane operates in the cloud

- Maintains compliance while enabling centralized management through separation of control and data planes

Airbyte Enterprise Flex uses this pattern: one hybrid control plane orchestrates jobs while customer-owned clusters execute them. You get elastic scale, 600+ connectors, and unified governance without managing dozens of individual processes.

How Can You Eliminate Agent Sprawl in Your Infrastructure?

By pooling resources under a single control plane, you replace scattered processes with shared capacity that scales on demand, costs less, and stays visible for audits. Airbyte Enterprise Flex delivers this modern hybrid deployment model with centralized orchestration, shared data plane clusters, and zero sprawl.

Talk to Sales to simplify and secure your hybrid infrastructure with complete data sovereignty.

Frequently Asked Questions

How does resource pooling reduce operational overhead compared to traditional agent-based monitoring?

Resource pooling centralizes compute capacity behind a single control plane, eliminating the need to manage, patch, and monitor individual agents on every host. Instead of maintaining hundreds of separate processes with their own versions, configurations, and credentials, you manage one unified pool that serves all workloads. This cuts administrative tasks like patch management by consolidating updates at the pool level and provides a single dashboard for observability rather than fragmented logs across machines.

What security advantages does a hybrid control plane offer over distributed agent installations?

A hybrid control plane with outbound-only connectivity closes firewall ports that traditional agents require, shrinking your attack surface. Centralizing authentication through enterprise secret managers eliminates hard-coded credentials scattered across hosts. You also reduce the number of privileged processes running in your environment from hundreds to a handful of tightly scoped service accounts, making it easier to audit access and respond to security incidents.

Can resource pooling handle the same workload diversity that individual agents support?

Yes. Resource pools use dynamic allocation to serve diverse workloads from the same shared infrastructure. Whether you're running real-time CDC replication, batch analytics, or IoT data ingestion, the pool allocates capacity on demand and releases it when jobs complete. Modern container orchestration enables workload isolation within the pool, so different teams and pipeline types coexist without interference while benefiting from shared scale and unified management.

How do I migrate from agent sprawl to a pooled architecture without disrupting existing pipelines?

Start by deploying a shared data plane cluster in one region alongside your existing agents. Migrate lower-risk pipelines first to validate the architecture, then gradually shift production workloads as confidence builds. Most hybrid deployment platforms support parallel operation, allowing old and new approaches to coexist during transition. Once migration completes, decommission agents in phases and centralize observability into the new control plane, reducing operational complexity incrementally rather than requiring a risky big-bang cutover.
