How to Create BERT Vector Embeddings? A Comprehensive Tutorial

Vector embeddings, including word embeddings, are a powerful Natural Language Processing (NLP) technique that helps machines understand and interpret text more efficiently. The introduction of Bidirectional Encoder Representations from Transformers (BERT) has further advanced this technique.

BERT's ability to interpret text bi-directionally allows it to grasp the full context of a sentence. So, it can capture even subtle differences in meaning and provide a deeper understanding of language. Creating BERT embeddings enables AI systems to handle complex aspects of language with high precision.

This comprehensive tutorial will help you learn about word embeddings, BERT and its architecture, steps to create BERT embeddings, and practical use cases.

What Is Word Embedding and Why Does It Matter?

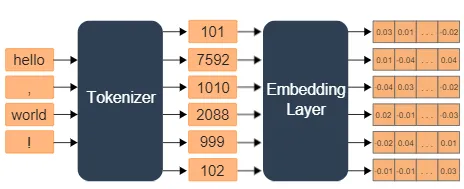

Word embedding is an NLP technique used for language modeling and feature learning. It can be unsupervised, supervised, or self-supervised, depending on the specific application. Before creating word embeddings, you first need to tokenize the text by breaking it down into individual words. Each word is then mapped to an index value in a pre-defined vocabulary. Once the text is tokenized, the embedding step converts these words into dense, continuous vectors of real numbers.

In word-embedding space, words with similar meanings are positioned closer together. For example, the words "king" and "queen" would be similar in the vector space and reflect their related meanings. This ability helps AI models capture the semantic meanings of words based on their context and relationships in a large corpus of text.

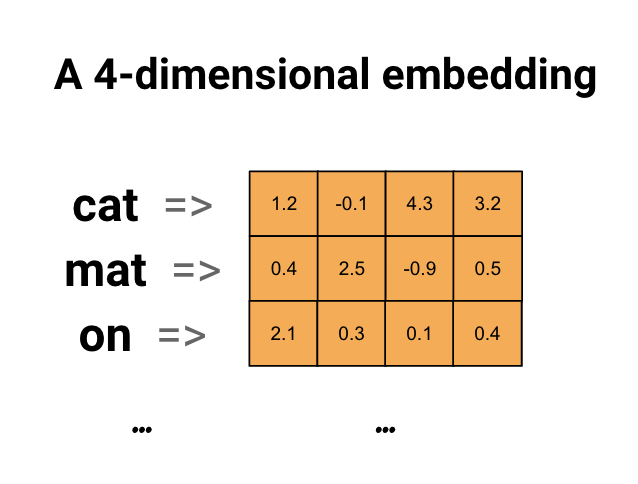

Embeddings are multidimensional, and the number of dimensions varies with the model's complexity and the amount of training data. An 8-dimensional embedding may be sufficient for small datasets, while large datasets may benefit from embeddings of up to 1024 dimensions. A higher-dimensional embedding can capture finer-grained relationships between words but requires more data to learn.

A simple illustration of word embedding, which presents each word as a 4-dimensional vector:
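As a rough sketch of that idea, the snippet below assigns hand-picked 4-dimensional vectors to a few words and compares them with cosine similarity. The numbers are invented for illustration rather than learned from data, and the names `toy_embeddings` and `cosine` are hypothetical.

```python
import math

# Hand-picked 4-dimensional vectors (illustrative values, not learned).
toy_embeddings = {
    "king":  [0.8, 0.7, 0.1, 0.9],
    "queen": [0.8, 0.6, 0.9, 0.9],
    "apple": [0.1, 0.9, 0.5, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

royal = cosine(toy_embeddings["king"], toy_embeddings["queen"])
fruit = cosine(toy_embeddings["king"], toy_embeddings["apple"])
print(f"king vs queen: {royal:.3f}")
print(f"king vs apple: {fruit:.3f}")
```

With these made-up values, "king" scores higher against "queen" than against "apple", which is the kind of geometry a trained embedding model is expected to learn on its own.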

What Are the Most Popular Techniques to Create Word Embeddings?

1. Word2Vec

Word2Vec is a neural-network-based NLP model introduced by Google researchers in 2013 to create word embeddings. This model takes a large corpus of text as input and generates a vector space where each unique word is assigned a corresponding vector. Word2Vec model uses two types of architectures:

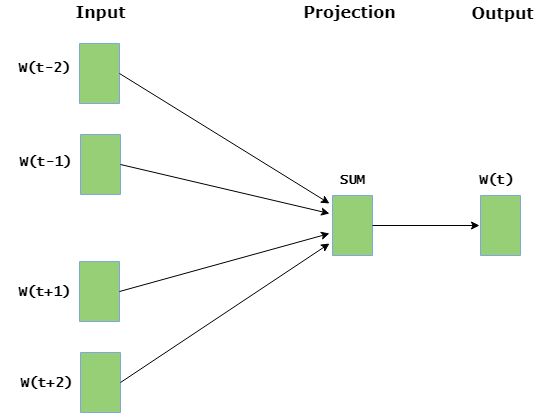

- Continuous Bag-Of-Words (CBOW): This architecture works like a fill-in-the-blank exercise. It predicts the current word from the surrounding words inside a context window. In the illustration below, the input layer has the context words, and the output layer contains the current word. The hidden layer has as many units as the dimensions used to represent each word.

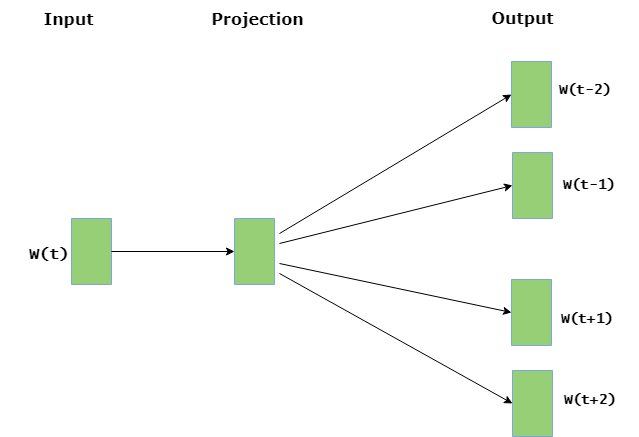

- Continuous Sliding Skip-Gram: In this model, the current word is used to predict the surrounding window of context words. The skip-gram architecture gives more priority to nearby context words compared to those that are farther away. In the image below, the input layer involves the current word, while the output layer has context words. The hidden layer contains the dimensions in which you want to represent the current word in the input layer.
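The difference between the two architectures shows up in how training examples are built from a sentence. The sketch below only generates the (input, target) pairs, not the neural network itself. The function name `training_pairs` and the window size are assumptions made for illustration.

```python
def training_pairs(tokens, window=2):
    """Return (cbow_pairs, skipgram_pairs) for a list of tokens.

    CBOW pair:      (context words, current word)
    Skip-gram pair: (current word, one context word)
    """
    cbow, skipgram = [], []
    for i, current in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        cbow.append((context, current))
        skipgram.extend((current, ctx) for ctx in context)
    return cbow, skipgram

tokens = "the cat sat on the mat".split()
cbow_pairs, skipgram_pairs = training_pairs(tokens, window=1)
print(cbow_pairs[1])       # (['the', 'sat'], 'cat')
print(skipgram_pairs[:3])  # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
```

CBOW gets one example per position with the whole context as input, while skip-gram gets one example per (current word, context word) combination.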

2. TF-IDF

TF-IDF (Term Frequency-Inverse Document Frequency) is a statistical measure of how important a word is to a document within a collection of documents. TF-IDF is calculated as the product of TF (Term Frequency) and IDF (Inverse Document Frequency).

- TF: It is calculated as the ratio of the number of target terms in the document to the total number of words in that document.

- IDF: It is computed by taking the logarithm of the ratio of the total count of documents to the number of documents that contain the target term.

The TF-IDF value increases when a word appears more frequently in a specific document but decreases when it appears frequently across multiple documents. TF-IDF is often used as a weighting factor in information retrieval, text mining, and user modeling. Despite being simple and efficient, TF-IDF doesn't capture semantic similarities between words.
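The two definitions above translate almost directly into code. The following is a minimal sketch using only the standard library and the natural logarithm. Production libraries often add smoothing terms, so their numbers may differ. The corpus and function names are made up for illustration.

```python
import math

def tf(term, doc_tokens):
    """Term count divided by the total number of words in the document."""
    return doc_tokens.count(term) / len(doc_tokens)

def idf(term, corpus):
    """Log of (number of documents / documents containing the term).

    Assumes the term occurs in at least one document.
    """
    containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / containing)

def tf_idf(term, doc_tokens, corpus):
    return tf(term, doc_tokens) * idf(term, corpus)

corpus = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "the bird flew away".split(),
]
score_the = tf_idf("the", corpus[0], corpus)
score_cat = tf_idf("cat", corpus[0], corpus)
print(f"the: {score_the:.4f}")
print(f"cat: {score_cat:.4f}")
```

Because "the" appears in every document, its IDF is log(1) = 0 and its score vanishes, while "cat" appears in only one document and keeps a positive weight.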

3. Bag-of-Words

Bag-of-Words (BoW) is a text representation method that describes text documents based on the occurrence of words within a document. This model treats each word in the document independently and ignores the order of words. After creating a vocabulary from all the words in the document, you can represent the document by counting how many times each word from the vocabulary appears.

For example, if we have two sentences: "The cat sat on the mat" and "The dog sat on the log", the vocabulary would be: ["the", "cat", "sat", "on", "mat", "dog", "log"]. Each sentence is then represented as a vector based on word frequency.
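A minimal sketch of this example in plain Python is shown here. The helper names `build_vocabulary` and `bag_of_words` are hypothetical, and tokenization is reduced to lowercasing and splitting on whitespace.

```python
def build_vocabulary(sentences):
    """Collect unique lowercase words in order of first appearance."""
    vocab = []
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab.append(word)
    return vocab

def bag_of_words(sentence, vocab):
    """Count how often each vocabulary word occurs in the sentence."""
    words = sentence.lower().split()
    return [words.count(term) for term in vocab]

sentences = ["The cat sat on the mat", "The dog sat on the log"]
vocab = build_vocabulary(sentences)
vectors = [bag_of_words(s, vocab) for s in sentences]

print(vocab)       # ['the', 'cat', 'sat', 'on', 'mat', 'dog', 'log']
print(vectors[0])  # [2, 1, 1, 1, 1, 0, 0]
print(vectors[1])  # [2, 0, 1, 1, 0, 1, 1]
```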

While BoW is simple to implement and understand, it loses information about word order and context, making it less effective for capturing complex semantic relationships.

What Is BERT and How Does It Work?

BERT is one of the modern large language models developed by Google in 2018. BERT uses the transformer model as its primary architecture and is trained on large-scale datasets. The major advantage of BERT over traditional NLP models is its ability to understand context by processing words in relation to all other words in a sentence, rather than one-by-one in order.

BERT's training process involves two stages: pre-training and fine-tuning. During pre-training, BERT learns from a large corpus of text by predicting masked words and determining whether one sentence follows another. This pre-training helps BERT develop a general understanding of language. During fine-tuning, BERT adapts to specific tasks like sentiment analysis or question answering using task-specific datasets.

The transformer architecture enables BERT to process all words in a sentence simultaneously, allowing it to understand the relationships between words regardless of their position. This bidirectional processing capability makes BERT particularly effective at capturing context and generating meaningful embeddings.

Architecture of BERT

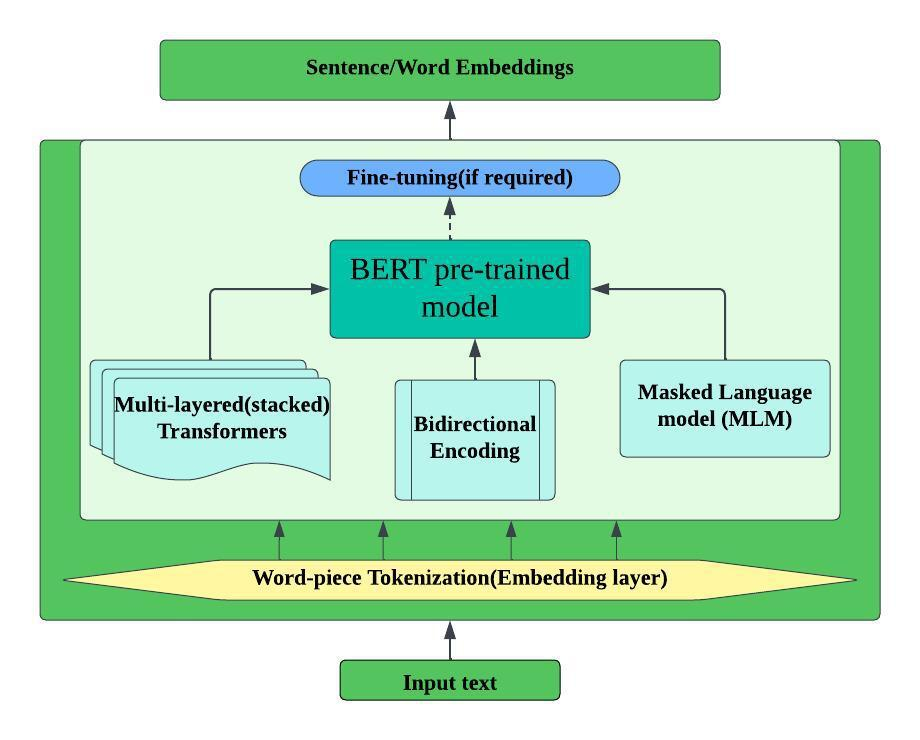

BERT consists of the following key components:

1. WordPiece Tokenization

BERT uses WordPiece tokenization, which breaks words into smaller subword units. This approach handles out-of-vocabulary words effectively by representing them as combinations of known subword pieces. For example, the word "unhappiness" might be tokenized as ["un", "##happi", "##ness"], where "##" indicates a continuation of the previous token.
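The splitting step can be sketched as a greedy longest-match-first search over a subword vocabulary. The tiny vocabulary below is hypothetical and chosen so that the example word splits as described above. A real BERT vocabulary has roughly 30,000 entries, and the real tokenizer also normalizes text and splits punctuation first.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into subword pieces using greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

toy_vocab = {"un", "##happi", "##ness", "happy", "##s"}
print(wordpiece_tokenize("unhappiness", toy_vocab))  # ['un', '##happi', '##ness']
print(wordpiece_tokenize("xyz", toy_vocab))          # ['[UNK]']
```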

2. Bidirectional Encoder

Unlike traditional models that read text left-to-right or right-to-left, BERT processes text bidirectionally. This means it considers both the left and right context of each word simultaneously, providing a more comprehensive understanding of word meaning in context.

3. Transformers

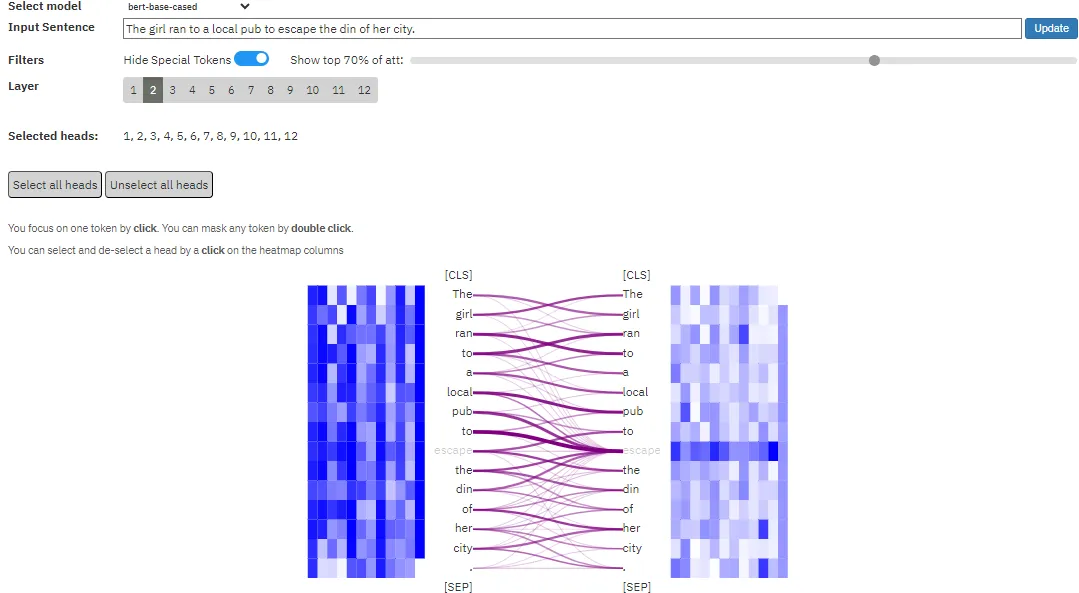

BERT is built using transformer encoders, which use self-attention mechanisms to weigh the importance of different words in a sentence. The self-attention mechanism allows BERT to focus on relevant words when processing a particular token, enabling better context understanding.
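To make the weighting idea concrete, here is a heavily simplified sketch of scaled dot-product self-attention in plain Python. It assumes a single head and uses the input vectors directly as queries, keys, and values, whereas BERT applies learned projections and several heads per layer. The 2-dimensional token vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention with identity projections (Q = K = V = input)."""
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all token vectors.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, vectors)) for d in range(dim)]
        )
    return weights, outputs

token_vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
attn_weights, contextual = self_attention(token_vectors)
for row in attn_weights:
    print([round(w, 3) for w in row])
```

Each row of `attn_weights` shows how strongly one token attends to every token in the sequence, and the first token gives more weight to the similar second token than to the dissimilar third one.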

4. Masked Language Modeling (MLM)

During pre-training, BERT randomly masks some words in the input and tries to predict them based on the surrounding context. This forces the model to develop a deep understanding of language relationships and context.
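The masking step itself can be sketched in a few lines. This is a simplification: the default `mask_rate` of 0.15 follows the rate reported for the original BERT setup, but real pre-training also sometimes substitutes a random token or leaves the chosen token unchanged. The function name `mask_tokens` and the fixed seed are assumptions for illustration.

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with [MASK] and record the originals."""
    rng = random.Random(seed)
    num_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), num_to_mask))
    masked = list(tokens)
    labels = {}  # position -> original token the model must predict
    for pos in positions:
        labels[pos] = tokens[pos]
        masked[pos] = "[MASK]"
    return masked, labels

tokens = "the quick brown fox jumps over the lazy dog today".split()
masked, labels = mask_tokens(tokens)
print(masked)
print(labels)
```

During training, the model sees `masked` as input and is scored only on how well it recovers the entries in `labels`.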

5. Fine-tuning

After pre-training, BERT can be fine-tuned for specific downstream tasks by adding task-specific layers and training on domain-specific data. This approach allows BERT to adapt its general language understanding to specialized applications.

What Makes BERT Excellent for Creating Vector Embeddings?

An embedding is a numerical representation of a categorical feature, such as a movie genre, a dog's breed, or an employee's job designation. BERT embeddings represent words, phrases, or entire sentences as dense vectors that capture semantic meaning and contextual relationships.

BERT creates embeddings through three main types of representations that are combined to form the final embedding:

1. Token Embeddings

Token embeddings map each token in the input sequence to a vector representation. Each token (word or subword) is assigned a unique embedding vector that captures its basic semantic properties. These embeddings are learned during the pre-training process and form the foundation for understanding individual tokens.

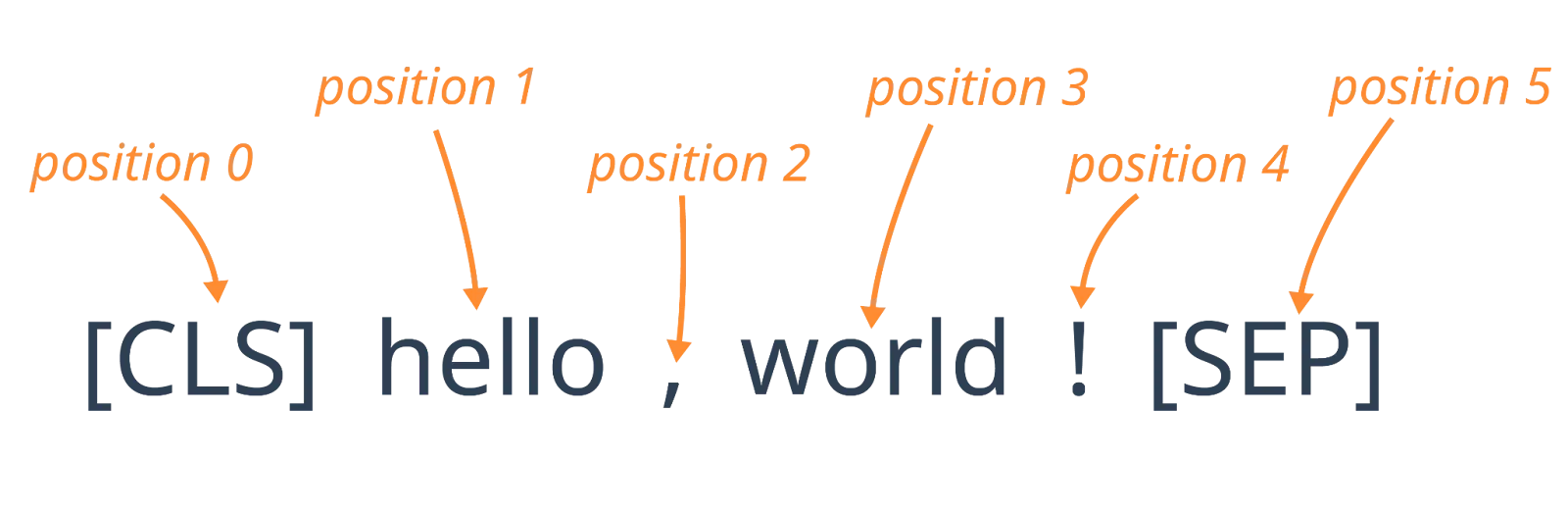

2. Position Embeddings

Since transformers don't inherently understand word order, position embeddings provide information about where each token appears in the sequence. BERT can handle sequences up to 512 tokens, and each position gets its own learned embedding vector. This allows BERT to understand that "Apple eats John" and "John eats Apple" have different meanings despite containing the same words.

3. Token-Type Embeddings

Token-type embeddings distinguish between different segments in the input. When BERT processes sentence pairs (like in question-answering tasks), it uses token-type embeddings to differentiate between the first sentence (segment A) and the second sentence (segment B). This segmentation capability is crucial for tasks that require understanding relationships between multiple text segments.

The BERT embedding layer computes the final embedding for each token by summing the three embeddings and then applying layer normalization to the sum.

A simple illustration of how the BERT embedding layer calculates the embeddings for the string “hello, world” is given below:
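The sketch below mirrors that calculation with toy numbers. It assumes a hidden size of 4 instead of 768, uses random values in place of learned embedding tables, and omits the learned scale and bias that a real layer-normalization step carries. All names are hypothetical.

```python
import math
import random

HIDDEN = 4  # toy size; BERT-Base uses 768
rng = random.Random(0)

def random_table(rows):
    """Stand-in for a learned embedding table (random values, not trained)."""
    return [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(rows)]

def layer_norm(vector, eps=1e-12):
    """Normalize a vector to zero mean and unit variance."""
    mean = sum(vector) / len(vector)
    var = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(var + eps) for x in vector]

tokens = ["[CLS]", "hello", ",", "world", "[SEP]"]
vocab = {tok: idx for idx, tok in enumerate(tokens)}  # toy vocabulary
token_table = random_table(len(vocab))
position_table = random_table(len(tokens))
type_table = random_table(2)  # row 0 = segment A, row 1 = segment B

embeddings = []
for position, tok in enumerate(tokens):
    # Sum token, position, and token-type vectors element-wise.
    summed = [
        t + p + s
        for t, p, s in zip(
            token_table[vocab[tok]], position_table[position], type_table[0]
        )
    ]
    embeddings.append(layer_norm(summed))

for tok, vec in zip(tokens, embeddings):
    print(tok, [round(x, 3) for x in vec])
```

All five tokens use the segment A row here because the input is a single sentence. A sentence pair would switch to the segment B row after the first `[SEP]`.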

Generating High-Quality BERT Embeddings with Hugging Face

Hugging Face, an AI community, offers a way to work with BERT and related models such as RoBERTa, DistilBERT, BERT-Tiny, and many more to generate word embeddings. The platform provides pre-trained models and easy-to-use APIs that simplify the process of generating BERT embeddings.

Here is a simple example that creates BERT embeddings based on the given input sentence using Hugging Face’s bert-base-cased model:

How Do You Implement BERT Embeddings Step-by-Step?

Here are the detailed steps to implement the BERT model for creating embeddings:

- Launch Google Colab and click on File > New notebook in Drive.

- Install the transformers module using the pip command:

!pip install transformers

- Import the following Python libraries.

import random

import torch

from transformers import BertTokenizer, BertModel

from sklearn.metrics.pairwise import cosine_similarity

- Enter the code below to set the random seed for Python and PyTorch (including all GPUs, if available) so that results are reproducible.

RandomSeed = 52

random.seed(RandomSeed)

torch.manual_seed(RandomSeed)

if torch.cuda.is_available():

    torch.cuda.manual_seed_all(RandomSeed)

- Then, load the pre-trained BERT model and its tokenizer. Here, let’s use the “bert-base-uncased” model, whose tokenizer converts all uppercase characters in the text to lowercase.

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

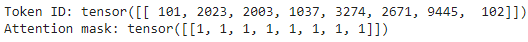

model = BertModel.from_pretrained('bert-base-uncased')

- Now, consider an input sequence and tokenize it using the BERT tokenizer. The batch_encode_plus() method encodes the word or subword tokens into unique integer identifiers. You can also add special tokens such as [CLS] and [SEP] by setting the add_special_tokens parameter to True.

text = "This is a computer science portal"

encoding = tokenizer.batch_encode_plus(

    [text],

    padding=True,

    truncation=True,

    return_tensors='pt',

    add_special_tokens=True

)

token_ids = encoding['input_ids']

print(f"Token IDs: {token_ids}")

attention_mask = encoding['attention_mask']

print(f"Attention Mask: {attention_mask}")

After executing the above code, you will get the following output:

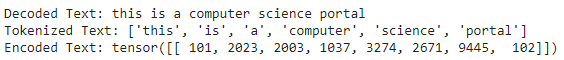

Every value in the attention mask is 1, which marks each position as a real token that the model should attend to. A value of 0 would mark a padding position, which appears when shorter sequences in a batch are padded to a common length. Because this batch contains a single sentence, no padding is added and all tokens are attended to.
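To see where zeros in an attention mask come from, the sketch below pads two sequences of different lengths by hand. It uses plain lists instead of tensors, and the token IDs, the padding ID of 0, and the helper name `pad_batch` are all made up for illustration.

```python
def pad_batch(batch_token_ids, pad_id=0):
    """Pad ID lists to the longest length and build matching attention masks."""
    max_len = max(len(ids) for ids in batch_token_ids)
    input_ids, attention_mask = [], []
    for ids in batch_token_ids:
        padding = max_len - len(ids)
        input_ids.append(ids + [pad_id] * padding)
        # 1 marks a real token, 0 marks a padding position.
        attention_mask.append([1] * len(ids) + [0] * padding)
    return input_ids, attention_mask

# Made-up token IDs for two sequences of different length.
batch = [[2, 11, 12, 13, 3], [2, 21, 3]]
input_ids, attention_mask = pad_batch(batch)
print(input_ids)       # [[2, 11, 12, 13, 3], [2, 21, 3, 0, 0]]
print(attention_mask)  # [[1, 1, 1, 1, 1], [1, 1, 1, 0, 0]]
```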

- In this step, pass the token IDs and the attention mask through the BERT model to generate an embedding for each token.

with torch.no_grad():

    outputs = model(token_ids, attention_mask=attention_mask)

    word_embeddings = outputs.last_hidden_state

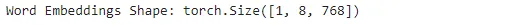

print(f"Word Embeddings Shape: {word_embeddings.shape}")

Once you run the above code, it prints the shape of the generated embeddings.

The shape of the word embeddings is [1, 8, 768], where 768 represents the dimensionality or hidden size of the embeddings generated by the BERT model. Here, each token is encoded as a 768-dimensional vector. The number 8 corresponds to the total number of tokens in the input text after tokenization, including the special [CLS] and [SEP] tokens. The 1 denotes the batch dimension, indicating the number of sentences processed.

- You can verify the tokenization by decoding the token IDs back to text using the following code snippet:

decoded_text = tokenizer.decode(token_ids[0], skip_special_tokens=True)

print(f"Decoded Text: {decoded_text}")

tokenized_text = tokenizer.tokenize(decoded_text)

print(f"Tokenized Text: {tokenized_text}")

encoded_text = tokenizer.encode(text, return_tensors='pt')

print(f"Encoded Text: {encoded_text}")

Here is the decoded text:

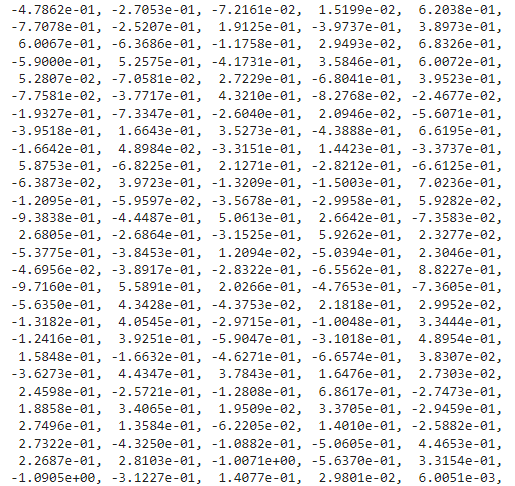

- You can finally extract and print the word embeddings generated by BERT using this Python code:

for token, embedding in zip(tokenizer.convert_ids_to_tokens(token_ids[0].tolist()), word_embeddings[0]):

    print(f"Token: {token}")

    print(f"Word Embeddings are: {embedding}")

    print("\n")

Here is the output:

Since the output is very long, only a small portion of it has been shown for better understanding.

What Are the Most Practical Use Cases of BERT Embeddings?

Text Classification

BERT embeddings excel at text classification tasks by capturing semantic nuances that traditional methods miss. The contextual understanding allows models to distinguish between subtle differences in meaning, making BERT particularly effective for sentiment analysis, topic classification, and content categorization. Organizations use BERT embeddings to automatically classify customer feedback, categorize support tickets, and analyze social media sentiment with high accuracy.

Named Entity Recognition (NER)

Named Entity Recognition benefits significantly from BERT's contextual embeddings because the model can distinguish between different uses of the same word based on context. For example, "Apple" the company versus "apple" the fruit can be correctly identified based on surrounding words. BERT embeddings provide rich contextual information that helps NER models achieve superior performance in identifying people, organizations, locations, and other entities in text.

Question Answering Systems

BERT embeddings power sophisticated question answering systems by understanding the semantic relationship between questions and potential answers. The bidirectional context allows BERT to comprehend complex questions and identify relevant information within large text passages. Many modern chatbots and virtual assistants leverage BERT embeddings to provide more accurate and contextually appropriate responses.

Recommendation Systems

Modern recommendation systems use BERT embeddings to understand user preferences and content similarity at a deeper semantic level. By embedding user reviews, product descriptions, and browsing behavior, recommendation engines can identify subtle preference patterns and suggest relevant items even when explicit keywords don't match. This approach enables more sophisticated content-based filtering and hybrid recommendation strategies.

How Can Airbyte Help Build a Data Pipeline to Store Embeddings and Utilize Them?

Training large language models like BERT requires a lot of relevant data. This data often comes from many sources, such as Kaggle, Hugging Face, Wikipedia, etc. Because the first step in training an LLM is to integrate all this data and keep it up to date, you need a robust data pipeline in place.

To help you with that, you can leverage Airbyte, a data integration and replication platform. It allows you to transfer large amounts of data from multiple systems to a destination of your choice using its 600+ connectors. If you cannot find a connector that meets your requirements, you can create one in minutes using the CDK feature.

Conclusion

BERT embeddings are a powerful technique that allows machines to understand, interpret, and process natural language. Because BERT reads text bidirectionally, its contextual embeddings capture complex meanings and relationships between words. By leveraging BERT’s pre-trained models and fine-tuning them on specific tasks, you can achieve significant improvements in AI-driven applications. Hugging Face offers various BERT base and multilingual models to generate embeddings. To try it yourself in Python, follow the step-by-step guide outlined in this article.

Frequently Asked Questions

Can BERT be used for embedding?

Yes, BERT can be used to create embeddings. BERT generates contextual word embeddings that capture the meaning of words based on their surrounding context. Unlike static embeddings like Word2Vec, BERT embeddings change based on the context in which words appear, making them more accurate for understanding language nuances.

What is the size of the BERT embedding vector?

The size of BERT embeddings varies depending on the model variant. BERT-Base produces 768-dimensional embeddings, while BERT-Large generates 1024-dimensional embeddings. Smaller variants like DistilBERT typically produce 768-dimensional embeddings but with reduced computational requirements.

What is the difference between BERT and Word2Vec?

BERT produces contextualized embeddings using a deep transformer architecture, meaning the same word gets different embeddings based on its context. Word2Vec creates static embeddings where each word has a fixed vector representation regardless of context. BERT captures bidirectional context, while Word2Vec processes context in a fixed window around each word.

What are the advantages of BERT embeddings?

- Unlike traditional models that read text unidirectionally, BERT reads in both directions, providing complete contextual understanding.

- BERT embeddings can be fine-tuned for a variety of NLP tasks, making them adaptable to specific needs and domains.

- BERT word embeddings help machines capture nuances and different meanings of words depending on their context.

Can BERT be used for topic modeling?

Yes, BERTopic is a topic-modeling technique that leverages BERT embeddings to create more semantically meaningful topic representations. BERTopic combines BERT embeddings with clustering algorithms and class-based TF-IDF to generate coherent and interpretable topics from document collections.

What is the BERT embedding layer?

The BERT embedding layer refers to the initial layers of the BERT model responsible for converting input tokens into continuous vector representations. This layer combines token embeddings, position embeddings, and token-type embeddings to create the input representations that are processed by the transformer layers.
