How to Build a Data Warehouse from Scratch: Cost + Examples

✨ AI Generated Summary

Modern data warehouses centralize diverse data sources to enable faster, real-time decision-making and advanced analytics, leveraging cloud-native platforms like Snowflake and BigQuery. Key steps include defining business requirements, selecting appropriate architecture (centralized, lakehouse, hybrid), designing flexible data models, building automated ETL/ELT pipelines, and implementing robust governance, security, and AI-driven optimizations. Successful implementations deliver measurable business value through improved operational efficiency, predictive insights, and scalable, cost-effective data management.

Modern enterprises generate data at unprecedented velocity, yet many still rely on batch processing systems that deliver yesterday's insights for today's decisions. Companies that adopt real-time data warehousing can act on events as they happen instead of waiting for the next batch run, which can be a meaningful competitive advantage in rapidly evolving markets.

Building a data warehouse from scratch represents a significant undertaking that can transform how your organization manages and leverages data. While the initial investment in data storage, tools, and expertise is considerable, a centralized data warehouse offers long-term benefits that improve data quality, streamline operations, and enhance data retrieval speed, allowing faster decision-making.

This guide will walk you through the comprehensive steps involved in building a data warehouse, from data integration and architecture selection to data model design and cost estimation. We will also share an example of how a real-world company leveraged its data warehouse to gain business advantages.

TL;DR: Building a Data Warehouse at a Glance

- A data warehouse is a centralized repository that consolidates data from multiple sources for analysis, reporting, and business intelligence

- Key steps include defining business requirements, choosing the right platform (cloud or on-premises), designing data models, and building automated ETL/ELT pipelines

- Architecture options range from centralized warehouses and lakehouse designs to hybrid approaches that balance governance with flexibility

- Costs in 2025 vary by platform: cloud storage starts around $20–$23/TB/month, with compute, integration tools, and labor adding significant investment

- Best practices focus on automated data quality, comprehensive security frameworks, and governance automation to ensure long-term success

What Is a Data Warehouse?

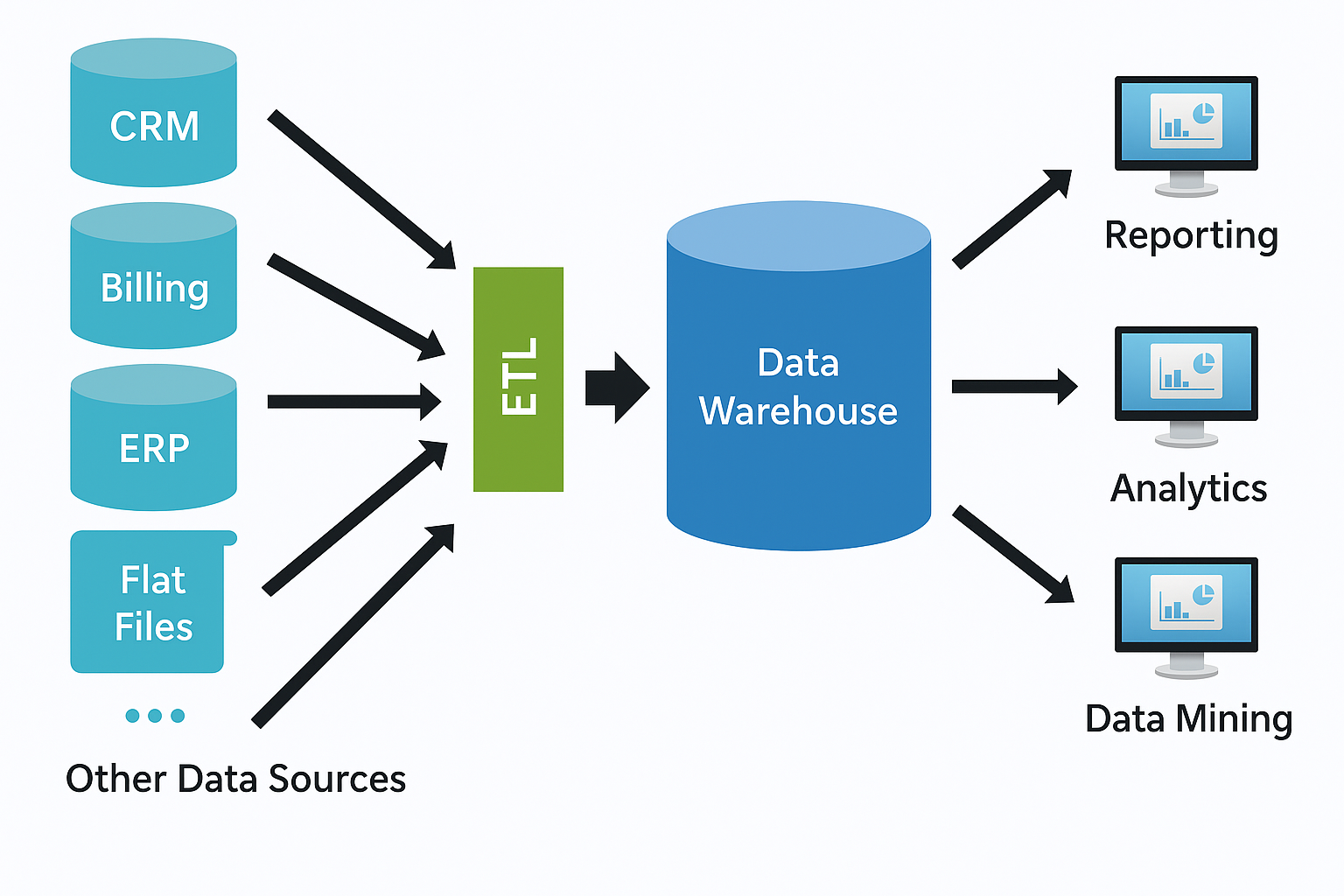

A data warehouse is a centralized repository designed to consolidate data from various data sources into a structured format that is suitable for analysis and reporting. It aggregates historical data from systems such as operational databases, raw data from IoT devices, and data generated through data collection processes. This centralized repository ensures data integrity, making the information consistent and accessible across the organization for business intelligence tools like Power BI, Tableau, or Looker.

Modern data warehouses have evolved beyond simple storage repositories to become intelligent analytics engines. They now support advanced capabilities, including machine learning model training, real-time stream processing, and automated data governance. By transforming and storing large volumes of data in an optimized format, a data warehouse supports complex data transformation and data retrieval, enabling organizations to gain insights that drive better strategic decisions.

Core Components

- Data Sources: CRM systems, ERP platforms, marketing tools, IoT sensors, streaming data platforms, and edge computing devices that generate both structured and unstructured data

- ETL / ELT Process: Advanced data extraction, transformation, and loading capabilities, including change data capture, real-time streaming, and AI-powered data mapping

- Data Warehouse Database: High-performance engines like Snowflake, BigQuery, or Redshift with cloud-native architectures. Snowflake and BigQuery support elastic scaling and serverless compute, while provisioned Redshift clusters require more manual scaling and cluster management (Redshift Serverless reduces this overhead).

- Metadata and Governance Layer: Intelligent catalogs that track data lineage, automate quality monitoring, enforce compliance policies, and provide self-service data discovery capabilities

- Analytics and BI Tools: Modern analytics platforms, including traditional BI tools and advanced capabilities for machine learning, predictive analytics, and real-time dashboards

What Are the Key Steps to Building a Data Warehouse from Scratch?

Whether opting for cloud-based solutions or on-premises deployment, building a data warehouse involves several critical steps to ensure effective data integration, appropriate data granularity, efficient data management, and a data model that aligns with business objectives.

1. Define Business Requirements

Understanding business objectives forms the foundation of successful data warehouse implementation. This involves identifying critical data sources, determining required data freshness levels, and selecting the BI tools that will drive analytics. Modern requirements increasingly include real-time analytics capabilities, AI/ML integration needs, and support for both structured analytics and unstructured data exploration.

Key considerations include defining service level agreements for data availability, establishing data governance requirements for compliance, and planning for future scalability as data volumes and user bases grow.

2. Choose the Right Platform

Platform selection has become more nuanced with the emergence of cloud-native solutions, lakehouse architectures, and hybrid deployment models. Cloud platforms like Snowflake, BigQuery, and Redshift offer elastic scaling and managed services, while on-premises solutions like Oracle and Teradata provide greater control over data residency and security.

Modern architectures increasingly favor lakehouse designs that combine the scalability of data lakes with the performance of traditional warehouses. This approach supports both structured analytics and AI/ML workloads while maintaining cost efficiency through tiered storage and compute separation, making it easier for companies to work with large datasets and make more informed decisions.

3. Design the Data Model

Data modeling requires balancing performance, flexibility, and maintainability. Traditional approaches include star schemas for simplicity and performance, snowflake schemas for normalized structures, and Data Vault methodologies for long-term scalability and auditability.

Contemporary modeling approaches incorporate dimensional modeling with modern considerations like support for streaming data, integration with machine learning workflows, and accommodation of both batch and real-time processing patterns.
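To make the star schema idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a real warehouse engine. The table and column names (`fact_sales`, `dim_customer`, `dim_product`) and the sample rows are illustrative assumptions, not part of any specific platform.

```python
import sqlite3

def build_star_schema(conn):
    # One central fact table referencing two denormalized dimension tables.
    conn.executescript("""
        CREATE TABLE dim_customer (
            customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT);
        CREATE TABLE dim_product (
            product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
        CREATE TABLE fact_sales (
            sale_id INTEGER PRIMARY KEY,
            customer_key INTEGER REFERENCES dim_customer(customer_key),
            product_key INTEGER REFERENCES dim_product(product_key),
            sale_date TEXT,
            quantity INTEGER,
            amount REAL);
    """)

def revenue_by_region_and_category(conn):
    # A typical star-schema query: join the fact to its dimensions, then aggregate.
    return conn.execute("""
        SELECT c.region, p.category, SUM(f.amount)
        FROM fact_sales f
        JOIN dim_customer c ON c.customer_key = f.customer_key
        JOIN dim_product p ON p.product_key = f.product_key
        GROUP BY c.region, p.category
        ORDER BY c.region, p.category
    """).fetchall()

conn = sqlite3.connect(":memory:")
build_star_schema(conn)
# Hypothetical sample data for illustration only.
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                 [(1, "Ada", "EU"), (2, "Bo", "US")])
conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                 [(10, "Scarf", "Accessories"), (11, "Coat", "Outerwear")])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?, ?)", [
    (100, 1, 10, "2025-01-05", 2, 40.0),
    (101, 1, 11, "2025-01-06", 1, 120.0),
    (102, 2, 10, "2025-01-06", 1, 20.0),
    (103, 1, 10, "2025-01-07", 1, 20.0),
])
star_result = revenue_by_region_and_category(conn)
print(star_result)
```

Every analytical question in this layout follows the same pattern of joining the fact table to one or more dimensions, which is a large part of why star schemas are considered simple for BI tools to query.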

4. Build the ETL/ELT Pipeline

Modern data integration leverages tools like Airbyte for flexible, open-source connectivity and platforms like Fivetran for managed data integration. Implementation of Change Data Capture (CDC) enables near real-time updates, while robust error handling ensures data pipeline reliability.

Advanced pipelines incorporate streaming architectures for real-time processing, AI-powered data quality monitoring, and automated schema evolution to handle changing source systems without manual intervention.
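As one illustration of the error handling mentioned above, the sketch below retries a failing pipeline step with exponential backoff. The function name `run_with_retries` and its parameters are hypothetical; orchestration tools usually offer their own retry settings, so treat this as a picture of the idea rather than a recommended implementation.

```python
import time

def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run task(); after a failure wait base_delay * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return task()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)

# Usage with a hypothetical extract step that fails twice before succeeding.
attempts = {"count": 0}

def flaky_extract():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "loaded"

print(run_with_retries(flaky_extract, base_delay=0.01))
```

Passing `sleep` as a parameter keeps the helper easy to test, because a test can record the delays instead of actually waiting.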

5. Develop Reporting and Analytics

Analytics development extends beyond traditional dashboards to include self-service analytics capabilities, embedded analytics, and advanced visualizations. Tools like Power BI, Tableau, and Looker provide foundational capabilities, while modern implementations add semantic layers for consistent business definitions.

Query optimization through intelligent indexing, partitioning strategies, and result caching ensures responsive performance even as data volumes scale. Integration with machine learning platforms enables predictive analytics and automated insights.

6. Implement Ongoing Maintenance and Optimization

Continuous optimization involves monitoring data quality through automated testing frameworks, enforcing role-based access control (RBAC), implementing comprehensive security measures including encryption and audit logging, and refining schemas to meet evolving business needs.

Modern maintenance strategies incorporate AI-driven anomaly detection for data quality issues, automated scaling for compute resources, and proactive optimization recommendations based on usage patterns and performance metrics.

How Do You Establish Data Governance and Model Design for Scalable Data Warehouses?

Establishing strong data governance ensures accuracy, integrity, and security while managing data velocity and variety. Modern governance frameworks incorporate automated policy enforcement, intelligent metadata management, and self-service capabilities that balance control with user productivity.

A well-designed dimensional model, such as a star or snowflake schema, can boost query performance and enable BI tools to retrieve and process data efficiently. The two differ in their trade-offs: star schemas intentionally denormalize dimension tables for faster queries at the cost of increased redundancy, while snowflake schemas normalize dimension tables to reduce redundancy at the cost of additional joins. Contemporary approaches extend traditional modeling with support for semi-structured data, event-driven architectures, and machine learning feature stores.

Governance automation includes data lineage tracking that automatically maps data flows across complex pipelines, quality monitoring that detects anomalies in real-time, and compliance frameworks that ensure adherence to regulations like GDPR and HIPAA without manual oversight.

Advanced modeling techniques incorporate time-variant data handling for historical analysis, slowly changing dimension management for evolving business entities, and integration patterns that support both transactional consistency and analytical performance optimization.
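Slowly changing dimensions are easier to reason about with a small example. The sketch below shows one common variant, often called Type 2, where a changed attribute closes the current row and adds a new one so history is preserved. The row layout (`key`, `attrs`, `valid_from`, `valid_to`) is an assumption made for illustration.

```python
from datetime import date

def apply_scd2(history, key, new_attrs, effective):
    """Version a dimension row: close the current record if its attributes
    changed, then append a new open-ended record."""
    current = next(
        (r for r in history if r["key"] == key and r["valid_to"] is None), None)
    if current is not None:
        if current["attrs"] == new_attrs:
            return history  # nothing changed, so no new version is needed
        current["valid_to"] = effective
    history.append(
        {"key": key, "attrs": new_attrs, "valid_from": effective, "valid_to": None})
    return history

# Usage: a hypothetical customer moves from the "silver" to the "gold" tier.
history = []
apply_scd2(history, "c1", {"tier": "silver"}, date(2025, 1, 1))
apply_scd2(history, "c1", {"tier": "gold"}, date(2025, 3, 1))
for row in history:
    print(row)
```

Queries for "what was true on a given date" can then filter on `valid_from` and `valid_to`, which is what makes time-variant analysis possible.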

What Are the Different Data Warehouse Architectures You Can Choose From?

Centralized Data Warehouse

Traditional centralized architectures offer strong governance capabilities and establish a single source of truth for enterprise data. These systems excel at ensuring data consistency and providing centralized security controls, though they may lack flexibility for handling diverse data types and rapid schema evolution.

Modern centralized warehouses incorporate cloud-native features like elastic scaling, serverless compute, and automated optimization while maintaining the governance and consistency benefits of traditional approaches.

Lakehouse Architecture

Lakehouse designs combine the scalability and cost-effectiveness of data lakes with the performance and governance capabilities of traditional warehouses. This architecture supports diverse workloads including structured analytics, machine learning, and real-time processing using open table formats like Apache Iceberg or Delta Lake.

Implementation typically involves object storage for raw data, metadata layers for schema management, and compute engines that can process both batch and streaming workloads with ACID transaction guarantees.

Data Marts

Department-focused data marts reduce query contention and provide specialized analytics capabilities tailored to specific business functions. Although data marts can create data silos, modern implementations use virtualization and shared semantic layers to maintain consistency while enabling departmental autonomy.

Contemporary data marts often leverage cloud-native architectures that share underlying storage while providing independent compute resources and customized data models for different business domains.

Hybrid Architecture

Hybrid designs integrate data lakes for raw data storage with traditional warehouses for structured analytics, providing flexibility for diverse use cases while maintaining performance for critical business reporting. This approach supports both exploratory analytics and production reporting workflows.

Implementation complexity increases with hybrid architectures, but modern data integration platforms and governance tools help manage the additional operational overhead while delivering enhanced analytical capabilities.

How Do You Build Real-Time Data Warehouses for Instant Analytics?

Real-time data warehousing transforms traditional batch-oriented systems into responsive platforms that process and analyze data within seconds of generation. This capability enables immediate response to business events, real-time personalization, and operational analytics that drive competitive advantage.

Streaming Architecture Components

Modern real-time warehouses leverage event streaming platforms like Apache Kafka for data ingestion, stream processing engines like Apache Flink for real-time transformations, and change data capture technologies for continuous synchronization with operational systems.

Implementation involves designing idempotent data pipelines that handle duplicate events gracefully, implementing exactly-once processing semantics to ensure data accuracy, and creating error-handling mechanisms that maintain system reliability during processing failures.
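A simple way to picture an idempotent load is deduplication by event identifier: replaying the same batch leaves the target unchanged. The sketch below assumes each event carries a unique `event_id` field (a hypothetical name) and keeps the set of seen identifiers in memory, whereas a real pipeline would persist that state or rely on a merge/upsert in the warehouse.

```python
def load_events(store, seen_ids, events):
    """Append each event at most once; replays of a known event_id are skipped."""
    inserted = 0
    for event in events:
        if event["event_id"] in seen_ids:
            continue  # duplicate delivery: safe to ignore
        seen_ids.add(event["event_id"])
        store.append(event)
        inserted += 1
    return inserted

# Usage: the second call replays the same batch and inserts nothing.
store, seen = [], set()
batch = [{"event_id": "e1", "amount": 10}, {"event_id": "e2", "amount": 5}]
print(load_events(store, seen, batch))
print(load_events(store, seen, batch))
```

With at-least-once delivery from the stream, this kind of deduplication is one way to get results that look as if each event was processed once.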

Event-Driven Data Integration

Change Data Capture enables continuous replication of database changes to the warehouse with minimal impact on source system performance. This approach captures insert, update, and delete operations in real time, maintaining warehouse freshness while preserving historical state information.
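The sketch below shows, in simplified form, how a stream of captured changes might be applied to a replica table. The event shape (`op`, `key`, `row`) is a hypothetical format chosen for illustration; real CDC tools each define their own.

```python
def apply_cdc_events(table, events):
    """Apply ordered change events to a dict that maps primary key -> row."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            table[key] = event["row"]  # upsert keeps replays harmless
        elif op == "delete":
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return table

# Usage with a hypothetical inventory table.
changes = [
    {"op": "insert", "key": 1, "row": {"sku": "A", "stock": 5}},
    {"op": "insert", "key": 2, "row": {"sku": "B", "stock": 9}},
    {"op": "update", "key": 1, "row": {"sku": "A", "stock": 4}},
    {"op": "delete", "key": 2},
]
print(apply_cdc_events({}, changes))
```

To preserve history as well as the latest state, the same events could also be appended to a log table instead of only overwriting the current row.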

Stream processing frameworks transform raw events into analytical formats through windowing operations, stateful computations, and complex event pattern detection. These capabilities enable real-time aggregations, trend analysis, and anomaly detection within streaming data flows.
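Windowing can be illustrated without a streaming engine. The sketch below groups events into fixed, non-overlapping (tumbling) windows and sums a value per window. It assumes events arrive as `(timestamp_seconds, value)` pairs and ignores late or out-of-order data, which frameworks like Flink handle with additional machinery.

```python
from collections import defaultdict

def tumbling_window_sums(events, window_seconds):
    """Sum values per fixed-size window, keyed by the window's start time."""
    sums = defaultdict(float)
    for ts, value in events:
        window_start = ts - (ts % window_seconds)
        sums[window_start] += value
    return dict(sorted(sums.items()))

# Usage: four hypothetical events bucketed into 60-second windows.
events = [(0, 1.0), (59, 2.0), (60, 3.0), (125, 4.0)]
print(tumbling_window_sums(events, 60))  # {0: 3.0, 60: 3.0, 120: 4.0}
```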

Performance Optimization Strategies

Real-time warehouses require careful optimization of ingestion throughput, query performance, and resource utilization. Techniques include partitioning strategies that align with query patterns, indexing approaches optimized for streaming workloads, and caching mechanisms that balance freshness with performance.

Materialized views provide precomputed aggregations that update incrementally as new data arrives, enabling sub-second response times for common analytical queries while maintaining accuracy across high-velocity data streams.
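The idea of incremental maintenance can be sketched as a running aggregate that is updated only with newly arrived rows, so reads never rescan the full history. The class name `IncrementalRevenueView` and the `(key, amount)` row shape are illustrative assumptions. Warehouse engines implement this internally and handle updates and deletes as well.

```python
class IncrementalRevenueView:
    """Keeps per-key running totals, updated only from newly arrived rows."""

    def __init__(self):
        self.totals = {}

    def apply(self, new_rows):
        # Fold only the delta into the stored aggregate.
        for key, amount in new_rows:
            self.totals[key] = self.totals.get(key, 0.0) + amount

    def query(self, key):
        # Reads are a dictionary lookup, independent of history size.
        return self.totals.get(key, 0.0)

# Usage with two hypothetical micro-batches.
view = IncrementalRevenueView()
view.apply([("EU", 40.0), ("US", 20.0)])
view.apply([("EU", 120.0)])
print(view.query("EU"))  # 160.0
```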

How Can AI and Machine Learning Enhance Your Data Warehouse Architecture?

AI and machine learning integration transforms data warehouses from static repositories into intelligent systems that automatically optimize performance, ensure data quality, and generate predictive insights. These capabilities reduce operational overhead while enabling advanced analytics that drive business value.

Automated Pipeline Optimization

Machine learning algorithms analyze query patterns, data access frequencies, and system performance metrics to automatically optimize warehouse configurations. This includes intelligent partitioning recommendations, index suggestions, and resource allocation decisions that adapt to changing workload patterns.

AI-driven automation extends to data pipeline management through intelligent schema evolution, automated error recovery, and predictive maintenance that prevents system failures before they impact business operations.

Intelligent Data Governance

AI enhances data governance through automated data classification, anomaly detection in data quality patterns, and intelligent policy enforcement that adapts to organizational changes. Machine learning models identify sensitive data automatically, recommend appropriate security classifications, and monitor compliance across complex data ecosystems.

Automated lineage tracking uses AI to map data relationships across complex transformation pipelines, providing a transparent understanding of data provenance and impact analysis for schema changes or system modifications.

Predictive Analytics Integration

Modern warehouses embed machine learning capabilities directly into the data platform, enabling analysts to build and deploy predictive models without moving data to external systems. This integration supports feature engineering, model training, and real-time scoring within the warehouse environment.

Advanced implementations include automated feature stores that maintain consistent data definitions across analytical and operational use cases, A/B testing frameworks for model performance evaluation, and continuous learning systems that adapt to changing business conditions.

What Does It Cost to Build a Data Warehouse in 2025?

Cost Optimization Strategies

Modern cost management leverages intelligent resource scaling that automatically adjusts compute capacity based on workload demands, eliminating idle resource costs while ensuring performance during peak usage periods.

Automated data lifecycle management moves infrequently accessed data to lower-cost storage tiers, with query performance impact minimized by advanced optimization features.
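A quick back-of-the-envelope calculation shows why tiering matters. The sketch below uses the upper end of the $20–$23/TB/month storage range quoted earlier in this guide as the hot-tier rate. The cold-tier rate of $4/TB/month is a purely hypothetical placeholder, so substitute your provider's actual pricing.

```python
def monthly_storage_cost(hot_tb, cold_tb, hot_rate, cold_rate):
    """Storage-only monthly cost in dollars; compute and egress are excluded."""
    return hot_tb * hot_rate + cold_tb * cold_rate

HOT_RATE = 23.0   # $/TB/month, upper end of the range cited in this guide
COLD_RATE = 4.0   # $/TB/month, hypothetical placeholder for a colder tier

all_hot = monthly_storage_cost(10, 0, HOT_RATE, COLD_RATE)
tiered = monthly_storage_cost(2, 8, HOT_RATE, COLD_RATE)
print(all_hot, tiered)  # 230.0 78.0
```

Under these assumed rates, moving 8 of 10 TB to the colder tier cuts the storage bill by roughly two thirds, though retrieval fees and slower access to cold data would need to be weighed against that saving.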

Implementation of incremental data loading reduces processing costs by only transforming changed data, while intelligent compression algorithms minimize storage requirements.
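One common way to load incrementally is a high-water mark: remember the latest `updated_at` value already processed and pull only rows newer than it. The sketch below assumes each source row has an `updated_at` field stored as an ISO 8601 string, which sorts correctly as plain text; both the field name and the format are assumptions.

```python
def extract_incremental(rows, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    changed = [r for r in rows if r["updated_at"] > last_watermark]
    new_watermark = max(
        (r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark

# Usage with a hypothetical orders table.
source = [
    {"id": 1, "updated_at": "2025-01-01T00:00:00"},
    {"id": 2, "updated_at": "2025-01-02T08:30:00"},
    {"id": 3, "updated_at": "2025-01-03T12:00:00"},
]
changed, watermark = extract_incremental(source, "2025-01-01T12:00:00")
print([r["id"] for r in changed], watermark)
```

A watermark approach does not see deleted rows, which is one reason change data capture is often preferred when deletes matter.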

Open-source tools like Airbyte for data integration and dbt for transformations significantly reduce licensing costs compared to proprietary alternatives.

Advanced optimization includes query result caching that eliminates redundant processing, materialized view management that balances storage costs with query performance, and usage monitoring that identifies optimization opportunities across the platform.

Real-World Success Story: Building a Centralized Data Warehouse for an Online Retailer

Fashion Fusion, an online retailer, integrated clickstream data, IoT sensor information, and operational databases into a hybrid lakehouse architecture combined with Snowflake for structured analytics. The implementation demonstrates how modern data warehouse design delivers measurable business value through improved decision-making and operational efficiency.

Implementation Architecture

The solution leveraged Airbyte for flexible data extraction from diverse sources, dbt for in-warehouse transformations that maintain data quality, and a star schema design optimized for sales reporting and customer analytics. Comprehensive role-based access control ensured data security while enabling self-service analytics across business teams.

Real-time components included change data capture for inventory updates, streaming analytics for customer behavior analysis, and automated alerting for critical business metrics. The architecture supported both historical analysis and real-time operational decision-making.

Key Success Factors

Implementation success resulted from aligning technical architecture with business requirements, establishing clear data governance policies that balanced control with accessibility, and investing in user training that enabled self-service analytics adoption across the organization.

Continuous optimization through performance monitoring, automated data quality checks, and regular architecture reviews ensured the platform evolved with changing business needs while maintaining reliability and cost efficiency.

How Long Does It Take to Build a Data Warehouse?

Implementation timelines vary significantly based on organizational complexity, data volume, and feature requirements:

- Small / MVP Implementation: 4–8 weeks for basic data integration and reporting capabilities

- Mid-Size Enterprise: 3–6 months, including advanced analytics and governance features

- Large Enterprise: 6–12+ months for comprehensive platforms with real-time capabilities and AI integration

Modern cloud-native platforms and pre-built integration tools significantly reduce implementation time compared to traditional approaches. Agile methodologies enable iterative delivery that provides business value throughout the development process rather than requiring complete implementation before generating insights.

What Are the Best Practices for Data Quality, Governance, and Security?

Automated Quality Management

Implement comprehensive testing frameworks using tools like Great Expectations or Soda that automatically validate data accuracy, completeness, and consistency across all data pipelines. Continuous monitoring detects quality issues in real-time and provides automated remediation for common problems.
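To show the kind of rule these tools automate, here is a minimal sketch in plain Python that checks completeness and uniqueness. It deliberately does not use the Great Expectations or Soda APIs; the function name and the row format are hypothetical.

```python
def check_quality(rows, required, unique_key):
    """Return human-readable failures; an empty list means all checks passed."""
    failures = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: every required column must have a value.
        for col in required:
            if row.get(col) is None:
                failures.append(f"row {i}: {col} is missing")
        # Uniqueness: the key must not repeat across rows.
        key = row.get(unique_key)
        if key in seen:
            failures.append(f"row {i}: duplicate {unique_key}={key}")
        seen.add(key)
    return failures

# Usage on a hypothetical batch with one bad row.
batch = [{"id": 1, "amount": 5.0}, {"id": 1, "amount": None}]
for problem in check_quality(batch, required=["id", "amount"], unique_key="id"):
    print(problem)
```

A pipeline could run checks like these before loading a batch and stop, or quarantine the batch, when the list of failures is not empty.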

Advanced quality management includes statistical profiling that establishes baseline data characteristics, anomaly detection that identifies unusual patterns, and automated lineage tracking that enables rapid root cause analysis when issues occur.
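Statistical profiling and anomaly detection can be as simple as comparing new values against a baseline mean and standard deviation. The sketch below flags values more than `threshold` standard deviations from the baseline mean. The threshold of 3 is a conventional starting point rather than a recommendation, and production systems typically use more robust methods.

```python
import statistics

def find_anomalies(baseline, new_values, threshold=3.0):
    """Flag values whose z-score against the baseline exceeds the threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A constant baseline: any different value is unusual.
        return [v for v in new_values if v != mean]
    return [v for v in new_values if abs(v - mean) / stdev > threshold]

# Usage: hypothetical daily row counts, with one suspicious spike.
baseline_counts = [100, 102, 98, 101, 99]
print(find_anomalies(baseline_counts, [101, 150, 97]))  # [150]
```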

Comprehensive Security Framework

Security implementation encompasses multiple layers, including encryption for data at rest and in transit, role-based access control that aligns with organizational hierarchies, and audit logging that provides complete visibility into data access and modifications.

Modern security approaches include dynamic data masking that protects sensitive information based on user roles, automated compliance monitoring that ensures adherence to regulatory requirements, and threat detection that identifies unusual access patterns or potential security breaches.
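Dynamic data masking can be pictured as a filter applied at read time based on the caller's role. In the sketch below, the role names and the list of sensitive columns are hypothetical; warehouse platforms provide this as a built-in policy feature rather than application code.

```python
def mask_row(row, role, sensitive_columns, privileged_roles):
    """Return a copy of the row, hiding sensitive columns for unprivileged roles."""
    if role in privileged_roles:
        return dict(row)
    return {
        col: ("***" if col in sensitive_columns else value)
        for col, value in row.items()
    }

# Usage with hypothetical roles and columns.
customer = {"email": "ada@example.com", "lifetime_value": 420}
print(mask_row(customer, "analyst", {"email"}, {"admin"}))
print(mask_row(customer, "admin", {"email"}, {"admin"}))
```

Because masking happens at query time, the stored data stays intact and a single table can serve users with different levels of access.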

Governance Automation

Implement automated governance through policy engines that enforce data handling rules consistently across all systems, metadata management that maintains accurate documentation and lineage information, and access provisioning that streamlines user onboarding while maintaining security controls.

Advanced governance includes automated data classification that identifies sensitive information, retention policy enforcement that manages data lifecycle automatically, and impact analysis that assesses the effects of proposed changes before implementation.

How Do You Scale from Basic Data Management to Automated Data Integration?

Scaling data management requires transitioning from manual processes to automated systems that handle increasing data volumes, user demands, and analytical complexity. This evolution involves implementing intelligent automation that reduces operational overhead while improving data quality and accessibility.

By aligning appropriate architecture choices with solid data modeling practices and comprehensive automation tools, organizations can scale their data management capabilities effectively. Modern platforms enable this transition through cloud-native architectures that automatically adapt to changing requirements while maintaining performance and cost efficiency.

Advanced scaling strategies include implementing data mesh architectures that decentralize data ownership while maintaining governance standards, leveraging AI-driven optimization that continuously improves system performance, and establishing self-service capabilities that enable business users to access data independently while adhering to security and compliance requirements.

Conclusion

Building a data warehouse requires careful planning across business requirements, architecture selection, and data modeling to create a system that delivers real business value. Modern warehouses have evolved beyond storage to become intelligent analytics engines supporting advanced capabilities like machine learning, real-time processing, and automated governance.

Implementing best practices for data quality, security, and governance ensures long-term success while reducing operational overhead. The investment in a well-designed data warehouse pays dividends through improved decision-making, operational efficiency, and competitive advantage in rapidly evolving markets.

Frequently Asked Questions

What are the key differences between an operational database and a data warehouse?

Operational databases handle real-time transactions and are optimized for fast inserts, updates, and individual record retrieval. Data warehouses aggregate historical data from multiple sources and are optimized for complex analytical queries, reporting, and business intelligence workloads.

How do I choose between a cloud-based and an on-premises data warehouse?

Cloud-based solutions offer flexibility, automatic scaling, lower upfront costs, and managed services that reduce operational overhead. On-premises solutions provide greater control over data residency, customization options, and may be required for strict compliance or security requirements.

What is the role of data lakes in a modern data architecture?

Data lakes store raw, diverse data types in their native formats and complement warehouses by supporting big-data analytics, machine learning workloads, and exploratory data analysis. Modern lakehouse architectures combine the benefits of both approaches for comprehensive analytics capabilities.

How do real-time data warehouses differ from traditional batch processing systems?

Real-time data warehouses process and analyze data within seconds of generation using streaming technologies and change data capture. Traditional systems process data in scheduled batches, typically daily or hourly, which introduces latency between data generation and analytical availability.

What are the main benefits of AI integration in data warehouse architectures?

AI integration provides automated optimization of queries and resources, intelligent data quality monitoring and anomaly detection, predictive analytics capabilities embedded in the platform, and automated governance that reduces manual oversight while ensuring compliance and security.
