What is Conceptual Data Modeling: Purpose & Examples

Summarize this article with:

✨ AI Generated Summary

When data engineering teams report that schema validation failures plague their pipelines weekly, the root cause often traces back to a fundamental disconnect between business intent and technical implementation. Organizations invest millions in sophisticated data infrastructure yet struggle with basic alignment between what stakeholders envision and what systems actually deliver. This persistent gap creates cascading failures where downstream analytics produce misleading insights, compliance audits reveal governance gaps, and data teams spend more time fixing integration problems than building business value.

The solution lies not in better technology or more resources, but in establishing robust conceptual data modeling practices that serve as the critical translation layer between business requirements and technical systems. By creating shared understanding through high-level data representations, conceptual models prevent the requirement misinterpretation that leads to failed implementations and costly rework. This foundational approach enables data teams to design systems that align with real-world business processes while maintaining the flexibility to adapt as requirements evolve.

TL;DR: Conceptual Data Modeling at a Glance

- High-level business framework – Conceptual data modeling maps entities, attributes, relationships, and cardinality without technical implementation details

- Prevents pipeline failures – aligns business requirements with technical systems from the start, reducing schema mismatches and integration errors

- Core elements – includes entities (customers, orders), attributes (name, ID), relationships (one-to-many), and cardinality definitions

- Modern advancements – AI-driven model creation, knowledge graphs, collaborative platforms, and agile techniques like Data Vault 2.0 enhance traditional approaches

- Practical integration – platforms like Airbyte provide schema visibility and drift detection that keep conceptual models aligned with operational systems

What Is Conceptual Data Modeling?

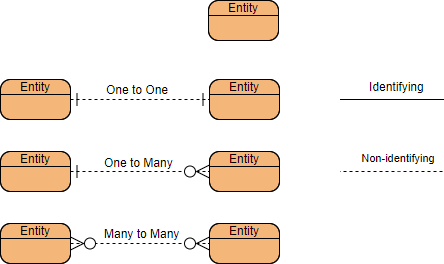

Conceptual data models are built at the first stage of the data modeling process. At its core, the conceptual modeling framework maps out abstract, high-level representations of data structure, focusing on what is necessary and how it's connected. All aspects of the system are represented in the form of graph notations, like loop diagrams.

This business-oriented approach deliberately avoids technical implementation details, instead concentrating on capturing the essential business concepts and their fundamental relationships. Unlike logical and physical models that address how data is structured and stored, conceptual models answer the fundamental question of what data matters to the business and why these data elements relate to each other.

The conceptual data model serves as a communication bridge between business stakeholders who understand domain requirements and technical teams who implement data solutions. By maintaining this high-level perspective, conceptual models ensure that subsequent technical decisions remain aligned with business objectives throughout the development lifecycle.

Key Elements of the Conceptual Data Model

- Entities – real-world events, people, objects, or concepts (e.g., customers, orders, products)

- Attributes – characteristics that describe an entity (e.g., customer name, email address, phone number)

- Relationships – associations or dependencies between entities (e.g., customers ↔ orders)

- Cardinality – the possible number of relationships between entities (one-to-one, one-to-many, many-to-many)

What Is the Purpose of a Conceptual Data Model?

The primary objective of a conceptual data model is to help you understand business data and how different data elements relate to one another, fostering clear communication across diverse stakeholder groups.

- Structuring Business Operations – captures essential entities, attributes, and relationships in a way that aligns with business goals and processes

- Facilitating Clear Data Visualization – simplifies data relationships visually so stakeholders and technical teams share a common understanding, reducing miscommunication that leads to implementation errors

- Laying the Foundation for Database Design – serves as the initial blueprint for more-detailed logical and physical data models

- Enabling Strategic Data Governance – embeds governance policies directly into data architecture through clear entity definitions and relationship constraints

How Do You Create a Conceptual Data Model?

When creating a conceptual data model, the focus is on what data is needed rather than how it will be stored or implemented. You can use data modeling tools such as Erwin, SQL Database Modeler, or IDERA / ER Studio.

- Identify the Key Entities – determine the main entities that represent the core objects or concepts relevant to your domain

- Define the Relationships – specify how data flows between entities to capture business processes accurately

- Outline Entity Attributes – list the primary attributes for each entity, remaining abstract without specifying data types yet

- Set Cardinality – define how many instances of one entity relate to another and whether relationships are mandatory or optional

- Establish Business Rules – capture conditions, constraints, and requirements that govern entity behavior

- Review and Validate – validate the model with stakeholders and data teams, refining it based on feedback

What Does a Conceptual Data Model Example Look Like?

Consider an online shopping application that captures information about customers, their shopping habits, and the products they interact with.

Key entities include:

- Online customers – app users

- Shopping cart – temporary cart created when products are added

- Cart items – individual products within a cart

- Products – items available for purchase

Example attributes

- Online customers: customer ID, name, email, password, address, phone number

- Products: product ID, name, description, price

A customer can add many products to their cart (one-to-many). Each addition is recorded uniquely, even if the same product is added multiple times.

What Are Common Misconceptions About Conceptual Data Modeling?

Many organizations fall into persistent traps that undermine the effectiveness of their conceptual modeling efforts. These misconceptions often stem from outdated practices or fundamental misunderstandings about the purpose and scope of conceptual models.

The "Big Data Only" Fallacy

A pervasive myth suggests that conceptual modeling only benefits large-scale enterprise projects with massive datasets. This misconception limits modeling to complex scenarios while smaller implementations suffer from structural problems that proper conceptual design would prevent. Even simple CRM systems or local business applications benefit significantly from clear entity definitions and relationship mapping. Without conceptual foundations, these smaller projects frequently require costly architectural refactoring when they inevitably expand or integrate with other systems.

Schema Creation vs. Semantic Representation

Many teams mistakenly view conceptual modeling as merely an early step toward database schema creation. This reductive approach overlooks the core function of conceptual models as semantic representation systems that capture business meaning and context. Healthcare organizations exemplify this challenge when they create structurally sound models that fail to represent clinical realities like patient treatment pathways or care coordination workflows. The result is technically functional systems that cannot support actual business processes.

The IT-Centric Trap

Excluding business stakeholders from modeling processes virtually guarantees misalignment between technical implementations and operational needs. Marketing teams defining "customer lifetime value" differently from finance creates reporting inconsistencies that manifest as conflicting dashboards and analytical insights. Cross-functional involvement during conceptual modeling ensures that models reflect accurate business rules and prevents the costly rework that occurs when domain experts discover misaligned systems during deployment.

Over-Normalization and Performance Myths

The persistent belief that denormalization automatically improves performance leads to premature optimization decisions at the conceptual level. Modern columnar databases and indexing strategies often make properly normalized schemas perform better than denormalized alternatives. This misconception causes teams to compromise data integrity for theoretical performance benefits that sophisticated query optimization would achieve more effectively.

How Do Modern Collaborative Frameworks Enhance Conceptual Modeling?

Traditional conceptual modeling often occurred in isolation, with data architects working separately from business stakeholders and development teams. Modern collaborative approaches transform this process into an interactive, cross-functional discipline that improves both model accuracy and stakeholder buy-in.

Real-Time Collaborative Platforms

Contemporary modeling platforms enable simultaneous collaboration across distributed teams through shared canvases and version-controlled workflows. Business analysts can sketch process flows alongside data architects developing entity relationships, while real-time change tracking attributes each model element to specific contributors. This approach mirrors software development practices where multiple developers contribute to codebases without conflicts, ensuring that conceptual models evolve through structured collaboration rather than individual assumptions.

Low-Code and Natural Language Interfaces

Modern tools democratize conceptual modeling by providing natural language interfaces that allow domain experts to participate directly in model creation. Retail analysts can define inventory concepts using business terminology while the platform translates these definitions into formal entity-relationship structures. This capability bridges the communication gap between technical and business stakeholders, ensuring that models capture authentic business requirements rather than technical interpretations of business needs.

Semantic Layer Integration

Advanced collaborative frameworks integrate conceptual models directly with semantic layers that provide consistent definitions across analytical tools and dashboards. When marketing teams define "active customer" within the conceptual model, this definition propagates automatically to downstream reporting systems, preventing the definitional inconsistencies that create contradictory business insights. This integration ensures that conceptual models serve as living documentation rather than static artifacts that quickly become outdated.

Automated Validation and Consistency Checking

Modern collaborative platforms include validation engines that identify logical inconsistencies and suggest corrections during the modeling process. These systems flag potential issues like circular relationships or ambiguous cardinality definitions before they become implementation problems. Automated consistency checking enables teams to iterate rapidly on model designs while maintaining structural integrity, supporting agile development practices that require frequent model refinements.

What Are Advanced Agile Modeling Techniques?

Modern data environments demand modeling approaches that balance structural rigor with iterative flexibility. Advanced agile techniques like Data Vault 2.0 and Anchor Modeling address the limitations of traditional conceptual modeling by providing frameworks that accommodate rapid business change while maintaining data integrity and auditability.

Data Vault 2.0 Methodology

Data Vault modeling represents a hybrid approach that combines traditional data-warehousing principles with agile methodologies suitable for evolving business environments.

- Hub-Link-Satellite Architecture – hubs represent unique business keys, links establish relationships between entities, satellites store descriptive attributes with full historical context

- Temporal Capabilities – metadata for auditing and lineage tracking is built in from the conceptual stage

- Parallel Development Support – multiple teams can extend the model simultaneously without conflicts

Anchor Modeling Framework

Anchor modeling provides an extreme-normalization approach that treats every model element as independently evolvable.

- Component Structure – anchors (entity identities), attributes (descriptive properties), ties (relationships), knots (standardized domains)

- Non-Destructive Evolution – new elements are added without altering existing structures

- Built-in Temporal Support – supports both transaction-time and valid-time perspectives within the same framework

How Do Temporal Modeling Approaches Handle Time-Dependent Data?

Bitemporal Data Architecture

Bitemporal modeling manages historical data across dual timelines:

- Valid Time and Transaction Time – track real-world truth vs. system-recorded chronology

- Immutable Audit Trails – complete traceability without information loss

- Historical Query Capabilities – reconstruct any historical state by querying specific time coordinates

Event Sourcing Pattern

Event sourcing captures business events as immutable facts rather than current entity states.

- Append-Only Event Streams – events are recorded chronologically as permanent facts

- State Reconstruction – current states are computed by replaying the event sequence

- Predictive Analytics Foundation – rich event history supports pattern analysis and forecasting

How Do Knowledge Graphs Transform Conceptual Data Modeling?

Knowledge graphs shift from rigid entity-relationship diagrams toward semantic networks where entities interconnect through contextual relationships.

- Structural Advantages – multi-relational ontologies support dynamic inference

- Implementation Frameworks – platforms like Amazon Neptune and Neo4j operationalize semantic schemas

- Strategic Applications – faster diagnosis in healthcare, supply-chain risk modeling in manufacturing, network-based fraud detection in financial services

What Role Does AI Play in Modern Conceptual Data Modeling?

- AI-Driven Model Creation and Validation – NLP converts requirements into preliminary models; generative AI identifies ontological inconsistencies

- Large Language Models – analyze user stories and legacy docs to draft conceptual structures

- Automated Pattern Recognition – algorithms discover hidden relationships within existing data

- Self-Service Modeling – natural-language interfaces empower domain experts to define entities and relationships directly

How Do Conceptual Data Models Differ From Logical Data Models?

What Are the Limitations of Conceptual Data Modeling?

- Requires Deep Project Understanding – insufficient domain knowledge can lead to inaccuracies

- Time-Consuming if Misaligned – incorrect entities or relationships force rework

- Potential Conflicts Due to Abstraction – high-level assumptions may not align with implementation constraints

- Complexity Increases With Project Size – larger systems complicate relationship management

- Stakeholder Alignment Challenges – different business units may have conflicting perspectives

How Does Airbyte Integrate With Data Modeling?

Airbyte is an open-source data-integration platform offering over 600+ pre-built connectors. By centralizing data from databases, APIs, and analytics platforms, it gives modelers visibility into source schemas, schema drift, and data anomalies that refine conceptual models and ensure ongoing alignment with business operations.

Airbyte's approach to data integration supports conceptual modeling initiatives by providing comprehensive source system visibility that informs model accuracy. The platform's connector ecosystem reveals actual data structures from operational systems, enabling modelers to validate their conceptual assumptions against real-world implementations. This feedback loop ensures that conceptual models remain grounded in operational reality rather than theoretical business requirements that may not reflect actual system capabilities.

The platform's schema evolution detection capabilities alert modeling teams when source systems undergo structural changes that could affect conceptual model validity. This proactive monitoring prevents the disconnect between conceptual models and operational systems that often develops over time as business requirements evolve. By maintaining alignment between conceptual designs and actual data sources, Airbyte helps organizations preserve the business value that well-designed conceptual models provide throughout the data architecture lifecycle.

Conclusion

Conceptual data modeling serves as the critical foundation that bridges business requirements and technical implementation, preventing costly misalignments in data systems. By establishing clear entity relationships and business rules before implementation begins, organizations ensure their data architecture supports rather than constrains operational objectives. Modern approaches including knowledge graphs, temporal modeling, and AI-assisted techniques further enhance these benefits, enabling data teams to build resilient systems that evolve alongside changing business needs.

Frequently Asked Questions

1. What is a conceptual data model, and why is it important?

A conceptual data model (CDM) is a high-level, business-focused view of key data entities and their relationships. It abstracts technical details, clarifies core concepts, and bridges communication between business and technical teams, preventing flawed architectures and costly rework.

2. How does a conceptual data model differ from a logical data model?

A conceptual data model defines high-level entities, relationships, and business rules, focusing on "what" matters. A logical data model translates this into detailed attributes, data types, and normalization, specifying "how" data should be structured for database design.

3. What are the main elements included in a conceptual data model?

A conceptual data model includes entities (e.g., Customers, Orders), key attributes (e.g., name, ID), relationships between entities, cardinality (how many instances relate), and essential business rules, providing clarity before progressing to technical database design.

4. How can conceptual modeling help avoid data pipeline failures?

Many integration problems and schema mismatches stem from unclear requirements during system design. By using conceptual models as a shared blueprint, organizations align business expectations with technical implementation from the start. This prevents misinterpretations that result in broken data pipelines, inconsistent reporting, and compliance risks.

5. What are some modern advancements in conceptual data modeling?

Today's data environments benefit from agile techniques like Data Vault and Anchor Modeling, which support incremental changes and historical tracking. Tools like knowledge graphs and AI-driven modeling also enable dynamic, real-time updates to conceptual models, ensuring they evolve alongside business processes without disrupting existing systems.

.webp)