How to Create LLM with Salesforce Data: A Complete Guide

✨ AI Generated Summary

Large Language Models (LLMs) built with Salesforce data can automate workflows, enhance customer interactions, and improve decision-making by leveraging sales, marketing, and support data. Key steps include data preparation, secure extraction (e.g., using Airbyte), model selection (open-source or cloud-based), and integration with CRM, analytics, and reporting tools.

- Ensure data privacy with encryption, access control, and compliance (GDPR, HIPAA).

- Use batch or real-time processing based on use case, with incremental updates via change data capture.

- Implement core LLM features like query understanding, context injection, and confidence scoring.

- Deploy with staging, monitoring, rollback, and scalability planning for reliable production use.

Large Language Models (LLMs) are among the most consequential technologies of the past decade. With models like ChatGPT now used across many professional sectors, these tools let businesses automate repetitive processes.

By creating a custom LLM with the help of a business tool like Salesforce, you can enhance customer interactions, automate workflows, and streamline data-driven decision-making. However, building an LLM from scratch can be quite challenging, requiring time and effort.

In this article, you will learn the steps to create an LLM with Salesforce data and how to use it to optimize business processes.

Understanding the Foundation

LLMs are machine learning models trained on vast amounts of text data. From the outside, you can treat an LLM as a black box that takes your input and returns a response; the quality of that response depends heavily on the data the model was trained on. For business use cases, training or adapting a custom model on Salesforce data can significantly enhance various processes. Read along to discover some popular use cases.

Salesforce Data Structure Overview

Salesforce data consists of customer relationships and sales information. The types of data stored in this platform include sales transactions, customer data, marketing campaigns, support tickets, and custom objects.

Sales transactions outline the entire sales process from lead generation to closure. Customer data captures the customer profile information, such as purchase history, contact information, and interactions. Marketing campaign data includes marketing strategies, conversion rates, engagement metrics, and audience segmentation data.

Support ticket data consists of customer issues, feedback, resolutions, and the time taken to resolve each issue. Salesforce also supports custom objects, which let you store business-specific data that the standard objects do not cover.

Data Privacy and Compliance

When training an LLM using Salesforce data, you must safeguard that data from unauthorized access. This might include stripping out personally identifiable information (PII) or applying access controls so that only authorized users can reach the training data. You must also comply with the regulations that apply to your data, such as GDPR for personal data of individuals in the EU and HIPAA for protected health information in the US.

Use Cases and Business Values

An LLM trained on your Salesforce data has multiple use cases. A key one is summarizing your data to give you an overview of leads and revenue. It can also simplify insight generation by presenting complex data through simple visualizations.

The visual representation of data can be effortlessly comprehended by technical as well as non-technical individuals, increasing data understanding within the organization.

Resource Requirements

To create a custom LLM, you need several resources. Training involves computationally intensive algorithms and huge amounts of data, so more capable hardware shortens training time. RAM, CPU, GPU, and network bandwidth all affect how quickly you can train the model. Along with these, tools for data ingestion, cleaning, and storage help you perform complex operations with ease.

Let’s get into the steps required to create LLM with Salesforce data.

Setting up Your Environment

The first stage of building an LLM from scratch is configuring the environment you will work with for the rest of the journey. This is a multi-step process that requires you to prepare for the further steps. To set up your environment, you must have:

Required Permissions and Access

Certain permissions and access credentials are required when working with Salesforce data. To export data with Data Loader, your user needs API access and read permission on the objects being exported. A broad permission such as “Modify All Data” covers the object access, though it grants more than an export strictly needs. You can use Data Loader to export records from any object your user can read.

API Authentication Setup

The Salesforce REST API requires an access token to authenticate your requests. With the Salesforce CLI, you can obtain an access token for an authorized org. API access is an essential step, as it lets you extract data programmatically. For large datasets, an alternative is the Bulk API, which processes records asynchronously in batches.
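As a minimal sketch of what an authenticated request looks like, the snippet below builds (but does not send) a call to the REST API query resource. The instance URL, token, and API version are placeholder assumptions; substitute the values for your own org.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: the API version is illustrative; use one your org supports.
API_VERSION = "v59.0"


def build_query_request(instance_url: str, access_token: str, soql: str) -> Request:
    """Build (but do not send) a GET request for the REST API query resource."""
    base = instance_url.rstrip("/")
    url = f"{base}/services/data/{API_VERSION}/query?{urlencode({'q': soql})}"
    # The access token is passed as an OAuth 2.0 bearer token.
    return Request(url, headers={"Authorization": f"Bearer {access_token}"})


# Placeholder values; a real token would come from your auth flow or the Salesforce CLI.
request = build_query_request(
    "https://example.my.salesforce.com",
    "PLACEHOLDER_TOKEN",
    "SELECT Id, Name FROM Account LIMIT 5",
)
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send it; keeping construction separate from sending makes the request easy to inspect and test.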

Development Tools Selection

After accessing the data, the next step is to select the LLM development tools. Two available options are open-source frameworks and cloud-based tools.

Open-source tools like TensorFlow, PyTorch, and the Hugging Face Transformers library provide free options for creating an LLM with Salesforce data.

Conversely, cloud-based tools, such as Azure Machine Learning, Amazon SageMaker, and GCP AI tools, offer a paid way to build an LLM without managing the infrastructure yourself.

Security Configurations

When creating a custom LLM with multiple libraries and personal data, you should work in a virtual environment isolated from the rest of your applications. Isolating dependencies this way is a widely adopted practice that reduces the risk of version conflicts surfacing when you move from development to deployment.

To further enhance data privacy, you should follow recognized security standards, such as ISO 27001, and incorporate data encryption.

Testing Environment Preparation

Before getting started with the LLM creation steps, you must ensure the environment that you just configured is working properly. For testing purposes, install and import the necessary libraries. For example, if you are using the Transformers library, import it in a Jupyter Notebook or any other code editor inside your virtual environment and confirm that the import succeeds.

Running sample scripts and checking the version of the required libraries is another way to test the virtual environment.
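The version check mentioned above could look something like this. It is a sketch that relies only on the standard library; the package names are examples, so list whatever your project actually depends on.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package: version or None} for each distribution name."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


# Assumption: this package list is only an example.
report = installed_versions(["transformers", "torch"])
for name, version in report.items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as not installed points to a dependency that still needs to be added to the virtual environment.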

Data Preparation in Salesforce

Data preparation is one of the most essential steps of the LLM development workflow. With many sub-procedures involved, this step ensures that the data is prepared in an analyst-friendly format. Here are the crucial data preparation steps to follow:

Identifying Relevant Objects: Selecting the appropriate Salesforce objects is the first stage of data preparation. Setting aside nonessential information lets you select only the data that is relevant to the LLM’s purpose. Filtering out unnecessary objects reduces the volume of training data and, with it, the time required for model training.

Data Cleaning Strategies: Prepare data cleaning strategies to enhance data quality, accuracy, and consistency. This stage requires you to perform operations such as handling missing values, eliminating duplicates, and managing data skewness. Such operations reduce bias in the data and help improve the LLM’s accuracy.

Field Selection and Filtering: Some fields present in the object can be irrelevant or weakly correlated to your LLM’s objective. These fields might not be significantly contributing to the model's decision-making. Filtering out such fields is another way to optimize model performance and reduce training time.

Relationship Mapping: The process of associating various data entities together is known as relationship mapping. For example, mapping the relationship between Salesforce’s customer and transaction entities allows you to create a comprehensive view of your data.
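The cleaning, field-selection, and relationship-mapping steps described so far can be sketched on a handful of in-memory records. The records and the `KEEP_FIELDS` list are hypothetical; the `AccountId` lookup follows the usual Salesforce convention for linking opportunities to accounts.

```python
# Hypothetical records; field names follow common Salesforce conventions.
accounts = [
    {"Id": "001A", "Name": "Acme", "Industry": "Retail", "Fax": None},
    {"Id": "001A", "Name": "Acme", "Industry": "Retail", "Fax": None},  # duplicate
    {"Id": "001B", "Name": "Globex", "Industry": None, "Fax": None},
]
opportunities = [
    {"Id": "006X", "AccountId": "001A", "Amount": 1200.0},
    {"Id": "006Y", "AccountId": "001B", "Amount": 300.0},
    {"Id": "006Z", "AccountId": "001A", "Amount": 500.0},
]

KEEP_FIELDS = ("Id", "Name", "Industry")  # field selection: drop weak fields


def clean_accounts(rows):
    """Deduplicate by Id, keep selected fields, and fill missing values."""
    seen, cleaned = set(), []
    for row in rows:
        if row["Id"] in seen:
            continue
        seen.add(row["Id"])
        record = {field: row.get(field) for field in KEEP_FIELDS}
        record["Industry"] = record["Industry"] or "Unknown"
        cleaned.append(record)
    return cleaned


def map_relationships(account_rows, opportunity_rows):
    """Attach each account's opportunities through the AccountId lookup."""
    by_account = {}
    for opp in opportunity_rows:
        by_account.setdefault(opp["AccountId"], []).append(opp)
    return [
        {**account, "Opportunities": by_account.get(account["Id"], [])}
        for account in account_rows
    ]


customer_view = map_relationships(clean_accounts(accounts), opportunities)
```

Each entry in `customer_view` now holds one deduplicated account together with its related opportunities, which is the kind of unified record that is easier to turn into training text.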

Data Export Methods: Reviewing the available data export methods is also part of data preparation, as the method you choose determines how data moves out of Salesforce.

Although there are manual methods of extracting data from Salesforce, including Data Loader and APIs, the recommended method is to use SaaS-based tools like Airbyte. Using this tool, you can swiftly migrate data from Salesforce to any destination of your choice.

Airbyte is a no-code data integration and replication tool that enables you to move data from various sources to your preferred destination. It offers 400+ pre-built connectors, allowing you to streamline data synchronization by handling structured, semi-structured, and unstructured data.

If the connector you are looking for is unavailable in the available options, Airbyte provides you with the capability to build custom connectors. Features like AI-enabled no-code Connector Builder and Connector Development Kits (CDKs) enhance your connector development journey.

Let’s explore the most critical features offered by Airbyte:

- AI-Powered Connector Builder: The AI-assist functionality of the Connector Builder reads through your preferred connector’s documentation and auto-fills most fields on the UI, simplifying connector development.

- Streamlining GenAI Workflows: Airbyte supports popular vector databases, including Pinecone, Milvus, and Weaviate. You can utilize Airbyte’s built-in RAG transformations, such as chunking, embedding, and indexing, to transform raw data into vector embeddings. These vectors can then be stored in vector databases so that relevant records can be retrieved and supplied to the LLM at query time.

- PyAirbyte: Using PyAirbyte, an open-source Python library, you can leverage Airbyte connectors in a developer environment. It enables you to extract data from Airbyte connectors into popular SQL caches, which can easily be converted into pandas DataFrames. With this feature, you get the flexibility to transform and work with data as per your specific requirements.

Handling Sensitive Information: After you select the data extraction method, you must create an outline for handling sensitive information in the data. This includes methods like masking personal information and removing information like credit card numbers from the data.

If you consider Airbyte to migrate data, you don’t have to stress about this issue, as it provides multiple features to protect data. Its enterprise edition offers features like personally identifiable information (PII) masking that hashes sensitive data as it moves via the pipeline. For enhanced data security, this version provides multitenancy and role-based access control, enabling you to manage multiple teams and projects within a single deployment.
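If you handle masking yourself, the idea can be sketched as follows. This is an illustrative approach, not Airbyte's implementation: the `PII_FIELDS` set, the salt, and the card-number pattern are all assumptions to adapt to your data.

```python
import hashlib
import re

# Assumption: a simple pattern for 13-16 digit card numbers with optional separators.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")
PII_FIELDS = {"Email", "Phone"}  # hypothetical list of fields to hash


def hash_value(value: str, salt: str) -> str:
    """Return a salted SHA-256 digest so the raw value never leaves the pipeline."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def mask_record(record: dict, salt: str) -> dict:
    """Hash known PII fields and redact card-like numbers in free text."""
    masked = {}
    for field, value in record.items():
        if value is None:
            masked[field] = None
        elif field in PII_FIELDS:
            masked[field] = hash_value(str(value), salt)
        elif isinstance(value, str):
            masked[field] = CARD_PATTERN.sub("[REDACTED]", value)
        else:
            masked[field] = value
    return masked


case = {
    "Id": "500A",
    "Email": "jane@example.com",
    "Description": "Card 4111 1111 1111 1111 was declined.",
}
safe_case = mask_record(case, salt="rotate-me")
```

Hashing keeps the value usable as a join key (the same email always maps to the same digest) while removing the readable original; free-text redaction is a coarser safety net for values that slip into description fields.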

Extracting Data From Salesforce Using Airbyte

Now that you understand the preliminary steps required to create an LLM with Salesforce data, it's time to begin building the model. The first step is to migrate data using the export method you selected in the previous section. Let’s use Airbyte to extract data from Salesforce and move it into Pinecone.

Data Extraction from Salesforce

- Log in to your Airbyte account.

- A dashboard will appear on your screen. Click on the Sources tab from the left panel of the page.

- On the Set up a new source page, enter Salesforce in the Search connectors box.

- Select the available option, and on the new page, authenticate your Salesforce account by providing access credentials.

- Click on the Set up source button to configure Salesforce as a data source.

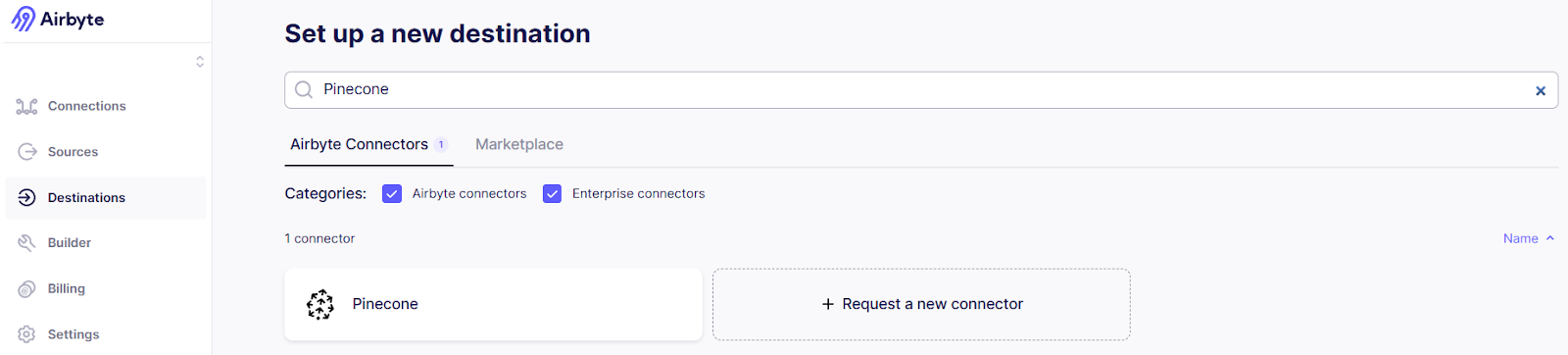

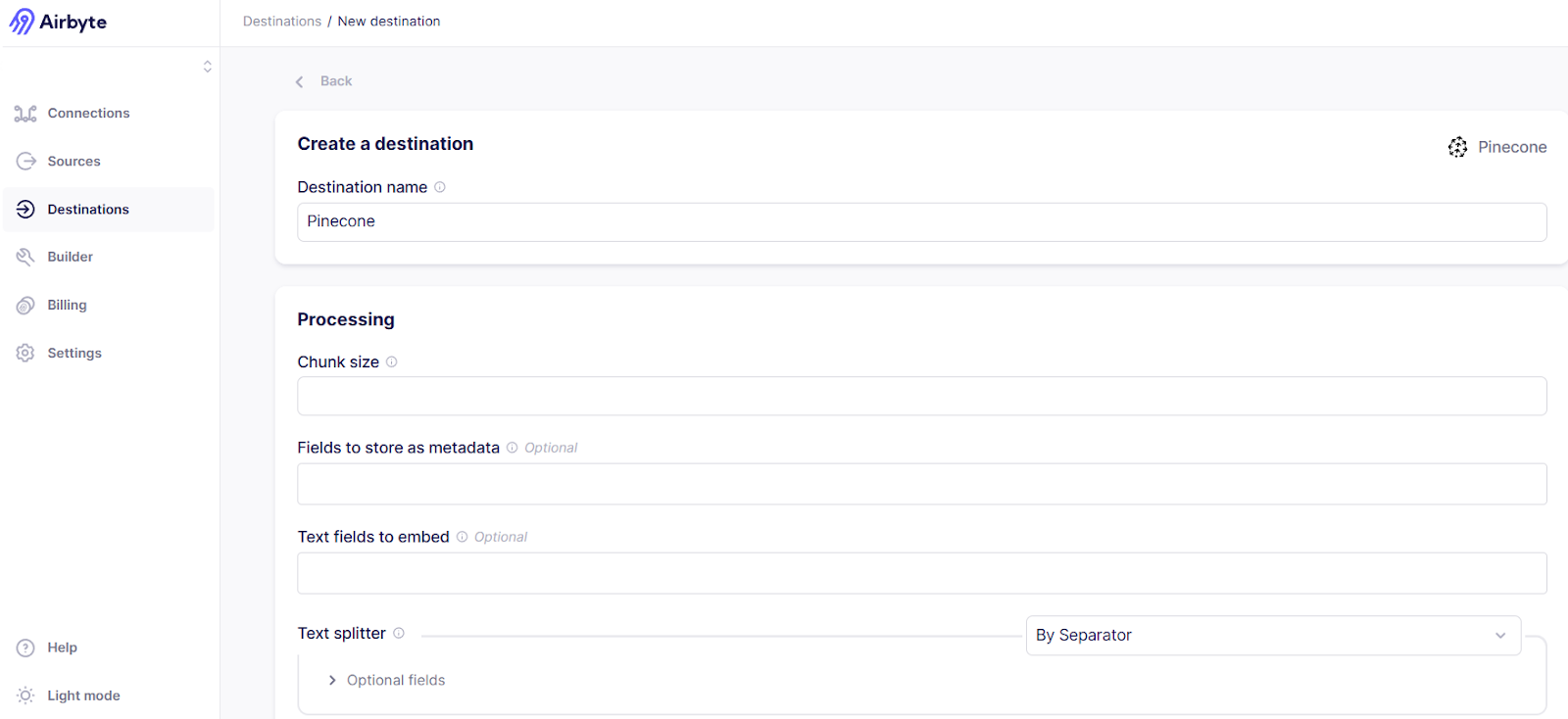

- To configure Pinecone as a destination, click the Destinations tab on the left panel. In the Search connector box, enter Pinecone.

- Select the available option and enter the necessary information in the Processing, Embedding, and Indexing sections. These settings control how your data is chunked, converted into embeddings, and written to the index.

- Click on the Set up destination button and navigate to the Connections tab on the left panel to set up this connection.
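To make the chunking part of the Processing step more concrete, here is a simplified sketch of overlapping chunks. It counts words for readability; real pipelines usually count tokens, and the sizes used here are arbitrary assumptions.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20):
    """Split text into word-based chunks that overlap by `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


notes = " ".join(f"word{i}" for i in range(10))
pieces = chunk_text(notes, chunk_size=4, overlap=1)
for piece in pieces:
    print(piece)
```

The overlap repeats a little text between neighboring chunks so that a sentence split across a boundary still appears whole in at least one of them.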

Real-Time vs Batch Processing

The data transfer method mentioned above follows batch processing. Primarily, there are two methods of data processing: batch processing and real-time processing. Both these processes have their own advantages and disadvantages.

Processing data in batches allows better handling of large datasets. This is especially useful when you need to process large volumes of data and do not need instant analytical results. Utilizing batch processing for LLM training is a great way of feeding large volumes of data to your model.

Real-time processing, also known as stream processing, is effective for instant data handling use cases such as fraud detection. It would be a preferable option for applications like stock analysis, sensor data management, and social media interactions.

Incremental Updates

You might have to continuously monitor and update the destination dataset to handle incremental updates in the Salesforce data and synchronize the destination with it. With Airbyte, you get the option to enable change data capture (CDC).

Learn how to enable Salesforce CDC with our detailed guide.
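The core idea behind incremental updates can be illustrated with a cursor-based sketch: remember the latest modification timestamp you have seen and fetch only newer records next time. This is a simplified stand-in rather than CDC itself; it assumes each record carries Salesforce's `SystemModstamp` field as an ISO 8601 string.

```python
from datetime import datetime


def incremental_sync(records, last_cursor: str):
    """Return records modified after last_cursor and the advanced cursor."""
    threshold = datetime.fromisoformat(last_cursor)
    changed = [
        r for r in records
        if datetime.fromisoformat(r["SystemModstamp"]) > threshold
    ]
    if not changed:
        return [], last_cursor  # nothing new: keep the cursor where it was
    newest = max(changed, key=lambda r: datetime.fromisoformat(r["SystemModstamp"]))
    return changed, newest["SystemModstamp"]


source_rows = [
    {"Id": "001A", "SystemModstamp": "2024-05-01T09:00:00+00:00"},
    {"Id": "001B", "SystemModstamp": "2024-05-02T12:30:00+00:00"},
    {"Id": "001C", "SystemModstamp": "2024-05-03T08:15:00+00:00"},
]
changed_rows, cursor = incremental_sync(source_rows, "2024-05-01T23:59:59+00:00")
```

Persisting `cursor` between runs means each sync only moves the records that changed since the previous one.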

Data Transformation Steps

After replicating the data into your preferred destination, you must transform it to make it compatible with the machine learning model you are using. This step requires you to perform complex transformations like tokenization, normalization, and encoding. However, you can skip this step by using Airbyte, as it automates data transformation when storing the data in a vector database.

If you are working on an application other than LLM training with a different destination, you can use dbt. By integrating data build tool (dbt) with Airbyte, you can perform complex transformations on the replicated data.

Quality Checks and Validation

To enhance LLM results, you must implement data quality checks and validation strategies. The better the quality of the data, the better your application's performance in producing unbiased outcomes.

Airbyte provides a schema management feature that lets you outline how it should handle changes in the schema in the source. For cloud users, Airbyte automatically performs source schema checks every 15 minutes, while for the self-managed version, these inspections are performed every 24 hours.
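Conceptually, a schema check compares the field list seen on the last run with the current one. The sketch below shows that comparison on two hypothetical `{field: type}` mappings; it does not reflect how Airbyte implements the feature internally.

```python
def diff_schema(previous: dict, current: dict) -> dict:
    """Compare two {field: type} mappings and report what changed."""
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "retyped": sorted(
            f for f in set(previous) & set(current) if previous[f] != current[f]
        ),
    }


previous_schema = {"Id": "string", "Amount": "number", "Stage": "string"}
current_schema = {"Id": "string", "Amount": "string", "CloseDate": "date"}
changes = diff_schema(previous_schema, current_schema)
print(changes)
```

A removed or retyped field is usually the case that deserves a pause and a review, since downstream transformations may depend on it.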

Error Handling Procedures

Error handling is an essential step that protects the model from getting stalled due to any issues. Methods like exception handling must be employed to auto-manage any challenges that might occur while processing data.

Airbyte offers an automated notification-sharing feature that alerts you if the synchronization process fails. By developing data pipelines with this tool, you can receive notifications via email or Slack about job failures, source schema changes, and connector updates.
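For failures in your own processing code, one common exception-handling pattern is retrying with exponential backoff. The sketch below assumes transient errors; `flaky_sync` is a hypothetical stand-in for a step that sometimes fails.

```python
import time


def with_retries(operation, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run operation(); on failure wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_sync():
    """Hypothetical sync step that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "synced"


delays = []
# Record the delays instead of actually sleeping, to keep the example fast.
result = with_retries(flaky_sync, sleep=delays.append)
```

In production you would let the default `time.sleep` run and typically catch only the exception types you know to be transient.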

LLM Integration Architecture

Choosing an integration architecture and a model are both crucial for building modern LLM applications. This step involves considering the key aspects of an LLM that can handle your specific requirements. Here’s an overview of the architectural decisions to make in order to build a robust tool:

Model Selection Criteria

Selecting a machine learning model depends on your requirements and budget. Two options are readily available: open-source frameworks and cloud-based tools.

Open-source options include Python libraries such as TensorFlow, PyTorch, or Hugging Face’s Transformers. These tools give you the flexibility to create an LLM with Salesforce data without licensing costs, although you still pay for the compute you run them on.

In contrast, cloud-based platforms like AWS, GCP, and Azure offer managed infrastructure at a cost, relieving you of management worries. Depending on your budget and management requirements, you can select any of the cloud tools to create an LLM with Salesforce data.

API Integration Patterns

API stands for Application Programming Interface. It defines how software components communicate with each other.

For LLMs, having a robust API is crucial to integrate the model with other systems and applications, such as ERP, CRM, or chat applications. API integration simplifies data exchange and automation within your organization.

Authentication Flow

Authentication flow involves designing a secure path that authorizes users and services that interact with your LLM. Using LLM gateways and user authentication mechanisms, you can develop systems with enhanced security.

Rate Limiting Considerations

Rate limiting controls the number of API requests a client can make within a specific time frame, and it is an important part of building a reliable LLM application. It protects the service from overload, which keeps latency predictable and optimizes resource utilization.
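One widely used way to implement this is a token bucket, sketched below. The rate and capacity values are arbitrary, and the injectable `clock` parameter is an assumption added to make the example deterministic.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# A fake clock keeps the example deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]  # third request exceeds the burst
fake_now[0] = 1.0  # one second later, one token has been refilled
after_wait = bucket.allow()
```

A request for which `allow()` returns `False` can be rejected, queued, or routed to a fallback response.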

Scalability Planning

There are multiple key points to consider when scaling your LLM. The choice of your model, whether open-source or proprietary cloud applications, defines how scalability is handled. Considering cloud-based applications like AWS, you will have ample scalability features to handle growing user requests automatically. However, open-source tools require you to manually handle scalability using functionalities like sharding and replication.

Fallback Mechanisms

The fallback mechanism enables you to manage and isolate issues without compromising the overall user experience when working with your LLM. By creating effective fallback strategies, you can eliminate potential disruptions and provide an alternative response to users whenever an error occurs. Issues that might lead to the usage of a fallback plan include reaching the rate limit and producing low-confidence responses.
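A fallback wrapper for the two triggers mentioned above might look like this. The `RateLimitError` class, the confidence threshold, and the three stand-in model functions are hypothetical; a real model client would raise its own exception types.

```python
class RateLimitError(Exception):
    """Hypothetical error raised when the model API rejects a request."""


FALLBACK_MESSAGE = "I can't answer that right now. A support agent will follow up."


def answer_with_fallback(question, primary, min_confidence=0.6):
    """Call primary(question) -> (text, confidence); fall back on error or low confidence."""
    try:
        text, confidence = primary(question)
    except RateLimitError:
        return {"answer": FALLBACK_MESSAGE, "source": "fallback", "reason": "rate_limit"}
    if confidence < min_confidence:
        return {"answer": FALLBACK_MESSAGE, "source": "fallback", "reason": "low_confidence"}
    return {"answer": text, "source": "model", "reason": None}


def confident_model(question):
    return ("Your renewal date is June 1.", 0.92)


def unsure_model(question):
    return ("Possibly next quarter?", 0.31)


def throttled_model(question):
    raise RateLimitError("too many requests")


outcomes = [
    answer_with_fallback("When do I renew?", model)["reason"]
    for model in (confident_model, unsure_model, throttled_model)
]
```

Recording the `reason` alongside each fallback makes it possible to see later whether rate limits or weak answers are the more frequent cause.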

Implementing Core Features

Here are a few core features of LLM:

- Query Understanding: The LLM must understand and interpret user intent properly. This involves parsing user queries to identify key components and relevant parameters that can help produce effective responses.

- Context Injection: Passing relevant, information-rich data along with the query enables the model to produce contextually relevant answers. By injecting contextual information, you can enhance the LLM’s capabilities.

- Response Generation: The LLM must generate responses that directly answer the user's question. Training the LLM on a large and varied dataset is crucial for gaining effective results.

- Answer Validation: Before providing the users with the response, the system needs to verify the output is accurate and error-free. This might involve confidence scoring, consistency checking, or applying business rules to the output.

- Error Detection: Identifying issues in the response is critical to maintaining user trust in your model. Implementing user feedback and performing error identification methods like anomaly detection ensures model reliability.

- Confidence Scoring: This feature estimates how likely the model's response is to be accurate. Many models expose token-level probabilities that can be aggregated into a confidence score for an output, providing insights into model performance.
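Two of the features above, context injection and confidence scoring, can be sketched in a few lines. The prompt template is an assumption, and the geometric-mean token probability is just one common heuristic for confidence; it presumes your model returns per-token log-probabilities.

```python
import math


def build_prompt(question: str, records: list, max_records: int = 3) -> str:
    """Inject a few retrieved records into the prompt as context."""
    context_lines = [
        f"- {r['Name']} | stage: {r['StageName']} | amount: {r['Amount']}"
        for r in records[:max_records]
    ]
    return (
        "Answer using only the context below.\n"
        "Context:\n" + "\n".join(context_lines) + "\n"
        f"Question: {question}\nAnswer:"
    )


def confidence_from_logprobs(token_logprobs: list) -> float:
    """Geometric-mean token probability: exp(mean(logprobs)), in (0, 1]."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


# Hypothetical opportunity records retrieved for the question.
opportunities = [
    {"Name": "Acme renewal", "StageName": "Negotiation", "Amount": 12000},
    {"Name": "Globex upsell", "StageName": "Prospecting", "Amount": 4000},
]
prompt = build_prompt("Which deal is closest to closing?", opportunities)
score = confidence_from_logprobs([-0.1, -0.2, -0.3])
print(prompt)
```

A score like this is best treated as a relative signal for ranking or thresholding answers, not as a calibrated probability of correctness.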

Security and Compliance

Here are some of the common security steps:

- Data Encryption: Before you create LLM with Salesforce data, ensure that any personally identifiable information (PII) is encrypted or masked to protect user privacy.

- Access Control: When preparing the data for LLM training, restrict access to the individuals who need it, and enforce proper authorization for viewing and modifying the information.

- Audit Logging: Maintaining logs of who accessed the data and how the model was used supports accountability and facilitates investigations after any breach.

- Compliance Monitoring: Regular monitoring and auditing of the LLM is essential to ensure it is compliant with the ethical guidelines of your organization.

- Data Retention Policies: Establish policies that define how long your organization keeps data and how it is managed across its lifecycle.
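As an illustration of the audit-logging step, the sketch below writes one structured JSON line per data access. The field names are assumptions; adapt them to whatever your logging or SIEM tooling expects.

```python
import json
from datetime import datetime, timezone


def audit_entry(user_id: str, action: str, object_name: str, record_count: int) -> str:
    """Build one JSON audit line describing who did what to which object."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "action": action,
        "object": object_name,
        "record_count": record_count,
    }
    return json.dumps(entry, sort_keys=True)


# Hypothetical event: a user exports 250 Opportunity records for training.
line = audit_entry("analyst-42", "export", "Opportunity", 250)
parsed = json.loads(line)
print(line)
```

One JSON object per line keeps the log easy to append to and easy to search during an investigation.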

Common Use Cases

Let’s explore the common use cases of creating an LLM with Salesforce data.

- Customer Service Automation: The LLM trained on your Salesforce data can analyze user queries and produce effective and relevant responses while being empathetic. By analyzing customers’ tones, your model can label data on an urgency scale, marking high to low customer priority.

- Sales Intelligence: With the help of your LLM, you can automate the creation of marketing campaigns that target specific customers based on past experiences. To enhance business decision-making, the LLM can analyze past trends in the Salesforce data to predict future sales, enabling you to strategize for optimal results.

- Account Management: The model can manage your accounts by automating document drafting using original data. Automatic account management reduces manual intervention in handling different aspects of your Salesforce account, such as creating service agreements.

- Lead Qualification: By processing historical Salesforce data, the model can predict the probability of converting leads into potential customers. It can also help you segment leads into different categories for targeted marketing campaigns.

- Pipeline Analysis: Issues in your sales pipeline that are difficult to track manually become easier to identify with a custom LLM built on Salesforce data. The model can highlight problems in your workflow by detecting anomalies, saving time and resources.

- Report Generation: Using the capabilities of LLM, you can easily visualize market trends, which can help produce market strategies. By painting a visual picture of the data, the business team of your organization can effectively create outlines for better results.

Deployment Strategies

When deploying your model, it becomes crucial to follow certain best practices to enhance operational efficiency. Let’s explore some LLM deployment strategies.

- Staging Environment Setup: A staging environment is a middle ground between the development and production stages. By setting up a staging environment, you can perform thorough tests, quality assurance, and version control operations on your application.

- Production Deployment Steps: In the production environment, you must check for model fairness, transparency, accountability, authenticity, and ethical considerations. The key components of the production environment include easy accessibility, continuous monitoring, and model reliability.

- Rollback Procedures: If an issue occurs, rollback procedures help restore the system to its previous state. They are supported by validation methods, error analysis, and process documentation.

- Monitoring Setup: Creating proper monitoring strategies and feedback loops allows you to continuously improve model performance at every stage.

- Scaling Considerations: Analyze your model's growth according to the workload. Deploying the LLM on cloud-based applications automatically handles scalability, reducing manual intervention.

- Maintenance Planning: If you have chosen custom LLM development, you must map out proper maintenance plans to ensure the application works as expected. This involves monitoring model performance, handling user feedback, and documenting each update.
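The rollback idea from the list above can be reduced to keeping a history of deployed versions. The `ModelRegistry` class below is a hypothetical, in-memory sketch; a real deployment would persist this history and also switch the serving infrastructure.

```python
class ModelRegistry:
    """Track deployed model versions so the previous one can be restored."""

    def __init__(self):
        self._history = []

    @property
    def active(self):
        """The version currently serving traffic, or None if nothing is deployed."""
        return self._history[-1] if self._history else None

    def deploy(self, version: str) -> None:
        self._history.append(version)

    def rollback(self) -> str:
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()  # discard the failing release
        return self.active


registry = ModelRegistry()
registry.deploy("v1.0")
registry.deploy("v1.1")
restored = registry.rollback()
print(restored)
```

Keeping the full history, rather than only the current version, is what makes the rollback a one-step operation.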

Integration with Other Systems

An advantage of creating an LLM using Salesforce data is that you can facilitate cross-platform synergy by integrating different platforms with the LLM. Here are a few beneficial integrations that you can perform:

1. CRM Integration

By synchronizing the model with popular CRM platforms like HubSpot, you can create a unified view of customer interactions. This integration allows you to extract customer data to provide more personalized interactions.

2. Knowledge Base Connection

Connecting your LLM with a knowledge base like Confluence can provide it with the necessary documentation to produce an efficient response. By referring to the knowledge base, LLM can improve customer support.

3. External API Integration

By integrating LLM with external API, you can add real-time data sources to Salesforce, streamlining external operations. This integration allows you to provide Salesforce with real-time data from external sources, such as social media insights and stock prices, for enhanced information availability.

4. Data Warehouse Connection

You can also integrate the model with a data warehouse like Amazon Redshift to magnify analytical workflows further. This combination facilitates the generation of data-driven insights in a centralized environment.

5. Analytics Platform Integration

Integrating analytics platforms like Google Analytics with your LLM enables you to access and act on real-time analytics. The integration can help you improve customer targeting, sentiment analysis, and performance optimization.

6. Reporting Tools

By synchronizing the LLM with a reporting tool like Power BI, you can automate the creation of reports and dashboards from the data. Utilizing these dashboards allows you to streamline complex tasks within your organization.

Conclusion

Following the necessary best practices is a crucial part of creating an LLM with Salesforce data. Although there are multiple ways to create a custom model, you must choose the process that best suits your workflow. If you want full control over the process, you can take the custom route and work in Python. Python libraries and frameworks such as TensorFlow and PyTorch give you high flexibility in LLM development.

However, if you want to simplify the process by reducing the infrastructure overhead, you can select a cloud-based tool like AWS, GCP, or Azure. These tools provide you with all the necessary resources to manage large-scale workloads with ease. No matter what path you choose, following proper model training, security and compliance methods, and deployment strategies will result in effective LLM creation.

Suggested Read: How to Train LLM on Your Own Data