What is Data Automation: Uses & Benefits

The exponential growth of business data presents both unprecedented opportunities and significant challenges for modern organizations. Manual data-management approaches simply cannot scale to handle such massive volumes while maintaining accuracy and timeliness. Smart organizations are turning to data automation as the foundation for transforming raw information into actionable business intelligence that drives competitive advantage.

This comprehensive guide explores how data-process automation can revolutionize your data operations—from basic implementation strategies to advanced methodologies that position your organization for long-term success in the data-driven economy.

What Is Data Automation and Why Does It Matter?

Data automation refers to the strategic use of technology to collect, process, and manage data across your organization without time-consuming manual interventions, which create bottlenecks and introduce errors. Automating these steps improves operational efficiency and keeps data accurate and consistent as volumes grow.

Modern data-process automation extends beyond simple task execution to encompass intelligent workflows that adapt to changing business conditions. By implementing automated data processes, organizations can allocate human resources strategically toward high-value analytical work while ensuring critical data operations continue seamlessly around the clock.

The strategic importance of data automation becomes evident when considering the compound effect of manual processes on business agility. Organizations relying on manual data handling face operational overhead that grows in step with data volume, while automated systems absorb growth with comparatively small additional resource requirements. This scalability difference often determines which organizations can successfully leverage data as a competitive advantage.

Understanding what data automation encompasses helps organizations recognize opportunities for transformation across their entire data lifecycle. From initial data collection through final business intelligence delivery, automation touches every aspect of modern data operations.

What Are the Key Components of Data Process Automation?

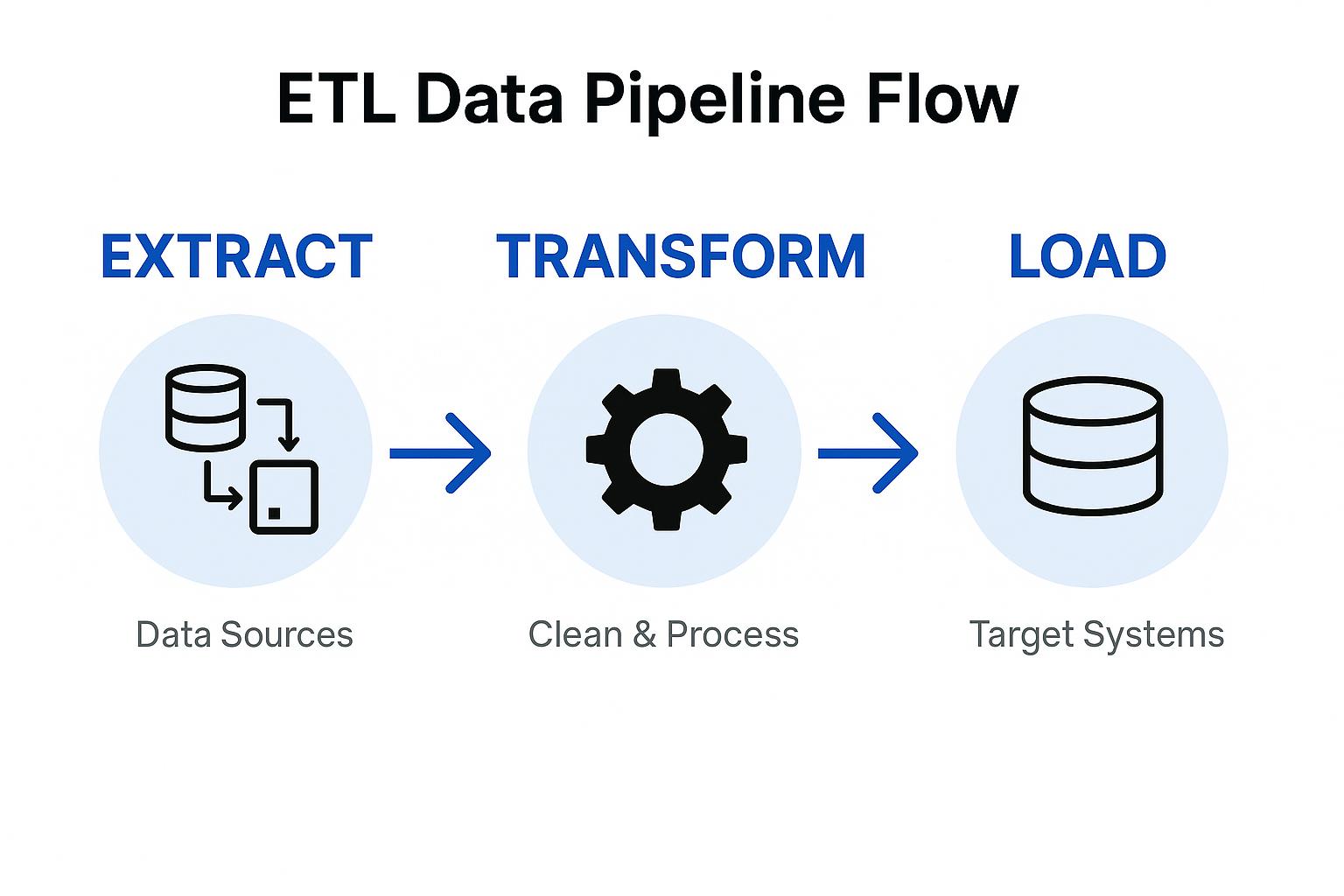

The foundation of effective data automation rests on three core components that work together to create seamless data workflows. Each component serves a critical role in transforming raw data into business-ready information.

- Extract – Collect data from diverse sources, including databases, APIs, social platforms, IoT devices, and enterprise apps, while maintaining data lineage and secure access.

- Transform – Clean, restructure, and enrich data to ensure compatibility with downstream systems and analytical requirements, applying data quality rules and, where useful, ML-based anomaly detection.

- Load – Write transformed data to target destinations such as cloud data warehouses or analytical platforms, choosing layouts such as partitioning for query performance and retaining historical versions where needed.

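These components form the ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) backbone that enables organizations to move from manual data handling to automated data operations. The two patterns differ only in ordering: ETL transforms data before loading it, while ELT loads raw data first and transforms it inside the destination, typically a cloud data warehouse.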
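To make the three stages concrete, here is a minimal ETL sketch using only the Python standard library. The CSV export, the `customers` table, and the "email is required" rule are hypothetical, and an in-memory SQLite database stands in for a cloud data warehouse. In an ELT setup, the `transform` logic would instead run as SQL inside the destination after loading.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a source system (e.g. a CRM CSV dump).
RAW_CSV = """id,email,signup_date,plan
1, Alice@Example.com ,2024-01-05,pro
2,bob@example.com,2024-01-07,FREE
3,,2024-01-09,pro
"""


def extract(raw: str) -> list[dict]:
    """Extract: parse the source export into one dictionary per row."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows without an email and normalize case and whitespace."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # a simple quality rule: email is required
        cleaned.append((int(row["id"]), email, row["signup_date"], row["plan"].strip().lower()))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: upsert rows into the destination table and return its row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT, plan TEXT)"
    )
    # INSERT OR REPLACE keys on the primary key, so re-running the load is idempotent.
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load(transform(extract(RAW_CSV)), conn))  # prints 2
```

Keeping each stage a separate function makes it easy to schedule, test, and swap them independently, which is what automation tooling builds on.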

What Are the Primary Benefits of Implementing Data Automation?

Data automation delivers transformative benefits that extend far beyond simple efficiency gains. Organizations implementing comprehensive automation strategies experience fundamental improvements in their data operations and business capabilities.

Scalability That Grows with Your Business

Manual data-processing capacity grows only as fast as headcount, so costs rise roughly in proportion to data volume. Automated pipelines can absorb large increases in volume with comparatively small increases in cost. This scalability advantage becomes increasingly important as organizations expand their data footprint and analytical requirements.

Accelerated Data-Driven Decision Making

Real-time, automated data pipelines can cut time-to-insight from weeks to hours or less, enabling agile responses to market changes. Business teams gain access to current information that supports rapid decision-making and competitive positioning.

Superior Data Quality and Consistency

Automation applies validation, standardization, and data observability checks consistently, significantly reducing human error. Automated quality controls substantially enhance data reliability across downstream analytical processes, though some human oversight remains necessary.
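As an illustration of consistent, rule-based validation, the sketch below checks every row against the same rules and routes failures to a rejected list instead of silently dropping them, which leaves room for the human oversight mentioned above. The field names, allowed country codes, and rules are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Deliberately simple email pattern; real pipelines may need stricter checks.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (row, reasons) pairs for human review


def validate(rows, allowed_countries=frozenset({"US", "DE", "FR"})) -> ValidationReport:
    """Apply the same hypothetical rules to every row and collect all failure reasons."""
    report = ValidationReport()
    for row in rows:
        reasons = []
        if not EMAIL_RE.match(str(row.get("email") or "")):
            reasons.append("invalid email")
        if row.get("country") not in allowed_countries:
            reasons.append("unknown country code")
        amount = row.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            reasons.append("amount must be a non-negative number")
        if reasons:
            report.rejected.append((row, reasons))
        else:
            report.valid.append(row)
    return report


rows = [
    {"email": "a@example.com", "country": "US", "amount": 10.0},
    {"email": "bad", "country": "XX", "amount": -1},
]
report = validate(rows)
print(len(report.valid), len(report.rejected))  # prints: 1 1
```

Only `report.valid` would continue downstream; `report.rejected` keeps every reason so a reviewer can see why a row was held back.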

Operational Efficiency and Resource Optimization

Automation typically eliminates a significant share of manual data-preparation time, allowing teams to focus on high-value analytical work. This resource reallocation enables data teams to concentrate on strategic initiatives rather than routine maintenance tasks.

The compound effect of these benefits creates sustainable competitive advantages for organizations that successfully implement data automation. Teams become more productive, decisions become more informed, and operations become more reliable.

How Are Different Industries Leveraging Data Automation?

Industry applications of data automation demonstrate the versatility and impact of these technologies across diverse business contexts. Each sector faces unique data challenges that automation addresses through tailored solutions.

What Advanced Methodologies Can Enhance Your Data Automation Strategy?

Advanced methodologies build upon basic automation foundations to create sophisticated data operations that support complex business requirements. These approaches integrate best practices from software engineering and operations management.

DataOps: Bringing DevOps Principles to Data Operations

DataOps applies automation, monitoring, and collaboration across the data lifecycle, leveraging data orchestration and automated quality management. This methodology emphasizes continuous integration and deployment practices adapted for data workflows.

DataOps implementations focus on version control for data pipelines, automated testing for data quality, and collaborative development practices that enable multiple team members to contribute safely to data operations. These practices reduce deployment risks while accelerating development cycles.
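One concrete DataOps practice is treating transformation logic like application code: keep it under version control and run automated tests on every change before it is deployed. The sketch below uses Python's built-in `unittest`. The `normalize_phone` transformation and its 10-digit rule are hypothetical examples.

```python
import unittest
from typing import Optional


def normalize_phone(raw: str) -> Optional[str]:
    """Hypothetical transformation under test: keep digits, require exactly 10 of them."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading US country code
    return digits if len(digits) == 10 else None


class NormalizePhoneTest(unittest.TestCase):
    def test_strips_formatting(self):
        self.assertEqual(normalize_phone("(555) 123-4567"), "5551234567")

    def test_drops_country_code(self):
        self.assertEqual(normalize_phone("+1 555 123 4567"), "5551234567")

    def test_rejects_short_numbers(self):
        self.assertIsNone(normalize_phone("123-45"))
```

In a CI pipeline, a file like this would run with `python -m unittest` on each commit, so a change that breaks the transformation fails the build instead of reaching production data.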

MLOps: Industrializing Machine-Learning Operations

MLOps standardizes model development, deployment, and monitoring—including automated retraining and feature engineering—to keep ML applications performing at scale. This discipline extends automation beyond traditional data processing into predictive analytics and artificial intelligence.

MLOps practices ensure machine learning models remain accurate and relevant as business conditions change. Automated model monitoring detects performance degradation, while automated retraining processes maintain model effectiveness without manual intervention.
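A minimal sketch of automated degradation detection follows. It assumes ground-truth labels eventually arrive for each prediction, and it tracks rolling accuracy over a fixed window, calling a retraining hook when accuracy falls below a threshold. The window size, threshold, and `retrain` callback are hypothetical; production MLOps setups usually also monitor input drift, which this sketch omits.

```python
from collections import deque
from typing import Callable


class ModelMonitor:
    """Tracks rolling accuracy on labeled feedback and triggers retraining on degradation."""

    def __init__(self, retrain: Callable[[], None], window: int = 100, threshold: float = 0.85):
        self.retrain = retrain  # hypothetical hook, e.g. one that starts a training job
        self.threshold = threshold
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = incorrect

    def record(self, prediction, actual) -> None:
        self.outcomes.append(1 if prediction == actual else 0)
        # Only judge a full window, so a few early errors do not trigger a retrain.
        if len(self.outcomes) == self.outcomes.maxlen and self.accuracy() < self.threshold:
            self.retrain()
            self.outcomes.clear()  # start a fresh window for the retrained model

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0


retrain_calls = []
monitor = ModelMonitor(retrain=lambda: retrain_calls.append("retrain"), window=10, threshold=0.8)
for i in range(10):
    monitor.record(prediction=1, actual=1 if i < 7 else 0)  # 7 of 10 correct
print(retrain_calls)  # prints ['retrain']
```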

These methodologies represent the evolution of data automation from simple task automation to comprehensive operational frameworks that support enterprise-scale data operations.

How Does Real-Time Data Processing Transform Modern Automation?

Real-time processing capabilities transform data automation from batch-oriented operations to continuous, event-driven systems that respond immediately to changing business conditions. This transformation enables new categories of applications and business processes.

Event-Driven Automation Architectures

Real-time data streaming and event-driven automation enable instant reactions to fraud, capacity changes, or customer behavior. These architectures process data as it arrives rather than waiting for scheduled batch processing windows.

Event-driven systems can react to business events within milliseconds to seconds, enabling applications like real-time personalization, instant fraud detection, and immediate inventory updates. This responsiveness creates competitive advantages in customer experience and operational efficiency.
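The core of the event-driven pattern is that handlers subscribe to event types and run as each event arrives, rather than waiting for a batch window. The in-process sketch below shows only that pattern; in production the bus would typically be a message broker such as Apache Kafka. The `EventBus` class and the "flag payments above 10,000" fraud rule are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus: handlers run as each event is published."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)  # event type -> list of handlers

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: dict) -> None:
        for handler in self._handlers[event["type"]]:
            handler(event)


alerts: list[str] = []


def flag_large_payment(event: dict) -> None:
    # Hypothetical fraud rule: flag any single payment above 10,000.
    if event["amount"] > 10_000:
        alerts.append(f"review payment {event['id']}")


bus = EventBus()
bus.subscribe("payment", flag_large_payment)
for evt in [
    {"type": "payment", "id": "p1", "amount": 120},
    {"type": "payment", "id": "p2", "amount": 25_000},
    {"type": "login", "id": "l1"},  # no handler subscribed, so nothing happens
]:
    bus.publish(evt)
print(alerts)  # prints ['review payment p2']
```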

Streaming Technology Integration

Technologies such as Apache Kafka, Apache Flink, and Apache Spark (Structured Streaming), or managed services like Amazon Kinesis and Azure Stream Analytics, bring low-latency analytics into existing automation workflows. These platforms handle high-volume, continuous data streams while maintaining consistency and reliability.

Streaming integration enables organizations to combine real-time data with historical information, creating comprehensive views that support both immediate decision-making and long-term trend analysis. This capability bridges the gap between operational and analytical data systems.
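Stream processors such as Flink and Spark provide windowing natively; the plain-Python sketch below only illustrates the idea. It groups live events into fixed, non-overlapping (tumbling) windows and compares each window's counts with a historical baseline, which in practice might be computed from warehouse data. The `(timestamp_seconds, key)` event shape, the baseline values, and the 2x factor are hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Group (timestamp, key) events into fixed, non-overlapping windows and count per key."""
    counts = defaultdict(lambda: defaultdict(int))
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        counts[window_start][key] += 1
    return {w: dict(c) for w, c in counts.items()}


def compare_to_history(window_counts, historical_avg, factor=2.0):
    """Flag keys whose live count exceeds `factor` times their historical per-window average."""
    flagged = []
    for window_start, per_key in sorted(window_counts.items()):
        for key, count in per_key.items():
            baseline = historical_avg.get(key)
            if baseline is not None and count > factor * baseline:
                flagged.append((window_start, key, count))
    return flagged


events = [(0, "checkout"), (10, "checkout"), (20, "checkout"), (30, "search"), (65, "checkout")]
windows = tumbling_window_counts(events)  # {0: {'checkout': 3, 'search': 1}, 60: {'checkout': 1}}
print(compare_to_history(windows, {"checkout": 1.0, "search": 5.0}))  # prints [(0, 'checkout', 3)]
```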

How Can You Successfully Implement Data Process Automation?

Successful implementation requires systematic planning and execution across technical and organizational dimensions. Following proven methodologies increases the likelihood of successful outcomes while minimizing risks and disruptions.

1. Define Clear Objectives and Success Metrics

Establish specific, measurable goals for your automation initiative. Identify the business processes that will benefit most from automation and define metrics that will demonstrate success.

2. Assess Your Current Data Ecosystem

Catalog existing data sources, systems, and workflows to understand integration requirements. This assessment reveals dependencies and constraints that influence implementation approaches.

3. Design Comprehensive Integration Workflows

Map data flows from sources through transformations to final destinations. Consider error handling, monitoring, and recovery procedures as part of workflow design.
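Error handling and recovery can be designed in from the start. A common building block is retrying transient failures, such as a timed-out source API, with exponential backoff, while letting non-transient errors fail immediately so monitoring can alert on them. The sketch below assumes `ConnectionError` and `TimeoutError` are the transient cases; `run_with_retries` and `flaky_extract` are hypothetical names.

```python
import logging
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("pipeline")


def run_with_retries(step: Callable[[], T], attempts: int = 4, base_delay: float = 1.0,
                     retry_on: tuple = (ConnectionError, TimeoutError)) -> T:
    """Run a pipeline step, retrying transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except retry_on as exc:
            if attempt == attempts:
                log.error("step failed after %d attempts: %s", attempts, exc)
                raise  # surface the failure to monitoring and alerting
            # Delays grow as base, 2*base, 4*base, ... plus random jitter.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            time.sleep(delay)
    raise RuntimeError("unreachable")


calls = {"n": 0}


def flaky_extract():
    # Hypothetical source call that times out twice before succeeding.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source API timed out")
    return ["row1", "row2"]


result = run_with_retries(flaky_extract, base_delay=0.01)
print(result)  # prints ['row1', 'row2'] after three attempts
```

Jitter spreads out retries from many pipelines that fail at the same moment, so they do not all hit a recovering source simultaneously.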

4. Specify Required Data Transformations

Document data quality rules, standardization requirements, and business logic that must be applied during processing. This specification guides tool selection and implementation decisions.
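One way to keep that specification and the implementation from drifting apart is to write the rules as data and apply them with a small generic engine, so the spec itself can be reviewed and versioned. The column names, mapping, and date format below are hypothetical.

```python
from datetime import datetime

# Hypothetical transformation spec: documented once, applied by a generic engine.
TRANSFORM_SPEC = {
    "email": {"steps": ["strip", "lower"]},
    "country": {"steps": ["strip", "upper"], "map": {"UK": "GB"}},
    "order_date": {"parse_date": "%d/%m/%Y"},  # assume the source sends day/month/year
}

STEP_FUNCS = {"strip": str.strip, "lower": str.lower, "upper": str.upper}


def apply_spec(row: dict, spec: dict = TRANSFORM_SPEC) -> dict:
    """Return a copy of `row` with each column's documented rules applied in order."""
    out = dict(row)
    for column, rules in spec.items():
        value = out.get(column)
        if value is None:
            continue  # column absent in this row
        for step in rules.get("steps", []):
            value = STEP_FUNCS[step](value)
        value = rules.get("map", {}).get(value, value)  # standardize known variants
        if "parse_date" in rules:
            value = datetime.strptime(value, rules["parse_date"]).date().isoformat()
        out[column] = value
    return out


cleaned = apply_spec({"email": " Ann@Example.COM", "country": "uk ", "order_date": "03/02/2024"})
print(cleaned)  # prints {'email': 'ann@example.com', 'country': 'GB', 'order_date': '2024-02-03'}
```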

5. Select Appropriate Automation Tools and Platforms

Choose tools that align with your technical requirements and organizational capabilities. Consider platforms like Airbyte for data integration, Apache Airflow for orchestration, and dbt for in-warehouse transformation; together they support comprehensive automation workflows.

6. Implement Automated Scheduling and Monitoring

Establish scheduling frameworks that handle dependencies and resource management. Implement comprehensive monitoring that tracks performance, quality, and availability.
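Handling dependencies means a task runs only after everything it depends on has finished. Orchestrators such as Apache Airflow model this as a directed acyclic graph (DAG); the sketch below shows just the dependency-resolution idea using the standard library's `graphlib` (Python 3.9+), not Airflow's API. The task names are hypothetical, and time-based triggering is left out.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_crm": set(),
    "extract_billing": set(),
    "load_raw": {"extract_crm", "extract_billing"},
    "transform_models": {"load_raw"},
    "quality_checks": {"transform_models"},
    "refresh_dashboards": {"quality_checks"},
}


def run_pipeline(graph: dict, run_task) -> list:
    """Run tasks in dependency order; tasks in the same ready batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies contain a cycle
    completed = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies of these tasks are done
        for task in ready:
            run_task(task)
            completed.append(task)
            sorter.done(task)
    return completed


order = run_pipeline(PIPELINE, run_task=lambda name: print("running", name))
```

Here both extract tasks become ready together, which is where a real scheduler would run them in parallel before `load_raw` starts.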

7. Establish Performance Measurement and Optimization

Create feedback loops that enable continuous improvement of automated processes. Regular performance review and optimization ensure automation continues delivering value as requirements evolve.
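A feedback loop starts with recording the same metrics for every run. The sketch below logs duration and row counts per pipeline and flags a run that is much slower than the median of earlier runs. The `MetricsLog` class, the 1.5x factor, and the pipeline name are hypothetical; a production setup would ship these metrics to a monitoring system instead of keeping them in memory.

```python
import time
from dataclasses import dataclass
from statistics import median


@dataclass
class RunMetric:
    pipeline: str
    seconds: float
    rows: int


class MetricsLog:
    """Collects per-run metrics so performance regressions show up as trends."""

    def __init__(self) -> None:
        self.runs: list[RunMetric] = []

    def timed_run(self, pipeline: str, step) -> int:
        start = time.perf_counter()
        rows = step()  # assume the step returns the number of rows it processed
        self.runs.append(RunMetric(pipeline, time.perf_counter() - start, rows))
        return rows

    def is_slower_than_usual(self, pipeline: str, factor: float = 1.5) -> bool:
        """True if the latest run took more than `factor` times the median of earlier runs."""
        history = [r.seconds for r in self.runs if r.pipeline == pipeline]
        if len(history) < 2:
            return False  # nothing to compare against yet
        return history[-1] > factor * median(history[:-1])


metrics = MetricsLog()
metrics.timed_run("daily_sales", lambda: 1_000)  # hypothetical step returning rows processed
print(metrics.is_slower_than_usual("daily_sales"))  # prints False: only one run so far
```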

What Challenges Should You Expect When Implementing Data Automation?

Understanding common challenges helps organizations prepare for successful automation implementations. Proactive planning addresses these issues before they become obstacles to progress.

Data quality and consistency issues often surface during automation implementation because manual processes may have masked underlying data problems. Establishing data quality standards and validation procedures addresses these challenges systematically.

Legacy system integration complexity requires careful planning and often involves building custom connectors or adapters. Modern automation platforms provide tools and frameworks that simplify legacy integration while maintaining security and reliability.

Scalability and performance optimization become critical as automated systems handle increasing data volumes. Cloud-native architectures and auto-scaling capabilities address scalability requirements while managing costs effectively.

Security and compliance requirements must be built into automation systems from the beginning rather than added later. Comprehensive security frameworks ensure automated processes meet regulatory and organizational requirements.

Initial investment and resource allocation require careful justification and planning. Organizations should plan for both technology costs and team training to ensure successful adoption.

Organizational change management addresses the human aspects of automation implementation. Training programs and change management processes help teams adapt to new workflows and responsibilities.

How Can Airbyte Streamline Your Data Automation Workflows?

Airbyte provides comprehensive data integration capabilities that form the foundation of effective automation workflows. The platform eliminates the complexity and cost barriers that prevent organizations from implementing scalable data automation.

Comprehensive Integration Capabilities

- 600+ Pre-built Connectors – Use a connector library covering databases, APIs, cloud services, and enterprise applications without custom development overhead.

- Change Data Capture (CDC) – Replicate inserts, updates, and deletes from source databases incrementally, keeping data current across systems in near real time and supporting event-driven automation workflows.

- Connector Development Kit – Rapidly create custom connectors for specialized data sources and requirements.
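Airbyte's CDC connectors handle change capture and delivery for you; the sketch below only illustrates what "applying captured changes" means at the destination. It replays change events in source-log order and upserts or deletes rows by primary key. The event format (`op`, `id`, `data`, `lsn`) is a simplified, hypothetical assumption, not Airbyte's or Debezium's actual record format.

```python
def apply_changes(target: dict, changes: list) -> dict:
    """Apply change events to a target table keyed by primary key.

    Each event is a hypothetical, simplified dict:
    {"op": "insert" | "update" | "delete", "id": <primary key>, "data": {...}, "lsn": <log position>}
    """
    for event in sorted(changes, key=lambda e: e["lsn"]):  # replay in source log order
        if event["op"] == "delete":
            target.pop(event["id"], None)
        elif event["op"] in ("insert", "update"):
            # Upsert: merge the changed columns into the current row, if any.
            target[event["id"]] = {**target.get(event["id"], {}), **event["data"]}
        else:
            raise ValueError(f"unknown operation: {event['op']}")
    return target


table = {1: {"name": "Ada", "plan": "free"}}
changes = [
    {"op": "update", "id": 1, "data": {"plan": "pro"}, "lsn": 101},
    {"op": "insert", "id": 2, "data": {"name": "Lin", "plan": "free"}, "lsn": 102},
    {"op": "delete", "id": 2, "data": {}, "lsn": 103},
]
result = apply_changes(table, changes)
print(result)  # prints {1: {'name': 'Ada', 'plan': 'pro'}}
```

Ordering by log position matters: applying the delete before the insert would leave a row that no longer exists at the source.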

Enterprise-Grade Platform Features

Deploy via Airbyte Open Source, Airbyte Cloud, or Airbyte Self-Managed Enterprise depending on your security, compliance, and operational requirements. Each deployment option provides the same core functionality while addressing different organizational needs.

SOC 2 and GDPR compliance help organizations ensure their automated data processes meet regulatory requirements across industries and jurisdictions. Built-in security features protect data throughout the integration process.

Cloud-native, auto-scaling architecture handles growing data volumes without manual intervention. The platform scales automatically to meet demand while optimizing resource utilization and costs.

Developer-Friendly Integration Approaches

Choose from UI-based configuration, API automation, Terraform Provider infrastructure management, or PyAirbyte Python integration depending on your team's preferences and requirements.

Modern ELT architecture with dbt integration enables transformation workflows that leverage cloud data warehouse capabilities for optimal performance and cost management.

Built-in monitoring and observability provide comprehensive visibility into data pipeline performance, quality, and reliability. Automated alerting ensures issues are addressed quickly before they impact business operations.

Airbyte serves as a replacement for expensive legacy integration platforms while providing superior flexibility and control. Organizations reduce integration costs while improving deployment speed and operational reliability.

Conclusion

Data-process automation represents a strategic imperative for organizations seeking to transform growing data volumes into competitive advantages. By implementing systematic automation approaches and leveraging modern platforms, businesses eliminate manual bottlenecks while enhancing data quality and generating real-time insights that drive superior decision-making. The methodologies and implementation strategies outlined in this guide provide a roadmap for successful automation initiatives that scale with business growth. Organizations that embrace comprehensive data automation position themselves to thrive in the data-driven economy while their competitors struggle with manual processes and operational limitations.

Start your automation journey today to unlock the full potential of your data assets.

FAQs

How Can You Ensure Data Quality While Implementing Automation?

Implement automated profiling, quality scoring, and anomaly detection with clear thresholds and continuous monitoring. Establish validation rules that prevent poor-quality data from entering downstream systems while providing alerts for quality issues that require attention.
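A simple example of threshold-based anomaly detection is comparing each load's row count with recent history and alerting when it deviates by more than a set number of standard deviations. This is a plain statistical check rather than an ML model, and the history values and 3-sigma threshold below are hypothetical.

```python
from statistics import mean, stdev


def row_count_anomaly(history: list[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag the latest load if its row count lies more than `z_threshold` sample
    standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly stable history: any change is unusual
    return abs(latest - mu) / sigma > z_threshold


history = [1000, 1020, 980, 1010, 990]  # hypothetical daily row counts
print(row_count_anomaly(history, 400))   # prints True: far below normal volume
print(row_count_anomaly(history, 1025))  # prints False: within normal variation
```

In a pipeline, a `True` result would typically block the load from reaching downstream tables and raise an alert for someone to investigate.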

What's the Difference Between Data Orchestration and Automation?

Data orchestration coordinates workflow sequencing and dependencies, while automation removes manual tasks; modern data operations combine both. Orchestration manages the timing and coordination of automated processes to ensure reliable execution across complex data workflows.

How Does Real-Time Processing Differ From Traditional Batch Automation?

Real-time processing handles data continuously for immediate action; batch automation processes data on set schedules for efficiency. Real-time systems respond to events as they occur, while batch systems optimize resource utilization by processing data in scheduled intervals.

What Role Does Machine Learning Play in Modern Data Automation?

ML powers intelligent data-quality checks, anomaly detection, and self-optimizing pipelines, operationalized through MLOps practices. Machine learning enables automation systems to adapt and improve over time while detecting patterns that human operators might miss.

How Do You Choose the Right Data Automation Platform for Your Organization?

Evaluate platforms based on connector availability, scalability requirements, security features, deployment flexibility, and total cost of ownership. Consider factors like existing technical expertise, integration requirements, and long-term strategic goals when making platform decisions.
