What is Data Exploration: Techniques & Best Practices

✨ AI Generated Summary

Data exploration is essential for understanding dataset characteristics, identifying patterns, and improving decision-making by using statistical and visualization techniques. Key methods include descriptive statistics, cluster and correlation analysis, outlier detection, and Pareto analysis, often enhanced by AI-powered tools that automate insights and enable natural language queries.

- Challenges include time/resource constraints, technical complexity, scalability, and security concerns.

- Best practices emphasize clear objectives, appropriate technique selection, automation, collaboration, and robust data governance.

- Platforms like Airbyte facilitate data integration, improving exploration by consolidating diverse sources securely and efficiently.

Data is widely used in fields ranging from business and healthcare to science and technology. However, making full use of large datasets can be hindered by challenges such as storage limitations, data quality issues, security breaches, and a lack of expertise in analyzing complex datasets.

These factors can lead to missed business opportunities and flawed conclusions. Data exploration helps you address several of these challenges, particularly data quality issues and an incomplete understanding of your data.

Data exploration lets you examine your datasets and their characteristics carefully before you use them for analysis and decision-making. This article covers data exploration in depth, with techniques and best practices to help you uncover valuable insights from your datasets.

What Is Data Exploration and Why Does It Matter?

Data exploration is the practice of reviewing dataset characteristics such as structure, size, data types, and accuracy before performing data analysis. It involves using statistical techniques and visualization tools to thoroughly understand datasets before drawing key insights.

By exploring your data, you uncover hidden patterns and trends within massive datasets. This knowledge assists you in making informed decisions for your enterprise's growth. Additionally, data exploration saves valuable time by identifying and eliminating redundant aspects of your data beforehand, allowing you to focus on critical factors.

Modern Tools for Data Exploration

To streamline your data exploration process, various software solutions are available. Popular options include traditional tools like Excel and Tableau, libraries like pandas, and emerging AI-powered platforms like ThoughtSpot and Powerdrill AI. These solutions help automate parts of the exploration process with interactive, visual, and statistical analysis features.

Why Is Data Exploration Critical for Your Organization?

- Understanding Your Data: Data exploration helps you delve into the intricacies of your datasets. You gain an in-depth knowledge of data structure, data types, and size. This understanding enables you to effectively organize and prepare data for further processing, such as data integration or transformation.

- Visualizing Data: It can be hard to draw meaningful insights from vast amounts of data arranged in rows and columns. Data visualization tools make the output of data exploration easier to interpret. You can use tools like Power BI or Tableau to turn raw data into clear, interactive reports that help you spot hidden patterns and identify crucial insights.

- Driving Business Growth: After initial data exploration, you can use methods like Exploratory Data Analysis (EDA) to dig deeper into trends related to business-critical factors such as sales figures, customer behavior patterns, or financial metrics. This data-driven approach shows you where your business leads and where it lags, so you can focus improvement efforts where they matter most.

What Are the Essential Data Exploration Techniques You Should Master?

Mastering key data exploration techniques empowers you to extract meaningful insights from your datasets. These fundamental approaches form the foundation of effective data analysis and help you understand complex data patterns.

Descriptive Statistics

Descriptive statistics summarize dataset parameters and help identify data trends and patterns. They measure central tendency (mean, median, mode), dispersion (range, standard deviation, variance), and distribution shape (skewness, kurtosis).

For example, you can use descriptive statistics to gain insight into customer behavior and identify potential risk factors for churn. This information can then inform targeted customer-retention strategies. Modern AI-powered tools can automatically generate these statistics and provide contextual explanations, making this technique accessible to non-technical users.
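As a minimal sketch, the measures above can be computed with Python's standard `statistics` module. The `describe` helper and the spending figures are hypothetical; skewness and kurtosis use the simple population-moment formulas, and the data is assumed to contain at least two distinct values.

```python
import statistics

def describe(values):
    """Summarize a numeric sample: central tendency, dispersion, and shape."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)  # population standard deviation (assumes non-constant data)
    # Population-moment skewness and excess kurtosis (both 0 for a normal distribution)
    skewness = sum((x - mean) ** 3 for x in values) / (n * sd ** 3)
    excess_kurtosis = sum((x - mean) ** 4 for x in values) / (n * sd ** 4) - 3
    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "range": max(values) - min(values),
        "variance": statistics.pvariance(values),
        "std_dev": sd,
        "skewness": skewness,
        "excess_kurtosis": excess_kurtosis,
    }

# Hypothetical monthly spend (in dollars) for seven customers
monthly_spend = [20, 35, 35, 40, 50, 65, 120]
for name, value in describe(monthly_spend).items():
    print(f"{name:>16}: {value:.2f}")
```

Here the single high spender pulls the mean (about 52) above the median (40) and produces a positive skew, the kind of signal worth checking before building churn or retention segments. In pandas, `Series.describe()`, `Series.skew()`, and `Series.kurt()` give similar summaries, although pandas applies sample-size corrections, so its values differ slightly on small samples.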

Cluster Analysis

Cluster analysis groups similar data points into clusters. Besides pattern recognition, it aids in data compression and building machine-learning models. These clusters can then be used, for instance, to target customers by gender or location.

Advanced clustering techniques incorporate machine learning to help choose the number of clusters (for example, by comparing silhouette scores across candidate values) and to handle high-dimensional data more effectively. Neural network techniques such as autoencoders can learn compact representations of complex data, which makes clustering more tractable for unstructured data from multiple sources.

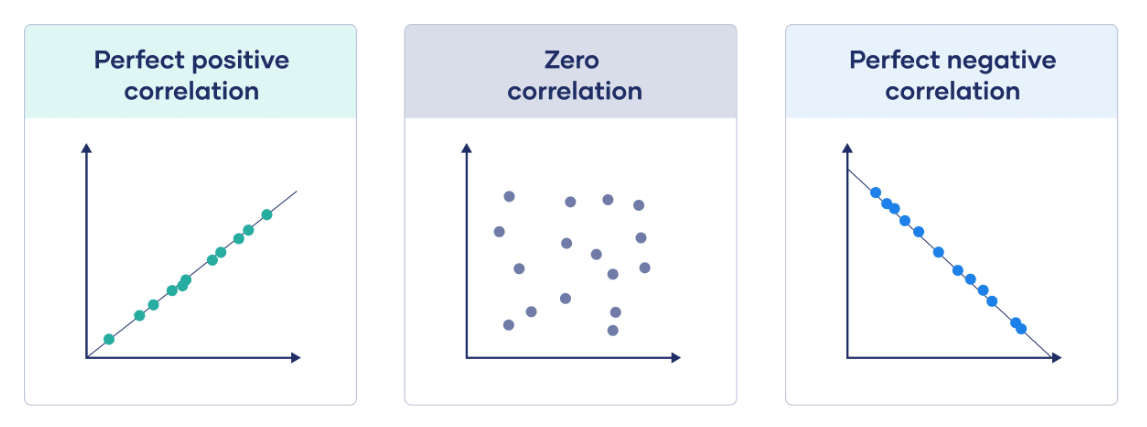
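The article does not name a specific clustering algorithm. As one common choice, here is a minimal k-means (Lloyd's algorithm) sketch in plain Python for two numeric features; the customer data and feature meanings are hypothetical, and production work would more typically use a library implementation such as scikit-learn's `KMeans`.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on 2-D points; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                xs, ys = zip(*cluster)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments are stable, so the algorithm has converged
        centroids = new_centroids
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Hypothetical customers: (visits per month, average basket size in tens of dollars)
customers = [(1, 2), (1.5, 1.8), (2, 2.2), (8, 8), (8.5, 9), (9, 8.2)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

Which group receives label 0 depends on the random initialization, but the two groups of customers always end up in separate clusters for this data. Note that `k` is fixed up front here; real data rarely separates this cleanly.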

Correlation Analysis

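Correlation analysis reveals relationships between two or more variables. It is commonly measured with the Pearson, Spearman, or Kendall coefficient, each ranging from –1 to 1. Pearson measures linear relationships, while Spearman and Kendall are rank-based and capture any monotonic relationship. The sign shows the direction of the relationship, and a higher absolute value indicates a stronger correlation.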

For example, you might analyze the relationship between the time a customer spends on a product page and the likelihood of adding that product to their cart. Correlation analysis has also evolved to include causal discovery methods that help distinguish correlation from causation, providing more actionable insights for business decision-making.

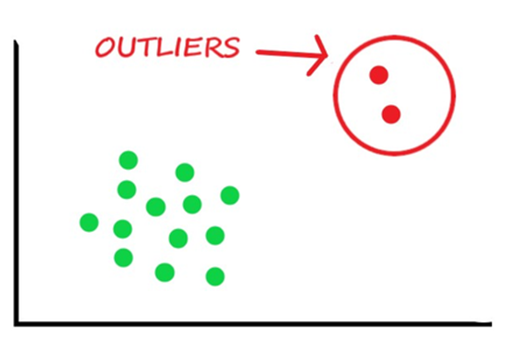
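A minimal sketch of the Pearson and Spearman coefficients in plain Python, applied to hypothetical page-time and add-to-cart data. Spearman is computed as Pearson on ranks, with tied values given their average rank; Kendall's tau is not shown. Both functions assume neither input is constant.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient: strength of a linear relationship."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        avg = (i + j) / 2 + 1  # average of the 1-based positions i..j
        for pos in range(i, j + 1):
            result[order[pos]] = avg
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks (monotonic relationships)."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: seconds spent on a product page vs. add-to-cart rate
seconds_on_page = [10, 20, 30, 40, 50]
add_to_cart_rate = [0.01, 0.02, 0.04, 0.08, 0.16]
print(f"Pearson:  {pearson(seconds_on_page, add_to_cart_rate):.3f}")
print(f"Spearman: {spearman(seconds_on_page, add_to_cart_rate):.3f}")
```

Spearman reports a perfect 1.000 because the rate rises monotonically, while Pearson is lower because the rise is exponential rather than linear. In pandas, `DataFrame.corr(method=...)` accepts `"pearson"`, `"spearman"`, or `"kendall"`.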

Outlier Detection

Outliers are data points that deviate significantly from others. Detection methods include Z-score, Interquartile Range, K-Nearest Neighbors (KNN), and Local Outlier Factor (LOF). For instance, analyzing aircraft sensor data can reveal abnormal spikes or dips that signal potential malfunctions.

AI-powered anomaly detection systems now provide real-time monitoring capabilities, automatically flagging unusual patterns as they occur and providing explanations for why certain data points are considered outliers.
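Two of the methods listed above, Z-score and Interquartile Range, fit in a few lines of standard-library Python. This is a minimal sketch; the helper names, thresholds (3 standard deviations, 1.5 × IQR), and sensor readings are hypothetical defaults rather than recommendations.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [x for x in values if abs(x - mean) / sd > threshold]

def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in values if x < low or x > high]

# Hypothetical sensor readings: a stable signal with one abnormal spike
readings = [98, 99, 100, 101, 102] * 4 + [140]
print("Z-score outliers:", zscore_outliers(readings))  # → [140]
print("IQR outliers:    ", iqr_outliers(readings))     # → [140]
```

The Z-score rule assumes roughly normal data, and in small samples an extreme value can inflate the standard deviation enough to hide itself; the IQR rule is less sensitive to this. For neighbor-based methods such as LOF, scikit-learn provides `LocalOutlierFactor`.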

Pareto Analysis

Pareto analysis helps identify the factors that have the greatest impact on your outcome, following the 80/20 rule: the observation that roughly 80% of effects often come from about 20% of causes. A Pareto chart combines bars sorted by individual impact with a line showing cumulative impact.

Contemporary Pareto analysis leverages automated data processing to continuously update priority rankings as new data becomes available. This dynamic approach helps organizations maintain focus on the most impactful factors even as business conditions change.

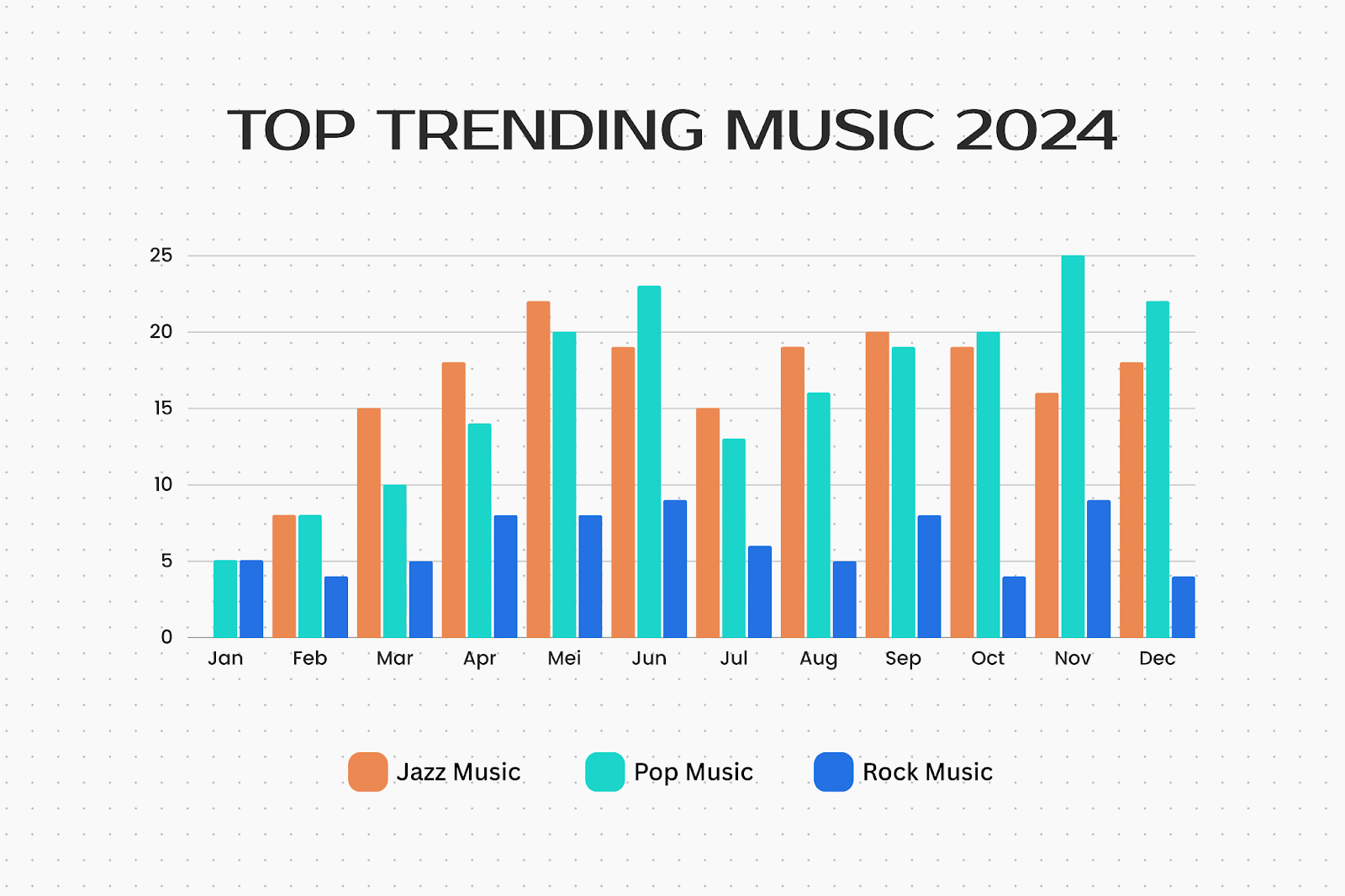
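The numbers behind a Pareto chart can be sketched in plain Python: sort factors by impact, accumulate their share of the total, and keep the leading factors needed to reach a cutoff. The `pareto` helper, the 80% cutoff, and the complaint counts are hypothetical.

```python
def pareto(impacts, cutoff=0.8):
    """Rank factors by impact and compute each one's cumulative share.

    Returns (rows, vital_few): rows are (factor, impact, cumulative_share)
    tuples in descending order of impact; vital_few is the smallest leading
    set of factors whose cumulative share reaches `cutoff`.
    """
    total = sum(impacts.values())
    rows, vital_few = [], []
    running = 0
    for factor, impact in sorted(impacts.items(), key=lambda kv: kv[1], reverse=True):
        if running / total < cutoff:  # cutoff not yet reached, so this factor is needed
            vital_few.append(factor)
        running += impact
        rows.append((factor, impact, running / total))
    return rows, vital_few

# Hypothetical count of customer complaints by cause
complaints = {"late delivery": 120, "damaged item": 45, "wrong size": 20,
              "billing error": 10, "other": 5}
rows, vital_few = pareto(complaints)
for factor, count, cumulative in rows:
    print(f"{factor:<14} {count:>4}  {cumulative:6.1%}")
print("Vital few:", vital_few)  # → Vital few: ['late delivery', 'damaged item']
```

In a Pareto chart, the count column becomes the bars and the cumulative column becomes the line. Rerunning the function as new data arrives is one simple way to keep the ranking current.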

Visualization Techniques

Combining descriptive statistics with visualizations enables comprehensive data understanding. Common visualization types include bar charts, pie charts, radar charts, and histograms.

Modern visualization techniques include interactive dashboards that update in real time, allowing users to explore data dynamically. Graph-based visualizations are particularly valuable for understanding complex relationships in interconnected data.

AI-generated visualizations can automatically select the most appropriate chart types based on data characteristics and analysis objectives.
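Behind a histogram is a simple computation: split the value range into equal-width bins and count the values in each. A minimal sketch, with a hypothetical helper and order values, that prints a text histogram instead of relying on a charting library (matplotlib's `pyplot.hist` performs the same binning and draws the chart):

```python
def histogram(values, bins=5):
    """Count values in `bins` equal-width bins spanning min(values)..max(values)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1  # avoid zero width when all values are equal
    counts = [0] * bins
    for x in values:
        index = min(int((x - lo) / width), bins - 1)  # the maximum lands in the last bin
        counts[index] += 1
    edges = [lo + i * width for i in range(bins + 1)]
    return edges, counts

# Hypothetical order values in dollars
order_values = [12, 15, 18, 22, 25, 25, 31, 34, 38, 45, 52, 78]
edges, counts = histogram(order_values, bins=4)
for i, count in enumerate(counts):
    print(f"{edges[i]:6.1f}-{edges[i + 1]:6.1f} | {'#' * count}")
```

The resulting shape (most orders in the lowest bin, a long tail to the right) is the same skew that descriptive statistics would report numerically.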

How Is AI Transforming Data Exploration Practices?

Artificial intelligence is revolutionizing data exploration by automating traditionally manual processes and uncovering insights that might be missed through conventional analysis. AI-powered data exploration represents a paradigm shift from reactive to proactive analysis, where systems can generate hypotheses and identify patterns without explicit human direction.

Generative AI for Automated Insights

Modern AI tools can automatically analyze datasets and generate comprehensive reports highlighting key findings, anomalies, and trends. Platforms like ThoughtSpot's Sage and Powerdrill AI leverage large language models to create natural language summaries of complex data patterns. These systems can process vast amounts of information quickly and present findings in easily digestible formats, significantly reducing the time data professionals spend on initial exploration phases.

For example, an AI system might automatically detect that customer churn rates spike during specific months and correlate this with external factors like seasonal trends or marketing campaign timing. The AI can then generate hypotheses about causation and suggest further investigation areas.

Conversational Data Analysis

Natural language interfaces are making data exploration accessible to non-technical users. You can now ask questions like "What factors most influence customer satisfaction scores?" or "Show me sales trends by region over the past year" and receive comprehensive analyses complete with visualizations and statistical insights.

These conversational systems translate business questions into appropriate statistical methods and data queries, broadening access to advanced analytics capabilities. Observable Notebooks enable collaborative exploration where multiple stakeholders can contribute questions and insights in real time, while RATH focuses on automated data analysis and visualization for individuals or teams.

Automated Feature Engineering and Discovery

AI systems can automatically identify relevant features and create new variables that improve analysis quality. Machine learning algorithms can discover non-obvious relationships between variables and generate composite features with better predictive power than individual variables.

This automation extends to data quality assessment, where AI can identify inconsistencies, missing values, and potential data integrity issues across massive datasets. The systems can then recommend appropriate cleaning and preprocessing steps, significantly reducing manual data preparation time.
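AI tools automate this at scale, but the underlying check is simple. A minimal sketch of per-column missing-value profiling over hypothetical dict records; treating absent keys, `None`, and empty strings as missing is an assumption you would adjust to your data (in pandas, `DataFrame.isna().sum()` gives per-column null counts).

```python
def profile_missing(records, columns):
    """Return {column: (missing_count, missing_share)} for a list of dict records.

    A value counts as missing if the key is absent, None, or an empty string.
    Zero and other falsy values are treated as real data.
    """
    n = len(records)
    report = {}
    for column in columns:
        missing = sum(1 for record in records if record.get(column) in (None, ""))
        report[column] = (missing, missing / n)
    return report

# Hypothetical customer records pulled from two systems
customers = [
    {"id": 1, "email": "a@example.com", "region": "EU", "age": 34},
    {"id": 2, "email": "", "region": "US", "age": None},
    {"id": 3, "email": "c@example.com", "region": None},
    {"id": 4, "email": "d@example.com", "region": "US", "age": 0},
]
for column, (count, share) in profile_missing(customers, ["email", "region", "age"]).items():
    print(f"{column:<8} missing: {count} ({share:.0%})")
```

Reporting the share alongside the count makes it easier to decide whether a column needs imputation, an alternative source, or removal from the analysis.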

What Strategies Help You Identify and Address Critical Data Gaps?

Data gaps represent one of the most significant challenges in effective data exploration, occurring when essential information is missing, outdated, or insufficient for comprehensive analysis. Recognizing and addressing these gaps is crucial for maintaining data integrity and ensuring that exploration efforts lead to actionable insights.

Systematic Gap Identification Methods

Effective gap identification begins with stakeholder engagement to understand what data should theoretically exist versus what is actually available. You should conduct comprehensive data audits that map expected data elements against actual data availability, identifying temporal gaps, categorical gaps, and resolution gaps.
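Parts of such an audit can be scripted by comparing what should exist with what actually exists. A minimal sketch, assuming daily granularity and a known list of expected categories (the helpers and data are hypothetical), that surfaces temporal and categorical gaps; resolution gaps would need a separate check on data granularity.

```python
from datetime import date, timedelta

def missing_dates(observed, start, end):
    """Calendar days in [start, end] that have no observed record (temporal gaps)."""
    seen = set(observed)
    gaps = []
    day = start
    while day <= end:
        if day not in seen:
            gaps.append(day)
        day += timedelta(days=1)
    return gaps

def missing_categories(observed, expected):
    """Expected category values that never appear in the data (categorical gaps)."""
    return sorted(set(expected) - set(observed))

# Hypothetical audit: daily sales records and the regions they should cover
sales_dates = [date(2024, 1, d) for d in (1, 2, 5, 6, 7)]
sales_regions = ["EU", "US", "US", "EU", "APAC"]
print(missing_dates(sales_dates, date(2024, 1, 1), date(2024, 1, 7)))
print(missing_categories(sales_regions, ["EU", "US", "APAC", "LATAM"]))
```

Here the audit reports January 3 and 4 as missing days and LATAM as a region with no records at all.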

Statistical approaches can help identify gaps by analyzing data distribution patterns and detecting anomalies that suggest missing information. For instance, if customer demographic data shows unusual concentrations in certain age groups while others are sparsely represented, this might indicate systematic data collection issues rather than actual population characteristics.
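The age-group example can be checked by comparing observed group shares against a reference distribution. A minimal sketch; the reference shares, the 0.5 tolerance, and the data are hypothetical, and a chi-square goodness-of-fit test (for example `scipy.stats.chisquare`) is a more formal alternative.

```python
from collections import Counter

def representation_gaps(observed, reference_shares, tolerance=0.5):
    """Flag groups whose share in the data is below `tolerance` times their
    share in a reference population. Returns {group: (observed_share, reference_share)}."""
    counts = Counter(observed)
    n = len(observed)
    flagged = {}
    for group, expected in reference_shares.items():
        actual = counts.get(group, 0) / n
        if actual < tolerance * expected:
            flagged[group] = (actual, expected)
    return flagged

# Hypothetical age bands in a customer table vs. hypothetical reference shares
age_bands = ["18-34"] * 60 + ["35-54"] * 35 + ["55+"] * 5
reference = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
print(representation_gaps(age_bands, reference))  # → {'55+': (0.05, 0.35)}
```

A flagged group is a prompt to investigate collection processes, not proof of a gap: the customer base may genuinely differ from the reference population.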

Documentation review is equally important, as comparing current data availability against original collection specifications can reveal where data sources have degraded over time or where collection processes have changed without proper documentation updates.

Bridging Data Gaps Through Strategic Approaches

Once gaps are identified, several strategies can help address them effectively. Data fusion techniques allow you to combine information from multiple sources to create more complete datasets.

For example, combining internal customer transaction data with external demographic databases can fill missing customer profile information.
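A minimal sketch of that kind of fusion, assuming both sources share a `customer_id` key (the helper, field names, and records are hypothetical). Internal values take precedence and external data only fills gaps; pandas offers a similar operation in `DataFrame.combine_first`, which treats only nulls, not empty strings, as missing.

```python
def fuse(internal, external, key="customer_id"):
    """Fill gaps in internal records using an external source joined on `key`.

    Internal values always take precedence; external values only fill fields
    that are absent, None, or empty in the internal record.
    """
    lookup = {record[key]: record for record in external}
    fused = []
    for record in internal:
        merged = dict(record)  # copy so the input is not mutated
        for field, value in lookup.get(record[key], {}).items():
            if merged.get(field) in (None, ""):
                merged[field] = value
        fused.append(merged)
    return fused

# Hypothetical internal customer profiles and external demographic data
internal = [
    {"customer_id": 1, "total_spend": 540, "city": "Berlin", "age_band": None},
    {"customer_id": 2, "total_spend": 120, "city": "", "age_band": "35-54"},
]
external = [
    {"customer_id": 1, "city": "Munich", "age_band": "18-34"},
    {"customer_id": 2, "city": "Lyon", "age_band": "55+"},
]
for row in fuse(internal, external):
    print(row)
```

When the two sources disagree (Berlin vs. Munich above), this sketch keeps the internal value; in practice such conflicts are worth logging and reviewing rather than silently resolving.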

Proxy variable development involves identifying alternative measurements that can substitute for missing direct measurements. If specific customer satisfaction scores are unavailable, you might use customer service interaction frequency or product return rates as proxy indicators.

Collaborative data sharing initiatives can help organizations pool resources to address common data gaps. Industry associations, government agencies, and research institutions often maintain datasets that can supplement organizational data when proper agreements are established.

Implementing Gap Prevention Strategies

Proactive gap prevention requires establishing robust data governance frameworks that include regular data quality monitoring and systematic collection process reviews. You should implement automated data validation rules that flag potential gaps as they occur rather than discovering them during analysis phases.
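A minimal sketch of rule-based validation applied to each record as it arrives, so gaps are flagged at ingestion rather than during analysis. The schema, rules, and one-day freshness window are hypothetical; in practice such checks usually live in a pipeline or data-quality tool.

```python
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("customer_id", "amount", "event_time")  # hypothetical schema

def validate(record, now, max_age=timedelta(days=1)):
    """Return a list of problems found in one incoming record (empty if it passes).

    Illustrative checks: required fields, a non-negative amount, and freshness
    relative to `now` so that delayed or stalled feeds surface early.
    """
    problems = [f"missing {field}" for field in REQUIRED_FIELDS
                if record.get(field) in (None, "")]
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("negative amount")
    event_time = record.get("event_time")
    if isinstance(event_time, datetime) and now - event_time > max_age:
        problems.append("stale record")
    return problems

now = datetime(2024, 1, 8, 12, 0)
incoming = [
    {"customer_id": 1, "amount": 25.0, "event_time": datetime(2024, 1, 8, 9, 30)},
    {"customer_id": None, "amount": -5, "event_time": datetime(2024, 1, 8, 10, 0)},
    {"customer_id": 3, "amount": 40.0, "event_time": datetime(2024, 1, 5, 8, 0)},
]
for record in incoming:
    print(record.get("customer_id"), validate(record, now))
```

Passing `now` explicitly, instead of reading the clock inside the function, keeps the checks deterministic and easy to test.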

Cross-functional collaboration between data teams and operational departments helps ensure that data collection processes align with analytical needs. Regular stakeholder feedback sessions can identify emerging data requirements before gaps become critical issues.

Investment in data infrastructure improvements often provides long-term gap prevention benefits. Modern data integration platforms can help maintain data consistency across sources and provide real-time monitoring of data availability and quality metrics.

What Are the Main Challenges You'll Face in Data Exploration?

Understanding common data exploration challenges helps you prepare effective strategies to overcome them. These obstacles can significantly impact your exploration outcomes if not properly addressed.

- Time and Resource Constraints: The iterative nature of exploring large datasets can be slow, particularly when dealing with complex data structures or when multiple exploration cycles are required to uncover meaningful patterns. Organizations often underestimate the time and computational resources required for thorough exploration.

- Technical Complexity: Choosing appropriate techniques becomes more difficult as the number of available methods grows, and selecting unsuitable methods may lead to incorrect conclusions. Effective exploration demands a solid grasp of statistics and domain knowledge, along with an understanding of emerging AI capabilities.

- Scalability and Integration Issues: Modern datasets often exceed the capacity of traditional tools, requiring distributed computing approaches. Multiple data sources with different formats and quality levels increase exploration difficulty.

- Security and Compliance Concerns: Exploration can expose data to unauthorized access without proper controls, especially in cloud-based environments. Organizations must balance accessibility with security requirements, particularly when dealing with sensitive information.

What Best Practices Should Guide Your Data Exploration Approach?

- Clarity of Objectives: Define exploration goals upfront to ensure focused analysis and efficient resource allocation. Clear objectives help determine appropriate techniques and success metrics.

- Choose the Right Techniques: Align methods with objectives, data type, and available resources. Consider both traditional statistical approaches and modern AI-powered alternatives based on your specific requirements.

- Leverage Automation: Automate repetitive tasks, including data collection, cleaning, and transformation. Modern platforms can handle routine exploration tasks, freeing human analysts to focus on interpretation and strategic insights.

- Invest in Essential Resources: Allocate budget for appropriate tools, skilled staff, and necessary infrastructure. This includes both technical resources and training to keep teams current with evolving methodologies.

- Secure Your Data: Implement comprehensive access controls and encryption protocols. Establish clear data handling procedures that maintain security throughout the exploration process.

- Maintain Documentation: Record each step for reproducibility and error tracing. This includes documenting data sources, transformation steps, analytical choices, and findings to support future analysis and validation.

- Seek Feedback: Review findings with colleagues and domain experts to validate insights and identify potential blind spots. Cross-functional collaboration often reveals perspectives that purely technical analysis might miss.

- Collaborate With Experts: Engage domain specialists and experienced analysts to gain diverse perspectives and improve problem-solving effectiveness. This collaboration is particularly valuable when working with complex or unfamiliar data types.

- Implement Quality Controls: Establish systematic validation processes to verify data quality and analytical accuracy throughout the exploration process. Regular quality checks help maintain confidence in exploration results.

How Can Airbyte Enhance Your Data Exploration Efforts?

When your data resides in a unified and standard format, you can greatly improve data exploration outcomes. Data integration consolidates information from multiple sources into a single destination, creating the comprehensive datasets necessary for effective exploration.

Airbyte is a modern data-integration platform offering comprehensive capabilities for data consolidation and preparation.

Key Airbyte Features for Data Exploration

Conclusion

Data exploration uncovers patterns, correlations, and anomalies that drive informed decision-making across organizations. The field continues evolving with AI-powered automation, real-time processing capabilities, and sophisticated gap identification strategies that enhance analytical outcomes. By mastering core techniques, acknowledging modern challenges, and following established best practices, you can unlock the full potential of your organizational data. Success in data exploration requires balancing statistical rigor with emerging AI capabilities while maintaining data security and quality standards through comprehensive tools like Airbyte.

Frequently Asked Questions

What Is Data Exploration and How Does It Benefit Organizations?

Data exploration is the systematic process of examining dataset characteristics, structure, and quality before performing detailed analysis. It involves using statistical techniques and visualization tools to understand data patterns, identify anomalies, and uncover relationships between variables. Organizations benefit from data exploration by gaining deeper insights into their data assets, improving decision-making quality, and identifying opportunities for business growth through data-driven strategies.

Which Visualization Tools Can You Use for Data Exploration?

You can use libraries like Matplotlib and Seaborn, or comprehensive platforms like Power BI and Tableau, for traditional visualization needs. Modern options include AI-powered platforms like ThoughtSpot for advanced interactive exploration. Observable Notebooks provide highly interactive, collaborative environments to which users can add AI capabilities themselves, while RATH offers AI-assisted automated analysis, though its features are less widely documented. The choice depends on your technical expertise, collaboration requirements, and the complexity of your datasets.

What Is the Difference Between Data Discovery and Data Exploration?

Data exploration involves understanding dataset characteristics, structure, and quality as a preliminary step in the analysis process. Data discovery, on the other hand, uses already curated and prepared data to solve specific business problems and generate actionable insights. Exploration is typically an initial step within the broader data discovery workflow.

How Has AI Changed Data Exploration Practices?

AI has revolutionized data exploration by automating hypothesis generation, providing natural language interfaces for non-technical users, and detecting complex patterns that might be missed through manual analysis. Modern AI systems can automatically generate insights, suggest relevant visualizations, and identify anomalies in real time, significantly reducing the time and expertise required for effective data exploration.

What Should You Do When Critical Data Is Missing from Your Exploration?

When encountering missing data, first identify the scope and nature of the gaps through systematic auditing. Use strategies like proxy variables, data fusion from multiple sources, or statistical imputation methods to address missing information. Establish robust data governance processes to prevent future gaps and consider collaborative partnerships to access external datasets that can supplement your internal data sources.
