What Is Data Flow Architecture: Behind-the-Scenes & Examples

✨ AI Generated Summary

Data flow architecture models how data moves and transforms through system components, with key patterns including Batch Sequential, Pipe-and-Filter, and Process Control, each suited for different processing needs. Advantages include modularity, concurrency, and flexibility, while challenges involve latency, maintenance complexity, and system stability. Effective implementation relies on choosing appropriate tools (e.g., Apache Kafka, Airbyte, Snowflake) and addressing data consistency, security, and performance balancing.

- Batch Sequential: simple, linear, but high latency

- Pipe-and-Filter: supports parallelism and modularity, harder to debug

- Process Control: ideal for continuous monitoring, sensitive to component failures

- Common patterns: Lambda (batch + real-time), Kappa (streaming only), Event-Driven, Microservices

- Key tools: streaming (Kinesis, Kafka, Spark), ETL (Airbyte), data warehousing (Redshift, BigQuery), orchestration (Airflow, Prefect)

- Security essentials: encryption, RBAC, PII masking, audit logging

Data flow is an abstraction in computing that describes how data travels between the nodes and modules of your system's architecture. Understanding data-flow architecture helps you optimize system performance and process data across distributed systems.

This article highlights the concept of data-flow architecture, its pros and cons, patterns, tools, and common pitfalls.

What Is Data Flow Architecture?

Data-flow architecture is a schematic representation of how data moves within your system. In this architecture, the software system is modeled as a series of transformations applied to successive sets of input data.

The data flows through different transformation modules until it reaches the output. This approach enables systematic processing where each component has a specific role in handling and transforming data.

How Does Data Flow Architecture Work Behind the Scenes?

Data-flow architecture is commonly divided into three execution sequences that determine how data moves through your system: Batch Sequential, Pipe-and-Filter, and Process Control.

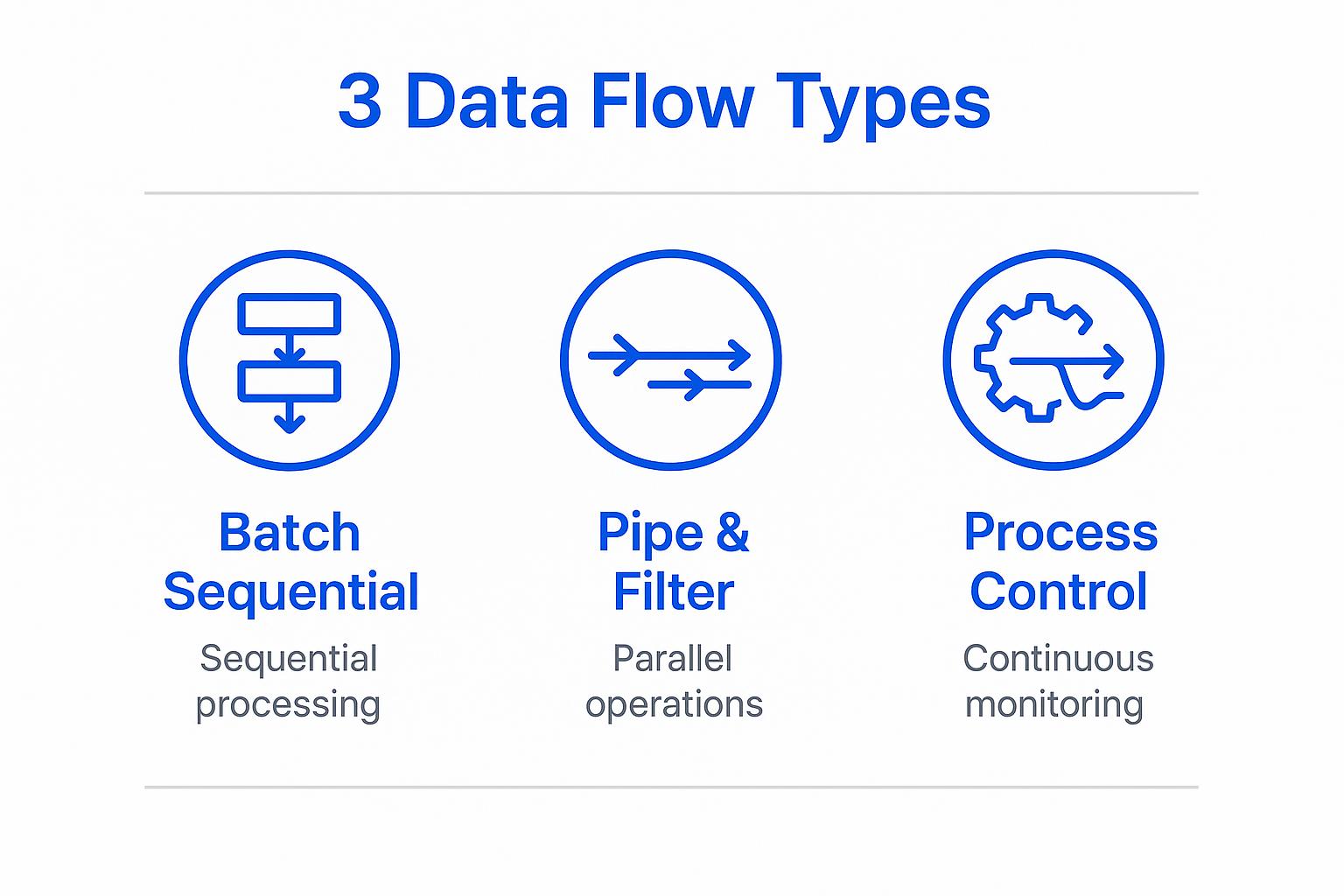

Batch Sequential

A traditional model in which each data-transformation subsystem starts only after the previous subsystem completes. Data, therefore, moves in batches from one subsystem to another.

This sequential approach gives every stage a complete, stable input, which helps preserve data integrity, but it can create bottlenecks in processing speed. Each stage must wait for the previous stage to finish completely before beginning its work.
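As a rough illustration of the Batch Sequential model, the sketch below runs three stages one after another, each receiving the complete output of the previous one. The stage names (`extract`, `validate`, `transform`) and the sample records are hypothetical and only stand in for real subsystems.

```python
from typing import Callable

Batch = list[dict]
Stage = Callable[[Batch], Batch]


def extract(_: Batch) -> Batch:
    # Hypothetical source; a real stage would read a file or a table.
    return [
        {"id": 1, "amount": "10.5"},
        {"id": 2, "amount": "n/a"},
        {"id": 3, "amount": "4"},
    ]


def _is_number(text: str) -> bool:
    try:
        float(text)
        return True
    except ValueError:
        return False


def validate(batch: Batch) -> Batch:
    # Drop rows whose amount cannot be parsed as a number.
    return [row for row in batch if _is_number(row["amount"])]


def transform(batch: Batch) -> Batch:
    # Convert the amount field from text to float.
    return [{**row, "amount": float(row["amount"])} for row in batch]


def run_batch_sequential(stages: list[Stage]) -> Batch:
    batch: Batch = []
    for stage in stages:
        # The next stage starts only after this call returns the whole batch.
        batch = stage(batch)
    return batch


result = run_batch_sequential([extract, validate, transform])
print(result)
```

Because every stage materializes its full output before handing it on, total latency is roughly the sum of all stage durations, which is the bottleneck described above.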

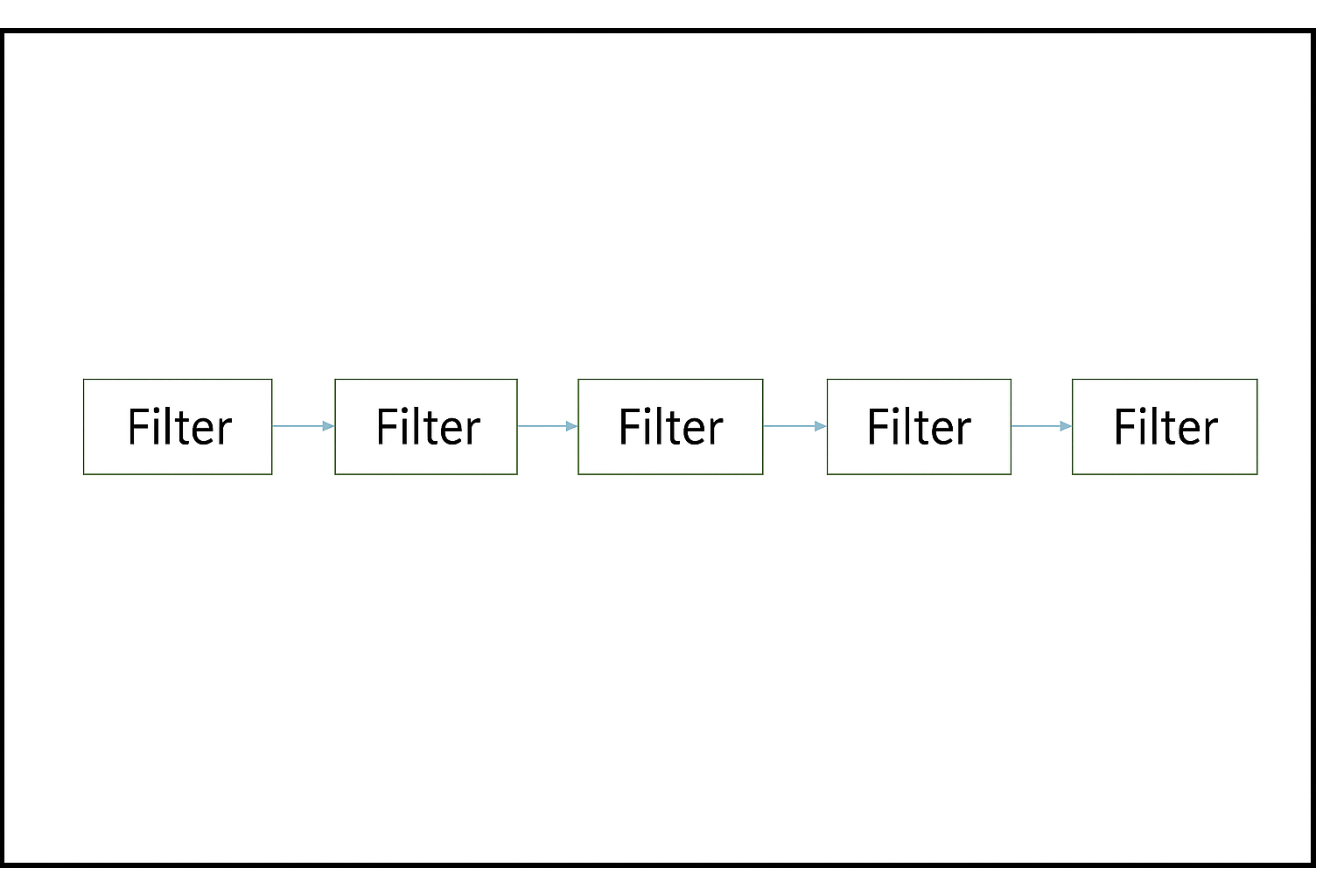

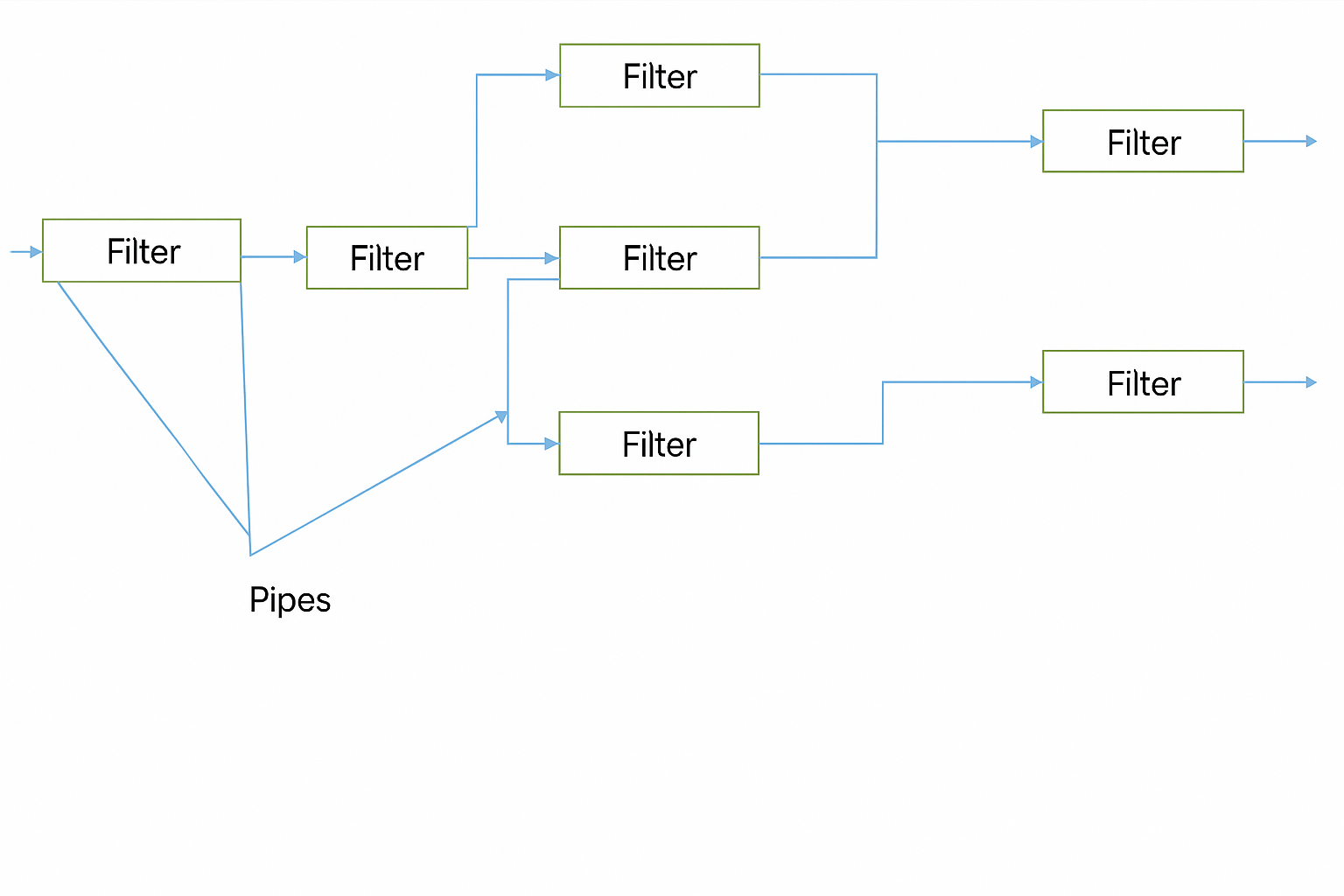

Pipe and Filter Flow

Pipe-and-filter architecture arranges a system into two main components:

- Filters: Components that process, transform, and refine incoming data.

- Pipes: Unidirectional data streams that transfer data between filters without applying logic.

This pattern allows for modular design where each filter performs a specific transformation. Pipes serve as connectors that enable data to flow smoothly between processing stages without interference.
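One way to picture filters and pipes is with Python generators: each generator function acts as a filter, and the iterator passed between them plays the role of a unidirectional pipe that carries data without applying logic. This is a minimal sketch; the filter names and sample input are assumptions made for illustration.

```python
from typing import Callable, Iterable, Iterator


def parse(lines: Iterable[str]) -> Iterator[int]:
    # Filter 1: turn non-empty text lines into integers.
    for line in lines:
        line = line.strip()
        if line:
            yield int(line)


def keep_even(numbers: Iterable[int]) -> Iterator[int]:
    # Filter 2: pass through even numbers only.
    for n in numbers:
        if n % 2 == 0:
            yield n


def square(numbers: Iterable[int]) -> Iterator[int]:
    # Filter 3: transform each number.
    for n in numbers:
        yield n * n


def pipeline(source: Iterable, *filters: Callable[[Iterable], Iterator]) -> Iterable:
    # Connect filters in order; each iterator is the pipe to the next filter.
    stream = source
    for f in filters:
        stream = f(stream)
    return stream


output = list(pipeline(["1", "2", "", "4 "], parse, keep_even, square))
print(output)
```

Generators are lazy, so each item travels through all filters before the next item is read from the source. Filters can also be reordered or replaced without touching the others, which reflects the modularity the pattern is known for.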

Process Control

Unlike Batch Sequential and Pipe-and-Filter, Process Control architecture splits the system into a processing unit and a controller unit. The processing unit changes the process's control variables, while the controller unit calculates how much change to apply.

Common use cases include embedded systems, nuclear-plant management, and automotive systems such as anti-lock brakes. This architecture excels in environments requiring continuous monitoring and adjustment.
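The split between the two units can be sketched as a small feedback loop. The example below assumes a simple proportional controller, which is only one of many possible control algorithms; the function names, the gain of 0.5, and the temperature-like values are all hypothetical.

```python
def controller(setpoint: float, measured: float, gain: float = 0.5) -> float:
    """Controller unit: calculate how much change to apply."""
    return gain * (setpoint - measured)


def process(value: float, adjustment: float) -> float:
    """Processing unit: apply the change to the controlled variable."""
    return value + adjustment


def run_loop(setpoint: float, start: float, steps: int) -> list[float]:
    value = start
    history = [value]
    for _ in range(steps):
        # Measure, compute the correction, then apply it.
        value = process(value, controller(setpoint, value))
        history.append(value)
    return history


history = run_loop(setpoint=20.0, start=10.0, steps=5)
print(history)
```

With a gain of 0.5, the remaining error halves on every iteration. Swapping in a different `controller` function changes the control algorithm without modifying `process`.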

What Are the Advantages and Disadvantages of Data Flow Architecture?

Understanding the benefits and limitations helps you make informed decisions about implementing data flow architecture in your systems.

Advantages

- Batch Sequential is easy to manage because the data follows a simple, linear path. This predictability makes debugging and maintenance straightforward for development teams.

- Pipe-and-Filter allows both sequential and parallel operations, enabling concurrency and high throughput. You can process multiple data streams simultaneously while maintaining system modularity.

- Process Control allows you to update control algorithms without disrupting the entire system. This flexibility enables continuous improvement and adaptation to changing requirements.

Disadvantages

- Batch Sequential suffers from high latency because each stage must finish processing the entire batch before the next stage starts. This limitation can impact real-time processing requirements and overall system responsiveness.

- Pipe-and-Filter can be hard to maintain; it lacks dynamic interaction and may introduce transformation overhead. Complex filter chains can become difficult to debug when issues arise.

- Process Control makes it difficult to handle unexpected disturbances caused by malfunctioning components. System stability depends heavily on the reliability of individual control elements.

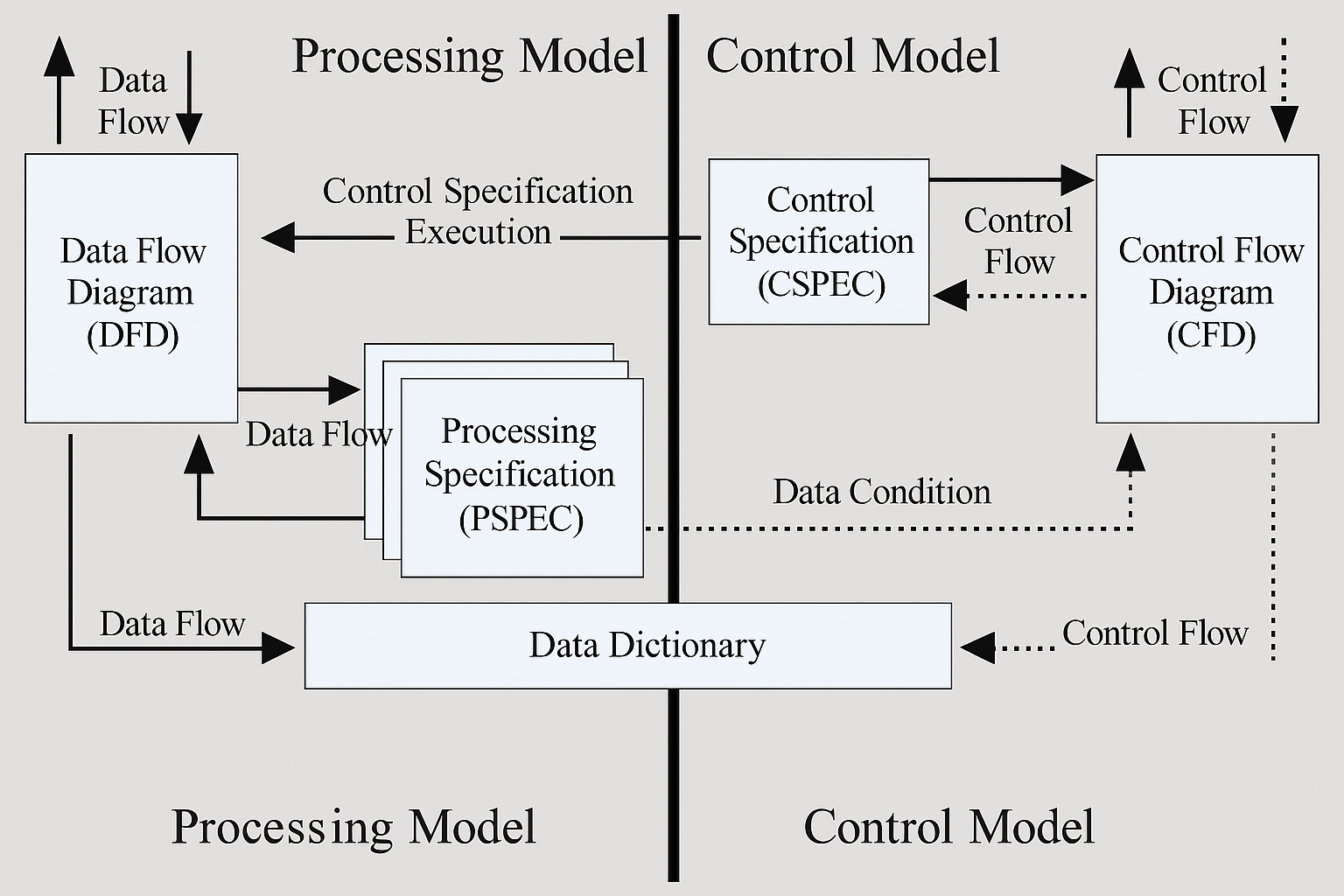

How Does Control Flow Differ from Data Flow Architecture?

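The distinction between these two approaches affects how you design and implement your systems. Data flow architecture focuses on how data moves and transforms through a system, while control flow architecture centers on the sequence and conditions under which operations execute.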

What Are the Most Common Data-Flow Architectural Patterns?

These patterns provide proven approaches for implementing data flow architecture in different scenarios:

- Lambda: A big-data model that combines batch and real-time processing. It has three layers: batch, speed, and serving, providing scalability and fault tolerance. This pattern handles both historical data analysis and real-time stream processing. The serving layer merges results from both batch and speed layers to provide comprehensive data views.

- Kappa: A simplified model focused purely on stream processing; all data is treated as streams. This approach eliminates the complexity of maintaining separate batch and streaming codebases. Kappa architecture processes both real-time and historical data through the same streaming pipeline. This unification reduces operational complexity and development overhead.

- Event-Driven: Applications react to system, user, or external events. Decoupled components improve flexibility and scalability. Events trigger specific actions or workflows within the system. This pattern enables loose coupling between components and supports asynchronous processing.

- Microservices: An application is split into small, autonomous services communicating over well-defined APIs. Each service can be developed, deployed, and scaled independently. This pattern promotes modularity and enables teams to work on different services simultaneously. Communication between services typically occurs through lightweight protocols like HTTP or through message queues.
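To make the Lambda pattern more concrete, the sketch below shows one simplified way a serving layer might merge a batch view with a speed view. It assumes page-view counting as the workload and uses in-memory `Counter` objects in place of real storage; all function names and sample events are hypothetical.

```python
from collections import Counter


def batch_view(historical_events: list[dict]) -> Counter:
    """Batch layer: recompute counts from the full historical dataset."""
    return Counter(event["page"] for event in historical_events)


def speed_view(recent_events: list[dict]) -> Counter:
    """Speed layer: count events that arrived after the last batch run."""
    return Counter(event["page"] for event in recent_events)


def serve(batch: Counter, speed: Counter, page: str) -> int:
    """Serving layer: merge both views to answer a query."""
    return batch[page] + speed[page]


historical = [{"page": "/home"}, {"page": "/home"}, {"page": "/docs"}]
recent = [{"page": "/home"}, {"page": "/pricing"}]

batch = batch_view(historical)
speed = speed_view(recent)
print(serve(batch, speed, "/home"))
```

In a Kappa-style design, the same counting logic would run only once over a single stream, with historical data replayed through it rather than handled by a separate batch layer.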

Which Data Flow Architecture Tools Should You Use?

Selecting the right tools depends on your specific requirements and existing technology stack.

Stream & Batch Processing

Tools like Amazon Kinesis (for stream processing), Apache Kafka (primarily for streaming, with batch-like support), and Apache Spark (for both stream and batch processing) help handle high-volume data ingestion and processing across distributed systems.

Amazon Kinesis excels at real-time streaming analytics. Apache Kafka provides robust message queuing and stream processing capabilities. Apache Spark offers unified analytics for both batch and streaming workloads.

ETL/ELT Tools

Data-integration platforms such as Airbyte simplify extract-load-transform (ELT) workflows. Airbyte provides over 600 pre-built connectors.

Key Airbyte features include:

- Custom Connector Development: You can build connectors with the no-code Connector Builder or low-code Connector Development Kit (CDK).

- AI-Enabled Connector Builder: This includes an AI assistant that automatically fills configuration details from API documentation.

- Enterprise-Level Support: The Self-Managed Enterprise Edition provides multitenancy, RBAC, encryption, PII masking, and SLA-backed support.

- Vector Database Support: Airbyte offers integrations with Pinecone, Milvus, Weaviate, and other vector databases.

Data Warehousing

Cloud warehouses such as Amazon Redshift, Google BigQuery, and Snowflake centralize large datasets while minimizing infrastructure management. These platforms provide scalable storage and compute resources for analytical workloads.

Modern data warehouses support both structured and semi-structured data formats. They offer built-in optimization features that improve query performance and reduce costs.

Monitoring

Data-observability platforms like Monte Carlo automatically track data pipelines to detect bottlenecks and quality issues, while tools like Datadog and Grafana provide visibility into system performance and can be configured to monitor aspects of data health.

Monitoring solutions help identify performance degradation before it impacts users. They also support alerting mechanisms that notify teams when issues require immediate attention.
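The kind of check a monitoring setup might run can be sketched as a small function that tests a table's volume and freshness. This is not how any of the tools named above work internally; the function name, thresholds, and sample values are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def check_table_health(
    row_count: int,
    last_loaded_at: datetime,
    now: datetime,
    min_rows: int = 1,
    max_staleness: timedelta = timedelta(hours=1),
) -> list[str]:
    """Return a list of issues; an empty list means the table looks healthy."""
    issues = []
    if row_count < min_rows:
        issues.append(f"volume: expected at least {min_rows} rows, found {row_count}")
    staleness = now - last_loaded_at
    if staleness > max_staleness:
        issues.append(f"freshness: last load was {staleness} ago")
    return issues


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
healthy = check_table_health(500, now - timedelta(minutes=10), now)
stale = check_table_health(0, now - timedelta(hours=3), now)
print(healthy, stale)
```

A non-empty result is the point where an alerting mechanism would notify the team.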

Data Orchestration

Tools like Dagster, Prefect, Kestra, and Apache Airflow coordinate tasks and schedule workflows. These platforms manage dependencies between different data processing stages.

Orchestration tools provide visual interfaces for monitoring workflow execution. They support error handling, retry logic, and notification systems for failed tasks.
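Dependency management and retry logic can be illustrated with Python's standard-library `graphlib` module. The sketch below is not the API of Airflow, Dagster, Prefect, or Kestra; `run_workflow`, the task names, and the simulated failure are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_workflow(
    tasks: dict[str, Callable[[], None]],
    dependencies: dict[str, set[str]],
    max_retries: int = 2,
) -> list[str]:
    """Run tasks in dependency order, retrying each failed task a few times."""
    order = list(TopologicalSorter(dependencies).static_order())
    executed = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                executed.append(name)
                break
            except Exception:
                # Re-raise once the retry budget is exhausted.
                if attempt == max_retries + 1:
                    raise
    return executed


attempts = {"transform": 0}


def flaky_transform() -> None:
    # Simulate a transient failure on the first attempt only.
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise RuntimeError("transient failure")


tasks = {"extract": lambda: None, "transform": flaky_transform, "load": lambda: None}
# Each key maps a task to the set of tasks it depends on.
dependencies = {"transform": {"extract"}, "load": {"transform"}}

executed = run_workflow(tasks, dependencies)
print(executed)
```

Real orchestrators add scheduling, parallel execution of independent tasks, and notifications on top of this basic ordering.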

What Challenges Should You Expect When Designing Data Flow Architecture?

Understanding these common obstacles helps you prepare for successful implementation:

- Data consistency challenges: Maintaining data consistency and accuracy across multiple processes requires careful coordination. You must synchronize updates and prevent corruption as data moves through different transformation stages.

- Security requirements: Securing data in transit and at rest demands comprehensive protection strategies. Encryption, PII masking, and role-based access control become essential components of your architecture.

- Performance balancing: Achieving low latency for real-time workloads while preserving data quality presents ongoing technical challenges. You need to balance processing speed with validation and error-handling requirements.
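One possible guard against corruption between transformation stages is to attach a checksum when a stage hands data off and verify it on receipt. The sketch below assumes JSON-serializable records; the `hand_off` and `receive` helpers are hypothetical names, not part of any specific tool.

```python
import hashlib
import json


def checksum(records: list[dict]) -> str:
    # Serialize deterministically so equal records always hash the same way.
    payload = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def hand_off(records: list[dict]) -> dict:
    """Sending stage: wrap the records together with their checksum."""
    return {"records": records, "checksum": checksum(records)}


def receive(envelope: dict) -> list[dict]:
    """Receiving stage: refuse records that changed after the hand-off."""
    if checksum(envelope["records"]) != envelope["checksum"]:
        raise ValueError("records changed between stages")
    return envelope["records"]


envelope = hand_off([{"id": 1, "amount": 10.5}])
records = receive(envelope)
print(records)
```

The extra hashing step adds processing time at every stage boundary, which is a small example of the trade-off between validation and latency noted above.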

Conclusion

By incorporating data-flow architecture, you can optimize performance and scalability, but you must weigh its limitations. The right combination of architectural patterns and supporting tools can automate routine operations and enhance reliability. Understanding the different execution models helps you choose the most appropriate approach for your specific use case. Successfully implementing data flow architecture requires careful consideration of security, consistency, and latency requirements.

Frequently Asked Questions

What is the main difference between data flow and control flow architecture?

Data flow architecture focuses on how data moves and transforms through a system, emphasizing the path and processing of information. Control flow architecture centers on the sequence and conditions under which operations execute, prioritizing task scheduling and workflow management over data movement.

When should I choose batch sequential over pipe and filter architecture?

Choose batch sequential when you need simple, predictable data processing with clear stages and can tolerate higher latency. Select pipe and filter when you require parallel processing capabilities, need to handle multiple concurrent data streams, or want modular components that can be independently maintained and updated.

How does Lambda architecture handle both real-time and batch processing?

Lambda architecture uses three layers: a batch layer for historical data processing, a speed layer for real-time stream processing, and a serving layer that merges results from both.

This approach provides comprehensive data analysis by combining the accuracy of batch processing with the immediacy of stream processing.

What are the key security considerations for data flow architecture?

Essential security measures include encrypting data in transit and at rest, implementing role-based access control to restrict data access, masking personally identifiable information during processing, and maintaining comprehensive audit logs. You should also secure all connection points between components and validate data integrity at each transformation stage.
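As a small illustration of two of these measures, the sketch below masks PII fields and writes an audit log entry during processing. It assumes salted SHA-256 hashing as the masking technique, which is pseudonymization rather than encryption; the field list, salt, and logger name are hypothetical.

```python
import hashlib
import logging

audit_log = logging.getLogger("pipeline.audit")

# Hypothetical list of fields treated as personally identifiable.
PII_FIELDS = {"email", "phone"}


def mask_record(record: dict, salt: str) -> dict:
    """Replace PII values with a truncated salted hash; keep other fields."""
    masked = {}
    for key, value in record.items():
        if key in PII_FIELDS:
            digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
            masked[key] = digest[:12]
        else:
            masked[key] = value
    return masked


def process_record(record: dict, user: str, salt: str = "demo-salt") -> dict:
    # Record who processed which record before masking it.
    audit_log.info("user=%s action=mask record_id=%s", user, record.get("id"))
    return mask_record(record, salt)


masked = process_record(
    {"id": 1, "email": "a@example.com", "plan": "pro"}, user="etl-service"
)
print(masked)
```

In practice the salt would come from a secrets manager rather than a default argument, and the audit log would be shipped to tamper-resistant storage.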

How do I choose the right tools for my data flow architecture?

Select tools based on your data volume, processing requirements, existing infrastructure, and team expertise. Consider factors like real-time versus batch processing needs, scalability requirements, budget constraints, and integration capabilities with your current technology stack. Start with proven solutions that match your immediate needs and can scale as your requirements grow.
