What Is Sharding in Database? A Comprehensive Guide

✨ AI Generated Summary

Database sharding is a horizontal scaling technique that partitions large datasets into independent shards distributed across multiple servers, enhancing scalability, performance, and fault tolerance. Modern AI-driven sharding automates optimization tasks like predictive scaling, query routing, and self-healing, enabling dynamic adaptation to workload changes and reducing manual intervention. Key benefits include increased storage capacity, improved throughput, and compliance support, while challenges involve operational complexity and data consistency; cloud-native and autonomous sharding solutions further simplify management and cost-efficiency.

When predictive models serving millions of users experience performance degradation due to database bottlenecks, organizations face a critical decision: continue scaling vertically with increasingly expensive hardware upgrades, or implement horizontal scaling through database sharding to distribute load across multiple servers.

Database sharding is a common answer for applications that must scale beyond a single server: it lets systems absorb rapid data growth while keeping response times low and availability high.

TL;DR: Database Sharding at a Glance

- Database sharding partitions large datasets into smaller, independent segments distributed across multiple servers, enabling horizontal scaling and parallel query processing

- Evaluate alternatives first—vertical scaling and replication may suffice for smaller workloads before committing to sharding's operational complexity

- Modern AI-driven sharding systems automate optimization tasks like predictive scaling, intelligent query routing, and self-healing recovery from failures

- Key benefits include increased storage capacity, enhanced throughput, and fault tolerance, but watch for data hotspots and cross-shard query overhead

- Airbyte provides 600+ connectors and native support for sharded environments, enabling seamless data integration across distributed architectures

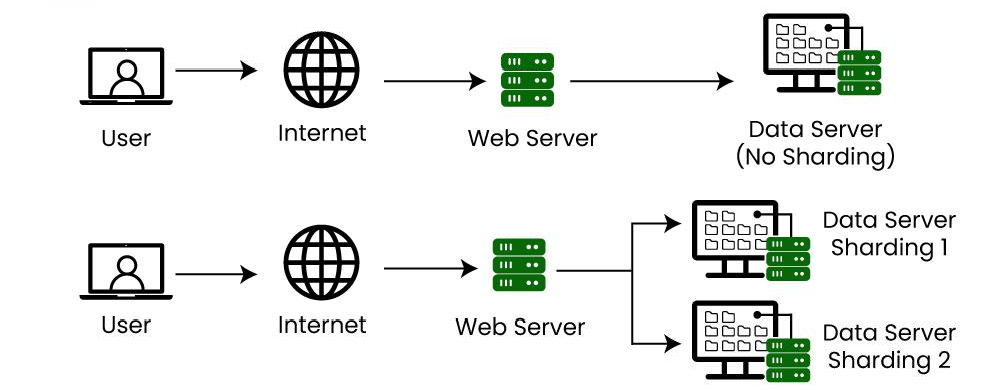

What Is Database Sharding and How Does It Work?

Database sharding is a horizontal scaling solution that partitions large datasets into smaller, independent segments called shards. Each shard contains the same database schema as the original database, ensuring structural consistency across all partitions. However, the data within each shard is unique, with no overlap between shards, creating a distributed architecture where queries can be processed in parallel across multiple servers.

These shards are distributed across different servers or nodes, enabling the database system to handle more users, queries, and transactions simultaneously. Although the shards operate independently on separate nodes, they typically run the same DBMS software and the same kind of supporting infrastructure, such as networking and storage. Modern sharding implementations incorporate routing layers that direct each query to the appropriate shard based on where its data lives, which reduces query response times and improves overall system performance.

The sharding process involves three critical components:

- Shard key selection

- Data distribution strategy

- Query routing mechanisms
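The three components above can be sketched in a few lines. This is a minimal illustration, not a production router: the shard names, the `shard_for` and `route` helpers, and the choice of a hash-based distribution strategy are all assumptions made for the example.

```python
import hashlib

# Hypothetical shard names; in practice these would be connection targets.
SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]


def shard_for(shard_key: str, shards=SHARDS) -> str:
    """Data distribution: map a shard key to a shard with a stable hash.

    hashlib is used instead of the built-in hash(), which is randomized
    per process for strings and would route the same key differently
    after a restart.
    """
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


def route(shard_key: str) -> str:
    """Query routing: describe where a query for this key would be sent."""
    return f"query for {shard_key!r} -> {shard_for(shard_key)}"


if __name__ == "__main__":
    # Shard key selection: here the user ID is assumed to be the shard key.
    for user_id in ("user:1", "user:2", "user:3"):
        print(route(user_id))
```

Plain modulo hashing is easy to reason about, but changing the number of shards remaps most keys, which is one reason real systems often prefer range-based or consistent-hashing schemes.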

Do You Really Need Database Sharding?

Sharding in a database is a powerful technique to improve performance and scalability, but you should first evaluate alternative strategies that might be easier to manage.

Vertical Scaling

Vertical scaling (scaling up) involves upgrading your existing server by adding more resources such as CPU, RAM, or storage.

Pros

- Simple to implement—just upgrade a single server; no changes to application logic or architecture

- Avoids the complexity of managing multiple shards

- Suitable when scalability requirements are limited

Cons

- Hard limit on how much a single server can be upgraded

- Upgrades (especially high-end servers) are expensive

- A single point of failure—if the server goes down, so does the application

Replication

Replication creates exact copies of your database on multiple servers, allowing you to distribute read workloads.

Pros

- Improves read performance by spreading traffic across replicas

- High availability—replicas can take over if the primary fails

- Easier to adopt than sharding

Cons

- Does not improve write performance—writes still go to the primary

- Storage costs rise because every replica stores a full copy of the data

- Requires synchronization between replicas

Specialized Services or Databases

You can also turn to specialized types of databases or services designed for specific workloads (e.g., MongoDB, Cassandra, Redis).

Pros

- Tailored for specific use cases (event streaming, real-time analytics, caching, etc.)

- Offloads certain workloads, potentially downsizing primary DB infrastructure

Cons

- Requires learning new architectures and query patterns

- May require architectural changes and extra development effort

What Are the Key Features of Database Sharding?

Horizontal Scaling

Sharding lets you scale horizontally by distributing data across multiple shards, each stored on a separate server according to a shard key (for example, user ID or region).

When the data volume grows, you simply add new servers to handle the load—often at a lower cost than a single, high-end machine.
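Adding a server is only cheap if most existing data can stay where it is. One common way to get that property is consistent hashing; the sketch below is an illustrative assumption rather than the method of any particular database, and the `HashRing` class and node names are hypothetical.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Stable 64-bit hash of a string."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes."""

    def __init__(self, nodes, vnodes: int = 100):
        self._vnodes = vnodes
        self._ring = []  # sorted list of (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node owns many small arcs of the ring to smooth the distribution.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def lookup(self, key: str) -> str:
        # A key belongs to the first ring position at or after its own hash,
        # wrapping around to the start of the ring if necessary.
        position = _hash(key)
        index = bisect.bisect_left(self._ring, (position, "")) % len(self._ring)
        return self._ring[index][1]


if __name__ == "__main__":
    ring = HashRing(["db-1", "db-2", "db-3"])
    keys = [f"user:{i}" for i in range(10_000)]
    before = {k: ring.lookup(k) for k in keys}
    ring.add_node("db-4")
    moved = [k for k in keys if ring.lookup(k) != before[k]]
    print(f"{len(moved)} of {len(keys)} keys moved to the new node")
```

With this scheme, the only keys that change owner are the ones the new node takes over, so the remaining servers keep serving their existing data during the expansion.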

Improved Performance

Queries that filter on the shard key hit only the shard that contains the relevant data, which reduces response times. Queries that do not include the shard key must be sent to every shard.
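The difference between a single-shard lookup and a query that has to visit every shard can be shown with a toy model. In this sketch each dictionary stands in for one database server, and the function names, the modulo placement rule, and the `country` field are assumptions for illustration only.

```python
# Four hypothetical shards; each dict maps user_id -> row.
shards = [dict() for _ in range(4)]


def shard_index(user_id: int) -> int:
    """Placement rule for the example: user_id is the shard key."""
    return user_id % len(shards)


def insert(user_id: int, row: dict) -> None:
    shards[shard_index(user_id)][user_id] = row


def get_by_user_id(user_id: int):
    """Targeted query: the shard key is known, so one shard is consulted."""
    return shards[shard_index(user_id)].get(user_id)


def find_by_country(country: str) -> list:
    """Scatter-gather query: no shard key, so every shard is scanned."""
    results = []
    for shard in shards:
        results.extend(row for row in shard.values() if row["country"] == country)
    return results


if __name__ == "__main__":
    insert(1, {"name": "Ana", "country": "PT"})
    insert(2, {"name": "Bo", "country": "SE"})
    insert(6, {"name": "Cy", "country": "PT"})
    print(get_by_user_id(2))
    print(find_by_country("PT"))
```

The second function is the pattern behind cross-shard query overhead: its cost grows with the number of shards, while the targeted lookup stays constant.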

Fault Tolerance

Because shards operate independently, the failure of one shard or server does not bring down the entire system.

Database Standardization

Consistent schema design, naming conventions, and indexing across shards ensure seamless distribution and retrieval of data. Learn more about database standardization.

Partitioning Strategies & Data Locality

Common strategies include partitioning by geography or tenant so data is closer to end users, reducing latency. Explore more about data partitioning strategies for distributed systems.
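Geography- or tenant-based placement is usually driven by a lookup table rather than a hash. The following sketch assumes a hypothetical directory with made-up region codes, shard names, and a per-tenant override; it only illustrates the idea of directory-based partitioning.

```python
# Hypothetical directory: which shard serves which region.
REGION_TO_SHARD = {
    "eu": "shard-eu-1",
    "us": "shard-us-1",
    "apac": "shard-apac-1",
}

# Hypothetical overrides: a large tenant pinned to its own shard.
TENANT_OVERRIDES = {
    "tenant-big": "shard-dedicated-1",
}


def shard_for_tenant(tenant_id: str, region: str) -> str:
    """Resolve a tenant to a shard: explicit override first, then region."""
    if tenant_id in TENANT_OVERRIDES:
        return TENANT_OVERRIDES[tenant_id]
    try:
        return REGION_TO_SHARD[region]
    except KeyError:
        raise ValueError(f"no shard configured for region {region!r}") from None


if __name__ == "__main__":
    print(shard_for_tenant("tenant-a", "eu"))
    print(shard_for_tenant("tenant-big", "us"))
```

A directory like this gives full control over placement, at the cost of having to keep the mapping itself highly available and up to date.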

What Are the Primary Benefits of Database Sharding?

- Increased storage capacity — add shards to expand capacity beyond a single server

- Enhanced read/write throughput — distributes operations across servers

- Efficient resource utilization — balanced load = better overall performance

- Data isolation — store sensitive data by region or tenant to support compliance

- Reduced costs — multiple commodity servers can be cheaper than one large server

What Are the Main Disadvantages of Database Sharding?

- Data hotspots — uneven distribution can overload a shard

- Operational complexity — you must manage, monitor, back up, and query multiple DBs

- Query overhead — cross-shard joins and aggregations require coordination

- Data consistency challenges — keeping data synchronized across shards is hard

- Over-sharding risk — too many small shards hurt performance and add overhead

- Fragmentation — sub-optimal data layout can hinder some access patterns
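Hotspots in particular are easy to check for once per-shard request counts are available. The sketch below flags any shard whose load exceeds a multiple of the average; the `find_hotspots` name, the 1.5x threshold, and the sample counts are assumptions chosen for illustration.

```python
from statistics import mean


def find_hotspots(requests_per_shard: dict, threshold: float = 1.5) -> list:
    """Return shards whose request count exceeds threshold * average load."""
    if not requests_per_shard:
        return []
    average = mean(requests_per_shard.values())
    if average == 0:
        return []
    return sorted(
        shard for shard, count in requests_per_shard.items()
        if count > threshold * average
    )


if __name__ == "__main__":
    # Hypothetical request counts collected over the same time window.
    counts = {"shard-0": 100, "shard-1": 110, "shard-2": 90, "shard-3": 500}
    print(find_hotspots(counts))
```

A persistent hotspot usually points back to the shard key: a key with low cardinality or a skewed value distribution concentrates traffic no matter how many shards are added.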

How Are AI-Driven Sharding Automation Systems Transforming Database Performance?

Modern sharding implementations leverage artificial intelligence to automate complex optimization tasks that traditionally required manual administration and deep expertise. AI-driven sharding systems represent a fundamental shift from static partitioning approaches to dynamic, self-optimizing architectures that continuously adapt to changing workload patterns and data access behaviors.

Intelligent Key Management and Predictive Scaling

AI-enhanced sharding platforms like Oracle 23c analyze access frequencies and query patterns using machine learning algorithms to automatically redistribute data and prevent hotspots. These systems detect shifting usage patterns, such as seasonal spikes in e-commerce platforms, and proactively migrate user segments to appropriately sized shards without manual intervention. Predictive elastic scaling integrates time-series forecasting to anticipate infrastructure needs, while features such as MongoDB 7.0's AutoMerger reduce fragmentation by automatically merging undersized chunks.

Automated Query Optimization and Routing

Machine learning models now dynamically restructure sharded queries for optimal performance across distributed environments. AI-driven query optimizers employ reinforcement learning to rewrite cross-shard joins into parallelized scan operations, convert complex subqueries into batched multi-get operations, and dynamically adjust batch sizes based on network conditions.

Geographic Intelligence and Latency Minimization

AI-enhanced geographic sharding transcends basic region-based partitioning by incorporating real-time network congestion metrics, data sovereignty regulations, and user density heatmaps. Oracle 23c's implementation dynamically routes queries through multilayer decision engines that evaluate current network conditions and regulatory compliance requirements.

What Are Self-Healing Adaptive Sharding Architectures?

Self-healing sharding systems represent the next evolution in distributed database management, incorporating biological resilience principles to create architectures that autonomously recover from failures and adapt to workload fluctuations. These systems eliminate the traditional dependency on human intervention during outages and performance degradation events.

Autonomous Node Recovery and Regeneration

Self-healing architectures let damaged nodes reconstruct their operational state from distributed snapshots. When failures occur, such as disk corruption or network partitions, neighboring nodes collectively regenerate the lost shard segments, using cryptographic checksums to verify the recovered data and consensus protocols to agree on its state.

Behavioral Clustering and Adaptive Data Placement

Temporal sharding with behavioral clustering revolutionizes data placement by incorporating access-pattern intelligence that goes beyond traditional time-based partitioning. Systems classify data into hot, warm, and cold tiers based on access recency, seasonality detection through machine learning, and entropy-based prioritization algorithms.
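The simplest part of this classification, tiering by access recency, can be sketched directly. The 7-day and 90-day windows and the `classify_tier` name are assumptions for the example; seasonality detection and entropy-based prioritization are deliberately left out.

```python
from datetime import datetime, timedelta


def classify_tier(
    last_access: datetime,
    now: datetime,
    hot_window: timedelta = timedelta(days=7),    # assumed threshold
    warm_window: timedelta = timedelta(days=90),  # assumed threshold
) -> str:
    """Classify a record as hot, warm, or cold based on access recency only."""
    age = now - last_access
    if age <= hot_window:
        return "hot"
    if age <= warm_window:
        return "warm"
    return "cold"


if __name__ == "__main__":
    now = datetime(2025, 7, 1)
    for days_ago in (1, 30, 400):
        print(days_ago, classify_tier(now - timedelta(days=days_ago), now))
```

A placement layer could then map each tier to a different class of shard, for example keeping hot data on faster storage.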

Predictive Failure Mitigation

Advanced self-healing systems employ anomaly detection algorithms to preempt node failures before they impact system performance. By continuously analyzing hardware vitals, query latency deviations, and error-rate trends, these systems trigger proactive shard migration when early warning indicators suggest impending failures.
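As a rough illustration of the latency-deviation signal, the sketch below uses a plain z-score test. This is a stand-in chosen for brevity, not the algorithm of any specific product; the `is_anomalous` name, the threshold of 3 standard deviations, and the sample values are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, z_threshold: float = 3.0) -> bool:
    """Flag the latest latency sample if it deviates strongly from history."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


if __name__ == "__main__":
    # Hypothetical query latencies in milliseconds for one shard.
    baseline = [10, 11, 9, 10, 10, 11, 9, 10]
    print(is_anomalous(baseline, 10.5))
    print(is_anomalous(baseline, 30))
```

In a real deployment a positive result would be one input among several (hardware vitals, error rates) before a shard migration is triggered.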

Cognitive Load Balancing

Self-healing architectures incorporate neuroplastic topologies that mimic brain adaptability, enabling shard reconfiguration based on real-time workload analysis. These systems learn from access patterns and automatically adjust data distribution to optimize query performance while minimizing cross-shard coordination overhead.

How Are Cloud-Native and Autonomous Sharding Solutions Transforming Database Management?

Modern cloud platforms have revolutionized sharding, automating tasks that once required significant manual administration.

- Oracle Globally Distributed Autonomous Database automatically distributes data, handles replication, failover, and scaling.

- Elasticsearch Serverless adjusts shard counts based on indexing load, redistributing shards during maintenance windows.

- Amazon Aurora PostgreSQL's Limitless Database separates routing and storage layers, allowing independent scaling and zero-downtime shard splits.

What Role Does Artificial Intelligence Play in Modern Sharding?

AI and ML have moved sharding from static partitioning to predictive optimization.

Machine Learning-Enhanced Shard Placement

Reinforcement-learning agents test partitioning scenarios against historical workloads, balancing load and minimizing cross-shard operations.

Vector Database Sharding for AI Workloads

Vector databases (e.g., Pinecone, Weaviate, Milvus) employ proximity-based sharding, where k-means clustering groups similar embeddings into shards. Learn more about vector databases and their role in AI applications.
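The assignment step of proximity-based sharding can be sketched as a nearest-centroid lookup. This is a simplified illustration that assumes the centroids were already computed (for example by k-means) and uses Euclidean distance; it does not describe the internals of Pinecone, Weaviate, or Milvus.

```python
import math


def nearest_centroid(vector, centroids) -> int:
    """Return the index of the centroid closest to the vector."""
    distances = [math.dist(vector, centroid) for centroid in centroids]
    return distances.index(min(distances))


if __name__ == "__main__":
    # Hypothetical 2-D centroids, one per shard; real embeddings have
    # hundreds or thousands of dimensions.
    centroids = [(0.0, 0.0), (10.0, 10.0)]
    for embedding in [(1.0, 2.0), (9.0, 8.0)]:
        print(embedding, "-> shard", nearest_centroid(embedding, centroids))
```

Because similar vectors land on the same shard, a similarity search can often probe only the shards whose centroids are closest to the query vector instead of all of them.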

Predictive Shard Management

Autonomous databases use neural networks to forecast shard load, triggering rebalancing before hotspots develop and using anomaly detection to isolate skewed access patterns.
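The forecast-then-rebalance loop can be illustrated with a much simpler model than a neural network. The sketch below swaps in an ordinary least-squares trend line purely for readability; the function names, the 80% headroom rule, and the sample loads are assumptions.

```python
def forecast_next(loads: list) -> float:
    """Extrapolate the next value with a least-squares linear trend."""
    n = len(loads)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(loads) / n
    slope = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(loads)) / sum(
        (x - x_mean) ** 2 for x in range(n)
    )
    intercept = y_mean - slope * x_mean
    return intercept + slope * n


def should_rebalance(loads: list, capacity: float, headroom: float = 0.8) -> bool:
    """Trigger rebalancing when the forecast exceeds a share of capacity."""
    return forecast_next(loads) > headroom * capacity


if __name__ == "__main__":
    # Hypothetical queries-per-second samples for one shard.
    history = [10, 20, 30, 40]
    print(forecast_next(history))
    print(should_rebalance(history, capacity=60))
```

The point of forecasting is timing: the rebalance starts while the shard still has headroom, rather than after it is already saturated.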

What Are the Security and Compliance Considerations for Sharding?

Geographic Sharding for Data Residency

Systems like Oracle Sharding enable explicit shard-placement policies that bind data to specific countries or regions, supporting GDPR and CCPA. Explore data governance best practices for distributed environments.

Cross-Shard Security Protocols

Each shard can use region-specific encryption keys managed by cloud HSMs. Distributed queries may apply differential privacy when aggregating data across shards.

Compliance Automation

Modern platforms include policy engines that automatically configure encryption, access controls, and audit settings based on a shard's location—blocking cross-border transfers when needed.

What Are the Advances in Distributed SQL and NewSQL Sharding?

- CockroachDB automatically shards data across nodes while exposing a single SQL interface.

- MongoDB 7.0 AutoMerger combines undersized chunks to reduce fragmentation.

- Hybrid Transactional/Analytical Processing (HTAP) uses sharding to separate OLTP and OLAP workloads while keeping data synchronized.

- Zero-Downtime Resharding — logical replication and storage-level cloning (e.g., Aurora PostgreSQL) enable online resharding at petabyte scale.
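To make the idea of combining undersized chunks concrete, here is a greedy sketch that merges adjacent key ranges while the combined size stays under a limit. It is not MongoDB's actual AutoMerger algorithm; the tuple layout, the `merge_small_chunks` name, and the 128 MB limit are assumptions for illustration.

```python
def merge_small_chunks(chunks: list, max_size_mb: int = 128) -> list:
    """Merge contiguous chunks greedily.

    Each chunk is a (start_key, end_key, size_mb) tuple, and the input is
    assumed to be sorted by start_key. Two chunks are merged only if the
    first one ends exactly where the next begins and the combined size
    does not exceed max_size_mb.
    """
    merged = []
    for start, end, size in chunks:
        if merged:
            prev_start, prev_end, prev_size = merged[-1]
            if prev_end == start and prev_size + size <= max_size_mb:
                merged[-1] = (prev_start, end, prev_size + size)
                continue
        merged.append((start, end, size))
    return merged


if __name__ == "__main__":
    chunks = [(0, 100, 10), (100, 200, 20), (200, 300, 120), (300, 400, 5)]
    print(merge_small_chunks(chunks))
    # -> [(0, 200, 30), (200, 400, 125)]
```

Fewer, larger chunks mean less routing metadata to track, which is the fragmentation benefit the list above refers to.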

How Does Airbyte Support Your Sharding Implementation?

Airbyte has evolved beyond traditional data integration to become an AI-enabling platform with capabilities in unstructured data handling and global compliance management. The platform provides more than 600 pre-built connectors and a Connector Builder for custom sources and destinations, enabling data movement between sharded and non-sharded environments while supporting the data preparation workflows that modern distributed architectures require.

Advanced Sharding Support Capabilities

Airbyte's recent enhancements specifically address the data integration challenges associated with sharded database environments. The platform's hybrid synchronization mode allows simultaneous transfer of structured records and unstructured files within the same pipeline, preserving metadata relationships critical for distributed database architectures. This capability addresses previous limitations where unstructured data handling required separate pipelines, streamlining data preparation for sharded analytical stores.

AI-Ready Data Integration

The platform's mid-2025 updates target foundational requirements for AI-driven sharding implementations through native support for vector databases, including Pinecone, Weaviate, and Milvus, with automatic chunking and indexing options. Airbyte enables RAG pipeline optimization by synchronizing raw files alongside structured records, preserving metadata relationships essential for retrieval-augmented generation accuracy in sharded environments.

Performance and Cost Optimization

Airbyte's July 2025 release introduced direct loading capabilities for BigQuery and Snowflake that reduce compute costs by 50-70% and increase speed by 33% through bypassing intermediate processing. Multi-region deployments enable enterprise customers to deploy isolated data planes across geographic regions governed by a single control plane, reducing cross-region egress fees by up to 45%—a critical capability for geographically sharded database architectures.

Enterprise Governance and Compliance

The platform addresses data sovereignty requirements through workspace-specific region assignment, ensuring data residency compliance under GDPR, CCPA, and other regulations. Stream namespace enforcement prevents destination collisions across connections, while hybrid deployment security supports custom Docker registries and image provenance validation for organizations implementing sharding across multiple regulatory jurisdictions.

Key capabilities include high-volume, low-latency data movement that aligns with AI-driven sharding strategies across multi-cloud and hybrid environments, connectors for sources like Bing Ads and GNews, and comprehensive support for both structured and unstructured data types required for modern sharded architectures.

Get started with Airbyte Cloud today to implement sharding-optimized data pipelines that scale with your distributed database architecture.

Conclusion

Database sharding has evolved from a manual scaling technique to a sophisticated, AI-driven, cloud-native practice. With predictive analytics, automated rebalancing, and intelligent routing, modern sharding delivers unprecedented scalability while simplifying operations.

As data volumes soar and AI workloads multiply, sharding strategies must accommodate vector similarity searches, real-time analytics, and strict compliance demands. Organizations that evaluate business requirements carefully and adopt cloud-native, autonomous solutions can transform scaling challenges into competitive advantages.

Frequently Asked Questions

When should I choose vertical scaling versus database sharding?

Vertical scaling is simpler and more cost-effective for smaller databases (up to roughly 1 TB) or workloads with fewer than about 10,000 concurrent users. Sharding makes sense when:

- The dataset or user count continually outgrows the largest affordable server

- Write throughput saturates a single instance

- High availability demands multiple failure domains

Beyond roughly 64 CPU cores (or comparable cloud tiers), the economics usually favor sharding.

How do I determine if my application needs database sharding?

Monitor indicators such as:

- Sustained CPU utilization above 80%

- Average query latency rising despite indexing and tuning

- I/O waits dominating performance metrics

- Database infrastructure cost exceeding 15-20% of total spend

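Tools like PostgreSQL's pg_stat_statements or MySQL's Performance Schema help quantify the query-level metrics, such as execution time and I/O; CPU utilization and cost figures come from host or cloud monitoring.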
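Once those numbers are collected, the checklist above can be turned into a simple check. The sketch below is only an illustration: the 80% CPU threshold comes from the list, while the definition of "rising" latency, the 50% cutoff for I/O wait "dominating", and the `sharding_signals` name are assumptions.

```python
def sharding_signals(cpu_pct: float, latency_ms: list, io_wait_share: float) -> list:
    """Return the names of the warning signs that are currently triggered.

    cpu_pct        sustained CPU utilization in percent
    latency_ms     average query latency samples, oldest first
    io_wait_share  fraction of wait time spent on I/O (0.0 to 1.0)
    """
    signals = []
    if cpu_pct > 80.0:
        signals.append("cpu")
    # "Rising" is taken to mean every sample is higher than the previous one.
    if len(latency_ms) >= 2 and all(b > a for a, b in zip(latency_ms, latency_ms[1:])):
        signals.append("latency")
    # "Dominating" is taken to mean more than half of all wait time.
    if io_wait_share > 0.5:
        signals.append("io_wait")
    return signals


if __name__ == "__main__":
    print(sharding_signals(85.0, [20, 25, 31], 0.6))
    print(sharding_signals(40.0, [20, 19, 21], 0.1))
```

Several signals firing together over a sustained period is a stronger case for sharding than any single spike.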

How are AI and ML techniques enhancing modern sharding implementations?

AI/ML bring:

- Predictive autoscaling — forecasting growth to split shards proactively

- Reinforcement-learning-based partitioning — testing configurations to reduce cross-shard traffic

- Anomaly detection — identifying skewed access patterns in real time

These techniques are invaluable for dynamic workloads such as e-commerce seasonal peaks or volatile financial markets.
