Optimizing ETL with Parallel Processing for Faster Data Loads

Summarize this article with:

✨ AI Generated Summary

ETL transforms unstructured data into structured formats for easier analysis, but large datasets can cause performance bottlenecks. Parallel processing breaks tasks into smaller chunks executed simultaneously, improving speed, resource utilization, and scalability.

- Key parallel processing types: SIMD, MIMD, SPMD, MPMD.

- Techniques for ETL optimization: data partitioning, pipeline processing, and batching.

- Challenges include complexity, data integrity risks, and security concerns.

- Tools like Airbyte support parallel processing with features such as pre-built connectors, incremental syncs, multithreading, and batch processing to enhance ETL workflows.

ETL goes beyond just a set of operations. It is a complex process that enables you to transform unstructured data into a structured, usable format. This transformation is important as you can easily analyze, visualize, and derive insights from structured data. When dealing with large datasets, the complexity of transformations within the ETL processes can cause delays and performance bottlenecks.

Parallel processing allows you to implement multiple tasks that can be executed simultaneously. This process allows you to break down the workload into smaller and more manageable chunks. By combining parallel processing within ETL operations, you can reduce processing times and improve overall performance.

In this article, you will learn how parallel processing in the ETL workflow can optimize performance and accelerate the delivery of critical data.

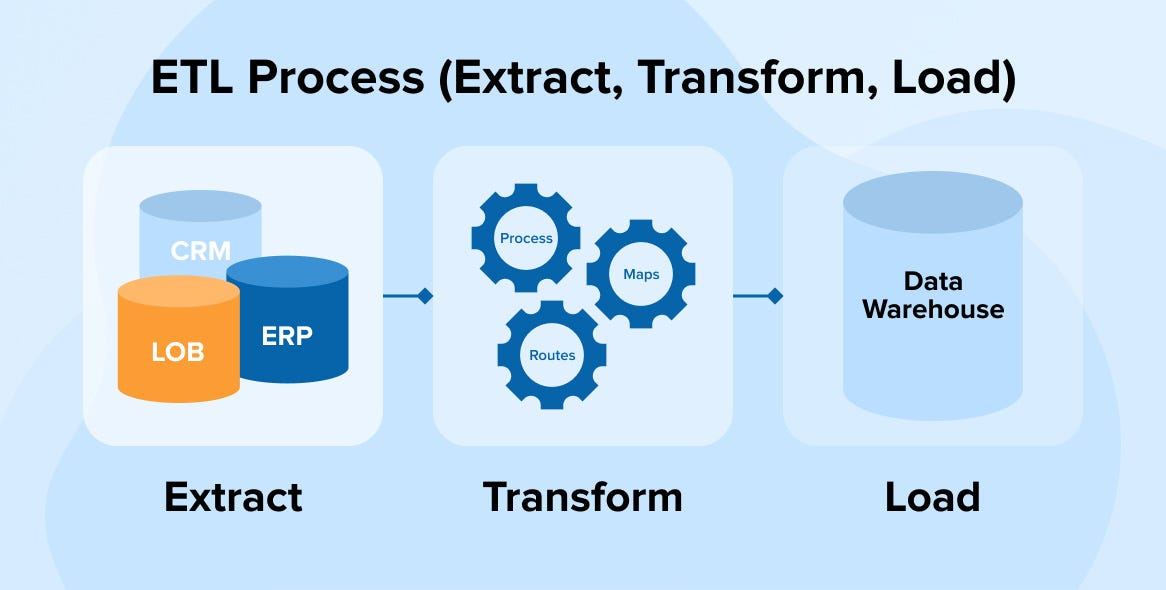

Understanding ETL Process

ETL is a data migration process that helps you collect data from a source, transform it, and then load that data into a central repository. The first step involves extracting raw data from sources like databases, API, or flat files. A staging area (landing zone) temporarily stores the extracted data. This staging area serves as a buffer zone where data can be stored before any transformation occurs.

Once you collect all the relevant data and review it in the staging area, the next step is to transform it. In the transformation phase, raw data is converted into usable format by applying various operations to the data, such as cleaning, filtering, aggregation, and normalization. These operations prepare the data and ensure it meets the requirements for downstream applications like analytics, reporting, or machine learning.

Once the data is reviewed, cleansed, and transformed, you can incorporate parallel loading to move the data into a database or data warehouse system, where it can be further processed.

What is Parallel Processing?

Parallel processing is a computational method in which a particular task is broken down into smaller sub-tasks, which are then executed simultaneously on multiple processing units. This approach notably improves the performance of ETL tools by reducing execution time and enhancing scalability.

Types of parallel processing based on how tasks are executed concurrently:

- Single Instruction, Multiple Data (SIMD): SIMD is a method that processes multiple data simultaneously just by enabling a single instruction. In this method, you apply the same operation on multiple data items at the same time. SIMD is often used in loops to perform more work per iteration.

- Multiple Instruction, Multiple Data (MIMD): MIMD is a parallel computing method where multiple processors execute different instructions on different datasets simultaneously. It is ideal for application areas such as computer-aided manufacturing, simulation, or modeling.

- Single Program, Multiple Data (SPMD): SPMD is similar to MIMD; the only difference is that the same program or set of instructions is executed on different data simultaneously on multiple processors. This type of parallelism is helpful in large-scale simulations, such as climate modeling.

- Multiple Program, Multiple Data (MPMD): MPMD parallel processing helps you execute different program logic on various datasets simultaneously. Each processor has its own program, which generates an instruction stream, and each instruction operates on other data. MPMD is suitable for tasks like object detection and navigation.

Benefits of Using Parallel Processing for ETL Optimization

There are several benefits of using parallel processing to optimize the ETL process. Some of them are:

- Fast Execution Time: With parallel processing, your ETL systems can run multiple tasks simultaneously, reducing the time needed to complete the ETL pipeline. By executing extraction, transformation, and loading tasks concurrently, systems can handle large data efficiently.

- Resource Optimization: Parallel processing optimizes resource utilization by dividing tasks across available processors and nodes. This distribution ensures that resources work to their full potential, helps balance workloads, and improves the capacity of ETL systems without overloading a single node.

- Scalability: As the data volume grows, additional computational resources are required to handle the increased load. Parallel processing allows ETL systems to scale by inducing more processors or nodes to distribute tasks across multiple units evenly.

Techniques to Implement Parallel Processing in ETL Workflows

To maximize ETL performance and optimize data handling, you can implement any of the following parallel processing techniques. These techniques can be used to split and process your organization’s workloads efficiently.

Data Partitioning

Data partitioning is a technique that allows you to split larger datasets into smaller, more manageable chunks of data based on specific criteria. You can individually process each chunk of data and parallelly run tasks across multiple nodes. This method is widely used for both data extraction and transformation.

Some of the data partitioning techniques that you can optimize for parallel processing include range partitioning, key-based partitioning, hash partitioning, round-robin partitioning, and pipelining. For example, you can partition transaction data by the range of amounts and apply different logic to each range.

Pipeline Processing

Pipeline processing is also known as pipelining. It helps you organize tasks into sequences where the output of one operation is used as the input for the next. This method is similar to the assembly line, where each station performs a specific task on a partially assembled product.

In an ETL workflow, pipelining facilitates parallel loading, where data is passed from one stage to another. The data flows continuously through each stage, which is advantageous for real-time data processing and minimizing delays. This method improves throughput and reduces latency.

Batching

Batching is a parallel processing technique used to process large amounts of data by dividing them into batches. Instead of processing data records individually, these batches are processed simultaneously, reducing overhead costs and optimizing resource usage.

By processing data in batches, ETL systems can perform operations more efficiently, enabling parallel execution of multiple tasks across different servers. Batching is useful in scenarios where data is collected over a period of time, such as on a daily or hourly basis, and processed at scheduled intervals. This method helps reduce idle times, optimize computing resources, and make processing tasks more scalable.

Example of Optimizing ETL Process Using Parallel Processing and SQL

Modern database systems are optimized to handle large volumes of data efficiently, especially during batch processing when server activity is low. It allows full use of hardware resources, and you can divide data into smaller chunks to process them simultaneously. This enables the ETL systems to maximize their processing power.

How Data is Processed in ETL WorkFlows:

The ETL workflow follows these steps to load data into any central repository:

- Validate: Ensure the data meets the required format.

- Filter: Removing irrelevant or duplicate data as needed to ensure only necessary information is retained for analysis.

- Transforming: Change data into usable format and structure to user-defined rules, making it more ready for the target system.

- Moving to Production: Transferring the processed data to its final storage area.

Each record must pass certain checks, including business rules, checking other tables, joining data, or adding missing information. Processing these tasks record-by-record takes time. To increase the efficiency of large-scale ETL operations, parallel processing can be introduced as an advanced stage, where multiple records and tasks are processed simultaneously. In addition to this, optimizing SQL queries for techniques like data partitioning and materialized view is even more valuable, as they help reduce processing time further.

Here is an example of how you can implement ETL Parallel Processing:

Let’s assume the incoming data is in CSV file format. For each CSV file loaded into the staging table, you can divide it into manageable chunks, creating a separate thread for each chunk. Each thread processes a subset of data. The data is transferred from the staging area to a temporary table where you can apply business rules for validation and transformation.

For each chunk thread, execute data validation and transformation tasks in parallel, such as checking for null values or formatting inconsistencies. You can check each thread's progress using the ThreadStatus parameter.

To maximize processing performance, you can apply SQL set-based operations to execute validation and transformation. SQL queries allow you to perform these tasks in a single statement, which is much faster and more efficient. For example:

In the above SQL query, the program sets the RecordStatus of the thread to 3 for all records that don’t meet certain conditions. The WHERE clause checks the fields, such as firstname, DOB, and City, have null values. The condition is met if any of these fields have a NULL value. RecordStatus is set to 3, marking these records as incomplete or invalid.

After your data is validated and transformed, you can load threads into the production table. When transferring data from staging to production, you can implement batch processing, which allows you to load data in bulk and reduces the number of operations. The bulk parallel loading improves database write speed.

Challenges of Parallel Processing in ETL

- Complexity: To manage tasks like parallel loading, you might have to implement a complex architecture so that the data coordination and synchronization are appropriate. Ensuring all parallel tasks work together simultaneously can be difficult, especially as the number of dependencies increases.

- Data Quality and Integrity Risks: As different data is processed separately over different processors or nodes, it becomes difficult to ensure data quality and integrity. The risk of inconsistencies or overlapping data can increase. For example, if one node processes outdated data or encounters errors, those issues can propagate throughout the pipeline.

- Security Concerns: Distributing tasks across multiple servers in a parallel processing environment exposes data to potential security vulnerabilities. The data can be susceptible to breaches or unauthorized access, requiring an additional layer of security to be saved from threats.

Leveraging Airbyte for Efficient ETL Workflows and Parallel Processing

Airbyte is an AI-powered robust data migration tool that allows you to transfer data between different sources and destinations. With its easy-to-use interface and library of 400+ pre-built connectors, Airbyte enables you to consolidate datasets from the source into your desired destinations, including databases, data lakes, or APIs.

You can utilize its no-code Connector Builder or Low-Code Connector Development kit (CDK) to develop custom connectors quickly. It has also introduced an AI assistant within the Connector Builder feature, which speeds up the process of building new connectors and configuring the existing ones. The assistant reads the API documentation and pre-fills the configuration fields automatically.

Airbyte supports both ETL and ELT workflows and offers some features to optimize your data pipelines through parallel processing:

1. Using Pre-Built Connectors for MPP Databases

Airbyte’s pre-built connectors help you extract data from various sources and load it into MPP databases such as Amazon Redshift and Snowflake. Once your data is loaded into these databases, you can leverage their parallel processing capabilities to implement various operations simultaneously over multiple nodes.

2. Data Partitioning in Connector Builder

When building a custom connector in Airbyte using Connector Builder, you can implement data partitioning. This technique allows you to break down your incoming data into manageable chunks. Data partitioning can be achieved by configuring the Parameterized Request component within the Connector Builder.

3. Incremental Syncs

Incremental sync allows you to only pull that data from the source that has been updated or modified since the last sync. This reduces the amount of data transferred or processed. You can enable this feature while configuring your source connector in Airbyte, optimizing the sync process by focusing on changes instead of replicating entire datasets.

4. Multithreading in Python Environment with PyAirbyte

Multithreading is a technique that allows a program to run multiple tasks concurrently. Using PyAirbyte, a Python library for building ETL pipelines, you can import Python’s threading module to create and run multiple threads simultaneously. You can also import the IPython parallel framework to set up and execute tasks on various nodes connected to a network.

5. Batch Processing in Airbyte Cloud

Airbyte uses a batch processing approach to move data from source to destination. This helps you to optimize your data pipeline, especially when transferring large amounts of data, as data is grouped together, optimizing resources and increasing speed.

6. Scaling Airbyte after Installing Airbyte-Self-Managed Version

The Self-Managed version allows you to deploy Airbyte locally or in your own infrastructure. Once you have installed Airbyte, you can scale the setup to adapt to the growing data needs. You can implement concurrent syncs and also rightsize Airbyte deployment by configuring the limit to the number of syncs that can be run at the same time.

Conclusion

Integrating parallel processing into ETL workflows can significantly improve performance of your systems and reduce processing time. Using techniques like data partitioning, pipeline processing, or batching, your organization can process and implement parallel loading of datasets, speeding up the entire workflow.