Best Practices for Hybrid Cloud AI Infrastructure Deployment

✨ AI Generated Summary

Hybrid cloud AI infrastructure enables secure, compliant AI workloads by keeping sensitive data local while leveraging cloud orchestration and elastic GPU compute. Key best practices include:

- Maintaining data sovereignty by processing regulated data on-premises or within jurisdictional boundaries.

- Centralizing orchestration via a managed control plane to unify job scheduling and policy enforcement.

- Integrating identity management and secrets handling across environments for consistent security and auditability.

- Designing elastic compute strategies to balance steady on-prem workloads with cloud bursting for peak demand.

- Implementing continuous compliance monitoring and disaster recovery testing to ensure regulatory adherence and resilience.

- Using unified data movement layers and AI-ready pipelines to reduce data silos and improve model quality.

Airbyte Enterprise Flex exemplifies this approach by orchestrating pipelines from the cloud while keeping sensitive data and credentials within private environments, supporting compliance with HIPAA, GDPR, and SOC 2, and enabling seamless hybrid deployments with consistent tooling and connectors.

Cloud AI gives you instant GPU access, but every data transfer and compliance requirement creates new risks. Your sensitive training data can't leave regulated environments, yet you need cloud-scale compute and orchestration.

Healthcare teams face strict constraints around moving patient records to public clouds under HIPAA compliance. Financial services encounter similar barriers with customer data under GDPR. Manufacturing companies need AI insights but can't risk exposing proprietary processes. Meanwhile, your team juggles disconnected tools across environments, watching costs spiral from unused GPU instances and data transfer fees.

Fragmented pipelines strip context from unstructured data just when your models need it most. As a result, projects that work in development fail in production, or never leave the pilot phase.

The practices below show you how to run AI workloads locally while using cloud orchestration, keeping sensitive data under your control without sacrificing performance or automation capabilities.

What Are The Best Practices for Hybrid Cloud AI Infrastructure?

Hybrid cloud deployment only pays off when you translate theory into day-to-day engineering habits. The eight practices below come from teams that already run regulated workloads across both on-premises clusters and public clouds.

1. Keep Sensitive Data Local

Regulated data should never cross the boundary of your private data plane. By running extraction and loading jobs inside the same VPC or even the same rack, you eliminate the "credential hop" that often leaks keys or temporary buffers.

Hospitals provide a clear pattern: they train clinical language models inside HITRUST-certified environments so that electronic health records remain on-prem while less sensitive inference traffic can burst to cloud GPUs. That approach upholds data sovereignty, the legal principle that data is governed by the laws of the country where it physically sits.

Knowing the geographic region or jurisdiction where a dataset lives helps satisfy rules like GDPR or HIPAA. Data sovereignty requirements become increasingly critical as AI workloads span multiple jurisdictions.
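One way to make the residency rule enforceable is to check every job against a policy table before it is scheduled. The sketch below is a minimal illustration, not a prescribed implementation: the classification labels, region names, and the `can_run_in` helper are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy table: classification -> regions where processing is allowed.
# None means the classification carries no regional restriction.
ALLOWED_REGIONS = {
    "phi": {"onprem-us-east"},
    "eu_personal": {"onprem-eu", "cloud-eu-central"},
    "public": None,
}


@dataclass(frozen=True)
class Dataset:
    name: str
    classification: str
    home_region: str


def can_run_in(dataset: Dataset, target_region: str) -> bool:
    """Return True only if policy allows processing this dataset in target_region."""
    if dataset.classification not in ALLOWED_REGIONS:
        return False  # unknown classifications are denied by default
    allowed = ALLOWED_REGIONS[dataset.classification]
    return allowed is None or target_region in allowed


ehr = Dataset("ehr_notes", "phi", "onprem-us-east")
print(can_run_in(ehr, "onprem-us-east"))  # on-prem training is allowed
print(can_run_in(ehr, "cloud-us-east"))   # bursting raw PHI to cloud is denied
```

Denying unknown classifications by default means a dataset nobody has labeled yet cannot leave the private data plane by accident.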

2. Centralize Orchestration in a Managed Control Plane

A single control plane gives you one place to schedule jobs, push connector updates, and enforce global policies. This beats coordinating ad-hoc scripts across data teams that rarely talk to each other.

Central orchestration also removes ambiguity about who owns production incidents, a gap that derails many pilots before they reach production.
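The split between a central scheduler and local execution can be sketched in a few lines. This is a toy model under stated assumptions, not how any particular product works: `ControlPlane`, `DataPlaneWorker`, and `JobSpec` are hypothetical names, and the in-memory queue stands in for a real API that workers would poll.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class JobSpec:
    job_id: str
    source: str
    secret_ref: str  # name of a secret, never the secret value


@dataclass
class ControlPlane:
    """Holds schedules and status only; it never sees rows or credentials."""
    queue: deque = field(default_factory=deque)
    status: dict = field(default_factory=dict)

    def schedule(self, spec: JobSpec) -> None:
        self.queue.append(spec)
        self.status[spec.job_id] = "queued"

    def next_job(self) -> Optional[JobSpec]:
        return self.queue.popleft() if self.queue else None

    def report(self, job_id: str, state: str, row_count: int) -> None:
        # Only metadata flows back: a state and a row count.
        self.status[job_id] = f"{state} ({row_count} rows)"


class DataPlaneWorker:
    """Runs inside the private environment and resolves secrets locally."""

    def __init__(self, local_secrets: dict, local_sources: dict):
        self._secrets = local_secrets
        self._sources = local_sources
        self.loaded = []  # stand-in for a local destination

    def run_once(self, control_plane: ControlPlane) -> bool:
        spec = control_plane.next_job()
        if spec is None:
            return False  # nothing to do
        if spec.secret_ref not in self._secrets:
            control_plane.report(spec.job_id, "failed", 0)
            return True
        rows = self._sources.get(spec.source, [])
        self.loaded.extend(rows)  # data stays on the worker side
        control_plane.report(spec.job_id, "succeeded", len(rows))
        return True
```

The worker pulls work rather than receiving pushes, so the private environment only needs outbound connectivity to the control plane in this model.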

3. Bring Data Security and Identity Management Together

Hybrid architectures only work when every environment honors the same identity and secrets policies. Plugging Kubernetes clusters into enterprise SSO with RBAC tiers means you can grant a data scientist read access to a staging connector without touching production keys.

Pair that with a secrets manager such as AWS Secrets Manager or HashiCorp Vault, and every credential change can be logged for auditors if audit logging is properly configured. This becomes critical when workloads span clouds with different baseline controls.

This scheme closes the multi-environment visibility gaps that contribute to project failures.
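As a rough illustration of tiered access with an audit trail, the sketch below checks a role against per-environment grants and records every decision. The role names, the grant table, and the in-memory `audit_log` list are assumptions made for the example; a real deployment would delegate these to the SSO provider, Kubernetes RBAC, and the secrets manager's own audit facilities.

```python
from datetime import datetime, timezone

# Hypothetical grants: role -> set of (environment, action) pairs.
ROLE_GRANTS = {
    "data_scientist": {("staging", "read")},
    "platform_admin": {
        ("staging", "read"), ("staging", "write"),
        ("production", "read"), ("production", "write"),
    },
}

audit_log = []  # stand-in for an append-only audit sink


def is_allowed(user: str, role: str, environment: str, action: str) -> bool:
    """Check a request against the grant table and record the decision."""
    allowed = (environment, action) in ROLE_GRANTS.get(role, set())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "environment": environment,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(is_allowed("ana", "data_scientist", "staging", "read"))     # granted
print(is_allowed("ana", "data_scientist", "production", "read"))  # denied, still logged
```

Logging denials as well as grants is a deliberate choice here: auditors usually want to see attempted access, not just successful access.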

4. Design for Elastic Compute and Regional Scalability

GPU demand is spiky. Let on-prem clusters handle steady-state training, then burst to cloud capacity when experiments need extra memory. Containerized workloads on Kubernetes keep the move transparent, and pinning the same driver versions across both sites helps keep results from diverging between environments.

This elasticity addresses the growing trend toward GPU-as-a-service usage. It also prevents the orphaned clusters that inflate bills when teams book resources without oversight, a pattern that contributes to the coordination failures plaguing enterprise AI initiatives.
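The "steady on-prem, burst to cloud" rule can be expressed as a simple placement function. The version below is a deliberately naive greedy sketch with hypothetical names; a production scheduler would also weigh data locality, cost, and priority.

```python
def place_jobs(jobs, onprem_gpus, max_cloud_gpus):
    """Place each (name, gpus_needed) job on-prem first, then in the cloud.

    Jobs that fit neither the remaining on-prem capacity nor the remaining
    cloud budget are marked "queued". Returns a dict of name -> placement.
    """
    onprem_free = onprem_gpus
    cloud_free = max_cloud_gpus  # a hard cap keeps bursting from becoming unbounded
    placement = {}
    for name, gpus_needed in jobs:
        if gpus_needed <= onprem_free:
            onprem_free -= gpus_needed
            placement[name] = "onprem"
        elif gpus_needed <= cloud_free:
            cloud_free -= gpus_needed
            placement[name] = "cloud"
        else:
            placement[name] = "queued"
    return placement


jobs = [("nightly-train", 4), ("eval", 4), ("sweep", 8), ("big-sweep", 8)]
print(place_jobs(jobs, onprem_gpus=8, max_cloud_gpus=8))
```

With 8 on-prem GPUs and a cloud cap of 8, the first two jobs fill the on-prem cluster, the third bursts to the cloud, and the fourth waits rather than exceeding the cap.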

5. Establish a Single Data Movement Layer

Moving data through a managed pipeline is safer than copying it with ad-hoc scripts, provided the pipeline captures lineage and schema history. Change-data-capture (CDC) replication preserves the order of changes, and external audit logs help demonstrate that nothing was modified in transit.

Modern data orchestration platforms handle these pipelines from the cloud while running connectors inside your VPC, ensuring credentials never leave your walls. Enterprise-grade data movement solutions provide this capability at scale.

A unified data layer dissolves the silos that otherwise stall AI projects in fragmented warehouses.
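To see why ordering matters for CDC, consider a replica that applies change events by log position. The sketch below assumes each event carries a monotonically increasing `lsn` (log sequence number), an `op`, a `key`, and a `row`; these field names are illustrative rather than any specific tool's wire format, and sorting only restores order within a single batch.

```python
def apply_changes(replica, events, last_applied_lsn=0):
    """Apply CDC events to a dict-based replica in log order.

    Events at or below last_applied_lsn are skipped, so replaying a batch
    after a retry does not apply the same change twice.
    Returns the highest LSN applied.
    """
    for event in sorted(events, key=lambda e: e["lsn"]):
        if event["lsn"] <= last_applied_lsn:
            continue  # already applied
        if event["op"] == "delete":
            replica.pop(event["key"], None)
        else:  # "insert" and "update" both upsert the row
            replica[event["key"]] = event["row"]
        last_applied_lsn = event["lsn"]
    return last_applied_lsn


replica = {}
batch = [
    {"lsn": 3, "op": "delete", "key": "p1", "row": None},
    {"lsn": 1, "op": "insert", "key": "p1", "row": {"status": "new"}},
    {"lsn": 2, "op": "update", "key": "p1", "row": {"status": "seen"}},
    {"lsn": 4, "op": "insert", "key": "p2", "row": {"status": "new"}},
]
checkpoint = apply_changes(replica, batch)
print(replica, checkpoint)
```

Applied in arrival order, the delete for `p1` would run before its insert and the row would wrongly survive; applied in log order, only `p2` remains.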

6. Enable Continuous Compliance and Monitoring

Regulations don't pause for quarterly reviews. Capture lineage, access events, and transformation metadata in real time, then feed that into automated SOC 2, HIPAA, or DORA reports.

Monitoring cross-region transfers for anomalies can spot policy violations before auditors do. This is increasingly important because many organizations report bandwidth constraints, and congested links can make illicit traffic harder to spot.

Continuous evidence collection closes the compliance gap exposed when data hops between jurisdictions.
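A first pass at transfer monitoring can be as simple as comparing each transfer record against an allowlist of routes and a volume threshold. The record fields, region names, and threshold below are assumptions for illustration; real anomaly detection would typically use baselines rather than a fixed limit.

```python
# Hypothetical allowlist of (source_region, destination_region) routes.
ALLOWED_ROUTES = {
    ("eu-central", "eu-central"),
    ("eu-central", "eu-west"),
    ("us-east", "us-east"),
}


def find_violations(transfers, allowed_routes, max_bytes):
    """Return (transfer_id, reason) pairs for transfers that break policy."""
    violations = []
    for transfer in transfers:
        route = (transfer["src"], transfer["dst"])
        if route not in allowed_routes:
            violations.append((transfer["id"], "route_not_allowed"))
        elif transfer["bytes"] > max_bytes:
            violations.append((transfer["id"], "volume_over_threshold"))
    return violations


transfers = [
    {"id": "t1", "src": "eu-central", "dst": "eu-west", "bytes": 10_000},
    {"id": "t2", "src": "eu-central", "dst": "us-east", "bytes": 500},
    {"id": "t3", "src": "us-east", "dst": "us-east", "bytes": 9_000_000_000},
]
print(find_violations(transfers, ALLOWED_ROUTES, max_bytes=1_000_000_000))
```

Running a check like this on every transfer event, rather than in a quarterly review, is what turns the policy into continuous evidence.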

7. Optimize for AI-Ready Data Pipelines

Models fail fast on dirty data. Standardize on open formats such as Parquet files or Iceberg tables to reduce conversion overhead, making it easier for downstream feature stores to ingest data with minimal integration effort.

Automate schema evolution and keep unstructured context like PDFs, call transcripts, and log lines alongside structured rows. Retrieval-augmented generation depends on that richness.

This directly tackles the poor-quality data and bias issues that contribute to AI project failures across the industry.
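Automated schema evolution usually means accepting safe changes and rejecting risky ones. The sketch below treats schemas as plain dicts of column name to type name and uses a small, hypothetical set of allowed widenings; real table formats define their own promotion rules, so check the format's specification before relying on any of these.

```python
# Assumed safe promotions for this example only: (old_type, new_type).
SAFE_WIDENINGS = {("int", "long"), ("float", "double")}


def evolve_schema(current: dict, incoming: dict) -> dict:
    """Merge an incoming schema into the current one.

    New columns are added, safe widenings are applied, and columns missing
    from the incoming schema are kept. Any other type change raises ValueError.
    """
    evolved = dict(current)
    for column, new_type in incoming.items():
        old_type = current.get(column)
        if old_type is None:
            evolved[column] = new_type  # additive change
        elif old_type == new_type or (new_type, old_type) in SAFE_WIDENINGS:
            continue  # same type, or incoming is narrower and already fits
        elif (old_type, new_type) in SAFE_WIDENINGS:
            evolved[column] = new_type  # widen the existing column
        else:
            raise ValueError(
                f"incompatible change for {column!r}: {old_type} -> {new_type}"
            )
    return evolved


current = {"patient_id": "int", "score": "float"}
incoming = {"patient_id": "long", "score": "float", "transcript": "string"}
print(evolve_schema(current, incoming))
```

Failing loudly on an incompatible change is the point: a pipeline that silently coerces types is one of the ways dirty data reaches a model.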

8. Test Disaster Recovery Across Environments

Failover drills reveal whether metadata, checkpoints, and secrets really sync between on-prem and cloud clusters. Define recovery-time (RTO) and recovery-point (RPO) objectives, then simulate a region outage each quarter.

Keeping configuration as code ensures a rebuild mirrors production instead of a six-month-old snapshot. The drills matter most when network instability is routine; industry reports describe latency incidents as a growing concern.
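Scoring a drill against its objectives is simple arithmetic: the achieved recovery time is the gap between the outage and restored service, and the achieved recovery point is the gap between the last replicated checkpoint and the outage. The function and timestamps below are a hypothetical sketch of that calculation.

```python
from datetime import datetime, timedelta


def evaluate_drill(outage_start, service_restored, last_replicated_checkpoint,
                   rto, rpo):
    """Compare a failover drill's measured recovery against RTO and RPO targets."""
    actual_rto = service_restored - outage_start            # downtime
    actual_rpo = outage_start - last_replicated_checkpoint  # window of lost work
    return {
        "actual_rto": actual_rto,
        "actual_rpo": actual_rpo,
        "rto_met": actual_rto <= rto,
        "rpo_met": actual_rpo <= rpo,
    }


result = evaluate_drill(
    outage_start=datetime(2025, 1, 15, 10, 0),
    service_restored=datetime(2025, 1, 15, 10, 45),
    last_replicated_checkpoint=datetime(2025, 1, 15, 9, 50),
    rto=timedelta(hours=1),
    rpo=timedelta(minutes=5),
)
print(result["rto_met"], result["rpo_met"])
```

In this example the service came back within the one-hour RTO, but the last checkpoint was ten minutes old against a five-minute RPO, so the drill exposes a replication gap rather than a failover gap.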

What Pitfalls Should You Avoid?

Even with solid architecture, common mistakes can derail your deployment:

- Uncontrolled data transfers between clouds. Moving training data between clouds without clear governance creates significant risk. Each transfer multiplies compliance exposure and can strain network resources. Bandwidth constraints and latency incidents have become a growing concern for enterprises adopting multi-cloud strategies.

- Orphaned GPU clusters driving cost overruns. Teams spin up GPU clusters and vector stores ad-hoc, then forget to tear them down. This pattern emerges as a leading cause of wasted spend and fragmented initiatives. The same coordination gaps create siloed model registries, leaving you debugging drift instead of shipping features.

- Over-monitoring without response plans. Tracking every metric looks thorough, but instrumentation without response plans only increases cost and cognitive load. Focus on visibility that drives action: data lineage for auditors, GPU utilization for ops, adoption metrics for product owners.

- Letting tactical decisions outpace unified strategy. Failed pilots, legacy-system complications, and runaway budgets often share this root cause. Avoid it by enforcing policy before migration, centralizing registries, and measuring observability by decisions made, not charts rendered.
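The orphaned-cluster pitfall in particular lends itself to automation. As a minimal sketch, assuming an inventory that records each cluster's recent GPU utilization and the time its last job finished (both hypothetical fields), a scheduled check can flag teardown candidates:

```python
from datetime import datetime, timedelta


def find_orphans(clusters, now, idle_after, min_utilization=0.05):
    """Return names of clusters that look abandoned.

    A cluster is flagged when its GPU utilization is below min_utilization
    and its last job finished at least idle_after ago.
    """
    return [
        cluster["name"]
        for cluster in clusters
        if cluster["gpu_utilization"] < min_utilization
        and now - cluster["last_job_finished"] >= idle_after
    ]


now = datetime(2025, 1, 15, 12, 0)
clusters = [
    {"name": "train-a", "gpu_utilization": 0.82,
     "last_job_finished": now - timedelta(minutes=5)},
    {"name": "sweep-old", "gpu_utilization": 0.00,
     "last_job_finished": now - timedelta(days=3)},
    {"name": "eval-b", "gpu_utilization": 0.01,
     "last_job_finished": now - timedelta(hours=1)},
]
print(find_orphans(clusters, now, idle_after=timedelta(hours=24)))
```

Flagging rather than deleting keeps a human in the loop, which pairs with the advice above to tie every metric to a response plan.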

How Airbyte Enterprise Flex Enables Secure Hybrid AI Infrastructure

Airbyte Enterprise Flex orchestrates pipelines from the cloud without surrendering data control. The control plane runs in Airbyte Cloud, but extraction and loading execute inside your hosted data plane, on-prem or in any VPC. Sensitive tables and credentials never cross your boundary.

Region-locked processing keeps EU data in Frankfurt and U.S. ePHI in Virginia, meeting GDPR and HIPAA residency requirements. Built-in encryption, granular RBAC, and SOC 2 audit logs support regulatory compliance, though full DORA alignment may require further assessment or controls.

Flex shares the same codebase as Airbyte Cloud, maintaining the full feature set. The 600+ connectors run unchanged, whether you're streaming events into Snowflake, fine-tuning models on Databricks, or indexing unstructured text in a vector store. New sources deploy anywhere in hours using the low-code CDK.

Upgrades flow automatically through the control plane, eliminating patch gaps that surface when teams fork tooling for on-prem work. You get uniform APIs, Terraform modules, and audit semantics across edge, private cloud, and public GPU clusters. Pipeline behavior stays consistent as workloads shift.

What's the Takeaway for Enterprise AI Teams?

Hybrid deployment offers the safest path to production-grade AI. By keeping sensitive data local while centralizing orchestration, you avoid the coordination failures that cause enterprise AI projects to fail at alarming rates. This approach delivers the performance and automation capabilities your team needs while maintaining complete control over regulated data.

The eight practices outlined here, from data sovereignty to disaster recovery testing, provide a proven framework for teams already running successful AI workloads across multiple environments. When implemented together, they create the foundation for scaling AI initiatives without adding hybrid cloud security risks or missing vital compliance requirements.

Airbyte Enterprise Flex delivers hybrid control plane architecture with complete data sovereignty for regulated AI workloads. Talk to Sales to discuss your AI compliance requirements and regional data plane deployment.

Frequently Asked Questions

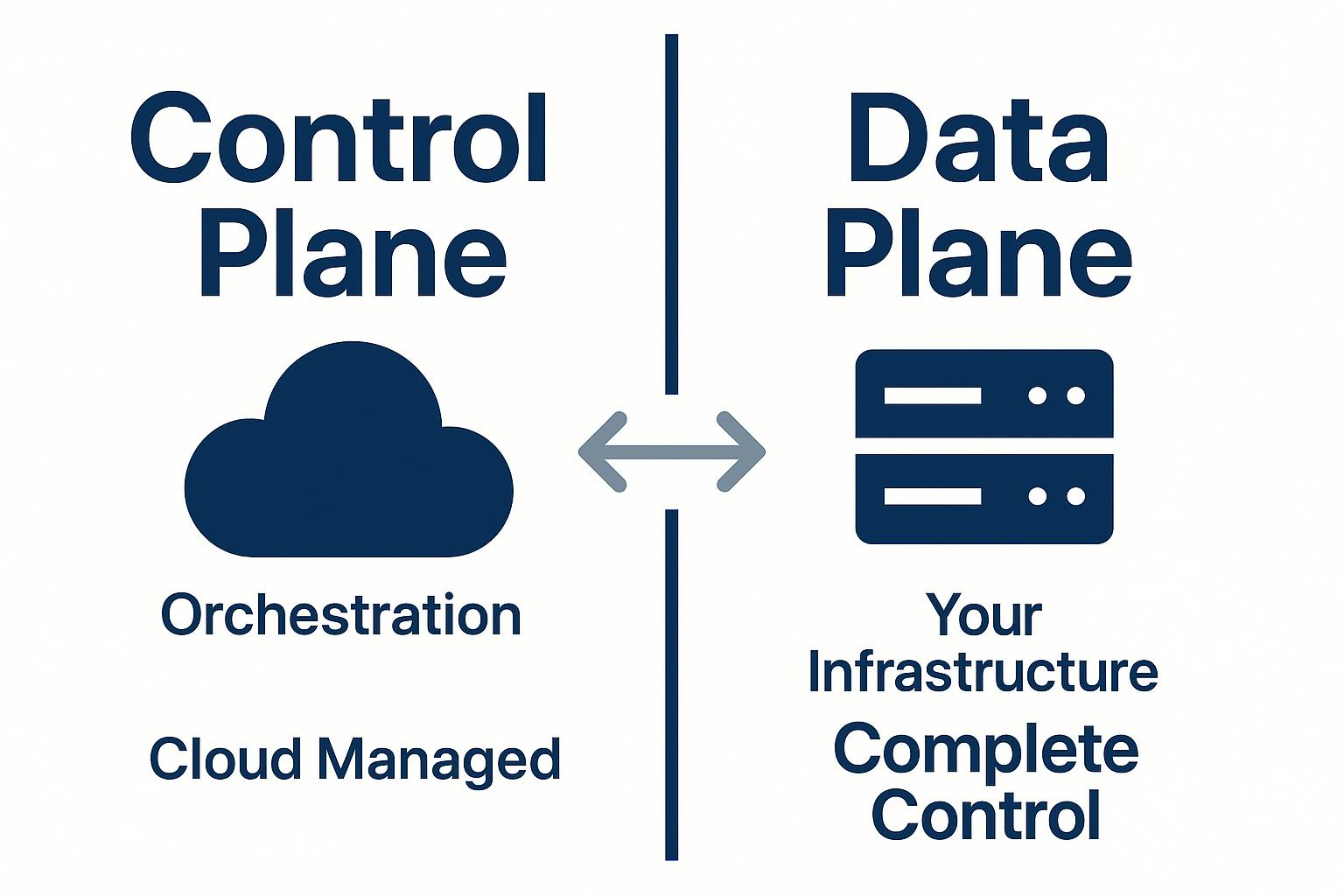

What is the difference between a control plane and a data plane in hybrid AI infrastructure?

The control plane manages orchestration, job scheduling, and pipeline configuration from a centralized location. The data plane executes the actual data movement, transformations, and processing within your private infrastructure. This separation lets you maintain data sovereignty while benefiting from managed orchestration.

How does hybrid cloud deployment help with AI compliance requirements?

Hybrid deployment keeps sensitive training data in regulated environments that meet HIPAA, GDPR, or industry-specific requirements while allowing you to use cloud GPU resources for computation. Your data never leaves your controlled infrastructure, but you still access cloud-scale orchestration and elastic compute capacity.

Can I use the same connectors across on-premises and cloud environments?

Yes. Modern data integration platforms like Airbyte use a unified codebase across deployment models. The same 600+ connectors work identically whether running in your VPC, on-premises clusters, or fully managed cloud environments, eliminating the need to rebuild integrations when moving workloads.

What are the main cost drivers in hybrid AI infrastructure?

The primary cost drivers include unused GPU instances that teams forget to tear down, data transfer fees between regions, and over-provisioned storage for model artifacts. Establishing clear governance for resource provisioning, automating cluster teardown, and using a single data movement layer helps control these costs.
