How to Optimize ETL to Reduce Cloud Data Warehouse Costs?

✨ AI Generated Summary

Your business can significantly reduce cloud data warehouse costs by optimizing ETL pipelines using Airbyte's features. Key strategies include:

- Using PyAirbyte for local data caching and processing to minimize expensive warehouse compute and storage costs.

- Implementing batch processing and incremental loading (with Change Data Capture) to reduce data transfer volumes and network overhead.

- Applying advanced data filtering, deduplication, and resumable full refreshes to lower storage and processing expenses.

- Leveraging AI-powered automation for schema management, anomaly detection, and predictive performance optimization to enhance pipeline efficiency.

- Employing multi-cloud workload distribution and flexible sync scheduling to optimize costs and avoid vendor lock-in.

- Utilizing comprehensive monitoring and automated cost controls to prevent budget overruns and maintain operational reliability.

Your business generates data from multiple sources, including databases, SaaS applications, social media, IoT devices, and more. Many organizations discover that their ETL processes have become significant cost drivers, consuming excessive compute resources and generating unnecessary data movement charges that can quickly exceed budget projections. The challenge isn't just moving data efficiently; it's building cost-aware pipelines that scale with your business while maintaining performance and data quality.

Consolidating this information into a cloud data warehouse provides a single source of truth for business intelligence and reporting, leading to better decision-making. Keeping the costs of that warehouse under control is essential to a sustainable data strategy.

A key factor in controlling these costs is optimizing the ETL pipelines you use to extract, transform, and load data into your warehouse. An inefficient ETL pipeline can cause excessive data transfers and redundant storage, both of which increase expenses. By improving your ETL processes, you can boost performance and lower costs without compromising data quality.

In this post, let's look at how Airbyte helps you streamline your ETL pipelines and reduce cloud data warehouse costs.

How Does PyAirbyte Enable Cost-Effective Data Processing?

Airbyte's open-source Python library, PyAirbyte, lets you extract data from a wide range of sources using Airbyte connectors directly within your Python environment. Loading raw data straight into a cloud data warehouse can drive up storage and compute costs. With PyAirbyte, you can first load data into a SQL cache such as DuckDB (the default), Postgres, or Snowflake.

Cached data can be used with pandas, SQL tools, and LLM frameworks such as LlamaIndex and LangChain, so you can transform raw data to fit your specific requirements before loading it into your data warehouse.

Local Processing Advantages

The caching approach significantly reduces warehouse compute costs by enabling local data processing and transformation before final loading. You can perform complex data manipulations, quality checks, and business logic applications within the cached environment, minimizing expensive warehouse operations.

This preprocessing capability allows you to optimize data structures, eliminate unnecessary columns, and apply transformations that would otherwise consume substantial warehouse resources. PyAirbyte's integration with popular data science libraries enables sophisticated data analysis and validation workflows without requiring expensive cloud compute resources.

You can leverage local processing power for exploratory data analysis, data quality assessment, and transformation logic development before committing resources to warehouse operations.
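To make the "cache locally, reduce, then load" pattern concrete, here is a minimal sketch. PyAirbyte's own reads land in a local DuckDB cache by default; to keep the example runnable without installing connectors, it uses Python's built-in `sqlite3` as a stand-in cache, and the `orders` table and its rows are hypothetical.

```python
import sqlite3

# Hypothetical raw extract; with PyAirbyte this would come from a connector read.
# Columns: (order_id, customer_id, amount, status, raw_payload)
RAW_ORDERS = [
    (1, "c1", 120.0, "paid", '{"big": "json blob"}'),
    (2, "c1", 80.0, "refunded", '{"big": "json blob"}'),
    (3, "c2", 200.0, "paid", '{"big": "json blob"}'),
    (4, "c3", 50.0, "paid", '{"big": "json blob"}'),
    (5, "c2", 30.0, "paid", '{"big": "json blob"}'),
]


def preprocess_locally(rows):
    """Cache raw rows in a local SQL engine and return only the compact
    result that is worth loading into the warehouse."""
    con = sqlite3.connect(":memory:")  # stand-in for a local DuckDB cache
    con.execute(
        "CREATE TABLE orders (order_id INTEGER, customer_id TEXT, "
        "amount REAL, status TEXT, raw_payload TEXT)"
    )
    con.executemany("INSERT INTO orders VALUES (?, ?, ?, ?, ?)", rows)
    # Drop the unused payload column, filter out refunds, and aggregate
    # per customer before anything touches warehouse compute.
    result = con.execute(
        "SELECT customer_id, SUM(amount) AS revenue, COUNT(*) AS orders "
        "FROM orders WHERE status = 'paid' "
        "GROUP BY customer_id ORDER BY customer_id"
    ).fetchall()
    con.close()
    return result


summary = preprocess_locally(RAW_ORDERS)
print(summary)
```

Only the aggregated rows would be loaded, instead of every raw row with its unused payload column; on real volumes, that reduction is where the warehouse compute and storage savings come from.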

Why Is Batch Processing More Cost-Effective Than Individual Record Processing?

Airbyte follows a batch processing approach to replicate data from sources to a destination, which involves grouping multiple records together and processing them as batches. This method reduces the network overhead associated with processing individual records. By handling data in larger chunks, Airbyte minimizes the number of data transfer operations, leading to lower data transfer expenses.

Resource Utilization Optimization

Batch processing optimizes resource utilization by amortizing connection establishment costs across multiple records. Each database connection and API call involves overhead costs in terms of network latency, authentication, and resource allocation.

By processing records in batches, these fixed costs are distributed across many records rather than incurred for each individual operation. The approach also enables better compression and encoding optimizations during data transfer.
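The amortization argument is simple arithmetic. The sketch below assumes a cost model with a fixed overhead per call plus a per-record cost (both in made-up units, not any provider's pricing) and counts calls for different batch sizes.

```python
from typing import Iterable, Iterator, List, Tuple, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of at most batch_size records, preserving order."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    batch: List[T] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly partial, batch
        yield batch


def transfer_cost(
    n_records: int, batch_size: int, fixed_cost_per_call: int, cost_per_record: int
) -> Tuple[int, int]:
    """Return (calls, total_cost) when each call pays a fixed overhead
    (connection, auth, round trip) plus a per-record cost.
    Costs are in arbitrary, hypothetical units."""
    calls = sum(1 for _ in batched(range(n_records), batch_size))
    return calls, calls * fixed_cost_per_call + n_records * cost_per_record


for size in (1, 100, 500):
    print(size, transfer_cost(10_000, size, fixed_cost_per_call=50, cost_per_record=1))
```

With these assumed numbers, going from one record per call to batches of 500 cuts the number of calls from 10,000 to 20. The per-record cost is unchanged, so the savings come entirely from the fixed overhead.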

Enhanced Compression Benefits

Larger batches can leverage more efficient compression algorithms that work better with substantial data volumes, reducing network bandwidth requirements and associated transfer costs. Modern compression techniques achieve better ratios when applied to larger datasets, translating to measurable cost savings in cloud environments where data transfer costs can be significant.
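You can check the compression effect locally with the standard library. This sketch compresses 1,000 hypothetical, similarly shaped JSON records with `zlib`, once record by record and once as a single newline-delimited batch. Exact byte counts depend on the data, but the batched payload is consistently far smaller for records like these.

```python
import json
import zlib

# Hypothetical records with the repetitive shape typical of database or API extracts.
records = [
    {"id": i, "status": "active", "country": "US", "plan": "pro", "score": i % 7}
    for i in range(1_000)
]

# One compressed payload per record: each pays zlib's header/checksum
# overhead and has almost no repetition to exploit.
individually = sum(len(zlib.compress(json.dumps(r).encode("utf-8"))) for r in records)

# One compressed payload for the whole batch: repeated keys and values
# across records compress well.
batch_payload = "\n".join(json.dumps(r) for r in records).encode("utf-8")
as_batch = len(zlib.compress(batch_payload))

print(f"record-by-record: {individually} bytes, batched: {as_batch} bytes")
```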

Batch processing supports more efficient resource scheduling and allocation in cloud environments. Cloud providers often offer better pricing for sustained resource usage compared to frequent short-duration operations.

How Does Incremental Loading Minimize Data Transfer Costs?

Incremental loading in Airbyte is cost-effective because it minimizes the volume of data transferred. Rather than reloading entire datasets on every run, Airbyte fetches only the records that are new or modified since the last sync. This reduces costs, since cloud data warehouses often charge based on the amount of data ingested and stored.

However, a standard incremental sync periodically queries the source for recently changed records, and such a query can only return rows that still exist. Any record deleted from the source therefore remains in the destination warehouse, taking up unnecessary storage space.
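The deletion gap is easy to reproduce. This minimal sketch assumes a cursor-based sync on a hypothetical `updated_at` column, with plain dicts standing in for the source table and the destination: updates and inserts propagate, but a deleted row never matches the cursor query.

```python
from datetime import datetime
from typing import Dict, Tuple

Row = Tuple[str, datetime]  # (name, updated_at)


def incremental_sync(
    source: Dict[int, Row], destination: Dict[int, Row], cursor: datetime
) -> Tuple[int, datetime]:
    """Copy rows whose updated_at is newer than the saved cursor.

    Returns (rows_copied, new_cursor). Like a cursor query, this only
    ever sees rows that still exist in the source.
    """
    changed = {pk: row for pk, row in source.items() if row[1] > cursor}
    destination.update(changed)
    new_cursor = max((row[1] for row in changed.values()), default=cursor)
    return len(changed), new_cursor


source = {
    1: ("alice", datetime(2024, 1, 1)),
    2: ("bob", datetime(2024, 1, 2)),
    3: ("carol", datetime(2024, 1, 3)),
}
destination: Dict[int, Row] = {}

first_copied, cursor = incremental_sync(source, destination, datetime.min)

source[2] = ("bob", datetime(2024, 1, 5))   # update
source[4] = ("dave", datetime(2024, 1, 6))  # insert
del source[3]                               # delete
second_copied, cursor = incremental_sync(source, destination, cursor)

print(first_copied, second_copied, sorted(destination))
```

The second sync copies only the two changed rows, which is the cost saving, but `carol` stays in the destination even though she no longer exists in the source.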

Change Data Capture Benefits

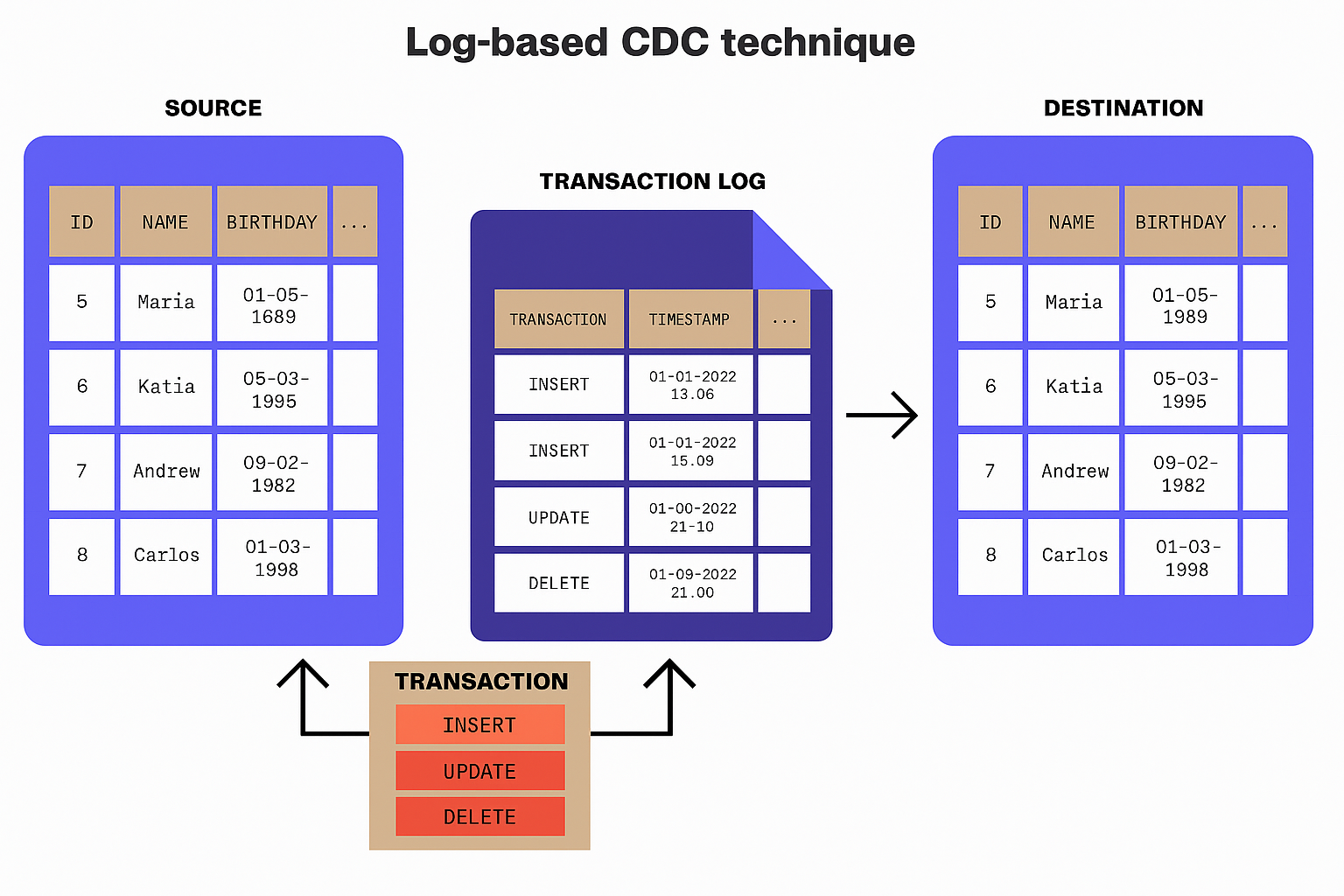

To address this issue, Airbyte also supports Change Data Capture (CDC) replication, which can be used alongside incremental replication.

Unlike standard incremental syncs, CDC tracks changes by reading the database's transaction log, so it captures every data modification, including deletions. This keeps the destination consistent with the source and avoids paying to store records that no longer exist.
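By contrast, a log-based reader replays every change, deletes included. This simplified sketch assumes change events shaped as `(log_position, operation, primary_key, row)`; real CDC connectors emit richer events, and whether a delete removes the row or only marks it as deleted depends on the destination and sync mode.

```python
from typing import Dict, List, Optional, Tuple

# (log_position, operation, primary_key, row); row is None for deletes.
ChangeEvent = Tuple[int, str, int, Optional[str]]


def apply_cdc(destination: Dict[int, Optional[str]], events: List[ChangeEvent]) -> int:
    """Replay change events in log order and return the last applied position,
    which a real pipeline would persist as its checkpoint."""
    last_position = -1
    for position, op, pk, row in sorted(events, key=lambda e: e[0]):
        if op in ("insert", "update"):
            destination[pk] = row
        elif op == "delete":
            destination.pop(pk, None)  # deletes propagate, unlike a cursor query
        else:
            raise ValueError(f"unknown operation: {op!r}")
        last_position = position
    return last_position


destination: Dict[int, Optional[str]] = {1: "alice", 2: "bob", 3: "carol"}
events: List[ChangeEvent] = [
    (101, "update", 2, "bob v2"),
    (102, "insert", 4, "dave"),
    (103, "delete", 3, None),
]
position = apply_cdc(destination, events)
print(destination, position)
```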

Scalability and Performance Improvements

Incremental loading is particularly valuable for large datasets, where full refreshes would consume substantial bandwidth and processing resources. It keeps information up to date without re-copying the entire dataset on every sync, a cost that grows with total table size rather than with the volume of changes.

This advantage becomes more pronounced as your data volumes grow. Incremental syncs also record their progress as state (for example, the latest cursor value), so each sync picks up where the previous one left off instead of reprocessing data.

What GenAI Workflow Optimizations Can Reduce Compute Costs?

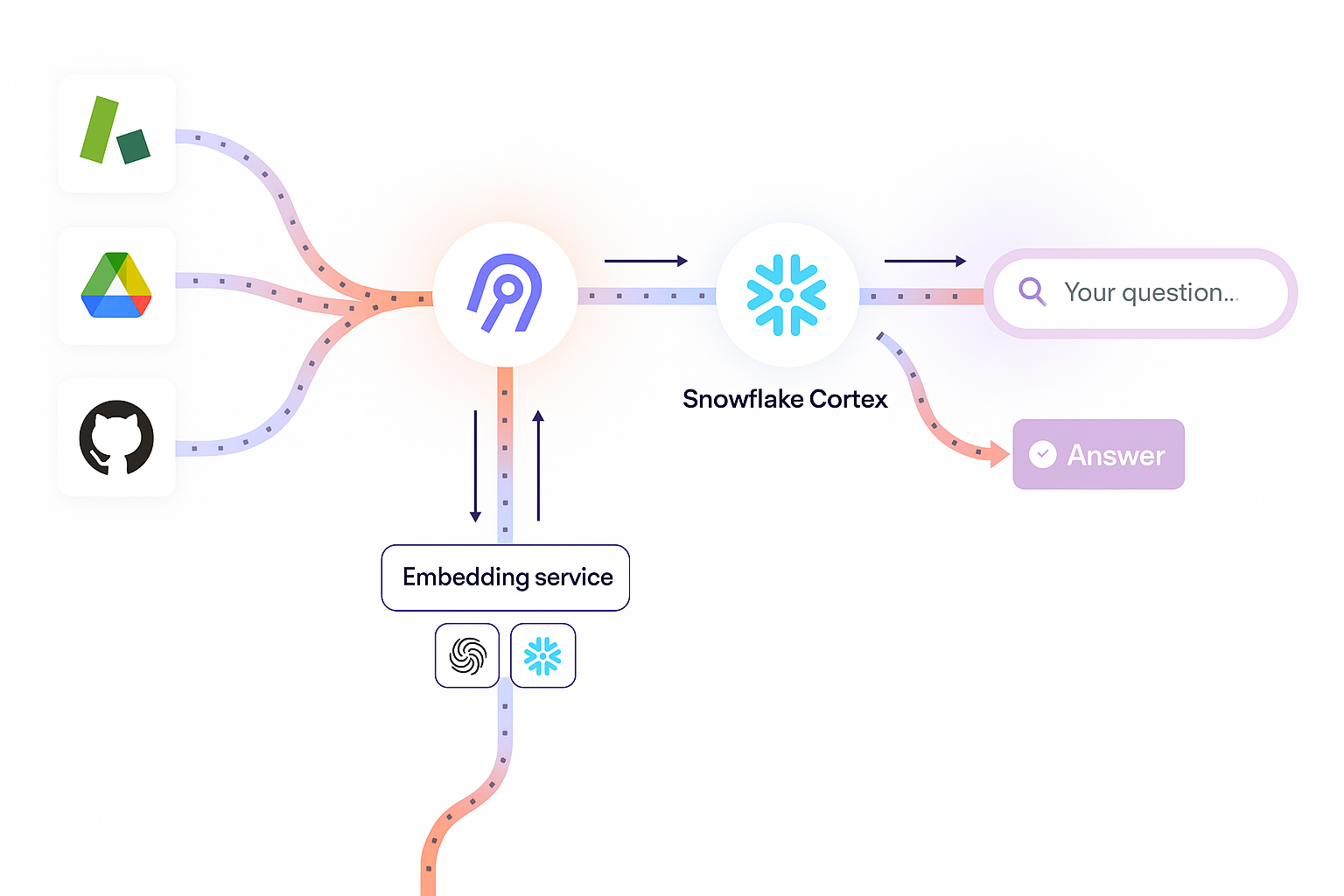

Airbyte lets you enhance your GenAI workflows by moving data into AI-enabled data platforms such as BigQuery (with Vertex AI) and Snowflake (with Cortex). For example, using Airbyte's Snowflake Cortex destination, you can create your own dedicated vector store directly within Snowflake.

Vector Store Efficiency

These vector stores support similarity search by representing data as high-dimensional embeddings, which can retrieve semantically related results that keyword-based search misses. Running that search inside the warehouse can reduce the need for complex pattern-matching queries over raw text, lowering query execution time and compute costs.
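The core operation behind similarity search is ranking stored vectors by how closely they point in the same direction as a query vector. This toy sketch uses hand-written 3-dimensional vectors as stand-ins for real embeddings and a brute-force scan. Warehouse vector features (Snowflake, for example, provides `VECTOR_COSINE_SIMILARITY`) run the same kind of comparison in SQL, and dedicated vector stores typically add approximate indexes to avoid scanning every row.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query: Sequence[float], store: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours by cosine similarity."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings"; real ones have hundreds or thousands of dimensions.
store = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "return-window": [0.8, 0.2, 0.1],
}
hits = top_k([1.0, 0.0, 0.0], store, k=2)
print(hits)
```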

The integration with AI-enabled data warehouses enables you to leverage native machine learning capabilities without requiring separate infrastructure for model training and inference. This consolidation eliminates data movement costs between different systems while taking advantage of optimized compute resources specifically designed for AI workloads.

Smart Embedding Optimization

Vector embedding generation and storage strategies within these integrated environments can be optimized for cost efficiency through intelligent caching and reuse mechanisms. Rather than regenerating embeddings for similar content, the system can identify and reuse existing embeddings, reducing the computational overhead associated with large language model operations.
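A minimal version of embedding reuse is a cache keyed on a hash of the normalized text, so the model is called only on a cache miss. This sketch catches exact duplicates after whitespace and case normalization, not merely similar content; `fake_embed` is a hypothetical stand-in for a real embedding model call.

```python
import hashlib
from typing import Callable, Dict, List

Embedding = List[float]


class EmbeddingCache:
    """Reuse embeddings for text that has already been embedded."""

    def __init__(self, embed_fn: Callable[[str], Embedding]):
        self._embed_fn = embed_fn
        self._cache: Dict[str, Embedding] = {}
        self.model_calls = 0

    @staticmethod
    def _key(text: str) -> str:
        # Collapse whitespace and lowercase so trivial variants share a key.
        normalized = " ".join(text.split()).lower()
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def embed(self, text: str) -> Embedding:
        key = self._key(text)
        if key not in self._cache:
            self.model_calls += 1  # only pay for the model on a cache miss
            self._cache[key] = self._embed_fn(text)
        return self._cache[key]


def fake_embed(text: str) -> Embedding:
    """Hypothetical stand-in for an embedding model."""
    return [float(len(text)), float(text.count(" "))]


cache = EmbeddingCache(fake_embed)
for chunk in ["Refund policy", "refund   policy", "Shipping times"]:
    cache.embed(chunk)
print(cache.model_calls)
```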

Preprocessing and data preparation workflows can be optimized to minimize the computational resources required for AI model training and inference.

How Can AI-Powered Automation Enhance ETL Pipeline Efficiency?

Modern ETL systems are increasingly leveraging artificial intelligence and machine learning to automate complex data processing tasks, optimize performance, and reduce operational overhead. AI-powered pipeline optimization represents a fundamental shift from manual, rule-based approaches toward intelligent systems that can learn, adapt, and optimize data flows automatically.

Automated Schema Management

Automated schema mapping and transformation logic generation significantly reduces the development time and expertise required to create sophisticated data integration workflows. Machine learning algorithms can analyze source and destination data structures to automatically suggest optimal mapping strategies, identify potential data quality issues, and generate transformation code that handles complex data type conversions and business logic applications.
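Before any mapping can be suggested automatically, a tool has to notice that a schema changed. The rule-based sketch below covers only that first step, comparing two hypothetical column-to-type snapshots; it involves no machine learning and is not Airbyte's schema-change implementation.

```python
from typing import Dict, List

Schema = Dict[str, str]  # column name -> type name


def diff_schema(old: Schema, new: Schema) -> Dict[str, List[str]]:
    """Classify column-level differences between two schema snapshots."""
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "type_changed": sorted(c for c in set(old) & set(new) if old[c] != new[c]),
    }


old = {"id": "integer", "email": "string", "signup_date": "date"}
new = {"id": "integer", "email": "string", "signup_date": "timestamp", "plan": "string"}
changes = diff_schema(old, new)
print(changes)
```

Added columns are often safe to propagate automatically, while removals and type changes are the cases that usually need review before they break downstream models.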

Intelligent anomaly detection capabilities monitor data flows continuously, identifying patterns and deviations that might indicate data quality issues, system failures, or security threats. These AI-powered monitoring systems can distinguish between normal data variations and genuine problems, reducing false positive alerts while ensuring that critical issues receive immediate attention.
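As a baseline for what anomaly detection automates, here is a plain statistical rule rather than a learned model: flag a sync whose row count sits more than three standard deviations from recent history. The threshold and the sample counts are assumptions; real monitoring would also account for trends and weekly seasonality.

```python
import statistics
from typing import List


def is_anomalous(history: List[int], latest: int, threshold: float = 3.0) -> bool:
    """True if `latest` is more than `threshold` sample standard deviations
    from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough history to judge
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean  # any change from a perfectly flat history
    return abs(latest - mean) / stdev > threshold


row_counts = [10_120, 9_980, 10_050, 10_210, 9_940]  # recent syncs
print(is_anomalous(row_counts, 10_100))  # normal variation
print(is_anomalous(row_counts, 1_200))   # likely a broken extract
```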

Predictive Performance Optimization

Predictive optimization algorithms analyze processing patterns, resource utilization metrics, and data characteristics to automatically adjust pipeline configurations for optimal performance and cost efficiency. These systems can predict optimal resource allocation patterns, identify bottlenecks before they impact operations, and recommend configuration changes that improve efficiency without compromising data quality or reliability.

Automated error detection and recovery mechanisms can identify pipeline failures, determine appropriate recovery strategies, and implement corrections without manual intervention. Machine learning models trained on historical failure patterns can predict likely failure scenarios and implement preventive measures that maintain system reliability while reducing operational overhead.

Natural Language Integration

Natural language processing capabilities enable business users to create and modify integration workflows using conversational interfaces, democratizing access to sophisticated data integration capabilities. Users can describe desired transformations in plain English, and the system automatically generates appropriate pipeline configurations, reducing dependence on specialized technical expertise while accelerating time-to-value for data integration projects.

What Role Does Multi-Cloud Cost Optimization Play in Modern ETL Strategies?

Multi-cloud and hybrid architecture strategies have become essential for organizations seeking to optimize costs while maintaining flexibility and avoiding vendor lock-in risks. Modern ETL systems must navigate complex pricing models across different cloud providers while leveraging best-of-breed capabilities from diverse platforms to achieve optimal cost-performance ratios.

Strategic Workload Distribution

Strategic workload placement across multiple cloud environments enables organizations to take advantage of competitive pricing for specific services while distributing risk and maintaining operational flexibility. Different cloud providers offer varying cost structures for compute, storage, and data transfer services, creating opportunities for significant cost optimization through intelligent workload distribution.

Cross-cloud data transfer optimization requires sophisticated approaches to minimize expensive inter-cloud data movement while maintaining required data availability and consistency. Organizations can implement intelligent data placement strategies that position data closer to processing resources, reduce unnecessary data replication, and optimize transfer patterns to take advantage of favorable pricing windows and volume discounts.
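Placement decisions come down to weighing compute savings against the cost of moving data. This sketch assumes a simple two-term cost model; every price in it is a hypothetical placeholder, not a quote from any provider, and real bills add storage, request, and commitment-discount terms.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Placement:
    compute_per_hour: float  # hypothetical placeholder price
    transfer_per_gb: float   # cost to move the input data to this location


def monthly_cost(p: Placement, compute_hours: float, gb_moved: float) -> float:
    return p.compute_per_hour * compute_hours + p.transfer_per_gb * gb_moved


def cheapest(options: Dict[str, Placement], compute_hours: float, gb_moved: float) -> str:
    """Name of the placement with the lowest modeled monthly cost."""
    return min(options, key=lambda name: monthly_cost(options[name], compute_hours, gb_moved))


options = {
    # Data already lives here, so no cross-cloud transfer.
    "same-cloud": Placement(compute_per_hour=4.0, transfer_per_gb=0.0),
    # Cheaper compute, but every GB crosses a cloud boundary.
    "other-cloud": Placement(compute_per_hour=3.0, transfer_per_gb=0.09),
}

print(cheapest(options, compute_hours=200, gb_moved=5_000))  # transfer-heavy job
print(cheapest(options, compute_hours=200, gb_moved=1_000))  # compute-heavy job
```

Under these assumed prices, the same job flips between locations depending on how much data has to move, which is why minimizing cross-cloud transfer matters as much as headline compute prices.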

Serverless Architecture Benefits

Serverless computing architectures across multiple cloud platforms provide elasticity that fixed-resource deployments cannot match. They scale processing resources automatically with actual workload demand, and pay-per-execution pricing ties costs to actual usage rather than provisioned capacity.

Automated resource scheduling and optimization across multi-cloud environments can identify cost-saving opportunities through temporal workload distribution. Non-critical processing tasks can be scheduled during off-peak pricing periods or distributed to regions where resources are available at reduced costs, achieving substantial savings without impacting performance requirements.

Hybrid Deployment Strategies

Hybrid deployment strategies enable organizations to maintain sensitive data processing on-premises while leveraging cloud resources for scalable analytics and processing capabilities. This approach provides cost optimization opportunities by avoiding expensive data transfer fees for sensitive information while accessing cloud-native scaling and processing capabilities for analytical workloads.

Cost monitoring and alerting systems specifically designed for multi-cloud environments provide comprehensive visibility into spending patterns across diverse platforms. These systems can identify cost anomalies, recommend optimization strategies, and automatically implement cost containment measures that prevent budget overruns while maintaining operational reliability.

How Can Flexible Sync Frequency Control Warehouse Costs?

Frequent data synchronization can impact your data warehouse costs, especially when dealing with large datasets. For example, if your source data is updated only once a day but you run syncs every hour, you incur unnecessary data transfer and compute costs.

Airbyte provides flexible synchronization scheduling options:

| Sync Type | Description | Best Use Case |
| --- | --- | --- |
| Scheduled syncs | Run every 24h, 12h, etc. | Regular batch processing |
| Cron syncs | Use cron expressions for precise timing | Complex scheduling requirements |
| Manual syncs | Trigger ad-hoc jobs through the UI | Testing and one-time migrations |

Intelligent Frequency Optimization

Intelligent sync frequency optimization based on data change patterns can significantly reduce unnecessary processing overhead. By analyzing historical data modification patterns, you can establish optimal sync schedules that balance data freshness requirements with cost efficiency.

This approach ensures that resources are consumed only when meaningful data changes have occurred. Event-driven synchronization capabilities enable more efficient data processing by triggering syncs only when source systems generate relevant changes.
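One way to ground the schedule in data is to look at how often the source actually changes. This sketch assumes you have a list of source modification timestamps and a maximum acceptable staleness; it picks the longest of a few hypothetical schedule options that fits within both. The function and the option list are illustrative, not an Airbyte feature.

```python
import statistics
from datetime import datetime, timedelta
from typing import List

# Hypothetical schedule options, mirroring "every 1h / 6h / 12h / 24h".
SCHEDULES = [timedelta(hours=h) for h in (1, 6, 12, 24)]


def suggest_interval(change_times: List[datetime], max_staleness: timedelta) -> timedelta:
    """Longest schedule no longer than both the median gap between source
    changes and the freshness the business requires."""
    ordered = sorted(change_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    # With fewer than two changes observed, rely on the freshness requirement alone.
    limit = min(statistics.median(gaps), max_staleness) if gaps else max_staleness
    eligible = [s for s in SCHEDULES if s <= limit]
    return max(eligible) if eligible else SCHEDULES[0]


# Source updated once a day at 02:00 for a week.
daily = [datetime(2024, 3, d, 2, 0) for d in range(1, 8)]
interval = suggest_interval(daily, max_staleness=timedelta(hours=24))
syncs_per_day = timedelta(days=1) / interval
print(interval, syncs_per_day)  # compare with 24 runs/day on an hourly schedule
```

For the once-a-day source described earlier, this settles on one sync per day instead of 24, and a tighter freshness requirement (say, 8 hours) would pull it down to the 6-hour option.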

Business-Aligned Scheduling

Rather than polling sources on fixed schedules, event-driven approaches respond immediately to actual data modifications, eliminating wasteful processing cycles while ensuring immediate data availability when changes occur. Business-aligned synchronization schedules can optimize costs by aligning data refresh patterns with actual business requirements.

Critical data that drives real-time decisions can be synchronized frequently, while historical or reference data can be updated on more economical schedules that match business consumption patterns.

What Data Filtering Techniques Minimize Storage and Processing Costs?

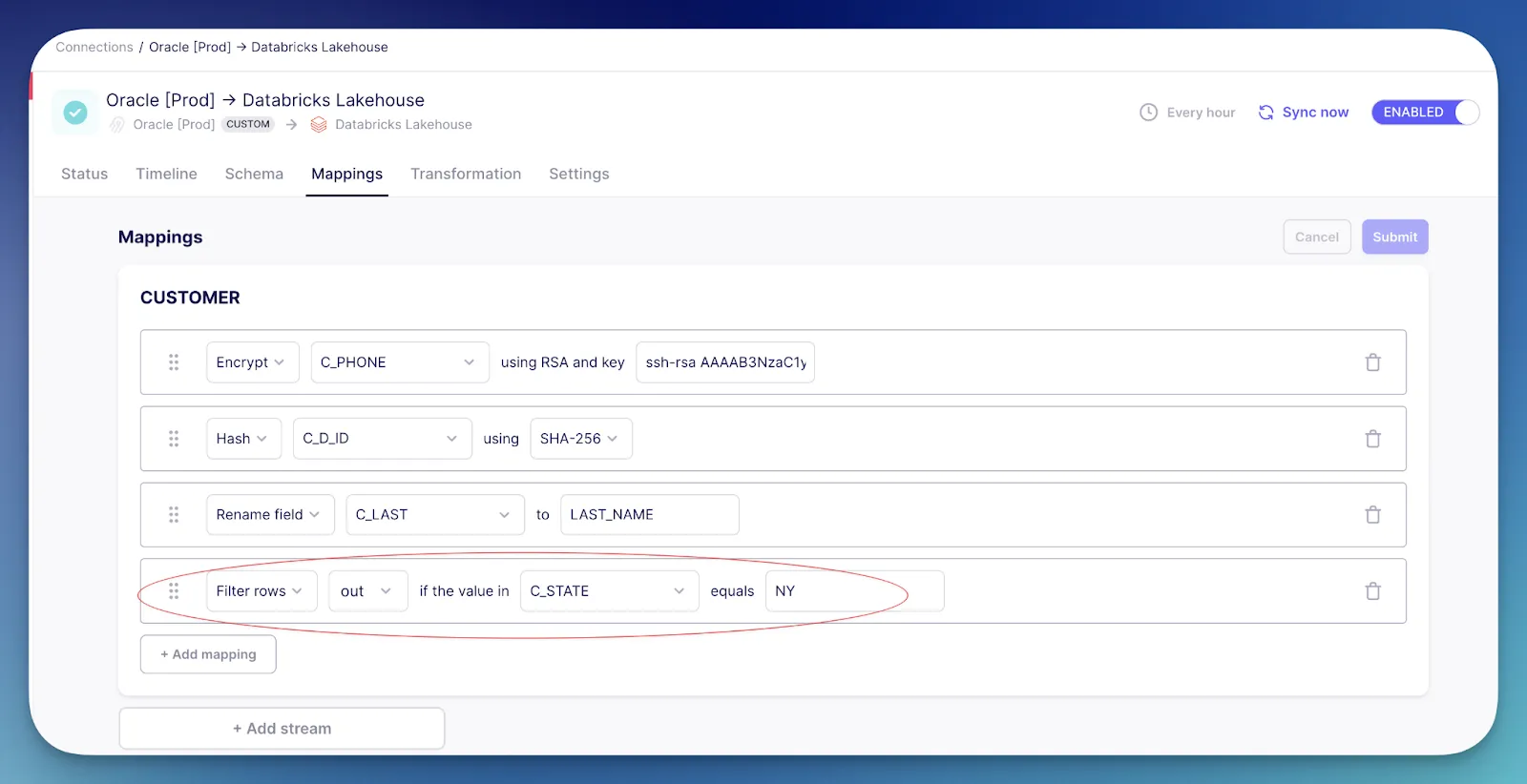

Including unnecessary columns increases storage costs and slows down processing times, especially in cloud data warehouses that bill based on resource consumption. To overcome this, Airbyte provides a column selection feature that lets you choose the columns you replicate.

Additionally, Airbyte's Mappings solution offers advanced filtering capabilities, letting you filter rows based on string or numerical values and remove irrelevant entries.
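In Airbyte, column selection and Mappings are configured per stream rather than written as code, but their effect on each record can be sketched as a row predicate followed by a column projection. The event fields and the filter rule below are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Record = Dict[str, Any]


def select_and_filter(
    records: Iterable[Record], columns: List[str], keep: Callable[[Record], bool]
) -> Iterator[Record]:
    """Drop rows that fail `keep`, then keep only the selected columns."""
    for record in records:
        if keep(record):
            yield {col: record[col] for col in columns if col in record}


events = [
    {"id": 1, "event_type": "purchase", "amount": 25.0, "user_agent": "Mozilla/5.0", "debug": "..."},
    {"id": 2, "event_type": "page_view", "amount": 0.0, "user_agent": "Mozilla/5.0", "debug": "..."},
    {"id": 3, "event_type": "purchase", "amount": 5.0, "user_agent": "curl/8.0", "debug": "..."},
]

loaded = list(
    select_and_filter(
        events,
        columns=["id", "amount"],
        keep=lambda r: r["event_type"] == "purchase" and r["amount"] >= 10,
    )
)
print(loaded)
```

The filter runs before the projection, so it can use a column (`event_type`) that is never loaded into the warehouse.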

Automated Data Profiling

Intelligent data profiling capabilities can automatically identify columns with low information value, suggesting opportunities for exclusion based on statistical analysis of data characteristics. These automated recommendations help you optimize data selection decisions by assessing the underlying structure and quality of your data.
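A basic profiling pass can run against a sample of rows: columns that are nearly always null or never vary carry little information and are candidates for exclusion. The thresholds and sample data here are assumptions, and a flagged column still deserves a human check, since a value that is constant in a sample may vary in the full table.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def low_value_columns(rows: List[Row], null_threshold: float = 0.95) -> List[str]:
    """Flag columns that are (almost) always null or hold a single value.
    Values must be hashable for the distinct-count check."""
    if not rows:
        return []
    columns = {col for row in rows for col in row}
    flagged = []
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        null_ratio = 1 - len(non_null) / len(values)
        if null_ratio >= null_threshold or len(set(non_null)) <= 1:
            flagged.append(col)
    return flagged


sample = [
    {"id": 1, "country": "US", "legacy_code": None, "source": "web"},
    {"id": 2, "country": "DE", "legacy_code": None, "source": "web"},
    {"id": 3, "country": "US", "legacy_code": None, "source": "web"},
]
print(low_value_columns(sample))
```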

Advanced filtering logic enables complex data selection criteria that combine multiple conditions, date ranges, and business rules to ensure only relevant data consumes warehouse resources. This precision filtering reduces both storage costs and processing overhead while maintaining the data quality required for accurate analytics.

Dynamic Filtering Strategies

Dynamic filtering based on data usage patterns can automatically adjust data selection criteria based on changing business requirements and consumption patterns. Rather than maintaining static filter configurations, these systems adapt to evolving data needs while continuously optimizing for cost efficiency.

How Does Data Deduplication Reduce Storage Expenses?

Data deduplication removes duplicate copies of data to free up storage and save costs. Airbyte supports Incremental Sync – Append + Deduped mode, which updates existing records rather than blindly appending new rows.

When a record is updated, Airbyte retains only the latest version of that record, identified by its primary key, with a cursor field determining which version is newest. The final dataset in the destination therefore contains one entry per key.
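The effect of this dedup step can be sketched in a few lines: given rows in append order, keep the newest version per primary key according to a cursor field. This is an in-memory illustration with hypothetical `id` and `updated_at` fields, not Airbyte's implementation.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def dedupe_latest(rows: List[Record], primary_key: str, cursor_field: str) -> List[Record]:
    """Keep one row per primary key: the one with the highest cursor value.
    On ties, the row appended later wins."""
    latest: Dict[Any, Record] = {}
    for row in rows:
        key = row[primary_key]
        if key not in latest or row[cursor_field] >= latest[key][cursor_field]:
            latest[key] = row
    return sorted(latest.values(), key=lambda r: r[primary_key])


appended = [
    {"id": 1, "status": "pending", "updated_at": 1},
    {"id": 2, "status": "new", "updated_at": 1},
    {"id": 1, "status": "shipped", "updated_at": 3},
    {"id": 1, "status": "paid", "updated_at": 2},  # arrives late but is older
]
final = dedupe_latest(appended, primary_key="id", cursor_field="updated_at")
print(final)
```

Comparing on the cursor field rather than arrival order is what keeps a late-arriving, older version from overwriting a newer one.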

Advanced Duplicate Detection

Sophisticated deduplication algorithms can identify and eliminate subtle duplicates that might not be immediately obvious through simple key-based matching. These algorithms can handle variations in data formatting, minor field differences, and fuzzy matching scenarios that occur when data originates from multiple systems with different data entry standards.
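A simple form of fuzzy matching normalizes values and then scores their similarity. This sketch uses the standard library's `difflib.SequenceMatcher`; the 0.9 threshold is an arbitrary assumption, and comparing every pair of records is quadratic, so production deduplication usually groups candidates first (for example by a normalized key) before scoring.

```python
import difflib
import re


def normalize(value: str) -> str:
    """Lowercase and strip everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", value.lower())


def likely_duplicate(a: str, b: str, threshold: float = 0.9) -> bool:
    """Treat two values as duplicates if their normalized forms are
    nearly identical according to difflib's similarity ratio."""
    return difflib.SequenceMatcher(None, normalize(a), normalize(b)).ratio() >= threshold


print(likely_duplicate("ACME Corp.", "Acme Corp"))        # formatting-only difference
print(likely_duplicate("Jon Smith", "John Smith"))        # one-letter typo
print(likely_duplicate("Acme Corporation", "Acme Corp"))  # too different at this threshold
```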

Real-time deduplication processing during data ingestion eliminates the need for expensive post-processing deduplication operations in the data warehouse. By handling duplicate detection and resolution during the ETL process, you avoid consuming warehouse compute resources for these operations while ensuring clean data from the moment it arrives.

Historical Data Cleanup

Historical data cleanup capabilities can identify and remove accumulated duplicates from existing datasets, recovering storage space and improving query performance. These cleanup operations can be scheduled during low-usage periods to minimize impact on operational workloads while achieving significant storage cost reductions.

Why Are Resumable Full Refreshes Essential for Cost Control?

If a sync fails due to network issues or resource constraints, replicating the entire dataset again can be costly. Airbyte's resumable full refreshes allow full-refresh syncs to continue from the last checkpoint, reducing compute overhead, data transfer costs, and overall sync time.

Checkpoint Optimization

Checkpoint optimization strategies ensure that resumable operations restart from the most efficient points, minimizing redundant processing while maintaining data consistency. Intelligent checkpoint placement considers data characteristics, processing patterns, and failure probabilities to optimize recovery efficiency.
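The checkpoint idea can be sketched with a page-based reader: progress is recorded after each page is written, and a restarted run begins after the last recorded page. The reader, writer, page layout, and simulated failure below are all hypothetical, and the in-memory `state` dict stands in for durable storage.

```python
from typing import Callable, Dict, List

Page = List[int]


def resumable_full_refresh(
    read_page: Callable[[int], Page],
    write: Callable[[Page], None],
    state: Dict[str, int],
) -> None:
    """Copy pages in order, starting after state['last_page'].

    The checkpoint advances only after a page is written, so a crash
    re-reads at most the page that was in flight.
    """
    page = state.get("last_page", -1) + 1
    while True:
        rows = read_page(page)
        if not rows:  # an empty page marks the end of the source
            return
        write(rows)
        state["last_page"] = page  # a real pipeline would persist this durably
        page += 1


# Hypothetical source with four pages, plus a log of which pages were read.
PAGES = [[1, 2], [3, 4], [5, 6], [7]]
reads: List[int] = []
written: List[int] = []
failed_once = {"page_2": False}


def read_page(n: int) -> Page:
    reads.append(n)
    return PAGES[n] if n < len(PAGES) else []


def flaky_write(rows: Page) -> None:
    # Simulate a network drop the first time page 2 is written.
    if rows == PAGES[2] and not failed_once["page_2"]:
        failed_once["page_2"] = True
        raise ConnectionError("simulated network drop")
    written.extend(rows)


state: Dict[str, int] = {}
try:
    resumable_full_refresh(read_page, flaky_write, state)
except ConnectionError:
    pass  # the sync dies; state still holds the last good checkpoint
resumable_full_refresh(read_page, flaky_write, state)  # resumes at page 2
print(written, reads)
```

Pages 0 and 1 are read exactly once; only the page that was in flight during the failure is read again.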

Automatic failure detection and recovery mechanisms can identify transient issues and implement appropriate recovery strategies without human intervention. These systems distinguish between temporary connectivity problems and persistent failures, implementing recovery procedures that minimize cost impact while maintaining data pipeline reliability.

Progressive Retry Strategies

Progressive retry strategies with exponential backoff prevent resource waste during temporary system unavailability while ensuring eventual successful data synchronization. These strategies balance persistence with resource efficiency, avoiding excessive retry operations that consume resources without achieving successful outcomes.
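A common form of this is capped exponential backoff with "full jitter". In this sketch, `TransientError` is a hypothetical marker for retryable failures, and any other exception is treated as persistent and raised immediately, which mirrors the transient-versus-persistent distinction above.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, rate limits)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `operation` on TransientError with capped exponential backoff
    and full jitter. Any other exception propagates immediately."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up; let monitoring see the failure
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # random delay in [0, cap]
    raise AssertionError("unreachable")


# Usage: an operation that fails twice, then succeeds.
delays: List[float] = []
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("rate limited")
    return "synced"


result = retry_with_backoff(flaky, sleep=delays.append)
print(result, len(delays))
```

Passing `sleep` as a parameter lets tests run without real waiting, and the jitter spreads retries from many pipelines so they do not all hit a recovering source at the same moment.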

How Can Data Pipeline Monitoring Prevent Cost Overruns?

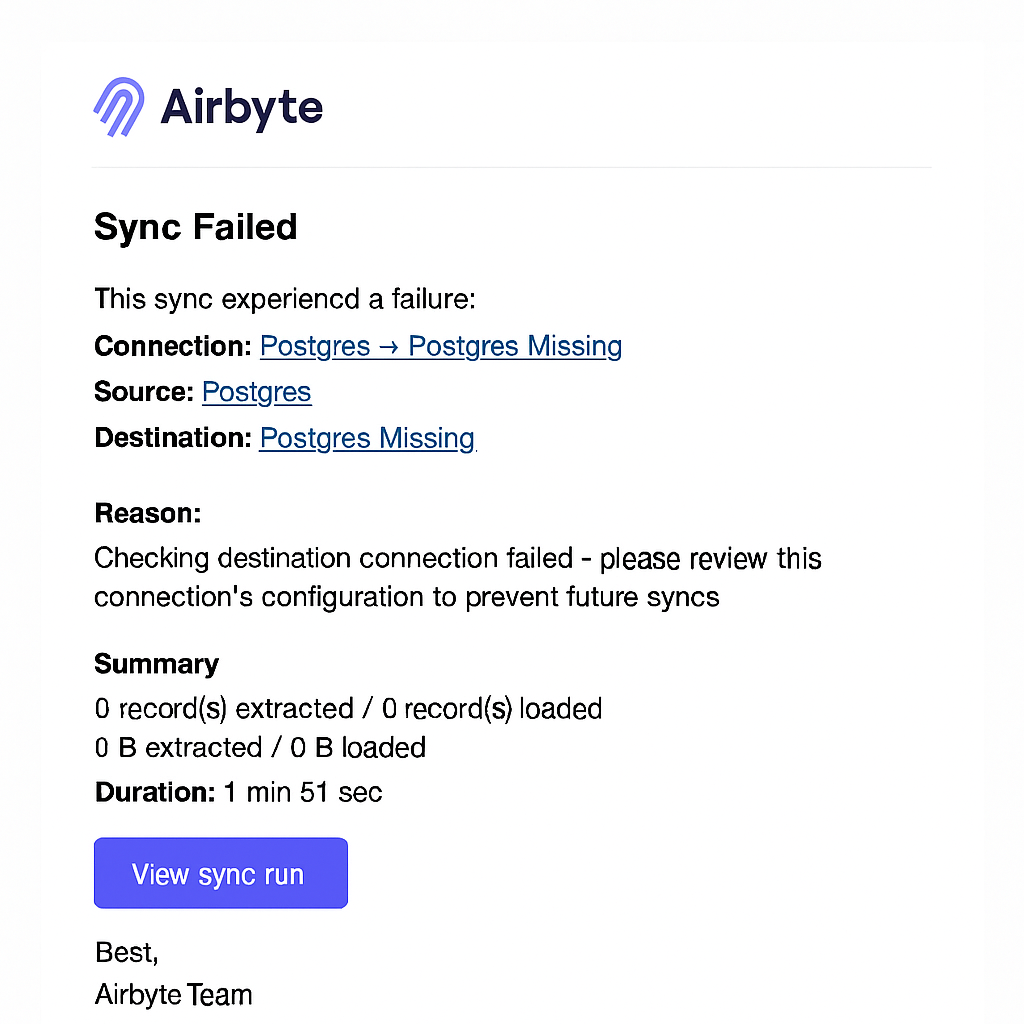

Sync failures that go unnoticed can lead to unnecessary compute usage and increased storage costs. To address this, Airbyte provides notifications and webhooks to monitor pipeline health.

You'll receive alerts for successful syncs, failed jobs, and schema changes via email or Slack. Airbyte also integrates with observability tools like Datadog and OpenTelemetry, enabling proactive performance tracking.

Cost-Aware Monitoring

Cost-aware monitoring capabilities track resource consumption patterns and spending trends, providing early warnings when operations deviate from expected cost profiles. These monitoring systems can identify resource-intensive operations, predict cost escalation scenarios, and recommend optimization strategies before expenses exceed budget thresholds.

Performance correlation analysis helps identify relationships between pipeline configurations and cost outcomes, enabling data teams to optimize settings for better cost-performance ratios. This analysis can reveal which configuration changes provide the greatest cost benefits while maintaining required performance levels.

Automated Cost Controls

Automated alerting systems can implement cost containment measures when spending patterns indicate potential budget overruns. These systems can temporarily throttle resource-intensive operations, implement emergency cost controls, and escalate issues to appropriate personnel for resolution while preventing catastrophic cost escalation.
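A cost guard can start as a straight-line projection of month-to-date spend against a budget. This sketch assumes daily-granularity spend data and hypothetical alert and throttle thresholds; where the spend figure comes from (a billing export or a warehouse usage view, for example) is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetDecision:
    projected: float
    action: str  # "ok", "alert", or "throttle"


def check_budget(
    spend_to_date: float,
    day_of_month: int,
    days_in_month: int,
    monthly_budget: float,
    alert_at: float = 0.8,
    throttle_at: float = 1.0,
) -> BudgetDecision:
    """Project month-end spend linearly and map it to an action."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    projected = spend_to_date / day_of_month * days_in_month
    ratio = projected / monthly_budget
    if ratio >= throttle_at:
        action = "throttle"  # e.g. pause non-critical syncs
    elif ratio >= alert_at:
        action = "alert"     # e.g. notify the owning team
    else:
        action = "ok"
    return BudgetDecision(projected, action)


for spent in (2_000, 3_000, 4_000):
    print(spent, check_budget(spent, day_of_month=10, days_in_month=30, monthly_budget=10_000))
```

A linear projection is deliberately naive; it overreacts to one-off backfills early in the month, which is one reason to route "throttle" decisions to a person before acting on critical pipelines.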

Comprehensive audit trails and cost attribution capabilities enable detailed analysis of resource consumption patterns across different pipelines, data sources, and business units. This visibility supports informed decision-making about resource allocation and optimization priorities while providing accountability for data processing costs.

Wrapping Up

Optimizing ETL processes is crucial for controlling cloud data warehouse costs. Airbyte offers a robust suite of features, including incremental syncs, advanced data filtering, data deduplication, and resumable refreshes, that help minimize data transfer and storage expenses.

By adopting Airbyte's flexible and cost-conscious approach to data integration, you can build scalable, budget-friendly data pipelines that deliver optimal performance. The combination of intelligent automation, multi-cloud optimization strategies, and comprehensive monitoring capabilities positions Airbyte as more than just a data integration platform.

It serves as a strategic enabler that transforms data infrastructure challenges into competitive advantages while maintaining strict cost controls and operational efficiency. Modern organizations require data integration solutions that evolve with changing business requirements while maintaining cost predictability and operational reliability.

FAQ

What are the main factors driving cloud data warehouse costs?

The primary cost drivers include data ingestion volumes, compute resource usage during processing, storage consumption, and data transfer fees between systems. ETL processes can significantly impact these costs through inefficient data movement, unnecessary full refreshes, and lack of optimization strategies like incremental loading and data filtering.

How much can organizations typically save by optimizing their ETL pipelines?

Organizations commonly achieve 30-60% reductions in cloud data warehouse costs through ETL optimization strategies. Savings come from implementing incremental loading, data deduplication, intelligent filtering, and automated monitoring that prevents resource waste and unnecessary data movement.

Which Airbyte features provide the most significant cost savings?

The most impactful cost-saving features include incremental syncs with CDC capabilities, column and row filtering through Mappings, data deduplication, resumable full refreshes, and flexible sync scheduling. PyAirbyte's local processing capabilities also reduce warehouse compute costs significantly.

How does batch processing reduce data transfer costs compared to real-time processing?

Batch processing amortizes connection overhead across many records, achieves better compression ratios, and can take advantage of sustained-usage pricing. Compared with sending records one at a time, this can reduce data transfer costs by 40-70%, though results depend on record size and workload. The trade-off is latency: batched data reaches the warehouse on the batch schedule rather than immediately.

Can Airbyte help organizations avoid vendor lock-in while optimizing costs?

Yes. Airbyte is open source and can be deployed across multiple cloud providers and on-premises environments, so your pipelines are not tied to a single vendor's platform. This flexibility lets organizations optimize costs through strategic workload placement and avoid the premium pricing associated with vendor lock-in.
