What Is a Streaming Data Pipeline? Examples & How It Works

The need for real-time data analytics has grown significantly, driven primarily by the fast pace of business operations and decision-making. Many organizations now seek expertise in streaming data pipelines to optimize the flow of data between sources and destinations. Such pipelines enable real-time analytics, improve operational efficiency, and reduce the risk of data loss.

However, streaming data from a source to a destination comes with various challenges. This article covers one of the most prominent types of data pipeline, the streaming data pipeline, and explains how it works with examples.

What is a Streaming Data Pipeline?

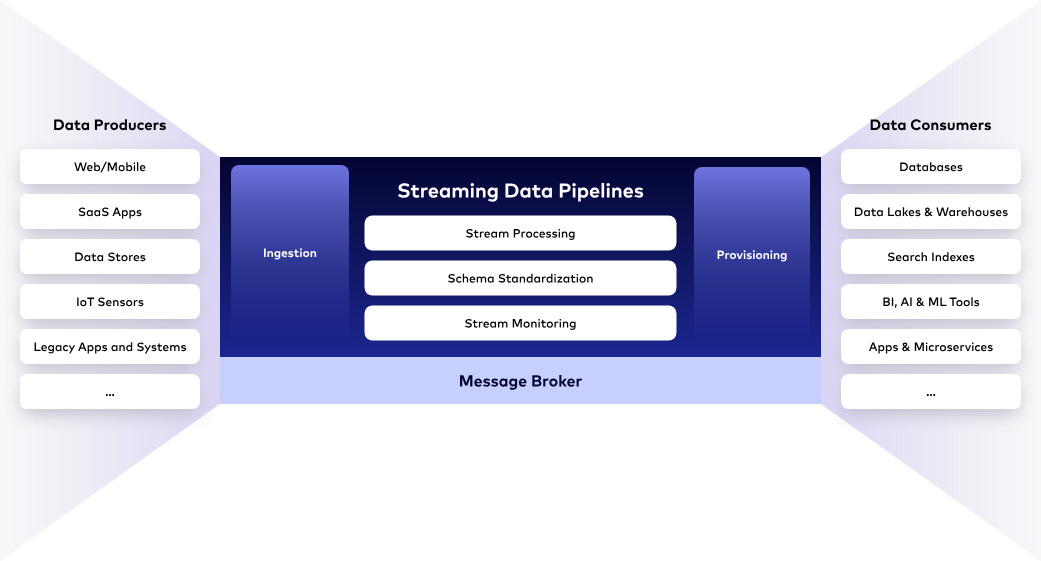

A streaming data pipeline is an automated, continuous process that integrates data from multiple sources, transforms it as required, and delivers it to a destination in near real time. As a result, the data at the destination is updated almost instantly to reflect changes in the source.
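As a rough illustration, the following Python sketch models the ingest, transform, and deliver flow as a chain of generators, so each record moves through the pipeline as soon as it is read instead of waiting for a batch. The `raw` and `warehouse` lists are hypothetical stand-ins for a real source and destination.

```python
from typing import Iterable, Iterator


def source(events: Iterable[dict]) -> Iterator[dict]:
    """Simulated source: yields each event as soon as it arrives."""
    for event in events:
        yield event


def transform(stream: Iterator[dict]) -> Iterator[dict]:
    """Clean each record on the fly instead of waiting for a full batch."""
    for event in stream:
        yield {"user": event["user"].strip().lower(), "amount": float(event["amount"])}


def deliver(stream: Iterator[dict], destination: list) -> None:
    """Write each record to the destination the moment it has been processed."""
    for record in stream:
        destination.append(record)


# Hypothetical raw events and destination.
raw = [{"user": " Alice ", "amount": "10.5"}, {"user": "BOB", "amount": "3"}]
warehouse: list = []
deliver(transform(source(raw)), warehouse)
print(warehouse)  # [{'user': 'alice', 'amount': 10.5}, {'user': 'bob', 'amount': 3.0}]
```

Because generators are lazy, the first record reaches `warehouse` before the second is even read, which is the essential difference from collecting everything first.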

Streaming data pipelines are designed to handle, transform, and analyze high volumes of data continuously. This makes it easier to derive timely insights, and the processed data can be stored in the storage system of your choice. Applications of streaming data pipelines include real-time fraud detection in banking and dynamic pricing in e-commerce, among others.

Why Consider a Streaming Data Pipeline?

Streaming data pipelines are essential for organizations that base decisions on up-to-date information. Processing and analyzing data in real time helps uncover patterns that lead to better business insights and opportunities.

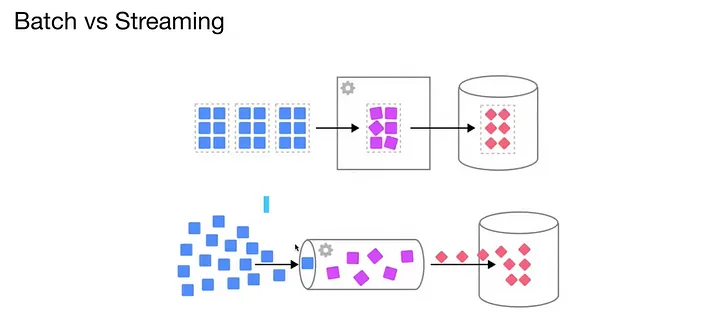

Unlike batch processing, which introduces significant delays due to data collection and processing in batches, streaming data pipelines are designed to minimize latency.
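To see where the latency difference comes from, here is a simplified Python model. It assumes a batch job that runs at the end of each fixed window (60 seconds here, a hypothetical value) and a streaming pipeline that always keeps up with its input; queueing, retries, and failures are not modeled.

```python
import math


def batch_latencies(arrivals, window, processing_time):
    """Each event waits for the end of its batch window, then for processing."""
    latencies = []
    for t in arrivals:
        window_end = (math.floor(t / window) + 1) * window
        latencies.append(window_end - t + processing_time)
    return latencies


def streaming_latencies(arrivals, processing_time):
    """Each event is processed on arrival (assumes the pipeline never falls behind)."""
    return [processing_time for _ in arrivals]


arrivals = [0.0, 15.0, 59.0, 61.0]  # hypothetical arrival times, in seconds
print(batch_latencies(arrivals, window=60.0, processing_time=1.0))  # [61.0, 46.0, 2.0, 60.0]
print(streaming_latencies(arrivals, processing_time=1.0))  # [1.0, 1.0, 1.0, 1.0]
```

With these inputs, batch latency ranges from 2 to 61 seconds depending on where an event lands in the window, while every streamed event takes 1 second.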

How Does a Streaming Data Pipeline Work?

A streaming data pipeline operates through multiple steps that run continuously to deliver real-time streaming functionality. Here are the critical steps in a streaming data pipeline workflow:

1. Data Ingestion

This step involves capturing data from multiple sources and feeding it into the pipeline. The source data can contain various data types and missing values, and its format may differ from the destination schema.
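A minimal sketch of ingestion from several sources, assuming each source already yields records in timestamp order: `heapq.merge` from the Python standard library interleaves them into a single ordered stream. The source names and fields are hypothetical, and the records deliberately keep the mixed types and missing value described above; handling those is left to the transformation step.

```python
import heapq
from typing import Iterator


def tag(source_name: str, records: list) -> Iterator[dict]:
    """Attach the originating source to each record as it is read."""
    for record in records:
        yield {**record, "source": source_name}


def ingest(sources: dict) -> Iterator[dict]:
    """Merge several time-ordered sources into one stream ordered by timestamp."""
    streams = [tag(name, records) for name, records in sources.items()]
    return heapq.merge(*streams, key=lambda record: record["ts"])


# Hypothetical sources with mixed field types and a missing value.
sources = {
    "web": [
        {"ts": 1, "user_id": "42", "price": "19.99"},
        {"ts": 4, "user_id": "7", "price": None},
    ],
    "mobile": [{"ts": 2, "user_id": 42, "price": 5}],
}
for record in ingest(sources):
    print(record)
```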

2. Data Transformation

In this step, the data is cleaned and manipulated to eliminate discrepancies, make it compatible with the target system, and get it ready for analysis. The process involves correcting errors, standardizing formats, and applying operations such as filtering and aggregation.
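The sketch below applies these operations record by record, assuming incoming records with hypothetical `user_id` and `price` fields. Cleaning standardizes types, filtering drops incomplete records, and aggregation keeps a running total per user in state that lives as long as the stream does.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Optional


def clean(record: dict) -> Optional[dict]:
    """Standardize types; return None for records missing a required field."""
    if record.get("user_id") is None or record.get("price") is None:
        return None
    return {"user_id": str(record["user_id"]), "price": round(float(record["price"]), 2)}


def transform(stream: Iterable[dict]) -> Iterator[dict]:
    """Clean, filter, and enrich each record with a running total per user."""
    totals: dict = defaultdict(float)
    for raw in stream:
        record = clean(raw)
        if record is None:
            continue  # filter: drop incomplete records
        totals[record["user_id"]] += record["price"]  # aggregate: running sum per user
        yield {**record, "running_total": round(totals[record["user_id"]], 2)}


# Hypothetical raw events: the same user appears with a string and an integer ID.
raw_events = [
    {"user_id": "42", "price": "19.99"},
    {"user_id": 42, "price": 5},
    {"user_id": "7", "price": None},
]
for record in transform(raw_events):
    print(record)
```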

3. Mapping Schema

This step converts the incoming data into a standard schema that aligns with the destination’s requirements, reducing operational complexity by maintaining a consistent data format.
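Schema mapping is often driven by a per-source lookup table. In this sketch, `DESTINATION_SCHEMA` and `FIELD_MAPPINGS` are hypothetical; a real pipeline would load them from configuration or derive them from the destination's catalog.

```python
# Hypothetical destination schema: column name -> type converter.
DESTINATION_SCHEMA = {"customer_id": str, "order_total": float, "country": str}

# Hypothetical per-source mappings: source field -> destination column.
FIELD_MAPPINGS = {
    "shop_api": {"custId": "customer_id", "total": "order_total", "cc": "country"},
    "legacy_db": {"CUSTOMER": "customer_id", "AMOUNT": "order_total", "COUNTRY": "country"},
}


def map_to_schema(source: str, record: dict) -> dict:
    """Rename and cast fields so every source produces the same destination shape."""
    mapping = FIELD_MAPPINGS[source]
    renamed = {dest: record[src] for src, dest in mapping.items() if src in record}
    # Every destination column is present; missing or null values become None.
    return {
        column: None if renamed.get(column) is None else cast(renamed[column])
        for column, cast in DESTINATION_SCHEMA.items()
    }


print(map_to_schema("shop_api", {"custId": 42, "total": "19.99", "cc": "DE"}))
# {'customer_id': '42', 'order_total': 19.99, 'country': 'DE'}
print(map_to_schema("legacy_db", {"CUSTOMER": "A-7", "AMOUNT": 5}))
# {'customer_id': 'A-7', 'order_total': 5.0, 'country': None}
```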

4. Monitoring Event Streams

Continuous monitoring of event streams is critical for detecting changes in the source data as soon as they happen, which is what allows the pipeline to achieve real-time data integration.
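One simple way to detect source changes is to poll the source periodically and diff consecutive snapshots keyed by primary key, as sketched below. This is an illustrative assumption rather than the only approach: many production change data capture (CDC) tools read the database's transaction log instead, which avoids repeatedly scanning the full table.

```python
def diff_snapshots(previous: dict, current: dict) -> list:
    """Compare two snapshots keyed by primary key and list the row-level changes."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key in previous:
        if key not in current:
            changes.append(("delete", key, None))
    return changes


# Two hypothetical polls of an inventory table, keyed by product ID.
before = {1: {"sku": "A", "stock": 5}, 2: {"sku": "B", "stock": 0}}
after = {1: {"sku": "A", "stock": 4}, 3: {"sku": "C", "stock": 9}}
for change in diff_snapshots(before, after):
    print(change)
```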

5. Event Broker

The event broker facilitates real-time data integration by tracking changes in the state of the source data and relaying them to the destination. It observes additions, deletions, and updates at the source and sends a message about each change to the destination.
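The following toy broker illustrates the publish/subscribe pattern behind this step: a producer publishes change events to a topic, and every consumer subscribed to that topic receives them in order. `InMemoryBroker` and `ChangeEvent` are hypothetical names; production pipelines typically use a dedicated broker such as Apache Kafka, which adds persistence, partitioning, and delivery guarantees that this sketch omits.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ChangeEvent:
    op: str  # "insert", "update", or "delete"
    key: Any
    row: Any = None


class InMemoryBroker:
    """Toy publish/subscribe broker that routes change events to subscribed consumers."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # topic -> list of handler callables

    def subscribe(self, topic: str, handler: Callable[[ChangeEvent], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: ChangeEvent) -> None:
        # Deliver synchronously to every consumer of this topic, in subscription order.
        for handler in self._subscribers[topic]:
            handler(event)


received = []
broker = InMemoryBroker()
broker.subscribe("inventory", received.append)
broker.publish("inventory", ChangeEvent("update", 1, {"sku": "A", "stock": 4}))
broker.publish("inventory", ChangeEvent("delete", 2))
print(received)
```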

6. Data Availability for Destination

This step delivers the data to the destination efficiently, keeping the data stored there synchronized with updates from the source.
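On the destination side, change events can be applied as upserts and deletes. The sketch below, with a plain dictionary standing in for a hypothetical destination table, makes both operations idempotent so that a redelivered event does not corrupt the replica.

```python
def apply_change(destination: dict, op: str, key, row=None) -> None:
    """Apply one change event so the destination mirrors the source.

    Upserts and deletes are idempotent: replaying the same event leaves the
    destination unchanged, which matters when events are redelivered.
    """
    if op in ("insert", "update"):
        destination[key] = row  # upsert: insert or overwrite
    elif op == "delete":
        destination.pop(key, None)  # no error if the row is already gone
    else:
        raise ValueError(f"unknown operation: {op!r}")


replica = {1: {"sku": "A", "stock": 5}, 2: {"sku": "B", "stock": 0}}  # hypothetical table
events = [
    ("update", 1, {"sku": "A", "stock": 4}),
    ("delete", 2, None),
    ("insert", 3, {"sku": "C", "stock": 9}),
    ("delete", 2, None),  # duplicate delivery is harmless
]
for op, key, row in events:
    apply_change(replica, op, key, row)
print(replica)  # {1: {'sku': 'A', 'stock': 4}, 3: {'sku': 'C', 'stock': 9}}
```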

What Are Some Examples of Streaming Data Pipelines?

Many industries rely on real-time data integration to analyze the latest changes in their source data. Here are some examples of streaming data pipelines in practice:

1. E-commerce

Streaming data pipelines are used in the e-commerce industry to optimize inventory management and provide customers with personalized shopping experiences.

Personalizing Customer Experience: When you browse an e-commerce platform and search for a specific type of product, the platform automatically recommends similar products. Real-time data processing makes this possible by adjusting the product recommendations dynamically.

The platform considers information such as the products you click on, the products you add to your cart, and the products you add to your wishlist to recommend similar items.
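As a simplified illustration of how recommendations can adjust with every interaction, the sketch below maintains item-to-item co-occurrence scores that are updated per event. The action weights in `WEIGHTS` are hypothetical, and real platforms generally use far more sophisticated recommendation models.

```python
from collections import defaultdict

# Hypothetical signal strength for each kind of interaction.
WEIGHTS = {"click": 1.0, "wishlist": 2.0, "cart": 3.0}


class CoOccurrenceRecommender:
    """Updates item-to-item affinity scores incrementally as interaction events arrive."""

    def __init__(self) -> None:
        self.history = defaultdict(dict)  # user -> {item: strongest weight seen}
        self.scores = defaultdict(lambda: defaultdict(float))  # item -> {other item: score}

    def on_event(self, user: str, item: str, action: str) -> None:
        weight = WEIGHTS[action]
        for other, other_weight in self.history[user].items():
            if other != item:
                # Strengthen the link between this item and each item the user touched before.
                self.scores[item][other] += weight * other_weight
                self.scores[other][item] += weight * other_weight
        self.history[user][item] = max(weight, self.history[user].get(item, 0.0))

    def recommend(self, item: str, k: int = 3) -> list:
        ranked = sorted(self.scores[item].items(), key=lambda pair: pair[1], reverse=True)
        return [other for other, _ in ranked[:k]]


rec = CoOccurrenceRecommender()
rec.on_event("u1", "running-shoes", "click")
rec.on_event("u1", "socks", "cart")
rec.on_event("u2", "running-shoes", "wishlist")
rec.on_event("u2", "water-bottle", "click")
print(rec.recommend("running-shoes"))  # ['socks', 'water-bottle']
```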

Inventory Management: E-commerce stores use streaming data pipelines to update inventory levels based on sales data and real-time customer interactions, which helps them maintain optimal stock levels.
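A minimal sketch of event-driven stock tracking follows, assuming hypothetical `sale` and `restock` events and a single reorder point for every product. It raises an alert only when a sale pushes a product across the reorder point, so further sales below it do not produce duplicate alerts.

```python
from typing import Iterable, Iterator


def track_inventory(stock: dict, events: Iterable[tuple], reorder_point: int) -> Iterator[str]:
    """Apply sale/restock events to stock levels and yield reorder alerts."""
    for kind, sku, quantity in events:
        before = stock.get(sku, 0)
        if kind == "sale":
            stock[sku] = before - quantity
        elif kind == "restock":
            stock[sku] = before + quantity
        else:
            continue  # this sketch ignores other event types
        # Alert only when this event moves the SKU from above to at-or-below the reorder point.
        if before > reorder_point >= stock[sku]:
            yield f"reorder {sku}: {stock[sku]} left"


stock = {"mug": 12, "tee": 30}  # hypothetical starting inventory
events = [("sale", "mug", 5), ("sale", "tee", 2), ("sale", "mug", 3),
          ("sale", "mug", 1), ("restock", "mug", 20)]
alerts = list(track_inventory(stock, events, reorder_point=5))
print(alerts)  # ['reorder mug: 4 left']
print(stock)   # {'mug': 23, 'tee': 28}
```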

2. Cybersecurity

Network Activity Monitoring: A streaming data pipeline enables real-time analysis of data as it is updated at the source. In cybersecurity, this allows continuous monitoring of network activity to detect and respond to security threats as they emerge.

Security Information and Event Management (SIEM): A SIEM system collects security logs from various sources, including security and network devices such as firewalls and routers. Streaming data pipelines allow these logs to be analyzed in real time, enabling faster detection of security threats.
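Both network monitoring and SIEM analysis often rely on sliding-window counts. The sketch below flags a source IP that accumulates too many denied connections within a time window, regardless of which device logged them. The log format, the 10-second window, and the limit of 3 are hypothetical, the code assumes logs arrive in timestamp order, and commercial SIEM products apply much richer correlation rules.

```python
from collections import defaultdict, deque
from typing import Optional


class DeniedConnectionMonitor:
    """Flags a source IP with too many denied connections inside a sliding time window."""

    def __init__(self, window_seconds: float, limit: int) -> None:
        self.window = window_seconds
        self.limit = limit
        self.recent = defaultdict(deque)  # ip -> timestamps of denials still in the window

    def observe(self, ts: float, device: str, ip: str, action: str) -> Optional[str]:
        """Process one log line; return an alert message if the IP exceeds the limit."""
        if action != "deny":
            return None
        times = self.recent[ip]
        times.append(ts)
        # Evict denials that have slid out of the window (assumes logs arrive in time order).
        while times and times[0] <= ts - self.window:
            times.popleft()
        if len(times) > self.limit:
            return f"{ip}: {len(times)} denials in {self.window:g}s (last via {device})"
        return None


monitor = DeniedConnectionMonitor(window_seconds=10, limit=3)
logs = [  # hypothetical (timestamp, device, source IP, action) records
    (0, "firewall", "10.0.0.5", "deny"),
    (2, "router", "10.0.0.5", "deny"),
    (3, "firewall", "10.0.0.9", "allow"),
    (4, "firewall", "10.0.0.5", "deny"),
    (6, "router", "10.0.0.5", "deny"),
    (20, "firewall", "10.0.0.5", "deny"),
]
alerts = [alert for alert in (monitor.observe(*log) for log in logs) if alert]
print(alerts)  # ['10.0.0.5: 4 denials in 10s (last via router)']
```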

Threat Detection: By analyzing customer buying behavior in real time, streaming data pipelines can help identify suspicious transactions. Data that deviates from expected patterns is classified as a potential threat and removed, and the assigned security team can then act quickly to keep the threat from harming the application.
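The sketch below flags transactions that deviate sharply from a customer's own spending history using a z-score, computed with Welford's online algorithm so the mean and variance update per event without storing past amounts. The threshold of 3 standard deviations and the five-transaction minimum history are hypothetical, and a z-score test is only a simple stand-in for the models real fraud-detection systems use. Flagged transactions are held back instead of updating the customer's profile.

```python
import math
from collections import defaultdict


class RunningStats:
    """Welford's online algorithm: running mean and variance without storing past values."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        """Sample standard deviation (0.0 until there are at least two values)."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def is_suspicious(stats: RunningStats, amount: float,
                  threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag an amount far from this customer's usual spending (z-score test)."""
    std = stats.std()
    if stats.n < min_history or std == 0.0:
        return False  # not enough history to judge
    return abs(amount - stats.mean) / std > threshold


profiles = defaultdict(RunningStats)  # customer -> spending statistics
flagged = []
transactions = [("c1", 20), ("c1", 25), ("c1", 22), ("c1", 18),
                ("c1", 24), ("c1", 950), ("c1", 21)]  # hypothetical
for customer, amount in transactions:
    stats = profiles[customer]
    if is_suspicious(stats, amount):
        flagged.append((customer, amount))  # hold back for review; keep out of the profile
    else:
        stats.update(amount)
print(flagged)  # [('c1', 950)]
```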

3. Financial Institutions

Market Analysis: Financial institutions use streaming data pipelines to monitor and analyze stock price fluctuations in real time throughout the trading day. Analysts examine a portfolio's assets and past performance to understand potential losses. This allows institutions to buy or sell assets automatically, executing trades based on predefined factors with the aim of achieving optimal returns.
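Automated trading rules vary by institution. As one hypothetical example, the sketch below emits buy and sell signals when a short moving average of prices crosses a long one, updating both averages as each price arrives. The window sizes are arbitrary illustrations (the short window is assumed to be no longer than the long one), and the sketch is not a trading strategy.

```python
from collections import deque
from typing import Iterable, Iterator, Optional


def crossover_signals(prices: Iterable[float], short_window: int = 3,
                      long_window: int = 5) -> Iterator[tuple]:
    """Yield (index, 'buy' or 'sell', price) when the short average crosses the long one."""
    short_prices = deque(maxlen=short_window)
    long_prices = deque(maxlen=long_window)
    previous_gap: Optional[float] = None
    for i, price in enumerate(prices):
        short_prices.append(price)
        long_prices.append(price)
        if len(long_prices) < long_window:
            continue  # wait until both averages are defined
        gap = sum(short_prices) / short_window - sum(long_prices) / long_window
        if previous_gap is not None:
            if previous_gap <= 0 < gap:
                yield (i, "buy", price)
            elif previous_gap >= 0 > gap:
                yield (i, "sell", price)
        previous_gap = gap


prices = [10, 10, 10, 10, 10, 9, 8, 7, 8, 10, 12, 13]  # hypothetical price ticks
print(list(crossover_signals(prices)))  # [(5, 'sell', 9), (10, 'buy', 12)]
```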

Risk Management: Streaming data pipelines help automate portfolio management by continuously monitoring the market, assessing risks, and strategically executing trades to optimize users' returns.
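Risk checks can likewise run on every new data point. The sketch below tracks drawdown, the fraction by which portfolio value has fallen from its highest level so far, and reports each point where it reaches a limit. The 10% limit and the idea of reacting to it (for example, by pausing automated trades) are hypothetical.

```python
from typing import Iterable, Iterator


def drawdown_alerts(values: Iterable[float], max_drawdown: float) -> Iterator[tuple]:
    """Yield (index, drawdown) whenever value falls max_drawdown or more below its peak."""
    peak = None
    for i, value in enumerate(values):
        peak = value if peak is None else max(peak, value)
        drawdown = (peak - value) / peak  # assumes positive portfolio values
        if drawdown >= max_drawdown:
            yield i, round(drawdown, 4)


portfolio_values = [100, 105, 98, 94, 99, 110, 101]  # hypothetical valuations
print(list(drawdown_alerts(portfolio_values, max_drawdown=0.10)))  # [(3, 0.1048)]
```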

4. Real Estate Websites

Customized Recommendations: Streaming data pipelines help real estate businesses analyze user behavior from real-time data. This data might include GPS information, browsing patterns, property filters, search queries, and online survey responses, which are used to offer personalized property recommendations.

With this approach, real estate websites recommend properties that are more likely to interest each user based on their preferences.
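A streaming approach lets a user's preference profile update with every interaction. The sketch below uses only property views; the `PreferenceProfile` class, its scoring formula, and the listing fields are hypothetical, and signals such as GPS data, filters, or search queries could be folded in the same way.

```python
from collections import Counter


class PreferenceProfile:
    """Builds a user's property preferences incrementally from viewing events."""

    def __init__(self) -> None:
        self.neighborhoods = Counter()  # neighborhood -> number of views
        self.price_total = 0.0
        self.views = 0

    def on_view(self, listing: dict) -> None:
        self.neighborhoods[listing["neighborhood"]] += 1
        self.price_total += listing["price"]
        self.views += 1

    def score(self, listing: dict) -> float:
        """Higher for familiar neighborhoods and for prices close to those viewed so far."""
        if self.views == 0:
            return 0.0
        area_affinity = self.neighborhoods[listing["neighborhood"]] / self.views
        avg_price = self.price_total / self.views or 1.0  # avoid dividing by zero
        price_closeness = 1.0 / (1.0 + abs(listing["price"] - avg_price) / avg_price)
        return area_affinity + price_closeness


profile = PreferenceProfile()
viewed = [  # hypothetical browsing events
    {"neighborhood": "Riverside", "price": 300_000},
    {"neighborhood": "Riverside", "price": 340_000},
    {"neighborhood": "Old Town", "price": 320_000},
]
for listing in viewed:
    profile.on_view(listing)

listings = [
    {"id": "L1", "neighborhood": "Old Town", "price": 900_000},
    {"id": "L2", "neighborhood": "Riverside", "price": 330_000},
    {"id": "L3", "neighborhood": "Harbor", "price": 310_000},
]
ranked = sorted(listings, key=profile.score, reverse=True)
print([listing["id"] for listing in ranked])  # ['L2', 'L3', 'L1']
```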

What Are the Differences Between Batch and Streaming Data Pipelines?

This section highlights the key differences between batch and streaming data pipelines.

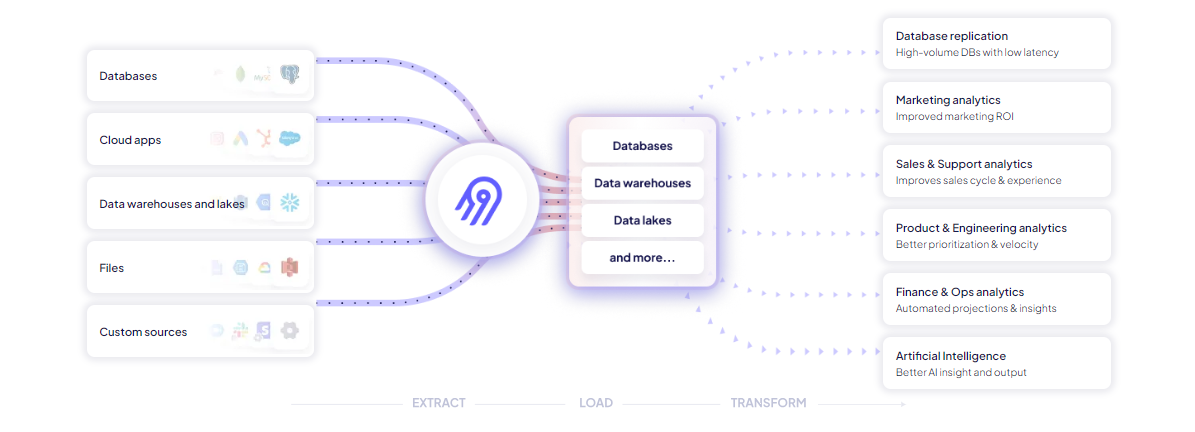

So far, you have seen the differences between batch and streaming data pipelines and examples of how streaming data can be useful in various industries. While streaming data is essential for real-time analysis, batch data processing is equally valuable for several scenarios. These might include digital media analysis, payroll processing, financial report generation, or testing software programs at regular intervals. However, designing and managing these batch data pipelines can be complex and resource-intensive. This is where data integration tools like Airbyte can help.

A Robust Data Integration Solution: Airbyte

Airbyte is a data integration and replication tool that simplifies data movement between various sources and destinations without requiring extensive technical expertise. It provides a library of 350+ pre-built connectors, eliminating the need for complex coding. If you can't find the connector you need in the pre-built list, you can use its Connector Development Kit (CDK) to create custom connectors for your data integration needs.

Here are some of the key features of Airbyte:

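- Change Data Capture (CDC): Airbyte's CDC feature replicates only the changes (inserts, updates, and deletes) made at the source since the last sync, rather than recopying entire datasets. This minimizes data redundancy and uses computational resources efficiently when managing large datasets.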

- PyAirbyte: The Python-based library PyAirbyte extends Airbyte’s functionality, allowing you to utilize its robust connector library using Python code.

- Data Security and Compliance: Airbyte helps protect your data's confidentiality and reliability by adhering to security standards and regulations such as HIPAA, ISO, SOC 2, and GDPR.

- Data Transformation with dbt: Airbyte supports data transformation with the help of dbt (data build tool) integration. This feature allows you to execute complex transformations in SQL and create end-to-end data pipelines.

- Integration with Orchestration Tools: Airbyte integrates with prominent data orchestration tools, including Dagster, Airflow, and Prefect, to support robust data operations.

Key Takeaways

A streaming data pipeline continuously transfers data changes from source to destination in near real time, enabling quick decision-making that helps scale business operations. Choosing a streaming data pipeline can significantly reduce latency in the data transfer process. It also helps maintain data consistency and quality while data flows continuously from source to destination.

Frequently Asked Questions (FAQs)

What are the benefits of streaming data?

The benefits of streaming data include real-time insights from continuously updated data, improved customer satisfaction through faster service response times, and lower latency through immediate responses to events.

What are the main three stages in a data pipeline?

The three main stages of a data pipeline are the source, from which data is ingested; processing, which transforms the raw data; and the destination, into which the data is loaded.

What is the difference between batch pipeline and streaming pipeline?

The key difference between a batch pipeline and a streaming pipeline is that a batch pipeline processes data in scheduled, discrete chunks. In contrast, a streaming pipeline processes data in near real time, making it suitable for applications that require immediate reactions to changing data.