Text Analysis in Python: Techniques and Libraries Explained


Python is a powerful tool for text analysis, offering extensive NLP libraries like NLTK, spaCy, and TextBlob for preprocessing tasks such as tokenization, stop-word removal, and lemmatization. Advanced methods include Graph Neural Networks for modeling complex text relationships and transformer-based dynamic topic modeling for capturing evolving topics over time. Effective handling of large datasets, visualization techniques, and tools like HuggingFace and Airbyte enhance scalability and insight generation in text analytics workflows.

Data professionals working with customer feedback, social media content, and document collections face a fundamental challenge: extracting meaningful insights from vast amounts of unstructured text requires analytical capabilities that go far beyond basic keyword counting. Modern text analysis demands not just technical proficiency but also a solid understanding of how language patterns, semantic relationships, and contextual meaning can be systematically decoded to drive business decisions.

Analyzing text data, whether online reviews of your products or feedback from your clients, helps you build business solutions that address your customers' specific requirements. However, text analysis can be daunting because it requires both technical expertise and domain knowledge.

In this article, you will explore the concept of performing text analysis in Python and the different methods you can use to streamline insight generation.

How Do You Get Started with Text Analysis in Python?

The biggest challenge analysts face when analyzing text data is data quality. Most real-world applications produce messy, noisy text, so cleaning and transforming it into an analysis-ready format is essential. Python's strong tooling for exactly this kind of cleaning and transformation is a key reason to use it for text analytics.

Python's built-in data structures, such as strings, lists, dictionaries, and sets, make it easy to manipulate text in many formats. Its robust machine-learning and natural-language-processing libraries then let you move from cleaning to advanced analytics in the same environment.

The first step in Python text analysis is understanding the data you want to analyze. A clear overview of the data's characteristics, including its format and structure, is essential. Once you recognize the patterns and formats within the text, you can plan how to extract it, whether that means scraping it from a website or exporting it from another tool into your Python environment.

After getting a brief overview of the data you will be working with, set up Python on your local machine and create a virtual environment. You can download and install Python from the official website.

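To create and activate a Python virtual environment (here named venv):

On Windows (Command Prompt), run `python -m venv venv` and then `venv\Scripts\activate`.

On macOS or Linux, run `python3 -m venv venv` and then `source venv/bin/activate`.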

Now that you have created a virtual environment, you can extract the data onto your local machine. The data you will be working with might be available in dispersed sources. If your task requires scraping data from a webpage, you can use Python libraries like requests and BeautifulSoup. If your workflow requires extracting data from dispersed sources into a centralized location for text analysis, SaaS-based tools like Airbyte can be beneficial.
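As a minimal sketch of the parsing half of a scraping workflow, the example below pulls paragraph text out of an HTML string. With requests and BeautifulSoup you would typically fetch a page with `requests.get(url).text` and call `find_all("p")` on the parsed soup; to stay dependency-free and offline, this sketch instead uses the standard library's `html.parser` module. The `ParagraphExtractor` class and the sample HTML are illustrative assumptions.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text inside <p> tags; a stdlib stand-in for BeautifulSoup's find_all("p")."""

    def __init__(self):
        super().__init__()
        self.paragraphs = []
        self._in_p = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "p" and self._in_p:
            # Join text fragments (including nested tags like <b>) and collapse whitespace.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.paragraphs.append(text)
            self._in_p = False

    def handle_data(self, data):
        if self._in_p:
            self._buffer.append(data)


def extract_paragraphs(html):
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs


sample_html = """
<html><body>
  <h1>Reviews</h1>
  <p>Great product, fast <b>shipping</b>.</p>
  <p>Stopped working after a week.</p>
</body></html>
"""
print(extract_paragraphs(sample_html))
# ['Great product, fast shipping.', 'Stopped working after a week.']
```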

Airbyte is a no-code data-integration tool that allows you to move data from multiple sources to your preferred destination. With more than 600 connectors, it gives you the flexibility to perform text analysis on data extracted from numerous platforms.

If the connector you need is unavailable, Airbyte provides a no-code Connector Builder with AI Assistant capabilities that can automatically configure numerous fields by reading API documentation, dramatically reducing connector development time from hours to minutes. Additionally, the Connector Development Kit (CDK) enables custom connector creation for specialized requirements.

Key features include:

- Streamlined GenAI workflows that extract raw, unstructured, and semi-structured data and convert it into vector embeddings that can be stored in popular vector databases such as Pinecone, Milvus, or Qdrant.

- PyAirbyte, a Python library that lets you load data directly into SQL caches and convert them into Pandas DataFrames for analysis.

- Airbyte Embedded lets you bring data into AI applications quickly, without spending significant time and resources building data infrastructure.

- The Enterprise edition supports large-scale workloads with multi-region deployments, RBAC, SLAs, and PII safeguards, backed by SOC 2 Type II and ISO 27001 certifications.

Essential Libraries Overview

Python has extensive libraries that enable you to perform NLP tasks. The most essential for conducting text analysis include:

- NLTK: The Natural Language Toolkit (NLTK) is an open-source library offering stemming, tokenization, lemmatization, parsing, and sentiment analysis. It also provides a diverse set of corpora for training and testing NLP models.

- spaCy: spaCy is an industrial-strength NLP library written in Python and Cython. It offers a variety of plugins that integrate with your machine-learning stack to build custom workflows.

- TextBlob: TextBlob provides a simple API for common NLP tasks such as part-of-speech tagging and sentiment analysis. Features include n-grams, word inflection, lemmatization, spelling correction, and WordNet integration.

- Scikit-learn: Scikit-learn is a free, open-source library that supports popular machine-learning algorithms, including linear and logistic regression and random forests. It offers tools to transform data and build custom ML models.

What Are the Text Preprocessing Fundamentals?

After selecting the libraries that best fit your requirements, the next step is to understand text-preprocessing fundamentals.

1. Tokenization Techniques

Tokenization breaks text into individual units called tokens, typically words or subwords.

Tokenization can be word-, character-, or subword-based.
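A minimal, dependency-free sketch of word- and character-level tokenization using Python's `re` module is shown below. The regular expression is an assumption chosen for illustration; library tokenizers handle many more edge cases (NLTK's `word_tokenize`, for instance, splits "isn't" into "is" and "n't"), and subword tokenizers such as BPE or WordPiece are learned from data rather than written as rules.

```python
import re

text = "Tokenization isn't hard, but it's essential!"

# Word-level: runs of word characters, keeping contractions like "isn't" together,
# and treating each punctuation mark as its own token.
word_tokens = re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)

# Character-level: every non-space character becomes a token.
char_tokens = [ch for ch in text if not ch.isspace()]

print(word_tokens)
# ['Tokenization', "isn't", 'hard', ',', 'but', "it's", 'essential', '!']
print(char_tokens[:5])
# ['T', 'o', 'k', 'e', 'n']
```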

2. Stop-Word Removal

Stop words, such as "the", "is", and "and", add little to the contextual meaning of text. Removing them lets you focus on relevant content.
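The sketch below filters tokens against a small stop-word set. The set is a hypothetical, deliberately short list; in practice you would load a fuller one, such as NLTK's `stopwords` corpus or spaCy's `STOP_WORDS`, and adjust it to your domain (for sentiment work, dropping "not" can reverse meaning).

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"a", "an", "and", "are", "is", "it", "of", "the", "this", "to", "was"}


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Lowercase, split into word tokens, and drop any token in the stop-word set."""
    tokens = re.findall(r"\w+", text.lower())
    return [tok for tok in tokens if tok not in stop_words]


print(remove_stop_words("This is an example of the stop-word removal step"))
# ['example', 'stop', 'word', 'removal', 'step']
```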

3. Stemming vs. Lemmatization

- Stemming removes suffixes to get a base form (e.g., "removing" → "remov") and ignores context.

- Lemmatization reduces words to their dictionary form, considering context (e.g., "removing" → "remove").
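To make the contrast concrete, here is an illustrative sketch with a crude suffix-stripping stemmer and a lookup-table lemmatizer. Both the suffix rules and the `LEMMAS` dictionary are hypothetical stand-ins: real tools such as NLTK's `PorterStemmer` and `WordNetLemmatizer` use far richer rules and a full lexicon, and the lemmatizer also accepts a part-of-speech hint to resolve context (for example, "better" as an adjective maps to "good").

```python
# Hypothetical suffix rules, checked in order.
SUFFIXES = ("ing", "ed", "es", "s")

# Hypothetical mini-lexicon mapping inflected forms to dictionary forms.
LEMMAS = {"removing": "remove", "removed": "remove", "better": "good", "mice": "mouse"}


def crude_stem(word):
    """Strip the first matching suffix, with no regard for spelling or context."""
    for suffix in SUFFIXES:
        # Keep at least three characters so short words like "is" survive.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def lookup_lemmatize(word):
    """Return the dictionary form if the lexicon knows the word, else the word itself."""
    return LEMMAS.get(word, word)


for w in ["removing", "removed", "better", "mice"]:
    print(w, crude_stem(w), lookup_lemmatize(w))
# removing remov remove
# removed remov remove
# better better good
# mice mice mouse
```

The stemmer produces non-words like "remov" and cannot handle irregular forms, while the lemmatizer returns real dictionary words but only for entries its lexicon contains.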

4. Handling Special Characters

Use regular expressions (re library) to eliminate punctuation and special characters that do not add value.
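A minimal sketch of regex-based cleanup follows. The pattern `[^\w\s]` (anything that is neither a word character nor whitespace) is one common choice, not the only one; replacing matches with a space rather than deleting them keeps "5/5" from collapsing into "55".

```python
import re

raw = "Wow!!! Loved it :) -- 5/5 stars, would buy again... #happy"

# Replace anything that is not a word character or whitespace with a space,
# then collapse repeated whitespace and trim the ends.
cleaned = re.sub(r"[^\w\s]", " ", raw)
cleaned = re.sub(r"\s+", " ", cleaned).strip()
print(cleaned)
# Wow Loved it 5 5 stars would buy again happy
```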

5. Case Normalization

Convert text to lower- or uppercase using .lower() or .upper() to standardize words.
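For case normalization, `str.casefold()` is a more aggressive alternative to `.lower()` intended for caseless matching. The small comparison below shows the difference on the German "ß".

```python
words = ["Python", "PYTHON", "python", "Straße", "STRASSE"]

lowered = {w.lower() for w in words}        # "Straße" stays "straße"
casefolded = {w.casefold() for w in words}  # "Straße" becomes "strasse"

print(sorted(lowered))
# ['python', 'strasse', 'straße']
print(sorted(casefolded))
# ['python', 'strasse']
```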

6. Dealing With Multilingual Text

Detect languages with libraries such as langdetect, fastText, or NLTK's TextCat before processing multilingual corpora, so that each document gets language-appropriate tokenizers, stop-word lists, and models.
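Purely to illustrate the idea behind language identification, the sketch below scores each text against tiny stop-word profiles and picks the best match. The profiles are hypothetical and far too small for production; libraries like langdetect and fastText instead rely on character n-gram statistics learned from large corpora and are much more robust on short or mixed-language text.

```python
import re

# Hypothetical, deliberately tiny stop-word profiles per language.
PROFILES = {
    "en": {"the", "and", "is", "of", "to", "in", "it"},
    "fr": {"le", "la", "et", "est", "de", "les", "un"},
    "es": {"el", "la", "y", "es", "de", "los", "un"},
}


def guess_language(text, profiles=PROFILES):
    """Return the language whose stop words occur most often in the text, or None."""
    tokens = re.findall(r"\w+", text.lower())
    scores = {lang: sum(tok in words for tok in tokens) for lang, words in profiles.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


docs = [
    "The battery is great and it charges fast",
    "Le produit est arrivé en retard et la boîte est abîmée",
    "El envío es rápido y el producto es de buena calidad",
]
for d in docs:
    print(guess_language(d))
# en
# fr
# es
```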

What Are the Basic Text Analytics Techniques?

With preprocessed data, you can perform basic analytics:

- Word-frequency analysis counts word occurrences across your dataset.

- Vocabulary richness compares the number of unique words (found by converting the token list to a set) with the total number of words, for example as a type-token ratio.

- Readability scores calculate metrics such as Flesch or Gunning Fog to assess text complexity.

- Basic statistics include calculating mean document length and other descriptive measures.

- Pattern matching with RegEx allows you to extract or split text via patterns.

- N-gram analysis studies sequences of n words or characters to identify common phrases and patterns.
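Several of the techniques above fit in a few lines of standard-library Python. The sketch below computes word frequencies, a type-token ratio as one measure of vocabulary richness, mean document length, and bigram counts over three made-up reviews; the simple `\w+` tokenizer is an assumption.

```python
import re
from collections import Counter
from statistics import mean

docs = [
    "The app is fast and the design is clean.",
    "The app crashes when I upload photos.",
    "Fast support, clean design, great app.",
]

# Tokenize each document into lowercase words.
tokenized = [re.findall(r"\w+", d.lower()) for d in docs]
all_tokens = [tok for doc in tokenized for tok in doc]

# Word-frequency analysis.
freq = Counter(all_tokens)

# Vocabulary richness as a type-token ratio (unique words / total words).
ttr = len(set(all_tokens)) / len(all_tokens)

# Basic statistics: mean document length in tokens.
mean_len = mean(len(doc) for doc in tokenized)

# N-gram analysis: bigrams counted within each document, not across boundaries.
bigrams = Counter(pair for doc in tokenized for pair in zip(doc, doc[1:]))

print(freq.most_common(3))
# [('the', 3), ('app', 3), ('is', 2)]
print(round(ttr, 3), round(mean_len, 2))
# 0.636 7.33
print(bigrams.most_common(2))
# [(('the', 'app'), 2), (('app', 'is'), 1)]
```

Note that "the" tops the frequency list, which is exactly why stop-word removal usually comes first.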

How Does Sentiment Analysis Work in Python?

Sentiment analysis identifies the opinions and attitudes expressed in text, for example analyzing product reviews to understand user experience.

- Rule-Based Approaches: Lexicon-based methods apply predefined rules and are easy to implement, but less effective on complex data.

- Machine-Learning Methods: Train models such as decision trees, SVMs, or neural networks on labeled data. These methods are suitable for large datasets but computationally expensive.

- Polarity Detection: Classify text as positive, negative, or neutral—commonly used for social-media analysis. This technique helps businesses understand overall customer sentiment toward their products or services.

- Subjectivity Analysis: Distinguish factual statements (objective) from personal opinions or feelings (subjective). This analysis helps identify which parts of the text contain opinions versus factual information.

- Emotion Detection: Identify emotions such as anger, fear, joy, or excitement. This granular analysis provides deeper insights into user emotional responses beyond simple positive or negative classifications.

- Handling Negations: Words like "not" or "never" invert sentiment polarity, so "not good" should count as negative. Libraries such as negspacy, a spaCy extension based on the NegEx algorithm, help detect negations in spaCy pipelines.
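The sketch below combines a rule-based lexicon approach with simple negation handling: a sentiment word's score is flipped when it appears within a few tokens after a negator. The lexicon, negator list, and three-token window are hypothetical choices for illustration; production lexicons such as VADER (available through NLTK) are much larger and add rules for intensifiers, punctuation, and capitalization.

```python
import re

# Hypothetical mini-lexicon and negator list.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0, "bad": -1.0, "terrible": -2.0, "slow": -1.0}
NEGATORS = {"not", "never", "no", "isn't", "don't"}


def lexicon_sentiment(text, window=3):
    """Sum word scores, flipping the sign of words that follow a negator
    within `window` tokens. Returns (score, label)."""
    tokens = re.findall(r"[\w']+", text.lower())
    score = 0.0
    negate_left = 0  # how many upcoming tokens are still inside a negation scope
    for tok in tokens:
        if tok in NEGATORS:
            negate_left = window
            continue
        value = LEXICON.get(tok, 0.0)
        score += -value if negate_left > 0 else value
        negate_left = max(negate_left - 1, 0)
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label


print(lexicon_sentiment("I love this phone, the camera is great"))
# (4.0, 'positive')
print(lexicon_sentiment("The battery is not good and shipping was slow"))
# (-2.0, 'negative')
print(lexicon_sentiment("It arrived on Tuesday"))
# (0.0, 'neutral')
```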

How Are Graph Neural Networks Revolutionizing Text Classification?

Graph Neural Networks (GNNs) offer an increasingly important approach in text analysis: instead of processing text as a linear sequence, they model it as a graph. Documents, words, and semantic concepts become nodes in a network, which lets GNNs capture relationships that sequential models often miss.

Traditional text classification methods process documents as bags of words or sequential tokens, missing crucial structural relationships between concepts. GNNs address this limitation by constructing graphs where nodes represent different textual elements and edges capture various types of relationships—syntactic dependencies, semantic similarities, co-occurrence patterns, or domain-specific connections.

Document-Level Graph Construction

In document-level applications, GNNs create graphs where each document becomes a node connected to other documents based on similarity metrics or shared entities. This approach proves particularly powerful for tasks like document clustering, citation analysis, and recommendation systems where understanding relationships between entire documents drives performance.
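As a minimal sketch of document-level graph construction, the code below links documents whose bag-of-words cosine similarity meets a threshold and returns an adjacency list. The 0.3 threshold and raw term counts are assumptions; real pipelines often use TF-IDF or embedding vectors, and the resulting graph would then be passed to a GNN library such as PyTorch Geometric or DGL, which this sketch does not cover.

```python
import math
import re
from collections import Counter
from itertools import combinations


def bow(text):
    """Bag-of-words term counts for one document."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def document_graph(docs, threshold=0.3):
    """Adjacency list: connect two documents when cosine similarity >= threshold."""
    vectors = [bow(d) for d in docs]
    graph = {i: set() for i in range(len(docs))}
    for i, j in combinations(range(len(docs)), 2):
        if cosine(vectors[i], vectors[j]) >= threshold:
            graph[i].add(j)
            graph[j].add(i)
    return graph


docs = [
    "refund request for damaged laptop",
    "laptop arrived damaged, need refund",
    "great recipe for banana bread",
]
print(document_graph(docs))
# {0: {1}, 1: {0}, 2: set()}
```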

Word-Level Graph Construction

Word-level graph construction represents individual words or phrases as nodes, with edges indicating relationships such as syntactic dependencies, semantic similarities, or co-occurrence within specific contexts. This granular approach enables sophisticated analysis of language patterns and semantic relationships that benefit tasks like named entity recognition and relation extraction.
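Word-level graphs are often built from co-occurrence inside a sliding window. The sketch below counts, for each pair of distinct words, how often they appear within `window` positions of each other; the window size is an assumption, and approaches such as TextGCN additionally weight word-word edges by pointwise mutual information and add document-word edges.

```python
import re
from collections import Counter


def cooccurrence_graph(docs, window=2):
    """Weighted word graph: edge weight = number of times two distinct words
    appear within `window` positions of each other in the same document."""
    edges = Counter()
    for doc in docs:
        tokens = re.findall(r"\w+", doc.lower())
        for i, left in enumerate(tokens):
            for right in tokens[i + 1 : i + 1 + window]:
                if left != right:
                    # Sort the pair so the graph is undirected.
                    edges[tuple(sorted((left, right)))] += 1
    return edges


docs = ["battery life is short", "short battery life again"]
graph = cooccurrence_graph(docs)
print(graph[("battery", "life")], graph[("battery", "short")])
# 2 1
```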

Heterogeneous Graph Networks

Modern GNN architectures support heterogeneous graphs containing multiple node types (words, entities, documents, authors) and edge types (syntactic, semantic, temporal relationships). This flexibility allows modeling of complex real-world scenarios where text analysis requires understanding multiple types of relationships simultaneously.

The power of GNNs in text analysis extends beyond traditional classification tasks to include complex reasoning over textual knowledge graphs, multi-hop relationship extraction, and sophisticated question-answering systems that require understanding of indirect connections between concepts.

What Makes Transformer-Based Dynamic Topic Modeling So Powerful?

Dynamic topic modeling with transformers is a major advance in understanding how topics evolve over time within large text collections. Standard topic modeling approaches like Latent Dirichlet Allocation (LDA) provide static snapshots of topics and do not capture how topics emerge, evolve, and disappear over time.

BERTopic embeds documents with transformer models, clusters these dense, contextually aware embeddings, and describes each cluster with a class-based TF-IDF (c-TF-IDF) word ranking. Because the clusters are built from contextual embeddings, they capture semantic nuances that bag-of-words approaches miss, so topics tend to be semantically coherent rather than based only on word co-occurrence patterns.

Temporal Topic Evolution

Dynamic topic modeling tracks how topics change over time, revealing patterns of emergence, growth, decline, and transformation. This capability proves invaluable for analyzing social media trends, research paper evolution, news topic development, and customer feedback patterns across product lifecycles.

The integration of transformer models like BERT, RoBERTa, or domain-specific variants provides contextual understanding that can substantially improve topic coherence and interpretability. Unlike traditional methods that treat words as isolated units, transformer-based approaches represent how a word's meaning changes with context.
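To show the mechanics of tracking topics over time, here is a simplified sketch in the spirit of BERTopic's topics-over-time analysis. It assumes each document already has a topic assignment (in BERTopic these come from clustering transformer embeddings) and scores words for every (topic, time bin) pair with class-based TF-IDF, W = (tf / class size) × log(1 + A / f), where f is the word's frequency across all classes and A is the average number of words per class. Treating each (topic, time bin) pair as its own class is a simplification of what BERTopic does internally, and the function name, documents, and labels are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def ctfidf_topics_over_time(docs, topics, timestamps, top_n=2):
    """Return {(topic, time_bin): top words}, scoring words with c-TF-IDF where every
    (topic, time_bin) pair is treated as one class (a simplification)."""
    # Concatenate the tokens of all documents that share a (topic, time_bin) pair.
    classes = defaultdict(Counter)
    for doc, topic, ts in zip(docs, topics, timestamps):
        classes[(topic, ts)].update(re.findall(r"\w+", doc.lower()))

    # f: frequency of each word across all classes; A: average words per class.
    word_freq = Counter()
    for counts in classes.values():
        word_freq.update(counts)
    avg_words = sum(word_freq.values()) / len(classes)

    result = {}
    for key in sorted(classes):
        counts = classes[key]
        size = sum(counts.values())
        # W = (tf / class size) * log(1 + A / f)
        scores = {w: (c / size) * math.log(1 + avg_words / word_freq[w]) for w, c in counts.items()}
        # Highest score first; ties broken alphabetically for deterministic output.
        result[key] = sorted(scores, key=lambda w: (-scores[w], w))[:top_n]
    return result


# Hypothetical documents already assigned to topic 0 ("delivery"), in two time bins.
docs = [
    "delivery late courier lost package",
    "courier late again",
    "drone delivery fast",
    "drone drop off was fast",
]
topics = [0, 0, 0, 0]
timestamps = [2023, 2023, 2024, 2024]
print(ctfidf_topics_over_time(docs, topics, timestamps))
# {(0, 2023): ['courier', 'late'], (0, 2024): ['drone', 'fast']}
```

In this toy example the same delivery topic shifts from couriers to drones between the two time bins, which is the kind of evolution dynamic topic modeling is meant to surface.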

Hierarchical Topic Structure

Advanced implementations support hierarchical topic modeling, revealing topic relationships at multiple levels of granularity. This capability enables analysis ranging from broad thematic categories to specific subtopics, providing flexibility for different analytical needs and stakeholder requirements.

The dynamic aspect can also extend beyond temporal change: some implementations support updating a model incrementally as new documents, domains, or languages arrive, rather than retraining from scratch. This flexibility makes transformer-based topic modeling particularly valuable for organizations dealing with evolving content types and domains.

What Is Named Entity Recognition (NER)?

NER identifies and classifies entities such as names, locations, dates, and times.

Common entity types:

- Person identification – e.g., Dr. Jane Doe

- Organization detection – e.g., Meta, UN

- Location extraction – e.g., New York, Bengaluru

- Date/time recognition – e.g., 2024-01-05, 2:10 PM

- Custom entity training – domain-specific entities

- Entity linking – associate entities with a knowledge base
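Statistical NER models such as spaCy's pretrained pipelines (which expose results as `doc.ents`, each with a `label_` like PERSON, ORG, or DATE) are the usual choice for names and organizations. For rigidly formatted entities like the date and time examples above, a rule-based sketch with regular expressions can work; the patterns below assume ISO dates and 12-hour clock times and are not a general date parser.

```python
import re

# Rule-based patterns for two entity types (assumed formats:
# ISO dates like 2024-01-05 and 12-hour times like 2:10 PM).
PATTERNS = {
    "DATE": re.compile(r"\b\d{4}-(?:0[1-9]|1[0-2])-(?:0[1-9]|[12]\d|3[01])\b"),
    "TIME": re.compile(r"\b(?:1[0-2]|0?[1-9]):[0-5]\d\s?(?:AM|PM)\b", re.IGNORECASE),
}


def extract_entities(text):
    """Return (entity_text, label, start) tuples ordered by position in the text."""
    found = [
        (m.group(), label, m.start())
        for label, pattern in PATTERNS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(found, key=lambda item: item[2])


sample = "The meeting moved from 2024-01-05 at 2:10 PM to 2024-01-12, 9:30 am."
print(extract_entities(sample))
```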

How Does Text Classification Work in Python?

Text classification structures raw text into predefined categories using various machine learning and deep learning approaches.

Multi-Label Classification

Assign multiple labels to a single text document. For example, a movie review might be labeled as both horror and thriller, capturing multiple relevant categories simultaneously.

Model-Evaluation Metrics

- Accuracy measures the proportion of correct predictions across all classes.

- Precision calculates the ratio of true positives to all positive predictions.

- Recall determines the ratio of true positives to all actual positives.

- F1-score provides the harmonic mean of precision and recall, particularly useful for imbalanced datasets.
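The four metrics can be computed directly from predicted and true labels. The sketch below does so for a single positive class on hypothetical, imbalanced data; in practice, scikit-learn's `sklearn.metrics` module provides `precision_recall_fscore_support` and `classification_report` for the same purpose, including macro and micro averaging.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class, from scratch."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 1 = "complaint", 0 = "other".
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
print(binary_metrics(y_true, y_pred))
```

Here accuracy is 0.8 while precision, recall, and F1 are each about 0.67, illustrating why accuracy alone can flatter a model on imbalanced data.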

What Are the Advanced Text Processing Techniques?

Advanced techniques extend beyond basic preprocessing and analysis to provide sophisticated insights and capabilities.

- Text Summarization: Combine spaCy with PyTextRank for extractive summarization, or use transformer models from HuggingFace for abstractive summarization.

- Cross-Lingual Analysis: Leverage transfer learning to apply knowledge from high-resource languages like English to low-resource languages.

- Text Generation: Generate text using statistical n-gram language models, recurrent neural networks such as RNNs and LSTMs, or modern transformer language models such as GPT.
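For extractive summarization, one of the simplest baselines scores sentences by how frequent their words are across the whole text and keeps the top-scoring ones. The sketch below implements that frequency heuristic, which is a different technique from the graph-based TextRank ranking used by PyTextRank; the stop-word list, sentence-splitting regex, and sample text are assumptions.

```python
import re
from collections import Counter

# Hypothetical stop-word list for illustration.
STOP_WORDS = {"the", "a", "an", "and", "is", "are", "was", "to", "of", "in", "it", "on", "for", "with"}


def frequency_summary(text, n_sentences=2):
    """Extractive summary: score each sentence by the average text-wide frequency of its
    non-stop-word tokens and return the top sentences in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"\w+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)

    def score(sentence):
        toks = [w for w in re.findall(r"\w+", sentence.lower()) if w not in STOP_WORDS]
        return sum(freq[w] for w in toks) / len(toks) if toks else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:n_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)


text = (
    "The new battery lasts two days. Battery life was the main complaint before. "
    "The packaging is recyclable. Reviewers say battery life now beats rivals."
)
print(frequency_summary(text))
# Battery life was the main complaint before. Reviewers say battery life now beats rivals.
```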

How Do You Work With Large Text Datasets?

Best practices include:

- Efficient preprocessing removes non-essential components early in the pipeline to reduce computational overhead.

- Parallel processing distributes tasks across multiple nodes or cores to improve performance.

- Memory optimization uses techniques like columnar storage, partitioning, and compression to manage resource usage effectively.

- Batch processing often suits massive historical datasets because it gives consistent, repeatable runs and efficient resource utilization; streaming is the better fit when text arrives continuously and must be analyzed with low latency.

- Scaling strategies include sharding, Apache Spark, MapReduce, and dimensionality reduction techniques to handle datasets that exceed single-machine capabilities.

- Performance monitoring tracks memory usage, CPU utilization, and key processing metrics to identify bottlenecks and optimization opportunities.
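The batch-processing and memory-optimization ideas above can be sketched with a generator that reads a file-like object in fixed-size batches and aggregates word counts incrementally. The batch size is an assumption, and in a real pipeline each batch could be handed to worker processes (for example via `concurrent.futures`), which this sketch omits.

```python
import io
import re
from collections import Counter
from itertools import islice


def iter_batches(lines, batch_size):
    """Yield lists of at most `batch_size` lines without loading the whole source."""
    iterator = iter(lines)
    while batch := list(islice(iterator, batch_size)):
        yield batch


def count_words_streaming(file_obj, batch_size=1000):
    """Aggregate word counts batch by batch, so memory depends on the vocabulary
    and batch size rather than on the total file size."""
    totals = Counter()
    for batch in iter_batches(file_obj, batch_size):
        for line in batch:
            totals.update(re.findall(r"\w+", line.lower()))
    return totals


# io.StringIO stands in for a large file opened with open(path, encoding="utf-8").
fake_file = io.StringIO("great app\nslow app\ngreat support\n")
print(count_words_streaming(fake_file, batch_size=2).most_common(2))
# [('great', 2), ('app', 2)]
```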

What Are the Best Text Visualization Techniques?

Effective visualization techniques help communicate complex text analysis findings to stakeholders.

Word Clouds

Word clouds provide intuitive visualization of word frequency and importance within text collections. They offer immediate visual impact for presentations and reports.

Network Graphs

Network graphs visualize relationships between entities, topics, or documents. These visualizations reveal connection patterns and influence relationships that are difficult to detect through other methods.

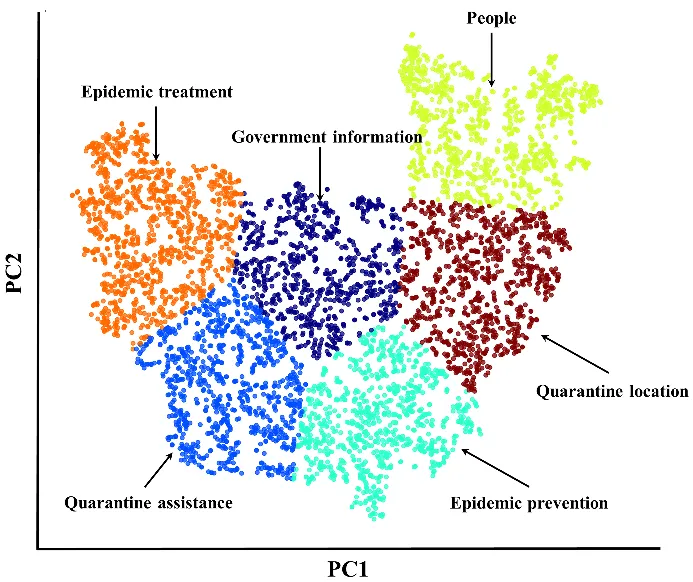

Topic Visualization

Topic visualization displays thematic clusters and their relationships within document collections. These plots help identify topic boundaries, overlaps, and hierarchical relationships.

Trend-Analysis Plots

Trend analysis visualizations track how topics, sentiment, or other text metrics change over time. These plots are essential for understanding temporal patterns and predicting future developments.

Interactive Dashboards

Interactive dashboards combine multiple visualization types to provide comprehensive analysis interfaces. Users can explore data through filtering, drilling down, and comparing different metrics.

Custom Visualizations

Custom visualizations use libraries such as Matplotlib or Seaborn to meet specific analytical and presentation requirements.

How Is HuggingFace Paving the Way for Text Analysis?

HuggingFace is an open-source ecosystem offering robust tools for machine-learning and deep-learning tasks. Features such as community-shared models, extensive datasets, and streamlined model fine-tuning have broadened access to advanced text-analysis capabilities.

Conclusion

Python's extensive NLP libraries make it a powerful tool for text analysis, providing everything from basic preprocessing to advanced machine learning capabilities. Proper preprocessing through tokenization, stop-word removal, and stemming or lemmatization forms the foundation for effective analysis. Modern approaches like Graph Neural Networks and transformer-based topic modeling represent the cutting edge of text analysis, offering deeper insight into complex textual relationships. Effective visualization and proper handling of large datasets ensure that insights can be communicated clearly and that analysis can scale to meet production requirements.

Frequently Asked Questions

1. What is the difference between NLTK, spaCy, and TextBlob for text analysis?

NLTK is best for learning and research, offering extensive tools and corpora for detailed text analysis. spaCy is optimized for production with fast, pre-trained models suitable for large-scale NLP tasks. TextBlob is beginner-friendly, providing simple APIs for quick prototyping.

2. How do I handle text preprocessing for different languages?

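Handling text preprocessing across multiple languages requires tailoring the methods to each language's linguistic features. Some steps, such as Unicode normalization, apply broadly, while others must follow language-specific rules: languages like Chinese and Japanese need dedicated word segmentation because words are not separated by spaces, and stop-word lists, stemmers, and lemmatizers differ from language to language.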

3. What are the best practices for handling large text datasets in Python?

Best practices include preprocessing text early to reduce size, using batch or chunked processing, leveraging parallelization across cores or nodes, optimizing memory with compression and partitioning, and employing scalable frameworks like Spark or Dask for very large datasets.

4. How do I choose between stemming and lemmatization for my text analysis project?

Use stemming for faster, approximate normalization when speed matters; use lemmatization when accuracy and proper dictionary forms matter, especially for context-sensitive analysis. Lemmatization improves downstream results but is computationally heavier than stemming.

5. What evaluation metrics should I use for text classification models?

Use accuracy for balanced data, precision for costly false positives, recall for costly false negatives, and F1-score to balance both on imbalanced data. For multi-class tasks, apply macro or micro averaging to evaluate overall model performance.
