11 Types Of Python Data Structures For Data Analysis


Python data structures, including mutable types like lists, dictionaries, and sets, and immutable types like tuples, form the backbone of efficient data analysis and engineering workflows. Key advancements in Python 3.9+ improve performance and memory management, while libraries like NumPy and Pandas extend functionality for large-scale numerical and structured data processing. Integrating these structures with modern data engineering tools and techniques—such as streaming, batch processing, and schema validation—enables scalable, high-performance data pipelines.

Data professionals often find that their biggest bottlenecks aren’t complex algorithms or machine learning models, but the challenge of organizing and manipulating data efficiently with the right structures. Python data structures form the foundation of data analysis workflows, yet performance issues, memory inefficiencies, and integration challenges can significantly impact large-scale operations.

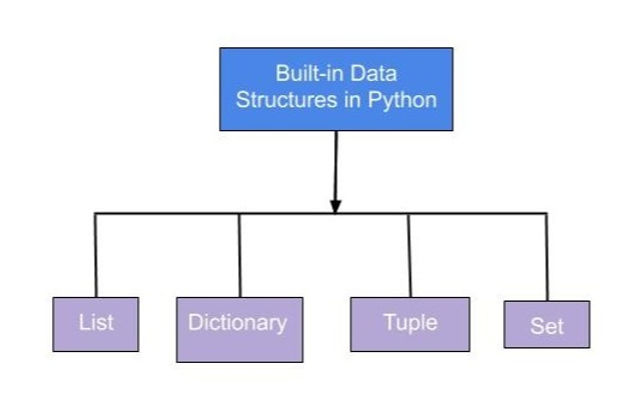

Core data structures, such as mutable types like lists, dictionaries, and sets, and immutable types like tuples, play a crucial role in building efficient workflows. A strong understanding of these structures is especially important for beginners in data science, as it enables better data manipulation, problem solving, and overall programming effectiveness.

This guide explores essential Python data structures for data analysis, covering both foundational concepts and advanced optimization techniques to address real-world performance challenges.

What Are Data Structures?

Data structures are ways of organizing and storing data in a computer's memory so that it can be accessed, modified, and retrieved efficiently. Understanding them is fundamental to computer science, programming, and software development.

What Are Python Data Structures and How Do They Work?

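Python's built-in data structures fall into two groups: mutable ones (lists, dictionaries, sets), which can be changed after creation, and immutable ones (tuples, strings, frozensets), which cannot.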

Third-party packages add more structures, such as DataFrames and Series in Pandas or arrays in NumPy.

Understanding common data structures in Python is crucial for organizing and manipulating data efficiently, which is foundational for writing effective and maintainable code.

Lists

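Lists are dynamic, mutable arrays that can hold elements of different types. Indexing and appending are fast (O(1), amortized for appends), while inserting or deleting near the front is O(n) because later elements must shift. Newer CPython releases (notably 3.11) have also brought general interpreter speedups that benefit list-heavy data processing code.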
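A short sketch of everyday list operations. The readings are made-up values used only for illustration.

```python
# Illustrative readings; the values are made up for the example
readings = [12.5, 9.8, 15.1]

readings.append(11.2)          # amortized O(1) append at the end
readings.extend([8.4, 13.7])   # add several items at once
readings.insert(0, 10.0)       # O(n): every existing element shifts right

# Slicing returns a new list; here, the first three items
first_three = readings[:3]

# Lists may mix types, though homogeneous lists are easier to analyze
record = ["sensor-1", 42, True]

# sorted() returns a sorted copy and leaves the original order intact
ordered = sorted(readings)
print(ordered[0], ordered[-1])  # smallest and largest: 8.4 15.1
```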

Dictionaries

Dictionaries are mutable mappings of key: value pairs that, since Python 3.7, preserve insertion order; keys must be hashable. Python 3.9 added the merge operator (|) and update operator (|=) as concise alternatives to {**a, **b} and dict.update().

Common methods: clear(), copy(), fromkeys(), pop(), values(), update(). Related type: collections.defaultdict, a dict subclass that automatically creates a default value for missing keys.
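The sketch below exercises a few of these methods and uses defaultdict to group records. The product IDs and orders are hypothetical sample data.

```python
from collections import defaultdict

# A plain dict keyed by product ID (hypothetical data)
prices = {"A1": 9.99, "B2": 4.50}
prices["C3"] = 12.00                       # add or overwrite a key
removed = prices.pop("B2")                 # remove a key and return its value
backup = prices.copy()                     # shallow copy
defaults = dict.fromkeys(["x", "y"], 0)    # {'x': 0, 'y': 0}

# defaultdict calls list() to create a value the first time a key is seen,
# so grouping needs no "if key not in d" checks
orders = [("alice", "A1"), ("bob", "C3"), ("alice", "C3")]
by_customer = defaultdict(list)
for customer, product in orders:
    by_customer[customer].append(product)

print(dict(by_customer))  # {'alice': ['A1', 'C3'], 'bob': ['C3']}
```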

Sets

Sets store unique, unordered elements and are optimized for membership testing with O(1) average-case performance.

Key methods: add(), clear(), discard(), union(), pop().
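A minimal sketch of set behavior: automatic deduplication, fast membership checks, and the set algebra operators. The IDs and tags are illustrative.

```python
# Sets deduplicate automatically and support fast membership checks
seen_ids = {101, 102, 103}
seen_ids.add(104)
seen_ids.add(101)          # duplicate: the set is unchanged
seen_ids.discard(999)      # discard() ignores missing items; remove() would raise KeyError

print(103 in seen_ids)     # True, average O(1) lookup

new_batch = {103, 104, 105}
all_ids = seen_ids.union(new_batch)   # same as seen_ids | new_batch
overlap = seen_ids & new_batch        # intersection
only_new = new_batch - seen_ids       # difference

# A common idiom: deduplicate a list (original order is not preserved)
unique_tags = set(["etl", "sql", "etl", "python"])
```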

Tuples

Tuples are immutable sequences that provide memory-efficient storage and can serve as dictionary keys when they contain only hashable elements.

Methods: count(), index().
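The sketch below shows tuples used as fixed records and as dictionary keys, plus the two tuple methods. The coordinates and statuses are illustrative.

```python
# A tuple as a fixed-size, immutable record
point = (40.7128, -74.0060)
lat, lon = point                  # tuple unpacking

# Tuples of hashable values can be dictionary keys; lists cannot
city_by_coords = {point: "New York"}

statuses = ("ok", "error", "ok", "ok")
print(statuses.count("ok"))       # 3
print(statuses.index("error"))    # 1

try:
    statuses[0] = "failed"        # tuples cannot be modified in place
except TypeError as exc:
    print("immutable:", exc)
```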

What User-Defined Python Data Structures Are Available?

Stack

A stack follows the Last-In-First-Out (LIFO) principle and is commonly used in parsing tasks such as bracket matching and for running recursive algorithms iteratively.

Method 1: list

Method 2: collections.deque
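Both methods can be sketched as follows. The is_balanced helper is a hypothetical example of stack-based parsing, not a library function.

```python
from collections import deque

# Method 1: a list used as a stack; append() and pop() both work on the end
stack = []
stack.append("a")
stack.append("b")
stack.append("c")
top = stack.pop()          # 'c' was pushed last, so it comes out first

# Method 2: collections.deque offers the same append()/pop() interface
dstack = deque()
dstack.append(1)
dstack.append(2)
dtop = dstack.pop()        # 2

# Typical use: checking balanced brackets while parsing
def is_balanced(text):
    pairs = {")": "(", "]": "[", "}": "{"}
    opened = []
    for ch in text:
        if ch in "([{":
            opened.append(ch)          # push every opening bracket
        elif ch in pairs:
            # a closing bracket must match the most recent opening one
            if not opened or opened.pop() != pairs[ch]:
                return False
    return not opened                  # leftover openers mean unbalanced

print(is_balanced("{[()]}"), is_balanced("(]"))  # True False
```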

Linked Lists

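Linked lists store elements in nodes that each reference the next node, so memory is allocated one node at a time. Inserting or removing a node is O(1) once you hold a reference to its neighbor, which makes linked lists useful when insertions and deletions at arbitrary positions are frequent. Python has no general-purpose built-in linked list type, so one is usually written as a small custom class.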
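A minimal singly linked list, assuming only the operations discussed above are needed. The Node and LinkedList classes and their method names are hypothetical, written for illustration.

```python
class Node:
    """One element of a singly linked list (illustrative class, not a built-in)."""
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class LinkedList:
    """Minimal singly linked list with O(1) insertion at the head."""
    def __init__(self):
        self.head = None

    def push_front(self, value):
        self.head = Node(value, self.head)

    def insert_after(self, node, value):
        # O(1): only one pointer changes, no elements are shifted
        node.next = Node(value, node.next)

    def remove_after(self, node):
        # Unlink the node that follows `node`, if there is one
        if node.next is not None:
            node.next = node.next.next

    def __iter__(self):
        current = self.head
        while current is not None:
            yield current.value
            current = current.next


items = LinkedList()
for v in (3, 2, 1):
    items.push_front(v)                # list is now 1 -> 2 -> 3
items.insert_after(items.head, 1.5)    # 1 -> 1.5 -> 2 -> 3
items.remove_after(items.head.next)    # removes 2: 1 -> 1.5 -> 3
print(list(items))                     # [1, 1.5, 3]
```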

Queues

Queues follow the First-In-First-Out (FIFO) principle and are fundamental to breadth-first search algorithms and task scheduling systems.

Method 1: list (simple, but pop(0) is O(n) because the remaining elements shift left)

Method 2: collections.deque (O(1) operations for both ends).
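A sketch of both methods, followed by a breadth-first search that relies on deque.popleft(). The task names and graph are made up for illustration.

```python
from collections import deque

# Method 1: list as a queue. pop(0) works but is O(n)
task_list = ["load", "clean", "export"]
first = task_list.pop(0)       # 'load'

# Method 2: deque gives O(1) appends and pops at both ends
tasks = deque(["load", "clean"])
tasks.append("export")         # enqueue on the right
next_task = tasks.popleft()    # dequeue from the left: 'load'

# Breadth-first search over a small, made-up graph
graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}

def bfs(start):
    visited, order = {start}, []
    queue = deque([start])
    while queue:
        node = queue.popleft()          # oldest discovered node first
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)
    return order

print(bfs("A"))  # ['A', 'B', 'C', 'D']
```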

Heaps (heapq)

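Python's heapq module implements a binary min-heap on top of an ordinary list: the smallest item is always at index 0, and pushes and pops cost O(log n). This makes heaps a natural fit for priority queues and for algorithms that repeatedly need the smallest (or largest) remaining item.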

Functions: heapify(), heappush(), heappop(), nlargest(), nsmallest().
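The listed functions in a short sketch, including a priority queue built from (priority, task) tuples. The latencies, jobs, and scores are illustrative values.

```python
import heapq

# heapq keeps the smallest item at index 0 of an ordinary list (a min-heap)
latencies = [120, 45, 300, 80, 15]
heapq.heapify(latencies)            # O(n), rearranges the list in place
heapq.heappush(latencies, 60)       # O(log n)
fastest = heapq.heappop(latencies)  # 15, the smallest value

# Priority queue of (priority, task) tuples; a lower number means higher priority
jobs = []
heapq.heappush(jobs, (2, "refresh dashboard"))
heapq.heappush(jobs, (1, "ingest orders"))
heapq.heappush(jobs, (3, "archive logs"))
priority, job = heapq.heappop(jobs)   # (1, 'ingest orders')

scores = [88, 92, 75, 99, 61]
print(heapq.nlargest(2, scores), heapq.nsmallest(2, scores))  # [99, 92] [61, 75]
```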

How Do Data Analysis Libraries Enhance Python Data Structures?

NumPy Arrays

NumPy arrays provide vectorized operations and memory-efficient storage for numerical computing, offering significant performance advantages over Python lists for mathematical operations.

Common constructors: np.array(), np.zeros(), np.arange(), np.ones(), np.linspace().
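A sketch of the constructors above plus vectorized arithmetic and boolean masking, assuming NumPy is installed. The prices are illustrative.

```python
import numpy as np

prices = np.array([10.0, 12.5, 9.0, 11.0])
zeros = np.zeros(3)               # [0., 0., 0.]
ones = np.ones((2, 2))            # 2x2 array of 1.0
steps = np.arange(0, 10, 2)       # [0, 2, 4, 6, 8]
grid = np.linspace(0.0, 1.0, 5)   # [0., 0.25, 0.5, 0.75, 1.]

# Vectorized arithmetic applies to every element without a Python loop
with_tax = prices * 1.2
above_ten = prices[prices > 10]   # boolean mask keeps [12.5, 11.]
print(prices.mean(), above_ten)   # 10.625 [12.5 11. ]
```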

Pandas Series

Series provide labeled data with automatic alignment and missing data handling capabilities.

Common attributes and methods: size (an attribute, not a method), head(), tail(), unique(), value_counts(), fillna().
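A sketch of a labeled Series with a missing value, showing the listed members and index alignment. It assumes pandas and NumPy are installed; the temperatures are made up.

```python
import numpy as np
import pandas as pd

# A labeled Series with one missing value (NaN)
temps = pd.Series([21.5, np.nan, 23.0, 21.5], index=["mon", "tue", "wed", "thu"])

print(temps.size)             # 4, an attribute rather than a method
print(temps.head(2))          # first two entries
print(temps.unique())         # distinct values, NaN included
print(temps.value_counts())   # frequency of each non-null value

filled = temps.fillna(temps.mean())   # mean() skips NaN, so this fills with 22.0

# Arithmetic aligns on index labels; labels missing from either side give NaN
delta = temps - pd.Series([1.0, 1.0], index=["mon", "fri"])
```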

DataFrames

DataFrames offer two-dimensional labeled data structures with integrated data analysis capabilities, making them ideal for structured data manipulation.

Methods: pop(), tail(), to_numpy(), head(), groupby(), merge(), pivot_table().
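A sketch that touches each listed method on a small, made-up sales table, assuming pandas is installed. Column names such as region and units are illustrative.

```python
import pandas as pd

# Small made-up sales table
sales = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "product": ["A", "A", "B", "B"],
    "units": [10, 4, 7, 9],
})
targets = pd.DataFrame({"region": ["north", "south"], "target": [15, 12]})

print(sales.head(2))
print(sales.tail(1))

# Aggregate units per region
per_region = sales.groupby("region", as_index=False)["units"].sum()

# Join with targets on the shared "region" column
report = per_region.merge(targets, on="region")

# Reshape: regions as rows, products as columns
wide = sales.pivot_table(index="region", columns="product", values="units", aggfunc="sum")

units_array = sales["units"].to_numpy()
product_col = sales.pop("product")    # removes the column and returns it as a Series
```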

Counter (collections.Counter)

Counter is a dict subclass that counts hashable items, which makes it convenient for frequency analysis of categorical data.

Methods: elements(), subtract(), update(), most_common().
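A short sketch of the listed Counter methods on a made-up list of support ticket categories.

```python
from collections import Counter

# Count categories in a made-up list of support tickets
tickets = ["billing", "login", "billing", "bug", "login", "billing"]
counts = Counter(tickets)
print(counts.most_common(2))        # [('billing', 3), ('login', 2)]

counts.update(["bug", "bug"])       # add more observations
counts.subtract(["login"])          # remove one observation
print(sorted(counts.elements()))    # each key repeated according to its count
```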

String

String operations are fundamental for text data preprocessing and natural language processing workflows.

Methods: split(), strip(), replace(), upper(), lower(), join(), startswith(), endswith().
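A sketch of typical cleanup on one messy input line using the listed methods. The record format is hypothetical.

```python
raw = "  Order-1001, SHIPPED , priority  "

fields = [part.strip() for part in raw.split(",")]   # ['Order-1001', 'SHIPPED', 'priority']
status = fields[1].lower()                           # 'shipped'
order_id = fields[0].replace("Order-", "")           # '1001'
is_order = fields[0].startswith("Order")             # True
is_priority = raw.strip().endswith("priority")       # True

# join() is called on the separator string, not on the list
cleaned = " | ".join(f.upper() for f in fields)      # 'ORDER-1001 | SHIPPED | PRIORITY'
```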

Matrix (NumPy)

Matrix operations enable linear algebra computations essential for machine learning and statistical analysis.

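Common operations: transpose(), dot() (or the @ operator), reshape(), sum(), mean(), std(), and np.linalg.inv(), which is a function in the np.linalg module rather than an array method.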
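A sketch of these operations on a small 2x2 NumPy array, including solving a linear system with the inverse. The matrix values are arbitrary.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])

At = A.transpose()            # same as A.T
product = A.dot(At)           # matrix product; A @ At is equivalent
flat = A.reshape(4)           # [2., 1., 1., 3.]
print(A.sum(), A.mean(), A.std())

A_inv = np.linalg.inv(A)      # the inverse lives in np.linalg, not on the array
x = A_inv @ b                 # solves A x = b; np.linalg.solve is preferred in practice
print(np.allclose(A @ x, b))  # True
```

np.linalg.solve(A, b) avoids forming the inverse explicitly and is generally more numerically stable; the inverse is used here only to demonstrate inv().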

How Do Modern Python Data Structure Advancements Address Performance Challenges?

Recent Python versions (3.9-3.13) have introduced significant improvements that directly address performance bottlenecks commonly faced by data professionals. Understanding these advancements enables you to write more efficient data processing code and avoid common performance pitfalls.

Dictionary Performance Enhancements

Python 3.9's merge (|) and update (|=) operators mainly improve readability: d1 | d2 returns a new dictionary, and d1 |= d2 updates d1 in place, with the right-hand value winning on duplicate keys. Performance is broadly similar to the older {**d1, **d2} and dict.update() idioms, so in data pipelines that merge dictionaries frequently the main benefit is cleaner, more explicit code.
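A sketch of both operators on a hypothetical pipeline configuration, with the pre-3.9 equivalent for comparison. It requires Python 3.9 or later.

```python
# Requires Python 3.9+; the config keys are hypothetical
defaults = {"retries": 3, "timeout": 30, "format": "csv"}
overrides = {"timeout": 60, "format": "parquet"}

# | builds a new dict; on duplicate keys the right-hand value wins
config = defaults | overrides

# Pre-3.9 equivalent
legacy = {**defaults, **overrides}

# |= updates the left-hand dict in place, like dict.update()
defaults |= {"retries": 5}
```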

Structural Pattern Matching for Data Processing

Python 3.10's structural pattern matching (match/case) lets you destructure dictionaries, sequences, and objects declaratively. This is particularly useful for processing the heterogeneous record formats common in data engineering workflows.

Memory Optimization Techniques

Modern Python versions include improved memory management for data structures, but additional optimization techniques can dramatically reduce memory usage in data-intensive applications.

Generator expressions and chained generators avoid memory bottlenecks on large datasets because they produce items one at a time instead of building the entire collection in memory.
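A sketch comparing a list comprehension with a generator expression, then chaining two generators into a lazy pipeline. The read_lines and parse helpers are hypothetical, and the small raw list stands in for a large file.

```python
import sys

# A list comprehension materializes every value up front
squares_list = [n * n for n in range(100_000)]

# A generator expression yields values lazily, one at a time
squares_gen = (n * n for n in range(100_000))

print(sys.getsizeof(squares_list) > sys.getsizeof(squares_gen))  # True

# Chaining generators keeps the whole pipeline lazy
def read_lines(lines):
    for line in lines:
        yield line.strip()

def parse(lines):
    for line in lines:
        if line:                     # skip blank lines
            yield int(line)

raw = ["10\n", "\n", "20\n", "30\n"]  # stands in for a large file
total = sum(parse(read_lines(raw)))  # 60
```

sys.getsizeof reports only the container's own size, not the integers it references, so the real gap in memory use is even larger than the comparison suggests.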

Concurrent Processing Optimizations

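While the Global Interpreter Lock (GIL) prevents threads from executing Python bytecode in parallel, which limits CPU-bound parallelism, Python still offers several strategies for working with data structures concurrently: threads (for example via concurrent.futures.ThreadPoolExecutor) for I/O-bound work, separate processes (multiprocessing or ProcessPoolExecutor) for CPU-bound work, and thread-safe structures such as queue.Queue for passing data between workers.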
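A minimal sketch of two of these strategies: a thread pool for I/O-bound calls and a thread-safe queue shared by worker threads. The fetch_length function is a hypothetical stand-in for real I/O such as an HTTP request, and the URLs are placeholders.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor

def fetch_length(url):
    # Hypothetical stand-in for an I/O call such as an HTTP request
    return len(url)

urls = ["https://a.example", "https://bb.example", "https://ccc.example"]
with ThreadPoolExecutor(max_workers=3) as pool:
    lengths = list(pool.map(fetch_length, urls))   # results keep input order

# queue.Queue is thread-safe, so several threads can put() into it safely
results = queue.Queue()

def worker(n):
    results.put(n * n)

threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()                                       # wait for all producers

collected = sorted(results.get() for _ in range(4))   # [0, 1, 4, 9]
```

For CPU-bound work, swapping ThreadPoolExecutor for ProcessPoolExecutor keeps the same map() interface while running tasks in separate processes, at the cost of pickling arguments and results.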

How Can You Integrate Python Data Structures in Data Engineering Workflows?

Modern data engineering requires seamless integration between Python's native data structures and specialized libraries designed for large-scale data processing. Understanding these integration patterns enables you to build efficient, scalable data pipelines that leverage the best characteristics of each structure type.

Streaming Data Processing Integration

Python data structures integrate effectively with streaming data architectures through generator-based processing and asynchronous patterns. Generators enable memory-efficient processing of unbounded data streams, while dictionaries provide fast lookup capabilities for real-time data enrichment.
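A sketch of generator-based stream enrichment with a dictionary lookup. The event_stream source, the IP-prefix table, and the field names are all hypothetical; itertools.islice takes a finite window from the infinite stream.

```python
import itertools

# Lookup table held in memory for O(1) enrichment (hypothetical data)
country_by_ip_prefix = {"10.0": "US", "10.1": "DE"}

def event_stream():
    # Stand-in for an unbounded source such as a socket or message consumer
    for i in itertools.count():
        yield {"id": i, "ip": f"10.{i % 3}.0.{i}"}

def enrich(events):
    for event in events:
        prefix = ".".join(event["ip"].split(".")[:2])   # e.g. '10.0'
        event["country"] = country_by_ip_prefix.get(prefix, "unknown")
        yield event

# Only five events are ever generated, even though the source is infinite
first_five = list(itertools.islice(enrich(event_stream()), 5))
```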

Batch Processing and ETL Pipeline Integration

Python data structures serve as intermediate storage and transformation layers in ETL pipelines, particularly when integrated with frameworks like Pandas, Dask, and PySpark. Lists and dictionaries handle metadata and configuration, while DataFrames manage the bulk data transformations.
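A small extract-transform-load sketch in which plain dicts and lists hold the configuration and DataFrames do the bulk transformation. It assumes pandas is installed; the config keys, column names, and rows are hypothetical.

```python
import pandas as pd

# Pipeline configuration kept in plain dicts and lists (hypothetical names)
config = {
    "rename": {"amt": "amount", "cust": "customer"},
    "required": ["customer", "amount"],
    "min_amount": 0,
}

# Extract: rows as a list of dicts, e.g. parsed from JSON
rows = [
    {"cust": "alice", "amt": 120.0},
    {"cust": "bob", "amt": -5.0},
    {"cust": None, "amt": 40.0},
]

# Transform: the bulk work happens in the DataFrame
df = (
    pd.DataFrame(rows)
    .rename(columns=config["rename"])
    .dropna(subset=config["required"])     # drops the row with no customer
)
df = df[df["amount"] >= config["min_amount"]]   # drops the negative amount

# Load: convert back to plain records for a downstream writer
records = df.to_dict(orient="records")
```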

Data Validation and Schema Management

Structured data validation leverages Python data structures for schema definition and validation logic, ensuring data quality throughout the pipeline. Dictionaries define schemas while sets enable efficient validation of allowed values.
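A minimal validation sketch, assuming a flat record and a schema that maps field names to Python types. SCHEMA, ALLOWED_STATUSES, and validate are hypothetical names chosen for illustration.

```python
# Hypothetical schema: field name -> expected Python type
SCHEMA = {"order_id": int, "status": str, "amount": float}
ALLOWED_STATUSES = {"pending", "shipped", "cancelled"}   # set: O(1) membership

def validate(record):
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    # dict key views support set operations such as difference
    missing = SCHEMA.keys() - record.keys()
    for field in sorted(missing):
        errors.append(f"missing field: {field}")
    for field, expected in SCHEMA.items():
        if field in record and not isinstance(record[field], expected):
            errors.append(f"{field}: expected {expected.__name__}")
    status = record.get("status")
    if isinstance(status, str) and status not in ALLOWED_STATUSES:
        errors.append(f"invalid status: {status!r}")
    return errors

print(validate({"order_id": "1", "status": "lost"}))
```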

High-Performance Data Structure Selection

Each data processing scenario favors a particular structure: sets and dictionaries for membership tests and lookups, deques for queues, NumPy arrays for numerical work, and DataFrames for tabular transformations. Choosing deliberately prevents bottlenecks in production workflows.
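One quick way to check a structure choice is to time the operation that dominates your workload. This sketch compares membership tests on a list and a set; the sizes and repeat count are arbitrary, and absolute timings will vary by machine.

```python
import timeit

ids_list = list(range(100_000))
ids_set = set(ids_list)
target = 99_999   # worst case for the list: the last element

# A list is scanned element by element (O(n)); a set uses hashing (O(1) on average)
list_time = timeit.timeit(lambda: target in ids_list, number=200)
set_time = timeit.timeit(lambda: target in ids_set, number=200)
print(f"list: {list_time:.4f}s  set: {set_time:.6f}s")
```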

Integration with Modern Data Engineering Tools

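Python's built-in structures map directly onto common interchange formats: lists and dictionaries serialize to JSON, and a list of dictionaries converts to and from a Pandas DataFrame, which can in turn read and write formats such as CSV and Parquet. This makes data exchange between components of the data stack straightforward.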
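A sketch of round-tripping the same records through JSON, a DataFrame, and CSV text, assuming pandas is installed. The records are made-up data.

```python
import json
import pandas as pd

# Plain Python records, e.g. from an API response (made-up data)
records = [{"id": 1, "name": "alpha"}, {"id": 2, "name": "beta"}]

payload = json.dumps(records)              # list of dicts -> JSON text
restored = json.loads(payload)             # JSON text -> list of dicts

df = pd.DataFrame(restored)                # list of dicts -> DataFrame
csv_text = df.to_csv(index=False)          # DataFrame -> CSV text
back = df.to_dict(orient="records")        # DataFrame -> list of dicts
```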

Mastering these Python data structures, from built-ins like lists and dictionaries to library-based structures such as NumPy arrays and Pandas DataFrames, together with modern performance optimizations and integration patterns, lets you build efficient, scalable data analysis workflows. The same foundations apply whether you are handling real-time streaming data or large-scale batch processing.

Frequently Asked Questions

1. Which Python data structure is best for beginners in data analysis?

For beginners, lists and dictionaries are the best starting point. Lists help store and organize data in order, while dictionaries help manage data using key-value pairs. Once comfortable, beginners can move to NumPy arrays and Pandas DataFrames for more advanced analysis.

2. Why are Python data structures important in data analysis?

Python data structures help you store, organize, and access data efficiently. Choosing the right structure makes data cleaning, transformation, and analysis faster and easier. Without proper structure selection, even simple tasks can become slow and confusing.

3. Can I perform data analysis using only basic Python data structures?

Yes, you can perform basic data analysis using lists, dictionaries, sets, and tuples. However, for large datasets and advanced operations, libraries like NumPy and Pandas are more efficient and powerful.
