What Is Data Integration Architecture: Diagram & Best Practices in 2026

✨ AI Generated Summary

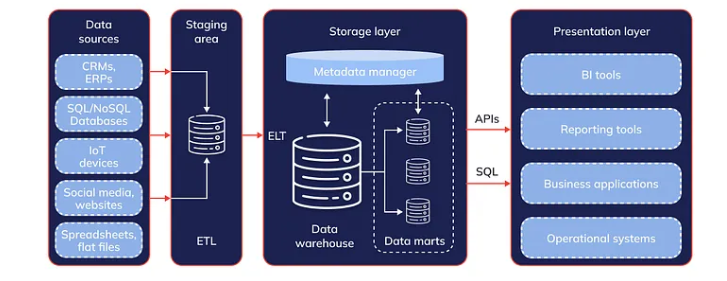

Your organization can overcome data silos by implementing a robust data integration architecture that centralizes data for improved accessibility, quality, and operational efficiency. Key components include source systems, staging, transformation, loading, and target layers, supported by metadata management for governance.

- Composable architectures with APIs and microservices enable modular, scalable, and agile integration workflows.

- AI/ML enhances integration by automating schema mapping, pipeline optimization, and self-healing capabilities.

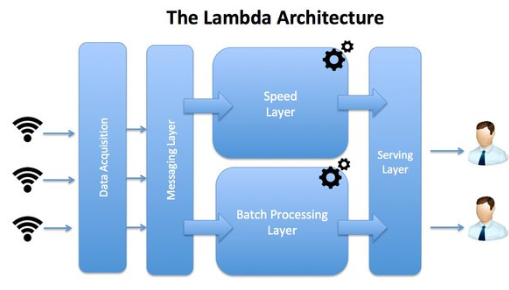

- Common architectural patterns include Hub-and-Spoke, ESB, Lambda, and Kappa, while integration approaches range from ETL/ELT to streaming and API-based methods.

- Best practices emphasize clear objectives, scalability, data quality, governance, automation, security, and performance optimization.

- Platforms like Airbyte simplify integration with pre-built connectors, CDC support, and flexible deployment options.

Your organization generates massive amounts of data that are scattered across various systems, resulting in data silos. These silos hinder a unified view of business operations, often leading to inaccurate insights. Implementing a robust data integration architecture can help break down these silos.

A well-structured data integration architecture provides a centralized location for all your data, making it accessible and usable for downstream applications. Below is a comprehensive guide covering what data integration architecture entails, including its components, patterns, and best practices.

What Is Data Integration Architecture?

Data integration architecture is a strategic framework that consolidates data from various sources into a unified system. Its main objective is to establish a single source of truth, enabling accurate and efficient data access and analysis.

What Are the Key Benefits of Implementing Data Integration Architecture?

Implementing a well-planned data integration architecture brings several benefits to your business:

1. Improved Data Visibility and Accessibility

By integrating data from multiple sources into a unified view, you gain a comprehensive understanding of your business. For example, pharma app development platforms depend on unified data to ensure precise reporting and regulatory compliance. You can access and analyze data from across your organization, breaking down information silos and supporting data-driven decision-making.

2. Enhanced Data Quality

The data integration process allows you to cleanse, transform, and standardize your data. This ensures the information you work with is accurate, consistent, and up-to-date, improving the reliability of your analytics.

3. Increased Operational Efficiency

Automating the data integration process reduces manual effort and errors. You can streamline workflows, eliminate redundant data entry, and free up your team to focus on higher-value tasks, boosting overall productivity and efficiency.

4. Better Business Insights

When data from various sources is integrated, it becomes easier to identify patterns, trends, and correlations. This empowers you to gain comprehensive insights into your business operations, customer behavior, market trends, and more.

5. Streamlined Workflows

With a well-designed data integration architecture, you can automate and streamline many of your data-driven processes. This saves time and effort, enabling you to focus on strategic initiatives rather than manual data manipulation.

What Are the Core Layers of Data Integration Architecture?

Data integration architecture is composed of several structured layers that work together to ensure effective data processing and delivery across systems:

- Source Layer: Databases, APIs, files, etc.

- Data Extraction Layer: Connects and pulls data from source systems using ETL/ELT tools.

- Staging Layer: Temporary storage where raw data is prepared for processing.

- Transformation Layer: Modifies and formats data based on business rules and logic.

- Loading Layer: Transfers processed data into designated target systems.

- Target Layer: Stores final datasets in warehouses or lakes for analysis.

- Metadata Management: Tracks data lineage, structure, and governance policies.
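The layers above can be sketched as a chain of small functions. This is a minimal illustration only: the `SOURCES` dictionary, the `Metadata` class, the function names, and the "drop contacts without an email" rule are all hypothetical stand-ins, not the API of any particular tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical in-memory "source layer"; real sources would be databases, APIs, or files.
SOURCES = {
    "crm": [{"id": 1, "email": " Ada@Example.com "}, {"id": 2, "email": None}],
    "billing": [{"id": 1, "amount": "19.99"}],
}

@dataclass
class Metadata:
    """Minimal lineage log: which layer handled which dataset, and how many rows."""
    events: list = field(default_factory=list)

    def record(self, layer, dataset, rows):
        self.events.append({
            "layer": layer,
            "dataset": dataset,
            "rows": rows,
            "at": datetime.now(timezone.utc).isoformat(),
        })

def extract(name, meta):
    rows = [dict(r) for r in SOURCES[name]]  # copy so the source stays untouched
    meta.record("extraction", name, len(rows))
    return rows

def stage(rows, name, meta, staging):
    staging[name] = rows  # raw data parked for later processing
    meta.record("staging", name, len(rows))

def transform(staging, meta):
    # Assumed business rule: drop contacts without an email, normalize casing.
    contacts = [
        {"id": r["id"], "email": r["email"].strip().lower()}
        for r in staging["crm"] if r["email"]
    ]
    meta.record("transformation", "contacts", len(contacts))
    return contacts

def load(rows, target, meta):
    target["contacts"] = rows
    meta.record("loading", "contacts", len(rows))

meta, staging, warehouse = Metadata(), {}, {}
for source_name in SOURCES:
    stage(extract(source_name, meta), source_name, meta, staging)
load(transform(staging, meta), warehouse, meta)

print(warehouse["contacts"])
print([e["layer"] for e in meta.events])
```

Keeping metadata recording in every step, rather than in one place at the end, is what makes lineage questions ("where did this row count drop?") answerable later.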

What Are the Essential Components of Data Integration Architecture?

1. Source Systems

Systems or applications from which your data originates, including databases, files, APIs, and external services.

2. Staging Area

An intermediate repository where the extracted data is cleansed, validated, and transformed before moving onward. Staging ensures data quality and consistency.

3. Transformation Layer

Where data undergoes mapping, cleansing, validation, aggregation, and other transformations to meet required formats and structures.

4. Loading Mechanisms

Batch processing, real-time streaming, or incremental loading techniques that move transformed data from staging to the target.
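As a rough sketch of incremental loading, the function below moves only rows changed since the last run, tracked with a high-water mark. The `updated_at` column, the dictionary-based target, and the function name are assumptions made for illustration.

```python
def incremental_load(staged_rows, target, last_watermark):
    """Upsert only rows changed after last_watermark; return the new watermark."""
    new_watermark = last_watermark
    for row in staged_rows:
        if row["updated_at"] > last_watermark:
            target[row["id"]] = row  # insert or overwrite by primary key
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark

staged = [
    {"id": 1, "name": "alpha", "updated_at": 100},
    {"id": 2, "name": "beta", "updated_at": 205},
    {"id": 3, "name": "gamma", "updated_at": 310},
]
target_table = {1: {"id": 1, "name": "alpha", "updated_at": 100}}

watermark = incremental_load(staged, target_table, last_watermark=100)
print(watermark, sorted(target_table))
```

Running the same load again with the returned watermark touches no rows, which is the property that makes incremental loads cheap to repeat.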

5. Target Systems

Data warehouses, data marts, or databases that store and manage the integrated data for analytics, reporting, and other purposes.

How Do Composable Architectures Transform Modern Data Integration?

Traditional monolithic data integration systems are giving way to composable architectures built on APIs and microservices. This shift enables modular, reusable data flows that align with agile development practices and can be scaled independently across hybrid cloud environments.

API-First Development Philosophy

Modern data integration platforms prioritize defining APIs upfront using specifications like OpenAPI, which keeps interfaces consistent across platforms and reduces dependency on vendor-specific solutions. Low-code API platforms and GraphQL build on this approach to simplify building connectors for services like Salesforce and AWS, while avoiding rigid schema designs that constrain future evolution.

Microservices and Domain-Centric Integration

API-first approaches pair seamlessly with microservices architectures, where domain-specific teams across marketing, finance, and operations develop tailored integration workflows. This decentralization accelerates delivery cycles but requires careful orchestration to maintain system coherence. Tools like Talend Data Fabric and Airbyte now support multi-environment deployments, prioritizing reusability across teams while maintaining governance standards.

Decentralized Versus Centralized Trade-offs

Composable architectures replace centralized ETL pipelines with decentralized, domain-owned workflows that emphasize real-time event-driven streams over traditional batch processing. This transformation shifts from proprietary middleware to API-first, vendor-agnostic connectivity that enables organizations to build flexible data ecosystems without platform lock-in.

How Are AI and Machine Learning Revolutionizing Data Integration Pipelines?

Artificial intelligence and machine learning are no longer afterthoughts in data integration architecture. These technologies are now embedded directly into integration workflows, automating complex tasks from schema mapping to anomaly prevention while enabling intelligent pipeline optimization.

Automated Schema Mapping and Data Discovery

LLM-driven platforms now assist with schema mapping across disparate sources. They can suggest matches between fields like "email" in Salesforce and "contact_email" in HubSpot, but the suggestions typically require some manual validation. These systems reduce the engineering effort required for new integrations and improve over time by learning from confirmed mappings and user corrections.
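To make the "suggest, then validate" workflow concrete, here is a small sketch that proposes field mappings for human review. It deliberately swaps the LLM for plain string similarity from Python's standard `difflib` module, so it only illustrates the shape of the workflow. The field lists, the `suggest_mappings` name, and the 0.5 threshold are assumptions.

```python
from difflib import SequenceMatcher

def suggest_mappings(source_fields, target_fields, threshold=0.5):
    """Return {source_field: (best_target_or_None, score)} for human review."""
    suggestions = {}
    for src in source_fields:
        scored = [
            (SequenceMatcher(None, src.lower(), tgt.lower()).ratio(), tgt)
            for tgt in target_fields
        ]
        score, best = max(scored)
        # Below the threshold, suggest nothing rather than a poor guess.
        suggestions[src] = (best if score >= threshold else None, round(score, 2))
    return suggestions

source_fields = ["email", "first_name", "zip"]
target_fields = ["contact_email", "firstname", "phone_number"]

for field_name, (match, score) in suggest_mappings(source_fields, target_fields).items():
    print(f"{field_name} -> {match} (similarity {score})")
```

Returning `None` for weak matches keeps the manual validation step focused on plausible candidates instead of asking reviewers to reject obvious mismatches.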

Predictive Pipeline Optimization

Advanced platforms analyze historical pipeline performance data, including transformation latency and failure rates, to suggest optimizations before issues occur. Modern cloud data warehouses like Snowflake use automated caching mechanisms and query optimization, but do not currently employ AI to pre-cache datasets or recommend partitioning schemes based on usage patterns.

Self-Healing Pipeline Intelligence

AI-powered systems aim to detect and resolve integration issues automatically, in some cases using reinforcement learning algorithms that adapt to data surges, format changes, and system anomalies. For example, a pipeline feeding a fraud detection system can prioritize transactions flagged by machine learning models in real time, adjusting resource allocation dynamically based on threat levels and processing demands.

Generative AI for Data Engineering

ChatGPT-like assistants now help users generate SQL queries, debug ETL scripts, and document API specifications, which speeds up onboarding for non-technical stakeholders. This democratization of data engineering tasks enables business analysts to participate more directly in data integration projects while maintaining technical quality standards.

What Architectural Patterns Enable Seamless Data Integration?

Choosing the right architectural pattern ensures scalability, flexibility, and maintainability in your data ecosystem.

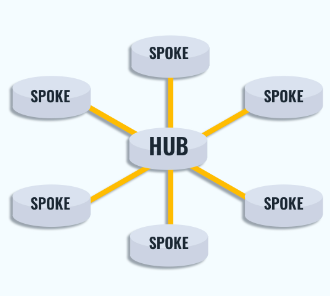

1. Hub-and-Spoke Architecture

A centralized hub handles all data interactions, transformations, and quality checks before distributing data to connected spokes (sources and destinations). See Hub-and-Spoke model.

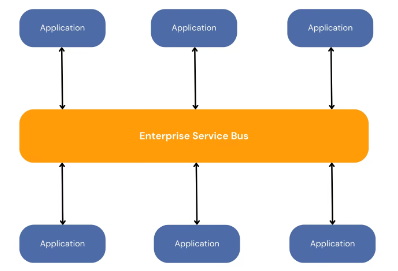

2. Enterprise Service Bus (ESB)

A centralized bus enables loose coupling and message-based communication between systems, enhancing scalability and flexibility.

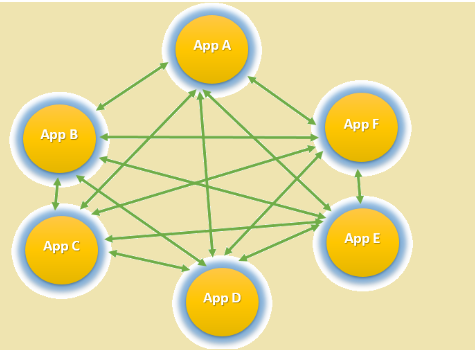

3. Point-to-Point

Direct connections between individual systems. Simple to implement but difficult to scale as connections multiply.
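A quick calculation shows why point-to-point becomes hard to scale compared with a hub. The sketch assumes the worst case where every system must exchange data with every other system; the function names are illustrative.

```python
def point_to_point_links(n_systems):
    """Every pair of systems needs its own connection: n * (n - 1) / 2."""
    return n_systems * (n_systems - 1) // 2

def hub_and_spoke_links(n_systems):
    """Each system connects once, to the hub."""
    return n_systems

for n in (3, 10, 50):
    print(f"{n} systems: point-to-point={point_to_point_links(n)}, "
          f"hub-and-spoke={hub_and_spoke_links(n)}")
```

With 10 systems the fully connected point-to-point case already needs 45 links against 10 for a hub, and at 50 systems the gap is 1,225 against 50.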

4. Lambda Architecture

A hybrid that combines batch processing (Batch Layer) with real-time stream processing (Speed Layer); the Serving Layer merges results for unified querying.
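The three Lambda layers can be sketched with page-view counts. This is a toy model: the event shape and the function names are assumptions, and a real batch layer would run on a distributed engine rather than in memory.

```python
from collections import Counter

def batch_view(master_dataset):
    """Batch Layer: recomputed periodically over the full historical dataset."""
    return Counter(event["page"] for event in master_dataset)

def speed_view(recent_events):
    """Speed Layer: covers only events that arrived since the last batch run."""
    return Counter(event["page"] for event in recent_events)

def serve(batch, speed):
    """Serving Layer: merges both views so queries see one result."""
    return batch + speed

historical = [{"page": "home"}, {"page": "pricing"}, {"page": "home"}]
recent = [{"page": "home"}]

merged = serve(batch_view(historical), speed_view(recent))
print(dict(merged))
```

Note that the counting logic exists twice, once per layer. Keeping those two implementations in agreement is the main maintenance cost of this pattern.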

5. Kappa Architecture

A simplified alternative to Lambda: all data is treated as a real-time stream, eliminating separate batch and speed layers.
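A minimal sketch of the Kappa idea, assuming an append-only list stands in for the durable event log (such as a Kafka topic): one processing function serves both live events and reprocessing, which is done by replaying the log. All names here are illustrative.

```python
def apply_event(view, event):
    """The single processing path, used for live events and for replays."""
    view[event["page"]] = view.get(event["page"], 0) + 1
    return view

def replay(log, from_offset=0):
    """Rebuild a view from scratch by re-reading the log from an offset."""
    view = {}
    for event in log[from_offset:]:
        apply_event(view, event)
    return view

event_log = []  # stand-in for a durable, append-only stream
live_view = {}
for page in ["home", "pricing", "home"]:
    event = {"page": page}
    event_log.append(event)
    apply_event(live_view, event)

rebuilt_view = replay(event_log)
print(live_view, rebuilt_view)
```

Because there is only one code path, a logic change is rolled out by deploying the new function and replaying the log, rather than by updating separate batch and speed implementations.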

What Are the Different Data Integration Approaches Available?

How Do You Choose the Best Data Integration Architecture for Your Needs?

Streaming Data Integration

Streaming data is processed in real time as it's generated. Platforms such as Apache Kafka, Apache Pulsar, and AWS Kinesis enable high-throughput, fault-tolerant streams ideal for real-time analytics and monitoring.

Change Data Capture (CDC)

CDC tracks inserts, updates, and deletes in source systems and propagates them downstream, keeping targets synchronized while processing only incremental changes.
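On the consuming side, CDC comes down to applying a stream of change events to the target in order. The sketch below assumes a simplified event shape (`op`, `key`, `data`); real CDC tools emit richer records, and the names here are hypothetical.

```python
def apply_change(target, event):
    """Apply one change event to a target keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge so partial updates keep columns they did not touch.
        target[key] = {**target.get(key, {}), **event["data"]}
    elif op == "delete":
        target.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")

change_events = [
    {"op": "insert", "key": 1, "data": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "data": {"name": "Bob", "plan": "free"}},
    {"op": "update", "key": 1, "data": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in change_events:
    apply_change(replica, change)
print(replica)
```

Only four small events cross the wire here, regardless of how large the source table is, which is the efficiency argument for CDC over full reloads.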

API-based Integration

APIs provide standardized, real-time connectivity, particularly useful for integrating external systems or SaaS applications.

Data Virtualization

Data virtualization tools let you access and query heterogeneous data sources through a virtual layer, reducing replication, complexity, and storage costs.

What Are the Essential Best Practices for Data Integration Architecture?

- Define Clear Objectives: Align architecture with precise business goals and data requirements.

- Adopt Scalable Design: Plan for growth using distributed storage, parallel processing, and cloud technologies.

- Prioritize Data Quality: Implement validation and cleansing to maintain accurate, consistent data.

- Implement Data Governance: Establish policies for ownership, privacy, and stewardship; see the data governance framework.

- Leverage Automation: Automate ingestion, validation, and monitoring to reduce manual effort and errors.

- Ensure Data Security: Use encryption, access controls, and compliance checks (e.g., GDPR, HIPAA).

- Optimize for Performance: Address storage, processing, and retrieval bottlenecks with indexing, partitioning, and caching.
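As one way to act on the data quality and automation practices above, validation can run as an automated gate that separates clean rows from rejects before loading. The rules, the simple email pattern, and the function names below are assumptions chosen for illustration, not a complete validation suite.

```python
import re

# Deliberately simple pattern; assumed good enough for a sanity check.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(row):
    """Return a list of rule violations for one row (empty list means valid)."""
    errors = []
    if not isinstance(row.get("id"), int):
        errors.append("id must be an integer")
    if not EMAIL_RE.match(row.get("email") or ""):
        errors.append("email is malformed")
    return errors

def split_valid(rows):
    """Separate loadable rows from rejects, keeping the reasons for rejects."""
    valid, rejected = [], []
    for row in rows:
        errors = validate(row)
        if errors:
            rejected.append({"row": row, "errors": errors})
        else:
            valid.append(row)
    return valid, rejected

incoming = [
    {"id": 1, "email": "ada@example.com"},
    {"id": "2", "email": "bob@example.com"},
    {"id": 3, "email": "not-an-email"},
]
valid_rows, rejected_rows = split_valid(incoming)
print(len(valid_rows), [r["errors"] for r in rejected_rows])
```

Keeping rejected rows together with their reasons, instead of silently dropping them, gives governance and monitoring something concrete to report on.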

How Can Airbyte Simplify Your Data Integration Architecture?

Airbyte is a cloud-based integration platform that simplifies data consolidation without extensive coding.

- 600+ Pre-Built Connectors: Databases, APIs, files, SaaS apps.

- Connector Development Kit (CDK): Build custom connectors quickly.

- Retrieval-Based Interfaces: Sync data for AI frameworks such as LangChain or LlamaIndex.

- Change Data Capture (CDC): Real-time synchronization.

- Vector Database Support: Integrate with Snowflake Cortex and enable workflows that can use Google Vertex AI.

- Flexible Deployment: UI, API, Terraform, or PyAirbyte.

- dbt Integration: Perform ELT transformations with dbt.

- Data Security: Built-in encryption and access controls.

Conclusion

Data integration architecture enables consistent, reliable, and secure data flow across systems. Selecting the right architecture depends on data complexity, infrastructure, scalability, and security needs. Modern ELT platforms like Airbyte make adopting and managing these architectures significantly easier.

Frequently Asked Questions

What is a data integration architect?

A professional who designs systems to ensure data consistency, quality, and accessibility across platforms while collaborating with teams to implement scalable solutions.

What are the layers of data integration architecture?

Source, Extraction, Staging, Transformation, Loading, Target, and Metadata Management.

What is the difference between ETL and ELT in data integration?

ETL transforms data before loading it into the target system; ELT loads raw data first and transforms it within the target. ELT suits cloud environments with scalable compute.
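The difference is easiest to see side by side. In this sketch, in-memory SQLite databases stand in for the target system, and the table names and the cleanup rule (trim and lowercase emails) are assumptions.

```python
import sqlite3

raw = [("1", " Ada@Example.com "), ("2", "bob@example.com")]

# ETL: transform in application code first, then load the cleaned rows.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE contacts (id INTEGER, email TEXT)")
etl.executemany(
    "INSERT INTO contacts VALUES (?, ?)",
    [(int(i), e.strip().lower()) for i, e in raw],
)

# ELT: load raw rows untouched, then transform with SQL inside the target.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_contacts (id TEXT, email TEXT)")
elt.executemany("INSERT INTO raw_contacts VALUES (?, ?)", raw)
elt.execute(
    "CREATE TABLE contacts AS "
    "SELECT CAST(id AS INTEGER) AS id, lower(trim(email)) AS email "
    "FROM raw_contacts"
)

query = "SELECT id, email FROM contacts ORDER BY id"
etl_rows = etl.execute(query).fetchall()
elt_rows = elt.execute(query).fetchall()
print(etl_rows == elt_rows, elt_rows)
```

Both paths end with the same cleaned table. The ELT path additionally keeps `raw_contacts` in the target, so transformations can be revised and rerun without re-extracting from the source.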

How do I choose between batch and real-time data integration?

Use batch when updates are periodic and not time-critical; choose real-time when immediate data access is crucial, such as in fraud detection or live analytics.

What role does metadata play in data integration architecture?

Metadata provides context, including origin, structure, and relationships, supporting governance, lineage tracking, and informed data usage decisions.
