7 Best Data Virtualization Tools for 2026

Organizations today struggle to meet the growing demand for immediate analysis while managing data spread across siloed systems. Traditional data management methods create bottlenecks through complex processes, data redundancy, and unnecessary data movement. These inefficiencies lead to higher operational costs, delays, and errors in decision-making.

Data virtualization offers a solution to these problems. It enables you to abstract and integrate data from multiple sources without physically copying or moving it. By creating a virtual layer, data virtualization gives your organization better data integration, more efficient data access, and improved data analysis.

Let's dive into some of the popular data virtualization tools, along with their key features.

What is Data Virtualization?

Data virtualization is a modern data management approach that creates an abstraction layer over your data. This layer acts as an intermediary, allowing you to access data from multiple sources without knowing its exact location or structure. It presents a combined view of data from different sources, giving the appearance of a complete dataset stored in one centralized location.

By implementing data virtualization, you can seamlessly integrate your data from various sources, including databases, cloud storage systems, and other data platforms. This eliminates the need to worry about the different structures and formats of data in each source, saving significant time and effort.
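To make the idea concrete, here is a minimal sketch of a virtual layer in Python. It is an illustration under stated assumptions, not how any particular product works: the `VirtualLayer` class, the `customer_totals` view, and the sample tables are all hypothetical, and an in-memory SQLite database and a CSV string stand in for real sources. The point is that the layer stores connections and view definitions, while the data is read from the sources only when queried.

```python
import csv
import io
import sqlite3

# Source 1: a relational database (in-memory SQLite stands in for a real one).
orders_db = sqlite3.connect(":memory:")
orders_db.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
orders_db.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 120.0), (2, 75.5), (1, 30.0)],
)

# Source 2: a CSV file (a string stands in for a file or object store).
CUSTOMERS_CSV = "customer_id,name\n1,Ada\n2,Grace\n"


class VirtualLayer:
    """Hypothetical virtual layer: holds source handles and views, not data."""

    def __init__(self, db, csv_text):
        self._db = db
        self._csv_text = csv_text

    def customer_totals(self):
        """Unified view that joins both sources at query time."""
        names = {
            int(row["customer_id"]): row["name"]
            for row in csv.DictReader(io.StringIO(self._csv_text))
        }
        rows = self._db.execute(
            "SELECT customer_id, SUM(amount) FROM orders "
            "GROUP BY customer_id ORDER BY customer_id"
        )
        return [(names[customer_id], total) for customer_id, total in rows]


layer = VirtualLayer(orders_db, CUSTOMERS_CSV)
print(layer.customer_totals())  # [('Ada', 150.0), ('Grace', 75.5)]
```

Because nothing is copied into the layer, a row added to the `orders` table shows up the next time `customer_totals()` is called.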

What are Data Virtualization Tools?

Data virtualization tools are applications that allow you to implement this approach, providing the functionality to manage and interact with the virtualized data layer. Some major benefits of using data virtualization tools are mentioned below:

- Faster Data Access: By utilizing data virtualization tools, you can access and query data from diverse sources on demand without physically relocating it. This improves agility and supports faster decision-making.

- Reduced Redundancy: By creating a virtual layer, the data virtualization tools minimize the need for creating duplicate copies of data, reducing the costs of storage. This helps ensure the accuracy and consistency of the processed data.

- Convenient Data Migration: When you are switching to new systems or upgrading the infrastructure of the existing systems, these data virtualization tools enable you to streamline the data migration. The abstraction layer acts as a bridge between the old and the new data sources, minimizing disruption and downtime.

- Faster Development: Data virtualization tools enable you to access and integrate data from various sources into your applications without worrying about the underlying infrastructure complexities.

7 Best Data Virtualization Tools

Let’s dive into the overview and features of some of the popular open-source and paid data virtualization tools.

1. Red Hat JBoss

Red Hat JBoss Data Virtualization is a well-known open-source data virtualization tool designed to simplify data integration and management from various sources. It enables seamless real-time integration, access, and querying of data from multiple platforms.

JBoss Data Virtualization functions as a virtualization layer, abstracting the complexities of underlying data sources such as text files, big data sources, and third-party applications. This user-friendly approach eliminates the need to delve into data storage and management intricacies.

Some key features of JBoss Data Virtualization are:

- Scalability: JBoss offers a highly scalable architecture that enables you to conveniently scale up or down as per your requirements. This ensures enhanced performance even with continuously growing, complex, and massive datasets.

- Data Caching: The caching feature in Red Hat JBoss improves query response times and overall performance by storing frequently accessed data in memory.

- Cloud-Native Capabilities: It allows you to integrate seamlessly with cloud-native development and containerization platforms such as Red Hat OpenShift. This facilitates optimal efficiency in application management.
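The caching idea mentioned above can be sketched generically in Python. This is not JBoss's implementation or API. `CachedQuery`, `slow_source`, and the 60-second time-to-live are assumptions chosen for illustration. Results are kept in memory per query string and reused until they expire.

```python
import time


class CachedQuery:
    """Hypothetical helper that caches query results for ttl_seconds."""

    def __init__(self, run_query, ttl_seconds=60.0, clock=time.monotonic):
        self._run_query = run_query
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = {}  # query text -> (expiry time, result)
        self.source_calls = 0  # counts real round trips to the source

    def execute(self, query):
        now = self._clock()
        hit = self._cache.get(query)
        if hit is not None and hit[0] > now:
            return hit[1]  # served from memory, source not touched
        self.source_calls += 1
        result = self._run_query(query)
        self._cache[query] = (now + self._ttl, result)
        return result


def slow_source(query):
    # Stand-in for a round trip to a remote data source.
    return f"rows for: {query}"


cached = CachedQuery(slow_source, ttl_seconds=60.0)
cached.execute("SELECT * FROM sales")
cached.execute("SELECT * FROM sales")  # second call is a cache hit
print(cached.source_calls)  # 1
```

The trade-off is freshness: a longer time-to-live means fewer source round trips but a greater chance of serving stale rows.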

2. Denodo

Denodo is a well-known commercial data virtualization platform that offers robust capabilities for integrating and managing multiple data sources. It acts as a virtual layer, abstracting the underlying data sources regardless of structure, format, or location (cloud-based or on-premises). This abstraction hides the complexity of data management and storage and presents a unified view of the data without physically collecting or moving it. As a result, you can easily access and organize your data and gain valuable insights.

Some of the key features of Denodo are listed below.

- Real-Time Access: Denodo provides real-time access to data, keeping all the information up-to-date. This empowers you to gain valuable insights for decision-making and increases overall efficiency.

- Data Integration: Denodo allows you to seamlessly integrate a wide range of data sources, such as databases, data warehouses, cloud storage platforms, and APIs. This promotes enhanced data management and consistency across your organization.

- Agile Data Provisioning: By composing virtual datasets dynamically, Denodo provides agile data provisioning. This facilitates enhanced and efficient data preparation with reduced effort.

3. Teiid

Teiid, developed by Red Hat, is a popular open-source data virtualization tool designed to simplify data integration and access across multiple data sources. It enables you to create unified virtualized views of your data, eliminating the need for physically moving or copying the data.

Some of Teiid's features are listed below.

- Advanced Query Optimization: Teiid lets you perform advanced query optimization through its distributed query processing engine. This ensures optimal performance and supports scalability even with large and complex data.

- Developer Productivity: Teiid provides intuitive tools and APIs to simplify building and managing virtualized data models. This enhances developer productivity by saving time and effort required during development.

- Integration with Red Hat Environment: Teiid integrates with Red Hat technologies like Red Hat OpenShift and JBoss Middleware. This allows you to build comprehensive data solutions within the Red Hat ecosystem and streamline data access.
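One common optimization in distributed query processing is filter pushdown: the virtual layer sends the filter to each source instead of pulling every row and filtering centrally. The Python sketch below illustrates that general technique only and does not reflect Teiid's internals. `make_source`, `federated_sales`, and the `sales` table are hypothetical, with two in-memory SQLite databases standing in for remote sources.

```python
import sqlite3


def make_source(rows):
    """Builds an in-memory SQLite database standing in for one remote source."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    db.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    return db


sources = [
    make_source([("EU", 10.0), ("US", 20.0)]),
    make_source([("EU", 5.0), ("APAC", 7.0)]),
]


def federated_sales(region):
    """Pushes the region filter down to every source, then merges the results."""
    merged = []
    for db in sources:
        # The WHERE clause runs inside the source, so only matching rows travel.
        merged.extend(
            db.execute(
                "SELECT region, amount FROM sales WHERE region = ?",
                (region,),
            ).fetchall()
        )
    return merged


print(federated_sales("EU"))  # [('EU', 10.0), ('EU', 5.0)]
```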

4. Accelario

Accelario is a versatile database virtualization tool that helps you streamline your test data management processes. It enables you to optimize and consolidate your test data environments, minimizing the complexity and costs associated with them.

Some of the key features of Accelario are listed below.

- Data Masking: Accelario's data masking feature helps you meet regulatory compliance requirements. It ensures that only masked data is used for testing, protecting confidential data from unauthorized access.

- Integration with Amazon RDS: Accelario offers seamless integration with Amazon RDS, a managed database service. This enables you to leverage RDS's scalability and reliability with Accelario’s data virtualization capabilities.
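Data masking for test environments can be sketched in a few lines of Python. This is a generic illustration, not Accelario's masking engine. `mask_email`, `mask_rows`, the salt value, and the sample rows are all assumptions. The approach shown is deterministic masking: the same input always maps to the same masked value, so joins between masked tables still line up.

```python
import hashlib


def mask_email(email, salt="demo-salt"):
    """Replaces the local part with a salted hash and keeps the domain."""
    local, _, domain = email.partition("@")
    digest = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@{domain}"


def mask_rows(rows):
    """Returns copies of the rows with the sensitive 'email' field masked."""
    return [{**row, "email": mask_email(row["email"])} for row in rows]


production_rows = [
    {"id": 1, "email": "ada@example.com"},
    {"id": 2, "email": "grace@example.com"},
]
test_rows = mask_rows(production_rows)
print(test_rows)
```

In a real setup the salt would be kept secret, since anyone who knows it could hash candidate values and compare them against the masked data.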

5. TIBCO Data Virtualization

TIBCO Data Virtualization is a software solution specifically designed to meet your data virtualization needs. It acts as a virtual layer, abstracting the underlying complex processes of accessing data from various sources and presenting a centralized view. This eliminates the need for physical data consolidation and enables you to efficiently access, query, and manipulate the data with minimal costs.

Key features of TIBCO Data Virtualization are:

- Flexibility: It offers high flexibility and agility. With minimal effort, you can modify data access configurations to meet rapidly changing business requirements.

- Improved Decision-making: By providing instant access to larger datasets, TIBCO Data Virtualization enables you to create meaningful insights and take immediate action. You can then utilize various analytics tools to access any information according to your requirements.

Pricing: TIBCO offers a 30-day free trial. After this trial period, you can opt for its basic plan at $400/month or a premium plan at $1500/month. You can also choose any custom plans to cater to your needs.

6. AtScale

AtScale is an intelligent data virtualization platform designed to streamline data access for Business Intelligence and Artificial Intelligence applications. It eliminates the need to move or copy your data, allowing you to keep it in its original location, whether on-premises or in the cloud, without disrupting your existing workflow. AtScale achieves this by using a single virtual cube to present one consistent view of your data.

Key features of AtScale are:

- Data Governance: AtScale’s semantic layer enhances data governance by ensuring there’s a single and unified model describing how data is used for BI and analysis.

- Improved Data Visibility: With AtScale Design Center, you can easily access and gain a clear overview of all connected data repositories, irrespective of their location. This simplifies data discovery and management.

Pricing: AtScale offers custom pricing plans to cater to different requirements. For a detailed understanding of their pricing structure, you can contact their sales team.

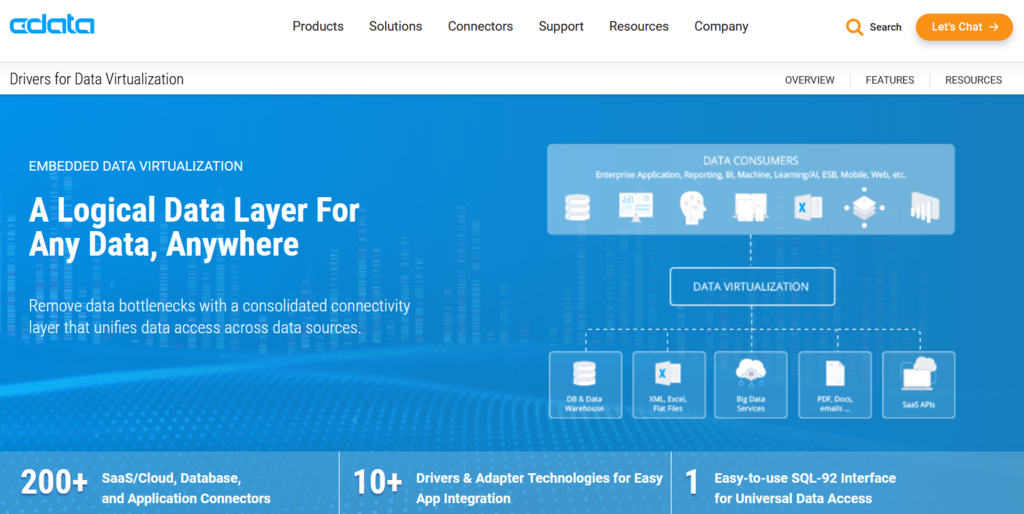

7. CData

CData is designed to provide a unified view of your structured and unstructured data sources without time-consuming replication. It offers CData Drivers to connect to various data sources, including SaaS applications, data warehouses, files, and APIs. This facilitates consolidated logical access to all your sources without performing any migration. You can further leverage this unified view for reporting and analytical operations.

Unique features of CData include:

- Real-Time Data: With CData, you can eliminate the need for time-consuming data replication. It allows you to access your company’s data in real time, enabling faster insights.

- Streamlined Connectivity: CData allows you to connect with 300+ data sources, such as databases and cloud platforms. This streamlines data integration and reduces development time.

Pricing: CData offers two products for data virtualization: CData Connect Cloud and CData Virtuality. For detailed information on their pricing plans and specifications, you can contact their sales team.

Enhance Your Analytics Journey with Airbyte

With the data virtualization tools listed above, you can manage your data while hiding the underlying complexities. This lets you focus on the functionality that matters most, saving time and effort. However, for in-depth analysis or historical reporting, you need to consolidate data in a central repository.

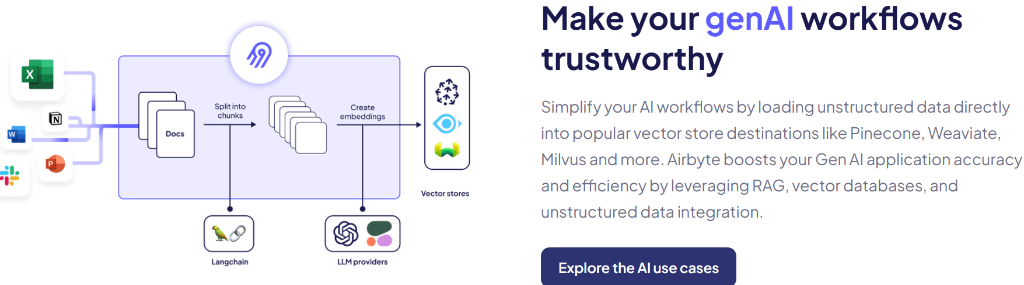

While data virtualization doesn’t involve centralized data storage, data integration tools like Airbyte can be valuable for storing data in data warehouses or data lakes. It helps you automate data extraction, loading, and transformation processes from various sources into a central location.

Some of Airbyte's features are listed below.

- Extensive Library of Connectors: Airbyte provides an extensive catalog of 550+ connectors, enabling you to seamlessly connect to various sources, extract data, and consolidate it into a centralized platform. This makes it easy to manage your data from different sources in one place.

- AI-powered Connector Creation: If you can't find the connector you need, Airbyte provides the option to create custom connectors using the Connector Development Kit (CDK) or no-code Connector Builder. Further, the AI Assistant in Connector Builder streamlines the process of building connectors by automatically pre-filling and configuring various fields, significantly reducing setup time.

- GenAI Workflows: Airbyte facilitates integration with popular vector databases, such as Pinecone, Qdrant, Chroma, Milvus, and more. This enables you to simplify your AI workflows by loading semi-structured and unstructured data directly to vector store destinations of your choice.

- Retrieval-Based LLM Applications: Airbyte enables you to build retrieval-based conversational interfaces on top of the synced data using frameworks such as LangChain or LlamaIndex. This helps you quickly access required data through user-friendly queries.

- Change Data Capture: Airbyte's Change Data Capture (CDC) technique allows you to seamlessly capture and synchronize data modifications from source systems. This helps ensure that the target system stays updated with the latest changes.

- Complex Transformation: Airbyte follows the ELT approach, where data is extracted and loaded into the target system before transformation. For the transformation step, it integrates with dbt (data build tool), enabling you to perform customized transformations.

- Diverse Development Options: Airbyte offers multiple options to develop and manage data pipelines, making it accessible to both technical and non-technical users. These options include a user-friendly UI, API, Terraform Provider, and PyAirbyte. This allows users to choose the one that best suits their expertise and needs.

- Data Security: Airbyte prioritizes the security and protection of your data by following industry-standard practices. It employs encryption methods to ensure the safety of data both during transit and at rest. Additionally, it incorporates robust access controls and authentication mechanisms, guaranteeing that only authorized users can access and utilize the data.
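The Change Data Capture idea from the list above can be illustrated with a small Python sketch. It shows the general pattern of replaying ordered change events against a target and is not Airbyte's implementation or API. `apply_changes`, the event shape (`op`, `key`, `row`), and the sample data are assumptions made for this example.

```python
def apply_changes(target, events):
    """Applies ordered change events to a target table keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge so that partial updates keep untouched columns.
            target[key] = {**target.get(key, {}), **event["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


target_table = {1: {"name": "Ada", "plan": "free"}}
change_log = [
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "insert", "key": 2, "row": {"name": "Grace", "plan": "free"}},
    {"op": "delete", "key": 2},
]
apply_changes(target_table, change_log)
print(target_table)  # {1: {'name': 'Ada', 'plan': 'pro'}}
```

Only the changed rows are processed, which is why this pattern keeps a target current without re-copying the full source on every sync.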

Conclusion

Data virtualization tools offer a powerful, flexible, and cost-effective way of gaining insights from your data. This article covered some of the leading open-source and paid data virtualization tools: Red Hat JBoss, Denodo, Teiid, Accelario, TIBCO Data Virtualization, AtScale, and CData.

These data virtualization tools centralize your access to data, promote better collaboration, and foster innovation. By leveraging them, you can gain deeper insights and make data-driven decisions.
