What Are Data Structures & Their Types

✨ AI Generated Summary

Data structures are essential for efficient data organization, retrieval, and modification, impacting system performance and scalability across applications like operating systems, databases, and AI. Key types include:

- Linear (arrays, stacks, queues, linked lists) for ordered data and fast insertions/deletions.

- Non-linear (trees, graphs, BSTs) for hierarchical and networked data.

- Hash-based (hash tables, sets, bloom filters) for near O(1) average operations.

- Specialized structures (heaps, tries, vector and time-series databases) for priority scheduling, autocomplete, AI similarity searches, and real-time analytics.

Choosing the right data structure depends on data nature, dominant operations, performance trade-offs, and hardware considerations like cache locality, with modern trends favoring specialized databases for AI, IoT, and streaming analytics.

Data structures are the backbone of computer science, providing the foundation for organizing, storing, and retrieving data efficiently. Understanding the types of data structures and their characteristics is essential for software developers, data engineers, and anyone working with complex data systems.

This guide will take you through the different types of data structures, key operations, time and space complexity, and how to choose the best data structure for your project in today's rapidly evolving data landscape.

What Is a Data Structure?

A data structure is a way of organizing and storing data in memory so it can be accessed and modified efficiently. It provides a systematic way of managing data that enables quick operations such as searching, inserting, updating, and deleting.

Choosing the right data structure can drastically improve system performance—whether you're building operating systems, databases, or mobile apps.

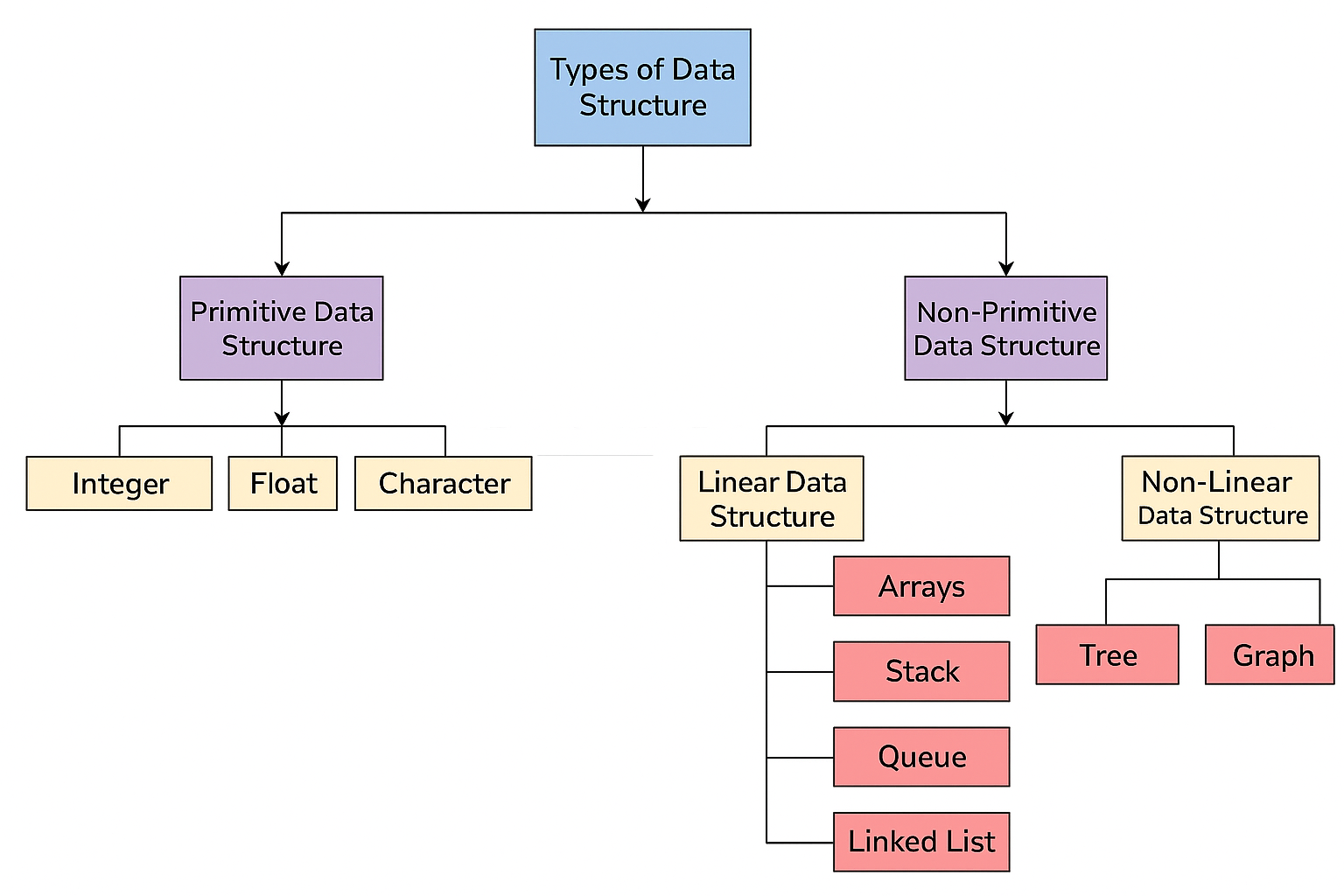

Data structures can be classified into various types based on their structure, behavior, and operations. These include linear data structures like arrays and linked lists, non-linear data structures such as trees and graphs, and more specialized hash-based data structures like hash tables and bloom filters.

Why Are Data Structures Important?

- Handle Different Data Types – Manage text, numbers, images, and more behind a common abstract interface.

- Boost Developer Productivity – Most languages ship with rich data-structure libraries.

- Improve Runtime – The right structure dramatically speeds up data retrieval.

- Scalability – Structures suited for large volumes maintain performance as data grows.

- Clean Abstraction – They separate business logic from storage concerns, making code easier to maintain.

What Operations Can You Perform on Data Structures?

- Search – Find an element matching a condition.

- Insertion – Add new data.

- Deletion – Remove data.

- Traversal – Visit every element systematically.

- Sorting – Re-order data for quicker future retrieval.

- Update – Modify an existing value.

How Are Data Structures Classified?

1. Linear Data Structures

Linear structures store data sequentially and are ideal when order matters.

Array

An array stores items of the same type in contiguous memory. It offers O(1) indexed access, perfect for numerical computing and image buffers.

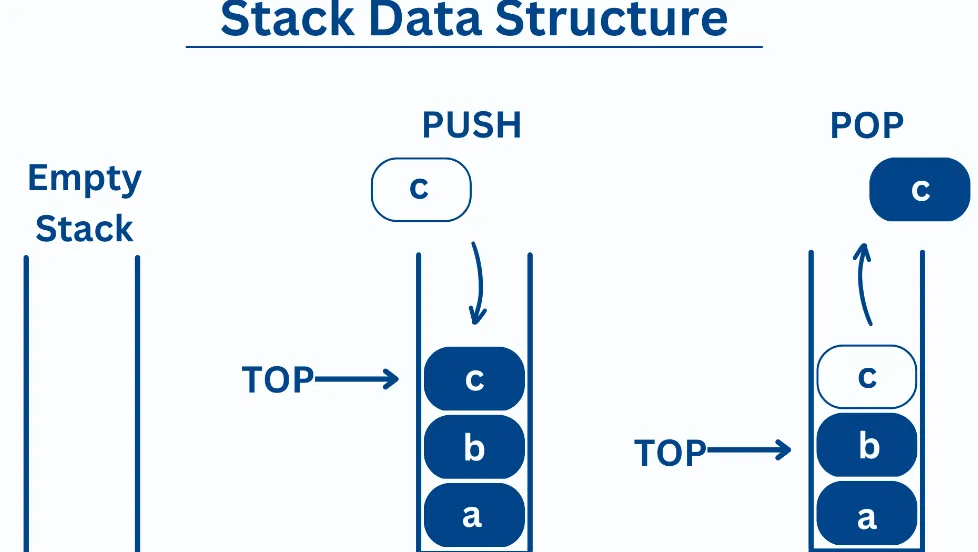

Stack

Stacks follow the last-in, first-out (LIFO) principle. Typical operations include:

- Push – Insert an element.

- Pop – Remove the top element.

- Peek – View the top element without removing it.

- IsEmpty / IsFull – Check capacity status.

Stacks excel in data management scenarios where operation order is critical.

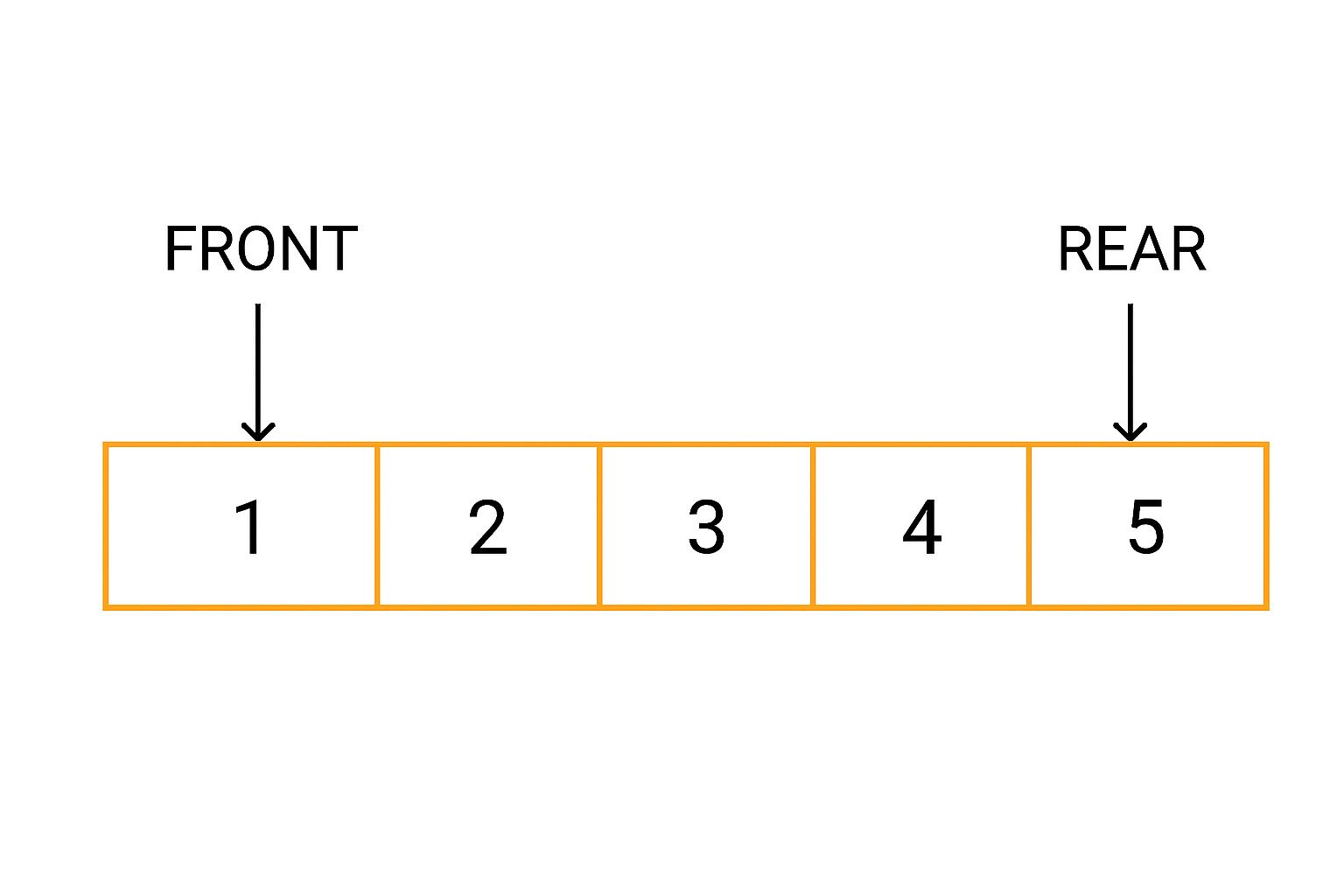
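The stack operations listed above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Stack` class and the `is_balanced` helper are hypothetical names chosen for this example. Because it is backed by an unbounded Python list, there is no IsFull check.

```python
class Stack:
    """A minimal LIFO stack backed by a Python list (illustrative only)."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)      # insert at the top

    def pop(self):
        if self.is_empty():
            raise IndexError("pop from empty stack")
        return self._items.pop()      # remove and return the top element

    def peek(self):
        if self.is_empty():
            raise IndexError("peek at empty stack")
        return self._items[-1]        # view the top without removing it

    def is_empty(self):
        return not self._items


def is_balanced(text):
    """Check bracket matching, a classic order-sensitive use of a stack."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = Stack()
    for ch in text:
        if ch in pairs.values():
            stack.push(ch)            # remember the opening bracket
        elif ch in pairs:
            # A closing bracket must match the most recent opening one.
            if stack.is_empty() or stack.pop() != pairs[ch]:
                return False
    return stack.is_empty()
```

Bracket matching works here because the most recently opened bracket must be the first one closed, which is exactly the LIFO order a stack provides.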

Queue

Queues implement first-in, first-out (FIFO). Common operations:

- Enqueue – Insert at the rear.

- Dequeue – Remove from the front.

- Peek – View the front element.

- Overflow / Underflow – Error conditions raised when enqueueing to a full queue or dequeuing from an empty one.

Operating systems use queues for job scheduling, processing tasks in arrival order.

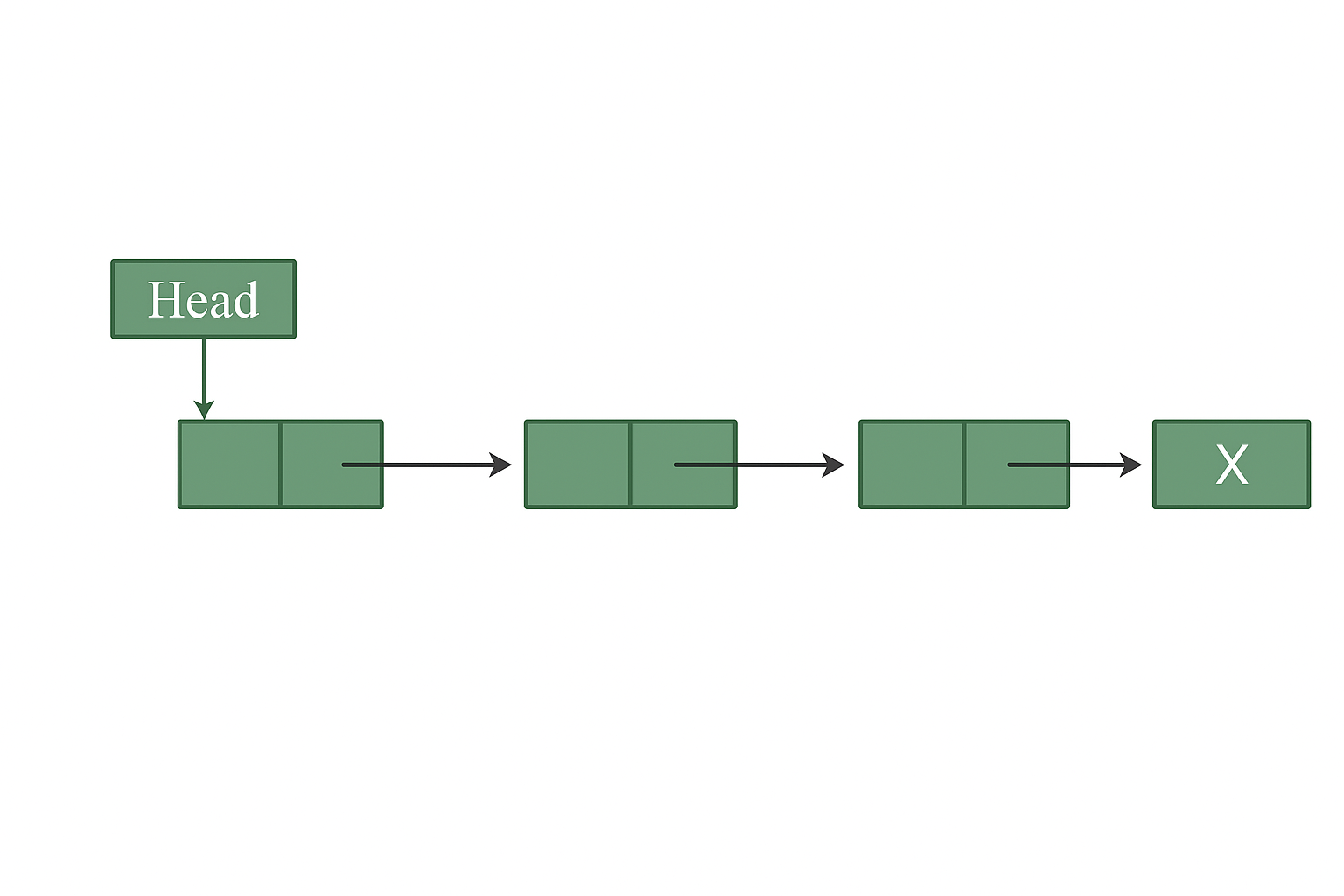
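As a rough sketch of the queue operations above, the following Python class wraps `collections.deque` with a fixed capacity so that overflow and underflow become visible. The `BoundedQueue` name and its choice of exceptions are assumptions made for illustration.

```python
from collections import deque


class BoundedQueue:
    """A FIFO queue with a fixed capacity (illustrative sketch)."""

    def __init__(self, capacity):
        self._items = deque()
        self._capacity = capacity

    def enqueue(self, item):
        if len(self._items) >= self._capacity:
            raise OverflowError("queue is full")     # overflow
        self._items.append(item)                     # insert at the rear

    def dequeue(self):
        if not self._items:
            raise IndexError("queue is empty")       # underflow
        return self._items.popleft()                 # remove from the front

    def peek(self):
        if not self._items:
            raise IndexError("queue is empty")
        return self._items[0]                        # view the front element

    def __len__(self):
        return len(self._items)
```

A `deque` is used instead of a plain list because removing from the front of a list shifts every remaining element, while `popleft()` runs in constant time.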

Linked List

A linked list is a sequence of nodes, each pointing to the next. It allows O(1) insertions and deletions at the beginning (or when a pointer to the position is known), without shifting elements.
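A minimal singly linked list might look like the sketch below. The class and method names (`Node`, `LinkedList`, `push_front`, `insert_after`) are hypothetical. Both insertion methods only rewire pointers, which is why they avoid the element shifting an array would need.

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class LinkedList:
    """Singly linked list with O(1) insertion at known positions."""

    def __init__(self):
        self.head = None

    def push_front(self, value):
        # O(1): the new node simply points at the old head.
        self.head = Node(value, self.head)

    def insert_after(self, node, value):
        # O(1) given a reference to the node at the insertion position.
        node.next = Node(value, node.next)

    def pop_front(self):
        if self.head is None:
            raise IndexError("pop from empty list")
        node = self.head
        self.head = node.next
        return node.value

    def __iter__(self):
        node = self.head
        while node is not None:
            yield node.value
            node = node.next
```

Finding the position in the first place still requires an O(n) walk from the head, so the O(1) claim only applies once a node reference is in hand.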

2. Non-Linear Data Structures

Non-linear structures model hierarchies and complex networks. Commercial interest in them is growing quickly: the graph database market is reported at USD 2.57 billion in 2022 and projected to reach USD 10.3 billion by 2032, with a cited CAGR of 21.9%.

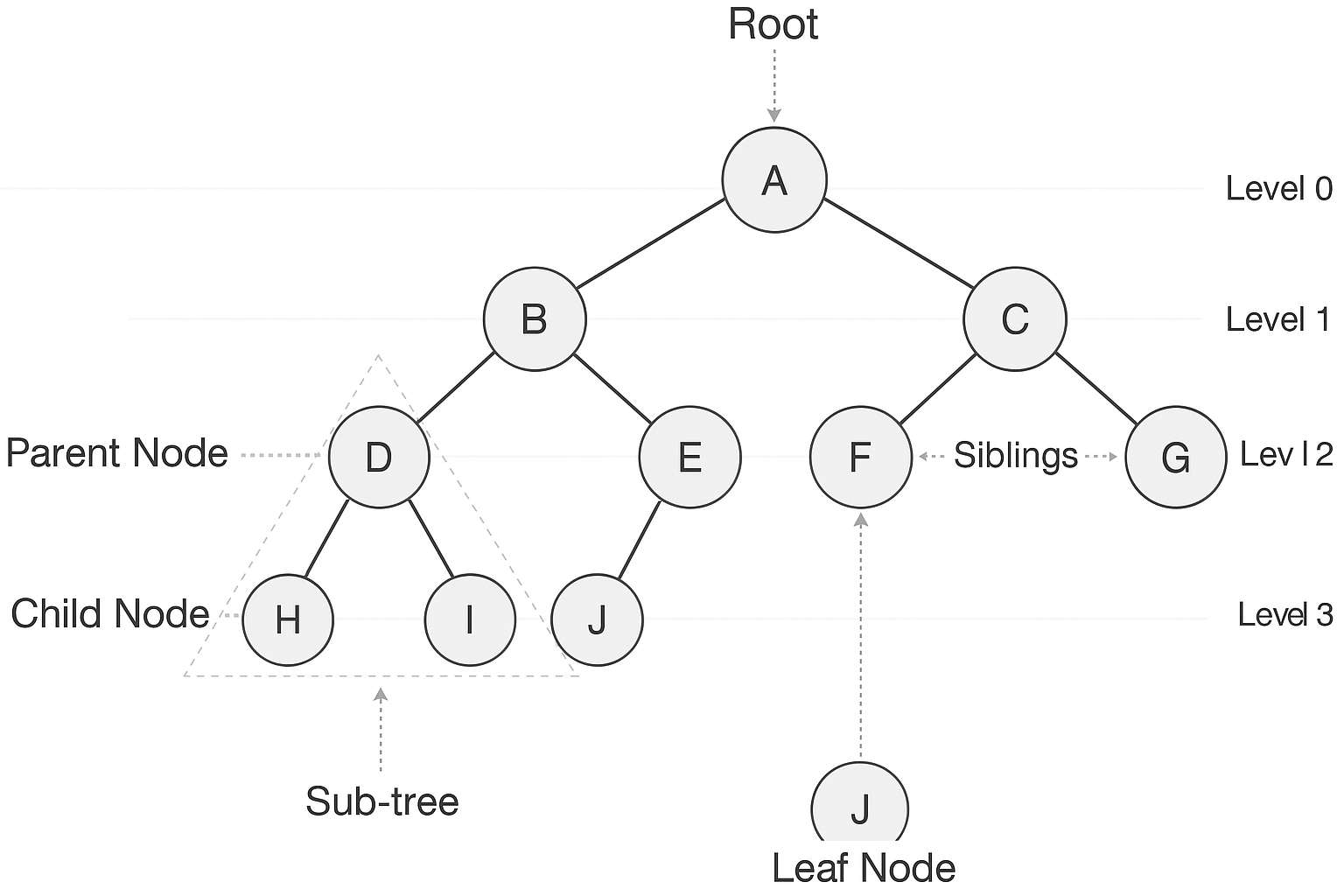

Tree

A tree consists of a root node and nested parent/child nodes. Trees are typical in file systems and databases.

Key terms: root, parent, child, siblings, leaf, internal node, ancestors, descendants, subtree.

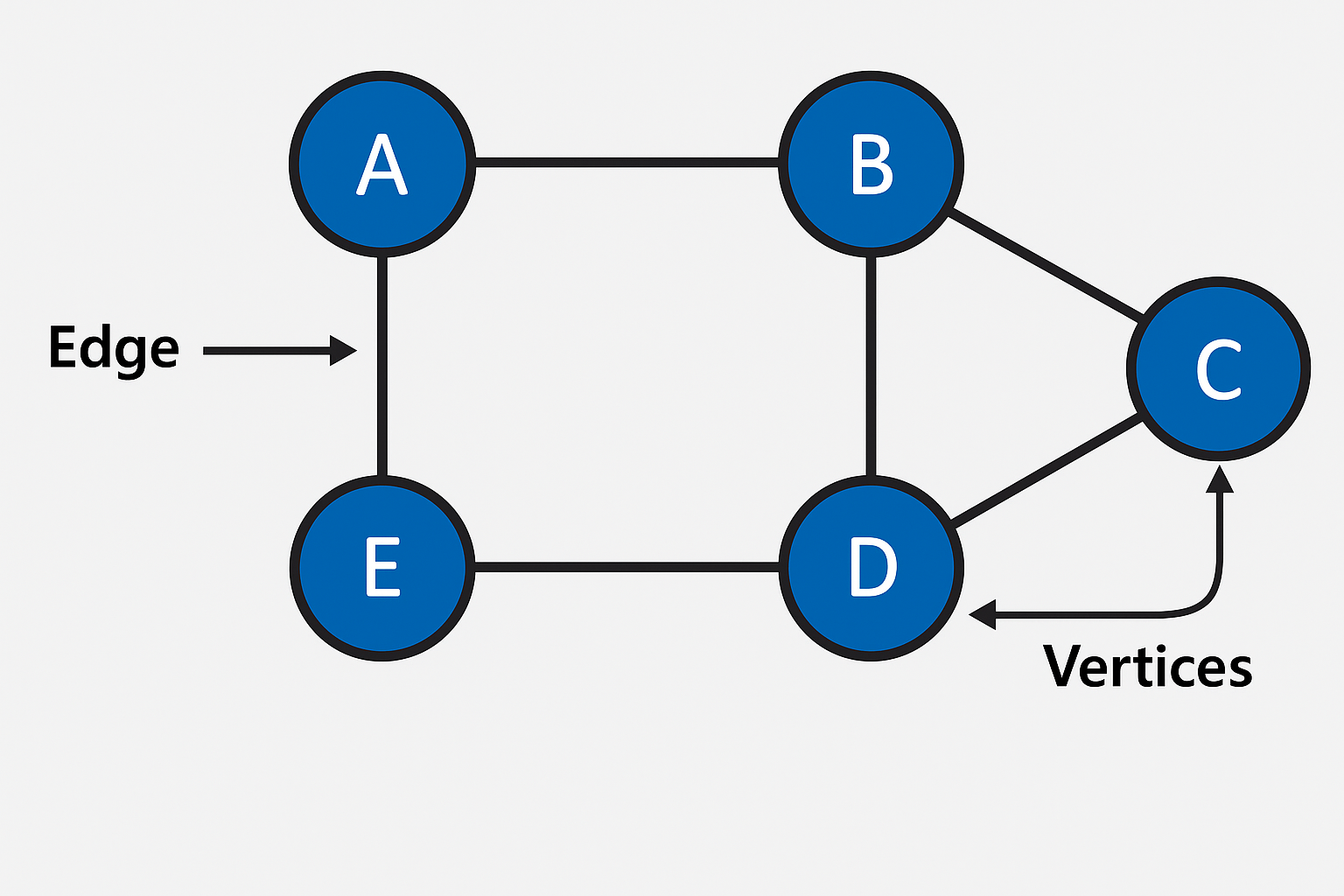

Graph

Graphs comprise vertices connected by edges and are suited for modeling networks. The telecommunications and IT sector leads graph database adoption due to the inherently networked nature of telecommunications data, while financial services organizations use them extensively for fraud detection and risk analysis.

- Vertices (nodes) – fundamental units.

- Edges – connections; directed or undirected.
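One common way to represent vertices and edges in code is an adjacency list. The sketch below stores the graph as a dictionary of neighbor sets and uses breadth-first search to count hops between two vertices. The function names `add_edge` and `shortest_hops` are assumptions for this example.

```python
from collections import deque


def add_edge(graph, u, v, directed=False):
    """Add an edge to an adjacency-list graph stored as {vertex: set_of_neighbors}."""
    graph.setdefault(u, set()).add(v)
    graph.setdefault(v, set())
    if not directed:
        graph[v].add(u)


def shortest_hops(graph, start, goal):
    """Return the fewest edges between start and goal, or None if unreachable."""
    if start not in graph:
        return None
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None
```

Breadth-first search visits vertices in order of distance from the start, so the first time it reaches the goal it has found a shortest path in an unweighted graph.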

Binary Search Tree (BST)

A BST is a binary tree where, for every node, all values in the left subtree are less than the node's value and all values in the right subtree are greater. This ordering enables O(log n) lookups and efficient range queries when the tree is balanced; an unbalanced BST can degrade to O(n).
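The ordering property can be illustrated with a small, unbalanced BST. This is a sketch under simple assumptions: keys are comparable, duplicates are ignored, and no rebalancing is performed. The names `BSTNode`, `insert`, `contains`, and `range_query` are chosen for this example.

```python
class BSTNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key and return the (possibly new) subtree root. Duplicates are ignored."""
    if root is None:
        return BSTNode(key)
    if key < root.key:
        root.left = insert(root.left, key)
    elif key > root.key:
        root.right = insert(root.right, key)
    return root


def contains(root, key):
    """Walk left or right at each node, discarding one subtree per step."""
    while root is not None:
        if key == root.key:
            return True
        root = root.left if key < root.key else root.right
    return False


def range_query(root, lo, hi):
    """Return all keys in [lo, hi] in sorted order, skipping subtrees outside the range."""
    if root is None:
        return []
    result = []
    if lo < root.key:
        result += range_query(root.left, lo, hi)
    if lo <= root.key <= hi:
        result.append(root.key)
    if root.key < hi:
        result += range_query(root.right, lo, hi)
    return result
```

Production systems typically use self-balancing variants such as red-black trees or B-trees so that the tree height stays logarithmic regardless of insertion order.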

3. Hash-Based Data Structures

Hash Tables / Hash Maps

Map keys to indices via a hash function, giving near-O(1) average search, insert, and delete times.
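To make the key-to-index mapping concrete, here is a toy hash map using separate chaining. It is only a sketch: Python's built-in `dict` is the practical choice, and the `ChainedHashMap` name, the initial bucket count, and the resize threshold are all assumptions for illustration.

```python
class ChainedHashMap:
    """Toy hash map with separate chaining (use the built-in dict in real code)."""

    def __init__(self, bucket_count=8):
        self._buckets = [[] for _ in range(bucket_count)]
        self._size = 0

    def _bucket(self, key):
        # The hash function maps the key to a bucket index.
        return self._buckets[hash(key) % len(self._buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)   # overwrite an existing key
                return
        bucket.append((key, value))
        self._size += 1
        if self._size > 2 * len(self._buckets):
            self._resize()                 # keep chains short on average

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def delete(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                self._size -= 1
                return True
        return False

    def _resize(self):
        pairs = [pair for bucket in self._buckets for pair in bucket]
        self._buckets = [[] for _ in range(2 * len(self._buckets))]
        for k, v in pairs:
            self._bucket(k).append((k, v))

    def __len__(self):
        return self._size
```

Operations stay near O(1) on average only while chains remain short, which is why the sketch doubles the bucket array once the load grows.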

Sets

Store unique elements, typically implemented with hash tables for quick membership checks.

Bloom Filters

Probabilistic structures for membership testing with minimal memory. A Bloom filter can return false positives but never false negatives, which makes it useful in distributed systems for skipping unnecessary disk reads.
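A Bloom filter can be sketched with a bit array and a handful of hash positions per item. The version below is a simplified illustration: the `BloomFilter` class, its default sizes, and the use of seeded SHA-256 from the standard library are assumptions made to keep the example deterministic and dependency-free. A production filter would more likely use a faster non-cryptographic hash.

```python
import hashlib


class BloomFilter:
    """Simplified Bloom filter: may report false positives, never false negatives."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self._size = size_bits
        self._num_hashes = num_hashes
        self._bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item):
        # Derive several bit positions by hashing the item with different seeds.
        for seed in range(self._num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "little") % self._size

    def add(self, item):
        for pos in self._positions(item):
            self._bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # True means "possibly present"; False means "definitely absent".
        return all(
            self._bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )
```

A typical use is to call `might_contain(key)` before an expensive lookup and skip the lookup entirely when the answer is False.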

4. Specialized & Other Data Structures

Heaps & Priority Queues

Tree-based structures maintaining a heap property (min-heap or max-heap). Vital for priority scheduling.
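Python's standard `heapq` module implements a binary min-heap on top of a list. The scheduler below is a small sketch of priority scheduling with it. The `TaskScheduler` class is hypothetical, and lower numbers are assumed to mean higher priority.

```python
import heapq
import itertools


class TaskScheduler:
    """Priority queue of tasks built on a min-heap (lower number = higher priority)."""

    def __init__(self):
        self._heap = []
        # A running counter breaks ties so equal-priority tasks stay in FIFO order.
        self._counter = itertools.count()

    def add_task(self, priority, name):
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def next_task(self):
        if not self._heap:
            raise IndexError("no tasks scheduled")
        _, _, name = heapq.heappop(self._heap)
        return name

    def __len__(self):
        return len(self._heap)
```

Both `heappush` and `heappop` run in O(log n), so the scheduler never needs to re-sort the full task list.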

Tries (Prefix Trees)

Efficient for storing strings by shared prefixes—used in autocomplete, IP routing, and dictionaries.
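The shared-prefix idea behind autocomplete can be sketched with a dictionary-based trie. The class names and the `starts_with` method are assumptions for this example. Results are sorted only to make the output deterministic.

```python
class TrieNode:
    def __init__(self):
        self.children = {}      # maps a character to the next TrieNode
        self.is_word = False    # True if a stored word ends at this node


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def starts_with(self, prefix):
        """Return all stored words that begin with prefix, in sorted order."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        results = []
        stack = [(node, prefix)]
        while stack:
            current, text = stack.pop()
            if current.is_word:
                results.append(text)
            for ch, child in current.children.items():
                stack.append((child, text + ch))
        return sorted(results)
```

Reaching the prefix node costs time proportional to the prefix length, independent of how many words are stored.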

Vector Databases

A rapidly emerging category optimized for high-dimensional vector data and similarity searches.
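The core operation these systems optimize is nearest-neighbor search over embeddings. The sketch below shows the brute-force version using cosine similarity in plain Python. It is a stand-in for illustration only: real vector databases generally rely on approximate nearest-neighbor indexes rather than scanning every vector, and the `top_k` function and sample vectors are made up for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, vectors, k=2):
    """Return the k keys whose vectors are most similar to query (brute force, O(n))."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in vectors.items()]
    scored.sort(reverse=True)
    return [key for _, key in scored[:k]]


# Hypothetical two-dimensional "embeddings" for demonstration.
vectors = {
    "cat": [1.0, 0.0],
    "kitten": [0.9, 0.1],
    "car": [0.0, 1.0],
}
nearest = top_k([1.0, 0.0], vectors, k=2)
```

Here `nearest` contains "cat" followed by "kitten", because their vectors point in nearly the same direction as the query.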

Time Series Databases

Specialized for timestamped data, primarily for IoT data processing and real-time analytics.

What Are Common Misconceptions About Data Structure Selection?

Hardware-Ignorant Selection Practices

A pervasive misconception treats data structures as abstract mathematical entities divorced from underlying hardware realities. Modern processors feature deep memory hierarchies: an L1 cache hit costs only a few cycles (around a nanosecond), while a main-memory access can cost on the order of a hundred cycles or more. This performance gap means that cache-friendly data structures often outperform theoretically superior alternatives. In performance-critical domains, where responsiveness and efficiency directly affect user experience, understanding cache-aware data structure design is essential.

Arrays demonstrate superior real-world performance for many operations despite their theoretical O(n) insertion complexity because of excellent cache locality. Consecutive memory access patterns enable prefetching mechanisms to hide memory latency, while linked lists suffer from cache misses due to pointer chasing across scattered memory locations.

Hash Table Universality Misconceptions

While hash tables provide excellent average-case O(1) performance, their worst-case O(n) behavior during hash collisions can severely impact systems processing adversarial inputs. Many developers incorrectly assume hash tables universally outperform tree-based alternatives without considering workload characteristics.
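The worst-case behavior can be observed with Python's built-in `dict` by forcing every key to hash to the same value. The sketch below counts equality comparisons during insertion. The `Key` class and `count_comparisons` helper are hypothetical, and the exact counts depend on the interpreter's dictionary implementation, so only the relative difference matters.

```python
class Key:
    """Dictionary key that can be forced to collide with every other key."""

    eq_calls = 0   # class-wide counter of equality comparisons

    def __init__(self, value, collide):
        self.value = value
        self.collide = collide

    def __hash__(self):
        # A constant hash simulates an adversarial input that always collides.
        return 0 if self.collide else hash(self.value)

    def __eq__(self, other):
        Key.eq_calls += 1
        return isinstance(other, Key) and self.value == other.value


def count_comparisons(n, collide):
    """Insert n distinct keys into a dict and return how many __eq__ calls occurred."""
    Key.eq_calls = 0
    table = {}
    for i in range(n):
        table[Key(i, collide)] = i
    return Key.eq_calls


colliding = count_comparisons(200, collide=True)
well_distributed = count_comparisons(200, collide=False)
```

With colliding keys, each insertion has to be compared against the keys already sharing its hash, so the comparison count grows roughly quadratically with the number of keys instead of staying near zero.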

Cryptographic hash functions like SHA-256, while secure, introduce unnecessary computational overhead for general-purpose hash tables. Non-cryptographic alternatives like MurmurHash or CityHash provide superior performance for data structure applications while maintaining excellent distribution properties for typical data.

Algorithm Analysis Oversimplification

Big O notation analysis frequently oversimplifies real-world performance characteristics by ignoring constant factors and assuming uniform operation costs. A structure with O(log n) complexity might outperform an O(1) alternative for practical dataset sizes due to lower constant factors or better hardware utilization patterns.

Additionally, amortized analysis often confuses practitioners who expect consistent per-operation performance. Dynamic arrays exemplify this challenge: most appends execute in O(1) time, but periodic resize operations take O(n) time, creating latency spikes that may be unacceptable for real-time systems despite the favorable amortized cost.
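The resize behavior described above can be made visible with a toy dynamic array that doubles its capacity and counts how many elements it copies. This is a sketch under an assumed doubling policy. The `DynamicArray` class and its `copies` counter are illustrative and do not describe how any particular runtime grows its lists.

```python
class DynamicArray:
    """Toy growable array that doubles capacity when full and tracks copy work."""

    def __init__(self):
        self._capacity = 1
        self._size = 0
        self._data = [None] * self._capacity
        self.copies = 0   # total elements moved during all resizes

    def append(self, value):
        if self._size == self._capacity:
            self._grow()              # the occasional O(n) step
        self._data[self._size] = value
        self._size += 1

    def _grow(self):
        new_capacity = self._capacity * 2
        new_data = [None] * new_capacity
        for i in range(self._size):
            new_data[i] = self._data[i]
        self.copies += self._size
        self._data = new_data
        self._capacity = new_capacity

    def __len__(self):
        return self._size


arr = DynamicArray()
for i in range(1000):
    arr.append(i)
```

After 1,000 appends the array has copied 1,023 elements in total (1 + 2 + 4 + ... + 512), or about one copy per append on average, even though the single append that triggers the last resize moves 512 elements at once.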

What Is the Time and Space Complexity of Different Data Structures?

*Array search refers to indexed access (array[i]); searching for a specific value in an unsorted array is O(n).

**Average case; worst-case search can degrade to *O(n)* with many collisions.

What Are the Key Principles for Arranging Data Efficiently?

Selecting the right data structure shapes application performance:

• Hash tables offer near-instant look-ups based on unique keys.

• Trees capture hierarchical relationships.

• Arrays maximize cache locality for primitive data types.

• Vector databases enable efficient similarity searches for AI applications.

• Columnar formats optimize analytical query performance through compression and vectorization.

Profile early; even a small O(n²) routine can cripple large-scale systems.

What Are the Real-World Applications of Data Structures?

- Job Scheduling – Heaps choose the highest-priority task.

- Caching – Hash tables, usually paired with a doubly linked list, back LRU caches in web servers.

- Social Graphs – Graphs model relationships in networking apps.

- Change Data Capture (CDC) – Linked lists/logs track ordered changes.

- Autocomplete – Tries enable rapid prefix look-ups.

- AI Applications – Vector databases power recommendation systems and semantic search.

- IoT Analytics – Time series databases process sensor data streams efficiently.

- Fraud Detection – Graph databases identify suspicious relationship patterns.
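As one concrete example from the list above, an LRU cache can be sketched with `collections.OrderedDict`, which combines hash-based lookup with a maintained ordering of entries. The `LRUCache` class is a hypothetical minimal version. For memoizing function calls, the standard library's `functools.lru_cache` is usually the simpler choice.

```python
from collections import OrderedDict


class LRUCache:
    """Least-recently-used cache: evicts the entry that was touched longest ago."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)          # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)   # drop the least recently used entry

    def __len__(self):
        return len(self._items)
```

Lookups, updates, and evictions all run in constant time on average, which is what makes this pairing attractive for caches.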

How Do You Decide Which Data Structure to Use?

- Understand Your Data – Hierarchical or flat? Temporal patterns?

- Identify Dominant Operations – Inserts vs. look-ups vs. deletions vs. similarity searches.

- Evaluate Time vs. Space – Cache-friendly arrays vs. memory-heavy hashes.

- Plan for Growth – Will it scale gracefully? Consider modern lakehouse architectures for analytics.

- Leverage Libraries – Use optimized, battle-tested implementations.

- Consider Workload Patterns – Real-time streaming vs. batch processing requirements.

Tip: Profile early and often!

Unlock the Power of Data Structures with Airbyte

Modern data pipelines rely on multiple structures to handle the growing complexity of enterprise data environments.

Airbyte's open-source platform uses queues for extraction scheduling, arrays for record batching, and hash tables for schema mapping—helping you synchronize databases, SaaS apps, and files with 600+ pre-built connectors.

Whether you're processing time-series data for IoT analytics, implementing vector databases for AI applications, or building real-time streaming pipelines, Airbyte provides the flexible infrastructure to support diverse data structure requirements across cloud, hybrid, and on-premises environments.

FAQ

How do I choose the right data structure for my project?

Start by understanding the nature of your data and dominant operations. For AI applications requiring similarity search, consider vector databases. For time-series data, specialized time-series databases offer optimized performance. For analytics workloads, columnar formats in lakehouse architectures can provide significant cost savings and performance improvements.

What are the performance implications of different data structures?

Performance varies significantly based on workload patterns. Hash tables provide O(1) average-case operations but can degrade under adversarial inputs. Balanced tree-based structures offer consistent O(log n) performance. Modern columnar databases can process billions of rows per second for analytical queries, while vector databases enable efficient similarity searches for high-dimensional data.

How do emerging trends affect data structure selection?

Current trends toward real-time analytics, AI integration, and cloud-native architectures influence data structure choices. Organizations are increasingly adopting specialized databases: vector databases for AI applications, time-series databases for IoT data, and lakehouse architectures combining the flexibility of data lakes with warehouse performance.

What are the key considerations for enterprise data structure implementation?

Enterprise implementations must consider scalability, security, compliance, and integration capabilities. Modern data structures must support cloud-native deployment, real-time processing, and integration with existing enterprise systems while maintaining governance and security requirements.

How do modern data structures support streaming and real-time applications?

Streaming analytics architectures leverage specialized data structures including log-structured storage for change data capture, circular buffers for real-time data ingestion, and in-memory structures for low-latency processing. Time-series databases optimize for continuous data ingestion while maintaining query performance for real-time dashboards and analysis.
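A circular buffer for ingestion can be approximated in Python with a `deque` that has a fixed `maxlen`: once full, each new reading silently overwrites the oldest one. The `RollingWindow` class below is a hypothetical sketch of a rolling average over the most recent sensor readings.

```python
from collections import deque


class RollingWindow:
    """Fixed-size window over a stream; old readings fall off automatically."""

    def __init__(self, size):
        self._values = deque(maxlen=size)   # behaves like a circular buffer

    def add(self, reading):
        self._values.append(reading)        # evicts the oldest value when full

    def average(self):
        if not self._values:
            return None
        return sum(self._values) / len(self._values)

    def __len__(self):
        return len(self._values)
```

Memory use stays constant no matter how long the stream runs, which is the main reason circular buffers suit continuous ingestion.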

What role do data structures play in AI and machine learning applications?

AI applications require specialized data structures including vector databases for storing and querying high-dimensional embeddings, graph structures for representing relationships in neural networks, and tensor data structures optimized for parallel computation. The rapid growth of the vector database market reflects the increasing importance of these specialized structures.
