What is Snowflake Data Warehouse? Architecture & Key Features

Summarize this article with:

✨ AI Generated Summary

You know the drill with on-premises warehouses: endless index tuning, weekend downtime to add disks, and bills that spike the minute workloads grow. Snowflake flips that script entirely.

Snowflake is a fully managed cloud data warehouse that keeps storage, compute, and services in separate layers, so you can scale each one independently and stop babysitting infrastructure. The platform runs on cloud object storage and spins up virtual warehouses on demand.

The result is faster analytics with fewer headaches. You get automatic elasticity, predictable performance, and a pay-only-when-active model that lets you forecast costs.

TL;DR: Snowflake Data Warehouse at a Glance

- Snowflake is a fully managed cloud data warehouse that separates storage, compute, and services, so you scale each independently instead of babysitting infrastructure.

- Data lands in compressed micro-partitions on cloud object storage, while elastic “virtual warehouses” pull from shared storage to run SQL without stepping on each other’s workloads.

- The services layer handles SQL parsing, optimization, security, RBAC, and metadata, removing chores like index tuning, vacuuming, and manual stats collection.

- Features like Time Travel, zero-copy cloning, secure data sharing, and Streams & Tasks turn risky schema changes, dev sandboxes, and partner feeds into simple metadata operations.

- Common use cases include centralized analytics, ELT inside the warehouse, ML feature stores, real-time dashboards with Snowpipe, and multi-team environments on separate virtual warehouses.

- Airbyte plugs into Snowflake as an ingestion layer with 600+ connectors, handling CDC, schema drift, and COPY INTO orchestration so you can stand up reliable pipelines quickly.

How Does the Snowflake Data Warehouse Architecture Work?

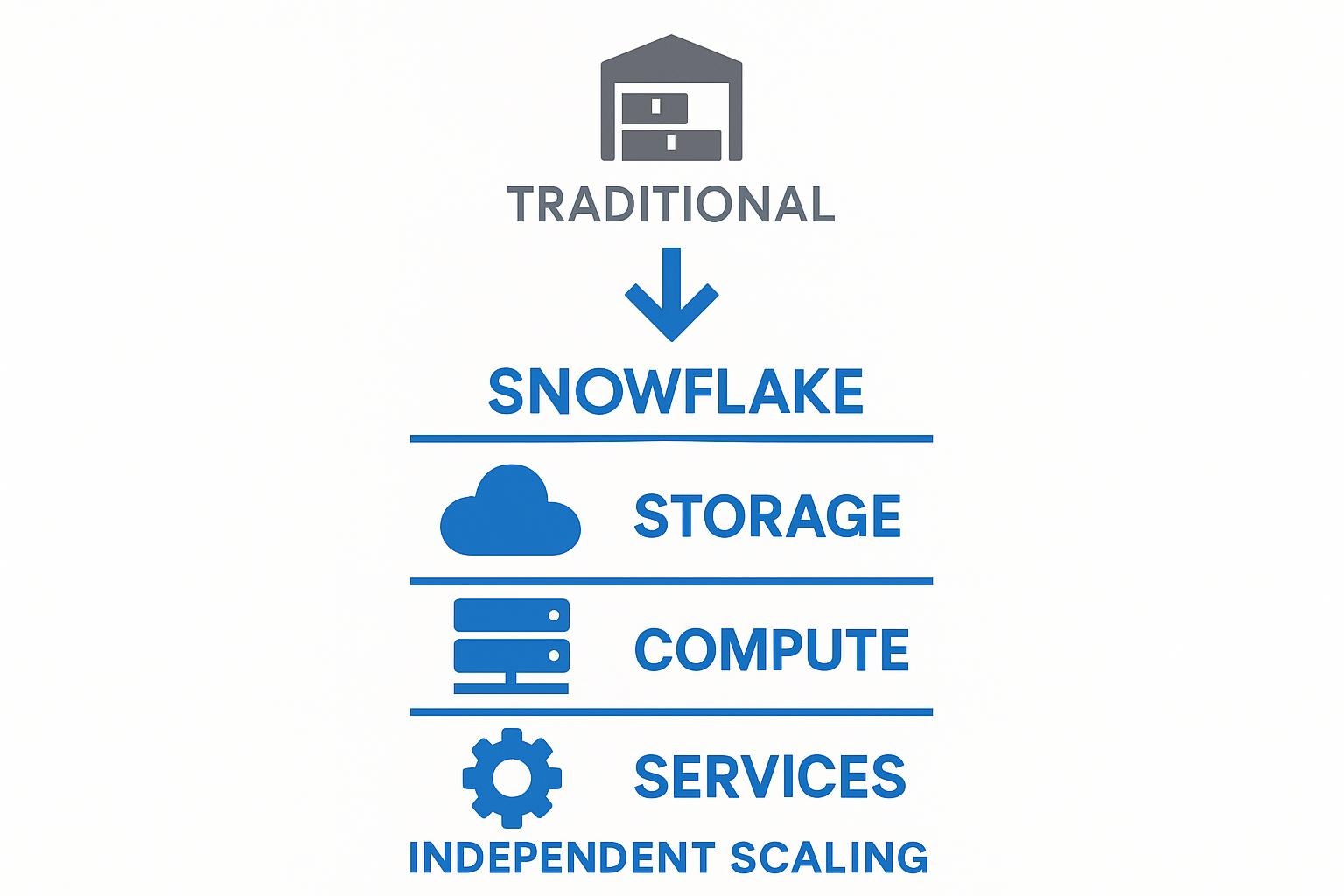

Legacy systems bolt storage and compute into the same box. When you outgrow one, you pay to scale both, then watch idle resources drain your budget.

Snowflake's three-layer, multi-cluster design lets you grow storage, compute, or services independently, so you only pay for what the workload actually needs. The platform organizes into distinct but tightly coordinated layers: a cloud storage plane, elastic compute "virtual warehouses," and a services brain that handles metadata, optimization, and security.

Because each layer scales on its own, you can batch-load terabytes overnight without slowing interactive BI queries. Or spin up extra clusters the moment your dashboards start to spike.

1. Storage Layer

Every record lives in cloud object storage (S3, Azure Blob, or GCS), whether structured or semi-structured. Incoming data is automatically broken into immutable micro-partitions about 50-500 MB each, written in a compressed columnar format that the platform selects and manages for you.

Because partitions carry their own min/max statistics, queries skip irrelevant blocks and read only what they need, keeping scan costs low.

2. Compute Layer (Virtual Warehouses)

A virtual warehouse is an independent MPP cluster that pulls partitions from shared storage, executes SQL, then returns results. You can resize a warehouse in seconds, pause it when idle, or run several in parallel so ELT jobs never steal cycles from analysts.

This isolation guarantees predictable performance even during heavy batch windows.

3. Cloud Services Layer

Sitting above storage and compute, the services layer authenticates users, parses SQL, optimizes query plans, manages transactions, and enforces RBAC. Because the platform owns this control plane, routine DBA chores like index tuning, vacuuming, and statistics collection disappear.

You focus on modeling data, not babysitting infrastructure.

4. Multi-Cluster Architecture

When concurrency spikes (think Monday-morning dashboards or API bursts), the system can automatically add sibling clusters to the same warehouse, then scale them back once demand fades. Queries keep a consistent SLA, and you never have to pre-provision for worst-case traffic.

This decoupled architecture delivers elastic performance and cost control that legacy appliances struggle to match.

What Makes Snowflake Data Warehouse Different From Other Cloud Warehouses?

Most cloud warehouses still carry forward architectural decisions from the on-premises era. The platform started with a clean slate, building exclusively for cloud infrastructure from day one. That fundamental difference shows up in ways that directly impact your daily operations.

- Separate storage and compute layers: Your data lives in low-cost object storage while virtual warehouses spin up only when you need them for queries or data loading. This separation means you never overpay for idle CPU just because your tables grew larger.

- Instant scaling: Resize a warehouse or add multi-cluster capacity in seconds, not hours. Let it auto-suspend when work stops. Teams running nightly ETL jobs park compute during the day and restart automatically for the next run.

- Native semi-structured data handling: JSON, Parquet, ORC, and XML flow directly into VARIANT columns where the system automatically flattens and indexes them. No custom parsers, no ETL bottlenecks.

- Cloud agnosticism: Whether your organization runs on AWS, Azure, or GCP, identical functionality is available everywhere. Multi-cloud strategies become feasible without rebuilding your entire data stack, reducing vendor lock-in risk.

- Consumption-based pricing: Pay for storage by the terabyte and compute by the second. No fixed licensing tiers, no capacity planning guesswork. The pricing model matches how you actually work.

What Are the Most Important Features of Snowflake Data Warehouse?

Traditional warehouses force you to choose between collaboration and control. You patch together copies for development, run risky manual restores, and juggle permissions that never quite map to the way your team works.

The platform bakes the missing capabilities directly into its architecture: versioning, cloning, sharing, automation, and fine-grained security. You can focus on building rather than babysitting infrastructure.

- Time Travel: Query or restore data as it existed at any point within a configurable retention window (up to 90 days on Enterprise editions). The system keeps immutable micro-partitions and metadata deltas, so reverting a table or recovering a dropped schema is an instant metadata action, not a full reload. This safety net turns risky schema changes into routine work.

- Zero-Copy Cloning: Issue a single CLONE command and the system creates a pointer-based copy that adds no storage until data diverges. Developers test migrations on real datasets without ballooning costs, and analysts can build "what-if" models without waiting for overnight refreshes. Because cloning is metadata-only, the clone is available in seconds regardless of database size.

- Secure Data Sharing: Publish live, queryable data to another account (or through the public Marketplace) without ETL or file transfers. Recipients query the data where it sits, while you retain full control over revocation and column-level masking. Enterprises use this capability to monetize data feeds and distribute governed datasets to partners.

- Materialized Views: The platform persists the results of complex joins or aggregations and refreshes them automatically when source tables change. Because refresh runs inside the services layer, BI dashboards read from pre-computed data instead of recalculating heavy logic on every request, reducing both latency and compute spend.

- Streams & Tasks: Streams capture change data on tables, exposing inserts, updates, and deletes as a consumable delta. Tasks schedule SQL or native Python to run on cron-like intervals or in response to event chains. Combined, they form serverless ELT pipelines that stay entirely inside the platform, eliminating external schedulers and keeping transformation logic close to the data.

- RBAC and Governance: Role-based access control is enforced at every object level (warehouse, database, schema, table, even column). Roles inherit privileges, making it easy to grant analysts read-only access while admins retain full DDL rights. Integrated auditing, data encryption, and compliance certifications (HIPAA, PCI DSS, GDPR) satisfy enterprise governance without bolt-on tools.

These features turn the platform into a development-friendly, governable warehouse where you can experiment freely, share securely, and recover quickly, all while keeping costs in check.

What Are Common Snowflake Data Warehouse Use Cases?

Data teams face a common problem: batch ETL jobs, real-time dashboards, and ML experiments all compete for the same infrastructure resources. The decoupled architecture lets every workload run independently without resource conflicts.

How Do You Load Data Into Snowflake Data Warehouse Reliably?

Moving data becomes complex when late-night batch jobs stall or dashboards show yesterday's numbers. Reliable pipelines match the platform's architecture (separate storage, elastic compute) with loading methods that minimize latency and failure risk.

Consider these proven patterns for consistent data ingestion:

1. Using Snowpipe

Snowpipe watches a cloud storage stage and ingests new files in micro-batches as soon as they arrive. The service auto-scales, eliminating the "one big nightly load" bottleneck so you see data in minutes.

Event notifications from S3, Azure Event Grid, or Pub/Sub trigger the process without polling for changes. The pay-per-second model keeps compute costs predictable.

2. Using Bulk Stages (S3/GCS/Azure)

For large backfills or periodic jobs, bulk loading with COPY INTO remains the workhorse. Target 100-250 MB gzip-compressed files to maximize parallelism and reduce warehouse time.

A typical command:

sql

COPY INTO analytics.raw_orders

FROM @stage/orders

FILE_FORMAT = (TYPE = 'CSV' FIELD_OPTIONALLY_ENCLOSED_BY='"')

ON_ERROR = 'CONTINUE';The stage decouples extraction from loading, letting you validate files before they hit production tables.

3. Using ELT Tools (dbt, orchestration)

After loading raw data, tools like dbt orchestrate SQL transforms directly inside virtual warehouses. You write modular models, schedule them with your preferred runner, and let the platform handle the processing.

This approach keeps logic version-controlled while removing brittle on-premises ETL servers.

4. Using Airbyte to Load 600+ Sources

When you need Salesforce data one hour and Postgres the next, hand-built scripts break down. Airbyte connects to over 600 sources, stages data in cloud storage, autogenerates COPY INTO statements, and handles schema drift using open-standard code without vendor lock-in.

Incremental syncs and CDC replication reduce both bandwidth and compute credits. Matching the right loading pattern to each workload gets data where it belongs without constant pipeline maintenance or failed job recovery.

What Are the Advantages and Limitations of Snowflake Data Warehouse?

The cloud-native architecture eliminates infrastructure maintenance while introducing new cost and vendor considerations. Here's where it delivers value and where you'll need additional planning.

For most data teams, the elastic scaling and developer features outweigh the drawbacks. Invest early in cost monitoring and understand how proprietary capabilities affect your broader stack.

How Do You Choose the Right Snowflake Warehouse Size?

Incorrect warehouse sizing hits teams twice: undersized warehouses slow critical queries during peak hours, while oversized ones waste thousands in monthly credits. Since storage is separate from compute, you can resize warehouses in seconds without touching data, but you need a systematic approach to find the right starting point.

- Match workload to warehouse size: Heavy ETL batches benefit from large warehouses that process quickly and shut down, minimizing credit consumption. Dashboard refreshes and ad-hoc analysis typically perform well on medium warehouses. Virtual warehouses are stateless MPP clusters you can pause or resize without affecting stored data.

- Account for concurrency patterns: Enable multi-cluster mode when multiple analysts query simultaneously, since throughput matters more than raw compute power. Auto-scaling prevents heavy queries from blocking dashboard users during business hours.

- Benchmark and adjust systematically: Run representative queries on different warehouse sizes and compare execution time against credit consumption. Set auto-suspend to 60-120 seconds of idle time and monitor daily credit usage. Start with medium warehouses, then scale up only when performance becomes a bottleneck.

How to Get Started With Snowflake and Airbyte

Snowflake's three-layer architecture solves the infrastructure management problems that traditional warehouses create. The separation of storage, compute, and services means you can scale each independently while focusing on data modeling rather than database administration.

Ready to move data into Snowflake? Try Airbyte to connect 600+ data sources using pre-built connectors that handle schema drift automatically. Start building reliable pipelines in minutes, not months.

Frequently Asked Questions

How much does Snowflake cost compared to traditional data warehouses?

Snowflake uses consumption-based pricing where you pay for storage by the terabyte and compute by the second. Unlike traditional warehouses with fixed licensing costs, you only pay for active compute resources. This model typically costs 30-50% less than on-premises warehouses when you account for hardware, maintenance, and staffing overhead. The key to controlling costs is proper warehouse sizing and auto-suspend settings that pause idle warehouses after 60-120 seconds.

Can Snowflake handle real-time data ingestion?

Yes, through Snowpipe. This feature continuously loads data from cloud storage stages as files arrive, typically processing new data within seconds to minutes. Unlike batch loading that runs on fixed schedules, Snowpipe responds to event notifications and scales automatically. For teams needing sub-second latency, Snowflake supports streaming through Kafka connectors and other event platforms.

Does Snowflake work with my existing BI tools?

Snowflake provides JDBC, ODBC, and native connectors for major BI platforms including Tableau, Power BI, Looker, and others. The platform speaks standard SQL, so existing reports and dashboards typically require minimal changes during migration. Performance is often better than legacy warehouses because virtual warehouses isolate BI query workloads from batch ETL jobs, preventing morning dashboard refreshes from slowing down when data engineering runs overnight transformations.

How does Snowflake handle disaster recovery and data protection?

Snowflake automatically replicates data across multiple availability zones within your chosen cloud region. Time Travel provides point-in-time recovery for up to 90 days (on Enterprise editions), letting you restore accidentally dropped tables or revert bad updates with simple SQL commands. For multi-region redundancy, database replication creates continuously updated copies in different geographic regions, though this increases storage costs proportionally to the number of replicas.

Suggested Read:

.webp)