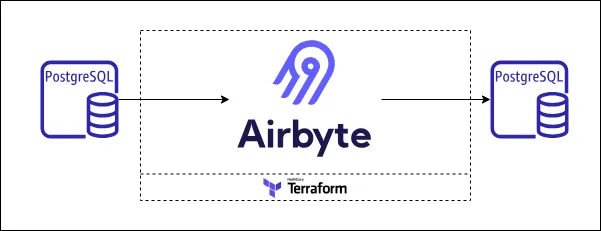

Data Replication from Postgres to Postgres with Airbyte

Harness Airbyte's Terraform provider to seamlessly synchronize two Postgres databases, leveraging Change Data Capture (CDC) and the Postgres Write-Ahead Log (WAL).

Download our free guide and discover the best approach for your needs, whether it's building your ELT solution in-house or opting for Airbyte Open Source or Airbyte Cloud.

Welcome to the "Postgres Data Replication Stack" repository! This repo provides a quickstart template for building a Postgres data replication solution with Airbyte. It synchronizes two Postgres databases using Change Data Capture (CDC), which reads row-level changes from the Postgres Write-Ahead Log (WAL). The template doesn't work with any specific dataset; its goal is to show how such a replication pipeline can be built.
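As a rough illustration of what CDC needs on the source side: Airbyte's Postgres source reads changes through logical decoding of the WAL, which requires the source to run with `wal_level = logical`, a logical replication slot, and (for the built-in `pgoutput` plugin) a publication listing the replicated tables. The helper below is a minimal sketch that only assembles those statements as strings. The slot name, publication name, and tables are hypothetical placeholders, so check the Airbyte Postgres source documentation for the exact setup your version expects.

```python
"""Build the SQL that prepares a Postgres source for WAL-based CDC.

Sketch only: the slot name, publication name, and tables passed in are
hypothetical placeholders, not values taken from this quickstart.
"""
import re
from typing import List

# Replication slot names may contain only lowercase letters, digits, and underscores.
_SLOT = re.compile(r"[a-z0-9_]+")
# Plain, optionally schema-qualified identifiers such as "public.users".
_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def cdc_setup_sql(slot: str, publication: str, tables: List[str]) -> List[str]:
    """Return, in order, the statements that enable logical-decoding CDC."""
    if not _SLOT.fullmatch(slot):
        raise ValueError(f"invalid slot name: {slot!r}")
    if not tables:
        raise ValueError("at least one table is required")
    for name in [publication, *tables]:
        # Names are interpolated into SQL text, so reject anything unusual.
        if not _IDENT.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    return [
        # Logical decoding needs wal_level = logical; the setting only
        # takes effect after Postgres is restarted.
        "ALTER SYSTEM SET wal_level = logical;",
        # A slot using the built-in pgoutput plugin retains WAL until the
        # consumer (here, Airbyte) has read it.
        f"SELECT pg_create_logical_replication_slot('{slot}', 'pgoutput');",
        # The publication selects which tables' changes are decoded.
        f"CREATE PUBLICATION {publication} FOR TABLE {', '.join(tables)};",
    ]
```

Run the statements on the source database as a sufficiently privileged user, restarting Postgres after the first one: creating the slot fails until `wal_level` is actually `logical`.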

This quickstart is designed to minimize setup hassle and get you running quickly. If you want to dig deeper, a detailed companion article covers data replication and why it is needed.

Before you embark on this integration, ensure you have the following set up and ready:

Get the project up and running on your local machine by following these steps.

1. Clone the repository, checking out only this quickstart:

2. Navigate to the directory:

3. Set Up a Virtual Environment (if you don't plan to develop or contribute, you can skip this step and the next one):

For Linux and Mac:

For Windows:

4. Install Dependencies:
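Steps 3 and 4 are usually run as shell commands: `python3 -m venv venv`, then `source venv/bin/activate` on Linux and Mac or `venv\Scripts\activate` on Windows, then `pip install`. As a cross-platform alternative, the sketch below scripts the same steps with Python's standard `venv` module. The environment directory name and the assumption that the project installs with `pip install -e .` are ours, not taken from this quickstart.

```python
"""Create a virtual environment and build its pip install command (sketch)."""
import os
import venv
from pathlib import Path
from typing import List


def create_env(env_dir: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its interpreter."""
    # Like `python -m venv`: symlink the interpreter on POSIX, copy it on Windows.
    venv.create(env_dir, with_pip=with_pip, symlinks=(os.name != "nt"))
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def install_command(python: Path, project_dir: Path = Path(".")) -> List[str]:
    """pip command for an editable install, run with the environment's interpreter.

    Assumes the project is pip-installable (for example via pyproject.toml or setup.py).
    """
    return [str(python), "-m", "pip", "install", "-e", str(project_dir)]
```

From the project root, `subprocess.run(install_command(create_env(Path("venv"))), check=True)` performs both steps. Calling the environment's own interpreter installs into it without activating it first; activation is still convenient for interactive work.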

Airbyte lets you create connectors for sources and destinations and synchronize data between many platforms. In this project, Terraform automates the creation of these connectors and of the connection between them. Here's how to set it up:

Navigate to the Airbyte Configuration Directory:

Change to the infra/airbyte directory, which contains the Terraform configuration for Airbyte:

Modify Configuration Files:

Within the infra/airbyte directory, you'll find three crucial Terraform files:

Adjust the configurations in these files to suit your project's needs. In particular, provide the credentials for your source and destination Postgres databases; the variables.tf file is the place to manage these credentials.
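One way to keep the Postgres credentials out of the `.tf` files is to supply them at run time: Terraform reads any environment variable named `TF_VAR_<name>` as the value of the input variable `<name>` declared in variables.tf. The sketch below builds such an environment for a Terraform subprocess. The variable names `source_password` and `destination_password` are hypothetical and must match whatever variables.tf in this quickstart actually declares.

```python
"""Pass Postgres credentials to Terraform as TF_VAR_* environment variables."""
import os
from typing import Dict, Mapping, Optional

# Hypothetical variable names: they must match the variables that
# variables.tf in infra/airbyte actually declares.
REQUIRED_VARS = ("source_password", "destination_password")


def terraform_env(
    values: Mapping[str, str], base: Optional[Mapping[str, str]] = None
) -> Dict[str, str]:
    """Return a copy of `base` (default: os.environ) with TF_VAR_<name> set for each value."""
    missing = [name for name in REQUIRED_VARS if not values.get(name)]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    env = dict(os.environ if base is None else base)
    for name, value in values.items():
        # Terraform reads TF_VAR_<name> as the value of input variable <name>.
        env[f"TF_VAR_{name}"] = value
    return env
```

Pass the result as `env=` to `subprocess.run(["terraform", "plan"], cwd="infra/airbyte", ...)`. Declaring these variables with `sensitive = true` in variables.tf additionally redacts them from plan and apply output.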

Initialize Terraform:

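This step initializes the working directory and downloads the providers declared in your configuration (including the Airbyte provider), preparing Terraform to create the resources defined in your configuration files.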

Review the Plan:

Before applying any changes, review the plan to understand what Terraform will do.
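Besides reading the plan in the terminal, you can save it with `terraform plan -out=tfplan` and render it as JSON with `terraform show -json tfplan`. The sketch below condenses that JSON into one line per resource that would change. The field names (`resource_changes`, `address`, `change.actions`) follow Terraform's JSON output format; the plan file name `tfplan` is our choice, and the resource addresses in any output depend on your main.tf.

```python
"""Summarize a saved Terraform plan rendered with `terraform show -json`."""
import json
import subprocess
from typing import Any, Dict, List


def summarize_plan(plan: Dict[str, Any]) -> List[str]:
    """Return one '<actions> <address>' line per resource that would change."""
    lines = []
    for rc in plan.get("resource_changes", []):
        actions = rc["change"]["actions"]
        if actions == ["no-op"]:
            continue  # unchanged resources add nothing to the review
        lines.append(f"{'/'.join(actions)} {rc['address']}")
    return lines


def load_plan(plan_file: str = "tfplan", workdir: str = "infra/airbyte") -> Dict[str, Any]:
    """Render a plan file saved by `terraform plan -out=<file>` as JSON."""
    result = subprocess.run(
        ["terraform", "show", "-json", plan_file],
        cwd=workdir, check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

A replacement shows up as `delete/create` (or `create/delete`), which is worth a second look before applying, since recreating a connection can reset its sync state.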

Apply Configuration:

After reviewing and confirming the plan, apply the Terraform configurations to create the necessary Airbyte resources.
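To make sure `terraform apply` does exactly what you reviewed, you can save the plan to a file and apply that file. The sketch below chains `terraform init`, `terraform plan -out=<file>`, and `terraform apply <file>`, asking for approval in between. The `infra/airbyte` directory comes from this guide; the plan file name and the injectable `approve` and `run` parameters are assumptions made so the sequence can be tested without Terraform installed.

```python
"""Init, save a plan, and apply exactly that saved plan (sketch)."""
import subprocess
from typing import Callable, List

Runner = Callable[[List[str], str], None]


def run_in(cmd: List[str], workdir: str) -> None:
    """Default runner: execute a command in `workdir`, failing on a non-zero exit."""
    subprocess.run(cmd, cwd=workdir, check=True)


def ask_user() -> bool:
    """Default approval: ask on the terminal."""
    return input("Apply this plan? [y/N] ").strip().lower() == "y"


def plan_and_apply(
    workdir: str = "infra/airbyte",
    plan_file: str = "tfplan",
    approve: Callable[[], bool] = ask_user,
    run: Runner = run_in,
) -> bool:
    """Return True if the saved plan was applied, False if it was declined."""
    run(["terraform", "init", "-input=false"], workdir)
    # `plan` prints the proposed changes and writes them to plan_file.
    run(["terraform", "plan", "-input=false", f"-out={plan_file}"], workdir)
    if not approve():
        return False
    # Applying a saved plan executes exactly what was reviewed, without a second prompt.
    run(["terraform", "apply", "-input=false", plan_file], workdir)
    return True
```

Because `-input=false` disables interactive prompts for variables, any required values must already be supplied, for example through `TF_VAR_*` environment variables.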

Verify in Airbyte UI:

Once Terraform completes its tasks, navigate to the Airbyte UI. Here, you should see your source and destination connectors, as well as the connection between them, set up and ready to go.
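Alongside the UI check, you can confirm from the command line that Terraform is tracking a source, a destination, and a connection. The sketch below parses the output of `terraform state list`. The three resource type names are our assumption about the Airbyte provider's naming and should be checked against main.tf.

```python
"""Check Terraform state for the expected Airbyte resources (sketch)."""
import subprocess
from typing import Iterable, List

# Assumed resource types from the Airbyte Terraform provider; adjust them to
# whatever main.tf in infra/airbyte actually declares.
EXPECTED_TYPES = (
    "airbyte_source_postgres",
    "airbyte_destination_postgres",
    "airbyte_connection",
)


def resource_type(address: str) -> str:
    """Extract the type from an address such as 'module.m.airbyte_connection.c[0]'."""
    parts = address.split("[", 1)[0].split(".")
    return parts[-2] if len(parts) >= 2 else ""


def missing_types(addresses: Iterable[str], expected: Iterable[str] = EXPECTED_TYPES) -> List[str]:
    """Return the expected resource types that have no instance in state."""
    present = {resource_type(a) for a in addresses}
    return [t for t in expected if t not in present]


def state_addresses(workdir: str = "infra/airbyte") -> List[str]:
    """List resource addresses with `terraform state list`."""
    out = subprocess.run(
        ["terraform", "state", "list"],
        cwd=workdir, check=True, capture_output=True, text=True,
    ).stdout
    return [line.strip() for line in out.splitlines() if line.strip()]
```

An empty result from `missing_types(state_addresses())` means all three resources are in state; whether the first sync succeeds is still best confirmed in the Airbyte UI.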

Once you've set up and launched this initial integration, the real power lies in its adaptability and extensibility. Here's a roadmap for customizing the project to your specific data needs:

Extend the Project:

Whether you want to add more data sources, integrate additional tools, or add transformation logic, this integration is built to be extended. With the foundation in place, you can refine your data processes as far as you need.
