Best ETL Tools for ClickHouse Integration in 2026


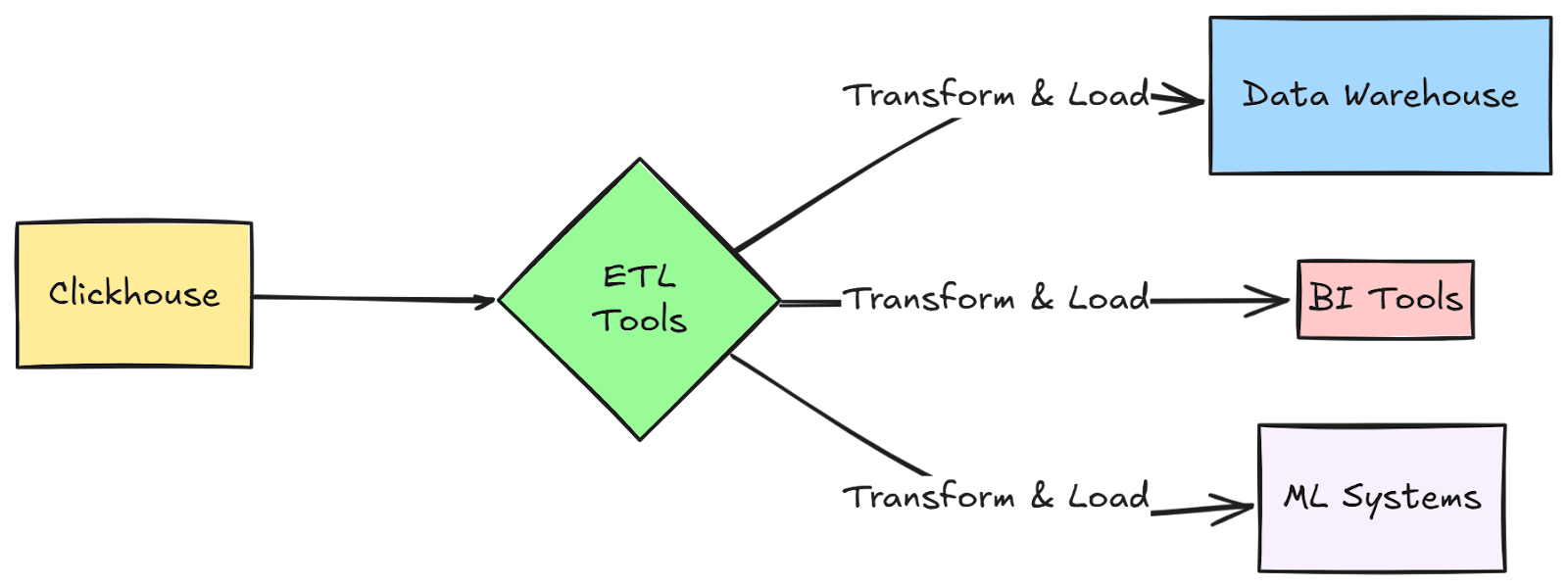

As a data engineer, you've got ClickHouse humming along nicely, handling those massive analytical workloads like a champ. But now comes the fun part: you need to move that data somewhere else. It could be feeding a downstream ML pipeline, syncing with your data warehouse, or updating those BI dashboards your analysts keep asking about.

Let's cut to the chase: you need ClickHouse ETL tools that can efficiently extract data from it and load it into your target destinations. This isn't about generic data pipelines; it's specifically about getting your ClickHouse data where it needs to go, with the right tools for the job.

In this guide, we'll cover the ETL tools that actually work with ClickHouse. No fluff, no theory: just practical options you can start evaluating today.

What is ClickHouse ETL?

ClickHouse ETL is the process of extracting your analytical data from ClickHouse (the source) and loading it into other systems or databases (the destinations) using ETL tools. Think of it as building a data pipeline that moves your ClickHouse data where you need it, whether that's a data warehouse, a BI tool, or any other system that needs access to it.
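To make the definition concrete, here is a minimal extract-and-prepare sketch using only Python's standard library. It assumes a ClickHouse server reachable over its HTTP interface on the default port 8123, the default user with no password, and the `JSONEachRow` output format (one JSON object per line). The function names are hypothetical; a production pipeline, or any of the tools below, would add authentication, retries, and batching.

```python
import csv
import io
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_query_url(host: str, query: str, port: int = 8123) -> str:
    """Build a URL for ClickHouse's HTTP interface (8123 is its default HTTP port)."""
    return f"http://{host}:{port}/?{urlencode({'query': query})}"


def parse_json_each_row(body: str) -> list[dict]:
    """Parse JSONEachRow output, which contains one JSON object per line."""
    return [json.loads(line) for line in body.splitlines() if line.strip()]


def rows_to_csv(rows: list[dict]) -> str:
    """Transform step: serialize rows to CSV, a format most destinations can bulk-load."""
    if not rows:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def extract(host: str, query: str) -> list[dict]:
    """Extract step: run a read-only query against a live server (network call).

    Assumes the default user with no password; real servers need credentials.
    """
    url = build_query_url(host, f"{query} FORMAT JSONEachRow")
    with urlopen(url) as resp:
        return parse_json_each_row(resp.read().decode("utf-8"))
```

With a server running locally, `rows_to_csv(extract("localhost", "SELECT id, name FROM users"))` would produce a CSV payload ready to hand to the load step; the `users` table is a placeholder.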

3 Common Use Cases for ClickHouse ETL Tools

Data Warehouse Synchronization

Moving your high-volume analytics data from ClickHouse to data warehouses like Snowflake or BigQuery is a common requirement. This lets you combine ClickHouse's fast ingestion capabilities with your warehouse's broader analytics ecosystem, giving you the best of both worlds.

BI Tool Integration

Your business analysts need fresh data in their BI tools like Tableau or Looker, but these tools might not directly connect to ClickHouse. ETL tools bridge this gap by regularly syncing ClickHouse data to supported databases that your BI tools can easily query.

Machine Learning Pipeline Support

Data scientists often need ClickHouse data in their ML training environments or feature stores. ClickHouse ETL tools can automatically transform and load your ClickHouse data into formats and destinations that ML frameworks can readily consume, keeping your models updated with fresh training data.

Top 10 ClickHouse ETL tools

Here are the top ClickHouse ETL tools, based on their popularity and the selection criteria described later in this article:

1. Airbyte

Airbyte is an open-source data integration platform that moves data from ClickHouse to various destinations with pre-built connectors. It automates the entire ETL process while giving you full control over your data pipelines and the flexibility to customize connectors when needed.

Deployment Options: Both cloud and self-hosted

Platform Type: Open-source with managed cloud option

Key Features:

- Pre-built ClickHouse connector with support for incremental sync

- Visual interface for pipeline configuration and monitoring

- CDC support and flexible data transformation options
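Incremental sync usually works by remembering a cursor, such as an `updated_at` column, and requesting only the rows past it on the next run. The sketch below illustrates that general technique; it is not Airbyte's implementation, and `SyncState`, `build_incremental_query`, `advance_state`, and the `events` table are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SyncState:
    cursor_field: str                 # column that increases as rows are added or updated
    last_value: Optional[str] = None  # None means no sync has run yet


def build_incremental_query(table: str, state: SyncState) -> str:
    """Select only rows newer than the saved cursor (all rows on the first run).

    Values are interpolated for readability only; real code should use
    server-side query parameters to avoid SQL injection.
    """
    query = f"SELECT * FROM {table}"
    if state.last_value is not None:
        # Strict '>' can skip late rows that share the last cursor value;
        # some tools use '>=' plus deduplication instead.
        query += f" WHERE {state.cursor_field} > '{state.last_value}'"
    return query + f" ORDER BY {state.cursor_field}"


def advance_state(state: SyncState, rows: list[dict]) -> SyncState:
    """Save the highest cursor value seen so the next run resumes from there.

    Comparing as strings works for ISO-formatted timestamps such as
    '2024-01-02 00:00:00', which sort lexicographically in time order.
    """
    if rows:
        state.last_value = max(str(row[state.cursor_field]) for row in rows)
    return state
```

Persisting `SyncState` between runs is what lets each sync pick up where the previous one stopped instead of re-reading the whole table.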

2. Fivetran

Fivetran offers a reliable, fully managed solution for extracting data from ClickHouse and loading it into popular destinations. It handles schema changes and pipeline maintenance without requiring engineering resources.

Deployment Options: Cloud-only

Platform Type: Proprietary SaaS

Key Features:

- Modern pipeline architecture

- Automated schema change management

- Built-in data quality monitoring and alerts
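Automated schema change management starts with detecting what changed between two snapshots of a table's columns. Here is a minimal sketch of that detection step, assuming each schema is represented as a column-name-to-type mapping (for example, as read from ClickHouse's `system.columns` table). It is not how Fivetran implements the feature, and deciding how to apply each change downstream is left out.

```python
def diff_schema(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {column: type} snapshots and report what changed."""
    return {
        # Columns present only in the new snapshot.
        "added": sorted(new.keys() - old.keys()),
        # Columns that disappeared since the last snapshot.
        "removed": sorted(old.keys() - new.keys()),
        # Columns present in both snapshots whose type differs.
        "type_changed": sorted(
            col for col in old.keys() & new.keys() if old[col] != new[col]
        ),
    }
```

A pipeline could then add new columns in the destination automatically while flagging removals and type changes for review.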

Here are more insights on the key differences between Airbyte and Fivetran.

3. Stitch Data

Stitch is a cloud-based platform for ETL that was initially built on top of the open-source ETL tool Singer.io. More than 3,000 companies use it.

Stitch was acquired by Talend, which was acquired by the private equity firm Thoma Bravo, and then by Qlik. These successive acquisitions decreased market interest in the Singer.io open-source community, making most of their open-source data connectors obsolete. Only their top 30 connectors continue to be maintained by the open-source community.

What's unique about Stitch?

Given the limited quality and reliability of its connectors, and its poor support, Stitch has positioned itself as a low-cost option.

Here are more insights on the differences between Airbyte and Stitch, and between Fivetran and Stitch.

4. Matillion

Matillion is a self-hosted ELT solution created in 2011. It supports about 100 connectors and provides extract, load, and transform features. Matillion is used by 500+ companies across 40 countries.

What's unique about Matillion?

Being self-hosted means that Matillion ensures your data doesn't leave your infrastructure and stays on premises. However, you might have to pay for several Matillion instances if you're multi-cloud. Also, Matillion has verticalized its offering to cover the whole ELT process and more, so it doesn't integrate with other tools such as dbt and Airflow.

Here are more insights on the differences between Airbyte and Matillion.

5. Airflow

Apache Airflow is an open-source workflow management tool. Airflow is not an ETL solution, but you can use Airflow operators for data integration jobs. Airflow started in 2014 at Airbnb as a solution to manage the company's workflows. It allows you to author, schedule, and monitor workflows as DAGs (directed acyclic graphs) written in Python.

What's unique about Airflow?

With Airflow, you have to build the data pipelines yourself on top of its orchestration layer. Instead, you can use Airbyte for the data pipelines and orchestrate them with Airflow, significantly lowering the burden on your data engineering team.
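Airflow's core idea is that a DAG fixes the order in which tasks run. The sketch below uses Python's standard `graphlib` module, not Airflow's API, to illustrate that idea for a pipeline where an Airbyte sync of ClickHouse data runs first and downstream tasks wait on it. The task names are hypothetical; in Airflow itself you would declare the same dependencies between operators and let the scheduler handle execution.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of tasks that must finish before it can run.
# Task names are hypothetical.
PIPELINE = {
    "airbyte_sync_clickhouse": set(),
    "dbt_transform": {"airbyte_sync_clickhouse"},
    "refresh_dashboards": {"dbt_transform"},
    "export_ml_features": {"dbt_transform"},
}


def run_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


def is_acyclic(dag: dict[str, set[str]]) -> bool:
    """A workflow graph is only a valid DAG if it contains no cycles."""
    try:
        run_order(dag)
        return True
    except CycleError:
        return False
```

Here the sync always runs first and the transformation second; the two final tasks depend only on the transformation, so an orchestrator is free to run them in parallel.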

Here are more insights on the differences between Airbyte and Airflow.

6. Talend

Talend is a data integration platform that offers a comprehensive solution for data integration, data management, data quality, and data governance.

What's unique about Talend?

What sets Talend apart is its open-source architecture with Talend Open Studio, which allows for easy customization and integration with other systems and platforms. However, Talend is not easy to implement and requires a lot of hand-holding, as it is an enterprise product. Talend doesn't offer any self-serve option.

7. Pentaho

Pentaho is ETL and business analytics software that offers a comprehensive platform for data integration, data mining, and business intelligence. It supports ETL rather than ELT, so you don't get ELT's benefits.

What is unique about Pentaho?

What sets Pentaho data integration apart is its original open-source architecture, which allows for easy customization and integration with other systems and platforms. Additionally, Pentaho provides advanced data analytics and reporting tools, including machine learning and predictive analytics capabilities, to help businesses gain insights and make data-driven decisions.

However, Pentaho is also an enterprise product, so it is hard to implement and offers no self-serve option.

8. Rivery

Rivery is another cloud-based ELT solution. Founded in 2018, it offers a verticalized solution with built-in data transformation, orchestration, and activation capabilities. Rivery offers 150+ connectors, far fewer than Airbyte. Its pricing is usage-based, measured in Rivery pricing units that serve as a proxy for platform usage. The number of units consumed depends on the connectors you sync from, which makes costs hard to estimate.

9. HevoData

HevoData is another cloud-based ELT solution. Although it was founded in 2017, it supports only 150 integrations, far fewer than Airbyte. HevoData provides built-in data transformation capabilities, allowing users to apply transformations, mappings, and enrichments to the data before it reaches the destination. Hevo also provides data activation capabilities by syncing data back to APIs.
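Pre-load transformations like these are often modeled as a chain of small record-level functions. The following sketch shows a mapping step (renaming a field) and an enrichment step (adding a derived field) applied before loading. It illustrates the general pattern only, not Hevo's interface, and every name in it is hypothetical.

```python
from typing import Callable

# A transformation takes one record and returns a new one.
Transform = Callable[[dict], dict]


def rename(mapping: dict[str, str]) -> Transform:
    """Mapping step: rename fields, leaving unmapped fields as they are."""
    return lambda record: {mapping.get(k, k): v for k, v in record.items()}


def add_field(name: str, compute: Callable[[dict], object]) -> Transform:
    """Enrichment step: add a field derived from the existing record."""
    return lambda record: {**record, name: compute(record)}


def apply_all(record: dict, steps: list[Transform]) -> dict:
    """Run the steps in order, as a pre-load transformation chain would."""
    for step in steps:
        record = step(record)
    return record
```

For example, `apply_all({"uid": 7, "amount_cents": 1250}, [rename({"uid": "user_id"}), add_field("amount_usd", lambda r: r["amount_cents"] / 100)])` renames `uid` to `user_id` and adds `amount_usd` with the value `12.5`.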

10. Meltano

Meltano is an open-source orchestrator dedicated to data integration, spun off from GitLab and built on top of Singer's taps and targets. Since 2019, the team has been iterating on several approaches. Meltano distinguishes itself with its focus on DataOps and its CLI. It offers an SDK to build connectors, but using it requires engineering skills and takes more time than building with Airbyte's CDK. Meltano doesn't invest in maintaining the connectors and leaves that to the Singer community, so it doesn't provide a support package with an SLA.

None of these ETL tools is specific to ClickHouse, and you might also find dedicated data loaders for ClickHouse. But you will most likely not want to load data only from ClickHouse into your data stores.

Criteria for selecting the right ClickHouse ETL solution for you

As a company, you don't want to use one separate data integration tool for every data source you want to pull data from. So you need to have a clear integration strategy and some well-defined evaluation criteria to choose your ClickHouse ETL solution.

Here is our recommendation for the criteria to consider:

- Connector need coverage: does the ETL tool extract data from all the systems you need, whether cloud apps or REST APIs, relational or NoSQL databases, CSV files, etc.? Does it support the destinations you need to export data to, such as data warehouses, databases, or data lakes?

- Connector extensibility: for all those connectors, are you able to edit them easily in order to add a potentially missing endpoint, or to fix an issue on it if needed?

- Ability to build new connectors: all data integration solutions support a limited number of data sources, so check how easily you can build a connector for one that isn't supported.

- Support of change data capture: this is especially important for your databases.

- Data integration features and automations: including schema change migration, re-syncing of historical data when needed, and scheduling.

- Efficiency: how easy to use is the interface (including the graphical interface, API, and CLI if you need them)?

- Integration with the stack: do they integrate well with the other tools you might need, such as dbt, Airflow, Dagster, or Prefect?

- Data transformation: do they let you easily transform data, including complex transformations, possibly through a seamless integration with dbt?

- Level of support and high availability: how responsive and helpful is the support, and what is the average percentage of successful syncs for the connectors you need? The whole point of using ETL solutions is to give time back to your data team.

- Data reliability and scalability: do recognizable brands use them? That also indicates how scalable and reliable they might be for high-volume data replication.

- Security and trust: there is nothing worse than a data leak for your company. The fines can be astronomical, and the broken trust with your customers can have an even bigger impact. So checking the tools' certifications (SOC 2, ISO) is paramount. If you might expand to Europe, you will also need them to be GDPR-compliant.
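One way to apply these criteria consistently is a weighted scoring matrix: weight each criterion by how much it matters to you, rate each candidate tool, and compare weighted averages. The sketch below assumes a 1-5 rating scale; the criteria keys, weights, tool names, and ratings are placeholders to replace with your own evaluation.

```python
def weighted_score(weights: dict[str, float], scores: dict[str, float]) -> float:
    """Weighted average of 1-5 ratings; criteria left unrated count as 0."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one criterion needs a positive weight")
    return sum(w * scores.get(c, 0.0) for c, w in weights.items()) / total_weight


def rank_tools(weights: dict[str, float],
               ratings: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (tool, score) pairs sorted from best to worst."""
    return sorted(
        ((tool, weighted_score(weights, scores)) for tool, scores in ratings.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
```

With weights of 3 for connector coverage, 2 for CDC, and 5 for security, a tool rated 4, 2, and 5 scores (12 + 4 + 25) / 10 = 4.1, narrowly beating one rated 5, 5, and 3, which scores 4.0; the heavy security weight decides the outcome.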

How to start pulling data in minutes from ClickHouse

If you decide to test Airbyte, you can start analyzing your ClickHouse data within minutes in three easy steps:

Step 1: Set up ClickHouse as a source connector

1. First, navigate to the Airbyte dashboard and click on the "Sources" tab on the left-hand side of the screen.

2. Next, click on the "New source" button in the top right corner of the screen.

3. Select "ClickHouse" from the list of available sources.

4. Enter the necessary information for your ClickHouse database, including the host, port, username, and password.

5. Enter the name of the database you want to replicate. You'll choose the individual tables (streams) when you configure the connection in Step 3.

6. Configure any additional settings, such as the batch size or maximum number of retries.

7. Test the connection to ensure that everything is working properly.

8. Once you have successfully connected to your ClickHouse database, you can use it as the source for connections to any of your destinations.
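If the connection test in step 7 fails, a quick way to rule out network problems is to check whether the server answers at all from the machine running Airbyte. This sketch assumes your ClickHouse server exposes its HTTP interface (default port 8123, or 8443 for HTTPS) and uses the `/ping` endpoint, which returns `Ok.` on a healthy server. It checks reachability only, not your credentials or the connector's own test; the function names are hypothetical.

```python
import http.client
from urllib.request import urlopen


def ping_url(host: str, port: int = 8123, secure: bool = False) -> str:
    """URL of ClickHouse's /ping health-check endpoint."""
    scheme = "https" if secure else "http"
    return f"{scheme}://{host}:{port}/ping"


def clickhouse_is_reachable(host: str, port: int = 8123, secure: bool = False,
                            timeout: float = 5.0) -> bool:
    """Return True if the server answers /ping with 'Ok.'.

    Checks network reachability only; it does not validate credentials.
    """
    try:
        with urlopen(ping_url(host, port, secure), timeout=timeout) as resp:
            return resp.read().decode("utf-8").strip() == "Ok."
    except (OSError, http.client.HTTPException):
        # URLError (refused connection, DNS failure, HTTP errors) and
        # timeouts are OSError subclasses; malformed responses raise HTTPException.
        return False
```

For example, `clickhouse_is_reachable("ch.internal.example", 8443, secure=True)` returning `False` points to a firewall, DNS, or port problem rather than a credentials issue; the host name is a placeholder.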

Step 2: Set up a destination for your extracted ClickHouse data

Choose one of the 50+ destinations where you want to load data from your ClickHouse source. This can be a cloud data warehouse, data lake, database, cloud storage, or any other supported Airbyte destination.

Step 3: Configure the ClickHouse data pipeline in Airbyte

Once you've set up both the source and destination, you need to configure the connection. This includes selecting the data you want to extract (streams and columns, all selected by default), the sync frequency, and where in the destination you want that data to be loaded, among other options.

And that's it! The process is the same whether you use Airbyte Open Source, which you can deploy within 5 minutes, or Airbyte Cloud, which you can try free for 14 days.
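The options in this step can be pictured as a small configuration object. The sketch below models stream and column selection (with everything selected by default, as described above) plus a sync frequency and a destination namespace. The class and field names, the 24-hour default frequency, and the `clickhouse_raw` namespace are hypothetical and do not mirror Airbyte's actual configuration schema.

```python
from dataclasses import dataclass, field
from datetime import timedelta
from typing import Optional


@dataclass
class StreamSelection:
    name: str                            # a ClickHouse table exposed as a stream
    columns: Optional[list[str]] = None  # None = all columns (the default)


@dataclass
class ConnectionConfig:
    streams: list[StreamSelection] = field(default_factory=list)
    frequency: timedelta = timedelta(hours=24)     # hypothetical default
    destination_namespace: str = "clickhouse_raw"  # hypothetical landing schema


def project(record: dict, selection: StreamSelection) -> dict:
    """Keep only the selected columns of a record before loading it."""
    if selection.columns is None:
        return dict(record)
    return {col: record[col] for col in selection.columns if col in record}
```

Deselecting columns this way means unneeded or sensitive fields never leave ClickHouse, which also keeps the destination smaller.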

Conclusion

This article outlined the criteria that you should consider when choosing a data integration solution for ClickHouse ETL/ELT. Based on your requirements, you can select any of the top 10 ETL/ELT tools listed above. We hope this article helped you understand why you should consider ClickHouse ETL and how best to do it.

What should you do next?

We hope you enjoyed reading this article. Here are 3 ways we can help you in your data journey: