7 Best Data Tokenization Tools Worth Considering

As cyberattacks become more frequent, protecting sensitive data such as financial statements, Social Security numbers, and medical records has become a top priority. Organizations across all sectors have adopted a range of security measures, including data tokenization tools, to safeguard such information from unauthorized access.

This article explains how data tokenization can limit the damage if your organization suffers a data breach. It also lists seven tokenization solutions you can explore to strengthen your security management while complying with industry regulations.

What Is Data Tokenization?

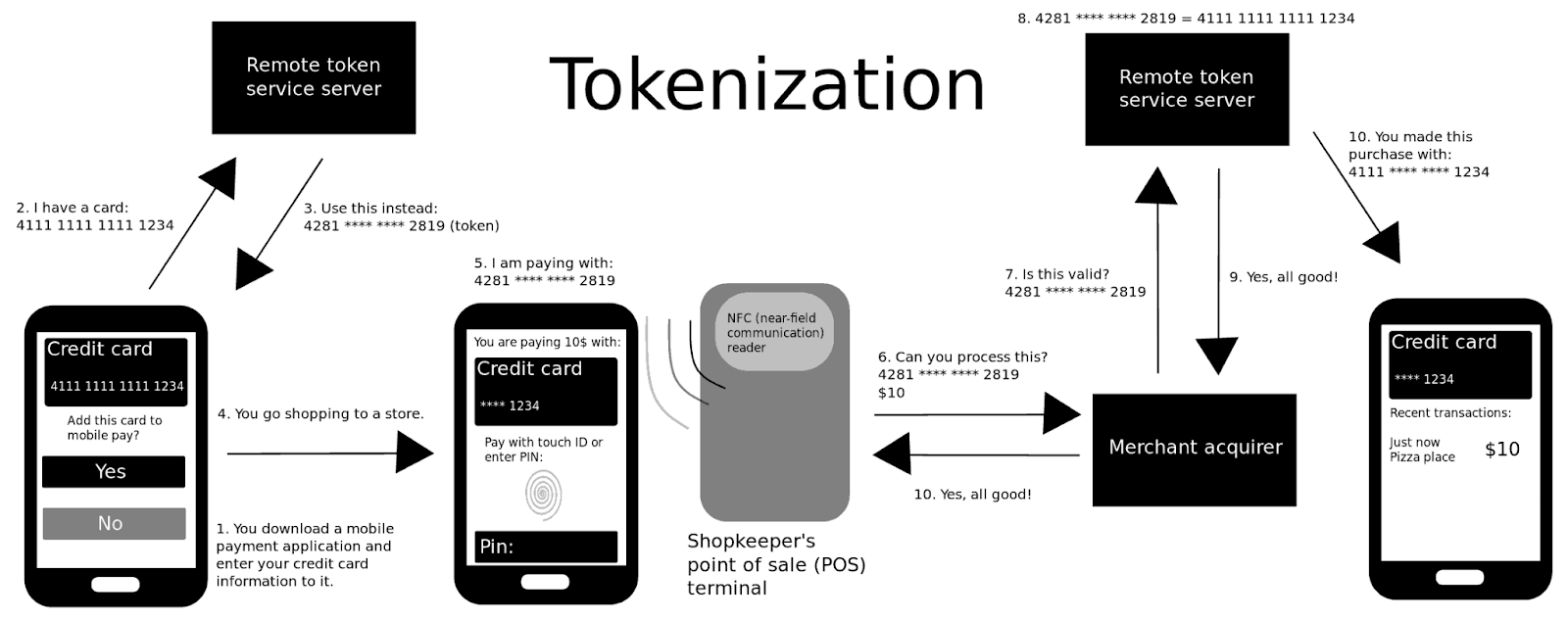

Data tokenization is a security technique that replaces sensitive data, such as Personally Identifiable Information (PII) or payment card numbers, with randomly generated alphanumeric values called tokens. Unlike encryption, which mathematically transforms data so that anyone holding the key can restore it, a randomly generated token has no mathematical relationship to the original value, so the original cannot be reverse-engineered from the token alone.

Because tokens have no intrinsic value outside the tokenization system, they are useless to hackers, which reduces the risk of exposing confidential data. In vault-based tokenization, the process has two steps: generating a token to represent each data element, and storing the original value, mapped to its token, in a secure vault protected by encryption.

For example, in a card payment, tokenization begins when the user enters their credit card details. The system generates a token that is used in place of the card number, and when the transaction is processed, the secure vault maps the token back to the user's actual card information to complete it.
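
The vault-based flow described above can be sketched in a few lines of Python. This is a minimal, in-memory illustration that assumes a hypothetical TokenVault class and a "tok_" token prefix; a real vault would encrypt the stored values, persist them durably, and restrict who may call detokenize.

```python
import secrets
import string

# Hypothetical token format: "tok_" followed by 16 random letters and digits.
TOKEN_ALPHABET = string.ascii_letters + string.digits


class TokenVault:
    """Toy in-memory vault. Real vaults encrypt stored values, persist them,
    and enforce access control on detokenization."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Return the existing token so one card number always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Draw random tokens until an unused one appears; the token is not derived from the value.
        while True:
            token = "tok_" + "".join(secrets.choice(TOKEN_ALPHABET) for _ in range(16))
            if token not in self._token_to_value:
                break
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with access to the vault can map a token back to the original value.
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = TokenVault()
    card_token = vault.tokenize("4111111111111111")  # well-known test card number
    print("Stored by the merchant:", card_token)
    print("Recovered by the payment service:", vault.detokenize(card_token))
```

Because the token is drawn at random rather than computed from the card number, stealing the merchant's database of tokens reveals nothing without also compromising the vault.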

7 Best Data Tokenization Tools

In this section, you will find seven data tokenization solutions to explore and incorporate into your data infrastructure. Each tool has distinct features and capabilities that can streamline your data security implementation.

#1 CipherTrust Tokenization

CipherTrust Tokenization is a comprehensive solution for protecting PII and payment card data. It helps your organization meet regulatory requirements such as the Payment Card Industry Data Security Standard (PCI DSS) and offers both vaulted and vaultless tokenization, which makes it adaptable to various IT environments, including data centers, cloud, and big data platforms.

Key Features of CipherTrust Tokenization

- Format-Preserving Tokenization: Vaultless offerings such as CipherTrust RESTful Data Protection (CRDP) and CipherTrust Vaultless Tokenization (CT-VL) provide format-preserving tokenization through REST APIs, so tokens keep the length and character set of the original data.

- Dynamic and Static Data Masking: CipherTrust offers dynamic masking, which hides data based on the requesting user's role, and static masking, which permanently redacts parts of the data, giving you granular control over data visibility.

- Centralized Management: CipherTrust Manager, a central platform, lets you manage tokens and access policies in one place, simplifying administration and improving security. It also supports multi-tenancy through CT-VL.
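
To make the difference between the two masking modes concrete, here is a minimal sketch. It is not CipherTrust's API; the mask_keep_last4 helper, the fraud_analyst role, and the record layout are hypothetical. Dynamic masking leaves the stored value intact and decides what to show at read time, while static masking produces a copy in which the original value no longer exists.

```python
def mask_keep_last4(value: str) -> str:
    """Replace every digit except the last four with '*', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in value)
    seen = 0
    masked = []
    for ch in value:
        if ch.isdigit():
            seen += 1
            masked.append(ch if seen > total_digits - 4 else "*")
        else:
            masked.append(ch)
    return "".join(masked)


# Hypothetical role allowed to see full values under dynamic masking.
UNMASKED_ROLES = {"fraud_analyst"}


def read_ssn(stored_ssn: str, role: str) -> str:
    """Dynamic masking: the stored value is untouched; the view depends on the caller's role."""
    return stored_ssn if role in UNMASKED_ROLES else mask_keep_last4(stored_ssn)


def static_masked_copy(records: list[dict]) -> list[dict]:
    """Static masking: build a copy (e.g., for a test environment) in which the real SSNs are gone."""
    return [{**record, "ssn": mask_keep_last4(record["ssn"])} for record in records]


if __name__ == "__main__":
    stored = "123-45-6789"
    print(read_ssn(stored, "fraud_analyst"))  # full value
    print(read_ssn(stored, "support_agent"))  # masked view
    print(static_masked_copy([{"name": "Ana", "ssn": stored}]))
```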

#2 Very Good Security (VGS)

Very Good Security (VGS) is a SaaS platform that enables you to interact with protected data without taking on the associated risks or compliance burdens. You can tokenize most data elements, including payment data, ID verification images, Protected Health Information (PHI), and PII. VGS acts as a modern security layer and provides a tokenization API that reduces your liability and speeds up compliance with privacy frameworks.

Key Features of VGS

- Data De-Identification: You have the flexibility to choose when and how to access sensitive data through customizable data redaction and reveal options.

- Processor-Neutral Vault: VGS functions independently from payment processors, letting you integrate it with multiple payment service providers without vendor lock-in while maintaining control over the data flow.

- VGS Proxies and Routes: VGS Proxies can handle several data transmission protocols, such as FTPS, SMTP, HTTPS, and SFTP. VGS Routes, in turn, let you tokenize sensitive values within any binary or text-based payload, including PDFs, JSON, HTML, XML, and compressed files.
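
The redact-and-reveal pattern behind a tokenizing proxy can be sketched as two functions: one that swaps sensitive JSON fields for aliases on the way in, and one that swaps them back on the way out to a trusted third party. This is an illustrative assumption, not VGS's implementation or configuration format; VGS configures routes on its own platform, and the field names and "tok_" alias format below are hypothetical.

```python
import json
import secrets

# Hypothetical fields to protect; a real proxy would be configured on the vendor's platform.
SENSITIVE_FIELDS = ("card_number", "email")

# Stand-in for the vendor-side vault that maps aliases to real values.
_alias_store: dict[str, str] = {}


def redact_payload(raw_json: str) -> str:
    """Inbound route: replace sensitive fields with aliases before the request reaches your servers."""
    payload = json.loads(raw_json)
    for field in SENSITIVE_FIELDS:
        if isinstance(payload.get(field), str):
            alias = "tok_" + secrets.token_hex(8)
            _alias_store[alias] = payload[field]
            payload[field] = alias
    return json.dumps(payload)


def reveal_payload(raw_json: str) -> str:
    """Outbound route: restore real values on the way to a trusted third party, such as a processor."""
    payload = json.loads(raw_json)
    for field in SENSITIVE_FIELDS:
        value = payload.get(field)
        if isinstance(value, str) and value in _alias_store:
            payload[field] = _alias_store[value]
    return json.dumps(payload)


if __name__ == "__main__":
    incoming = json.dumps({"card_number": "4111111111111111", "amount": 25})
    stored_by_you = redact_payload(incoming)
    print(stored_by_you)                  # card_number is now an alias
    print(reveal_payload(stored_by_you))  # alias swapped back for the processor
```

The design point is that your own servers only ever see the redacted payload; the real values live with the proxy and reappear only on the outbound leg.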

#3 Enigma Vault

Enigma Vault is an ISO 27001-certified, PCI Level 1-compliant platform that simplifies field-level tokenization and AES encryption of sensitive data. It can spare you expensive PCI audits by letting you complete a simpler Self-Assessment Questionnaire (SAQ). The platform integrates with most payment providers, such as Clover, Worldpay, and First Data.

Key Features of Enigma Vault

- Speed and Scalability: According to Enigma Vault, the platform uses modern technologies and methods to perform millions of search operations within milliseconds.

- Secure Data Storage: Enigma Vault securely stores files ranging in size from kilobytes to gigabytes, safeguarding them against unauthorized access.

- Multi-Factor Authentication (MFA): Accessing the developer portal with client credentials requires time-based one-time password (TOTP) MFA through apps such as Cisco Duo or Google Authenticator.
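
TOTP codes like the ones these apps display are computed with the algorithm in RFC 6238: an HMAC of the current 30-second time step, truncated to a short decimal code. The sketch below implements that standard algorithm with HMAC-SHA1, the common default. It says nothing about how Enigma Vault itself verifies codes, and the verify helper with its one-step drift window is an assumption.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, unix_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1."""
    if unix_time is None:
        unix_time = time.time()
    counter = int(unix_time // step)  # number of whole time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: Optional[float] = None,
           step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    if unix_time is None:
        unix_time = time.time()
    return any(
        hmac.compare_digest(totp(secret, unix_time + k * step, step, digits), submitted)
        for k in range(-window, window + 1)
    )


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # RFC 6238 test key; never use it for real accounts
    print(totp(demo_secret))  # current 6-digit code
```

The demo secret is the RFC's own test key, so the output can be checked against its published vectors: for example, totp(b"12345678901234567890", 59, digits=8) returns "94287082".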

#4 ShieldConex

Bluefin, a data tokenization vendor, offers ShieldConex, a solution for managing sensitive data, including Primary Account Numbers (PANs), PII, and PHI. With ShieldConex, you can comply with requirements such as PCI DSS and standardize the payment experience across mobile, in-store, online, and other channels.

Key Features of ShieldConex

- Vaultless Tokenization: ShieldConex lets you tokenize data elements, including payment and personal information, without a data vault while preserving their original format.

- iFrame Data Entry: ShieldConex provides a secure iFrame (an HTML element that embeds a separately hosted page inside your own) for capturing and tokenizing sensitive data as it is entered. Because data is protected from the point of input, this simplifies the process for your organization.

- API Integration: You can perform tokenization, detokenization, health checks, and validation via ShieldConex API for smoother implementation.
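
Vaultless, format-preserving tokenization replaces the vault lookup with a keyed, reversible transformation whose output has the same length and character set as the input. The sketch below illustrates the idea with a toy 10-round Feistel network over decimal digits, keyed with HMAC-SHA256, and keeps the last four card digits in the clear. It is an assumption for illustration only, not ShieldConex's (or any vendor's) algorithm, and it is not a vetted cipher; production systems use standardized format-preserving encryption such as FF1 from NIST SP 800-38G.

```python
import hashlib
import hmac

ROUNDS = 10  # toy choice; this is NOT an implementation of FF1


def _round_value(key: bytes, round_index: int, half: str) -> int:
    """Pseudorandom round function: HMAC-SHA256 over the round number and one half."""
    mac = hmac.new(key, bytes([round_index]) + half.encode(), hashlib.sha256).digest()
    return int.from_bytes(mac, "big")


def fpe_encrypt(key: bytes, digits: str) -> str:
    """Map a digit string to another digit string of the same length (alternating Feistel)."""
    if len(digits) < 2 or not all(ch in "0123456789" for ch in digits):
        raise ValueError("expected at least two decimal digits")
    u = len(digits) // 2
    v = len(digits) - u
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v
        c = (int(a) + _round_value(key, i, b)) % 10 ** m
        a, b = b, str(c).zfill(m)
    return a + b


def fpe_decrypt(key: bytes, token: str) -> str:
    """Invert fpe_encrypt by running the rounds backwards and subtracting."""
    u = len(token) // 2
    v = len(token) - u
    a, b = token[:u], token[u:]
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        c = (int(b) - _round_value(key, i, a)) % 10 ** m
        a, b = str(c).zfill(m), a
    return a + b


def tokenize_pan(key: bytes, pan: str) -> str:
    # Keep the last four digits visible, a common convention for receipts and support screens.
    return fpe_encrypt(key, pan[:-4]) + pan[-4:]


def detokenize_pan(key: bytes, token: str) -> str:
    return fpe_decrypt(key, token[:-4]) + token[-4:]


if __name__ == "__main__":
    demo_key = b"demo-key-for-illustration-only"
    token = tokenize_pan(demo_key, "4111111111111111")
    print(token)                            # 16 digits ending in 1111
    print(detokenize_pan(demo_key, token))  # 4111111111111111
```

Because the token still looks like a 16-digit card number, existing database columns and validation rules keep working; the trade-off is that security now rests entirely on protecting the key.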

#5 Rixon

Rixon is a cloud-native, vaultless data tokenization solution designed to maximize security and protect sensitive data against breaches and cyberattacks. Its patented keyless ciphering process is designed to minimize potential threats, letting your organization focus on business development.

Key Features of Rixon

- AI and ML Monitoring Systems: Rixon continually enhances its platform to take advantage of advances in fields such as AI and quantum computing. You can use its AI and ML monitoring systems to get real-time insights and detect anomalies.

- Continuous Logging: Rixon continuously logs data access and actions, giving you full transparency and traceability that help you maintain data integrity.

- Advanced Compliance: The tool adheres to SOC 2 Type II, PCI DSS, GDPR, ADPPA, and NIST SP 800-171, reflecting its commitment to industry-specific regulations.
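
One common way to make an access log tamper-evident is to chain entries by hash, so that altering or removing any entry other than the newest breaks verification. Rixon's description does not say how its logging works; this sketch illustrates the general technique only, and the AuditLog class and its fields are hypothetical.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, resource: str,
               timestamp: Optional[float] = None) -> dict:
        body = {
            "actor": actor,
            "action": action,
            "resource": resource,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _entry_hash(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _entry_hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("alice", "detokenize", "customer/42")
    log.append("bob", "read", "customer/7")
    print(log.verify())  # True until someone edits an entry
```

Storing the latest hash somewhere outside the log would also catch deletion of the newest entry, which the chain alone cannot detect.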

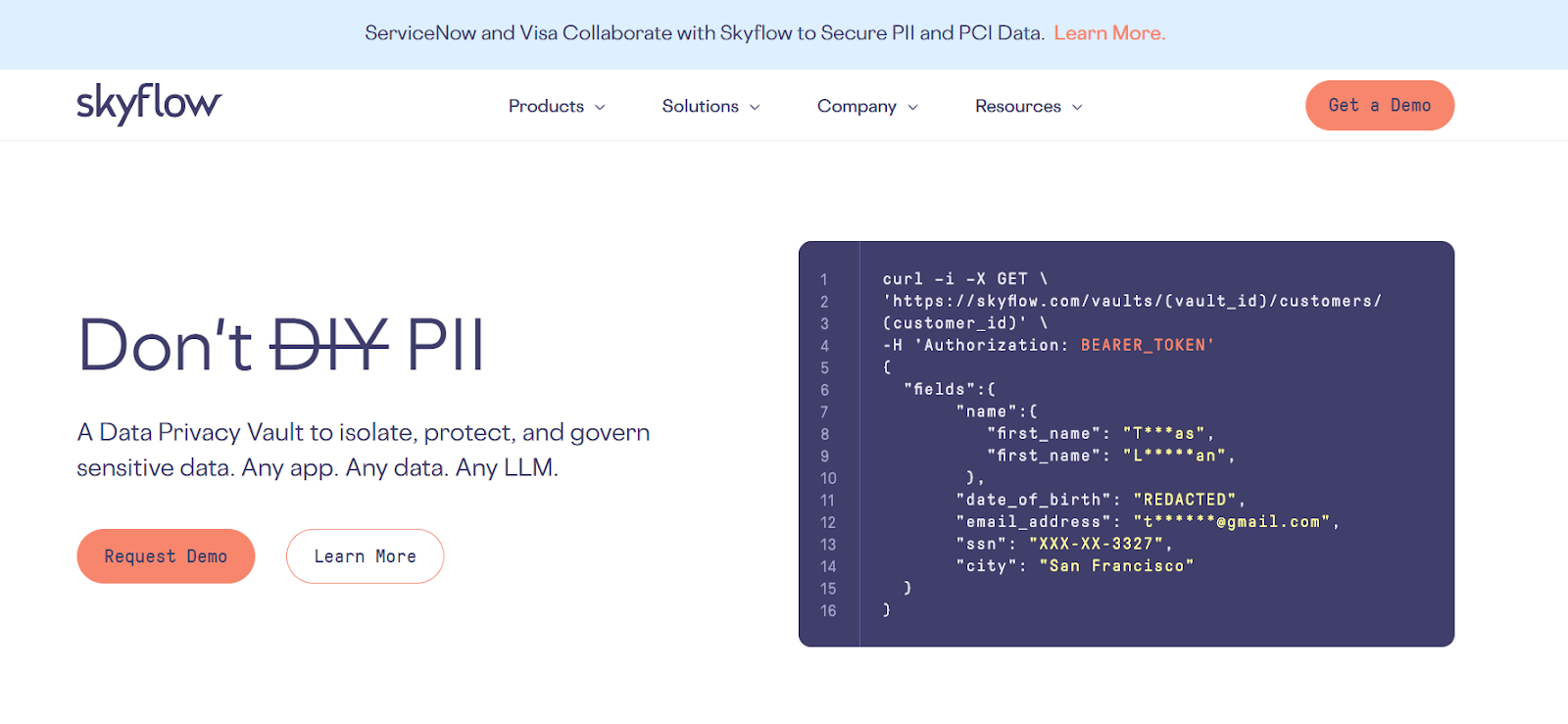

#6 Skyflow

Skyflow is data tokenization software that lets you run workflows, logic, and analytics on encrypted data while keeping it protected with advanced encryption. With easy-to-integrate REST and SQL APIs, auditable logs, and data residency controls, Skyflow simplifies sensitive data management, even in virtual private clouds.

Key Features of Skyflow

- De-Identification and Re-Identification of Sensitive Data: Skyflow can automatically detect, redact, and re-identify sensitive data across workflows, including data collection, retrieval-augmented generation (RAG), LLM training, and inference.

- Encrypted Data Analytics: This feature enables you to keep your data confidential without compromising its utility for analytics.

- LLM Privacy Vault: Skyflow's LLM Privacy Vault lets you tokenize sensitive data before it reaches an LLM. It includes a sensitive data dictionary in which you define terms that should never be fed to the model, preventing it from accessing unauthorized information.
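
The dictionary-plus-detection idea can be sketched as a function that replaces listed terms and recognizable PII with placeholders before a prompt is sent to an LLM, plus a function that restores them in the response. This is not Skyflow's API; SENSITIVE_TERMS, the two regular expressions, and the placeholder format are hypothetical, and real detectors cover far more PII types.

```python
import re

# Hypothetical sensitive-data dictionary: terms that must never reach the model.
SENSITIVE_TERMS = ["Project Falcon", "Acme Pharma"]

# Illustrative detectors for two PII types; real detectors cover many more.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def deidentify(text: str) -> tuple[str, dict[str, str]]:
    """Replace dictionary terms and detected PII with numbered placeholders."""
    mapping: dict[str, str] = {}

    def substitute(kind: str, value: str) -> str:
        # Reuse the placeholder if this exact value was already replaced.
        for placeholder, original in mapping.items():
            if original == value:
                return placeholder
        placeholder = f"[{kind}_{len(mapping) + 1}]"
        mapping[placeholder] = value
        return placeholder

    for term in SENSITIVE_TERMS:
        text = re.sub(re.escape(term), lambda m: substitute("TERM", m.group(0)), text)
    for kind, pattern in PATTERNS.items():
        text = pattern.sub(lambda m, kind=kind: substitute(kind, m.group(0)), text)
    return text, mapping


def reidentify(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in the model's response."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com about Project Falcon, SSN 123-45-6789."
    safe_prompt, mapping = deidentify(prompt)
    print(safe_prompt)                        # what the LLM would see
    print(reidentify(safe_prompt, mapping))   # original text restored
```

For the sample prompt, the model would receive "Email [EMAIL_2] about [TERM_1], SSN [SSN_3]." while the mapping needed to reverse it stays on your side.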

Airbyte: A Data Movement Platform Supporting Data Privacy

Airbyte is an AI-powered data movement platform that allows you to make unstructured and semi-structured data accessible to vector databases and run GenAI applications. It is crucial for building context pipelines for AI workflows and LLM frameworks.

The platform offers a library of over 550 pre-built connectors to extract required data from sources, such as APIs, databases, cloud services, and files. Additionally, it provides Connector Development Kits (CDKs) and Connector Builder to create custom connectors. The latter has an AI assistant feature that automatically scans the selected API documentation and auto-fills most configuration fields, speeding up the development process.

To further support context building for your AI use cases, Airbyte is developing a “Context Collection Playground” to help you prepare data in near real time. It covers steps such as filtering, transformation, enrichment, document creation, embedding calculation, and evaluation, so that your LLMs receive rich data in the specified format.

While loading data into these AI/ML/LLM models, you might encounter sensitive or personal information that must stay protected. Airbyte helps here by supporting the EtLT approach, in which a light transformation between extraction and loading lets you pseudonymize or remove sensitive fields. This step can de-identify or mask your PII, effectively acting as an indirect form of tokenization.
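
A light transformation of the kind EtLT allows might look like the function below, which drops one field and replaces others with keyed-hash pseudonyms before records are loaded. This is a standalone illustration, not Airbyte's API or configuration; the field names and the PSEUDONYM_KEY secret are hypothetical. Using HMAC rather than a plain hash means the same value always maps to the same pseudonym, so joins and counts still work, while someone without the key cannot rebuild pseudonyms by hashing guessed values.

```python
import hashlib
import hmac

# Hypothetical secret; in practice, load it from a secrets manager rather than source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

DROP_FIELDS = {"ssn"}                     # removed entirely before loading
PSEUDONYMIZE_FIELDS = {"email", "phone"}  # replaced with stable pseudonyms


def pseudonymize(value: str) -> str:
    """Keyed hash: deterministic for joins, one-way, and not rebuildable without the key."""
    return hmac.new(PSEUDONYM_KEY, value.encode(), hashlib.sha256).hexdigest()


def transform_record(record: dict) -> dict:
    """Light transformation applied between extraction and loading."""
    cleaned = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue
        if field in PSEUDONYMIZE_FIELDS and value is not None:
            cleaned[field] = pseudonymize(str(value))
        else:
            cleaned[field] = value
    return cleaned


if __name__ == "__main__":
    extracted = {"id": 7, "email": "jane@example.com", "ssn": "123-45-6789", "plan": "pro"}
    print(transform_record(extracted))  # no ssn; email replaced by a 64-character hex pseudonym
```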

Airbyte further offers robust security features such as encryption at rest and in transit, logging, SSO/SAML authentication, and more to safeguard your data. It also complies with regulations and standards such as HIPAA, GDPR, SOC 2, and ISO 27001. The company has also announced the general availability of the Airbyte Self-Managed Enterprise edition, which provides PII masking and role-based access features for added security.

7 Ways Data Tokenization Helps

Tokenization, including emerging approaches such as AI tokenization, can help you reduce the risk of financial losses and penalties associated with violating privacy laws. Here are seven ways it can benefit your organization:

- Reduced Risk of Data Breaches: Replacing sensitive data with tokens minimizes the impact of a breach as attackers gain access to meaningless substitutes rather than the actual data.

- Secure Data Sharing: Tokenizing crucial details before sharing data with third parties reduces the risk of information leaks, while still letting you perform tasks such as auditing and analysis on the shared data.

- Secure Cloud Migration: If your company moves data assets from on-premises systems to the cloud, tokenizing them before the migration reduces the risk of exposure during transit and storage.

- Simplified Compliance: By protecting confidential data, tokenization can help your firm meet regulatory requirements such as PCI DSS, GDPR, CCPA, and HIPAA.

- Enhanced Customer Trust: Tokenization helps you protect your customers' data. This commitment reinforces their trust in your brand and improves your organization’s reputation.

- Multi-Channel Systems: Whether your data is accessed via mobile apps, websites, or in-store systems, tokenization provides consistent security regardless of the channel.

- Cost-Effective Security: Because tokens replace sensitive values in downstream systems, fewer systems need to store and protect the real data, which can reduce encryption overhead and operational costs.

Wrapping It Up

Data tokenization tools are essential for safeguarding sensitive information from cyber threats and hackers. By replacing confidential data with tokens, you can reduce the risk of exposure during breaches, enable secure data sharing, and simplify compliance with industry regulations.

After evaluating your organization’s data security and privacy requirements, you can narrow your options to the tools whose features match your top priorities. Whether you want to secure transactions, manage PII, or support AI workflows, data tokenization solutions can give your organization scalable, cost-efficient, long-term protection.
