How to Build an AI Data Pipeline Using Airbyte: A Comprehensive Guide

✨ AI Generated Summary

AI data pipelines are essential for modern organizations to manage diverse, real-time data sources and support continuous machine learning workflows, enabling applications like fraud detection and personalization. Airbyte simplifies building these pipelines with:

- 600+ connectors for seamless data integration and AI-native processing features like embedding generation.

- Developer-friendly tools, enterprise-grade security, and flexible deployment options.

- Support for real-time streaming, monitoring, and governance to ensure data quality, compliance, and scalable AI operations.

Building robust AI data pipelines has become critical for organizations seeking to harness artificial intelligence for competitive advantage, yet many teams struggle with the complexity of integrating diverse data sources while maintaining quality and security standards. Modern AI applications demand sophisticated data infrastructure that can handle real-time streaming, ensure data governance, and support continuous model training and deployment.

This comprehensive guide demonstrates how to build production-ready AI data pipelines using Airbyte, exploring both fundamental concepts and advanced implementation strategies that enable organizations to transform raw data into intelligent, actionable insights.

What Is an AI Data Pipeline and Why Is It Essential for Modern Organizations?

An AI data pipeline is an automated system that orchestrates the flow of data from diverse sources through processing, transformation, and storage stages designed specifically to support artificial intelligence and machine learning applications. Traditional data pipelines are usually built for business intelligence and analytics. AI data pipelines must also accommodate real-time processing, vector storage for embeddings, feature engineering for ML models, and continuous model training and deployment workflows.

AI data pipelines serve as the foundation for numerous business-critical applications that require immediate insights and rapid response capabilities. These include fraud detection, personalization engines, predictive maintenance, and automated decision-making systems. Their complexity reflects the sophisticated requirements of modern AI applications, which often combine multiple data modalities and require continuous learning from new information.

The distinction between traditional and AI-focused pipelines becomes evident in their architectural requirements: traditional pipelines optimize for batch processing and structured data warehousing, while AI pipelines must handle unstructured data, support real-time inference, and maintain feature stores for machine learning models.

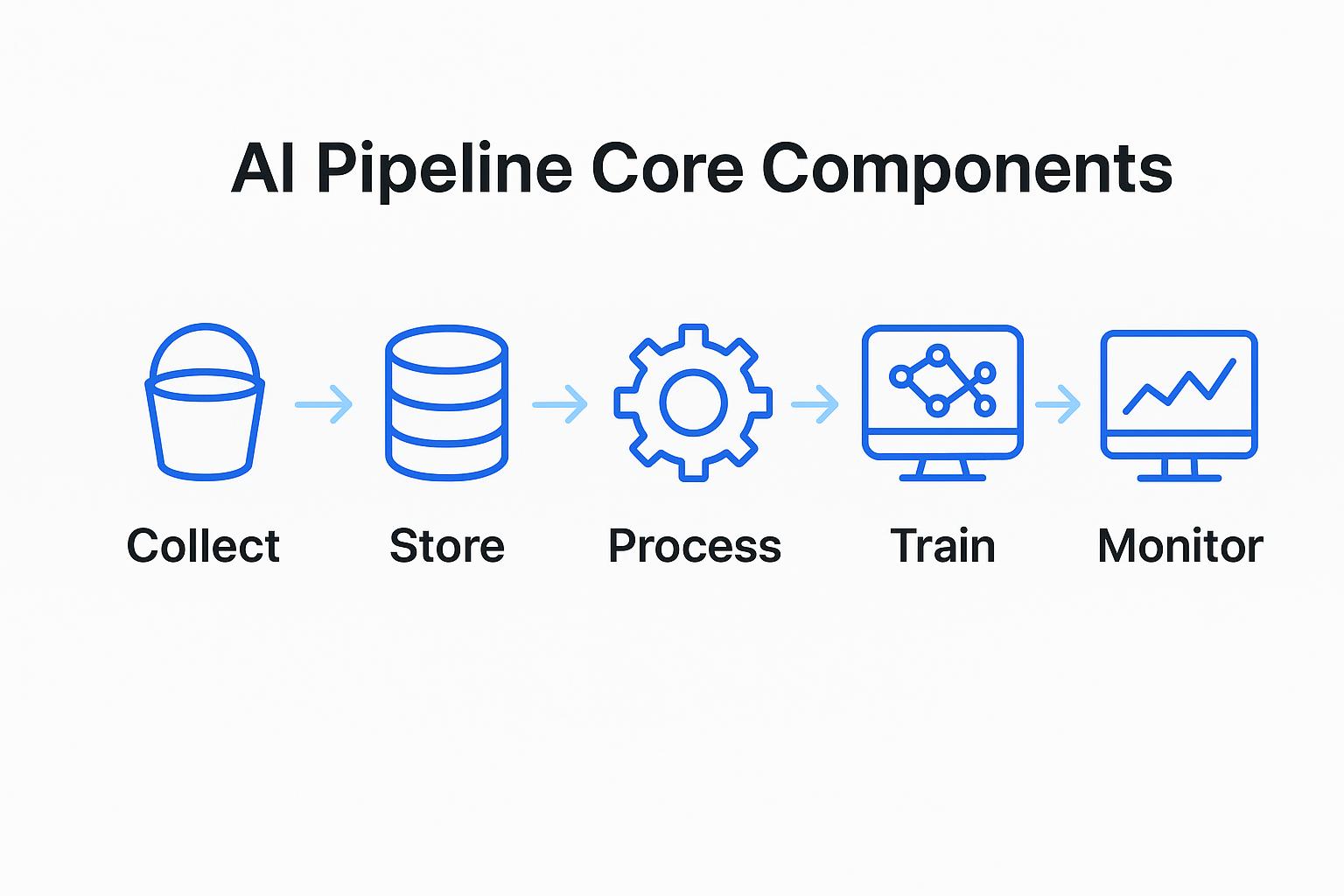

What Are the Core Components That Make AI Data Pipelines Effective?

Effective AI data pipelines integrate multiple specialized components that work together to support machine learning workflows and intelligent applications. Understanding these components helps organizations design systems that can scale with their AI initiatives while maintaining reliability and performance.

1. Data Collection and Ingestion Systems

Modern AI applications require data from diverse sources including databases, APIs, streaming platforms, and unstructured content repositories. The ingestion layer must handle different data formats, velocities, and volumes while maintaining data lineage and quality controls. This component often determines the overall pipeline performance and reliability.

2. Data Storage and Management Infrastructure

AI workloads demand specialized storage solutions that go beyond traditional data warehouses. Vector databases store embeddings for similarity search, feature stores manage ML features, and data lakes accommodate unstructured content. The storage layer must balance performance, cost, and accessibility for different types of AI workloads.
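
To make the idea of similarity search concrete, here is a minimal sketch of what a vector database does conceptually: rank stored embeddings by cosine similarity to a query vector. The three-dimensional vectors and document names are made up for illustration, and a production vector database would use approximate nearest-neighbor indexes rather than this brute-force scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings" (hypothetical data); real models emit far more dimensions.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "password-reset": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.2, 0.0], index, k=2)
```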

3. Data Processing and Transformation Engines

Processing components transform raw data into formats suitable for machine learning models. This includes feature engineering, data cleaning, normalization, and embedding generation. The transformation layer must handle both batch and streaming workloads while maintaining data quality and consistency.
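
As a small illustration of the cleaning and normalization steps mentioned above, the sketch below drops missing values from a numeric feature and standardizes what remains. The function name is hypothetical, and dropping missing values is only one possible policy; imputation may suit some features better.

```python
from statistics import mean, pstdev


def clean_and_standardize(values):
    """Drop missing entries, then rescale to zero mean and unit variance."""
    present = [float(v) for v in values if v is not None]
    if not present:
        return []
    mu = mean(present)
    sigma = pstdev(present)
    if sigma == 0:
        # A constant feature carries no signal; map every value to 0.
        return [0.0 for _ in present]
    return [(v - mu) / sigma for v in present]
```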

4. Model Training and Deployment Infrastructure

AI pipelines include infrastructure for training machine learning models and deploying them to production environments. This component manages model versioning, A/B testing, and continuous integration for ML workflows. It ensures that models can be updated and retrained as new data becomes available.

5. Monitoring and Observability Systems

Comprehensive monitoring tracks data quality, model performance, and system health across the entire pipeline. This includes data drift detection, model accuracy monitoring, and infrastructure performance metrics. Observability systems enable proactive identification and resolution of issues before they impact business operations.
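
One common way to quantify data drift for a numeric feature is the population stability index (PSI). The sketch below is a simplified version that assumes equal-width bins derived from the baseline sample; the function name and the small floor used for empty bins are choices made for this example. Values above roughly 0.25 are often treated as significant drift, though that threshold is a rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a new sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 when the baseline is constant.
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp out-of-range values into the first or last bin.
            i = min(max(int((x - lo) / width), 0), bins - 1)
            counts[i] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```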

What 5 Key Features Make Airbyte Ideal for Building AI Data Pipelines?

Airbyte provides specialized capabilities that address the unique challenges of AI data pipeline development, from initial data ingestion through advanced AI application deployment. These features eliminate common bottlenecks and enable organizations to focus on business value rather than infrastructure complexity.

1. Extensive Connectivity and Integration Capabilities

Airbyte offers 600+ pre-built connectors that handle the complexity of integrating diverse data sources commonly used in AI applications. These connectors support databases, APIs, file systems, and SaaS applications with built-in error handling, schema management, and incremental synchronization. The connector ecosystem eliminates custom integration development while providing enterprise-grade reliability and performance.

2. AI-Native Data Processing Features

The platform includes processing capabilities aimed at AI workloads, such as embedding generation for vector search and integration with vector databases like Pinecone and Weaviate. Its connector and integration ecosystem also supports chunking workflows for large documents. Together, these features turn traditional data integration into AI-ready data preparation without requiring a separate processing step.
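
To show what chunking means in practice, here is a minimal sketch that splits a document into overlapping fixed-size pieces before embedding. It is a generic illustration rather than Airbyte's implementation: it counts characters, whereas real pipelines often count tokens, and the default sizes are arbitrary.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into fixed-size character chunks that overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, which tends to help retrieval at the cost of some duplicated storage.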

3. Developer-Friendly Tools and Integration

PyAirbyte enables Python developers to build data-enabled applications quickly while maintaining integration with popular AI frameworks like LangChain, Pandas, and scikit-learn. This integration allows data scientists and engineers to work with familiar tools while leveraging enterprise-grade data infrastructure.
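
As a rough sketch of this workflow, the snippet below reads one stream into a Pandas DataFrame with PyAirbyte. It assumes the `airbyte` package is installed and uses the `source-faker` demo connector and its `users` stream as stand-ins for a real source. The helper names are made up for this example, and the import sits inside the function so the module loads even where PyAirbyte is not available.

```python
def faker_config(count=100):
    """Config for the source-faker demo connector, which generates synthetic rows."""
    if count <= 0:
        raise ValueError("count must be positive")
    return {"count": count}


def read_users_dataframe(count=100):
    """Read the demo `users` stream into a Pandas DataFrame via PyAirbyte."""
    import airbyte as ab  # assumption: installed with `pip install airbyte`

    source = ab.get_source(
        "source-faker",
        config=faker_config(count),
        install_if_missing=True,
    )
    source.check()                    # verify the connector and config work
    source.select_streams(["users"])  # sync only the stream we need
    result = source.read()            # records land in a local cache
    return result["users"].to_pandas()
```

Calling `read_users_dataframe(50)` should return a DataFrame that can be passed to Pandas or scikit-learn code like any other.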

4. Enterprise-Grade Security and Governance

Airbyte provides comprehensive security features, including end-to-end encryption, role-based access control, and audit logging, that meet enterprise requirements for AI data governance. These capabilities ensure compliance with data protection regulations while enabling secure access to sensitive data for AI applications.

5. Flexible Deployment and Scaling Options

Organizations can choose between managed cloud services, self-hosted deployments, and open-source implementations based on their specific requirements for control, compliance, and cost management. This flexibility enables organizations to optimize their AI infrastructure for specific use cases and regulatory requirements.

How Do You Build an AI Data Pipeline Using Airbyte Step by Step?

Building an AI data pipeline with Airbyte involves systematic configuration of data sources, destinations, and processing workflows that support machine learning applications. This step-by-step approach ensures reliable data flow while maintaining quality and security standards.

1. Prerequisites and Environment Setup

Begin by establishing the foundational infrastructure and credentials required for your AI pipeline. You will need an Airbyte Cloud account or self-hosted Airbyte installation, API keys for your AI services such as OpenAI, credentials for your vector database like Pinecone, and a Python development environment. Ensure that your development environment has access to all required services and that network connectivity allows secure communication between components.
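
A quick pre-flight check can catch missing credentials before anything else runs. The sketch below assumes the keys are supplied through environment variables named `OPENAI_API_KEY` and `PINECONE_API_KEY`; those names are a common convention, not something this guide prescribes, so adjust them to match your setup.

```python
import os

# Assumed variable names; change these to whatever your services expect.
REQUIRED_VARS = ("OPENAI_API_KEY", "PINECONE_API_KEY")


def missing_env_vars(required=REQUIRED_VARS, environ=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        raise SystemExit("Missing environment variables: " + ", ".join(missing))
    print("All required credentials are present.")
```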

2. Configuring Data Sources and Destinations

Identify and configure the data sources that will feed your AI applications. For customer support AI applications, you might configure Freshdesk as your primary data source to access ticket data, customer interactions, and knowledge base articles. Configure your vector database destination, such as Pinecone, to store embeddings generated from your source data. This configuration includes setting up the appropriate indexing parameters and embedding dimensions that match your AI model requirements.
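
A mismatch between the index dimension and the embedding model's output size is an easy mistake to make at this step. The sketch below shows one way to guard against it, assuming OpenAI embedding models at their default output sizes. The function name is hypothetical, and the table would need extending for other providers.

```python
# Default output dimensions of some OpenAI embedding models.
EMBEDDING_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
}


def check_index_dimension(model_name, index_dimension):
    """Raise if the vector index dimension does not match the model's output."""
    expected = EMBEDDING_DIMENSIONS.get(model_name)
    if expected is None:
        raise KeyError(f"unknown embedding model: {model_name}")
    if expected != index_dimension:
        raise ValueError(
            f"{model_name} produces {expected}-dimensional vectors, "
            f"but the index expects {index_dimension}"
        )
    return expected
```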

3. Creating and Configuring the Data Connection

Establish the data synchronization settings that determine how data flows from sources to destinations. Define the specific data streams you want to sync, such as tickets, conversations, or knowledge base articles. Set the replication frequency based on your real-time requirements and choose appropriate sync modes like Incremental - Append + Deduped for efficient data processing. Configure any necessary data transformations or filtering to ensure only relevant data reaches your AI applications.
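
The idea behind an incremental, deduplicated sync can be sketched in a few lines: only records newer than a saved cursor are processed, and each one replaces any older version with the same primary key. This is a simplified illustration of the concept, not Airbyte's implementation; the field names `id` and `updated_at` are assumptions.

```python
def incremental_dedup_sync(destination, source_records, cursor_value,
                           primary_key="id", cursor_field="updated_at"):
    """Merge records newer than the cursor into `destination`, keyed by primary key.

    Returns the new cursor value to persist for the next run.
    """
    new_cursor = cursor_value
    for record in source_records:
        # Skip anything already covered by a previous sync.
        if cursor_value is not None and record[cursor_field] <= cursor_value:
            continue
        existing = destination.get(record[primary_key])
        # Keep only the latest version of each primary key.
        if existing is None or record[cursor_field] >= existing[cursor_field]:
            destination[record[primary_key]] = record
        if new_cursor is None or record[cursor_field] > new_cursor:
            new_cursor = record[cursor_field]
    return new_cursor
```

Because ISO 8601 timestamps sort lexicographically, plain string comparison is enough for the cursor in this sketch.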

4. Implementing the AI Application Layer

Install the required libraries for your AI application stack, including packages for vector storage interaction, language model integration, and conversation management. Configure your environment variables to ensure secure access to AI services like OpenAI and your vector database.

Initialize the core components of your AI system by setting up the embedding model that converts user queries into vector representations compatible with your stored data. Connect to your vector store (such as your Pinecone index) and configure your language model with appropriate parameters like temperature settings to control response consistency. Create a prompt template that defines your AI assistant's role, communication style, and approach to handling queries based on your customer support data.

Assemble these components into a cohesive conversational AI application that retrieves relevant information from your vector database and generates accurate, context-aware responses to customer inquiries.
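
The assembly step can be sketched without committing to a particular framework. In the example below, `retrieve` is a placeholder for whatever function queries your vector store, and the template wording, helper names, and sample documents are all made up for illustration. The resulting prompt would then be sent to your language model.

```python
PROMPT_TEMPLATE = """You are a helpful customer support assistant.
Answer using only the context below. If the answer is not there, say so.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question, retrieve, k=3):
    """Fetch the top-k chunks via `retrieve` and fill in the prompt template."""
    chunks = retrieve(question, k)
    context = "\n---\n".join(chunks) if chunks else "(no matching documents)"
    return PROMPT_TEMPLATE.format(context=context, question=question)


def fake_retrieve(question, k):
    # Stand-in for a vector-store query; returns hypothetical support snippets.
    docs = [
        "Refunds are processed within 5 business days.",
        "Password resets expire after 24 hours.",
    ]
    return docs[:k]


prompt = build_prompt("How long do refunds take?", fake_retrieve, k=1)
```

Passing the retriever in as a function keeps the prompt logic testable without network access.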

5. Testing and Validation

Thoroughly test your pipeline with diverse queries and scenarios to ensure reliable performance. Validate that data synchronization works correctly, embeddings are generated properly, and the AI application returns accurate responses. Test edge cases and error conditions to ensure robust operation in production environments.
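
One simple way to make retrieval testing repeatable is a hit-rate check: for each test question, does the expected document appear in the top results? The sketch below uses a keyword-overlap retriever and invented test cases purely as stand-ins; in practice `retrieve` would query your vector store.

```python
def hit_rate(test_cases, retrieve, k=3):
    """Fraction of questions whose expected document id appears in the top-k results."""
    if not test_cases:
        return 0.0
    hits = sum(
        1 for question, expected_id in test_cases
        if expected_id in retrieve(question, k)
    )
    return hits / len(test_cases)


def keyword_retrieve(question, k):
    # Stand-in retriever: ranks hypothetical documents by shared lowercase words.
    docs = {
        "refunds": "refund policy and timelines",
        "login": "password reset and login help",
    }
    words = set(question.lower().split())
    ranked = sorted(docs, key=lambda d: len(words & set(docs[d].split())), reverse=True)
    return ranked[:k]


cases = [
    ("how do I reset my password", "login"),
    ("what is the refund policy", "refunds"),
]
score = hit_rate(cases, keyword_retrieve, k=1)
```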

What Are the Advanced Governance and Security Features in Modern AI Data Pipelines?

Advanced AI data pipelines require sophisticated governance and security capabilities that go beyond traditional data protection to address the unique challenges of artificial intelligence applications. These features ensure compliance, protect sensitive information, and maintain trust in AI-driven business processes.

Comprehensive Data Quality Management and Validation

Data quality frameworks for AI pipelines include automated validation rules that check data completeness, accuracy, and consistency before processing. Schema evolution management ensures that changes in source data structures do not break downstream AI applications. Data profiling and anomaly detection identify potential quality issues that could impact model performance, while data lineage tracking provides complete visibility into data transformations and their impact on AI outcomes.
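
A validation rule of this kind can be as simple as checking each record against an expected schema. The sketch below is a minimal, hypothetical example that reports missing fields, type mismatches, and unexpected fields, the last of which is a cheap signal that the source schema has changed.

```python
def validate_record(record, schema):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for field, expected_type in schema.items():
        value = record.get(field)
        if value is None or value == "":
            problems.append(f"{field}: missing")
        elif not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    # Fields absent from the schema may indicate an upstream schema change.
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"{field}: not in schema")
    return problems
```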

Enterprise-Grade Access Control and Authentication

Role-based access control systems provide granular permissions that determine which users can access specific data sources, modify pipeline configurations, or deploy AI models. Integration with enterprise identity providers enables single sign-on and centralized user management. API key management and rotation ensure secure programmatic access while audit logging tracks all user activities for compliance and security monitoring.
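
The core of role-based access control with audit logging fits in a few lines. The roles, actions, and in-memory log below are hypothetical and only illustrate the pattern; a real deployment would rely on the platform's own RBAC and a durable audit store.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read_data"},
    "editor": {"read_data", "edit_pipeline"},
    "admin": {"read_data", "edit_pipeline", "deploy_model"},
}

audit_log = []


def authorize(user, role, action):
    """Check whether `role` permits `action`, recording every attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({"user": user, "role": role, "action": action, "allowed": allowed})
    return allowed
```

Logging denied attempts as well as granted ones is what makes the log useful for security monitoring.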

Privacy Protection and Regulatory Compliance

Privacy-preserving techniques include automatic PII detection and masking, data minimization controls that limit data retention and access, and emerging approaches such as differential privacy mechanisms for statistical analysis. Compliance frameworks support GDPR, CCPA, HIPAA, and industry-specific regulations through automated policy enforcement and reporting. Cross-border data transfer controls help ensure compliance with data residency requirements while enabling global AI applications.
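
As a simplified illustration of PII masking, the sketch below replaces email addresses and phone-like numbers with placeholders before text is embedded or stored. The regular expressions are deliberately basic assumptions and will miss many real-world formats, so production systems typically combine patterns like these with dedicated detection tools.

```python
import re

# Basic patterns for illustration only; they do not cover every format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```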

How Do Real-Time Processing and Streaming Analytics Enhance AI Data Pipelines?

Real-time processing capabilities transform AI data pipelines from batch-oriented systems into responsive, event-driven architectures that can react immediately to changing conditions and new information.

Event-Driven Architecture and Stream Processing

Event-driven architectures use streaming data platforms to trigger AI processing as new data arrives rather than waiting for batch processing schedules. Stream processing engines handle continuous data flows while maintaining low latency and high throughput. This architecture enables real-time fraud detection, instant personalization, and immediate response to operational anomalies.
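
The event-driven pattern can be illustrated with a tiny in-process event bus: handlers subscribe to an event type and run as soon as an event is published, rather than on a schedule. The class, the fixed-threshold fraud rule, and the sample payments are all hypothetical; a real system would use a streaming platform and a trained model.

```python
from collections import defaultdict


class EventBus:
    """Minimal publish/subscribe dispatcher."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Every subscribed handler runs immediately when the event arrives.
        return [handler(payload) for handler in self._handlers[event_type]]


alerts = []


def flag_large_payment(payment, limit=1000):
    # Toy fraud rule: flag any payment above a fixed limit.
    if payment["amount"] > limit:
        alerts.append(payment["id"])


bus = EventBus()
bus.subscribe("payment", flag_large_payment)
for payment in [{"id": "p1", "amount": 40}, {"id": "p2", "amount": 5000}]:
    bus.publish("payment", payment)
```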

Scalable Infrastructure and Performance Optimization

Auto-scaling infrastructure adjusts computing resources based on data volume and processing demands without manual intervention. Distributed processing frameworks handle high-velocity data streams across multiple nodes while maintaining fault tolerance. Performance optimization includes intelligent caching, parallel processing, and resource allocation strategies that ensure consistent response times under varying loads.

Integration with AI/ML Workflows

Real-time feature computation generates machine learning features from streaming data for immediate use in predictive models. Continuous model training updates AI models with new data without interrupting production services. Online inference capabilities serve predictions directly from streaming data pipelines, enabling immediate decision-making based on the latest information.
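
A rolling average over the most recent events is a typical example of a feature computed from a stream. The sketch below keeps a fixed-size window and updates the mean in constant time per event; the class name and window size are choices made for this example.

```python
from collections import deque


class RollingMean:
    """Mean of the most recent `window` values, updated one event at a time."""

    def __init__(self, window):
        if window <= 0:
            raise ValueError("window must be positive")
        self._values = deque(maxlen=window)
        self._total = 0.0

    def update(self, value):
        # Subtract the value about to be evicted once the window is full.
        if len(self._values) == self._values.maxlen:
            self._total -= self._values[0]
        self._values.append(value)
        self._total += value
        return self._total / len(self._values)
```

Each call to `update` returns the current feature value, so it can be handed straight to a model for online inference.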

How Can You Ensure Success When Implementing AI Data Pipelines?

Successful AI data pipeline implementation requires systematic planning, technical excellence, and organizational change management that addresses both technology and human factors. These success factors help organizations avoid common pitfalls and accelerate time-to-value from AI investments.

1. Strategic Planning and Requirements Definition

Begin with clear business objectives that define specific outcomes and success metrics for your AI initiative. Conduct thorough data assessment to understand available data sources, quality levels, and integration complexity. Define architectural requirements that balance performance, scalability, and cost while ensuring compliance with security and regulatory standards. Establish governance frameworks that define roles, responsibilities, and decision-making processes for ongoing pipeline management.

2. Technical Architecture and Infrastructure Design

Design scalable architecture that can grow with your AI initiatives while maintaining performance and reliability standards. Implement comprehensive monitoring and observability from the beginning to enable proactive issue identification and resolution. Choose technology components based on long-term strategic goals rather than short-term convenience. Establish automated testing and deployment processes that ensure pipeline reliability and enable rapid iteration on AI applications.

3. Team Development and Change Management

Build cross-functional teams that combine data engineering, data science, and business domain expertise to ensure technical solutions address real business needs. Provide comprehensive training on new tools and processes while establishing clear communication channels between technical and business stakeholders. Create documentation and knowledge sharing processes that enable team members to understand and maintain complex AI systems. Implement gradual rollout strategies that minimize risk while demonstrating value to stakeholders.

Conclusion

Building effective AI data pipelines represents a fundamental shift from traditional data processing to intelligent, automated systems that can adapt to changing business requirements while maintaining high standards for quality, security, and performance. Airbyte simplifies complex AI implementations and provides the enterprise-grade capabilities necessary for production deployments.

By following proven methodologies and leveraging specialized AI features, organizations can deliver immediate business value while establishing scalable infrastructure for future innovation. The combination of comprehensive connectivity, AI-native processing, and enterprise security creates a foundation for transformative AI applications across industries. Success requires strategic planning, technical excellence, and organizational commitment to data-driven decision making that puts AI at the center of competitive advantage.

Frequently Asked Questions

What makes AI data pipelines different from traditional data pipelines?

They add components for ML workflows including vector storage, real-time feature computation, automated training, continuous monitoring, and unstructured data processing.

How long does it typically take to implement an AI data pipeline?

Simple use cases can be implemented in a few weeks, while complex, multi-source projects may require several months depending on organizational readiness and technical complexity.

What are the most common challenges organizations face when building AI data pipelines?

Source integration complexity, data quality management, security and compliance requirements, real-time scaling demands, and talent shortages represent the primary implementation challenges.

How do you measure the success of an AI data pipeline implementation?

Success measurement includes technical KPIs like latency and reliability, business impact metrics such as decision speed and operational efficiency, cost optimization measures, and user adoption statistics.

What security considerations are most important for AI data pipelines?

End-to-end encryption, granular access controls, comprehensive audit logging, privacy protection measures, secure model serving infrastructure, and compliance with data residency regulations are critical security considerations.
