From API to Database: A Step-by-Step Guide on Efficient Data Integration

✨ AI Generated Summary

An API for databases enables secure, efficient programmatic interaction with data, supporting operations like querying and updating across various database types (relational, non-relational, cloud-native). Key benefits include real-time data access, improved data quality, automation of workflows, and scalable integration patterns such as CQRS and event-driven architectures.

- Integration involves connecting APIs to databases with authentication, data validation, error handling, and synchronization strategies like Change Data Capture (CDC).

- Modern tools like Airbyte simplify data ingestion with automated connectors, while best practices emphasize secure data transfer, consistent formatting, and performance monitoring.

- Advanced patterns and technologies ensure scalable, reliable, and maintainable API-database workflows for diverse business needs.

An API (Application Programming Interface) for a database, often called a database application programming interface, lets applications communicate with the database programmatically: querying, inserting, updating, and deleting data. Because reads and writes pass through this interface, it is also a natural place to enforce access controls on sensitive data.

Whether you're building a dynamic e-commerce platform or a cutting-edge analytics dashboard, API-to-database integrations help data engineers build efficient, scalable solutions. Java Database Connectivity (JDBC) is a well-known example: it is the standard Java API for connecting to relational databases and executing SQL statements.

In this article, we cover the benefits of API-to-database integration, along with the tools and best practices needed to implement it.

What Are APIs and Databases in the Context of Integration?

API (Application Programming Interface)

An API allows software components to communicate and exchange data in an agreed format. Database APIs are crucial for enabling interactions with cloud databases and other systems. Common API styles include REST, which uses standard HTTP methods, and SOAP, which relies on XML messaging.

Public APIs, like Google Maps, are accessible to developers, while private APIs cater to internal organizational needs. Modern API architectures have evolved beyond simple request-response patterns to include GraphQL for flexible querying, WebSocket APIs for real-time communication, and event-driven APIs that trigger actions based on specific conditions.

These advanced API types enable more sophisticated integration patterns that can adapt to complex business requirements and high-performance scenarios.

Databases

Databases store and organize data through a database management system (DBMS). They come in relational forms, like MySQL, which use a structured database schema, and non-relational forms, like MongoDB, for flexible data handling. Database APIs facilitate interactions with these databases, supporting various programming languages for querying and data management.

Contemporary database architectures increasingly support cloud-native deployments, distributed systems, and specialized workloads. Vector databases handle AI and machine-learning applications, while time-series databases optimize for IoT and monitoring data.

Understanding these database types helps you select the most appropriate database application programming interface for your specific integration requirements.

How Does API-to-Database Integration Actually Work?

API-to-database integration connects an API layer to a database so that applications can read and write data through the API, whether the database is cloud-hosted or on-premises, relational or non-relational.

Client applications send API requests that specify an action, such as retrieving or updating records. The server validates each request, translates it into the corresponding database operation, and returns the result. This arrangement supports real-time data access while keeping data management efficient and secure.

The unified interface provided by database APIs facilitates seamless communication between existing systems. REST APIs play a crucial role in this process by providing a standardized method for interactions.

The Integration Process Flow

The integration process typically follows a request-response cycle where client applications send HTTP requests to API endpoints, which then execute corresponding database operations. Middleware layers often handle authentication, rate limiting, and data transformation between API responses and database schemas.

Modern integrations also support batch processing for high-volume operations and implement caching strategies to reduce database load while maintaining data freshness.
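
As a rough illustration of this request-response cycle, the sketch below maps HTTP-style requests to database operations using only Python's standard library. The `/items` endpoint, the `items` table, and the `handle_request` function are hypothetical names chosen for this example. An in-memory SQLite database stands in for whatever database you use, and a real service would sit behind a web framework with the middleware described above.

```python
import json
import sqlite3


def make_db():
    """Create an in-memory SQLite database with a hypothetical `items` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    return conn


def handle_request(conn, method, path, body=""):
    """Map an HTTP-style request to a database operation; return (status, JSON text)."""
    if path != "/items":
        return 404, json.dumps({"error": "not found"})
    if method == "GET":
        rows = conn.execute("SELECT id, name FROM items ORDER BY id").fetchall()
        return 200, json.dumps([{"id": r[0], "name": r[1]} for r in rows])
    if method == "POST":
        try:
            name = json.loads(body)["name"]
        except (ValueError, KeyError, TypeError):
            name = None
        if not isinstance(name, str) or not name.strip():
            return 400, json.dumps({"error": "body must be JSON with a non-empty 'name'"})
        # Parameterized query: the value is bound, never interpolated into the SQL text.
        cur = conn.execute("INSERT INTO items (name) VALUES (?)", (name.strip(),))
        conn.commit()
        return 201, json.dumps({"id": cur.lastrowid, "name": name.strip()})
    return 405, json.dumps({"error": "method not allowed"})
```

Keeping the request handling in one plain function makes the mapping from HTTP method to SQL statement easy to test without starting a server.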

What Are the Key Benefits of Integrating APIs with Databases?

Integrating database APIs enhances the functionality, efficiency, and reliability of applications. Let's explore some of the key advantages.

Real-time Data Access and Updates

API integration allows for real-time data access and updates between different systems. Connecting APIs to databases lets applications retrieve the most up-to-date information, so the data in the destination system stays accurate and current.

This enhances analysis and allows users to connect with business intelligence tools, like Power BI, to gain insights. Real-time data access is particularly valuable for applications that require instantaneous synchronization across multiple platforms or when dealing with time-sensitive information.

For example, in a stock-trading app, integrating real-time market data APIs with a specialized time-series or high-performance database ensures that traders can access the most current and accurate data, while relational databases like MySQL are often used for transactional or historical record-keeping.

Enhancing Data Quality and Consistency

API-database integration is crucial in improving data quality and maintaining consistency across different systems. Organizations can establish data-validation and cleansing mechanisms at the entry point, ensuring that only high-quality datasets enter the system.

For instance, when integrating a CRM system with an email-marketing API, the integration can validate and sanitize contact data before storage in an SQL server. Modern data-quality frameworks implement automated schema validation, duplicate detection, and data profiling to maintain high standards throughout the integration pipeline.
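
A minimal sketch of validation at the entry point might look like the following. The `contacts` table, the field names, and the deliberately loose email pattern are assumptions made for this example, and SQLite stands in for the SQL server mentioned above. Records are normalized, invalid ones are rejected, and duplicates are skipped by a primary-key constraint.

```python
import re
import sqlite3

# Intentionally simple pattern for illustration; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_contact(raw):
    """Return a normalized contact dict, or None if the record fails validation."""
    email = str(raw.get("email", "")).strip().lower()
    name = " ".join(str(raw.get("name", "")).split())  # collapse stray whitespace
    if not name or not EMAIL_RE.match(email):
        return None
    return {"name": name, "email": email}


def load_contacts(conn, records):
    """Insert valid contacts, skipping invalid rows and duplicates; return (stored, rejected)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts (email TEXT PRIMARY KEY, name TEXT NOT NULL)"
    )
    stored = rejected = 0
    for raw in records:
        contact = clean_contact(raw)
        if contact is None:
            rejected += 1
            continue
        cur = conn.execute(
            "INSERT OR IGNORE INTO contacts (email, name) VALUES (:email, :name)", contact
        )
        stored += cur.rowcount  # 0 when the email already exists
    conn.commit()
    return stored, rejected
```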

Enabling Automation and Efficient Data Workflows

Data workflows can be automated and streamlined using API integrations, reducing manual effort and enhancing operational efficiency. Applications can automate data retrieval, synchronization, and updates, eliminating redundant tasks.

For example, in an e-commerce application, integrating the payment-gateway API with a MySQL database allows for automated order processing. Advanced automation capabilities include trigger-based workflows that respond to specific data changes, scheduled batch processing for large datasets, and intelligent retry mechanisms that handle temporary failures gracefully.

These automated workflows can significantly reduce operational overhead while improving data reliability and processing speed.

What Are Advanced Architectural Patterns for Scalable API-Database Integration?

Modern enterprises require sophisticated architectural approaches to handle complex API-database integrations at scale. These patterns address challenges like high throughput, distributed systems, and maintaining data consistency across multiple services.

Command Query Responsibility Segregation (CQRS)

CQRS separates read and write operations into distinct models, optimizing each for its specific purpose. In API-database integrations, this pattern allows you to design write-optimized database schemas for data ingestion while maintaining read-optimized views for query performance.

For example, when integrating multiple sales APIs with your database, you might use a normalized write model for maintaining data integrity during API ingestion, while creating denormalized read models optimized for analytics dashboards. This separation enables independent scaling of read and write workloads, improving overall system performance.
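
To make the separation concrete, here is a small sketch with a normalized write model and a denormalized read model in the same SQLite database. The table names and the `record_order`, `refresh_read_model`, and `sales_for` functions are hypothetical. In practice the read model often lives in a separate store and is updated asynchronously rather than rebuilt in full.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Write model: normalized tables that protect integrity during ingestion.
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount_cents INTEGER NOT NULL
    );
    -- Read model: one denormalized row per region for dashboard queries.
    CREATE TABLE sales_by_region (
        region TEXT PRIMARY KEY, order_count INTEGER, total_cents INTEGER
    );
""")


def record_order(customer_id, amount_cents):
    """Command side: write to the normalized model only."""
    conn.execute(
        "INSERT INTO orders (customer_id, amount_cents) VALUES (?, ?)",
        (customer_id, amount_cents),
    )
    conn.commit()


def refresh_read_model():
    """Projection: rebuild the denormalized table from the write model in one transaction."""
    with conn:
        conn.execute("DELETE FROM sales_by_region")
        conn.execute("""
            INSERT INTO sales_by_region
            SELECT c.region, COUNT(*), SUM(o.amount_cents)
            FROM orders o JOIN customers c ON c.id = o.customer_id
            GROUP BY c.region
        """)


def sales_for(region):
    """Query side: read from the denormalized model only."""
    return conn.execute(
        "SELECT order_count, total_cents FROM sales_by_region WHERE region = ?", (region,)
    ).fetchone()
```

Dashboard queries never join `orders` and `customers`, so the two sides can be indexed and scaled for their own access patterns.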

Event-Driven Architecture

Event-driven patterns complement traditional request-response API integrations by enabling asynchronous processing and loose coupling between systems. Instead of direct API calls to databases, events trigger database operations, allowing for better fault tolerance and scalability.

This approach is particularly valuable for high-volume integrations where immediate consistency isn't required. For instance, when processing user activity data from mobile APIs, events can trigger database updates through message queues, ensuring the system remains responsive even during traffic spikes.
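
The sketch below shows the shape of this pattern with an in-process queue standing in for a message broker. The `publish` and `consume` functions and the `activity` table are assumptions for this example. A production system would typically use a durable broker, so that events survive a process restart.

```python
import os
import queue
import sqlite3
import tempfile
import threading

events = queue.Queue()  # stands in for a message broker
STOP = object()         # sentinel that tells the consumer to shut down


def publish(user_id, action):
    """API side: enqueue the event and return immediately, without touching the database."""
    events.put({"user_id": user_id, "action": action})


def consume(db_path):
    """Worker side: apply queued events to the database one at a time."""
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE IF NOT EXISTS activity (user_id TEXT, action TEXT)")
    while True:
        event = events.get()  # blocks until an event is available
        if event is STOP:
            break
        conn.execute(
            "INSERT INTO activity VALUES (?, ?)", (event["user_id"], event["action"])
        )
        conn.commit()
    conn.close()


# Usage: the worker runs in the background while the API side keeps publishing.
db_path = os.path.join(tempfile.mkdtemp(), "activity.db")
worker = threading.Thread(target=consume, args=(db_path,))
worker.start()
publish("u1", "login")
publish("u2", "view_item")
events.put(STOP)
worker.join()
```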

Microservices Database Patterns

The database-per-service pattern ensures each microservice maintains its own database, preventing tight coupling while enabling independent scaling and deployment. When implementing API-database integrations in microservices architectures, each service exposes its own API for data access rather than allowing direct database connections.

This pattern requires careful consideration of data consistency across services, often implementing eventual consistency models and compensating transactions to maintain business-logic integrity across distributed database operations.
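
One way to picture compensating transactions is a small saga runner: each step pairs an action with an undo, and a failure rolls back the steps that already completed. The `run_saga` helper is a hypothetical illustration. Real implementations also persist saga state so that recovery can continue after a crash.

```python
def run_saga(steps):
    """Run (action, compensation) pairs in order.

    If an action raises, run the compensations of the already completed
    steps in reverse order, then re-raise the original error.
    """
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            raise
        completed.append(compensate)
```

For example, if a "reserve stock" step succeeds and a later "charge card" step fails, only the stock reservation is released, because the failed step never completed.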

How Do You Implement Change Data Capture and Real-Time Synchronization?

Change Data Capture (CDC) represents a fundamental shift from traditional batch processing to real-time data synchronization, enabling organizations to react immediately to data changes and maintain current information across systems.

Database-Level CDC Implementation

Modern databases like PostgreSQL, MySQL, and MongoDB provide built-in CDC capabilities through transaction logs and replication streams. These mechanisms capture every insert, update, and delete operation, creating events that can be consumed by downstream systems.

When implementing CDC for API-database integration, you can configure database triggers or log-based capture to publish changes to message brokers like Apache Kafka. API consumers can then subscribe to these change streams, ensuring they receive updates as soon as data modifications occur in the source database.
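
The trigger-based variant can be sketched in a few lines. Here SQLite triggers write every change to a `change_log` table, and a consumer polls for entries past the last sequence number it has seen. The table, trigger, and function names are assumptions for this example. Log-based capture, which reads the transaction log and publishes to a broker, avoids the write overhead that triggers add.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (id INTEGER PRIMARY KEY, price_cents INTEGER NOT NULL);
    CREATE TABLE change_log (
        seq INTEGER PRIMARY KEY AUTOINCREMENT,
        op TEXT NOT NULL,
        row_id INTEGER NOT NULL,
        price_cents INTEGER
    );
    CREATE TRIGGER products_insert AFTER INSERT ON products BEGIN
        INSERT INTO change_log (op, row_id, price_cents)
        VALUES ('insert', NEW.id, NEW.price_cents);
    END;
    CREATE TRIGGER products_update AFTER UPDATE ON products BEGIN
        INSERT INTO change_log (op, row_id, price_cents)
        VALUES ('update', NEW.id, NEW.price_cents);
    END;
    CREATE TRIGGER products_delete AFTER DELETE ON products BEGIN
        INSERT INTO change_log (op, row_id, price_cents)
        VALUES ('delete', OLD.id, NULL);
    END;
""")


def poll_changes(after_seq):
    """Return change events with a sequence number greater than `after_seq`, oldest first."""
    rows = conn.execute(
        "SELECT seq, op, row_id, price_cents FROM change_log WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()
    return [{"seq": s, "op": op, "id": rid, "price_cents": p} for s, op, rid, p in rows]
```

A consumer stores the last `seq` it processed and passes it to the next `poll_changes` call, so each change is delivered in order and a restart resumes where it left off.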

API-Driven Change Detection

For systems where database-level CDC isn't available, API-based change detection provides an alternative approach. This involves polling APIs for changes using timestamps, version numbers, or dedicated change endpoints.

Incremental synchronization strategies minimize data transfer by identifying only modified records since the last sync operation. Many modern APIs support webhook notifications that proactively push change notifications to registered endpoints, eliminating the need for continuous polling while maintaining real-time responsiveness.
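
Timestamp-based polling can be sketched as a function that carries a cursor between runs. `sync_incremental`, `fake_fetch`, and the `updated_at` field are hypothetical names, and `SOURCE` is an in-memory stand-in for a remote API that accepts an "updated since" filter.

```python
def sync_incremental(fetch_changes, apply_record, cursor):
    """Apply every record changed after `cursor`; return the advanced cursor."""
    newest = cursor
    for record in fetch_changes(updated_since=cursor):
        apply_record(record)
        # ISO 8601 UTC timestamps in one fixed format compare correctly as strings.
        newest = max(newest, record["updated_at"])
    return newest


# Stand-in for a remote API; a real client would send `updated_since` as a query parameter.
SOURCE = [
    {"id": 1, "status": "new", "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "status": "new", "updated_at": "2024-01-02T00:00:00Z"},
]


def fake_fetch(updated_since):
    """Return only the records modified strictly after the cursor."""
    return [r for r in SOURCE if r["updated_at"] > updated_since]
```

Persist the returned cursor only after the records are safely stored, so a failed run repeats work instead of skipping it. With a strict "greater than" filter, records that share the cursor's exact timestamp can be missed, which is why some integrations re-read a small overlap window and rely on idempotent writes.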

Conflict Resolution and Data Consistency

Real-time synchronization introduces challenges around data conflicts when multiple systems modify the same records simultaneously. Implementing effective conflict resolution strategies requires careful consideration of business rules and data precedence.

Common approaches include last-writer-wins policies, field-level merging based on timestamps, and business-logic-based resolution rules. Vector clocks and operational transformation techniques provide more sophisticated conflict resolution for collaborative environments where multiple users or systems may modify data concurrently.
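
Field-level merging based on timestamps can be illustrated with a short function. The record layout, in which each field maps to a `(value, modified_at)` pair, is an assumption for this sketch, as is the rule that ties keep the local value.

```python
def merge_field_level(local, remote):
    """Merge two versions of a record field by field.

    Each version maps field name -> (value, modified_at). For every field the
    newer timestamp wins; on a tie the local value is kept.
    """
    merged = dict(local)
    for field, (value, modified_at) in remote.items():
        if field not in merged or modified_at > merged[field][1]:
            merged[field] = (value, modified_at)
    return merged
```

Compared with record-level last-writer-wins, this keeps a newer local phone number even when the remote copy has a newer email address. It does depend on clocks that are reasonably synchronized across the systems involved.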

How Can You Simplify API Data Ingestion Using Modern Integration Platforms?

Airbyte is an open-source data-integration platform with over 600 connectors for loading data from sources into many destinations. It offers APIs and integrations for building and automating no-code data pipelines, plus developer tools for creating custom integrations for your specific use case.

Airbyte's platform addresses the most common API-database integration challenges through automated authentication management, including OAuth 2.0 support and credential handling. The Connector Builder AI Assistant can prepopulate API configurations from OpenAPI specifications, significantly reducing setup time and potential configuration errors.

Recent platform updates include native support for complex data formats like CSV, ZIP, and compressed files, along with GraphQL query capabilities that enable sophisticated data extraction patterns. The platform's change data capture implementation provides near real-time synchronization for database sources and uses intelligent error handling (such as exponential backoff) in response to API rate limits to help minimize service disruptions.

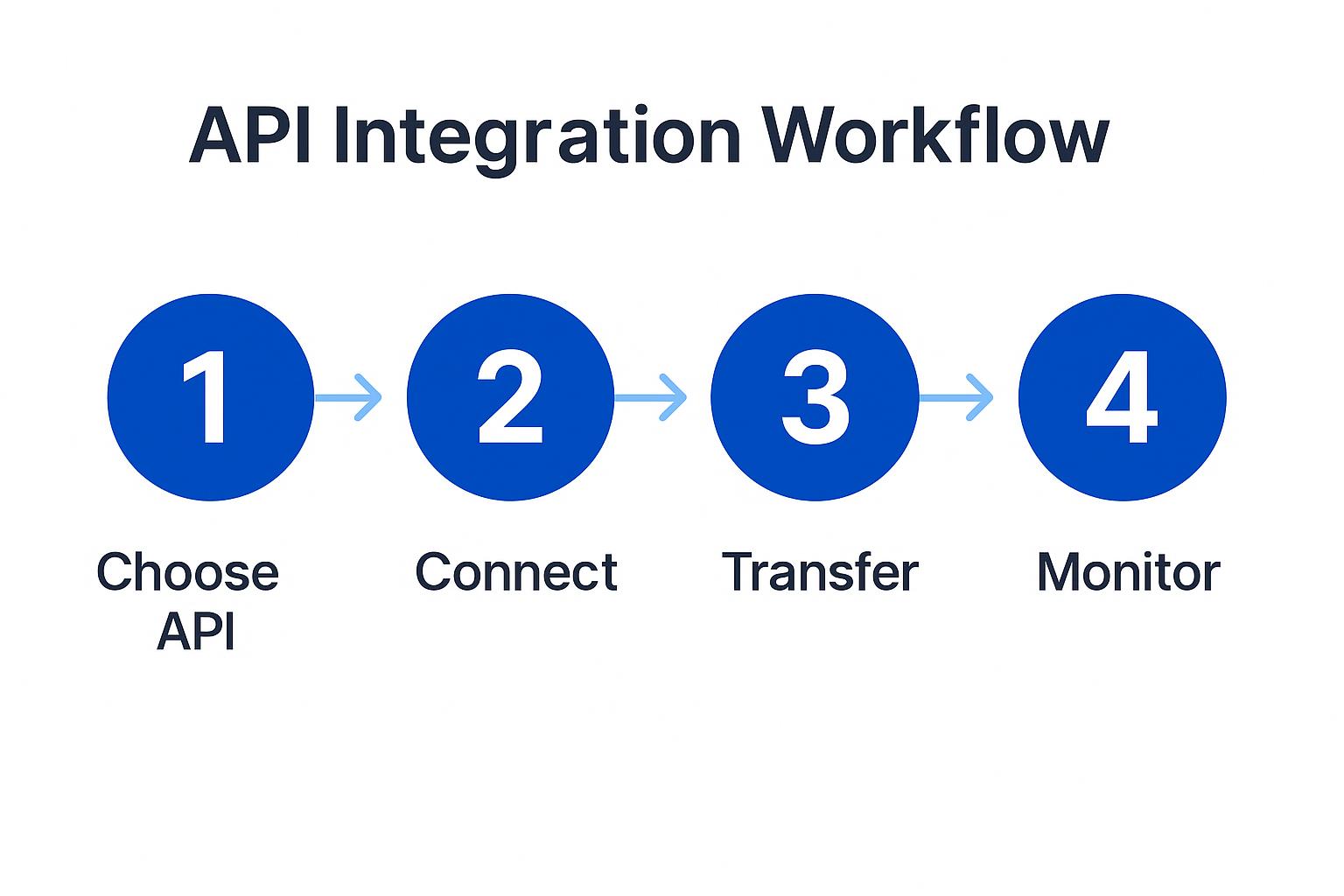

What Are the Essential Steps to Integrate Database APIs?

Here's a guide on how to connect a database API to a database:

1. Choosing the Right API and Database System

API Selection

Choose a database API that aligns with your application requirements—functionality, reliability, performance, community support, and documentation. Every API has a different communication style—REST, SOAP, or GraphQL—so select one that best suits your needs.

You must also configure an API endpoint (a specific URL) to which a client application can send HTTP requests to access a resource or perform an action. Consider API versioning strategies to ensure long-term compatibility and evaluate rate-limiting policies to understand throughput constraints.

Database Selection

Assess your data-storage requirements and choose a suitable database system, considering data structure, scalability, performance, query capabilities, and compatibility with the chosen API.

Modern database selection should also account for cloud deployment options, backup and recovery capabilities, and integration with monitoring tools. Consider whether your use case requires ACID compliance for transactions or if eventual consistency models are acceptable for better performance and scalability.

2. Connecting the API to the Database

Authentication and Authorization

Sign up for the API and obtain credentials (API key or access token). Authenticate API calls and verify that the requester has the necessary permissions to access and modify data.

Implement secure credential management using environment variables or dedicated secret management services. Consider implementing OAuth 2.0 flows for user-specific access and ensure proper token-refresh mechanisms to handle expiration automatically.
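
As a small example of keeping credentials out of code, the sketch below reads a token from an environment variable and attaches it as a bearer header. The variable name `EXAMPLE_API_TOKEN` and the `build_request` helper are hypothetical, and the header scheme your API expects may differ.

```python
import os
import urllib.request


def build_request(url, env=None):
    """Build an authenticated request using a token taken from the environment."""
    env = os.environ if env is None else env
    token = env.get("EXAMPLE_API_TOKEN")  # hypothetical variable name
    if not token:
        # Fail fast with a clear message instead of sending an unauthenticated call.
        raise RuntimeError("EXAMPLE_API_TOKEN is not set")
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )
```

The returned object can be passed to `urllib.request.urlopen` with a `timeout` argument. Passing `env` explicitly keeps the function easy to test without touching the real environment.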

Set Up Database Connection

Establish a connection to the database by providing host, port, username, and password. Use appropriate libraries or drivers for your language or framework.

Implement connection pooling to optimize database resource usage and configure appropriate timeout values for both API calls and database operations. Consider using SSL/TLS encryption for database connections, especially in cloud environments.

Send API requests using HTTP client libraries, including required headers such as the API key. Transform the received data as needed and store it in the database using database-specific libraries.
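
The transform-and-store step might look like the sketch below, which takes a JSON payload as it could arrive from an API, normalizes each item, and upserts the rows. The payload shape, the `items` table, and the function names are assumptions for this example. SQLite is used so the code runs anywhere, and the `ON CONFLICT` clause needs SQLite 3.24 or newer.

```python
import json
import sqlite3


def transform(item):
    """Normalize one API item into a row: integer id, trimmed title, price in cents."""
    return (int(item["id"]), item["title"].strip(), round(float(item["price"]) * 100))


def store_items(conn, api_json):
    """Parse an API payload and upsert its items; return the number of rows written."""
    rows = [transform(item) for item in json.loads(api_json)["items"]]
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute(
            "CREATE TABLE IF NOT EXISTS items "
            "(id INTEGER PRIMARY KEY, title TEXT NOT NULL, price_cents INTEGER NOT NULL)"
        )
        conn.executemany(
            "INSERT INTO items (id, title, price_cents) VALUES (?, ?, ?) "
            "ON CONFLICT(id) DO UPDATE SET "
            "title = excluded.title, price_cents = excluded.price_cents",
            rows,
        )
    return len(rows)
```

Because the write is an upsert keyed on `id`, re-running the same sync updates existing rows instead of creating duplicates.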

3. Designing the Data-Transfer Process

- Pushing Data: The API source sends changes as they occur (for example, through webhooks), and the integration updates the database in real time.

- Pulling Data: Periodically retrieve information from the API and store it for analysis.

- Hybrid Approach: Combine pushing and pulling to balance real-time updates with periodic ingestions.

Consider implementing backpressure mechanisms to handle situations where data arrives faster than it can be processed. Design appropriate batching strategies to optimize database write operations while maintaining acceptable latency for real-time use cases.
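
A bounded queue is one simple way to get backpressure, and draining it in batches reduces the number of database transactions. In this sketch the `readings` table, the queue size, and the batch size are arbitrary choices for illustration.

```python
import queue
import sqlite3


def drain_batch(inbox, max_items):
    """Take up to `max_items` from the queue without blocking."""
    batch = []
    while len(batch) < max_items:
        try:
            batch.append(inbox.get_nowait())
        except queue.Empty:
            break
    return batch


def write_batches(conn, inbox, batch_size=3):
    """Write queued (sensor, value) pairs in batches; return the number of batches written."""
    conn.execute("CREATE TABLE IF NOT EXISTS readings (sensor TEXT, value REAL)")
    batches = 0
    while True:
        batch = drain_batch(inbox, batch_size)
        if not batch:
            return batches
        with conn:  # one transaction per batch
            conn.executemany("INSERT INTO readings VALUES (?, ?)", batch)
        batches += 1


# A bounded queue applies backpressure: once it is full, put() blocks the producer
# (or put_nowait() raises queue.Full) until the writer catches up.
inbox = queue.Queue(maxsize=5)
```

Larger batches mean fewer transactions but higher latency for each record, so the batch size is the knob that trades throughput against freshness.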

4. Ensuring Data Consistency and Error Handling

- Data Validation: Implement data-validation mechanisms to ensure integrity during ingestion.

- Error Handling: Develop robust error-handling methods for network failures, rate limits, and connection issues.

- Consistency and Synchronization: Use versioning, timestamp-based synchronization, or conflict-resolution algorithms.

Implement exponential backoff retry strategies for transient failures and establish dead-letter queues for permanently failed operations. Design comprehensive logging and monitoring to track data-quality metrics and identify integration issues proactively.
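
The retry and dead-letter ideas can be sketched together. `TransientError`, `call_with_backoff`, and `process_all` are hypothetical names, and a plain list stands in for a dead-letter queue. The delay doubles on each attempt and includes random jitter so that many clients do not retry in lockstep.

```python
import random
import time


class TransientError(Exception):
    """Raised by an operation for failures worth retrying (timeouts, rate limits)."""


def call_with_backoff(operation, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call `operation`, retrying on TransientError with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            delay = base_delay * 2 ** attempt
            sleep(delay + random.uniform(0, delay / 2))


def process_all(items, handler, dead_letters, **retry_options):
    """Process each item; park items that still fail after all retries."""
    for item in items:
        try:
            call_with_backoff(lambda: handler(item), **retry_options)
        except TransientError as exc:
            dead_letters.append((item, str(exc)))
```

Items in the dead-letter list can be inspected and replayed later, which keeps one bad record from blocking the rest of the pipeline.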

Which Tools Should You Use for API Integration?

- Airbyte: Open-source platform with 600+ connectors and connector-development kit for custom integrations, supporting both cloud and self-managed deployments.

- Zapier: Automation workflows ("Zaps") to transfer data between APIs and databases with user-friendly visual interfaces.

- Microsoft Power Automate: Visual designer for triggers and actions, supporting RESTful API integrations with deep Microsoft ecosystem integration.

- AWS AppSync: Managed service for real-time data synchronization in serverless applications with GraphQL support.

Additional considerations include Apache Kafka for high-throughput streaming integrations, Debezium for database change data capture, and cloud-native options like Google Cloud Dataflow or Azure Data Factory for enterprise-scale data processing requirements.

What Are the Best Practices for API and Database Integration?

Ensuring Secure Data Transfer

- Encryption: Use HTTPS (TLS) to encrypt data in transit, and implement field-level encryption for sensitive data at rest.

- Authentication & Authorization: Implement API keys, tokens, or OAuth with proper scope limitations and regular credential rotation.

- Secure Credential Storage: Store credentials in secure vaults like HashiCorp Vault or cloud-native secret managers, never in code repositories.

Implement zero-trust security models that verify every request regardless of source, and consider implementing mutual TLS for service-to-service communication in sensitive environments.

Efficient Error Handling and Data Validation

- Comprehensive Error Handling: Provide meaningful error messages and structured logging with correlation IDs for debugging.

- Data Validation & Sanitization: Implement input validation at API boundaries and database constraints to prevent invalid data entry.

- Consistent Data Formatting: Enforce consistent formats, naming conventions, and units across all integration points.

Design circuit breaker patterns to prevent cascading failures and implement graceful degradation strategies that maintain core functionality even when some integrations fail.
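
A circuit breaker can be sketched as a small wrapper that counts consecutive failures and, once a threshold is reached, rejects calls for a cooling-off period. The class below is a simplified illustration with assumed names and defaults. Production libraries usually add per-exception rules, metrics, and thread safety.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds to wait before allowing a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cooling-off period elapsed: fall through and allow one trial call.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

When the circuit is open, callers can catch `CircuitOpenError` and fall back to cached data or a reduced response, which is one form of graceful degradation.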

Regular Monitoring and Optimization

- Performance Monitoring: Track response times, throughput, error rates, and database query performance with tools like Prometheus and Grafana.

- Load Testing: Assess scalability under high-volume scenarios using realistic data patterns and traffic distributions.

- Query Optimization: Use indexing strategies, implement caching layers, and optimize database queries for integration-specific access patterns.

- Regular Updates: Keep libraries and drivers current, monitor API deprecation notices, and update the integration process as APIs evolve.

Establish automated alerting for key performance indicators and implement proactive monitoring that identifies potential issues before they impact users.

Turn API Connections into Scalable, Production-Ready Workflows

Bringing APIs and databases together is more than a technical necessity—it's how modern teams unlock real-time insights, automate manual processes, and reduce data fragmentation. From selecting the right database application programming interface to managing transformations and ensuring data quality, this guide covered the essential steps to build robust API-database integrations. The evolution toward event-driven architectures, change data capture, and advanced patterns like CQRS demonstrates how API-database integration continues to mature, enabling organizations to build integration architectures that scale with business growth while maintaining reliability and security.

Frequently Asked Questions

What Is the Difference Between a Database API and a REST API?

A database API is specifically designed to interact with database systems, providing operations like queries, inserts, updates, and deletes. REST is an architectural style that can be applied to any type of web service, and a REST API is any API that follows it, including some database APIs. Database APIs may use REST principles but can also implement other patterns like GraphQL or RPC depending on requirements.

How Do You Handle Rate Limiting When Integrating APIs with Databases?

Implement exponential backoff retry strategies, respect API rate-limit headers, and use queuing mechanisms to buffer requests during high-traffic periods. Consider implementing client-side rate limiting to stay within API quotas and use caching to reduce the number of API calls required for frequently accessed data.
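
Client-side rate limiting is often implemented as a token bucket, sketched below alongside a helper that reads the standard `Retry-After` response header. The class and function names, rates, and defaults are assumptions for this example, and the helper handles only the delay-in-seconds form of the header.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self):
        """Return True and consume a token if a call is allowed right now."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def retry_delay(headers, default=1.0):
    """Seconds to wait after a rate-limited response, based on Retry-After if usable."""
    value = str(headers.get("Retry-After", "")).strip()
    return float(value) if value.isdigit() else default
```

Checking `try_acquire()` before each outgoing request keeps the client under its quota, while `retry_delay` covers the case where the server still responds with a rate-limit error.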

What Are the Security Considerations for API-Database Integrations?

Key security measures include using HTTPS for all API communications, implementing proper authentication and authorization, encrypting sensitive data both in transit and at rest, storing credentials securely in vault systems, and implementing input validation to prevent injection attacks. Regular security audits and monitoring for suspicious activity are also essential.

How Do You Ensure Data Consistency Across Multiple API-Database Integrations?

Implement transaction management patterns like the Saga pattern for distributed transactions, use timestamps or version numbers for conflict resolution, establish data validation rules at integration boundaries, and consider eventual consistency models where immediate consistency isn't required. Monitor data quality metrics to detect inconsistencies early.

What Performance Optimization Techniques Work Best for API-Database Integrations?

Optimize performance through connection pooling for database connections, implementing caching layers for frequently accessed data, using batch operations for bulk data transfers, creating appropriate database indexes for integration query patterns, and implementing asynchronous processing for non-critical operations. Regular monitoring helps identify bottlenecks and optimization opportunities.
