Data Warehouse vs Database: Key Differences and Considerations

Two of the most critical data management tools are databases and data warehouses. While both are designed to store and manage data, they differ in their approach and functionality.

In this article, we will explore the differences between a database and a data warehouse in detail. We will also discuss the benefits of a hybrid approach and the considerations for choosing the right solution.

TL;DR: Database vs Data Warehouse at a Glance

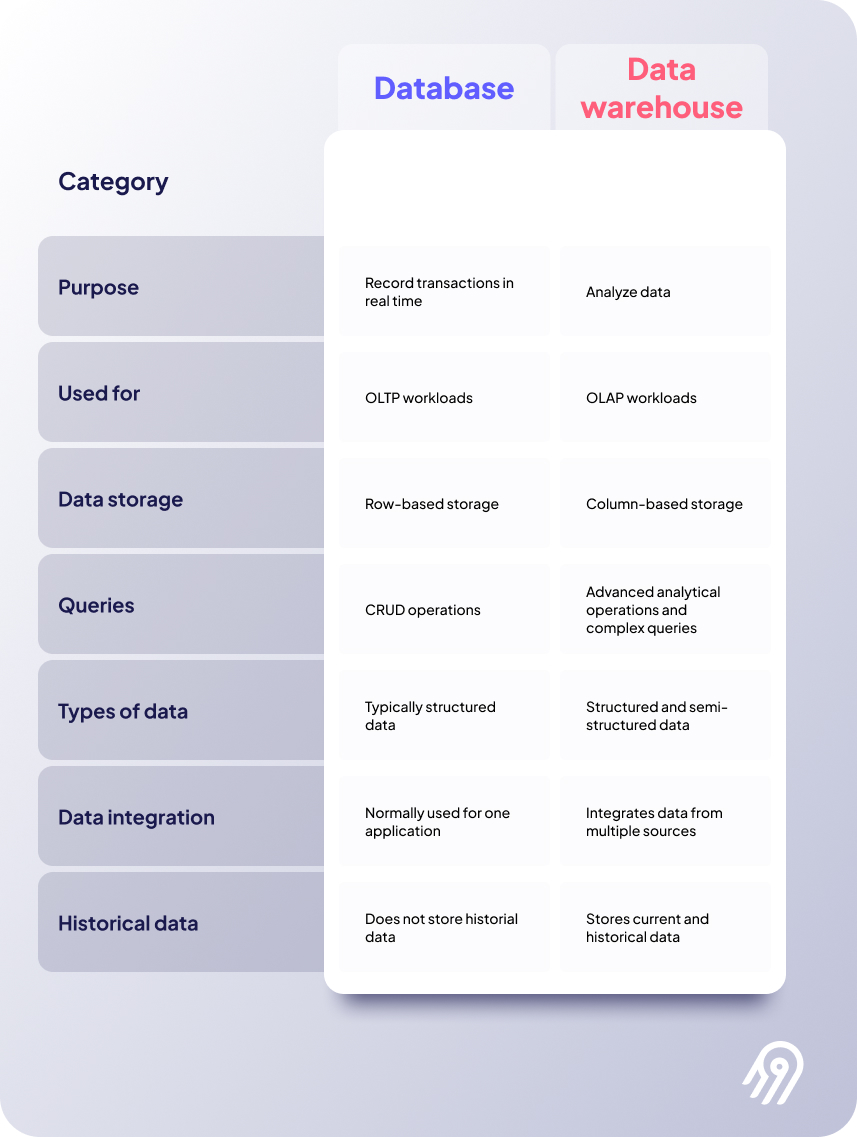

- Databases handle real-time transactional processing (OLTP) with normalized schemas for current operational data, while data warehouses optimize analytical processing (OLAP) with denormalized schemas for historical data analysis

- Databases excel at high-volume transactions with ACID compliance and millisecond response times, whereas data warehouses specialize in complex queries across petabyte-scale datasets using columnar storage

- Modern architectures increasingly blur these boundaries through HTAP systems, data lakehouses, and hybrid approaches that leverage both technologies' strengths

- Airbyte enables seamless synchronization between databases and warehouses using CDC, streaming ETL, and 600+ connectors for unified analytics

- Choose based on workload: databases for real-time operations, warehouses for analytics, or hybrid solutions for comprehensive data strategies

What Is a Database?

A database is a structured collection of data that is organized in a specific way to facilitate easy access, retrieval, and processing. It stores current data used by an application.

Databases store and process transactional data generated in real time by an organization's daily operations. This is known as OLTP (Online Transaction Processing).

Different types of database systems serve varying use cases. The most common type is the relational database management system (RDBMS), which stores data in tables where each row represents a single record and each column represents a field or attribute of that record. Other types include NoSQL databases, distributed databases, and NewSQL databases.

Key Features of Databases

- ACID properties: Databases adhere to the ACID properties (Atomicity, Consistency, Isolation, Durability), ensuring every transaction is processed reliably and consistently.

- Focus on OLTP: Designed for OLTP workloads to store, retrieve, and query transactional data.

- Schema-based organization: Most databases use a normalized schema design to minimize redundancy and ensure consistency.

- SQL as a query language: SQL is the standard querying language.

- Real-time processing capabilities: Modern databases support streaming data ingestion and real-time analytics through in-memory processing and columnar indexing.

- Cloud-native scaling: Contemporary database systems offer elastic scaling through read replicas, auto-scaling, and serverless configurations.
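
To make the ACID bullet above concrete, here is a minimal sketch of atomicity using Python's built-in `sqlite3` module. The `accounts` table, the `transfer` helper, and the balances are hypothetical and chosen only for illustration; a production OLTP system would use a server database, but the commit-or-roll-back behavior is the same idea.

```python
import sqlite3

# In-memory database standing in for an operational (OLTP) store.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id INTEGER PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one transaction; return True on success."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; the credit above is undone too.
        return False


ok = transfer(conn, 1, 2, 30)       # valid transfer
failed = transfer(conn, 1, 2, 500)  # would overdraw account 1
balances = dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
print(ok, failed, balances)
```

The second transfer credits the destination before the debit fails, yet neither change survives. That all-or-nothing behavior is what the atomicity property refers to.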

Pros and Cons of Databases

Pros

- Real-time data processing for immediate consistency

- ACID compliance ensures data integrity

- Efficient for high volumes of small read/write operations

- Optimized for point queries and range scans on indexed columns

- Modern databases support analytical workloads through hybrid architectures

- Cloud-native deployments offer cost-effective scaling and management

Cons

- Limited scalability for very large datasets

- Not optimized for complex analytical queries

- Vertical scaling can be expensive and has physical limits

- Schema changes can be difficult and may require downtime

- Performance degrades with increasing concurrent analytical workloads

- Storage costs can be high due to index overhead

Popular Databases

- MySQL: Free, open-source RDBMS that supports ACID transactions, foreign keys, and stored procedures.

- PostgreSQL: Open-source RDBMS known for customization and extensibility.

- Oracle Database: High-performance RDBMS commonly used for transactional systems.

- Microsoft SQL Server: RDBMS used for transaction processing, BI, and analytics.

- TiDB: Modern distributed SQL database that supports both OLTP and OLAP workloads through HTAP architecture.

What Is a Data Warehouse?

A data warehouse is a large, centralized data repository that supports business intelligence (BI) activities such as reporting, analysis, and decision-making. It stores massive amounts of current and historical data from different applications and sources.

A data warehouse facilitates Online Analytical Processing (OLAP). It is optimized for complex querying and analysis of large datasets to identify trends that inform strategic decision-making.

Data is extracted from various sources, transformed, and loaded into the warehouse through data pipelines. Modern data warehouses increasingly support real-time data ingestion through streaming ETL pipelines and change data capture (CDC) technologies.

Key Features of Data Warehouses

- Focus on OLAP: Supports OLAP workloads and provides fast query response times.

- Optimized for large-scale storage & retrieval: Employs a star schema or snowflake schema to organize data for efficient querying.

- Data integration from multiple sources: Central destination where data is extracted, transformed, and loaded.

- Support for complex queries and aggregations: Enables in-depth analysis of large-scale datasets.

- Columnar storage optimization: Modern warehouses use columnar storage formats that enable extreme compression and rapid analytical scans.

- Cloud-native architectures: Separation of compute and storage allows independent scaling and cost optimization.

- AI and machine learning integration: Native integration with ML frameworks enables predictive modeling and automated analytics.
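
The columnar storage bullet above can be illustrated with a small pure-Python sketch. It is not how any particular warehouse implements storage; the sample rows and the `run_length_encode` helper are assumptions made for the example. It shows why an aggregate only needs to touch one column, and why a column of repeated values compresses well.

```python
from itertools import groupby

# Row-oriented layout: each record is stored together (typical for OLTP).
rows = [
    (1, "EU", 120),
    (2, "EU", 80),
    (3, "EU", 50),
    (4, "US", 200),
    (5, "US", 30),
]

# Column-oriented layout: each attribute is stored contiguously (typical for OLAP).
columns = {
    "order_id": [r[0] for r in rows],
    "region": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
}

# A row store has to walk every record to sum one field...
total_from_rows = sum(r[2] for r in rows)
# ...while a column store reads only the "amount" column.
total_from_columns = sum(columns["amount"])


def run_length_encode(values):
    """Collapse runs of equal adjacent values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Repeated values in a column collapse into a few pairs.
encoded_region = run_length_encode(columns["region"])
print(total_from_rows, total_from_columns, encoded_region)
```

Real columnar formats combine several encodings, but the principle is the same: similar values stored next to each other are cheap to scan and to compress.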

Pros and Cons of Data Warehouses

Pros

- Optimized for complex analytical queries on large datasets

- Efficient storage of historical data

- Scalable to handle petabytes through distributed architectures

- Fast aggregations and multi-dimensional analysis

- High-throughput, parallel query processing

- Cost-effective for large-scale analytics

- Modern warehouses support real-time analytics and streaming data

- Built-in machine learning capabilities for predictive insights

Cons

- Not suitable for high-volume transactional processing

- Initial setup and data modeling can be complex

- Potential for data silos if not properly integrated

- May require specialized administration skills

- Traditional warehouses have limited real-time capabilities

Popular Data Warehouses

- Amazon Redshift: Cloud data warehouse for petabyte-scale workloads.

- Google BigQuery: Google Cloud Platform's fully managed warehouse for rapid analytics.

- Snowflake: Cloud data platform for structured and semi-structured data.

- Microsoft Azure Synapse Analytics: Scalable warehouse integrated with Azure ecosystem.

- Databricks: Unified analytics platform combining data warehousing with machine learning capabilities.

How Do Database and Data Warehouse Architectures Compare?

The main difference between a data warehouse and a database is that a data warehouse is optimized for analytical queries and stores historical data for reporting, while a database is designed for transactional processing and manages real-time operational data.

Purpose and Workloads

Databases are primarily designed for OLTP (Online Transaction Processing) workloads. They excel at handling high-volume, real-time transactional operations such as:

- Customer order processing

- Inventory management

- Financial transactions

- User authentication and session management

- Real-time data updates and modifications

Data Warehouses are optimized for OLAP (Online Analytical Processing) workloads. They specialize in:

- Complex analytical queries across large datasets

- Historical data analysis and trend identification

- Business intelligence and reporting

- Multi-dimensional analysis and data mining

- Strategic decision support through aggregated insights

Data Storage and Organization

Databases typically use normalized schemas designed to minimize data redundancy and ensure consistency. This approach:

- Reduces storage requirements through elimination of duplicate data

- Maintains data integrity through referential constraints

- Optimizes for frequent insert, update, and delete operations

- Uses row-based storage optimized for transactional access patterns

Data Warehouses employ dimensional schemas, typically a denormalized star schema or a snowflake schema whose dimensions are partly normalized, that:

- Optimize query performance through pre-joined data structures

- Enable fast aggregations and multi-dimensional analysis

- Support historical data storage with slowly changing dimensions

- Use columnar storage formats for analytical query acceleration
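
As a rough illustration of the star schema mentioned above, the sketch below builds one fact table and two dimension tables in an in-memory SQLite database and runs a typical roll-up query. All table names, column names, and figures are hypothetical; SQLite is used only because it ships with Python, not because it is a warehouse engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables describe the "who/what/when" of each fact.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

    -- The fact table holds measures plus a key into every dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key INTEGER REFERENCES dim_date(date_key),
        quantity INTEGER,
        revenue INTEGER
    );

    INSERT INTO dim_product VALUES
        (1, 'Laptop', 'Hardware'), (2, 'Mouse', 'Hardware'), (3, 'IDE license', 'Software');
    INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
    INSERT INTO fact_sales VALUES
        (1, 20240101, 2, 2000),
        (2, 20240101, 5, 100),
        (3, 20240101, 1, 300),
        (1, 20240201, 1, 1000),
        (3, 20240201, 4, 1200);
    """
)

# Typical analytical roll-up: revenue by month and product category.
report = conn.execute(
    """
    SELECT d.year, d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    GROUP BY d.year, d.month, p.category
    ORDER BY d.year, d.month, p.category
    """
).fetchall()
for line in report:
    print(line)
```

Every dimension is one join away from the fact table, which keeps analytical queries short and predictable compared with navigating a fully normalized operational schema.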

Performance and Scalability

Databases prioritize:

- Low-latency responses for individual transactions (milliseconds)

- High concurrent user support for operational applications

- Consistent performance under varying load conditions

- Vertical scaling through hardware upgrades, though modern systems support horizontal scaling

Data Warehouses focus on:

- High-throughput processing for large-scale analytical queries

- Parallel query execution across distributed architectures

- Scalability to petabyte-scale datasets through cloud-native architectures

- Elastic resource allocation that scales compute and storage independently

Data Integration and Transformation

Databases handle data integration through:

- Real-time data ingestion from applications and external sources

- Minimal transformation to preserve operational data integrity

- Direct application integration through APIs and connectors

- Change data capture (CDC) for real-time replication

Data Warehouses manage integration via:

- ETL/ELT processes that extract, transform, and load data from multiple sources

- Data cleansing and standardization to ensure analytical quality

- Historical data consolidation from various operational systems

- Schema evolution management to handle changing data structures

Query Capabilities

Databases excel at:

- Simple queries with point lookups and range scans

- Join operations across normalized tables

- Transactional consistency through ACID properties

- Real-time query responses for operational applications

Data Warehouses specialize in:

- Complex analytical queries with multiple joins and aggregations

- Window functions for time-series analysis

- Advanced analytics including statistical functions and machine learning

- Query optimization through materialized views and pre-aggregated data
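
The contrast between the two query styles above can be sketched with a single hypothetical `orders` table: a point lookup by primary key, as an operational application would issue, next to a running total computed with a window function, as an analyst might. This assumes the SQLite bundled with Python is version 3.25 or later, which is when window functions were added.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, day TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "2024-01-01", 100), (2, "2024-01-02", 40), (3, "2024-01-03", 60)],
)

# OLTP-style point lookup: fetch one record by its primary key.
one_order = conn.execute("SELECT amount FROM orders WHERE id = ?", (2,)).fetchone()

# OLAP-style query: a running total over time using a window function.
running_totals = conn.execute(
    """
    SELECT day, amount, SUM(amount) OVER (ORDER BY day) AS running_total
    FROM orders
    ORDER BY day
    """
).fetchall()

print(one_order)
print(running_totals)
```

The lookup touches a single row through the primary-key index, while the window query has to read the whole table in order, which is the access pattern warehouses are built to accelerate.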

Data Structure

Databases maintain:

- Normalized data structures that eliminate redundancy

- Referential integrity through foreign key constraints

- Current operational data with limited historical context

- Transactional consistency across related data modifications

Data Warehouses organize:

- Denormalized structures optimized for analytical queries

- Historical data preservation with time-variant dimensions

- Data from multiple sources consolidated into unified schemas

- Aggregated and summarized data for faster query performance

Data Volume and Scalability

Databases handle:

- Moderate to large datasets focused on current operational needs

- Frequent small transactions rather than bulk operations

- Scaling challenges with very large datasets, because queries over normalized tables require many joins

- Performance optimization through indexing and query tuning

Data Warehouses manage:

- Massive datasets spanning years of historical information

- Bulk data operations through batch and streaming processing

- Petabyte-scale storage through distributed architectures

- Automatic scaling in cloud environments based on workload demands

Data Freshness and Update Frequency

Databases provide:

- Real-time data updates as transactions occur

- Immediate consistency for operational applications

- High-frequency modifications throughout business operations

- Current data state reflecting the latest business activities

Data Warehouses offer:

- Periodic data updates through scheduled ETL processes

- Historical data preservation with time-stamped records

- Batch processing for data integration and transformation

- Eventually consistent data across distributed environments

Modern data warehouses increasingly support real-time updates through streaming architectures and change data capture technologies.

Schema Flexibility

Databases require:

- Predefined schemas with strict structure enforcement

- Schema evolution challenges that may require downtime

- Referential integrity maintenance across schema changes

- Application coordination for schema modifications

Data Warehouses provide:

- Schema-on-read flexibility for semi-structured data

- Easier schema evolution for analytical requirements

- Support for multiple data formats, including JSON and XML

- Adaptive schemas that evolve with business needs
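
Schema-on-read, mentioned in the list above, means the stored data stays raw and a schema is applied only when it is queried. The following is a minimal sketch of that idea in plain Python; the event payloads, the two schema dictionaries, and the `read_with_schema` helper are hypothetical.

```python
import json

# Raw, semi-structured records stored as-is; the second one has an extra field.
raw_events = [
    '{"user": "a", "amount": 5}',
    '{"user": "b", "amount": 7, "coupon": "X1"}',
]


def read_with_schema(raw, fields):
    """Parse one raw record and project it onto `fields` (name -> default value)."""
    record = json.loads(raw)
    return {name: record.get(name, default) for name, default in fields.items()}


# The original reader only knows about two fields.
schema_v1 = {"user": None, "amount": 0}
# A later reader adds "coupon" without rewriting any stored data.
schema_v2 = {"user": None, "amount": 0, "coupon": None}

view_v1 = [read_with_schema(raw, schema_v1) for raw in raw_events]
view_v2 = [read_with_schema(raw, schema_v2) for raw in raw_events]
print(view_v1)
print(view_v2)
```

Because the schema lives in the reader, adding a field is a change to the query side only, which is why schema evolution tends to be easier here than in a schema-on-write transactional database.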

Concurrency

Databases support:

- High concurrent users for operational applications

- Locking mechanisms to maintain transactional consistency

- Real-time conflict resolution during simultaneous updates

- Multi-version concurrency control for read consistency

Data Warehouses handle:

- Concurrent analytical users accessing historical data

- Read-heavy workloads with occasional bulk updates

- Query queuing and resource management for complex analyses

- Parallel processing across distributed compute resources

Cost Considerations

Databases involve:

- Licensing costs for enterprise database systems

- Infrastructure expenses for high-performance hardware

- Operational costs for maintenance and administration

- Scaling costs that increase with data volume and user load

Data Warehouses incur:

- Cloud-based pricing models with pay-as-you-go options

- Storage costs for historical data retention

- Compute costs for analytical processing

- Lower operational overhead through managed services

How Do Enterprise Security and Governance Requirements Shape Database vs Data Warehouse Decisions?

Enterprise security and governance requirements play a critical role in determining the optimal balance between databases and data warehouses, as each system presents distinct security challenges and governance opportunities that must align with organizational compliance frameworks.

Security Architecture Considerations

Database security prioritizes real-time protection through encryption and access controls that safeguard operational data at the point of creation. Transactional systems require immediate consistency checks and atomic transaction processing, demanding granular row-level locking mechanisms and continuous monitoring to prevent security breaches during high-velocity operations. Modern databases implement field-level encryption for active records and robust authentication systems that integrate with enterprise identity management platforms.

Data warehouses present different security challenges due to their analytical nature and multi-source data integration requirements. These systems demand comprehensive data lineage tracking and cross-source policy enforcement to protect consolidated information assets. Warehouse environments face greater risks at integration points where heterogeneous datasets merge, requiring robust anomaly detection and metadata auditing to identify compromised pipelines. The analytical query patterns typical of warehouses also generate extensive metadata that is itself a security asset: deviations from normal access patterns can reveal threats early.

Governance Framework Implementation

Data governance frameworks must accommodate the distinct characteristics of each system while maintaining unified policy enforcement across the enterprise data landscape. Role-based access control implementation diverges significantly between systems: databases employ detailed schema-level privileges aligned with transactional roles, while warehouses implement business-domain aligned access through attribute-based controls that evaluate multiple factors including user department, query sensitivity, and dataset freshness.

The governance challenge intensifies in warehouse environments due to multi-source integration, necessitating advanced metadata management and specialized stewardship roles. Warehouse governance requires establishing data contracts between producers and consumers, defining expected schemas, quality metrics, and service levels that span across multiple operational systems feeding into analytical environments.

Compliance Integration Strategies

Regulatory compliance requirements fundamentally shape database vs data warehouse selection based on specific industry mandates and jurisdictional requirements. GDPR compliance requires different approaches: databases implement purpose-based access restrictions at record creation through consent metadata embedding, while warehouses establish automated data minimization workflows that purge non-essential attributes after analytical transformations.

Financial standards like SOX demand transaction-level controls where databases implement dual-approval workflows for material entries, complemented by warehouses containing segregated journal repositories with write-once characteristics preventing retrospective modification. Healthcare compliance under HIPAA necessitates specialty implementations where databases enforce strict protected health information access logging with user-contextual queries, while warehouses implement pseudonymization bridges allowing research analysis without full de-anonymization.

Unified Security Strategies

Organizations increasingly adopt integrated security strategies that leverage the complementary strengths of both systems while addressing their individual vulnerabilities. Database access systems federate authentication to warehouse environments, while data classification schemas propagate from transactional systems to analytical models. Advanced implementations feature bidirectional control synchronization where warehouse sensitivity analysis informs database masking requirements, while database access patterns shape warehouse row-level security models.

The convergence of security controls creates opportunities for enhanced threat detection through correlation of operational database events with analytical warehouse access patterns. This integrated approach enables identification of sophisticated attacks that might span both transactional and analytical environments, providing comprehensive security coverage across the complete data lifecycle.

What Role Do Modern Data Integration Methodologies Play in Bridging Database and Data Warehouse Environments?

Modern data integration methodologies have evolved beyond traditional batch processing approaches to provide sophisticated frameworks that seamlessly connect database and data warehouse environments while addressing contemporary challenges around real-time analytics, data quality, and governance.

Real-Time Integration Through Change Data Capture

Change data capture represents a fundamental shift from batch-oriented integration to continuous synchronization between transactional databases and analytical warehouses. Modern CDC implementations capture insert, update, and delete operations at the database transaction log level, streaming these changes to target warehouses through message queues with minimal latency. This approach enables operational analytics requiring near-real-time data while minimizing performance impact on source database systems.
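
The replay step of CDC can be sketched as follows. The event format here (`op`, `key`, `row`) is a simplified, hypothetical one and does not match the wire format of any specific CDC tool; the point is only that applying inserts, updates, and deletes in log order reproduces the source table's state on the target.

```python
def apply_change(replica, event):
    """Apply one change event to `replica`, a dict mapping primary key -> row."""
    op = event["op"]
    key = event["key"]
    if op in ("insert", "update"):
        # Merge changed columns into the existing row (or create the row).
        replica[key] = {**replica.get(key, {}), **event["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


# Hypothetical change stream, in the order the source database logged it.
change_log = [
    {"op": "insert", "key": 1, "row": {"status": "new", "total": 50}},
    {"op": "insert", "key": 2, "row": {"status": "new", "total": 20}},
    {"op": "update", "key": 1, "row": {"status": "paid"}},
    {"op": "delete", "key": 2},
]

replica = {}
for event in change_log:
    apply_change(replica, event)

print(replica)
```

Ordering matters: applying the same events out of sequence could resurrect a deleted row, which is one reason CDC pipelines typically read from the transaction log and route changes through an ordered message queue.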

Advanced ELT and Data Transformation Paradigms

The evolution from traditional ETL to ELT methodologies has fundamentally changed how organizations approach database and warehouse integration. ELT paradigms leverage the computational power of modern cloud data warehouses to perform transformations after loading raw data, preserving complete datasets for exploratory analysis while enabling on-demand transformation for specific analytical use cases.
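
A small sketch of the ELT pattern described above: raw values are loaded untouched into a staging table, and the cleanup happens afterwards with SQL inside the target system. An in-memory SQLite database stands in for the warehouse, and the table names and sample values are hypothetical.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the source data as-is, including messy values.
warehouse.execute("CREATE TABLE raw_orders (id TEXT, country TEXT, amount TEXT)")
raw_rows = [("1", " de ", "19.90"), ("2", "US", "5.00"), ("3", "us", "12.50")]
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: run inside the warehouse, after loading, using its own SQL engine.
warehouse.execute(
    """
    CREATE TABLE orders_clean AS
    SELECT
        CAST(id AS INTEGER) AS id,
        UPPER(TRIM(country)) AS country,
        CAST(ROUND(CAST(amount AS REAL) * 100) AS INTEGER) AS amount_cents
    FROM raw_orders
    """
)

by_country = warehouse.execute(
    "SELECT country, SUM(amount_cents) FROM orders_clean GROUP BY country ORDER BY country"
).fetchall()
raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(by_country, raw_count)
```

The raw table is still available after the transformation, so a different cleanup rule can be applied later without re-extracting from the source.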

Data Virtualization and Federation Strategies

Data virtualization creates abstraction layers that present distributed database and warehouse sources as unified entities without requiring physical data consolidation. This approach enables real-time access to current operational data while supporting complex analytical queries that span multiple systems, bridging the traditional gap between transactional and analytical environments.
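
As a very small-scale analogy for data virtualization, SQLite's `ATTACH DATABASE` lets one connection query two separate databases in a single statement. The sketch below treats the main database as the operational store and an attached one as the warehouse; the table names and data are hypothetical, and real virtualization layers federate across different engines rather than two SQLite files.

```python
import sqlite3

# Main database plays the operational store; the attached one plays the warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS warehouse")

conn.executescript(
    """
    CREATE TABLE main.orders (id INTEGER PRIMARY KEY, day TEXT, amount INTEGER);
    CREATE TABLE warehouse.orders_history (id INTEGER, day TEXT, amount INTEGER);

    INSERT INTO main.orders VALUES (101, '2024-06-01', 70);
    INSERT INTO warehouse.orders_history VALUES
        (1, '2023-06-01', 40),
        (2, '2023-12-01', 90);
    """
)

# One query spans both stores; no rows are copied from one to the other.
combined = conn.execute(
    """
    SELECT source, SUM(amount) AS total FROM (
        SELECT 'live' AS source, amount FROM main.orders
        UNION ALL
        SELECT 'history' AS source, amount FROM warehouse.orders_history
    )
    GROUP BY source
    ORDER BY source
    """
).fetchall()
grand_total = sum(total for _, total in combined)
print(combined, grand_total)
```

The caller sees one query interface while the data stays where it lives, which is the core trade-off of virtualization: no data movement, at the cost of query performance that depends on every underlying source.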

Hybrid Architecture Integration Patterns

Modern integration methodologies increasingly support hybrid architectures that leverage the specialized capabilities of both databases and data warehouses within unified data processing pipelines. These patterns typically implement streaming data capture from operational databases combined with batch analytical processing in warehouses, creating end-to-end data flows that support both real-time operational requirements and complex analytical use cases.

How Do AI and Automation Impact Database and Data Warehouse Selection?

Artificial intelligence and automation are fundamentally transforming how organizations approach database vs data warehouse selection by introducing capabilities that were previously impossible or required significant manual intervention.

AI-Driven Query Optimization and Performance Management

Machine learning algorithms analyze query execution plans, data distribution patterns, and resource utilization to automatically optimize performance without human intervention.

Automated Data Management and Governance

AI automation transforms data management tasks that traditionally required significant manual effort and specialized expertise, automatically classifying sensitive data and maintaining compliance.

Embedded Machine Learning and Predictive Analytics

The integration of machine learning capabilities directly within database and data warehouse systems eliminates the need for separate analytical platforms and enables real-time predictive analytics.

Intelligent Data Integration and Pipeline Management

AI-powered data integration platforms automatically discover data sources, map schema relationships, and maintain synchronization across database and data warehouse environments.

Real-Time Decision Intelligence

The convergence of AI and real-time processing enables decision intelligence systems that combine the transactional capabilities of databases with the analytical power of data warehouses.

What Are the Benefits of Hybrid Approaches Combining Databases and Data Warehouses?

Hybrid systems leverage the strengths of both databases and data warehouses to create flexible, scalable data architectures that address the limitations of single-system approaches. Common patterns include:

- Data mart: A departmental subset of a data warehouse optimized for specific business functions.

- Hybrid cloud solutions: Mix on-premises databases with cloud warehouses to balance security, cost, and performance requirements.

- Data virtualization: Access data from multiple sources as if stored in a single location, enabling unified querying without data movement.

- HTAP architectures: Combine transactional processing with analytical capabilities in unified platforms.

Benefits of Using Both

- Performance – Specialized systems handle workloads they are optimized for.

- Scalability – Transactional and analytical workloads can scale independently.

- Flexibility – Architectures adapt to changing business requirements.

- Cost optimization – Resources are allocated cost-effectively.

- Improved data quality – Unified management enables better governance.

- Real-time analytics – Immediate insights on operational data.

Examples of Hybrid Solutions

- Microsoft Azure Cosmos DB

- Google Cloud Spanner

- Amazon Aurora

- Snowflake (through integrations)

- Databricks Lakehouse

Conclusion

Databases and data warehouses serve distinct yet complementary purposes in modern data architecture, with databases optimized for real-time transactions and data warehouses designed for analytical processing of historical data. The choice between them depends on specific business requirements, with many organizations adopting hybrid approaches that leverage the strengths of both systems. As data integration technologies advance, the boundaries between these systems continue to blur, creating opportunities for more flexible, scalable, and intelligent data management solutions.

Frequently Asked Questions

What's the core difference between a database and a data warehouse?

A database is designed for real-time transactional processing (OLTP) like order processing and customer management, using normalized schemas for current data. A data warehouse is optimized for analytical processing (OLAP), storing large volumes of historical data for reporting, trend analysis, and strategic decision-making.

When should I choose a database over a data warehouse?

Use a database when your workloads involve:

- Real-time transactions and immediate updates

- Operational applications (inventory, CRM, payments)

- Frequent read/write operations

- Applications needing strict data consistency (ACID compliance)

Why would I need a data warehouse instead?

Choose a data warehouse when your business requires:

- Complex analytical queries on historical data

- Aggregations and reporting across large datasets

- Business intelligence dashboards

- Predictive analytics and machine learning at scale

Are modern systems still strictly separated between databases and data warehouses?

No. Emerging architectures like HTAP (Hybrid Transactional/Analytical Processing), data lakehouses, and serverless cloud-native platforms increasingly blur these lines. Many organizations now combine both, using:

- Databases for real-time operations

- Data warehouses for analytics

- Hybrid systems for real-time insights and historical analysis

How do tools like Airbyte support database and data warehouse integration?

Airbyte enables real-time data movement between databases and data warehouses through:

- Over 600 pre-built connectors

- Change Data Capture (CDC) for streaming updates

- ELT pipelines for flexible transformations

This supports hybrid architectures, operational analytics, and unified data strategies without vendor lock-in.
