How to Automate Data Scraping from PDFs Using Airbyte?

✨ AI Generated Summary

PDF data scraping automates extraction of structured data from complex, unstructured PDF documents, converting them into machine-readable formats like CSV, Excel, or JSON to reduce manual entry and errors. Modern AI-powered tools improve layout detection, context-aware extraction, and adaptability to diverse document types, while platforms like Airbyte offer scalable, secure, and flexible integrations for enterprise-level PDF processing workflows.

- Challenges include document variability, OCR accuracy, and infrastructure demands.

- Key use cases span finance, legal, healthcare, and academic research.

- Airbyte provides 600+ connectors, AI-assisted setup, and supports complex layouts and multi-language documents with enterprise-grade security.

PDF data scraping is the process of turning static, document-based information into structured, machine-readable data. Instead of manually copying details from reports, invoices, or research papers, automated tools extract tables, text, and form fields directly from PDFs and either convert them into formats like CSV, Excel, or JSON or load them into databases. This shift matters because PDFs are built for readability, not structured storage: they mix headers, tables, and free-form text in ways that make automation difficult.

By applying modern extraction methods, organizations can cut down on manual entry, reduce errors, and unlock valuable insights from documents that would otherwise remain locked in static files.

What Is PDF Data Scraping and Why Does It Matter?

PDF data scraping is an automated technique for extracting semi-structured or unstructured data from PDF documents. This process transforms static document content into machine-readable formats like CSV, Excel, JSON, or direct database loads. The extracted data can then flow seamlessly into analytics and automation workflows.
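As a rough illustration of that last conversion step, the sketch below serializes a handful of already-extracted records to CSV and JSON using only the Python standard library. The record fields (`invoice_id`, `vendor`, `total`) and the helper names are hypothetical and chosen for the example; a real extraction tool would define its own schema.

```python
import csv
import io
import json

# Hypothetical records, shaped the way an extraction step might emit them.
records = [
    {"invoice_id": "INV-001", "vendor": "Acme Corp", "total": 1250.00},
    {"invoice_id": "INV-002", "vendor": "Globex", "total": 310.50},
]


def to_csv(rows):
    """Serialize a list of flat dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize the same rows to a JSON array."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```

The same list of dictionaries feeds both outputs, which is the practical point: once the data is structured, the target format is a small serialization detail.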

PDFs prioritize visual layout over structured storage, mixing headers, footers, tables, and free-form text in unpredictable ways. This design choice makes PDFs excellent for human reading but challenging for automated processing.

Effective scraping converts this complex content into structured outputs ready for downstream applications. Common use cases include financial reporting, resume parsing, scientific literature analysis, and regulatory compliance monitoring.

Organizations that successfully scrape data from PDF documents gain significant competitive advantages. They reduce manual data entry errors, accelerate processing times, and unlock insights previously trapped in static documents.

How Does Data Scraping From PDFs Actually Work?

The PDF data extraction process follows a systematic approach that transforms unstructured documents into structured data. Understanding each step helps optimize extraction accuracy and processing speed.

What Are the Key Technical Challenges When Scaling PDF Data Processing Operations?

Document Structure Variability

Even "standard" forms like invoices or contracts vary widely across organizations and time periods. Multi-column layouts, nested tables, and repeating headers create extraction complexity that simple template-based approaches cannot handle.

Different document creators use varying fonts, spacing, and alignment preferences. These variations force extraction systems to handle multiple layout patterns for seemingly identical document types.

Legacy documents often contain formatting inconsistencies that modern extraction tools struggle to process. Organizations processing historical archives face particular challenges with outdated document standards.

OCR Accuracy Limitations

Low-resolution scans, handwriting, and uncommon fonts significantly degrade OCR accuracy. At enterprise scale, even a 2% OCR error rate translates into thousands of incorrect records daily.
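To make that arithmetic concrete, here is a back-of-the-envelope sketch. It assumes the 2% figure applies per extracted field and that errors are independent across fields; the daily volume and the number of fields per document are hypothetical inputs, not measurements.

```python
def expected_ocr_errors(docs_per_day, fields_per_doc, field_error_rate):
    """Estimate daily OCR errors under an independence assumption.

    Returns a tuple of:
      - the expected number of wrong fields per day
      - the expected number of documents with at least one wrong field
    """
    wrong_fields = docs_per_day * fields_per_doc * field_error_rate
    # Probability that a document contains at least one wrong field.
    p_doc_has_error = 1 - (1 - field_error_rate) ** fields_per_doc
    return wrong_fields, docs_per_day * p_doc_has_error


# Hypothetical workload: 50,000 documents per day, 10 fields each.
wrong_fields, bad_docs = expected_ocr_errors(50_000, 10, 0.02)
```

Under these assumptions, roughly 10,000 individual fields are wrong each day, and about 18% of documents (a little over 9,000) contain at least one error, which is why a small per-field error rate still demands validation at scale.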

Scanned documents with poor contrast, skewed orientation, or background noise require preprocessing before extraction. These additional steps increase processing time and computational requirements.

Multi-language documents present additional OCR challenges. Organizations operating globally must handle various character sets and reading directions within their extraction pipelines.

Infrastructure and Performance Demands

High-volume parsing, OCR processing, and layout analysis consume significant computational resources. Organizations must balance throughput requirements against cost constraints and storage needs for raw files, extracted data, and processing logs.

Peak processing periods create resource bottlenecks that can delay critical business operations. Effective scaling requires infrastructure that handles variable workloads without performance degradation.

Data security and compliance requirements add complexity to infrastructure design. Sensitive document processing often requires specialized security controls and audit capabilities.

How Do Modern AI-Powered Solutions Enhance PDF Data Extraction Capabilities?

Recent advances in computer vision, large language models, and transformer-based document understanding have revolutionized PDF data extraction capabilities. These technologies address traditional limitations while enabling new extraction possibilities.

Layout Detection Accuracy has improved dramatically through deep learning models trained on diverse document types. Modern systems recognize complex layouts, multi-column text, and nested table structures with near-human accuracy.

Context-Aware Field Extraction leverages natural language understanding to identify relevant data based on semantic meaning rather than just position. This approach handles document variations more effectively than rule-based systems.

Confidence Scoring and Automated Validation provide quality metrics for extracted data. These systems flag uncertain extractions for human review while automatically processing high-confidence results.
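The routing logic behind that kind of review queue can be sketched in a few lines. The `ExtractedField` type, the `confidence` values, and the 0.9 threshold below are all assumptions made for illustration; real extraction systems report confidence in their own formats and the right threshold depends on the cost of an error.

```python
from dataclasses import dataclass


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical extractor


def route_by_confidence(fields, threshold=0.9):
    """Split fields into auto-accepted results and a human review queue."""
    accepted = [f for f in fields if f.confidence >= threshold]
    needs_review = [f for f in fields if f.confidence < threshold]
    return accepted, needs_review


fields = [
    ExtractedField("invoice_id", "INV-001", 0.99),
    ExtractedField("total", "1,250.00", 0.97),
    ExtractedField("vendor", "Acrne Corp", 0.62),  # likely OCR misread
]
accepted, needs_review = route_by_confidence(fields)
```

Here the two high-confidence fields pass straight through, while the low-confidence vendor name is held for a person to check.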

Few-Shot Adaptation to New Document Templates allows rapid deployment for previously unseen document types. Advanced AI models learn extraction patterns from minimal examples, reducing setup time for new use cases.

These innovations reduce manual rule writing and accelerate deployment timelines. Organizations can process new document types within days rather than the weeks or months that traditional approaches require.

How Can You Automate Data Scraping From PDFs Using Airbyte?

Airbyte offers 600+ connectors and built-in document processing capabilities that simplify PDF data extraction workflows. The platform's document processing features automatically handle common extraction challenges while providing flexibility for custom requirements.

Below is a practical guide for scraping PDFs stored in Azure Blob Storage and loading the results into Google Sheets. This workflow demonstrates Airbyte's end-to-end document processing capabilities.

Step 1: Configure the Source Connection

Navigate to Sources → New source and select Azure Blob Storage from the connector library. Provide your container details and authentication credentials to establish the connection.

Enable the Document File Type Format option to activate automatic field extraction from PDF documents. This feature uses Airbyte's built-in document processing engine to identify and extract structured data from your PDF files.

Configure any specific parsing parameters based on your document types. Advanced options include OCR settings for scanned documents and field mapping preferences for consistent output formatting.

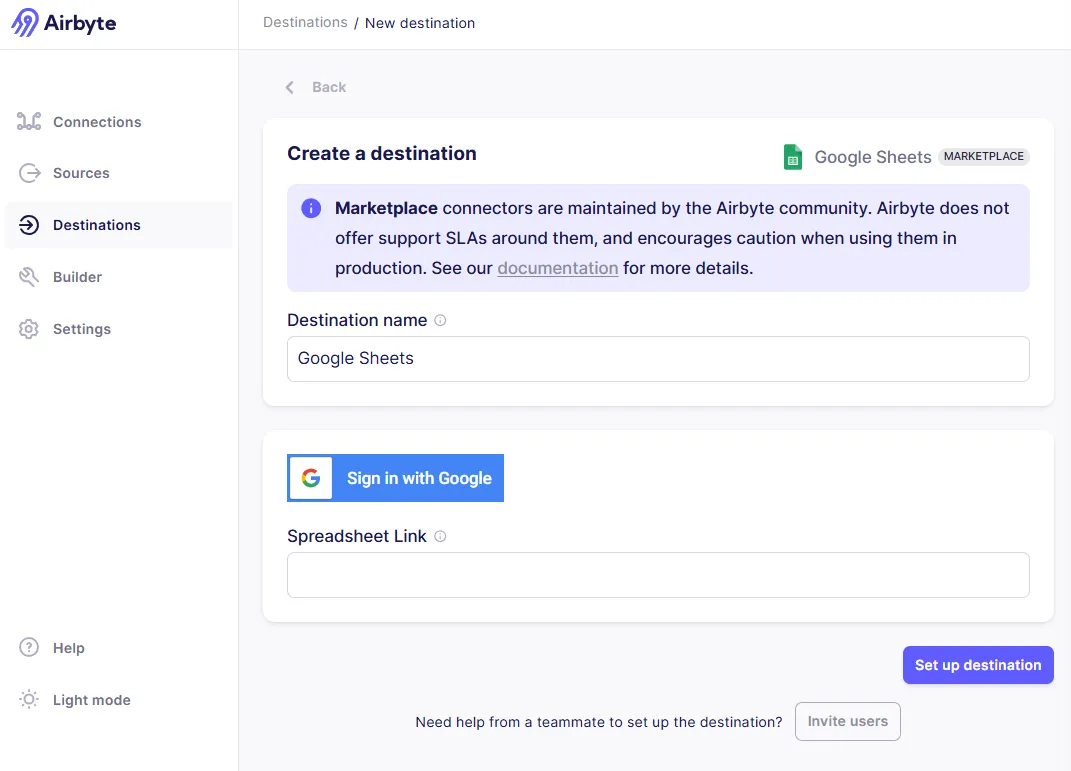

Step 2: Configure Google Sheets as Destination

Navigate to Destinations → New destination and select Google Sheets from the available options. Click Sign in with Google to complete OAuth authentication and grant Airbyte access to your Google Workspace.

Paste the target spreadsheet link and select the specific worksheet for data loading.

Complete the destination setup by clicking Set up destination. Airbyte validates the connection and confirms write permissions to your specified Google Sheets location.

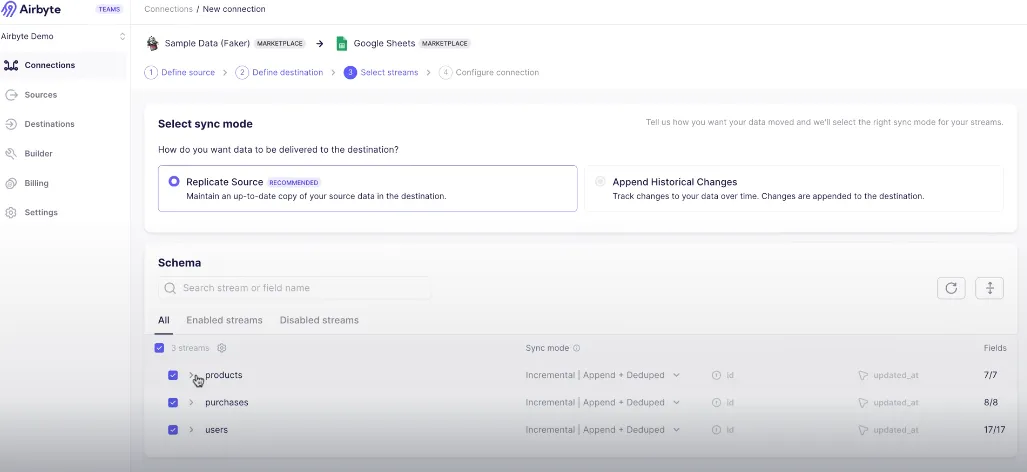

Step 3: Configure the Connection and Sync Settings

Open Connections → New connection to link your configured source and destination. Select the Azure Blob Storage source and Google Sheets destination from your available options.

Choose the appropriate sync mode based on your requirements. Full Refresh reprocesses all documents on each sync, while Incremental handles only new or modified files. CDC (change data capture) provides near-real-time change detection for supported sources, which are typically databases rather than file stores such as Azure Blob Storage.
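The difference between reprocessing everything and picking up only new or modified files can be sketched conceptually as follows. This is not Airbyte's implementation; the `select_files` function, the mode names, and the cursor handling are assumptions used to illustrate the idea of filtering on a last-modified timestamp.

```python
from datetime import datetime, timezone


def select_files(files, mode, cursor=None):
    """Pick the files to process on this sync and compute the next cursor.

    files:  list of (path, last_modified) tuples
    mode:   "full_refresh" or "incremental"
    cursor: last_modified of the newest file seen on a previous sync
    """
    if mode not in ("full_refresh", "incremental"):
        raise ValueError(f"unknown sync mode: {mode}")
    if mode == "full_refresh" or cursor is None:
        selected = list(files)
    else:
        # Incremental: only files modified after the saved cursor.
        selected = [(path, modified) for path, modified in files if modified > cursor]
    # Keep the old cursor when nothing new was selected.
    new_cursor = max((modified for _, modified in selected), default=cursor)
    return selected, new_cursor


def utc(year, month, day):
    return datetime(year, month, day, tzinfo=timezone.utc)


# Hypothetical container listing.
files = [
    ("invoices/a.pdf", utc(2024, 1, 1)),
    ("invoices/b.pdf", utc(2024, 1, 5)),
    ("invoices/c.pdf", utc(2024, 1, 9)),
]

full, _ = select_files(files, "full_refresh")
changed, next_cursor = select_files(files, "incremental", cursor=utc(2024, 1, 4))
```

With a saved cursor of January 4, the incremental pass selects only `b.pdf` and `c.pdf` and advances the cursor to January 9, while the full refresh selects all three files every time.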

Select the specific data streams to replicate and configure sync frequency according to your business needs. Common options include hourly processing for time-sensitive documents or daily batch processing for routine operations.

Add field mappings and transformations as needed to align extracted data with your destination schema. Click Set up connection to initialize the pipeline and begin monitoring extraction performance.
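In code terms, a field mapping amounts to renaming source keys and casting values to the types the destination expects. The sketch below shows the idea in plain Python; the source labels, destination column names, and the `apply_mapping` helper are hypothetical and do not represent Airbyte's mapping feature.

```python
# Hypothetical mapping from labels found in the PDF to destination columns.
FIELD_MAP = {
    "Invoice No": "invoice_id",
    "Vendor Name": "vendor",
    "Total Due": "total",
}


def parse_amount(text):
    """Turn a currency string such as '$1,250.00' into a float."""
    return float(text.replace("$", "").replace(",", ""))


# Optional per-column casts, keyed by destination column name.
CASTS = {"total": parse_amount}


def apply_mapping(record, field_map, casts=None):
    """Rename mapped fields, cast where requested, and drop unmapped fields."""
    casts = casts or {}
    mapped = {}
    for source_name, dest_name in field_map.items():
        if source_name in record:
            value = record[source_name]
            mapped[dest_name] = casts[dest_name](value) if dest_name in casts else value
    return mapped


extracted = {
    "Invoice No": "INV-001",
    "Vendor Name": "Acme Corp",
    "Total Due": "$1,250.00",
    "Page": "1 of 2",  # not in the mapping, so it is dropped
}
row = apply_mapping(extracted, FIELD_MAP, CASTS)
```

Dropping unmapped fields by default keeps layout noise such as page counters out of the destination sheet.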

For complex data modeling requirements, integrate Airbyte with dbt to apply sophisticated transformations and business logic to your extracted PDF data.

What Are the Primary Use Cases for Data Scraping From PDFs?

Organizations across industries rely on PDF data extraction to automate manual processes and unlock insights from document-based workflows. The following use cases demonstrate the breadth of applications for automated PDF processing.

Finance and Accounting Operations

Automated Invoice Processing extracts vendor information, line items, taxes, and payment terms from supplier invoices. This automation reduces accounts payable processing time while improving accuracy and enabling better cash flow management.
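For a sense of what this looks like on text that has already been pulled out of a PDF, here is a minimal rule-based sketch. The labels in the sample invoice and the regular expressions are assumptions; real invoices vary widely, which is exactly why production systems layer layout analysis and AI-based extraction on top of rules like these.

```python
import re

# Hypothetical patterns for one invoice layout; other layouts need other rules.
INVOICE_PATTERNS = {
    "invoice_id": re.compile(r"Invoice[ \t]*(?:No\.?|#)[ \t]*:?[ \t]*([A-Z0-9-]+)", re.IGNORECASE),
    "vendor": re.compile(r"\bVendor[ \t]*:[ \t]*(.+)", re.IGNORECASE),
    # \b prevents a match inside "Subtotal".
    "total": re.compile(r"\bTotal(?:[ \t]+Due)?[ \t]*:[ \t]*\$?([\d,]+\.\d{2})", re.IGNORECASE),
    "payment_terms": re.compile(r"\bTerms[ \t]*:[ \t]*(.+)", re.IGNORECASE),
}


def extract_invoice_fields(text):
    """Return a dict of matched fields; a missing field maps to None."""
    result = {}
    for name, pattern in INVOICE_PATTERNS.items():
        match = pattern.search(text)
        result[name] = match.group(1).strip() if match else None
    if result["total"] is not None:
        result["total"] = float(result["total"].replace(",", ""))
    return result


sample_text = """Invoice No: INV-2024-0042
Vendor: Acme Corp
Subtotal: $1,150.00
Tax: $100.00
Total Due: $1,250.00
Terms: Net 30
"""

invoice = extract_invoice_fields(sample_text)
```

Returning `None` for a field that did not match, rather than raising, makes it easy to flag incomplete invoices for review instead of failing the whole batch.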

Bank Statement Analysis categorizes transactions automatically and reconciles account balances across multiple financial institutions. Organizations use this capability for expense reporting, budget analysis, and financial planning workflows.

Loan Application Processing pulls income documentation, tax returns, and credit information to accelerate approval decisions. Financial institutions process applications faster while maintaining compliance with regulatory requirements.

Legal Document Management

Contract Analysis and Management surfaces key clauses, critical dates, and contractual obligations from legal agreements. Law firms and corporate legal departments use this capability to manage contract portfolios and identify compliance requirements.

E-Discovery and Document Review automates evidence compilation during legal proceedings. Legal teams process large document volumes more efficiently while ensuring comprehensive review coverage.

Regulatory Compliance Monitoring tracks obligations and requirements across regulatory filings and government publications. Organizations maintain compliance by automatically identifying relevant regulatory changes.

Healthcare Information Systems

Patient Records Management consolidates diagnostic reports, treatment summaries, and insurance documentation from multiple healthcare providers. This integration improves care coordination while reducing administrative overhead.

Insurance Claims Processing accelerates claim validation by automatically extracting relevant medical information and billing codes. Healthcare organizations reduce processing delays and improve patient satisfaction.

Medical Research Data Collection mines scientific literature for treatment outcomes and clinical indicators. Researchers access broader data sets while reducing manual literature review time.

Academic and Research Applications

Scientific Literature Analysis supports systematic reviews and meta-analyses by extracting key findings and methodologies from research publications. Academic institutions accelerate research timelines while improving analysis comprehensiveness.

Citation Management extracts references and author networks from academic papers to build comprehensive bibliographic databases. Researchers track citation patterns and identify collaboration opportunities.

Patent Analysis evaluates patent claims and technology trends across large patent databases. Organizations make informed innovation decisions based on comprehensive intellectual property analysis.

Why Should You Use Airbyte to Automate Data Scraping From PDFs?

Airbyte provides comprehensive PDF data extraction capabilities within a modern data integration platform designed for enterprise scale and flexibility. The platform addresses common extraction challenges while providing the infrastructure needed for production deployments.

Conclusion

Automating PDF data scraping unlocks valuable insights trapped in documents while eliminating manual effort and reducing error rates. Combining AI-driven extraction with Airbyte's flexible integration platform enables organizations to process documents at scale, feed data into analytics pipelines, and power generative AI search experiences.

To experience these capabilities firsthand, try Airbyte free for 14 days and see how automated PDF processing can transform your document-based workflows.

Frequently Asked Questions

What types of PDF documents work best with automated scraping?

Digital PDFs with consistent formatting and clear text work best for automated scraping. Documents with standard layouts like invoices, forms, and reports typically yield the highest extraction accuracy. Scanned PDFs require OCR processing, which may reduce accuracy depending on scan quality and document condition.

How accurate is PDF data extraction compared to manual processing?

Modern PDF extraction tools achieve 95-98% accuracy for digital documents with clear formatting. OCR-based extraction from scanned documents typically achieves 85-95% accuracy depending on document quality. Although these rates fall short of error-free manual entry, automation delivers consistent results and avoids human errors such as typos and oversights.

Can Airbyte handle large volumes of PDF documents efficiently?

Yes, Airbyte processes over 2 petabytes of data daily across customer deployments and supports enterprise-scale document processing. The platform automatically scales with workload demands and provides monitoring capabilities to track processing performance and identify potential bottlenecks.

What security measures protect sensitive PDF content during extraction?

Airbyte provides end-to-end encryption for data in transit and at rest, role-based access control, and comprehensive audit logging. The platform maintains SOC 2 certification and GDPR compliance, and it implements security features that support HIPAA requirements, so sensitive document content remains protected throughout the extraction and integration process.

How do I handle PDF documents with complex layouts or multiple languages?

Airbyte's document processing engine handles complex layouts including multi-column text, nested tables, and mixed content types. For documents with multiple languages, the platform supports various character sets and can process documents containing multiple languages within the same file, though extraction accuracy may vary based on language complexity.
