Batch Processing vs Stream Processing: Key Differences

✨ AI Generated Summary

Data processing decisions involve choosing between batch processing, which handles large datasets at scheduled intervals for comprehensive analysis and resource optimization, and stream processing, which processes data in real time for immediate insights and rapid response. Key points include:

- Batch Processing: Suitable for high-volume, complex tasks like regulatory reporting, data warehousing, and machine learning training with higher latency but optimized resource use.

- Stream Processing: Ideal for real-time applications such as fraud detection, personalization, and operational monitoring, offering low latency but requiring continuous resources and complex fault tolerance.

- Hybrid Architectures: Combine both approaches (e.g., Lambda and Kappa architectures) to balance accuracy and responsiveness while managing operational complexity.

- Tools: Popular batch tools include Airbyte, AWS Batch, and Azure Batch; stream processing tools include Apache Kafka, Google Cloud Dataflow, and Amazon Kinesis.

Data processing decisions have become increasingly complex as organizations balance the need for comprehensive analysis with demands for real-time insights. The exponential growth of data generation means businesses can no longer rely solely on traditional approaches but must strategically choose between batch processing and stream processing, or implement sophisticated hybrid architectures that leverage both paradigms effectively.

In this guide, you will explore the fundamental differences between batch processing and stream processing, understand when each approach delivers the most value, and see how modern platforms address the challenges organizations face when implementing these data processing strategies at scale.

What Is Batch Processing?

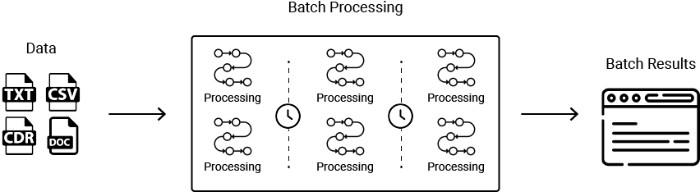

Batch processing allows you to collect data over specific periods and process it in bulk at scheduled intervals. This approach groups large datasets into batches and manages them during predetermined time windows, typically during off-peak hours when system resources are readily available and operational systems experience minimal load.

The batch processing methodology proves particularly valuable for scenarios requiring comprehensive data analysis, complex transformations, and high-volume data processing, where slight delays are acceptable in exchange for processing efficiency and resource optimization. Organizations frequently use batch processing for regulatory reporting, data warehousing operations, and analytical workloads that benefit from having access to complete datasets.

How Does Batch Processing Work?

- Data Collection: Gather large datasets from diverse sources such as databases, logs, sensors, or transactions and store them in a staging system.

- Grouping: Determine how and when to process the data by grouping related tasks or jobs into batches based on business logic or processing requirements.

- Scheduling: Schedule these batches to run at defined times, such as overnight or during low-traffic hours, to optimize resource utilization and minimize impact on operational systems.

- Processing: Depending on the system's capacity, batches are processed sequentially or in parallel using distributed computing frameworks that can handle large-scale data transformations.

- Results: Once processed, results are stored in databases or data warehouses and can be surfaced in dashboards, business intelligence tools, or downstream applications for analysis and decision-making.
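The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `STAGED_ORDERS` records, the fixed batch size, and the function names are all assumptions made for the example, and a real job would be triggered by a scheduler and would read from an actual staging system.

```python
from collections import defaultdict

# Hypothetical staged records, standing in for data collected over a period.
STAGED_ORDERS = [
    {"order_id": 1, "region": "eu", "amount": 20.0},
    {"order_id": 2, "region": "us", "amount": 35.5},
    {"order_id": 3, "region": "eu", "amount": 10.0},
    {"order_id": 4, "region": "us", "amount": 4.5},
]


def group_into_batches(records, batch_size):
    """Grouping: split the staged records into fixed-size batches."""
    return [records[i:i + batch_size] for i in range(0, len(records), batch_size)]


def process_batch(batch):
    """Processing: aggregate revenue per region for a single batch."""
    totals = defaultdict(float)
    for record in batch:
        totals[record["region"]] += record["amount"]
    return dict(totals)


def run_batch_job(records, batch_size=2):
    """Run every batch sequentially and merge the partial results."""
    results = defaultdict(float)
    for batch in group_into_batches(records, batch_size):
        for region, total in process_batch(batch).items():
            results[region] += total
    return dict(results)  # Results: ready to load into a warehouse or dashboard


if __name__ == "__main__":
    # A scheduler (cron, an orchestrator, etc.) would normally trigger this call.
    print(run_batch_job(STAGED_ORDERS))
```

Because each batch produces an independent partial result, the same structure could process batches in parallel and merge afterwards.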

What Are the Advantages of Batch Processing?

Batch processing improves data quality by allowing comprehensive validation processes, including duplicate removal, missing-value checks, and data consistency verification in the staging area before execution. This thorough validation ensures that downstream systems receive clean, reliable data that meets organizational quality standards.
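As a rough sketch of what staging-area validation might look like, the function below rejects rows with missing required values and rows whose key has already been seen. The field names and the `key_field="id"` default are illustrative assumptions, not part of any particular tool.

```python
def validate_staged_records(records, required_fields, key_field="id"):
    """Split staged records into clean rows and rejected rows.

    A row is rejected when a checked field is missing or None, or when its
    key has already been seen earlier in the batch (a duplicate).
    """
    # dict.fromkeys removes repeats while keeping order.
    fields_to_check = list(dict.fromkeys([key_field, *required_fields]))
    seen_keys = set()
    clean, rejected = [], []
    for record in records:
        missing = [f for f in fields_to_check if record.get(f) is None]
        if missing:
            rejected.append((record, f"missing: {', '.join(missing)}"))
        elif record[key_field] in seen_keys:
            rejected.append((record, f"duplicate {key_field}"))
        else:
            seen_keys.add(record[key_field])
            clean.append(record)
    return clean, rejected
```

Only the `clean` list would move on to the batch job; the `rejected` list, with its reasons, can be logged or routed to a quarantine table for review.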

Jobs can run in the background or during off-peak hours, preventing disruptions to real-time business activities while optimizing resource costs. This offline processing capability enables organizations to leverage available computational resources efficiently while maintaining operational system performance during critical business hours.

Resource optimization through bulk processing enables higher throughput and more efficient use of computational resources compared to processing individual records. The batch approach allows systems to optimize memory usage, disk I/O operations, and network utilization by processing large volumes of data simultaneously.

What Are the Limitations of Batch Processing?

Batch processing is inefficient for small changes: even when only a few records need modification, the entire batch still runs, which can waste computational resources and raise processing costs.

Once a batch job starts, changing or stopping it midstream proves difficult, leading to a lack of flexibility for quick updates or error handling. This inflexibility can create challenges when business requirements change or when processing errors need immediate correction.

The inherent latency in batch processing means that insights and results are only available after batch completion, which may not meet the requirements of time-sensitive business applications or real-time decision-making scenarios.

What Is Stream Processing?

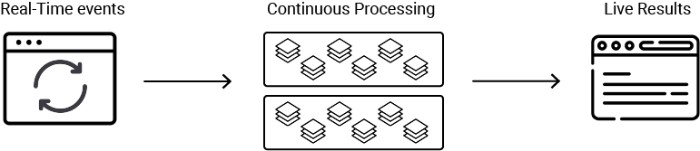

Stream processing refers to processing data in real time as it is created, enabling immediate insights and rapid response to changing business conditions. This approach manages data continuously through event-driven architectures that process individual data points or small batches as they arrive in the system.

You can utilize stream processing for applications that require instant updates, such as real-time analytics, financial trading systems, fraud detection, and live recommendation engines. Stream processing works on continuous data flows and depends on low-latency systems to handle high-velocity data streams while maintaining consistent performance across distributed processing environments.

What counts as "real time" varies: end-to-end latency can range from milliseconds to minutes, depending on the application requirements and system architecture. Modern stream processing frameworks provide features such as windowing operations, state management, and exactly-once processing semantics that enable complex event processing scenarios.
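To make "windowing" concrete, here is a minimal sketch of a tumbling (fixed, non-overlapping) window that counts events by timestamp. It assumes events arrive as `(timestamp_seconds, payload)` tuples and ignores late or out-of-order data, which real frameworks handle with mechanisms such as watermarks.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping time window.

    `events` is an iterable of (timestamp_seconds, payload) tuples.
    Returns a dict mapping each window's start time to its event count.
    """
    counts = Counter()
    for timestamp, _payload in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(counts)
```

With a 10-second window, events at seconds 0, 3, and 9 fall into the window starting at 0, while an event at second 10 opens the next window.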

How Does Stream Processing Work?

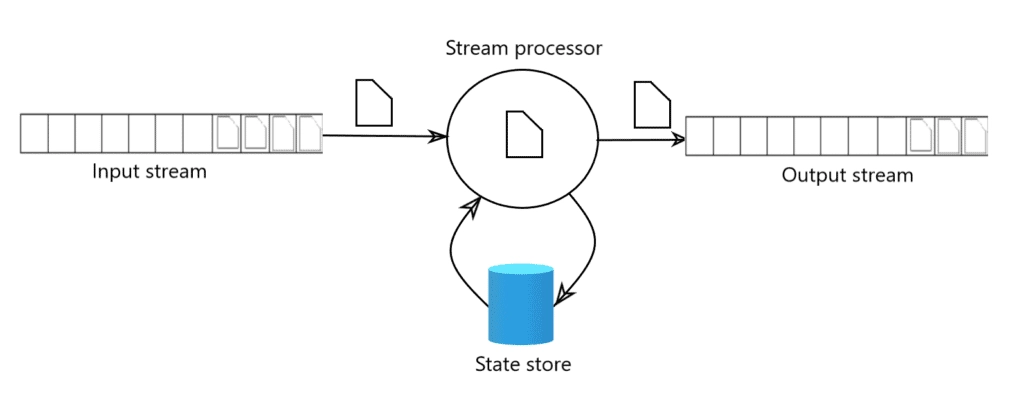

- Input Stream: Data is continuously produced from multiple sources, including sensors, application logs, social media feeds, and transaction systems. It flows into stream-processing engines, often through a message broker, instead of waiting in a staging area for a scheduled job.

- Stream Processing: As soon as data arrives, it is processed in real time using sophisticated algorithms that can perform aggregations, joins, and transformations while maintaining low latency and high throughput.

- Output Stream: Once processed, data is immediately sent to destinations such as databases, data warehouses, messaging systems, or analytical platforms, where it can trigger immediate actions or updates.
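The three stages above map naturally onto Python generators, which handle one event at a time instead of waiting for a full dataset. The sketch below is illustrative only: `sensor_readings`, the temperature threshold, and the `sink` callback are hypothetical stand-ins for a real source, rule, and destination.

```python
def sensor_readings():
    """Input stream: a hypothetical source that yields readings one at a time."""
    for reading in [
        {"sensor": "s1", "celsius": 21.5},
        {"sensor": "s2", "celsius": 85.0},
        {"sensor": "s1", "celsius": 22.0},
    ]:
        yield reading


def enrich(stream):
    """Stream processing: transform each event as soon as it arrives."""
    for event in stream:
        yield {**event, "fahrenheit": event["celsius"] * 9 / 5 + 32}


def only_alerts(stream, threshold_celsius=80.0):
    """Stream processing: keep only readings at or above the threshold."""
    for event in stream:
        if event["celsius"] >= threshold_celsius:
            yield event


def run(sink):
    """Output stream: push each processed event to a destination callback."""
    for event in only_alerts(enrich(sensor_readings())):
        sink(event)
```

Calling `run(print)` would emit the single over-threshold reading as soon as it passes through the pipeline, without buffering the other events.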

What Are the Advantages of Stream Processing?

Stateful processing maintains context such as user activity patterns and trend analysis through state stores, enabling more sophisticated real-time decisions that consider historical patterns and current events simultaneously.
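A small sketch shows how state changes what a stream processor can decide. Here an in-memory dict stands in for a state store and keeps a running count and total per user, so each new event can be compared with that user's history. The `factor=3.0` rule and the event shape are assumptions chosen for illustration.

```python
def flag_unusual(events, factor=3.0):
    """Return events whose amount exceeds `factor` times the user's prior average.

    `events` is an iterable of (user, amount) tuples processed in arrival order.
    """
    state = {}  # user -> (count, total); stands in for a durable state store
    flagged = []
    for user, amount in events:
        count, total = state.get(user, (0, 0.0))
        # Compare against history first, then fold the event into the state.
        if count > 0 and amount > factor * (total / count):
            flagged.append((user, amount))
        state[user] = (count + 1, total + amount)
    return flagged
```

A stateless processor could only apply a fixed threshold to every event; the per-user state is what lets the rule adapt to each user's normal behavior.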

Event-driven architecture ensures that events are processed as they occur, providing low-latency responses that enable immediate business reactions to changing conditions. This responsiveness proves critical for applications like fraud detection, recommendation systems, and operational monitoring.

Continuous processing enables immediate insights and actions based on current data, supporting use cases where delayed responses could result in missed opportunities or negative business impact. Real-time processing capabilities allow organizations to respond to market changes, customer behavior, and operational conditions as they happen.

What Are the Limitations of Stream Processing?

Adopting stream processing requires expertise in real-time distributed systems architecture, and without adequate knowledge, teams struggle with consistency management, scaling optimization, and performance tuning across complex distributed environments.

Continuous processing demands constant computational resources, leading to higher CPU and memory utilization compared to batch processing. The always-on nature of streaming systems can result in increased infrastructure costs, particularly during periods of low data volume.

Complexity in error handling and fault tolerance increases significantly in streaming environments where failures must be managed without interrupting continuous data flows. Organizations must implement sophisticated recovery mechanisms and duplicate detection strategies to maintain data integrity.
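One common duplicate detection strategy is to make the consumer idempotent by tracking which event IDs it has already applied. The sketch below assumes every event carries a unique `event_id` and keeps the set of seen IDs in memory; a real system would need to persist that set together with the results so both survive a restart.

```python
class IdempotentProcessor:
    """Applies each event at most once, even if the source redelivers it."""

    def __init__(self):
        self.processed_ids = set()  # would be durable storage in a real system
        self.balance = 0

    def handle(self, event):
        """Apply the event and return True, or return False for a duplicate."""
        if event["event_id"] in self.processed_ids:
            return False  # already applied; skip the redelivery
        self.balance += event["amount"]
        self.processed_ids.add(event["event_id"])
        return True
```

If a failure causes the source to replay event `e1`, the second delivery is ignored and the balance stays correct.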

What Are the Key Differences Between Batch Processing and Stream Processing?

How Do You Choose Between Batch Processing and Stream Processing?

When Should You Choose Batch Data Processing?

Batch processing works best when you need thorough data analysis, can handle slight delays, and want to optimize resources. It's ideal for:

Regulatory Reporting and Compliance

Financial institutions and healthcare organizations use batch processing for compliance reports that need complete dataset analysis and audit trails. Monthly statements, regulatory filings, and compliance monitoring fit naturally with scheduled batch processing.

Data Warehousing and Business Intelligence

Batch processing excels at loading data warehouses and running complex analytics on historical data. You can process complete datasets with sophisticated transformations during off-peak hours to save costs.

Machine Learning Model Training

Training ML models on large historical datasets requires significant computing power. Batch processing lets you schedule this intensive work during low-cost periods while accessing complete datasets.

System Backups and Maintenance

Routine backups, database maintenance, and system optimization run smoothly on batch schedules that minimize disruption to daily operations.

When Should You Choose Stream Data Processing?

Stream processing is essential when you need immediate responses and can't afford delays. Choose it for:

Fraud Detection and Security Monitoring

Banks and payment processors use stream processing to analyze transactions in milliseconds and stop fraud instantly. Security systems continuously monitor logs to detect threats as they happen.

Real-Time Personalization

E-commerce sites and streaming services analyze user behavior continuously to deliver personalized experiences immediately. This keeps users engaged and improves conversion rates.

Operational Monitoring and Alerting

Manufacturing systems and IoT devices need stream processing to detect equipment failures or performance issues instantly, preventing costly downtime and safety hazards.

Financial Trading and Market Analysis

High-frequency trading systems analyze market data and execute trades within microseconds to capture opportunities and manage risk in real time.

What Are the Best Stream Processing Tools Available?

Apache Kafka

Apache Kafka is a distributed event-streaming platform built on a producer/consumer model. Topics are split into partitions for parallelism, and partitions are replicated across brokers for fault tolerance. Kafka provides the foundation for many enterprise streaming architectures through its ability to handle millions of messages per second while keeping messages durable and preserving order within each partition.

The platform's distributed architecture enables horizontal scaling and provides built-in replication mechanisms that ensure data availability even during node failures. Kafka's ecosystem includes Kafka Streams for stream processing applications and Kafka Connect for integrating with external systems, creating a comprehensive streaming platform.
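The idea behind key-based partitioning can be simulated without a broker. This sketch is not Kafka's client API and does not use Kafka's actual hash function; it uses CRC32 as a stand-in to show why messages with the same key land in the same partition and therefore keep their relative order.

```python
import zlib


def choose_partition(key, num_partitions):
    """Map a key to a partition deterministically (CRC32 here, not Kafka's own hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def publish(messages, num_partitions):
    """Append each (key, value) message to the log of its partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in messages:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return partitions
```

Since every `"user-1"` message hashes to the same partition, a consumer reading that partition sees that user's events in the order they were published, even though ordering across partitions is not guaranteed.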

Google Cloud Dataflow

Google Cloud Dataflow is a fully managed service for running Apache Beam pipelines. Beam offers Java, Python, and Go SDKs, and pipelines written with it remain portable across multiple execution engines. The service automatically handles resource scaling, optimization, and operational management, while Beam's unified programming model covers both batch and streaming processing.

Dataflow's serverless architecture eliminates infrastructure management overhead while providing enterprise-grade security, monitoring, and integration capabilities with other Google Cloud services. The platform optimizes performance automatically and provides transparent pricing based on actual resource consumption.

Amazon Kinesis

Amazon Kinesis provides a suite of services for continuously ingesting and analyzing streaming data at scale, with native integration across AWS services including Lambda, S3, CloudWatch, and Redshift. The platform includes Kinesis Data Streams for real-time data ingestion, Kinesis Data Firehose (since renamed Amazon Data Firehose) for data delivery, and Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink) for real-time analysis.

Kinesis automatically scales to handle varying data volumes while providing multiple delivery and processing options that integrate seamlessly with existing AWS infrastructure. The service supports multiple data formats and provides built-in transformation capabilities for preparing data for downstream analysis and storage.

What Are the Most Effective Batch Processing Tools?

Airbyte

Airbyte provides comprehensive data movement capabilities with over 600 pre-built connectors supporting diverse source and destination systems. The platform supports custom connector development through its Connector Development Kit, multiple synchronization modes including incremental updates, and flexible scheduling options including scheduled, cron-based, and manual execution.

The platform's architecture supports both cloud-hosted and self-managed deployment options while providing enterprise-grade security, governance, and monitoring capabilities. Airbyte's ELT approach enables transformation within destination systems, optimizing performance and reducing processing overhead compared to traditional ETL methodologies.

AWS Batch

AWS Batch dynamically provisions optimal compute resources to run batch workloads of any scale, integrating seamlessly with AWS services including Lambda, CloudWatch, and EC2. The service automatically handles job scheduling, resource allocation, and queue management while providing cost optimization through spot instance utilization.

The platform supports containerized applications and provides comprehensive monitoring and logging capabilities for operational visibility. AWS Batch eliminates infrastructure management overhead while providing enterprise-grade security and compliance capabilities through integration with AWS identity and access management systems.

Azure Batch

Azure Batch automates the creation and management of compute pools for large-scale high-performance computing jobs without requiring cluster maintenance or infrastructure management. The service provides automatic scaling, load balancing, and fault tolerance while integrating with Azure Active Directory (now Microsoft Entra ID) for security and governance.

The platform supports diverse workload types, including rendering, simulation, and data processing applications, while providing cost optimization through low-priority virtual machines and automatic scaling policies. Azure Batch includes comprehensive monitoring and troubleshooting capabilities for operational management and performance optimization.

How Can You Combine Batch and Stream Processing Effectively?

Hybrid processing approaches enable organizations to leverage the strengths of both batch and stream processing while mitigating their respective limitations. These architectures require careful design to ensure consistency between processing paths while optimizing for both real-time responsiveness and comprehensive analytical capabilities.

- Lambda Architecture Implementation: Organizations can implement Lambda architectures that maintain separate batch and stream processing paths converging at a serving layer. The batch layer processes complete datasets for maximum accuracy, while the stream layer provides real-time insights, with the serving layer reconciling results from both processing approaches.

- Kappa Architecture Simplification: The Kappa architecture simplifies hybrid processing by focusing exclusively on stream processing while maintaining the ability to reprocess historical data when necessary. This approach treats all data as streaming data, using replay capabilities to handle scenarios traditionally requiring batch processing.

- Use Case Segregation: Organizations can implement different processing approaches for different data types and business requirements. Critical real-time applications utilize stream processing for immediate response, while comprehensive analytical workloads leverage batch processing for efficiency and accuracy.

- Staged Migration Strategies: Gradual migration from batch to streaming processing enables organizations to transition incrementally while maintaining operational continuity. This approach allows organizations to build streaming expertise and confidence while minimizing disruption to existing business processes.
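The Lambda pattern described in the list above can be reduced to a toy example. The batch layer computes a view over everything up to the last batch run, the speed layer covers events that arrived since, and the serving layer merges the two. The page-view events and function names here are hypothetical, and the `last_batch_index` cut-off is a simplification of what would be a timestamp or offset in practice.

```python
from collections import Counter


def count_page_views(events):
    """Shared logic: count occurrences of each page name."""
    return dict(Counter(events))


def serve(batch_view, speed_view):
    """Serving layer: add speed-layer increments on top of the batch view."""
    merged = dict(batch_view)
    for key, increment in speed_view.items():
        merged[key] = merged.get(key, 0) + increment
    return merged


def lambda_query(all_events, last_batch_index):
    """Answer a query by combining the batch view and the speed view."""
    batch_view = count_page_views(all_events[:last_batch_index])  # batch layer
    speed_view = count_page_views(all_events[last_batch_index:])  # speed layer
    return serve(batch_view, speed_view)
```

In a Kappa-style design, the same answer would come from replaying the full event log through a single code path, which in this toy example is simply `count_page_views(all_events)`.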

How Does Airbyte Simplify Both Batch and Stream Data Processing?

Airbyte addresses the complex requirements of modern data processing through a comprehensive platform that supports both batch processing optimization and near-real-time capabilities while maintaining operational simplicity and enterprise-grade governance.

- Streamline GenAI Workflows: Automated chunking, embedding, and indexing capabilities enable direct integration with vector databases such as Pinecone and Chroma, supporting artificial intelligence applications that require both batch processing for model training and real-time processing for inference and interaction.

- Advanced Schema Management: Configurable schema-change handling with automatic detection and validation ensures data consistency across evolving source systems while supporting both batch synchronization cycles and change data capture scenarios for near-real-time processing.

- PyAirbyte Integration: The PyAirbyte library enables data scientists and engineers to build custom processing pipelines in Python environments while leveraging Airbyte's extensive connector library. This integration supports extraction via Airbyte connectors with transformation using SQL, Pandas, or other Python libraries for flexible data processing workflows.

- Custom Transformations: dbt Cloud integration enables sophisticated post-sync transformations for batch processing scenarios that require complex analytical logic. It is not designed for streaming scenarios or for real-time data enrichment and validation.

- Comprehensive Data Orchestration: Native integrations with Prefect, Dagster, Apache Airflow, and other orchestration platforms enable coordination between batch and streaming processing workflows while maintaining operational consistency and monitoring capabilities across diverse processing requirements.

Conclusion

Batch processing excels in scenarios requiring comprehensive analysis, high-volume efficiency, and cost optimization, while stream processing delivers value for applications demanding immediate insights and rapid response capabilities. Modern data architectures increasingly move beyond binary choices toward unified processing frameworks that combine the advantages of both approaches while minimizing operational complexity.

The key to success lies in carefully evaluating business requirements, understanding the trade-offs between processing approaches, and selecting platforms that provide the flexibility to adapt as organizational needs evolve.

Frequently Asked Questions

What is the main difference between batch processing and stream processing?

The fundamental difference lies in timing and data handling: batch processing collects and processes large datasets at scheduled intervals, while stream processing handles data continuously as it arrives in real time. Batch processing optimizes for throughput and accuracy with higher latency, while stream processing prioritizes low latency and immediate insights.

When should I choose batch processing over stream processing?

Choose batch processing for scenarios requiring high-volume data processing, complex analytical computations, regulatory reporting, and situations where processing delays are acceptable. Batch processing proves most effective for data warehousing, machine learning model training, and comprehensive business intelligence applications where complete datasets enable more accurate analysis.

Can I use both batch and stream processing together?

Yes, hybrid architectures like Lambda can combine both batch and stream processing approaches effectively. Organizations often use stream processing for real-time applications like fraud detection while simultaneously employing batch processing for comprehensive analytics and reporting. Modern platforms increasingly support unified processing models that enable both approaches within single systems.

What are the cost implications of choosing stream processing over batch processing?

Stream processing typically requires higher operational costs due to continuous resource requirements, specialized expertise, and complex infrastructure needs. However, the business value of real-time insights may justify these additional costs for applications where immediate response provides competitive advantages or prevents significant losses.

How does Airbyte support both batch and stream processing requirements?

Airbyte combines efficient batch processing with Change Data Capture capabilities for near-real-time synchronization, providing a practical hybrid approach that balances performance, reliability, and operational simplicity. The platform supports over 600 connectors, flexible scheduling options, and enterprise-grade governance while enabling organizations to implement both processing paradigms without maintaining separate systems.