What's the Best ETL Approach for a Serverless Data Stack?

✨ AI Generated Summary

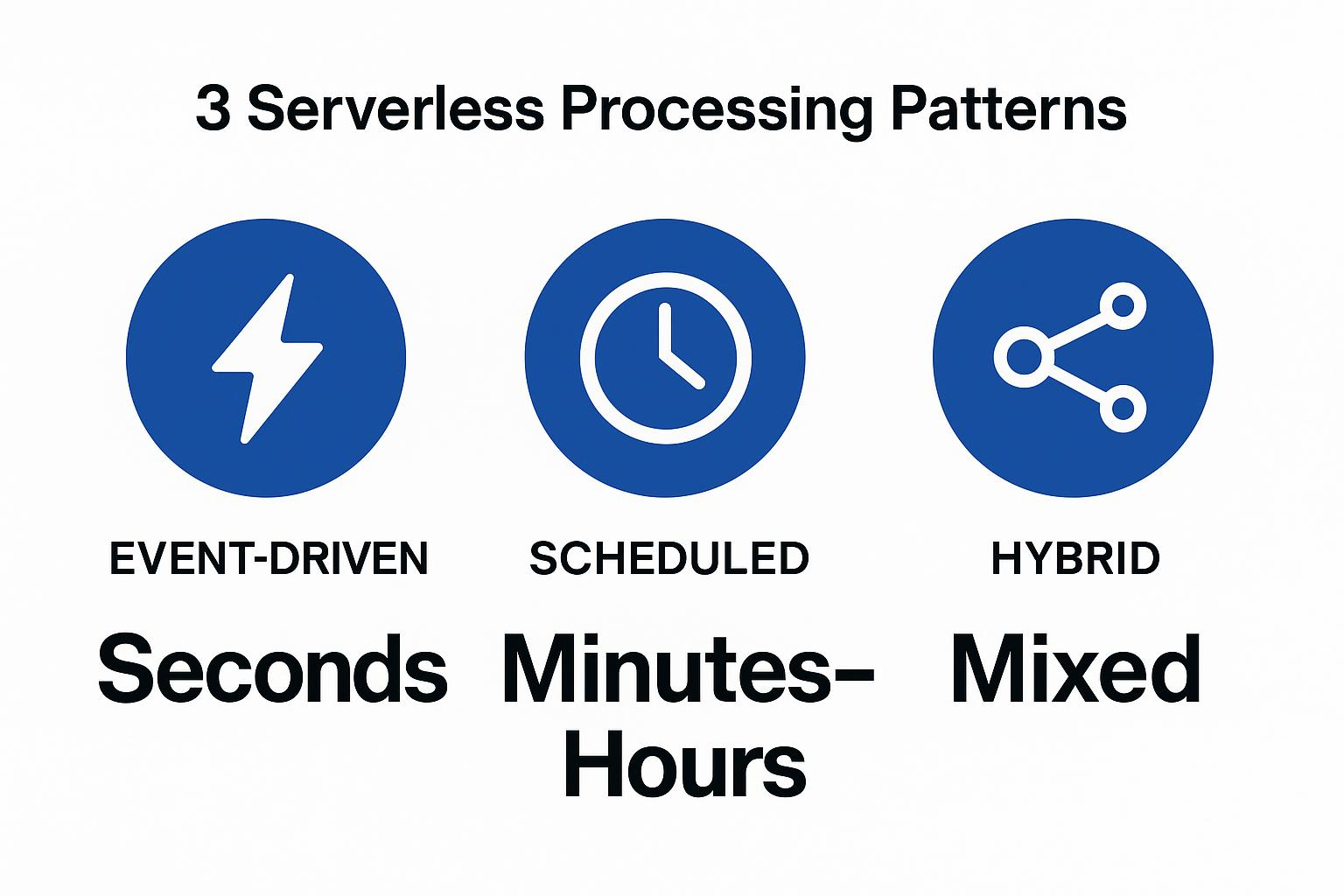

The article explains why traditional ETL struggles in serverless environments due to statelessness, resource limits, and orchestration complexity, recommending ELT as a better fit. It outlines three serverless data processing patterns—event-driven ELT, scheduled ELT, and hybrid streaming—each suited for different latency and complexity needs.

- Best serverless ELT architecture: managed connectors for extraction, object storage for raw data, serverless or warehouse compute for transformation, and event/scheduled orchestration.

- Common challenges include cold starts, state management, cost control, and error handling, with practical mitigation strategies.

- Traditional ETL remains preferable for complex stateful workflows, regulatory constraints, or very large datasets.

- Recommended first step: migrate a simple pipeline using managed connectors and event-driven triggers, monitor costs, and validate outputs before full cutover.

Picture a data team that runs nightly ETL jobs on EC2 instances that sit idle 22 hours per day, costing $2,000/month for 2 hours of work. Management wants to "go serverless," but it is unclear how to redesign the pipelines without breaking dashboards or blowing deadlines.

ELT (Extract, Load, Transform) generally works better than traditional ETL in serverless environments. Use event-driven extraction with managed connectors, store raw data in object storage, then transform with serverless compute that scales to zero when not in use.

Why Does Traditional ETL Struggle in Serverless Environments?

Traditional ETL pipelines and ETL tools were designed for fixed, always-on servers where you could control memory, disk, and runtime length. The transform step happens on dedicated hardware before data reaches the warehouse, creating tight coupling between compute capacity and pipeline logic.

State management creates critical friction points. Legacy ETL jobs depend on local temp files to track which records have been processed, or use in-memory caches to store lookup tables across batches. When a traditional ETL job processes 1 million customer records, it might cache product details in memory to avoid repeated database calls. Serverless functions are stateless and short-lived by design, losing all temporary data when they terminate. You must externalize this state to S3 or DynamoDB, adding latency and complexity.
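A minimal sketch of externalizing state, assuming a hypothetical `StateStore` interface and a hypothetical integer `id` field on each record. The in-memory `DictStore` stands in for S3 or DynamoDB so the example runs anywhere; a real store would add the network latency described above.

```python
from typing import Dict, Iterable, List, Optional, Protocol


class StateStore(Protocol):
    """Hypothetical interface; a real one would wrap S3 or DynamoDB."""

    def get(self, key: str) -> Optional[str]: ...
    def put(self, key: str, value: str) -> None: ...


class DictStore:
    """In-memory stand-in so the sketch runs without cloud credentials."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def put(self, key: str, value: str) -> None:
        self._data[key] = value


def process_batch(store: StateStore, pipeline_id: str,
                  records: Iterable[dict]) -> List[dict]:
    """Return only the records newer than the stored checkpoint."""
    checkpoint_key = f"{pipeline_id}/last_id"
    last_id = int(store.get(checkpoint_key) or 0)
    fresh = [r for r in records if r["id"] > last_id]
    if fresh:
        # Persist progress outside the function so the next invocation
        # can resume even though this one's memory is discarded.
        store.put(checkpoint_key, str(max(r["id"] for r in fresh)))
    return fresh
```

Calling `process_batch` twice with overlapping input returns each record only once, because the checkpoint survives between invocations in the store rather than in function memory.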

Resource allocation issues compound the problem when single transformations need more CPU or memory than per-function limits allow. AWS Lambda caps memory at 10GB and runtime at 15 minutes. A complex data quality check that joins multiple large datasets might hit these limits, forcing you to split logic across multiple functions or fall back to traditional servers.
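One way to split logic across functions is to size each unit of work so it fits inside the runtime cap. The sketch below assumes you have measured a rough throughput figure for your transform; `plan_chunks`, the `records_per_second` parameter, and the 80% safety margin are illustrative choices, not values from any platform documentation.

```python
import math
from typing import List, Tuple

LAMBDA_MAX_SECONDS = 15 * 60  # runtime cap cited above


def plan_chunks(total_records: int, records_per_second: float,
                safety_margin: float = 0.8) -> List[Tuple[int, int]]:
    """Return (start, end) record ranges, end exclusive, sized so each
    range should finish within a fraction of the runtime cap."""
    if total_records <= 0:
        return []
    budget_seconds = LAMBDA_MAX_SECONDS * safety_margin
    chunk_size = max(1, math.floor(records_per_second * budget_seconds))
    return [(start, min(start + chunk_size, total_records))
            for start in range(0, total_records, chunk_size)]
```

With 1 million records and an assumed 500 records per second, this yields three ranges, each of which one invocation could process independently.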

Orchestration amplifies the complexity. Choreographing dozens of short functions to mimic multi-step transformations requires durable workflows, retries, and idempotency controls. Cold start penalties make it worse: code packages with heavyweight libraries can add 2-5 seconds of latency per function invocation, turning a 30-second ETL job into a 2-minute ordeal when functions launch cold.
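The jump from 30 seconds to 2 minutes follows from simple arithmetic once functions are chained. The sketch below assumes a hypothetical pipeline of 30 sequential invocations that all start cold at 3 seconds each; both numbers are illustrative, chosen from within the 2-5 second range above.

```python
def cold_start_overhead(chained_invocations: int,
                        cold_start_seconds: float,
                        cold_fraction: float = 1.0) -> float:
    """Seconds added when functions run one after another and a given
    fraction of them start cold."""
    return chained_invocations * cold_start_seconds * cold_fraction


# Hypothetical pipeline: 30 s of real work plus 30 cold invocations.
job_seconds = 30 + cold_start_overhead(30, 3.0)
```

If only half of the invocations start cold, the same pipeline adds 45 seconds instead of 90, which is why keeping functions warm matters most for long chains.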

What Are Your Three Serverless Data Processing Patterns?

Event-driven ELT

Event-driven ELT processes data the moment it arrives. When a file lands in S3 or a message hits a queue, serverless functions immediately extract, load, and transform the data. This pattern works well for fraud detection systems that need to score transactions within seconds, or IoT sensor data that triggers real-time alerts. Complexity stays high because you must handle out-of-order events, approximate exactly-once processing through idempotent handlers and deduplication (most event sources deliver at least once), and coordinate multiple functions without central orchestration.
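A minimal sketch of a duplicate-tolerant handler for S3 notifications. The event shape (`Records`, `s3.bucket.name`, `s3.object.key`, `s3.object.sequencer`) follows the S3 notification format; the `seen` set is an in-memory stand-in for a durable deduplication table, and the function names are hypothetical.

```python
from typing import Dict, List, Set
from urllib.parse import unquote_plus


def object_ids(event: Dict) -> List[str]:
    """Build one stable ID per S3 record from bucket, key, and sequencer."""
    ids = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        key = unquote_plus(s3["object"]["key"])  # keys arrive URL-encoded
        sequencer = s3["object"].get("sequencer", "")
        ids.append(f'{s3["bucket"]["name"]}/{key}#{sequencer}')
    return ids


def handle(event: Dict, seen: Set[str]) -> List[str]:
    """Process each object once, even if the event is delivered twice."""
    processed = []
    for object_id in object_ids(event):
        if object_id in seen:
            continue  # duplicate delivery: skip instead of reloading
        seen.add(object_id)
        processed.append(object_id)  # real code would load and transform here
    return processed
```

In a real deployment the membership check and the insert would need to be a single conditional write against the durable store, and the ID should only be marked as done after the load succeeds.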

Scheduled ELT

Scheduled ELT modernizes traditional batch processing by running serverless functions on fixed schedules. Every night at 2 AM, functions extract data from APIs, load it into object storage, then transform it using warehouse compute. This approach suits financial reporting that runs monthly, or marketing analytics that refresh daily dashboards. Complexity remains medium because execution is predictable, but you still need error handling and monitoring across multiple functions.
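Scheduled runs are easier to retry when each run writes to a deterministic location. The sketch below assumes a Hive-style key layout; the `raw/` prefix, the `pipeline_id=` and `date=` segments, and the `raw_key` helper are illustrative conventions rather than a required format.

```python
from datetime import date


def raw_key(pipeline_id: str, run_date: date, part: int = 0) -> str:
    """Deterministic object key: rerunning the same night overwrites
    the same object instead of creating duplicates."""
    return (f"raw/pipeline_id={pipeline_id}/"
            f"date={run_date.isoformat()}/part-{part:05d}.parquet")
```

Because the key depends only on the pipeline and the run date, a failed 2 AM run can be repeated at 3 AM without leaving a second copy of the data behind.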

Hybrid streaming

Hybrid streaming combines real-time ingestion with batch processing. Streaming functions continuously load raw data into object storage, while scheduled functions aggregate and enrich this data hourly or daily. E-commerce companies use this pattern to capture clickstream events in real-time while building customer segments through nightly batch jobs. Complexity peaks because you manage two different execution models and must ensure consistency between streaming and batch results.
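The batch half of this pattern can be sketched as a rollup over the raw events that the streaming half has already landed. The example assumes each event carries a hypothetical `event_time` field in epoch seconds; bucketing by that field rather than by arrival order is one way to keep batch results consistent when events show up late.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, Iterable


def hourly_counts(events: Iterable[dict]) -> Dict[str, int]:
    """Batch step: bucket streamed events by event time, not arrival
    order, so out-of-order events still land in the right hour."""
    counts: Counter = Counter()
    for event in events:
        ts = datetime.fromtimestamp(event["event_time"], tz=timezone.utc)
        counts[ts.strftime("%Y-%m-%dT%H:00Z")] += 1
    return dict(counts)
```

Rerunning the rollup over the same raw objects gives the same counts, which makes it straightforward to compare against whatever the streaming path reported for the same hour.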

How Do You Choose Between Event-Driven and Scheduled Processing?

Event-driven when:

- Unpredictable data arrival: API webhooks, user events

- Sub-minute latency required: Real-time dashboards

- Small, frequent volumes: <100MB per trigger

Scheduled when:

- Predictable patterns: Nightly database exports

- Large batch volumes: >1GB per run

- Cost optimization priority: Runs can shift to off-peak windows where cheaper rates apply
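The rules of thumb above can be written down as a small decision helper. This is a sketch only: the thresholds come from the lists above, but the order in which the rules are checked and the `"either"` fallback are assumptions, and `choose_trigger` is a hypothetical name.

```python
def choose_trigger(arrival_predictable: bool, max_latency_seconds: float,
                   megabytes_per_run: float) -> str:
    """Apply the rules of thumb listed above; returns a pattern name."""
    if max_latency_seconds < 60:
        return "event-driven"   # sub-minute latency needs immediate triggers
    if megabytes_per_run > 1024:
        return "scheduled"      # large batches (>1GB) favor scheduled runs
    if not arrival_predictable and megabytes_per_run < 100:
        return "event-driven"   # small, unpredictable payloads
    if arrival_predictable:
        return "scheduled"
    return "either"             # the guidance above does not decide this case
```

A webhook feed with a 5-second latency target comes back as `"event-driven"`, while a predictable 5GB nightly export comes back as `"scheduled"`.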

What's the Best Architecture for Serverless ELT?

- Extract: Managed connectors handle API polling and database CDC without requiring dedicated servers. These services automatically scale up during peak loads and scale down to zero during quiet periods. They handle authentication, rate limiting, and incremental sync logic so you don't need to build custom extraction code.

- Load: Raw data lands in object storage (S3, GCS, Azure Blob) in an efficient columnar format such as Parquet, optionally managed through a table format like Delta Lake. Object storage provides virtually unlimited scale at low cost, while columnar formats enable fast query performance. This approach preserves all original data for future analytics while supporting schema evolution.

- Transform: Serverless compute services (AWS Lambda, Google Cloud Functions, Azure Functions) or cloud warehouse engines (Snowflake, BigQuery, Redshift) process data on-demand. Warehouse-based transforms leverage elastic compute that scales automatically, while serverless functions handle lightweight preprocessing or event-driven logic.

- Orchestrate: Event triggers (S3 notifications, SQS messages) or lightweight schedulers (Amazon EventBridge, formerly CloudWatch Events, or Cloud Scheduler) coordinate pipeline execution. Event-driven orchestration responds immediately to new data, while scheduled orchestration provides predictable execution windows for batch processing.

Example: Airbyte extracts from Salesforce to S3, an S3 event triggers a serverless transformation, and the results land in the data warehouse.

How Do You Handle Common Serverless ETL Challenges?

- Cold starts: Use provisioned concurrency for time-sensitive transforms that need sub-second response times, or accept 2-3 second delays for non-critical workloads. Minimize package size by removing unused dependencies and lazy-loading heavy libraries only when needed. Monitor cold start frequency through CloudWatch metrics and adjust concurrency settings based on usage patterns.

- State management: Store intermediate results in object storage with partitioned keys (date, pipeline_id) for fast retrieval, and implement idempotent processing using unique job identifiers. Use database transactions for critical state updates and implement checkpointing to enable pipeline restarts from specific points. Consider AWS Step Functions or Azure Logic Apps for complex workflow state management.

- Cost control: Set concurrency limits on functions to prevent runaway costs during traffic spikes, use reserved capacity for predictable workloads, and monitor per-pipeline costs through detailed billing tags. Implement circuit breakers to stop expensive operations when error rates spike. Schedule non-urgent workloads during off-peak hours when your provider or warehouse offers lower rates for them.

- Error handling: Implement dead letter queues to capture failed messages for later analysis and reprocessing. Use exponential backoff with jitter for API rate limits and transient failures. Set up comprehensive alerting on function failures, duration spikes, and cost anomalies. Implement structured logging with correlation IDs to track requests across multiple functions and services.
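The error-handling advice above can be sketched as exponential backoff with full jitter, plus a dead letter queue. This is an illustrative version, not a specific library's API: `backoff_delays` and `call_with_retry` are hypothetical names, the base and cap values are assumptions, and a plain list stands in for a real queue such as SQS.

```python
import random
import time
from typing import Callable, List, Optional


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 30.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Full-jitter delays: uniform in [0, min(cap, base * 2**attempt)]."""
    rng = rng or random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** n)) for n in range(attempts)]


def call_with_retry(task: Callable[[], object], message: dict,
                    dead_letters: List[dict], attempts: int = 4,
                    sleep: Callable[[float], None] = time.sleep) -> object:
    """Retry `task`; after the final failure, park `message` in the
    dead letter list and return None."""
    delays = backoff_delays(attempts - 1)  # no wait after the last try
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return task()
        except Exception as error:  # narrow to transient errors in real code
            last_error = error
            if attempt < len(delays):
                sleep(delays[attempt])
    dead_letters.append({"message": message, "error": str(last_error)})
    return None
```

The jitter spreads retries from many concurrent functions across time so they do not all hit a rate-limited API at the same instant. Passing `sleep` as a parameter keeps the function easy to test without real waiting.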

When Should You Stick with Traditional ETL?

Traditional ETL remains the right choice for specific scenarios. Complex multi-step transformations requiring shared state work better on stateful systems. Microsoft shops entrenched in SSIS often keep workloads on-premises because packages rely on persistent temp tables and Windows authentication.

Regulatory requirements for on-premises processing also favor traditional approaches. Industries handling regulated PII often need to scrub or mask data before it leaves the firewall. For very large datasets (TB+), dedicated clusters are often more cost-effective than pay-per-invoke functions, so traditional ETL is worth considering there as well.

What Should You Do Next?

Start with one simple pipeline by picking a low-risk source like a SaaS API and routing it straight into your warehouse. Use managed extraction tools to reduce complexity while you focus on transformation logic.

Implement event-driven triggers for time-sensitive data by configuring storage or queue events to fire serverless transforms the moment new files land. Monitor costs and adjust concurrency settings by tracking execution time, memory, and frequency.

Ready to simplify your data extraction? Airbyte Cloud handles the connector complexity while you focus on serverless transformations. Start your free trial today.

Frequently Asked Questions

Is ELT always better than ETL for serverless?

Not always. ELT generally fits serverless environments better because transformations happen inside scalable data warehouses or serverless compute, but complex stateful transformations may still require ETL on dedicated infrastructure.

How do I migrate an existing ETL pipeline to serverless without breaking dashboards?

Start with one low-risk pipeline and replicate outputs exactly as they appear today. Validate downstream dashboards before cutting over. Using a dual-run approach (old and new pipelines in parallel) helps ensure consistency before deprecating the legacy system.
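A dual run is only useful if the two outputs are actually compared. The sketch below assumes both pipelines produce rows that share a unique key column; `diff_outputs` is a hypothetical helper, and rows are plain dictionaries for simplicity.

```python
from typing import Dict, Iterable, List


def diff_outputs(legacy: Iterable[dict], candidate: Iterable[dict],
                 key: str) -> Dict[str, List]:
    """Compare two pipeline outputs row by row, matched on `key`."""
    old = {row[key]: row for row in legacy}
    new = {row[key]: row for row in candidate}
    return {
        "missing_in_new": sorted(old.keys() - new.keys()),
        "extra_in_new": sorted(new.keys() - old.keys()),
        "changed": sorted(k for k in old.keys() & new.keys()
                          if old[k] != new[k]),
    }
```

An empty result in all three lists for several consecutive runs is a reasonable signal that dashboards built on the legacy output will not change after cutover.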

What’s the biggest hidden cost of serverless data pipelines?

Cold starts and uncontrolled concurrency. Without limits, spikes in event volume can trigger thousands of simultaneous function runs, leading to unexpected charges. Always set concurrency caps and monitor per-pipeline costs with billing tags.
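A rough cost model makes the effect of a spike visible before the bill arrives. The sketch below assumes a pay-per-use model billed per GB-second plus a per-request fee; the default prices are placeholders to replace with your provider's current rates, and `monthly_function_cost` is a hypothetical helper.

```python
def monthly_function_cost(invocations: int, avg_seconds: float,
                          memory_gb: float,
                          price_per_gb_second: float = 0.0000166667,
                          price_per_million_requests: float = 0.20) -> float:
    """Estimate monthly spend; default prices are assumptions to replace."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return round(compute + requests, 2)
```

At these assumed prices, 1 million one-second invocations at 1GB cost under $20, while a spike to 50 million three-second invocations at 2GB lands at roughly $5,000, which is the kind of jump a concurrency cap is meant to prevent.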

When should I use Step Functions or workflow engines?

Use them when a transformation requires multiple steps, retries, or complex branching logic. For simple one-step transformations, direct triggers (like S3 events) are enough. As workflows grow in complexity, managed orchestration prevents failure cascades.

What’s the simplest first step for going serverless?

Pick a SaaS connector (like Salesforce or HubSpot), send the raw data to S3 or GCS, and apply one lightweight transformation with a serverless function. Prove the pattern works before tackling larger or more complex pipelines.
