What is Cloud Data Ingestion: A Comprehensive Guide

✨ AI Generated Summary

Cloud data ingestion enables efficient, scalable, and cost-effective transfer of diverse data types from multiple sources to cloud storage, overcoming limitations of traditional on-premise systems. Key benefits include:

- Elastic scalability and real-time processing for handling large, fluctuating data volumes.

- Automation with AI/ML for error handling, anomaly detection, and schema management.

- Enhanced security, compliance, and centralized governance across distributed data environments.

- Cost efficiency through pay-as-you-go models and reduced infrastructure maintenance.

Platforms like Airbyte offer open-source, enterprise-grade connectors and integrations that simplify cloud data ingestion while supporting modern data architectures and workflows.

Data ingestion is an integral part of modern enterprise workflows, as it helps you collect and unify data from various sources to enhance business operations. However, due to growing data volumes, on-premise data-storage solutions may be highly maintenance-intensive and less scalable, leading to latency issues and increased expenditures. To overcome these challenges, you can use a fast and more reliable cloud data ingestion approach.

Let's understand what cloud data ingestion is, along with its key features and some prominent cloud-hosted data-ingestion tools. This will aid you in ensuring uninterrupted data movement in your organizational workflows.

What Is Cloud Data Ingestion and How Does It Differ from Standard Data Ingestion?

Cloud data ingestion is the process of extracting raw data from various sources and transferring it to a cloud-based storage system or data warehouse. Unlike traditional on-premise data ingestion, which relies on local databases, data warehouses, or storage systems, cloud-based ingestion eliminates the need for physical infrastructure and provides unprecedented flexibility in handling diverse data types and sources.

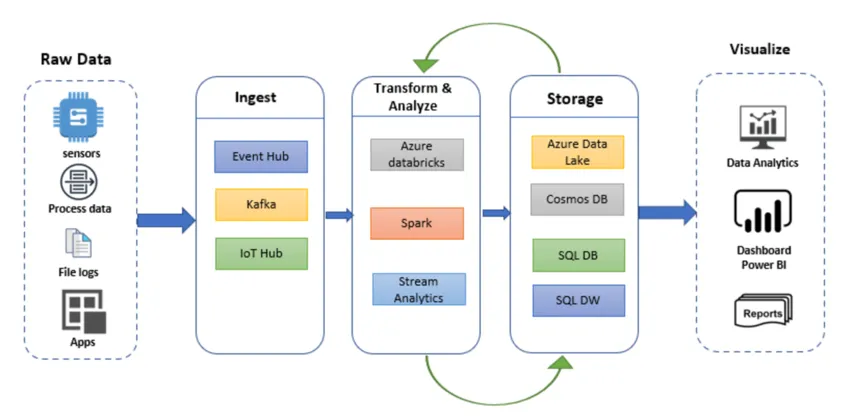

The fundamental difference between cloud and standard data ingestion extends beyond simple location. Modern cloud data-ingestion platforms support both batch and streaming methodologies, enabling organizations to process data in real time or at scheduled intervals, depending on specific business requirements.
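To make the batch versus streaming distinction concrete, here is a minimal sketch in Python. The function names `ingest_batch` and `ingest_stream` and the `load` callback are hypothetical and do not come from any particular platform; they only illustrate when the load step runs in each mode.

```python
from typing import Callable, Iterable, List

Record = dict


def ingest_batch(records: Iterable[Record],
                 load: Callable[[List[Record]], None]) -> int:
    """Batch mode: collect everything that is available, then load it once."""
    batch = list(records)
    if batch:
        load(batch)
    return len(batch)


def ingest_stream(records: Iterable[Record],
                  load: Callable[[List[Record]], None]) -> int:
    """Streaming mode: load each record as soon as it arrives."""
    count = 0
    for record in records:
        load([record])
        count += 1
    return count
```

In practice, batch mode would be triggered by a scheduler and streaming mode would read from a message queue or change feed, but the trade-off is the same: fewer, larger loads versus lower latency per record.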

Cloud platforms offer elastic scalability that automatically adjusts to data-volume fluctuations, ensuring consistent performance during peak periods without requiring manual intervention or infrastructure upgrades. This capability becomes essential when organizations experience sudden spikes in data volume or need to handle seasonal business fluctuations.

Cloud-native architectures leverage advanced technologies such as serverless computing, containerization, and distributed processing frameworks to handle complex data-transformation and validation processes. These platforms often incorporate artificial intelligence and machine-learning capabilities for automated anomaly detection, intelligent data classification, and predictive optimization. These features are typically cost-prohibitive in traditional on-premise deployments.

On-premise setups require investment in software licensing and hardware, which can be expensive and demand ongoing maintenance. They also have limited scalability due to resource constraints and typically require specialized expertise to maintain and optimize performance.

Conversely, cloud ingestion provides on-demand scalability, cost efficiency, and simplified maintenance, making it a preferred choice for data-driven organizations seeking to modernize their data infrastructure while reducing operational overhead.

What Are the Key Features That Make Cloud Data Ingestion Essential?

Adopting cloud data ingestion can significantly improve the productivity of the data-collection process through several distinctive capabilities that address modern enterprise requirements.

Better Data Accessibility

Cloud ingestion enables you to access data from anywhere, anytime, supporting distributed teams and global operations. Once ingested, the data is readily available for business intelligence, analytics, and decision-making across multiple platforms and applications.

Modern cloud platforms provide sophisticated access controls and authentication mechanisms that ensure data security while maintaining accessibility for authorized users across different organizational roles and responsibilities. This accessibility becomes crucial for organizations with remote teams or multiple office locations.

The cloud infrastructure also enables seamless integration with various business applications and analytics tools. You can connect your ingested data to visualization platforms, machine learning models, and reporting systems without complex configuration or custom development.

Scalability

You can dynamically scale compute and storage resources as workloads change, whether you're handling small datasets or data at petabyte scale. Cloud platforms offer both horizontal and vertical scaling capabilities, automatically adjusting resources based on current demand patterns while optimizing costs through intelligent resource allocation.

This elasticity ensures consistent performance during peak business periods without requiring advance capacity planning or infrastructure investments. Organizations can handle sudden increases in data volume without experiencing system slowdowns or requiring emergency hardware procurement.

The scaling capabilities extend to processing power as well. Complex data transformations and computations can leverage additional resources automatically, ensuring that data processing times remain consistent regardless of workload complexity.

Automation

Pre-built connectors, event-driven triggers, and scheduling features allow you to automate extraction and loading processes. Advanced cloud platforms incorporate artificial-intelligence and machine-learning capabilities to optimize data collection, preparation, and transfer automatically.

These automation features include intelligent error handling, automatic retry mechanisms, and self-healing capabilities that minimize manual intervention while ensuring data-pipeline reliability and consistency. The automation reduces the need for constant monitoring and manual intervention in data workflows.

Modern cloud data ingestion platforms can automatically detect schema changes, handle data format variations, and adjust processing parameters based on data characteristics. This intelligent automation significantly reduces maintenance overhead while improving data quality.
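As an illustration of what schema-change detection involves, the sketch below compares an expected schema with the schema inferred from an incoming record. The helpers `infer_schema` and `diff_schema` are hypothetical names chosen for this example; real platforms use richer type systems and their own detection logic.

```python
from typing import Dict, List


def infer_schema(record: dict) -> Dict[str, str]:
    """Map each field name to the Python type name of its value."""
    return {field: type(value).__name__ for field, value in record.items()}


def diff_schema(expected: Dict[str, str],
                observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Report fields that were added, removed, or changed type."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "type_changed": sorted(f for f in shared if expected[f] != observed[f]),
    }
```

A pipeline could call `diff_schema` on a sample of each new batch and decide, per category, whether to propagate the change to the destination or pause and alert.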

Cost Efficiency

Because most cloud platforms use pay-as-you-go pricing models, you pay only for what you use and avoid maintaining additional infrastructure. Cloud data ingestion eliminates capital expenditures for hardware, software licenses, and physical data-center space while reducing operational costs through automated management and maintenance.

Advanced cost-optimization features include data-lifecycle management, automated resource scaling, and intelligent storage tiering that further reduce overall data-management expenses. Organizations can optimize costs by automatically moving less frequently accessed data to cheaper storage tiers.

The elimination of maintenance costs for physical infrastructure, along with reduced need for specialized on-site technical staff, creates significant operational savings. These cost savings can be redirected toward strategic initiatives that drive business value.

Advanced Security and Compliance

Modern cloud data-ingestion platforms provide enterprise-grade security features including end-to-end encryption, role-based access controls, and comprehensive audit logging. These platforms support compliance with various regulatory frameworks such as GDPR, HIPAA, and SOX while providing data-sovereignty options that allow organizations to control where their data is stored and processed.

Built-in security monitoring and threat-detection capabilities provide continuous protection against emerging cybersecurity risks. The cloud providers invest heavily in security infrastructure that would be cost-prohibitive for most organizations to implement independently. Yet, despite the strength of these enterprise measures, personal safeguards such as cyber extortion protection remain crucial to ensure that individuals and teams are not left vulnerable to targeted attacks.

Compliance features include automated reporting, data retention policies, and audit trails that simplify regulatory compliance while reducing the administrative burden on internal teams.

Why Is Cloud Data Ingestion Critical in Today's Business Environment?

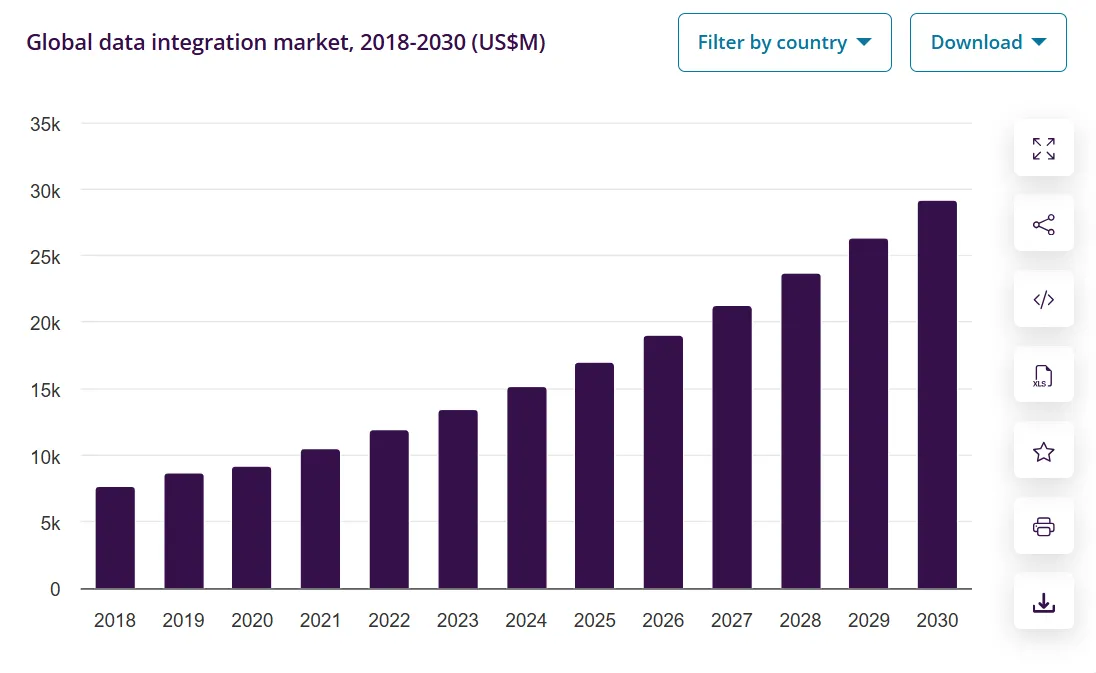

With growing data production, it has become imperative to manage and utilize massive amounts of data effectively. The exponential growth of data sources including Internet-of-Things devices, social-media platforms, and mobile applications creates unprecedented volumes of information that traditional on-premise systems cannot handle efficiently.

Organizations generate data from diverse sources, requiring sophisticated ingestion capabilities that can handle multiple data formats and protocols simultaneously. The variety of data types includes structured database records, unstructured social media content, semi-structured API responses, and streaming sensor data from IoT devices.

Cloud data-ingestion platforms enable organizations to implement data-mesh architectures and federated governance models that support decentralized data ownership while maintaining consistent quality and security standards. This approach allows different business units to manage their own data while ensuring organization-wide consistency and compliance.

Real-Time Decision Making Requirements

Modern business environments demand immediate access to current information for competitive advantage. Real-time data ingestion capabilities enable organizations to respond to market changes, customer behavior, and operational issues as they occur rather than waiting for batch processing cycles.

The speed of business decisions directly impacts competitive positioning. Organizations that can process and act on data faster than competitors gain significant advantages in customer service, inventory management, and strategic planning.

Cloud platforms provide the processing power and infrastructure needed to support real-time analytics without the complexity and cost of building streaming infrastructure internally.

Digital Transformation Initiatives

Organizations undergoing digital transformation require flexible data infrastructure that can adapt to changing business models and technology requirements. Cloud data ingestion provides the agility needed to support new applications, services, and business processes without infrastructure constraints.

The ability to quickly integrate new data sources becomes critical as organizations adopt new technologies, enter new markets, or acquire other companies. Cloud ingestion platforms can rapidly onboard new data sources without requiring significant infrastructure changes.

Digital transformation often involves consolidating data from multiple legacy systems into modern cloud-based platforms. Cloud data ingestion simplifies this migration process while maintaining business continuity.

What Challenges Does Cloud Data Ingestion Address?

Cloud data ingestion solutions address several critical challenges that organizations face when managing data in modern business environments.

Data Silos and Integration Complexity

Traditional data management approaches often create isolated data silos across different departments and systems. These silos prevent comprehensive analysis and create inconsistencies in business reporting and decision-making.

Cloud data ingestion platforms break down these silos by providing unified access to data from multiple sources. The centralized approach ensures that all stakeholders work from a single version of the truth while maintaining appropriate access controls.

The complexity of integrating disparate systems decreases significantly when using cloud-based ingestion tools. Pre-built connectors and standardized APIs eliminate much of the custom development traditionally required for data integration projects.

Scalability Limitations of Legacy Systems

On-premise data infrastructure often cannot scale effectively with business growth. Hardware limitations, software licensing constraints, and maintenance complexity create bottlenecks that restrict data processing capabilities.

Legacy systems typically require significant advance planning and capital investment to increase capacity. This planning cycle cannot respond quickly to sudden changes in data volume or business requirements.

Cloud data ingestion eliminates these scalability constraints by providing virtually unlimited processing and storage capacity that scales automatically based on demand.

High Infrastructure and Maintenance Costs

Maintaining on-premise data infrastructure requires significant ongoing investment in hardware, software licenses, and specialized technical staff. These costs often grow faster than the business value derived from the data infrastructure.

The complexity of maintaining multiple data integration tools and platforms creates operational overhead that diverts resources from strategic initiatives. Organizations spend more time managing infrastructure than extracting value from their data.

Cloud-based solutions reduce infrastructure costs through shared resources and economies of scale while eliminating the need for specialized on-site maintenance expertise.

Data Quality and Governance Issues

Ensuring consistent data quality across multiple sources and systems becomes increasingly challenging as data volume and source diversity grow. Manual data quality processes cannot scale effectively with modern data requirements.

Governance requirements become more complex when data spans multiple systems, locations, and access patterns. Organizations struggle to maintain comprehensive audit trails and access controls across distributed data environments.

Many cloud data ingestion platforms offer features such as data quality monitoring, automated governance enforcement, and audit capabilities to help organizations maintain consistent data management standards, though the extent and integration of these features may vary.

How Can Organizations Implement Effective Cloud Data Ingestion Strategies?

Successful cloud data ingestion implementation requires careful planning and consideration of organizational requirements, technical constraints, and business objectives.

1. Assessment and Planning Phase

Begin by conducting a comprehensive assessment of existing data sources, current infrastructure, and business requirements. Identify all data sources that need to be ingested, including databases, applications, files, and external APIs.

Evaluate current data processing requirements including volume, velocity, and variety characteristics. Understanding these requirements helps in selecting appropriate cloud platforms and configuring ingestion processes effectively.

Define success metrics and key performance indicators that will measure the effectiveness of the cloud data ingestion implementation. These metrics should align with business objectives and provide clear benchmarks for progress evaluation.

2. Platform Selection and Architecture Design

Choose cloud data ingestion platforms based on specific requirements including supported data sources, processing capabilities, security features, and cost structure. Consider platforms with a large library of pre-built connectors (some offer 600+) to minimize custom development requirements.

Design the overall data architecture including storage patterns, processing workflows, and integration points with existing systems. Ensure the architecture supports both current needs and future growth requirements.

Plan for hybrid scenarios where some data may need to remain on-premise due to regulatory or business requirements. The architecture should support seamless data movement between cloud and on-premise environments.

3. Security and Compliance Implementation

Implement comprehensive security measures including encryption for data in transit and at rest, role-based access controls, and network security configurations. Ensure security measures meet organizational standards and regulatory requirements.

Configure compliance monitoring and audit logging capabilities to support ongoing governance requirements. Implement automated compliance checking where possible to reduce manual oversight requirements.

Establish data classification and handling procedures that ensure sensitive data receives appropriate protection throughout the ingestion and processing lifecycle.

4. Testing and Validation

Develop comprehensive testing strategies that validate data accuracy, processing performance, and system reliability. Include both functional testing of individual components and end-to-end testing of complete data workflows.

Implement monitoring and alerting systems that provide real-time visibility into data ingestion processes. Configure alerts for data quality issues, processing failures, and performance degradation.

Conduct performance testing to ensure the system can handle expected data volumes and processing requirements. Include testing for peak load scenarios and disaster recovery situations.

5. Migration and Deployment

Plan migration strategies that minimize business disruption while ensuring data consistency and accuracy. Consider phased migration approaches that allow gradual transition from legacy systems.

Implement rollback procedures and contingency plans that can address issues during migration. Ensure business continuity by maintaining parallel systems during transition periods.

Provide training and documentation for teams that will manage and use the new cloud data ingestion capabilities. Include both technical training for administrators and user training for business stakeholders.

What Are the Best Practices for Cloud Data Ingestion Success?

Implementing these best practices ensures reliable, efficient, and secure cloud data ingestion operations that support long-term business objectives.

Data Quality and Validation

Implement comprehensive data quality checks at ingestion points to catch issues before they propagate through downstream systems. Include validation for data completeness, accuracy, consistency, and timeliness.
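A minimal sketch of ingestion-time validation might look like the following. The field names (`order_id`, `amount`, `created_at`) and the 24-hour freshness limit are assumptions made up for this example, and the check covers only completeness, a simple accuracy rule, and timeliness.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical rules for an example "orders" feed.
REQUIRED_FIELDS = ("order_id", "amount", "created_at")
MAX_AGE = timedelta(hours=24)


def validate_record(record: dict, now: Optional[datetime] = None) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    now = now or datetime.now(timezone.utc)
    problems = []

    # Completeness: every required field must be present and non-null.
    for field in REQUIRED_FIELDS:
        if record.get(field) is None:
            problems.append(f"missing {field}")

    # Accuracy: amounts must be non-negative numbers.
    amount = record.get("amount")
    if amount is not None and (not isinstance(amount, (int, float)) or amount < 0):
        problems.append("amount must be a non-negative number")

    # Timeliness: reject records older than MAX_AGE.
    # Assumes created_at is a timezone-aware datetime.
    created_at = record.get("created_at")
    if isinstance(created_at, datetime) and now - created_at > MAX_AGE:
        problems.append("record is stale")

    return problems
```

Records that return a non-empty list can be routed to a quarantine table rather than dropped, so that the issues remain visible and fixable.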

Configure automated data profiling that continuously monitors data characteristics and detects anomalies. This proactive approach helps identify data quality issues before they impact business operations.
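One simple way to flag anomalies in a profiled metric, such as daily row counts, is a z-score test against recent history. The sketch below is one possible approach, not the method any specific platform uses; the function name `is_anomalous` and the default threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float,
                 threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # history is constant; any change is unusual
    return abs(latest - mu) / sigma > threshold
```

For example, with a history of roughly 1,000 rows per day, a day with 200 rows would be flagged, while a day with 1,005 rows would not.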

Establish clear data quality metrics and reporting that provide visibility into ongoing data health. Regular quality reporting helps maintain stakeholder confidence and identifies improvement opportunities.

Performance Optimization

Configure ingestion processes to optimize for throughput while maintaining data quality and system stability. Balance batch sizes, processing parallelism, and resource allocation based on specific workload characteristics.
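The two main knobs mentioned here, batch size and parallelism, can be sketched with the Python standard library. The names `chunked` and `load_in_parallel`, and the `load_batch` callback that returns the number of rows written, are hypothetical; suitable values for `batch_size` and `workers` depend on the workload and the destination's limits.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def chunked(records: Iterable, batch_size: int) -> Iterator[List]:
    """Yield successive lists of at most `batch_size` records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def load_in_parallel(records: Iterable,
                     load_batch: Callable[[List], int],
                     batch_size: int = 500,
                     workers: int = 4) -> int:
    """Load batches concurrently and return the total rows loaded."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(load_batch, chunked(records, batch_size)))
```

Larger batches reduce per-request overhead, while more workers increase concurrency; both raise memory use and pressure on the destination, so they are usually tuned together.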

Implement intelligent retry mechanisms that handle temporary failures without overwhelming source systems or creating data duplication. Configure exponential backoff strategies for resilient error handling.
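A minimal sketch of retrying with exponential backoff and jitter is shown below. `TransientError` is a hypothetical exception type standing in for whatever temporary failures a given source raises, and the default delays are illustrative assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def retry_with_backoff(operation: Callable[[], T],
                       max_attempts: int = 5,
                       base_delay: float = 0.5,
                       max_delay: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying on TransientError with growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts; surface the last error
            # The delay cap doubles each attempt: base, 2*base, 4*base, ...
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Random jitter spreads retries so clients do not hit the
            # source system in lockstep.
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Retries only avoid data duplication if the retried operation is idempotent, for example an upsert keyed on a stable record ID rather than a blind append.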

Monitor performance metrics continuously and adjust configurations based on observed patterns. Use historical performance data to predict capacity requirements and optimize resource allocation.

Cost Management

Implement cost monitoring and optimization practices that ensure efficient resource utilization. Configure automated scaling policies that adjust resources based on actual demand rather than peak capacity requirements.

Use appropriate storage tiers based on data access patterns and retention requirements. Implement lifecycle policies that automatically move less frequently accessed data to cost-effective storage options.
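The logic behind a lifecycle policy can be sketched as a function that maps time since last access to a storage tier. The tier names (`hot`, `cool`, `archive`) and the 30-day and 180-day thresholds are assumptions for illustration; actual tier names, thresholds, and configuration formats differ by cloud provider.

```python
from datetime import datetime, timedelta

# Hypothetical rules: (maximum age since last access, tier name).
TIER_RULES = (
    (timedelta(days=30), "hot"),
    (timedelta(days=180), "cool"),
)
DEFAULT_TIER = "archive"


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Pick the first tier whose age limit covers the object."""
    age = now - last_accessed
    for max_age, tier in TIER_RULES:
        if age <= max_age:
            return tier
    return DEFAULT_TIER
```

In a managed service, the same rules would normally be declared in the provider's lifecycle configuration rather than evaluated in application code.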

Regular cost analysis and optimization reviews help identify opportunities to reduce expenses while maintaining performance and reliability standards.

Security and Governance

Maintain strict access controls and authentication mechanisms that ensure only authorized users can access data and system configurations. Implement multi-factor authentication and regular access reviews.

Configure comprehensive audit logging that captures all data access, processing activities, and configuration changes. Maintain audit logs for compliance requirements and security monitoring.

Regular security assessments and penetration testing help identify vulnerabilities and keep the security posture effective over time.

How Does Airbyte Enhance Cloud Data Ingestion Capabilities?

Airbyte transforms cloud data ingestion by providing an open-source platform that combines enterprise-grade capabilities with the flexibility and control that technical teams demand. With 600+ connectors, Airbyte eliminates the development overhead typically required for data integration projects while maintaining the customization options needed for specific business requirements.

The platform addresses the fundamental challenges that prevent effective cloud data ingestion: high costs, vendor lock-in, and limited flexibility. Airbyte's open-source foundation eliminates expensive licensing fees while generating portable code that prevents vendor dependencies.

Open Source Foundation with Enterprise Features

Airbyte combines the innovation and flexibility of open-source development with enterprise-grade security, governance, and support capabilities. This approach allows organizations to leverage community-driven connector development while maintaining the security and compliance standards required for enterprise operations.

The platform provides multiple deployment options including Airbyte Cloud for fully-managed operations, Self-Managed Enterprise for complete infrastructure control, and Open Source for maximum customization. This flexibility ensures organizations can choose deployment models that align with their security requirements and operational preferences.

Enterprise features include role-based access control, comprehensive audit logging, end-to-end encryption, and support for SOC 2, GDPR, and HIPAA compliance requirements. These capabilities ensure that open-source flexibility doesn't compromise enterprise security or governance standards.

Comprehensive Connector Ecosystem

The 600+ connector library covers databases, APIs, files, and SaaS applications with community-driven development that rapidly expands integration capabilities. Pre-built connectors eliminate the custom development traditionally required for data integration projects.

The no-code connector builder enables organizations to create custom integrations without development overhead. This capability ensures that unique business requirements don't create integration bottlenecks or require expensive custom development.

Enterprise-grade connectors are optimized for high-volume CDC database replication with automated testing and validation to ensure reliability. The connector ecosystem supports both real-time streaming and batch processing methodologies based on specific business requirements.

Production-Ready Performance and Reliability

Airbyte processes over 2 petabytes of data daily across customer deployments, demonstrating the platform's ability to handle enterprise-scale workloads. Kubernetes support provides high availability and disaster recovery capabilities that ensure business continuity.

Automated scaling and resource optimization features ensure cost efficiency while maintaining consistent performance. Real-time monitoring and alerting capabilities provide visibility into pipeline health and performance metrics.

Automated schema management and change detection reduce maintenance overhead while ensuring data consistency during source system changes. These capabilities minimize the operational burden typically associated with maintaining large-scale data integration environments.

Integration with Modern Data Stack

Native integration with Snowflake, Databricks, BigQuery, and other cloud data platforms ensures compatibility with modern data architectures. The platform supports data lakes, warehouses, and real-time streaming architectures without requiring architectural compromises.

Integration with orchestration tools like Airflow, Prefect, and Dagster enables sophisticated workflow management and scheduling. Compatibility with transformation tools like dbt and data quality platforms ensures seamless integration with existing data engineering workflows.

API-first architecture enables integration with existing development workflows and tools while maintaining the flexibility needed for custom business requirements.

What Are the Leading Cloud Data Ingestion Tools and Platforms?

The cloud data ingestion landscape includes several categories of tools and platforms, each addressing different aspects of modern data integration requirements.

1. Enterprise Data Integration Platforms

Traditional enterprise platforms like Informatica and Talend provide comprehensive data integration capabilities but often require significant licensing investment and specialized expertise for maintenance. These platforms excel in complex enterprise environments with extensive governance requirements.

However, the high cost and complexity of traditional platforms often limit their effectiveness for organizations seeking agile data integration capabilities. The specialized expertise required for maintenance can create operational bottlenecks and increase long-term costs.

Modern alternatives focus on reducing complexity while maintaining enterprise-grade capabilities, offering better cost-effectiveness and operational simplicity.

2. Cloud-Native Data Integration Services

Major cloud providers offer native data integration services including AWS Glue, Azure Data Factory, and Google Cloud Dataflow. These services provide tight integration with other cloud services and simplified management within specific cloud ecosystems.

Cloud-native services work well for organizations fully committed to single-cloud strategies but may create vendor lock-in that limits long-term flexibility. The services are optimized for their respective cloud platforms but may not provide the connector variety needed for diverse data source environments.

Organizations requiring multi-cloud flexibility or hybrid deployment capabilities may find cloud-native services limiting for comprehensive data integration requirements.

3. Open Source and Hybrid Solutions

Open-source platforms like Airbyte provide the flexibility and cost-effectiveness that many organizations need while offering enterprise-grade capabilities through commercial offerings. These platforms combine community-driven innovation with professional support and enterprise features.

The hybrid approach allows organizations to start with open-source capabilities and add enterprise features as requirements evolve. This flexibility ensures that initial investments remain valuable as organizational needs change and grow.

Open-source platforms often provide superior connector variety and customization options compared to proprietary alternatives while maintaining cost-effectiveness and avoiding vendor lock-in.

Platform Selection Considerations

When evaluating cloud data ingestion platforms, consider the total cost of ownership including licensing fees, operational overhead, and required specialized expertise. Many traditional platforms have hidden costs that emerge during implementation and ongoing operations.

Assess connector availability and quality for your specific data sources. The breadth of pre-built connectors directly impacts implementation speed and ongoing maintenance requirements.

Evaluate deployment flexibility and vendor lock-in implications. Platforms that generate portable code and support multiple deployment options provide greater long-term flexibility and reduce switching costs.

Consider the platform's ability to scale with your organization's growth and evolving requirements. Solutions that can adapt to changing business needs provide better long-term value than platforms with rigid architectures or limited scalability.

Conclusion

Cloud data ingestion represents a fundamental shift in how organizations approach data integration and management. The combination of scalability, cost-effectiveness, and advanced capabilities makes cloud-based approaches essential for modern data-driven organizations.

Successful implementation requires careful planning, appropriate platform selection, and ongoing optimization based on business requirements and performance metrics. Organizations that effectively leverage cloud data ingestion capabilities gain significant competitive advantages through improved data accessibility, reduced operational costs, and enhanced decision-making capabilities.

Frequently Asked Questions

What is the difference between cloud data ingestion and traditional ETL processes?

Cloud data ingestion extracts and loads data into cloud storage with minimal transformation, works with ELT or ETL tools, and supports real-time streaming with automatic scalability. Traditional ETL platforms perform extensive transformations before loading, though some now offer similar cloud-like features.

How do organizations ensure data security during cloud data ingestion?

Ensure data security during cloud ingestion with end-to-end encryption, role-based access, network protections, and audit logging. Implement data classification, regular assessments, and compliance monitoring. Leading platforms offer built-in features meeting SOC 2, GDPR, and HIPAA standards.

What factors should organizations consider when choosing a cloud data ingestion platform?

When choosing a cloud ingestion platform, consider connector availability, deployment flexibility, scalability, security and compliance, total cost, support quality, and ability to handle batch and real-time data. Evaluate integration with existing tools and platform usability.

How can organizations optimize costs for cloud data ingestion?

Optimize cloud data ingestion costs with demand-based resource scaling, appropriate storage tiers, automated data retention policies, usage monitoring, usage-based pricing, and data compression or deduplication to reduce storage and transfer expenses.

What are the key challenges in implementing cloud data ingestion and how can they be addressed?

Key challenges in cloud data ingestion include data quality, integration complexity, security, compliance, and governance. Address them via thorough source assessment, robust testing, clear policies, platforms with strong connectors and quality features, and training plus change management for smooth adoption.
