What is the Role of Data Integration in Data Mining?


✨ AI Generated Summary

Data integration consolidates fragmented data from multiple sources into a unified system, enhancing data mining by improving data quality, enabling feature engineering, and supporting advanced analytics. Key benefits include:

- Competitive edge through faster, comprehensive insights

- Robust security via centralized data management

- Cost savings by automating data processes

- Enhanced customer experience with a 360-degree data view

Common integration methods include ETL, ELT, streaming, application integration, and data virtualization. Tools like Airbyte facilitate seamless data integration with extensive connectors, open-source flexibility, and efficient change data capture, streamlining data mining workflows for actionable business insights.

Unearthing valuable data is a cornerstone of modern decision-making. Data mining plays a vital role in this by extracting hidden patterns and knowledge from information. However, data often sits in silos, trapped within separate databases, applications, and file systems. This fragmented landscape presents a significant challenge for data mining. This is where data integration comes in: it bridges these isolated sources and gives data mining a holistic, consistent view of your data.

Let's explore how data integration empowers data mining to unlock the true potential of your organization's information.

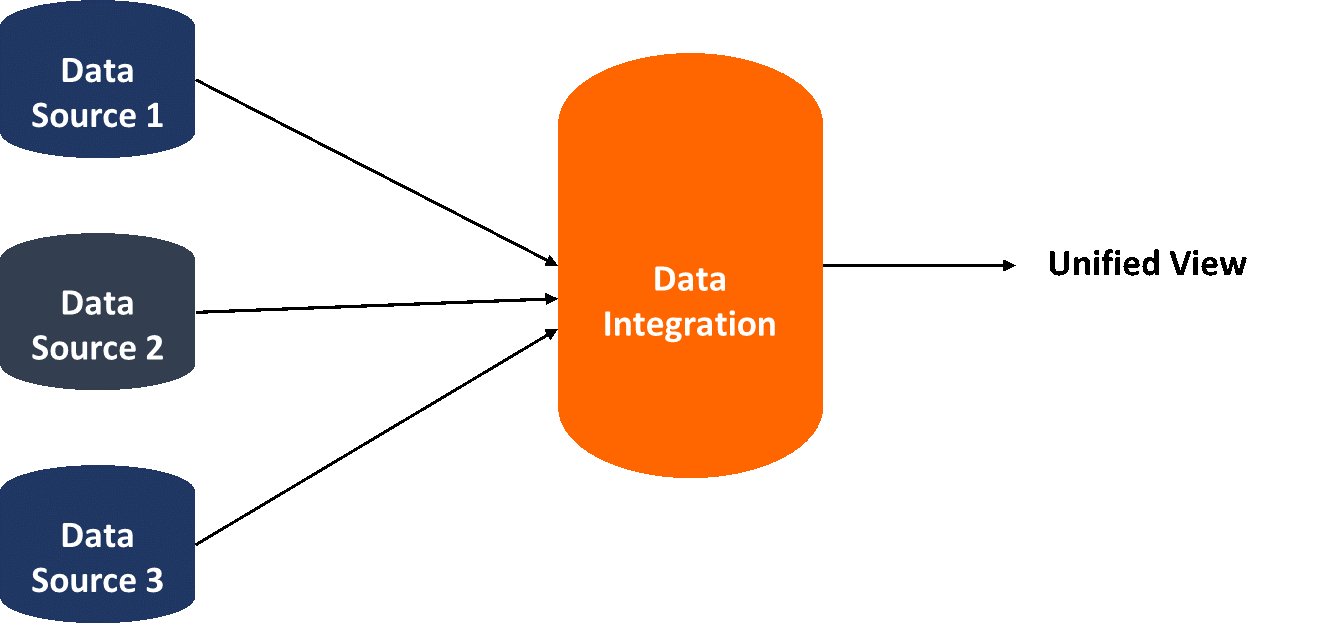

What is Data Integration?

Data integration is the process of bringing together information from different sources and storing it in a unified way. Think of the file cabinets scattered throughout your office, each holding bits and pieces of information on a topic. Data integration gathers those records, sorts them, and places them in one filing cabinet, which makes better-informed decisions possible.

The benefits of data integration are:

- Competitive Edge: With readily available and comprehensive data, your business can respond faster to market changes and opportunities. This agility allows you to stay ahead of the competitors.

- Robust Security: Centralizing data in one hub makes it easier to apply and manage security protocols. It also simplifies monitoring how data is used and helps prevent unauthorized access.

- Enhanced Customer Experience: Consolidated data provides a 360-degree view of your customers, enabling your business to personalize interactions and deliver a more consistent and positive experience.

- Cost Savings: Automating data transfer and processing tasks through integration tools saves time and resources. Eliminating manual data entry frees your employees to focus on higher-value activities. It also reduces the cost of maintaining and operating multiple databases.

Types of Data Integration

Data integration techniques can be implemented in various forms, each with its own strengths that are suited for different scenarios. Here are the common approaches to achieve a unified view of your data:

ETL

ETL is a traditional data integration approach that follows a three-step process:

- Extract: The first phase involves identifying and extracting relevant data points from their original locations. These sources can be databases, flat files, applications, etc.

- Transform: The second phase involves cleaning the extracted data and standardizing its format to match the target system. This might involve handling missing values, converting data types, or resolving inconsistencies.

- Load: In the final phase, the transformed data is loaded into a target system, where downstream applications can use it for analysis or reporting. The destination repositories can be data warehouses or data lakes.
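To make the three phases concrete, here is a minimal Python sketch using only the standard library. It assumes a small CSV export as the source and an in-memory SQLite database as a stand-in for the data warehouse; the table, column, and function names are hypothetical, chosen for illustration only.

```python
import csv
import io
import sqlite3

# Hypothetical export from a source system: note the missing amount and mixed-case country codes.
RAW_CSV = """order_id,customer,amount,country
1,Alice,19.99,us
2,Bob,,US
3,Carol,5.5,de
"""


def extract(text):
    """Extract: read raw rows (all values are strings) from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert types, fill missing amounts with 0.0 (an assumed rule), upper-case countries."""
    cleaned = []
    for row in rows:
        amount = float(row["amount"]) if row["amount"] else 0.0
        cleaned.append((int(row["order_id"]), row["customer"].strip(), amount, row["country"].upper()))
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country").fetchall())
```

Because transformation happens before loading, only cleaned, typed rows ever reach the target table.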

ELT

This method flips the script on ETL. ELT uses the same steps as ETL, but it loads data into the target system immediately after extraction and transforms it there afterward. The destination systems are typically a cloud-based data lake, data warehouse, or data lakehouse. Here is a breakdown of the ELT process:

- Extract: The extraction process is similar to the ETL technique. Data is pulled from various databases or applications.

- Load: The data is loaded directly into the target system in its raw format. These destinations are often data lakes.

- Transform: Once loaded, the data is transformed within the target system. This approach can be advantageous for big data scenarios where raw volume is massive, and transformations can be done whenever required.
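For contrast, the ELT sketch below loads the raw values untouched and defers transformation to SQL running inside the target. As in any small example, an in-memory SQLite database stands in for a cloud warehouse, and the records and table names are hypothetical.

```python
import sqlite3

# Hypothetical raw records pulled from a source API; every value arrives as text.
raw_events = [
    ("1", "2024-01-05", "19.99"),
    ("2", "2024-01-05", ""),
    ("3", "2024-01-06", "5.50"),
]

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse or lakehouse

# Extract + Load: store the data as-is, with no cleaning or type conversion.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, order_date TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_events)

# Transform: runs later, inside the target, using its own SQL engine.
# NULLIF turns empty strings into NULL, which SUM then ignores.
conn.execute("""
    CREATE TABLE daily_revenue AS
    SELECT order_date,
           SUM(CAST(NULLIF(amount, '') AS REAL)) AS revenue,
           COUNT(*) AS orders
    FROM raw_orders
    GROUP BY order_date
""")
print(conn.execute("SELECT * FROM daily_revenue ORDER BY order_date").fetchall())
```

Since `raw_orders` keeps the original values, you can write a different transformation later without re-extracting anything from the source.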

Streaming Data Integration

The streaming data integration technique is designed to handle continuous streams of data generated by sensors, social media feeds, or other real-time sources. Streaming data integration focuses on ingesting, transforming, and delivering data in near real-time to enable analytics and decision-making. You can use tools like Apache Flink, Google Cloud Dataflow, Microsoft Azure Stream Analytics, etc., to build real-time queries and visualizations on data streams from various sources.
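The tools named above each have their own APIs, and the sketch below uses none of them. It is a plain-Python illustration, under simplifying assumptions, of one core streaming idea: processing events one at a time and emitting results per fixed-size (tumbling) time window instead of waiting for a complete dataset. The sensor source is simulated, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Reading = Tuple[int, str, float]  # (timestamp in seconds, sensor id, value)


def sensor_stream() -> Iterator[Reading]:
    """Simulated source; a real pipeline would read from a message queue or socket."""
    yield from [(0, "s1", 20.0), (3, "s2", 30.0), (7, "s1", 22.0), (11, "s1", 24.0), (14, "s2", 28.0)]


def tumbling_averages(stream: Iterable[Reading], window: int) -> Iterator[Tuple[int, Dict[str, float]]]:
    """Yield (window_start, per-sensor averages) each time a window closes.

    Assumes events arrive in timestamp order; late or out-of-order events are not handled.
    """
    current_start = None
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for ts, sensor, value in stream:
        start = ts - ts % window
        if current_start is not None and start != current_start:
            # A newer window has begun, so the previous one is complete: emit it and reset.
            yield current_start, {s: sums[s] / counts[s] for s in sums}
            sums.clear()
            counts.clear()
        current_start = start
        sums[sensor] += value
        counts[sensor] += 1
    if current_start is not None:
        yield current_start, {s: sums[s] / counts[s] for s in sums}  # flush the last window


for start, averages in tumbling_averages(sensor_stream(), window=10):
    print(start, averages)
```

Production engines such as Apache Flink also handle out-of-order and late events, state checkpointing, and parallelism, which this sketch deliberately omits.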

Application Integration

This integration process enables different software applications to communicate and exchange data with each other. For example, the applications in your organization may act as isolated islands of information, each holding valuable data but unable to share it effectively. Application integration bridges these gaps, creating a more collaborative and data-driven environment.
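One common way to connect applications is publish/subscribe messaging: one application announces an event, and others react to it without being directly coupled. The sketch below is a hypothetical, in-process illustration. `MessageBus`, the `customer.created` topic, and both "applications" are invented for the example; a real deployment would use a message broker or an integration platform instead.

```python
import json
from collections import defaultdict
from typing import Callable, Dict, List


class MessageBus:
    """Tiny in-process publish/subscribe bus standing in for a real integration layer."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        # Serialize to JSON, a shared app-neutral format, so publishers and
        # subscribers agree only on the message shape, not on each other's internals.
        message = json.dumps(payload)
        for handler in self._subscribers[topic]:
            handler(json.loads(message))


# Hypothetical "billing" application that keeps its own copy of customer data.
billing_accounts: Dict[str, dict] = {}


def on_customer_created(event: dict) -> None:
    billing_accounts[event["customer_id"]] = {"email": event["email"], "balance": 0.0}


bus = MessageBus()
bus.subscribe("customer.created", on_customer_created)

# Hypothetical "CRM" application announces a new customer instead of writing to billing directly.
bus.publish("customer.created", {"customer_id": "c-42", "email": "ana@example.com"})
print(billing_accounts)
```

Adding another consumer, such as a marketing tool, only requires another `subscribe` call; the CRM side does not change.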

Data Virtualization

The data virtualization technique creates a virtual layer over disparate data sources. It acts like a unified facade, presenting a single point of access to you without physically moving the data. Additionally, virtualization offers real-time access to data, but the processing power demands can be high depending on the complexity of the virtual layer.
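A minimal sketch of the idea, assuming two hypothetical sources: a SQLite table standing in for a CRM database and an in-memory list standing in for a ticketing API. The `VirtualCustomerView` class is illustrative only; it answers each query by reading the sources at call time rather than copying their data into a central store.

```python
import sqlite3
from typing import Dict, Iterator, List

# Source 1 (hypothetical): a CRM database that stays where it is.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])

# Source 2 (hypothetical): rows as returned by a ticketing system's API.
support_tickets: List[dict] = [
    {"customer_id": 1, "status": "open"},
    {"customer_id": 1, "status": "closed"},
    {"customer_id": 2, "status": "open"},
]


class VirtualCustomerView:
    """Facade that combines the sources on demand, without materializing a copy."""

    def __init__(self, crm_conn: sqlite3.Connection, tickets: List[dict]) -> None:
        self.crm_conn = crm_conn
        self.tickets = tickets

    def open_tickets_by_customer(self) -> Iterator[Dict[str, object]]:
        # Every call re-reads both sources, so results are always current.
        for cid, name in self.crm_conn.execute("SELECT id, name FROM customers ORDER BY id"):
            open_count = sum(1 for t in self.tickets if t["customer_id"] == cid and t["status"] == "open")
            yield {"name": name, "open_tickets": open_count}


view = VirtualCustomerView(crm, support_tickets)
print(list(view.open_tickets_by_customer()))
```

Because nothing is cached, a ticket added to the source shows up on the next query, but every query also pays the cost of reading each source again.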

What is Data Mining?

Data mining is the process of analyzing large amounts of data to extract hidden patterns, trends, and insights. It's like sifting through a pile of rocks to find precious gems. In data mining, the “rocks” are the raw data points, and the “gems” are the pieces of valuable information that support better decision-making.

Data mining offers your organization several benefits, which fall into these key areas:

- Pattern Recognition: Data mining algorithms are designed to identify patterns and relationships within the data. These patterns can be simple correlations between variables or more complex, like customer segmentation based on buying habits.

- Predictive Analysis: Data mining can be used to build predictive models that forecast future trends and consumer behavior. By analyzing historical data, your business can gain insights into what might happen in the future. This lets you anticipate your buyers' needs, prepare for market fluctuations, and proactively address potential issues.

- Enhanced Operational Efficiency: Data mining can identify areas where operational processes can be streamlined. By analyzing data on production lines, inventory levels, and resource allocation, you can identify bottlenecks and inefficiencies. This allows you to optimize operations, reduce costs, and improve overall business performance.
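As a small illustration of predictive analysis, the sketch below fits a straight-line trend to historical values with ordinary least squares and extrapolates one period ahead. The monthly sales figures are hypothetical, and real forecasting usually calls for richer models that account for seasonality and noise.

```python
from typing import List, Tuple


def fit_linear_trend(values: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of values against their index (0, 1, 2, ...).

    Returns (slope, intercept). Needs at least two values.
    """
    n = len(values)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


monthly_sales = [100.0, 110.0, 120.0, 130.0]  # hypothetical historical data
slope, intercept = fit_linear_trend(monthly_sales)
forecast_next = slope * len(monthly_sales) + intercept  # the next index is len(values)
print(f"trend: {slope:+.1f} per month, forecast for next month: {forecast_next:.1f}")
```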

What is Data Integration in Data Mining?

Data integration acts as a foundation for successful data mining. It prepares your data for uncovering hidden insights. Here’s how data integration empowers data mining:

Unifying Data Sources

Data mining typically involves analyzing data from various sources, such as customer databases, sales transactions, social media feeds, and sensor readings. Data integration brings all this information together into a single, unified view. This enables data mining algorithms to analyze a more complete picture and identify patterns that might stay hidden within isolated data sources.
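A minimal sketch of this unification step, assuming three hypothetical extracts that share a customer ID. Once combined, a single record shows that the highest-spending customer is also the source of the negative mentions, a pattern no single source reveals on its own.

```python
from collections import defaultdict

# Hypothetical extracts from three separate systems, keyed by a shared customer ID.
customers = {101: {"name": "Alice", "segment": "retail"}, 102: {"name": "Bob", "segment": "wholesale"}}
transactions = [(101, 40.0), (101, 60.0), (102, 500.0)]          # sales system
social_mentions = [(101, "positive"), (102, "negative"), (102, "negative")]  # social media feed


def unify(customers, transactions, mentions):
    """Build one record per customer that combines all three sources."""
    spend = defaultdict(float)
    for cid, amount in transactions:
        spend[cid] += amount
    negative = defaultdict(int)
    for cid, sentiment in mentions:
        if sentiment == "negative":
            negative[cid] += 1
    return {
        cid: {**info, "total_spend": spend[cid], "negative_mentions": negative[cid]}
        for cid, info in customers.items()
    }


for cid, record in unify(customers, transactions, social_mentions).items():
    print(cid, record)
```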

Improved Data Quality

Data quality is paramount for extracting reliable insights through data mining. Data integration helps ensure data accuracy and consistency across different sources. During integration, you can identify and remove errors, handle missing values, and standardize data according to your analysis requirements. As a result, data mining algorithms work with reliable information.
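The sketch below illustrates three typical cleaning steps applied while integrating customer records from two systems: standardizing formats, removing duplicates, and imputing missing values. The rows, the `COUNTRY_ALIASES` mapping, and the choice of median imputation are all assumptions for illustration.

```python
from statistics import median

# Hypothetical customer rows merged from two systems with inconsistent conventions.
rows = [
    {"email": "Ana@Example.com ", "age": "34", "country": "USA"},
    {"email": "ana@example.com", "age": "34", "country": "US"},
    {"email": "li@example.com", "age": "", "country": "United States"},
    {"email": "raj@example.com", "age": "41", "country": "IN"},
]

COUNTRY_ALIASES = {"USA": "US", "UNITED STATES": "US"}  # assumed mapping for this example


def clean(rows):
    # 1. Standardize formats so equivalent values compare equal.
    standardized = []
    for r in rows:
        country = r["country"].strip().upper()
        standardized.append({
            "email": r["email"].strip().lower(),
            "age": int(r["age"]) if r["age"].strip() else None,
            "country": COUNTRY_ALIASES.get(country, country),
        })
    # 2. Remove duplicates that differed only in formatting (first occurrence wins).
    seen, unique = set(), []
    for r in standardized:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    # 3. Impute missing ages with the median of the known ages.
    fill = median(r["age"] for r in unique if r["age"] is not None)
    for r in unique:
        if r["age"] is None:
            r["age"] = fill
    return unique


for record in clean(rows):
    print(record)
```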

Enabling Feature Engineering

Feature engineering is the process of creating new features from existing data that are more relevant to the specific question you’re trying to answer with data mining. Data integration allows you to combine data points from various origins to create these informative features. For instance, you might combine purchase history with website browsing behavior to create a feature that predicts future buying preferences.
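Here is a hypothetical sketch of the purchase-plus-browsing example. The input data and feature names are invented for illustration; the point is that the derived features depend on two sources at once and could not be computed from either one alone.

```python
from collections import Counter

# Hypothetical integrated inputs for one customer, from two different systems.
purchases = [("2024-03-01", "shoes"), ("2024-03-20", "socks")]   # order system: (date, category)
page_views = ["shoes", "jackets", "jackets", "jackets", "shoes"]  # web analytics: viewed categories


def build_features(purchases, page_views):
    """Derive features that combine buying and browsing behavior."""
    bought = {category for _, category in purchases}
    views = Counter(page_views)
    unbought = {cat: n for cat, n in views.items() if cat not in bought}
    return {
        "purchase_count": len(purchases),
        # Share of browsing spent on categories the customer has never bought.
        "unbought_view_share": sum(unbought.values()) / len(page_views) if page_views else 0.0,
        # Most-browsed category not yet purchased: a candidate next purchase.
        "browsed_not_bought": max(unbought, key=unbought.get) if unbought else None,
    }


print(build_features(purchases, page_views))
```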

Facilitating Advanced Data Mining Techniques

Certain data mining techniques, like association rule learning, thrive on a wide range of data points. These techniques help you identify complex relationships and patterns that might otherwise be missed. Data integration provides the comprehensive dataset these advanced techniques need to operate at full capacity, allowing you to mine correlations across datasets and build predictive models.
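To show what association rule learning looks for, the sketch below computes support and confidence for rules between pairs of items. It is a deliberately simplified, pairs-only pass rather than a full algorithm such as Apriori, and the baskets and thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical baskets, e.g. assembled by joining in-store and online order data.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
]


def pair_rules(baskets, min_support=0.4, min_confidence=0.6):
    """Return (lhs, rhs, support, confidence) for rules lhs -> rhs between item pairs.

    support    = share of baskets containing both items
    confidence = share of baskets containing lhs that also contain rhs
    """
    n = len(baskets)
    item_counts = Counter(item for basket in baskets for item in basket)
    pair_counts = Counter(pair for basket in baskets for pair in combinations(sorted(basket), 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return sorted(rules)


for lhs, rhs, support, confidence in pair_rules(baskets):
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")
```

Here `milk -> butter` has confidence 1.0 because every basket containing milk also contains butter.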

Streamline Data Mining Workflows

Integrating data upfront saves significant effort during the data mining process. You don’t have to spend time on tedious tasks like manually collecting and cleaning data from various sources. This streamlined workflow allows you to focus on the core tasks of data exploration, model building, and interpreting the insights unearthed by data mining.

Simplify Your Data Integration Workflows Today with Airbyte

Now that you know how data integration plays a crucial role in data mining by facilitating the collection, aggregation, and preprocessing of data from disparate sources, it's worth understanding how Airbyte enhances this process.

With Airbyte's robust data integration capabilities, you can seamlessly gather data from various sources, harmonize it into a unified format, and prepare it for analysis. By leveraging Airbyte as part of your data mining workflows, you can enhance the efficiency and effectiveness of your data mining efforts, leading to valuable and actionable insights.

Here are some key features of Airbyte:

- Extensive Connector Library: Airbyte offers a collection of 350+ pre-built connectors that allow you to connect with popular databases, APIs, flat files, data warehouses, and data lakes. This wide selection lets you bring data from most common sources into your data platform. Additionally, it has a user-friendly interface and supports building custom connectors through its Connector Development Kit (CDK) in just 10 minutes.

- Open-Source: As an open-source platform, Airbyte offers the flexibility to customize your data integration workflows.

- Change Data Capture: With Airbyte’s Change Data Capture (CDC), you can capture only the changes made to your data since the last sync rather than transferring the entire data set each time. This significantly improves efficiency and reduces the volume of data transferred, especially for large data sets that are updated constantly.
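Conceptually, log-based CDC reads an ordered log of changes from the source and remembers how far it has read. The sketch below simulates that with a hypothetical in-memory change log and checkpoint; it is not Airbyte's implementation or API, and real CDC connectors read the source database's own transaction log instead.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ChangeEvent:
    lsn: int                    # position in the source's change log (assumed strictly increasing)
    op: str                     # "insert", "update", or "delete"
    key: int
    row: Optional[dict] = None  # new row contents; None for deletes


def sync_changes(log: List[ChangeEvent], target: Dict[int, dict], checkpoint: int) -> int:
    """Apply only events newer than the checkpoint and return the new checkpoint."""
    for event in log:
        if event.lsn <= checkpoint:
            continue  # already replicated in an earlier sync
        if event.op == "delete":
            target.pop(event.key, None)
        else:
            target[event.key] = event.row
        checkpoint = event.lsn
    return checkpoint


change_log = [
    ChangeEvent(1, "insert", 1, {"name": "Alice", "plan": "free"}),
    ChangeEvent(2, "insert", 2, {"name": "Bob", "plan": "free"}),
]
replica: Dict[int, dict] = {}
cp = sync_changes(change_log, replica, checkpoint=0)  # initial sync applies events 1 and 2

change_log += [
    ChangeEvent(3, "update", 1, {"name": "Alice", "plan": "pro"}),
    ChangeEvent(4, "delete", 2),
]
cp = sync_changes(change_log, replica, checkpoint=cp)  # only events 3 and 4 are applied
print(cp, replica)
```

Running a sync again with the same checkpoint applies nothing, which is what keeps repeated incremental syncs cheap.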

Wrapping Up!

Data integration in data mining is the backbone of effective, in-depth analysis and informed decision-making. This comprehensive approach empowers data mining techniques to extract hidden patterns, correlations, and trends that might not be evident in isolated data sets. Ultimately, successful data integration paves the way for data mining to yield actionable insights that inform better decision-making and drive your business growth.
