Mastering Data Pipeline Management: A Complete Guide

✨ AI Generated Summary

Data pipelines are essential for organizing, validating, and transforming business data from multiple sources to enable accurate analysis and reporting. Key challenges include data quality issues, pipeline breakdowns, and security risks, which can be mitigated through robust validation, monitoring, and encryption.

- Core components: data sources, quality checks, transformation layers, storage, and monitoring systems.

- Common patterns: batch vs. real-time processing, change data capture, event-driven architectures, and incremental vs. full loads.

- Future trends: cloud-native pipelines, AI/ML integration, automation, and decentralized data mesh architectures.

- Case study: Airbyte helped Anecdote reduce engineering time by 25% with scalable, easy-to-use data pipeline solutions.

Data management may seem like a new concept, but it has been practiced by business organizations for many years. However, with rising data volumes and complexity, this approach has branched out considerably. Data pipelines play a vital role in orchestrating other data-related processes. If you are a data enthusiast seeking to learn more about data pipeline management, you’re in the right place!

What is a Data Pipeline?

While data gets generated from several touchpoints, it often isn’t ready for immediate usage. To process data for particular business use cases, you must build a structure that can carry out data processing tasks in an orderly fashion. Here’s where a data pipeline comes in handy.

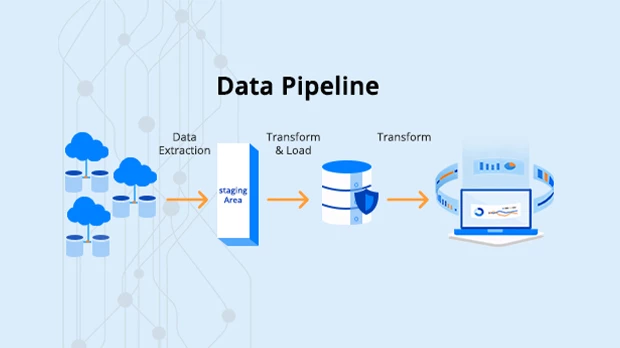

A data pipeline is a series of processes designed to prepare and organize business data for analysis and reporting. It acts as a channel, helping you aggregate, validate, and optimize data before it reaches the final repository.

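Data pipelines differ slightly from data integration processes. Data integration through a data pipeline can be carried out in two ways: ETL (extract, transform, load) and ELT (extract, load, transform). The fundamental difference between the two is the order in which data is transformed and loaded. ETL transforms data before it reaches the destination, while ELT loads raw data first and transforms it inside the destination.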
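To make the difference in sequence concrete, here is a minimal Python sketch. The `extract` and `transform` functions, the sample rows, and the dictionary standing in for a warehouse are all hypothetical and exist only for illustration; a real pipeline would read from and write to actual systems.

```python
def extract() -> list[dict]:
    # Hypothetical source rows; the email casing and whitespace are deliberately messy.
    return [
        {"id": 1, "email": " Ada@Example.com "},
        {"id": 2, "email": "grace@example.com"},
    ]


def transform(rows: list[dict]) -> list[dict]:
    # Normalize emails so downstream joins and deduplication behave predictably.
    return [{**row, "email": row["email"].strip().lower()} for row in rows]


def run_etl(warehouse: dict[str, list[dict]]) -> None:
    # ETL: transform inside the pipeline, then load only the cleaned rows.
    warehouse["customers"] = transform(extract())


def run_elt(warehouse: dict[str, list[dict]]) -> None:
    # ELT: load the raw rows first, then transform them inside the destination.
    warehouse["raw_customers"] = extract()
    warehouse["customers"] = transform(warehouse["raw_customers"])


etl_warehouse: dict[str, list[dict]] = {}
elt_warehouse: dict[str, list[dict]] = {}
run_etl(etl_warehouse)
run_elt(elt_warehouse)
```

Both runs end with the same cleaned `customers` table. The ELT run also keeps the raw copy in the destination, which is useful if you later need to re-run a transformation with different rules.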

Common Challenges Teams Face with Data Management

Like every other sphere of business, you may face some challenges while mastering data pipeline management. Take a look at a few of them:

- Data Quality Issues: Errors during collecting and transforming data in the pipeline may result in inaccurate strategies and poor outcomes. It can be difficult to pinpoint the cause of flawed data, which lowers overall trust in the decision-making process.

- Pipeline Breakdown: Handling growing data volumes can put a strain on the data pipeline, resulting in processing bottlenecks and pipeline failure. This can disrupt operations and lead to productivity and financial losses.

- Data Breaches: Security breaches in data pipelines can have disastrous consequences, especially when you are migrating personally identifiable information. To reduce this risk, your organization must invest in resilient, fault-tolerant data pipelines with proper encryption and role-based access control.

Core Components of Data Pipeline Management

- Data Sources and Integration Points: The first core component in a data pipeline architecture is the entry point where data flows in from multiple sources. A data source can be any system, platform, or device that generates business data. You should be able to integrate and consolidate a variety of data types from various origins through your data pipeline.

- Data Quality Checks and Validation: Running various data quality checks to validate data in the pipeline saves the time and effort required to ensure data reliability and accuracy. You can integrate your data pipeline with data orchestration tools or data quality platforms that help you filter out irrelevant data and standardize it into a usable format for your organization.

- Transformation Layers: The data transformation layer is an integral part of modern data pipelines. Several data pipeline tools offer drag-and-drop transformation filters that allow you to handle missing or null values and perform aggregation and joins.

- Storage and Warehousing: Processed and transformed data has to be securely stored in a destination system, such as a data warehouse or data lake. The primary role of this component is to safely hold large volumes of data until it is ready to be analyzed.

- Monitoring and Alerting Systems: Pipeline monitoring systems are crucial for quickly detecting and resolving issues that may have occurred during data transfer. By integrating your data pipeline with monitoring dashboards or versioning systems, you can keep a record of events and receive notifications in case of failed operations.
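As an illustration of the data quality component above, the following Python sketch separates valid records from rejected ones and records a reason for each rejection. The field names (`order_id`, `amount`) and the three rules are assumptions chosen for the example, not a standard; your own checks will depend on your data.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationResult:
    valid: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, str]] = field(default_factory=list)


def validate_orders(rows: list[dict]) -> ValidationResult:
    """Apply three illustrative checks: required key, uniqueness, non-negative amount."""
    result = ValidationResult()
    seen_ids = set()
    for row in rows:
        order_id = row.get("order_id")
        amount = row.get("amount")
        if order_id is None:
            result.rejected.append((row, "missing order_id"))
        elif order_id in seen_ids:
            result.rejected.append((row, "duplicate order_id"))
        elif not isinstance(amount, (int, float)) or amount < 0:
            result.rejected.append((row, "invalid amount"))
        else:
            seen_ids.add(order_id)
            result.valid.append(row)
    return result


sample = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 1, "amount": 5.0},
    {"order_id": 2, "amount": -3},
    {"amount": 4},
    {"order_id": 3, "amount": "n/a"},
]
report = validate_orders(sample)
rejection_reasons = [reason for _, reason in report.rejected]
```

Keeping the rejection reasons alongside the rows gives a monitoring or alerting system something concrete to report, for example by notifying the team when the share of rejected rows crosses a threshold you choose.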

Common Data Pipeline Patterns

Data pipeline patterns allow the integration and transformation of data suitable for various business purposes. Here are some common data pipeline patterns to explore:

Batch Processing vs. Real-time Streaming

In batch processing, once the data is ingested into the pipeline, it is stored temporarily in a staging area. At a scheduled interval, the accumulated data is processed as a batch. This allows you to break down large volumes of data into smaller, more manageable chunks. Your organization can use batch processing for billing or payroll systems where datasets need to be processed weekly or monthly.

Conversely, real-time or streaming data pipelines can process data within seconds or minutes of data arrival. These pipelines can continuously ingest and produce outputs in near real-time, allowing you to analyze datasets and respond instantaneously. Such pipelines can be used to detect fraudulent transactions in financial databases.
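The contrast between the two modes can be sketched in a few lines of Python. The `process` function, the fixed threshold of 1000, and the sample transactions are hypothetical; real streaming pipelines usually sit on top of a message broker rather than an in-memory iterator.

```python
from collections.abc import Iterable, Iterator


def process(record: dict) -> dict:
    # Hypothetical per-record step: flag transactions above a fixed threshold.
    return {**record, "flagged": record["amount"] > 1000}


def run_batch(staged: list[dict], batch_size: int) -> list[list[dict]]:
    # Batch: records wait in staging and are processed together, one chunk at a time.
    return [
        [process(r) for r in staged[start:start + batch_size]]
        for start in range(0, len(staged), batch_size)
    ]


def run_stream(source: Iterable[dict]) -> Iterator[dict]:
    # Streaming: each record is processed as soon as it arrives.
    for record in source:
        yield process(record)


transactions = [{"id": i, "amount": a} for i, a in enumerate([50, 2500, 300, 1200, 75])]
batches = run_batch(transactions, batch_size=2)

stream = run_stream(iter(transactions))
first_result = next(stream)  # available before the remaining records are read
```

In the batch run, no result exists until a whole chunk has been processed. In the streaming run, the first result is ready as soon as the first record has been read, which is what makes use cases like fraud detection practical.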

Change Data Capture (CDC)

Change data capture is a process that allows your data pipeline to track changes in your source systems and reflect them in the destination system. This method allows the pipeline to identify data modifications, ensuring timely synchronization. CDC can be push-based, where the source database can send updates to downstream applications, or pull-based, where downstream systems pull modified data from the source.
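Here is a minimal sketch of the pull-based variant in Python. It detects changes by comparing two snapshots of a table keyed by primary key, which is an assumption made to keep the example self-contained; many production CDC tools read the database's transaction log instead. The function names and sample rows are hypothetical.

```python
def capture_changes(previous: dict[int, dict], current: dict[int, dict]) -> list[dict]:
    """Compare two snapshots keyed by primary key and emit change events."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append({"op": "insert", "key": key, "row": row})
        elif previous[key] != row:
            changes.append({"op": "update", "key": key, "row": row})
    for key in previous:
        if key not in current:
            changes.append({"op": "delete", "key": key, "row": None})
    return changes


def apply_changes(destination: dict[int, dict], changes: list[dict]) -> None:
    """Replay change events so the destination mirrors the source."""
    for change in changes:
        if change["op"] == "delete":
            destination.pop(change["key"], None)
        else:
            destination[change["key"]] = change["row"]


previous_snapshot = {1: {"name": "Ada"}, 2: {"name": "Grace"}}
current_snapshot = {1: {"name": "Ada L."}, 3: {"name": "Linus"}}

destination = dict(previous_snapshot)  # the destination starts in sync with the old state
changes = capture_changes(previous_snapshot, current_snapshot)
apply_changes(destination, changes)
```

Only three small change events cross the pipeline here (one update, one insert, one delete) rather than the whole table, which is the main appeal of CDC for large sources.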

Event-driven Architectures

To build an event-driven architecture, you must configure the data pipeline to receive data whenever a specific event occurs. The event could be triggered by a customer’s actions on your website, a webhook, or a change in a data store. Once the event has been received, your data pipeline should process the data and issue notifications to the concerned team. You can also build event-driven pipelines through polling. Here, you must schedule code to run at particular intervals to check data stores for any new events.
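The polling approach can be sketched as follows in Python. The in-memory `event_store`, the integer event IDs used as a cursor, and the `handle` function are assumptions for illustration; in practice, each call to `poll_new_events` would be one scheduled run against a real data store.

```python
def poll_new_events(event_store: list[dict], last_seen_id: int) -> tuple[list[dict], int]:
    """Return events newer than the cursor, plus the advanced cursor."""
    new_events = [e for e in event_store if e["id"] > last_seen_id]
    if new_events:
        last_seen_id = max(e["id"] for e in new_events)
    return new_events, last_seen_id


def handle(event: dict) -> str:
    # Hypothetical handler: a real one would process the data and notify a team.
    return f"notify team: {event['type']} (event {event['id']})"


event_store = [{"id": 1, "type": "signup"}, {"id": 2, "type": "purchase"}]
cursor = 0
notifications: list[str] = []

# First scheduled run: picks up the two existing events.
events, cursor = poll_new_events(event_store, cursor)
notifications += [handle(e) for e in events]

# A new event arrives between runs.
event_store.append({"id": 3, "type": "refund"})

# Second scheduled run: picks up only the new event.
events, cursor = poll_new_events(event_store, cursor)
notifications += [handle(e) for e in events]
```

Persisting the cursor between runs is what prevents the same event from being handled twice. The trade-off against a webhook is latency: an event waits until the next scheduled run before anything happens.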

Incremental vs. Full Loads

When you perform a full load through your data pipeline, you replace all the data in your target system with new data. While this process ensures data consistency, as all previous records are completely overwritten, it is quite resource-intensive for huge datasets.

On the other hand, through an incremental load, the pipeline only adds modified or newly ingested data from your source to the target system. Since the destination is updated with recent changes, this method is faster and more efficient in data pipeline management. However, you must implement change-tracking mechanisms to identify when modifications were made to the dataset.
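The two strategies can be compared with a short Python sketch. It assumes each source row carries an `updated_at` timestamp and uses the newest timestamp seen so far as a watermark, which is one common change-tracking mechanism but not the only one. The function names and sample rows are hypothetical.

```python
from datetime import datetime


def full_load(source: list[dict], target: dict[int, dict]) -> None:
    # Full load: discard everything in the target and rewrite it from the source.
    target.clear()
    target.update({row["id"]: row for row in source})


def incremental_load(source: list[dict], target: dict[int, dict], watermark: datetime) -> datetime:
    # Incremental load: upsert only rows modified after the previous run's watermark.
    changed = [row for row in source if row["updated_at"] > watermark]
    for row in changed:
        target[row["id"]] = row
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    return max((row["updated_at"] for row in changed), default=watermark)


source = [
    {"id": 1, "status": "paid", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "status": "pending", "updated_at": datetime(2024, 1, 2)},
]
target: dict[int, dict] = {}
full_load(source, target)
watermark = datetime(2024, 1, 2)

# Later, one row is modified and one new row arrives in the source.
source[1] = {"id": 2, "status": "paid", "updated_at": datetime(2024, 1, 3)}
source.append({"id": 3, "status": "pending", "updated_at": datetime(2024, 1, 4)})
watermark = incremental_load(source, target, watermark)
```

The incremental run touches two rows instead of three, and the gap widens as the table grows. One limitation of a timestamp watermark is that rows deleted from the source are never seen, so deletions need a separate mechanism such as CDC or an occasional full load.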

Data Synchronization Patterns

Data synchronization patterns are methods that data pipelines use to keep data consistent across systems and to ensure the orderly execution of data flow tasks. Synchronization can happen in one direction, for example, when data is sent from an application to a data warehouse. Another data synchronization pattern is the bi-directional sync, where the pipeline allows data updates to move in both directions.
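A small Python sketch shows how the two directions differ. The bi-directional version needs a rule for resolving conflicts when both sides hold a copy of the same record; this example assumes each record carries a `version` number and lets the higher version win, which is only one possible rule. The system names and records are hypothetical.

```python
def sync_one_way(source: dict[str, dict], destination: dict[str, dict]) -> None:
    # One-way: the source is authoritative, so its records overwrite the destination's.
    for key, record in source.items():
        destination[key] = dict(record)


def sync_bidirectional(a: dict[str, dict], b: dict[str, dict]) -> None:
    # Bi-directional: for each key, the copy with the higher version wins on both sides.
    for key in set(a) | set(b):
        rec_a, rec_b = a.get(key), b.get(key)
        if rec_b is None or (rec_a is not None and rec_a["version"] > rec_b["version"]):
            b[key] = dict(rec_a)
        elif rec_a is None or rec_b["version"] > rec_a["version"]:
            a[key] = dict(rec_b)


crm = {
    "c1": {"email": "old@example.com", "version": 1},
    "c2": {"email": "b@example.com", "version": 1},
}
billing = {
    "c1": {"email": "new@example.com", "version": 2},
    "c3": {"email": "c@example.com", "version": 1},
}

sync_bidirectional(crm, billing)  # updates flow in both directions

warehouse: dict[str, dict] = {}
sync_one_way(crm, warehouse)  # the warehouse only ever receives data
```

After the bi-directional sync, both systems hold the same three customers, and the newer email for `c1` has replaced the older one. The one-way sync never writes back to the CRM, so it needs no conflict rule at all.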

Future of Data Pipeline Management

- Emerging Trends: As cloud-based data integration tools are gaining popularity, several non-technical users are foraying into building data pipelines without requiring IT expertise. This upcoming shift enables more team members with limited technical skills to get involved in data pipeline management and decision-making.

- AI/ML Integration: You can boost customer retention rates by integrating machine learning (ML) and artificial intelligence (AI) architectures into your data pipeline. AI models and ML algorithms can automate data transformation tasks and quickly uncover patterns in the dataset, allowing you to analyze customer preferences in near real-time. This will enable you to enhance customer experience in the long run.

- Automation Opportunities: Incorporating automation tools into your data pipelines frees you from repetitive and mundane tasks. By automating essential tasks like code testing and debugging the pipeline architecture, you can reduce the risk of human error. It also helps you allocate resources to high-priority tasks.

- Industry Predictions: A data mesh is a modern data architectural concept in which each business unit treats data as a product. This decentralized approach allows departments to control their data pipelines and services completely. It provides more autonomy at the grassroots levels, enabling them to develop better data-driven strategies.

- Preparing for Scale: Shifting from traditional to cloud-native data pipelines can give your organization more flexibility and scalability. Traditional pipelines are limited by fixed resources and cannot automatically adjust compute resources based on data demands. Cloud pipelines are more elastic and scalable and can catch up with the latest trends more quickly.

How Airbyte Helped Anecdote Speed Up the Platform’s Launch and Growth with Pipeline Management

Founded in 2020, Airbyte has quickly carved a niche for itself as an easy-to-use and robust data movement platform. Over 7,000 data-driven companies use Airbyte daily for their data pipeline deployment and management. Anecdote is one such company that vouches for Airbyte’s scalability and simple yet effective features.

Anecdote, an AI-powered platform for customer insights, was founded in 2022 by Pavel Kochetkov and Abed Kasaji. Right from the start, Airbyte demonstrated its value by saving the team 25% of their data engineering time and resources.

Instead of developing in-house data integration pipelines and infrastructure, which would have taken months, Anecdote turned to Airbyte’s extensive library of connectors and custom data pipeline solutions. This efficiency allowed the bootstrapped startup to develop its core platform, expand its team, and secure funding, all of which proved critical for its survival.

Currently, Anecdote uses nine data sources and leverages open-source technologies like Python and Linux. Their team relies heavily on Airbyte connectors to move data into Anecdote’s S3 storage, which then integrates with the platform’s other machine learning pipelines.

Anecdote’s first engineer, Mikhail Masyagin, has spoken at length about Airbyte’s reliability and open-source community support. You can read more about the company’s positive experience with Airbyte here.

The Final Word

Data pipeline creation, maintenance, and management may seem complex at first. However, this guide helps you understand the crucial components, common challenges, and emerging trends.

Opt for robust data movement tools like Airbyte to simplify data pipeline management. This will leave the infrastructure and maintenance to the experts, freeing your resources for important strategy and analysis.
