Incremental Load in ETL: How It Works and Why It Matters

✨ AI Generated Summary

Your business benefits from ETL incremental loading by transferring only new or changed data, improving efficiency and reducing resource usage compared to full data loads. Key advantages include:

- Faster processing and reduced network traffic by avoiding full dataset transfers.

- Improved data freshness and easier error recovery with granular updates.

- Retention of historical data and suitability for large, frequently updated datasets.

Common implementation methods include timestamp-based, unique identifier, log-based CDC, and hash-based approaches, while challenges like handling deletions and schema changes can be addressed with CDC, soft deletes, and automatic schema detection. Platforms like Airbyte facilitate incremental loading with features such as deduplication, CDC support, automatic schema updates, and robust failure recovery.

Your business integrates data from diverse sources into a data warehouse to gain a consolidated view of your operations. As the information in your source systems continues to change, it’s crucial that these updates are reflected in your destination system in a timely manner. Otherwise, decisions end up being based on outdated or inaccurate data, which can hurt your business.

However, traditional methods of loading data often require transferring entire datasets, which can be quite challenging, especially as your data volume grows. This is where incremental loading in ETL can greatly help. It streamlines the process, ensuring that your target system remains current and relevant without the overhead of full data transfers.

In this blog, you will learn how incremental load in ETL works, its advantages, and the challenges and possible solutions for efficient data management.

What is ETL Incremental Loading?

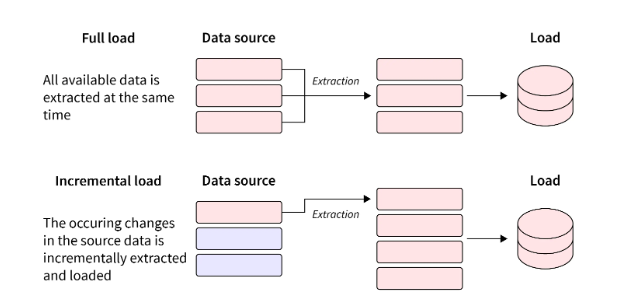

Incremental loading in ETL (Extract, Transform, Load) is a data processing technique that focuses on transferring only the data that has changed or been newly added since the last load. Unlike full loading, which involves reprocessing and moving the entire dataset each time, incremental loading focuses solely on new or modified records. This process is especially advantageous when working with large datasets, as it minimizes the amount of data that needs to be transferred, significantly reducing both processing time and system load.

For instance, if a database contains millions of records but only a few hundred have changed since the last update, an incremental load will only move those modified records rather than the entire dataset. This leads to faster data processing cycles and reduced strain on system resources, enabling you to keep your data up-to-date without unnecessary overhead.

Key Components of Incremental Loading

Here are the key elements involved in incremental data processing:

Source Change Detection

At the core of incremental data loading is the ability to detect changes in the source systems. This process involves identifying new, modified, or deleted records since the last data load. Various mechanisms, such as timestamps or log-based Change Data Capture, can be utilized to track changes accurately.

Extraction Process

During extraction, only the records that meet the change criteria (e.g., those with a timestamp greater than the last loaded timestamp) are pulled from the source system. This selective extraction minimizes data transfer and speeds up the process.

Transformation Logic

The extracted data may require transformation to fit the target schema. This may include data cleansing, formatting, and applying business rules. Transformations are crucial for maintaining data quality and consistency in the destination system.

Loading Mechanisms

There are two approaches based on the volume of data being loaded in incremental processing: stream and batch. Stream incremental load is designed for scenarios where small volumes of data are processed continuously. In contrast, batch is used for larger volumes of data, where updates are loaded at scheduled intervals.

Data Validation and Consistency Checks

After the data is loaded, validation steps should be in place to ensure the incremental load doesn’t introduce inconsistencies. This might include running data quality checks or comparing a sample of the loaded data with the source to ensure accuracy. Ensuring data consistency is key to preventing data integrity issues in the long run.

Benefits of Incremental Loading in ETL

Let’s look at the key advantages of incremental data loading:

Efficiency in Data Processing

Incremental loading deals only with data that has been modified or newly added, which significantly reduces the amount of data to be moved and processed. This results in faster ETL processes and better utilization of system resources.

Data Freshness

Frequent loading cycles made possible by the efficiency of incremental loading ensure that your target system is constantly updated with the latest data. This improves the freshness and relevance of the data available for downstream applications.

Reduced Network Traffic

By transferring only the changed data, incremental loading significantly reduces the amount of data that needs to be moved across the network. This reduction in network traffic leads to faster data transfers and prevents network congestion, which can affect other operations reliant on network bandwidth. Reduced network load also decreases latency, contributing to faster ETL cycles and better system responsiveness.

Improved Error Recovery

You'll find error handling and recovery much more manageable with incremental loading. If an ETL job fails, you only need to reprocess the failed increment rather than the entire dataset. This granular recovery approach significantly reduces the impact of failures on your data pipeline and helps maintain better system reliability.

Lower Costs

Incremental loading can help lower operational costs by reducing the computational power and storage required for ETL processes. Instead of transferring the entire dataset, an incremental load processes only the records that are new or modified since the last update. As a result, fewer server resources are consumed, which directly translates to lower costs, especially with cloud-based services where pricing is often tied to resource usage.

Difference between ETL Full Loading and ETL Incremental Loading

There are two primary approaches to loading data into the destination system—full loading and incremental loading. Both methods serve different purposes and are chosen based on factors like data volume, frequency of updates, and resource efficiency.

Let's explore the key differences between them in a tabular format:

When Does It Make Sense to Use ETL Incremental Loading?

Incremental processing of data is particularly beneficial for specific scenarios. Here’s a detailed look at when it makes sense to implement incremental loading in your data management strategy:

Large Datasets

If your organization handles substantial datasets, full loads can be impractical. For example, if you have millions of customer records, reloading all of them every time can be resource-intensive and time-consuming. Incremental loading enables you to focus only on those records that have changed, making the ETL process faster.

Frequent Data Updates

If your data changes frequently, such as in e-commerce platforms where new sales transactions are recorded continuously, incremental loading becomes crucial. You can update your data warehouse with the latest transactions instead of reloading the entire sales history. This way, you maintain up-to-date information with minimal impact on performance.

Retention of Historical Data

Incremental loading helps retain historical data when old records are deleted from the source. By loading only new and modified data during each sync, you can keep a complete history in your target system. This is important for compliance and auditing purposes: even if data is removed from the source, it’s still available in the data warehouse for analysis and reporting.

Real-Time Analytics

In scenarios where real-time analytics are crucial, incremental loading is invaluable. It enables you to process the latest updates quickly so you can make timely decisions. For example, consider a financial trading platform that requires immediate transaction data to be available for analysis. By using incremental loading, the platform can quickly incorporate new data without delay, ensuring traders have access to the latest information.

Limited Resources

If your organization has limited resources, such as computing power and storage, incremental loading is an efficient approach to data management. Loading only the necessary data helps prevent overload and reduces the strain on your network, ensuring smoother operations.

Approaches to Implementing Incremental Loading

Here’s a breakdown of various approaches to implementing incremental load in ETL:

Timestamp-Based Incremental Load

This method uses timestamps to identify new or modified records. By comparing the last modified timestamp of records in the source system with the last successful load timestamp in the data warehouse, you can efficiently capture only the changes. This approach is particularly effective for systems where data is frequently updated or created, such as transaction logs or user activity records.
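The timestamp comparison described above can be sketched in a few lines. This is a minimal illustration using an in-memory SQLite database; the `customers` table, its `updated_at` column, and the helper names are assumptions made for the example, not part of any specific tool.

```python
import sqlite3

def extract_changed_rows(conn, last_loaded_at):
    """Return rows modified after the stored watermark, oldest first."""
    cur = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_loaded_at,),
    )
    return cur.fetchall()

def next_watermark(rows, previous):
    """Advance the watermark to the newest timestamp seen, if any."""
    return max((row[2] for row in rows), default=previous)

# Hypothetical source table; ISO-8601 timestamps sort correctly as text.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Ada", "2024-01-01T09:00:00"),
        (2, "Grace", "2024-01-02T10:30:00"),
        (3, "Linus", "2024-01-03T08:15:00"),
    ],
)

watermark = "2024-01-01T12:00:00"  # timestamp of the last successful load
changed = extract_changed_rows(source, watermark)
watermark = next_watermark(changed, watermark)
```

Only rows 2 and 3 are extracted here, and the watermark moves to the newest timestamp seen. In practice the watermark would be persisted only after the load into the target succeeds, so that a failed run is retried from the same point.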

Unique Identifier Incremental Load

This approach relies on a primary key or unique identifier to track changes in the source data. After each ETL execution, the highest unique identifier value is stored, and subsequent loads only fetch records with identifiers greater than this stored value. It is particularly useful when no timestamp is available or when timestamps are unreliable. Because it assumes identifiers that only increase, it captures newly inserted records but not updates to existing ones, so it suits append-only data best.
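As a rough sketch of the idea, the following example keeps a high-water mark of the largest identifier loaded so far. The `orders` records and the `load_new_records` helper are hypothetical and use plain Python lists in place of real source and target systems.

```python
def load_new_records(source_rows, target, last_id):
    """Append rows whose id exceeds the stored high-water mark.

    Returns the new high-water mark (unchanged if nothing was loaded).
    """
    new_rows = [row for row in source_rows if row["id"] > last_id]
    target.extend(new_rows)
    return max((row["id"] for row in new_rows), default=last_id)

orders = [{"id": 1, "total": 20.0}, {"id": 2, "total": 35.5}]
warehouse = []

# First run: nothing has been loaded yet, so the mark starts at 0.
high_water_mark = load_new_records(orders, warehouse, last_id=0)

# A new order arrives; the second run picks up only id 3.
orders.append({"id": 3, "total": 12.0})
high_water_mark = load_new_records(orders, warehouse, high_water_mark)
```

Note that a change to an existing order (for example, a corrected total on id 1) would not be picked up by this comparison, since its identifier is already below the stored mark.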

Log-based CDC

Log-based CDC is a specific type of Change Data Capture (CDC) that works by reading the transaction logs of a database to track changes. Every time a record is inserted, updated, or deleted, the database logs these actions for recovery and replication purposes. Because changes are captured directly from these logs rather than by querying the tables, the impact on source systems is minimal.
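Reading a real transaction log requires database-specific tooling, so the sketch below only illustrates the replay side: applying an ordered stream of change events to a target. The event shape (`op`, `key`, `row`) is a hypothetical simplification and does not match the format of any particular database log.

```python
def apply_change_events(target, events):
    """Replay ordered change events against a target keyed by primary key."""
    for event in events:
        key = event["key"]
        if event["op"] in ("insert", "update"):
            target[key] = event["row"]
        elif event["op"] == "delete":
            target.pop(key, None)  # tolerate deletes for keys never loaded
    return target

# Hypothetical events, in the order they were committed at the source.
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Ada"}},
    {"op": "insert", "key": 2, "row": {"name": "Grace"}},
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "delete", "key": 2, "row": None},
]
replica = apply_change_events({}, change_log)
```

After the replay, the replica holds only record 1 with its updated name. The delete of record 2 is reflected as well, which is something a query-based approach would miss.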

Hash-Based Incremental Load

Hash-based incremental loading employs hash functions to detect changes in records. In this approach, a hash value is generated for each record based on its content. During the ETL process, these hash values are compared between the source and target tables to identify which records have changed since the last load. This technique is particularly useful for huge datasets where it’s impractical to compare every field individually.
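A minimal sketch of hash comparison follows, using SHA-256 over a JSON serialization of each record. The choice of hash function, the serialization, and the helper names are assumptions for illustration; other digests or row encodings would work the same way.

```python
import hashlib
import json

def row_hash(row):
    """Hash a row's content; sort_keys keeps the digest stable across key order."""
    payload = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def detect_changes(source_rows, stored_hashes):
    """Return keys of rows that are new or whose hash differs from the stored one."""
    changed = []
    for key, row in source_rows.items():
        if stored_hashes.get(key) != row_hash(row):
            changed.append(key)
    return changed

# Hashes recorded in the target during the previous load.
previous = {
    1: {"name": "Ada", "city": "London"},
    2: {"name": "Grace", "city": "NYC"},
}
stored = {key: row_hash(row) for key, row in previous.items()}

current = {
    1: {"name": "Ada", "city": "London"},       # unchanged
    2: {"name": "Grace", "city": "Arlington"},  # modified
    3: {"name": "Linus", "city": "Helsinki"},   # new
}
changed_keys = detect_changes(current, stored)
```

Storing one digest per record means the comparison touches a single value per row instead of every column, at the cost of computing the hash on each run.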

Slowly Changing Dimensions (SCD)

SCD refers to a set of techniques used in data warehousing to manage changes in dimension data over time. There are several types of SCDs, but the most common are Type 1 (overwrite) and Type 2 (historical tracking). Each type addresses different needs regarding how historical data should be preserved or updated when changes occur. Implementing SCDs enables you to maintain accurate historical records while still reflecting current information, making it essential for effective reporting and analysis.
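To make the Type 2 behavior concrete, here is a small sketch that expires the current version of a dimension row and appends a new one when its attributes change. The `valid_from` and `valid_to` column names and the `apply_scd2` helper are assumptions for the example; real warehouses often add a surrogate key and a current-row flag as well.

```python
def apply_scd2(dimension, key, attributes, effective_date):
    """Close the current version of `key` if its attributes changed, then add a new one."""
    current = next(
        (r for r in dimension if r["key"] == key and r["valid_to"] is None),
        None,
    )
    if current is not None:
        if current["attributes"] == attributes:
            return dimension  # nothing changed; keep the current version
        current["valid_to"] = effective_date  # expire the old version
    dimension.append(
        {
            "key": key,
            "attributes": attributes,
            "valid_from": effective_date,
            "valid_to": None,  # None marks the current version
        }
    )
    return dimension

dim_customer = []
apply_scd2(dim_customer, 42, {"city": "Berlin"}, "2024-01-01")
apply_scd2(dim_customer, 42, {"city": "Munich"}, "2024-06-01")
apply_scd2(dim_customer, 42, {"city": "Munich"}, "2024-07-01")  # no change
```

The dimension ends up with two rows for customer 42: the Berlin version closed on 2024-06-01 and the open Munich version. A Type 1 implementation would instead overwrite the city in place and keep a single row.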

Common Challenges & Solutions

Incremental load in ETL presents certain challenges that you must address to ensure successful data processing. Here are a few of them:

Handling Deleted Records

One of the primary difficulties in incremental processing is managing deleted records. When a record is deleted from the source, the incremental replication may not correctly transmit this event to the destination, as it typically focuses on new or modified records. This can lead to discrepancies in the target database, where deleted records still exist.

Solution: Implement a CDC mechanism to replicate data accurately. CDC reads a log of the changes made to the source database. This log captures all modifications, including inserts, updates, and deletions. Thus, deletions in the source are identified and reflected in the destination. This ensures that the replicated data remains consistent and up-to-date with the source.

Another approach to handling deleted records is using a soft delete strategy. Instead of physically removing records from the source database, a soft delete involves marking records as deleted by setting a specific flag (e.g., IsDeleted). This flag indicates that the record should be treated as inactive or deleted without being removed from the database. The ETL process can then filter on this flag, ensuring that marked records are not included in the target system.
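The soft delete strategy can be sketched as follows. The example assumes each changed row carries an `is_deleted` flag (a lowercase stand-in for the IsDeleted column mentioned above) and uses a dictionary as a hypothetical target.

```python
def sync_with_soft_deletes(changed_rows, target):
    """Upsert active rows and remove rows flagged as deleted at the source."""
    for row in changed_rows:
        if row["is_deleted"]:
            target.pop(row["id"], None)  # drop it if it was loaded earlier
        else:
            target[row["id"]] = {"name": row["name"]}
    return target

target = {1: {"name": "Ada"}, 2: {"name": "Grace"}}

# Rows picked up by the incremental extract, including a soft-deleted one.
changes = [
    {"id": 2, "name": "Grace", "is_deleted": True},
    {"id": 3, "name": "Linus", "is_deleted": False},
]
sync_with_soft_deletes(changes, target)
```

Because setting the flag is an ordinary update, the deleted row shows up in a timestamp-based extract like any other change, which is what makes this approach work without reading the transaction log.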

Dealing with Schema Changes

Another challenge in incremental loading is managing schema changes in the source system. Over time, tables may be modified, which can include adding new columns, renaming fields, or changing data types. If these are not addressed properly, the ETL process may fail or result in incorrect data being loaded into the target system.

Solution: To manage schema changes, you can use ETL tools that offer automatic schema detection. These tools can dynamically identify changes in the source schema, such as new columns or renamed fields, and adjust the data mapping accordingly. Additionally, incorporating version control for schema updates and performing regular audits can help ensure data compatibility.
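As a simplified illustration of schema detection, the sketch below compares column lists between a source and a target SQLite table and adds any column the target is missing. It handles only added columns; renames and type changes need more care. The `users` table and helper names are hypothetical, and table and column names are interpolated into SQL here, so they must come from a trusted source.

```python
import sqlite3

def column_types(conn, table):
    """Map column name to declared type using SQLite's table_info pragma."""
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}

def add_missing_columns(source, target, table):
    """Add columns that exist in the source table but not yet in the target."""
    existing = column_types(target, table)
    added = []
    for name, declared_type in column_types(source, table).items():
        if name not in existing:
            target.execute(f"ALTER TABLE {table} ADD COLUMN {name} {declared_type}")
            added.append(name)
    return added

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute("CREATE TABLE users (id INTEGER, name TEXT, email TEXT)")
target.execute("CREATE TABLE users (id INTEGER, name TEXT)")

added = add_missing_columns(source, target, "users")
```

Running the check before each load lets the pipeline adapt to a new `email` column instead of failing on it. Existing target rows simply hold NULL for the new column until they are next updated.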

Managing Data Consistency

Data consistency is critical in ETL processes, especially when dealing with incremental loads. Inconsistent data can arise because of several factors like concurrent updates, partial loads, or errors during the transformation process. This can lead to inaccurate reporting and decision-making.

Solution: Perform data validation checks at various stages of the ETL process. For example, compare row counts, sums, or checksums between the source and destination systems to confirm that all records have been transferred correctly. Furthermore, set up comprehensive logging and auditing. This lets you track the exact records loaded in each ETL run, helping you troubleshoot any inconsistencies.
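The row count and sum comparisons mentioned above might look like this in their simplest form. The `amount_cents` field and the `reconcile` helper are assumptions for the example; amounts are kept as integers to avoid floating-point rounding in the totals.

```python
def reconcile(source_rows, target_rows, amount_field="amount_cents"):
    """Compare row counts and a column total between source and target."""
    checks = {
        "row_count": (len(source_rows), len(target_rows)),
        "amount_sum": (
            sum(r[amount_field] for r in source_rows),
            sum(r[amount_field] for r in target_rows),
        ),
    }
    return {
        name: {"source": s, "target": t, "ok": s == t}
        for name, (s, t) in checks.items()
    }

source_rows = [
    {"id": 1, "amount_cents": 1999},
    {"id": 2, "amount_cents": 500},
    {"id": 3, "amount_cents": 1250},
]
target_rows = source_rows[:2]  # simulate a load that missed one record

report = reconcile(source_rows, target_rows)
```

Both checks fail in this run, which points to a missing record rather than a corrupted value. A mismatch in the sum with matching row counts would instead suggest that a value was altered during transformation.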

Recovery from Failures

Failures can occur at any stage of the ETL process. Since an incremental load only processes data changes, recovering from a failure can be more complicated than with a full load. If a load fails midway, some changes may be applied to the target system while others are not, resulting in an inconsistent state.

Solution: Consider implementing techniques like checkpointing to effectively manage recovery from failures. Checkpointing captures the data that has been successfully processed and loaded. This ensures that if a failure occurs, the system can resume from the last successful checkpoint rather than starting over from the beginning.
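Here is a minimal sketch of checkpointing, assuming the position in the input is a simple offset stored in a JSON file. The file layout, the `fail_at` parameter used to simulate a crash, and the helper names are all hypothetical.

```python
import json
import os
import tempfile

def load_checkpoint(path):
    """Return the last saved offset, or 0 if no checkpoint exists yet."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["last_offset"]

def save_checkpoint(path, offset):
    """Write the checkpoint to a temp file, then swap it in with a rename."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"last_offset": offset}, f)
    os.replace(tmp, path)

def run_load(records, target, path, batch_size=2, fail_at=None):
    """Load records in batches, saving a checkpoint after each batch."""
    offset = load_checkpoint(path)
    while offset < len(records):
        if fail_at is not None and offset >= fail_at:
            raise RuntimeError("simulated failure")
        batch = records[offset:offset + batch_size]
        target.extend(batch)
        offset += len(batch)
        save_checkpoint(path, offset)
    return offset

checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
records = [1, 2, 3, 4, 5, 6]
target = []

try:
    run_load(records, target, checkpoint_path, fail_at=4)
except RuntimeError:
    pass  # the first run stops after two batches

resumed_offset = run_load(records, target, checkpoint_path)
```

The second run starts at offset 4 and loads only the remaining two records. In this sketch the target write and the checkpoint write are separate steps, so a crash between them would replay one batch on restart.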

Another solution is to use idempotency in data pipelines. Idempotent operations ensure that if the same data is loaded multiple times (due to a failure and retry), it won’t cause duplication or inconsistencies in the target system. For example, using upserts (update or insert operations) rather than simple inserts can prevent duplicate records. An upsert checks for the existence of a record—if it exists, it updates it; if not, it inserts a new one. This prevents duplicates, ensuring that the final state remains consistent.
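The upsert behavior can be demonstrated with SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` clause, which requires SQLite 3.24 or later. The `customers` table and the `upsert_customers` helper are assumptions for the example; most warehouses offer an equivalent through `MERGE` or a similar statement.

```python
import sqlite3

def upsert_customers(conn, rows):
    """Insert each row, or update it in place if the id already exists."""
    conn.executemany(
        "INSERT INTO customers (id, name, city) VALUES (?, ?, ?) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name, city = excluded.city",
        rows,
    )
    conn.commit()

warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)"
)

increment = [(1, "Ada", "London"), (2, "Grace", "Arlington")]
upsert_customers(warehouse, increment)
upsert_customers(warehouse, increment)  # retry after a failure: same result

final_rows = warehouse.execute(
    "SELECT id, name, city FROM customers ORDER BY id"
).fetchall()
```

Loading the same increment twice leaves exactly two rows in the table. With a plain `INSERT`, the retry would have failed on the primary key or, without one, produced duplicates.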

How can Airbyte Help you Implement Incremental Loading?

Airbyte is a robust, AI-powered data integration platform that simplifies the process of syncing data from various sources into data warehouses, lakes, and databases. With a catalog of 400+ pre-built connectors, Airbyte makes it easy to build and manage data pipelines tailored to your specific needs.

One of Airbyte's key features is incremental data loading, which pulls only the data that has changed since the previous sync instead of all the data available in the data source. This approach significantly reduces the amount of data transferred during each sync, optimizing time and resource usage while ensuring up-to-date data across systems.

Modes of Incremental Sync

Airbyte supports two main modes of incremental sync:

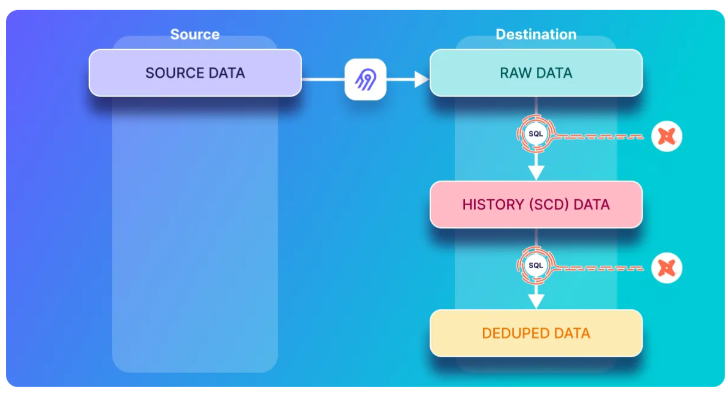

Incremental Append: In this mode, new and updated records are appended to the destination table during each sync run. However, if a record is updated multiple times between sync runs, you may end up with multiple copies of that record in the destination. This is because the sync will not overwrite previous versions of the record; it simply appends the latest data.

Incremental Append + Deduped: This mode also syncs only new or modified records, but it adds a layer of deduplication. When a record is updated, the system retains only the latest version of that record based on a primary key, ensuring that the final dataset in the destination contains unique entries. Therefore, while historical changes are tracked, only the most recent data is kept in the final table, preventing duplication and maintaining data integrity.
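The difference between the two modes can be illustrated with plain Python lists. This is only a sketch of the behavior described above, not Airbyte's implementation; the `id` primary key, the `updated_at` cursor field, and both helper functions are hypothetical.

```python
def append(destination, records):
    """Append mode: every synced version of a record is kept."""
    return destination + records

def append_deduped(destination, records, primary_key="id", cursor="updated_at"):
    """Deduped mode: keep only the latest version of each primary key."""
    latest = {}
    for record in destination + records:
        key = record[primary_key]
        if key not in latest or record[cursor] >= latest[key][cursor]:
            latest[key] = record
    return list(latest.values())

sync_1 = [{"id": 1, "status": "new", "updated_at": 1}]
sync_2 = [
    {"id": 1, "status": "paid", "updated_at": 2},  # same record, updated
    {"id": 2, "status": "new", "updated_at": 2},
]

appended = append(append([], sync_1), sync_2)
deduped = append_deduped(append_deduped([], sync_1), sync_2)
```

After two syncs, the appended result holds three rows, including both versions of record 1, while the deduped result holds two rows with record 1 in its latest state.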

While standard incremental replication in Airbyte is efficient for syncing new or updated records, it has some limitations. This method works by periodically querying the source system for recent changes and transmitting them to the destination. However, it cannot detect deletions because queries typically return only existing records; thus, any records deleted in the source system will still exist in the destination.

To address these limitations, Airbyte also supports CDC replication, which can be used in conjunction with incremental replication.

Unlike standard incremental syncs, CDC tracks changes by reading the transaction log, which records all data modifications, including deletions and intermediate changes. This ensures that the destination remains consistent with the source, reflecting all updates accurately.

Beyond these sync modes, some other notable features of Airbyte include:

Automatic Schema Detection: With Airbyte's schema propagation feature, you can specify how changes to the source schema should be handled. Based on your configuration, it can automatically sync any detected schema changes to your target system. This approach ensures that your data synchronization remains accurate and efficient.

Detects Dropped Records: Airbyte's automatic detection of dropped records enhances sync reliability by monitoring data across the source, platform, and destination. It compares record counts at each stage to identify data losses caused by issues like serialization errors. If discrepancies are found, you’ll be promptly notified to take corrective action.

Record Change History: This feature prevents sync failures caused by problematic rows. If an oversized or invalid record disrupts the sync, Airbyte modifies the record in transit, logs the changes, and ensures the sync completes successfully. This enhances resilience and guarantees smooth data synchronization.

Uninterrupted Data Syncs: Airbyte’s checkpointing feature ensures that any sync failure can resume from the last successful state. This improves the reliability of data synchronization, avoiding data loss or redundant processing.

Detailed Sync Logs: Airbyte generates detailed logs for each connector, tracking every step of the data movement process. These logs offer insights into potential issues, making troubleshooting easier and ensuring transparency throughout the sync.

Wrapping Up

In this article, you have explored the importance of incremental load in ETL. This method significantly reduces processing time and resource consumption, making it ideal for large datasets where full loads can be slow and resource-intensive. You have also examined various implementation approaches, such as using timestamps, unique identifiers, and log-based CDC techniques. By adopting these strategies, you can optimize the ETL processes, leading to faster insights and more effective decision-making.
