Data Plane Flexibility: On-Premises, Cloud, or Hybrid per Workload


Data plane flexibility enables enterprises to process data on-premises, in the cloud, or in hybrid environments without rewriting code, supporting compliance, low latency, cost control, and vendor independence. Key best practices include unified control planes, outbound-only security, compliance boundary mapping, connector parity, infrastructure automation, and centralized monitoring. Airbyte Enterprise Flex exemplifies this approach by offering a managed control plane with local processing engines, supporting seamless workload migration across environments while maintaining security and compliance.

Your BI dashboard lives in AWS, yet patient records stay locked in a hospital rack. Regulations dictate where data may rest, edge devices demand millisecond response, and finance teams watch cloud bills spike overnight. No single location works for every workload.

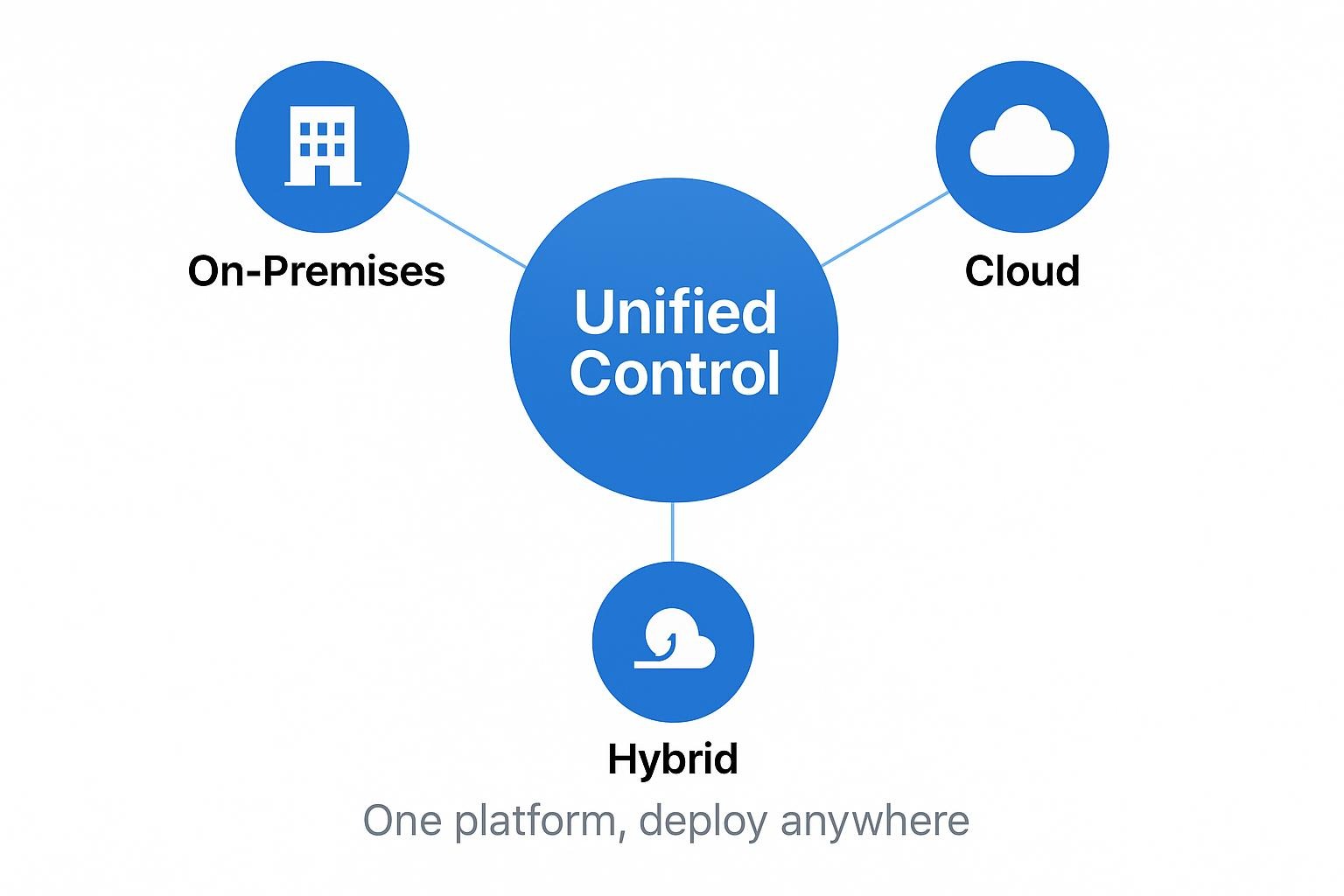

Data plane flexibility solves this problem. When the layer that processes data can run on-premises, in the cloud, or both while a single control plane coordinates everything, you can move each job to wherever it runs best without rewriting code or re-auditing security.

The sections that follow provide a practical framework for choosing the right environment for each workload and show how Airbyte Enterprise Flex makes those moves routine.

What Does Data Plane Flexibility Mean?

The data plane processes and transforms your actual data records, while the control plane manages orchestration, scheduling, and monitoring.

Your data plane runs inside your compute environment and carries the actual data traffic: it applies routing rules, executes transformations, and writes to destinations. Performance and data locality matter here because every added millisecond of processing shows up as user-facing latency. The control plane, by contrast, stores metadata, schedules jobs, and exposes APIs for management.
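To make the split concrete, here is a minimal Python sketch. The `JobSpec`, `ControlPlane`, and `DataPlane` classes are hypothetical illustrations, not Airbyte APIs. The control plane only holds job metadata and run history, while the data plane reads, transforms, and writes records locally and hands back nothing but a status summary.

```python
from dataclasses import dataclass, field


@dataclass
class JobSpec:
    # Metadata only: the control plane never sees record contents.
    job_id: str
    source: str
    destination: str


@dataclass
class ControlPlane:
    # Hypothetical control plane: stores job metadata and run history.
    jobs: list = field(default_factory=list)
    history: list = field(default_factory=list)

    def schedule(self, spec: JobSpec) -> None:
        self.jobs.append(spec)

    def record_status(self, job_id: str, status: dict) -> None:
        self.history.append((job_id, status))


class DataPlane:
    # Hypothetical data plane: runs next to the data and touches every record.
    def __init__(self, sources: dict, destinations: dict):
        self.sources = sources
        self.destinations = destinations

    def run(self, spec: JobSpec) -> dict:
        written = 0
        for record in self.sources[spec.source]:
            # Example transformation: normalize field names to lowercase.
            row = {k.lower(): v for k, v in record.items()}
            self.destinations[spec.destination].append(row)
            written += 1
        # Only counts leave the data plane, never the rows themselves.
        return {"state": "succeeded", "records_written": written}


control = ControlPlane()
warehouse = []
engine = DataPlane(
    sources={"crm": [{"ID": 1, "Name": "Ada"}, {"ID": 2, "Name": "Lin"}]},
    destinations={"warehouse": warehouse},
)

control.schedule(JobSpec("job-1", "crm", "warehouse"))
for spec in control.jobs:
    control.record_status(spec.job_id, engine.run(spec))

print(control.history)  # [('job-1', {'state': 'succeeded', 'records_written': 2})]
```

The design choice worth noting is the return value of `run`: because it carries only counts and a state, the control plane can schedule and monitor the job without ever holding a copy of the data.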

Data plane flexibility means you can deploy processing anywhere (on-premises, in private VPCs, or across multiple clouds) without rewriting pipelines or sacrificing compliance capabilities. A unified control plane maintains consistent configuration, monitoring, and lineage tracking while individual processing engines run close to your data for sovereignty or latency requirements.

When you can change deployment targets without code changes, you gain vendor negotiating power, control infrastructure costs, and meet regional regulations without losing functionality. True flexibility requires unified connector architectures, aligned security policies, and adaptable performance characteristics across every deployment environment.
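A minimal sketch of "change the target, not the code", assuming a hypothetical `Pipeline` record where the deployment target is just one configuration field. The target names and connector labels are illustrative placeholders, not real endpoints.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pipeline:
    # Hypothetical pipeline definition: connectors and schedule are the logic,
    # `target` only says where the processing engine runs.
    name: str
    source_connector: str
    destination_connector: str
    schedule: str
    target: str


# Hypothetical registry of processing engines the control plane knows about.
TARGETS = {"onprem-dc1", "aws-eu-central-1", "gcp-us-east1"}


def retarget(pipeline: Pipeline, new_target: str) -> Pipeline:
    """Return the same pipeline pointed at a different engine; nothing else changes."""
    if new_target not in TARGETS:
        raise ValueError(f"unknown deployment target: {new_target}")
    return replace(pipeline, target=new_target)


claims = Pipeline("claims-sync", "postgres", "snowflake", "0 * * * *", "aws-eu-central-1")
moved = retarget(claims, "onprem-dc1")

print(moved.target)  # onprem-dc1
```

Because the dataclass is frozen, a move produces a new definition that differs from the old one in exactly one field, which is also an easy property to check in a review or audit.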

Why Is Data Plane Flexibility Critical for Modern Enterprises?

Data plane flexibility addresses four critical enterprise challenges:

- Regulatory compliance: GDPR regulates international transfers of personal data and requires safeguards for data leaving the EU, HIPAA mandates robust protections for protected health information regardless of data storage location, and DORA is tightening financial-sector oversight across Europe. When your architecture can run processing on-premises, in the cloud, or both, you meet those residency rules without rewiring every pipeline.

- Latency requirements: Real-time fraud scoring or network troubleshooting loses value if round-trips cross an ocean. Keeping processing close to its data source (on-prem for branch trading systems, regional clouds for global BI) delivers predictable performance.

- Cost optimization: Cloud's pay-as-you-go model works well for bursty analytics, while steady, high-volume workloads may cost less on your own hardware over time.

- Vendor independence: If a provider hikes egress fees or drops regional support, a portable processing layer lets you redeploy workloads where economics and compliance still work. Banks forced to repatriate data after sovereignty shifts, or manufacturers moving edge analytics on-site to cut downtime, learn this the hard way.

Each of these constraints pulls your workloads in different directions, and only flexible deployment lets you satisfy all of them simultaneously.
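The cost trade-off above can be sketched as simple break-even arithmetic. This is a minimal Python illustration with hypothetical prices (a $3/hour cloud instance versus $36,000 of hardware amortized over 36 months plus $500/month of operating cost); it ignores egress fees, discounts, and refresh cycles, so treat it as a starting point rather than a pricing model.

```python
def monthly_onprem_cost(capex, amortization_months, monthly_opex):
    # Spread the hardware purchase evenly, then add power, space, and staff time.
    return capex / amortization_months + monthly_opex


def monthly_cloud_cost(hourly_rate, hours_used):
    # Pay-as-you-go: you pay only for the hours the workload actually runs.
    return hourly_rate * hours_used


def cheaper_option(hours_used, hourly_rate, capex, amortization_months, monthly_opex):
    cloud = monthly_cloud_cost(hourly_rate, hours_used)
    onprem = monthly_onprem_cost(capex, amortization_months, monthly_opex)
    return ("cloud" if cloud < onprem else "on-prem"), cloud, onprem


def break_even_hours(hourly_rate, capex, amortization_months, monthly_opex):
    # Monthly usage at which both options cost the same.
    return monthly_onprem_cost(capex, amortization_months, monthly_opex) / hourly_rate


# Hypothetical prices for illustration only.
PRICES = dict(hourly_rate=3.0, capex=36_000, amortization_months=36, monthly_opex=500)

print(cheaper_option(100, **PRICES))  # bursty: ('cloud', 300.0, 1500.0)
print(cheaper_option(720, **PRICES))  # steady 24x7: ('on-prem', 2160.0, 1500.0)
print(break_even_hours(**PRICES))     # 500.0
```

With these assumed numbers, a workload that runs fewer than about 500 hours a month favors the cloud, while one that runs around the clock favors owned hardware.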

Table: Deployment Environment Comparison

How Do You Choose the Right Environment per Workload?

Your infrastructure has finite resources, and every workload wants them. The decision comes down to one question: which deployment gives you the control, performance, and cost profile this specific data requires?

On-Premises: When Control and Compliance Come First

When regulators or internal policies require strict control over data location, on-premises deployment can simplify certain compliance tasks. Regulations like HIPAA, GDPR, and PCI DSS also permit off-premises processing as long as proper safeguards are in place. Either way, passing a compliance audit takes both control over data location and rigorous security measures.

The cost is ownership of every capital expense and upgrade cycle. Hardware refreshes and floor-space constraints slow scaling, and your team carries the pager for all patching and power issues.

Reserve on-prem for workloads that meet at least two criteria:

- Process regulated personal or financial data

- Demand sub-millisecond latency

- Require custom security tooling that public clouds can't support

Healthcare patient records and imaging data are heavily regulated and sometimes need custom security tooling, but they rarely demand sub-millisecond latency. Trading platforms' order books meet the first two criteria.
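The "at least two of three" rule is easy to encode so it can be applied consistently across a workload inventory. A minimal sketch, assuming a hypothetical `Workload` record with one boolean per criterion; the imaging example assumes a case where custom security tooling is required.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    regulated_data: bool           # processes regulated personal or financial data
    sub_ms_latency: bool           # demands sub-millisecond latency
    custom_security_tooling: bool  # needs tooling public clouds can't support


def reserve_on_prem(w: Workload) -> bool:
    """Apply the 'meets at least two of the three criteria' rule."""
    hits = sum([w.regulated_data, w.sub_ms_latency, w.custom_security_tooling])
    return hits >= 2


order_book = Workload("order-book", True, True, False)
imaging = Workload("imaging", True, False, True)
web_logs = Workload("web-logs", False, False, False)

for w in (order_book, imaging, web_logs):
    print(w.name, reserve_on_prem(w))
# order-book True
# imaging True
# web-logs False
```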

Cloud: When Scalability and Speed Matter Most

For variable or bursty workloads, cloud's pay-as-you-go elasticity wins. Spin up GPUs for a week-long ML training run, shut them down, and skip the purchase order entirely. Public providers hold most major compliance certifications, but you still own encryption, access policies, and placement decisions. Watch costs closely: long-running queries or high egress volumes can erase savings overnight.

Use cloud when:

- The data already lives there (SaaS logs)

- Latency tolerance is measured in seconds

- The workload benefits from horizontal scale (ad-hoc analytics, large-scale simulation, global BI dashboards)

A single Terraform configuration can launch the environment for the job, and terraform destroy can tear it down hours later.

Hybrid: When You Need the Best of Both

Hybrid keeps sensitive data at home while letting elastic demand spill into the cloud. Run a local processing layer for regulated tables and burst compute-heavy steps to managed services, all orchestrated under one control layer.

This model works when sovereignty clashes with growth. Use hybrid deployment for:

- In-country data processing with cloud-based aggregation and analytics

- Local call-detail records with cloud-scaled network analytics during peak events

- On-premises patient data with cloud-based research and forecasting

A regional bank can comply with in-country residency rules by processing customer PII locally, then send de-identified aggregates to a cloud warehouse for quarterly forecasting. Telecom operators follow the same pattern: call-detail records stay in secure facilities, but network analytics scale in the cloud during sporting events.
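The bank pattern can be sketched as a function that runs inside the local environment and emits only aggregates. This is a minimal Python illustration, not a complete de-identification scheme: the field names are hypothetical, and the small-group suppression threshold (`MIN_GROUP_SIZE`) is an assumed safeguard you would set with your privacy team.

```python
from collections import defaultdict

# Hypothetical threshold: suppress groups too small to hide individuals.
MIN_GROUP_SIZE = 3


def aggregate_for_cloud(transactions, min_group_size=MIN_GROUP_SIZE):
    """Runs on-premises; returns only de-identified per-region totals."""
    customers = defaultdict(set)
    amounts = defaultdict(float)
    for tx in transactions:
        # Customer IDs and names are used locally but never emitted.
        customers[tx["region"]].add(tx["customer_id"])
        amounts[tx["region"]] += tx["amount"]
    return [
        {"region": r, "customers": len(customers[r]), "total_amount": round(amounts[r], 2)}
        for r in sorted(customers)
        if len(customers[r]) >= min_group_size
    ]


local_transactions = [
    {"customer_id": "C1", "name": "Customer 1", "region": "north", "amount": 120.0},
    {"customer_id": "C2", "name": "Customer 2", "region": "north", "amount": 80.5},
    {"customer_id": "C3", "name": "Customer 3", "region": "north", "amount": 42.0},
    {"customer_id": "C4", "name": "Customer 4", "region": "south", "amount": 300.0},
]

print(aggregate_for_cloud(local_transactions))
# [{'region': 'north', 'customers': 3, 'total_amount': 242.5}]
```

Only the returned list would be shipped to the cloud warehouse; the "south" region is dropped because a single customer would be identifiable from its total.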

Table: Workload Decision Framework

Use these patterns as guardrails, not hard rules. Map each workload's compliance ceiling, performance floor, and budget constraints, then pick the deployment that clears every hurdle.
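The guardrails above can be sketched as a small decision function. It is a simplification under stated assumptions: the profile fields are hypothetical, the 1 ms cutoff stands in for "sub-millisecond latency", and `must_stay_local` means your policy requires processing inside your own perimeter. Cases the rules cannot settle are left to a cost comparison.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    name: str
    must_stay_local: bool    # compliance ceiling: policy keeps processing in your perimeter
    max_latency_ms: float    # performance floor
    bursty: bool             # cost profile: bursty vs. steady
    needs_cloud_scale: bool  # e.g. ad-hoc analytics, global BI dashboards


def choose_environment(w: WorkloadProfile) -> str:
    # Hypothetical thresholds; tune them to your own SLAs and budgets.
    local_required = w.must_stay_local or w.max_latency_ms < 1
    cloud_wanted = w.bursty or w.needs_cloud_scale
    if local_required and cloud_wanted:
        return "hybrid"
    if local_required:
        return "on-premises"
    if cloud_wanted:
        return "cloud"
    # Steady, unregulated, latency-tolerant: compare long-run costs before deciding.
    return "either (decide on cost)"


workloads = [
    WorkloadProfile("pii-forecasting", True, 1000, True, True),
    WorkloadProfile("order-book", True, 0.5, False, False),
    WorkloadProfile("adhoc-bi", False, 5000, True, True),
    WorkloadProfile("nightly-batch", False, 60000, False, False),
]
for w in workloads:
    print(w.name, "->", choose_environment(w))
```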

What Are the Best Practices for Managing Flexible Data Planes?

You gain true processing flexibility only when control, security, and automation move in lockstep across every environment. One of the most common mistakes teams make is running separate dashboards for cloud and on-premises workloads.

Unify Control Across All Environments

A unified control plane (one console orchestrating on-premises, cloud, and edge workloads) prevents the "two dashboards, two policies" problem that fragments operations. By centralizing scheduling, RBAC, and audit logging, you cut duplicate effort and reduce misconfigurations.

Implement Outbound-Only Security

The safest pattern is outbound-only networking: each local processing engine dials out to the control plane, never exposing inbound ports. This shrinks the attack surface and lets you keep firewalls tight even in air-gapped or highly regulated zones. Pair that with strict separation of duties (operators can change orchestration logic without ever touching raw data) and you satisfy zero-trust principles without slowing teams down.
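A minimal sketch of the outbound-only loop, assuming a hypothetical `ControlPlaneClient` interface. In a real deployment each method call would be an outbound HTTPS request from the engine; here an in-memory stand-in replaces the remote service so the example runs offline. Nothing in the engine listens for connections, and only a status summary goes back out.

```python
import queue
from typing import Optional, Protocol


class ControlPlaneClient(Protocol):
    # Hypothetical interface; in practice each call is an outbound request.
    def fetch_next_job(self) -> Optional[dict]: ...
    def report(self, job_id: str, status: dict) -> None: ...


class InMemoryControlPlane:
    """Stand-in for the remote control plane so the sketch runs offline."""

    def __init__(self, jobs):
        self.pending = queue.Queue()
        for job in jobs:
            self.pending.put(job)
        self.reports = []

    def fetch_next_job(self):
        try:
            return self.pending.get_nowait()
        except queue.Empty:
            return None

    def report(self, job_id, status):
        self.reports.append((job_id, status))


def run_engine(client: ControlPlaneClient, local_data: dict, max_polls: int = 10) -> None:
    """The engine only dials out: it asks for work and reports results.
    Nothing connects to it, so no inbound port is opened."""
    for _ in range(max_polls):
        job = client.fetch_next_job()
        if job is None:
            break  # a real engine would sleep and poll again
        rows = local_data.get(job["stream"], [])
        # Rows are processed locally; only a summary leaves the perimeter.
        client.report(job["id"], {"state": "succeeded", "rows": len(rows)})


cp = InMemoryControlPlane([{"id": "j1", "stream": "patients"}, {"id": "j2", "stream": "visits"}])
run_engine(cp, {"patients": [{"mrn": "123"}], "visits": [{"mrn": "123"}, {"mrn": "456"}]})
print(cp.reports)
# [('j1', {'state': 'succeeded', 'rows': 1}), ('j2', {'state': 'succeeded', 'rows': 2})]
```

Polling trades a little scheduling delay for a much smaller attack surface: the firewall only needs an egress rule to the control plane's address.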

Map Compliance Boundaries First

Regulations rarely care about your architecture diagram; they care where data sits. Map compliance boundaries first, then deploy regional processing engines inside those borders. This approach anchors sensitive workloads within jurisdictional lines while still letting global jobs run wherever capacity is cheapest.
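Residency-aware placement can be sketched as a lookup that fails closed. The jurisdiction codes, engine names, and the `residency` label below are hypothetical; the point is that residency-bound data is only ever routed to an engine inside its boundary, while unrestricted jobs go to the cheapest pool.

```python
# Hypothetical mapping of jurisdictions to engines deployed inside them.
REGIONAL_ENGINES = {
    "EU": "engine-frankfurt",
    "US": "engine-virginia",
    "IN": "engine-mumbai-onprem",
}
# Hypothetical pool for unrestricted jobs, ordered cheapest first.
GLOBAL_POOL = ["engine-spot-us", "engine-virginia"]


def place(dataset: dict) -> str:
    """Residency-bound data stays in its jurisdiction; everything else
    goes to the cheapest available capacity."""
    residency = dataset.get("residency")
    if residency:
        try:
            return REGIONAL_ENGINES[residency]
        except KeyError:
            # Fail closed: never fall back to an engine outside the boundary.
            raise LookupError(f"no engine deployed inside {residency}") from None
    return GLOBAL_POOL[0]


print(place({"name": "eu_customers", "residency": "EU"}))  # engine-frankfurt
print(place({"name": "web_clickstream"}))                  # engine-spot-us
```

Failing closed is the important design choice: a missing regional engine should block the job, not silently move regulated data across a border.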

Guarantee Connector Parity

Functionality must be identical everywhere. Connector parity ensures a pipeline built in the cloud runs unchanged on-prem. This avoids the rewrite tax that sabotages many hybrid rollouts. Maintain one codebase, one versioning scheme, and continuous compatibility tests to enforce this standard.
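A continuous compatibility test can start as a parity check over the connector versions each environment reports. This minimal sketch assumes a hypothetical inventory format (environment, then connector name, then version string); the connector names and version numbers are illustrative only.

```python
# Hypothetical inventory reported by each environment: connector -> version.
ENVIRONMENTS = {
    "cloud": {"source-postgres": "1.4.0", "destination-snowflake": "2.1.0"},
    "onprem-dc1": {"source-postgres": "1.4.0", "destination-snowflake": "2.1.0"},
    "eu-vpc": {"source-postgres": "1.3.9", "destination-snowflake": "2.1.0"},
}


def parity_gaps(envs):
    """Return connectors whose version differs, or is missing, across environments."""
    all_connectors = set().union(*(inv.keys() for inv in envs.values()))
    gaps = {}
    for connector in sorted(all_connectors):
        versions = {env: inv.get(connector, "missing") for env, inv in envs.items()}
        if len(set(versions.values())) > 1:
            gaps[connector] = versions
    return gaps


print(parity_gaps(ENVIRONMENTS))
# {'source-postgres': {'cloud': '1.4.0', 'onprem-dc1': '1.4.0', 'eu-vpc': '1.3.9'}}
```

In a CI job, a non-empty result would fail the build before a pipeline is promoted to an environment that cannot run it unchanged.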

Automate Infrastructure Provisioning

Manual provisioning doesn't scale. Package every processing component (runtimes, connectors, and references to secrets held in a secrets manager, never the secret values themselves) into Terraform modules or Kubernetes manifests. Once hardware or cloud capacity is available, infrastructure-as-code turns weeks of manual server setup into a terraform apply that runs in minutes. This enables rapid spin-up for temporary projects or burst capacity.

Maintain Centralized Visibility

Visibility glues the system together. Continuous metrics, trace logs, and alerting let you catch latency spikes or policy drifts before they breach SLAs. Pair this with immutable audit trails so compliance teams can reconstruct every sync.
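One common way to make an audit trail tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. This is a minimal Python sketch with hypothetical event fields; it makes edits detectable rather than impossible, so production systems typically pair it with write-once (WORM) storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log, event):
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    log.append({"prev": prev_hash, "event": event, "hash": hashlib.sha256(body.encode()).hexdigest()})


def verify(log):
    """Recompute every hash; any edited or deleted entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = json.dumps({"prev": prev_hash, "event": entry["event"]}, sort_keys=True)
        if entry["prev"] != prev_hash or entry["hash"] != hashlib.sha256(body.encode()).hexdigest():
            return False
        prev_hash = entry["hash"]
    return True


audit = []
append_entry(audit, {"sync": "claims-sync", "engine": "onprem-dc1", "records": 1200, "state": "succeeded"})
append_entry(audit, {"sync": "claims-sync", "engine": "onprem-dc1", "records": 1185, "state": "succeeded"})
print(verify(audit))  # True

audit[0]["event"]["records"] = 5  # tampering with history
print(verify(audit))  # False
```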

A practical checklist for managing flexible processing architectures:

- Unify control under a single management layer to eliminate silos

- Enforce outbound-only traffic from processing engines for minimal exposure

- Align deployment locations with legal residency requirements

- Guarantee connector and feature parity across all environments

- Automate provisioning via Terraform, Helm, or similar IaC tools

- Monitor throughput, errors, and policy compliance centrally

- Apply identical encryption, IAM, and network policies everywhere

- Classify data by sensitivity; let those labels drive placement decisions

When you follow these practices, moving a workload is no longer a multi-month migration. It's a configuration toggle that keeps compliance intact and budgets in check.

How Does Airbyte Enterprise Flex Enable Data Plane Choice?

Airbyte Enterprise Flex provides a managed control plane in Airbyte Cloud while placing every processing engine in your controlled environment (inside your VPC, on-premises infrastructure, or both). The architecture delivers:

- Cloud orchestration with local processing: The control plane schedules jobs and tracks health without touching payloads, receiving only outbound status and logs from the processing layer

- Complete data sovereignty: Information and credentials never leave your perimeter, supporting GDPR, HIPAA, or DORA compliance without custom gateways

- No inbound firewall rules required: Processing engines connect outbound to the control plane, so you keep sovereign datasets local while still relying on cloud orchestration

- Consistent experience everywhere: Same open-source codebase, same UI, and same 600+ connectors whether you deploy on-premises, in private cloud, or across multiple regions

- True feature parity: Move workloads without rewriting them, with governance and monitoring unified through one dashboard

Real teams are already implementing this approach:

- A regional bank keeps PCI data on-premises while feeding cloud BI

- A hospital syncs PHI inside its network yet manages pipelines centrally

- A manufacturing firm mirrors plant-floor logs to a lake without external exposure

With Flex, you choose the right environment per workload while retaining full functionality, security, and support.

How Do You Build a Resilient Data Infrastructure?

The fastest path to a resilient infrastructure is placing each workload where it naturally fits: regulated data on-premises, bursty analytics in the cloud, and hybrid scenarios blending both. Airbyte Enterprise Flex provides 600+ connectors with unified quality across cloud, hybrid, and on-premises deployments, so you can shift workloads freely without rewriting pipelines or getting locked into a single vendor.

Talk to Sales to discuss your hybrid deployment and data sovereignty requirements.

Frequently Asked Questions

Can I move workloads between deployment environments without rewriting code?

Yes. Airbyte's unified architecture means the same connectors and configurations work across on-premises, cloud, and hybrid deployments. You change deployment targets through configuration, not code rewrites, because all environments use the same open-source foundation. This lets you respond to regulatory changes or cost shifts without engineering overhead.

How does hybrid deployment differ from running separate on-premises and cloud systems?

Hybrid deployment uses a single control plane to orchestrate both environments, maintaining unified monitoring, governance, and configuration. Separate systems require duplicate effort for policy management, connector maintenance, and troubleshooting. With hybrid deployment, you manage one platform that coordinates workloads across locations based on your sovereignty and performance requirements.

What compliance frameworks does flexible data plane deployment support?

Flexible deployment supports GDPR, HIPAA, DORA, PCI DSS, and other frameworks by letting you keep regulated data within required jurisdictions while processing it locally. The control plane receives only metadata and status updates, never touching sensitive payloads. Outbound-only networking from processing engines to the control plane minimizes the attack surface because no inbound ports need to be opened in regulated zones.

How do I determine which workloads belong on-premises versus cloud?

Start with three criteria: regulatory requirements (does data need to stay in specific locations?), latency demands (do milliseconds matter?), and cost profile (is this steady-state or bursty?). On-premises fits regulated, latency-sensitive, or steady workloads. Cloud fits elastic, geographically distributed, or experimental workloads. Hybrid fits scenarios where you need both, like processing sensitive data locally while scaling analytics in the cloud.
