Database Schema Migration: Propagation-Driven Sync

✨ AI Generated Summary

Database schema migration is essential for adapting database structures to evolving application needs while minimizing downtime and data loss risks. Key strategies include:

- Adopting zero-downtime migration patterns like expand-and-contract and leveraging AI-powered automation for code conversion, optimization, and error detection.

- Systematic planning involving thorough assessment, backup, testing, incremental deployment, and continuous validation.

- Utilizing modern tools such as Airbyte, Bytebase, Liquibase, and Atlas to automate and manage migrations efficiently.

- Following best practices like version control, rollback preparedness, automation, and clear communication to avoid common pitfalls.

Schema migration is a critical database management process, but many data professionals struggle with downtime, data loss risks, and complex transformations. Modern organizations can't afford traditional migration approaches that require extended maintenance windows or put business continuity at risk.

The key to successful schema migration lies in adopting modern techniques like AI-powered automation, zero-downtime strategies, and systematic validation processes. This comprehensive guide explores proven methodologies, cutting-edge tools, and strategic approaches that enable safe, efficient database evolution without disrupting business operations.

By implementing the practices outlined in this article, you'll transform schema migration from a high-risk maintenance task into a controlled, predictable process that supports continuous application development and business growth.

What Is Database Schema Migration?

Schema migration is the process of updating a database structure from its current state to a new desired state. This includes adding, altering, or removing tables, columns, or constraints to reflect new requirements in the database schema design. Schema migration is essential for maintaining database performance and adapting to the dynamic needs of your application.

Without a well-defined process, inconsistencies can arise between the application's data model and the schema, leading to errors and downtime. Dedicated schema migration tools let you manage changes systematically, preserving data integrity and minimizing disruptions.

Modern schema migration encompasses both structural changes and data transformations, requiring sophisticated validation mechanisms to ensure business continuity. The process involves careful orchestration of dependencies, rollback procedures, and performance optimization to support continuous application evolution while maintaining operational stability.

Why Do Organizations Need Database Schema Migration?

Schema migration is essential for several reasons that directly impact business operations and competitive advantage:

Adapting to New Features

When you introduce new functionality in an application, the database schema often has to change to support it. For example, if an e-commerce bookstore introduces digital downloads, it must update the schema to add fields for download links and file sizes.
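The bookstore change above can be sketched as a purely additive migration. This is a minimal illustration, assuming SQLite through Python's `sqlite3` module; the `books` table, its columns, and the download URL are hypothetical. Adding the new fields as nullable columns means existing print-only rows remain valid without any backfill.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT NOT NULL, price_cents INTEGER NOT NULL)"
)
conn.execute("INSERT INTO books (title, price_cents) VALUES ('Dune', 1299)")

# Migration: add the new fields as nullable columns so existing print-only rows stay valid.
conn.execute("ALTER TABLE books ADD COLUMN download_url TEXT")
conn.execute("ALTER TABLE books ADD COLUMN file_size_bytes INTEGER")

# New digital titles can now populate the extra fields (hypothetical URL).
conn.execute(
    "INSERT INTO books (title, price_cents, download_url, file_size_bytes) VALUES (?, ?, ?, ?)",
    ("Neuromancer", 999, "https://example.com/dl/neuromancer.epub", 2_400_000),
)

columns = [row[1] for row in conn.execute("PRAGMA table_info(books)")]
rows = conn.execute("SELECT title, download_url FROM books ORDER BY id").fetchall()
print(columns)  # ['id', 'title', 'price_cents', 'download_url', 'file_size_bytes']
print(rows)     # [('Dune', None), ('Neuromancer', 'https://example.com/dl/neuromancer.epub')]
```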

Modern applications require frequent schema adjustments to support feature releases, A/B testing, and customer feedback implementation. This constant evolution demands flexible migration processes that can accommodate rapid development cycles.

Performance Optimization

As data volume grows, some queries may slow dramatically. You can restore performance by modifying the schema: adding indexes, partitioning tables, or restructuring data relationships.
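A small sketch of the indexing case, assuming SQLite via Python's `sqlite3`; the `orders` table and the index name are hypothetical. It compares the query plan before and after the schema change. The exact plan wording varies between SQLite versions, so the comments describe it rather than quote it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)")
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(i % 1_000, i) for i in range(10_000)],
)

QUERY = "SELECT total_cents FROM orders WHERE customer_id = ?"

def plan(sql):
    """Return SQLite's query-plan description (the `detail` column) for `sql`."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)))

before = plan(QUERY)  # a full table scan of orders
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
after = plan(QUERY)   # a search using idx_orders_customer_id
print(before)
print(after)
```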

Cloud-native applications particularly benefit from schema optimizations that leverage platform-specific features like columnar storage or distributed indexing capabilities. These optimizations become critical as applications scale to handle larger user bases and data volumes.

Database Platform Transitions

Migrating from one database platform to another often requires schema modifications to ensure compatibility and efficiency on the new system. These transitions frequently involve moving from legacy on-premises systems to cloud-native platforms like PostgreSQL on AWS or Snowflake.

Such migrations require careful attention to data type conversions and platform-specific optimizations. Organizations often discover new performance opportunities when moving to modern platforms that support advanced features.

Ensuring Data Integrity

Schema changes can add constraints that preserve data integrity. A typical example is adding a foreign-key constraint so that every customer_id in the orders table matches a valid customer record.
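The foreign-key example can be sketched as follows, assuming SQLite via Python's `sqlite3` and hypothetical `customers`/`orders` tables. SQLite has no `ALTER TABLE ... ADD CONSTRAINT`, so the sketch uses a table rebuild (create the constrained table, copy, drop, rename); on PostgreSQL or MySQL an `ALTER TABLE ... ADD CONSTRAINT ... FOREIGN KEY` statement would normally be used instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL, total_cents INTEGER);
    INSERT INTO customers (id, name) VALUES (1, 'Ada');
    INSERT INTO orders (customer_id, total_cents) VALUES (1, 2500);
""")

# Rebuild orders with the constraint; the copy step fails if existing rows are orphans.
conn.executescript("""
    BEGIN;
    CREATE TABLE orders_new (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (id),
        total_cents INTEGER
    );
    INSERT INTO orders_new (id, customer_id, total_cents)
        SELECT id, customer_id, total_cents FROM orders;
    DROP TABLE orders;
    ALTER TABLE orders_new RENAME TO orders;
    COMMIT;
""")

# An order pointing at a nonexistent customer is now rejected.
try:
    conn.execute("INSERT INTO orders (customer_id, total_cents) VALUES (999, 100)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True
print(rejected)  # True
```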

Modern applications also require sophisticated validation rules, audit trails, and compliance controls that necessitate ongoing schema evolution. These requirements become more complex as organizations grow and face regulatory demands.

Improved Security

Fixing security issues or introducing new features may involve column-level encryption, data masking, or tighter access controls that require schema changes. Organizations increasingly need to implement privacy controls, data classification, and access auditing capabilities.

These security enhancements demand structural database modifications that protect sensitive information while maintaining application functionality. The growing emphasis on data privacy regulations makes security-focused schema changes increasingly important.

Bug Fixes

Design bugs can cause data to be stored or retrieved incorrectly. Corrective migrations can fix column data types, add missing fields, or restructure tables.
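Before fixing a column's data type, it helps to find the values that will not convert cleanly. The sketch below assumes SQLite via Python's `sqlite3` and a hypothetical `products.price` column that was mistakenly stored as text but should hold integer cents. It shows why a pre-flight check matters: SQLite's `CAST` does not fail on bad input; it keeps the longest integer prefix, or returns 0 when there is none.

```python
import re
import sqlite3

conn = sqlite3.connect(":memory:")
# Design bug (hypothetical): prices in cents were stored as free-form text.
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price TEXT)")
conn.executemany(
    "INSERT INTO products (price) VALUES (?)",
    [("1999",), ("450",), ("12.50",), ("N/A",), (None,)],
)

# A blind cast would not fail; it would silently corrupt values.
silent = conn.execute("SELECT CAST('N/A' AS INTEGER), CAST('12.50' AS INTEGER)").fetchone()
print(silent)  # (0, 12)

def unconvertible_prices(conn):
    """Return (id, value) pairs that are not NULL and not a plain non-negative integer."""
    bad = []
    for row_id, value in conn.execute("SELECT id, price FROM products ORDER BY id"):
        if value is not None and not re.fullmatch(r"[0-9]+", value):
            bad.append((row_id, value))
    return bad

problems = unconvertible_prices(conn)
print(problems)  # [(3, '12.50'), (4, 'N/A')]
```

Rows flagged this way can be corrected or quarantined before the type change runs, instead of being discovered after the data has already been altered.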

Early-stage applications particularly benefit from iterative schema refinements that address usability issues and performance bottlenecks discovered through real-world usage. These fixes often reveal design assumptions that don't hold up under production conditions.

How Should You Plan Database Schema Migration?

Effective schema migration requires systematic planning that balances speed, safety, and business continuity. Follow this comprehensive approach to ensure successful outcomes.

1. Assess the Current Schema

Examine tables, columns, and relationships to understand dependencies. Document existing constraints, indexes, and triggers that might be affected by proposed changes. Use automated schema analysis tools to identify complex relationships and potential impact areas that manual review might miss.

2. Define Migration Objectives

Decide which tables, columns, or relationships must change. A clear strategy prevents oversight and ensures alignment with business requirements. Establish success criteria, performance benchmarks, and rollback triggers that guide decision-making throughout the migration process.

3. Create a Data Backup

Back up the entire database with automated backup tooling, and keep migration scripts in a version control system like Git so schema changes can be tracked and reverted. Implement point-in-time recovery capabilities and test restoration procedures to confirm that backups are actually usable. Consider continuous backup strategies for high-volume production systems.
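A minimal sketch of "back up, then prove the restore works", assuming SQLite and Python's `sqlite3.Connection.backup` (available since Python 3.7). In-memory databases stand in for real files here; in practice the backup target would be a file or external storage, and the `customers` table is hypothetical.

```python
import sqlite3

prod = sqlite3.connect(":memory:")
prod.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
prod.executemany("INSERT INTO customers (name) VALUES (?)", [("Ada",), ("Grace",)])
prod.commit()

# Take a consistent copy before migrating.
backup = sqlite3.connect(":memory:")  # in practice a file such as "pre_migration.db"
prod.backup(backup)

# Test the restore path too: restore into a fresh database and compare the contents.
restored = sqlite3.connect(":memory:")
backup.backup(restored)
original_rows = prod.execute("SELECT * FROM customers ORDER BY id").fetchall()
restored_rows = restored.execute("SELECT * FROM customers ORDER BY id").fetchall()
print(original_rows == restored_rows)  # True
```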

4. Test Thoroughly

Run the migration in a staging environment that mirrors production complexity. Identify and fix performance or compatibility issues and ensure data integrity through comprehensive validation. Execute load testing, edge case validation, and integration testing to verify system behavior under realistic conditions.

5. Implement the Changes

Apply modifications incrementally in production, phasing out deprecated structures gradually rather than all at once. Use feature flags and canary deployments to limit risk exposure, and monitor system performance and user experience throughout the rollout.
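One common way to apply a data change incrementally is a batched backfill: update a bounded range of rows, commit, and move on, so no single transaction holds locks for long. The sketch assumes SQLite via Python's `sqlite3`; the `users.email_lower` column and the batch size of 1,000 are hypothetical choices.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, email_lower TEXT)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)", [(f"User{i}@Example.com",) for i in range(2_500)]
)
conn.commit()

def backfill_in_batches(conn, batch_size=1_000):
    """Populate email_lower in keyset-paginated batches, committing after each one.
    Returns the number of batches processed."""
    batches, last_id = 0, 0
    while True:
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM users WHERE id > ? ORDER BY id LIMIT ?", (last_id, batch_size))]
        if not ids:
            return batches
        conn.execute(
            "UPDATE users SET email_lower = lower(email) "
            "WHERE id BETWEEN ? AND ? AND email_lower IS NULL",
            (ids[0], ids[-1]),
        )
        conn.commit()  # short transactions keep locks brief between batches
        last_id = ids[-1]
        batches += 1

batches = backfill_in_batches(conn)
remaining = conn.execute("SELECT COUNT(*) FROM users WHERE email_lower IS NULL").fetchone()[0]
print(batches, remaining)  # 3 0
```

Paginating by primary key (rather than with `OFFSET`) keeps each batch query cheap, and the `IS NULL` guard makes the job safe to re-run if it is interrupted.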

6. Validate and Monitor

Perform data-type, code, consistency, and format checks. Continue monitoring after deployment to ensure sustained performance and data quality. Implement automated alerts for schema drift detection and performance degradation that might indicate migration-related issues.
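Several of these post-migration checks can be automated. The function below is a sketch for SQLite (via Python's `sqlite3`) that combines the built-in `integrity_check` and `foreign_key_check` pragmas with a comparison of declared column types; the table names and expected types in the usage are hypothetical.

```python
import sqlite3

def validate_after_migration(conn, table, expected_columns):
    """Return a list of problems found after a migration; an empty list means all checks passed.
    `table` is assumed to be a trusted identifier (PRAGMA arguments cannot be bound)."""
    problems = []
    if conn.execute("PRAGMA integrity_check").fetchone()[0] != "ok":
        problems.append("integrity_check failed")
    for violation in conn.execute("PRAGMA foreign_key_check").fetchall():
        problems.append(f"foreign key violation: {tuple(violation)}")
    actual = {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}
    for name, declared_type in expected_columns.items():
        if actual.get(name) != declared_type:
            problems.append(f"{table}.{name}: expected {declared_type}, found {actual.get(name)}")
    return problems

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers (id),
        total_cents INTEGER
    );
    -- Foreign keys are not enforced by default in SQLite, so this orphan slips in.
    INSERT INTO orders (customer_id, total_cents) VALUES (7, 100);
""")
problems = validate_after_migration(conn, "orders", {"customer_id": "INTEGER", "total_cents": "INTEGER"})
print(problems)  # one foreign key violation for the orphaned order
```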

How Can You Implement Zero-Downtime Migration Strategies?

Zero-downtime migration strategies are essential for maintaining business continuity while evolving database structures. These approaches enable schema changes without disrupting user access or application functionality.

Expand and Contract Pattern

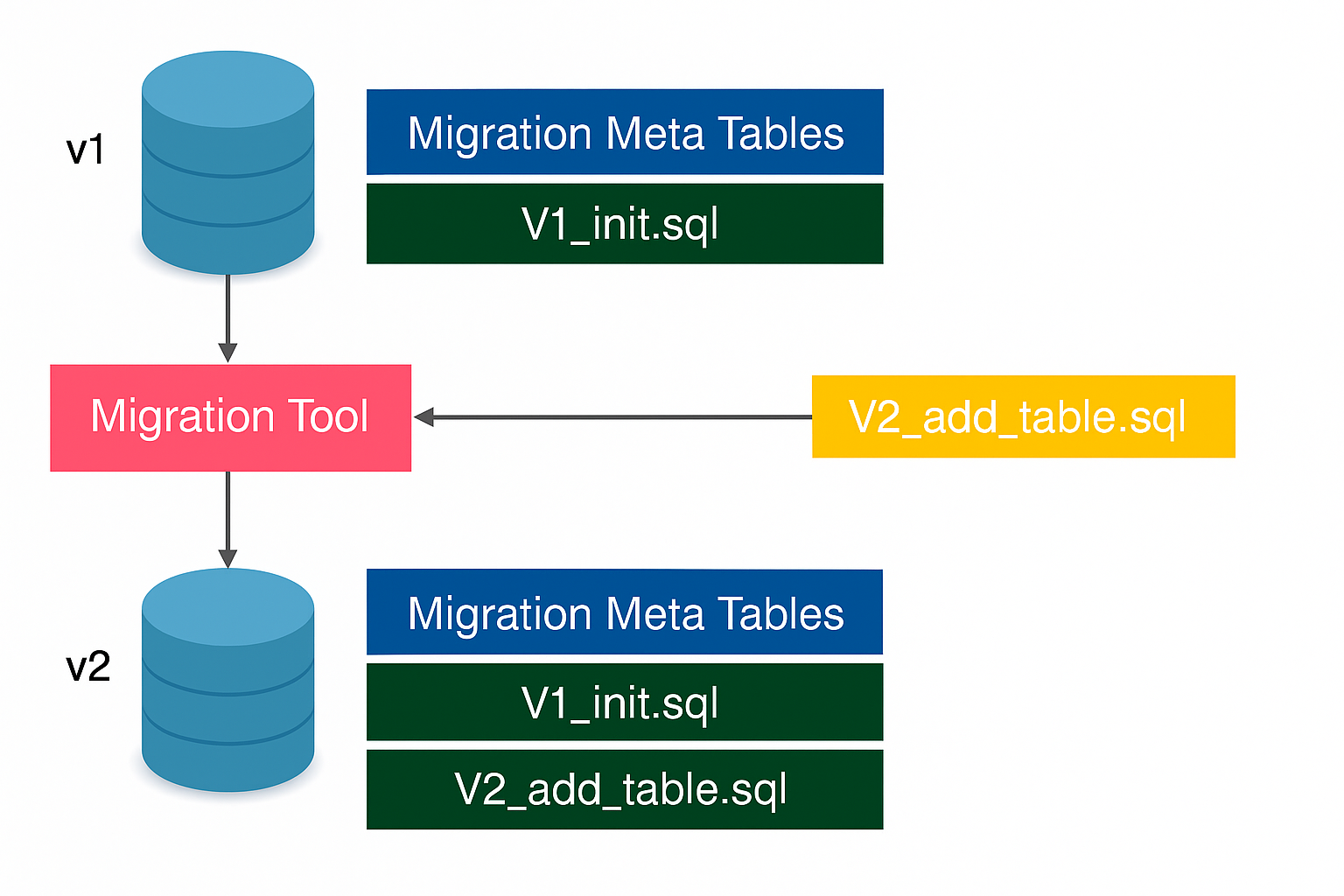

The expand and contract pattern provides a systematic approach to breaking changes by introducing new schema elements alongside existing ones before gradually transitioning applications.

The expand phase involves adding new columns, tables, or indexes in parallel with existing structures. This creates redundancy that enables safe transitions without immediate disruption.

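During the dual-write phase, applications write data to both the old and new schema versions while still reading from the original structure. Rows written before dual writes began are backfilled into the new structure, and once it is verified complete, reads switch over to it. This keeps both schema versions consistent during the transition period.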

The contract phase removes deprecated schema elements after confirming successful application migration to new structures. This final step eliminates technical debt while maintaining system stability.
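The full expand, dual-write, backfill, switch-reads, and contract sequence can be walked through on a small example. This is a sketch, assuming SQLite via Python's `sqlite3`; renaming a hypothetical `users.fullname` column to `display_name` stands in for any breaking change. In a real deployment each phase would ship as a separate release, with application code changing between them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, fullname TEXT NOT NULL);
    INSERT INTO users (fullname) VALUES ('Ada Lovelace'), ('Grace Hopper');
""")

# Expand: add the new column beside the old one; it is nullable, so old code keeps working.
conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")

# Dual write: while both versions of the application run, new writes fill both columns.
def create_user(conn, name):
    conn.execute("INSERT INTO users (fullname, display_name) VALUES (?, ?)", (name, name))

create_user(conn, "Alan Turing")

# Backfill rows that were written before dual writes began.
conn.execute("UPDATE users SET display_name = fullname WHERE display_name IS NULL")
conn.commit()

# Switch reads only after verifying the new column is complete.
missing = conn.execute("SELECT COUNT(*) FROM users WHERE display_name IS NULL").fetchone()[0]
if missing:
    raise RuntimeError(f"{missing} rows still lack display_name; do not contract yet")
names = [row[0] for row in conn.execute("SELECT display_name FROM users ORDER BY id")]

# Contract: drop the old column via a table rebuild, which works on any SQLite version
# (ALTER TABLE ... DROP COLUMN requires SQLite 3.35 or later).
conn.executescript("""
    BEGIN;
    CREATE TABLE users_new (id INTEGER PRIMARY KEY, display_name TEXT NOT NULL);
    INSERT INTO users_new (id, display_name) SELECT id, display_name FROM users;
    DROP TABLE users;
    ALTER TABLE users_new RENAME TO users;
    COMMIT;
""")
print(names)  # ['Ada Lovelace', 'Grace Hopper', 'Alan Turing']
print([row[1] for row in conn.execute("PRAGMA table_info(users)")])  # ['id', 'display_name']
```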

Tools Supporting Zero-Downtime Migrations

pgroll enables zero-downtime schema changes for PostgreSQL via virtual schemas that abstract application code from underlying structural changes. This tool handles complex migrations while maintaining application compatibility.

Amazon RDS Blue/Green Deployments create a separate staging (green) environment, kept in sync with production through replication, where schema changes can be tested before traffic is switched over. This approach reduces risk by validating changes in a production-like environment.

GitHub gh-ost facilitates online schema changes for MySQL using shadow tables and binlog replication. This tool ensures data consistency while enabling continuous operations during schema modifications.
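The shadow-table idea behind online schema change tools can be sketched in SQLite via Python's `sqlite3`. Note the substitution: gh-ost itself is triggerless and captures concurrent writes by reading the MySQL binary log, which SQLite does not have. This sketch captures them with triggers instead, the approach used by Percona's pt-online-schema-change. The `events` table, the added `created_at` column, and the chunk size are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany("INSERT INTO events (payload) VALUES (?)", [(f"e{i}",) for i in range(1, 6)])
conn.commit()

# 1. Shadow table with the target schema (here: one extra column).
conn.execute("CREATE TABLE _events_new (id INTEGER PRIMARY KEY, payload TEXT, created_at TEXT)")

# 2. Triggers mirror live writes into the shadow table while the copy runs.
conn.executescript("""
    CREATE TRIGGER events_ins AFTER INSERT ON events BEGIN
        INSERT OR REPLACE INTO _events_new (id, payload) VALUES (NEW.id, NEW.payload);
    END;
    CREATE TRIGGER events_upd AFTER UPDATE ON events BEGIN
        DELETE FROM _events_new WHERE id = OLD.id AND OLD.id IS NOT NEW.id;
        INSERT OR REPLACE INTO _events_new (id, payload) VALUES (NEW.id, NEW.payload);
    END;
    CREATE TRIGGER events_del AFTER DELETE ON events BEGIN
        DELETE FROM _events_new WHERE id = OLD.id;
    END;
""")

def copy_chunk(after_id, size=2):
    """Copy one chunk by primary key; INSERT OR IGNORE keeps rows the triggers already wrote."""
    rows = conn.execute(
        "SELECT id, payload FROM events WHERE id > ? ORDER BY id LIMIT ?", (after_id, size)
    ).fetchall()
    conn.executemany("INSERT OR IGNORE INTO _events_new (id, payload) VALUES (?, ?)", rows)
    conn.commit()
    return rows[-1][0] if rows else None

# 3. Copy in chunks while live traffic keeps arriving.
last_id = copy_chunk(0)
conn.execute("UPDATE events SET payload = 'changed' WHERE id = 4")  # a write lands mid-copy
conn.execute("INSERT INTO events (payload) VALUES ('e6')")          # and so does a new row
conn.commit()
while last_id is not None:
    last_id = copy_chunk(last_id)

# 4. Swap in one transaction; dropping the old table also drops its triggers.
conn.executescript("""
    BEGIN;
    DROP TABLE events;
    ALTER TABLE _events_new RENAME TO events;
    COMMIT;
""")
```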

Validation and Monitoring

Implement automated data validation to compare record counts, checksums, and data integrity between old and new schema versions. This validation ensures migration accuracy and identifies potential issues early.
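A record-count-plus-checksum comparison can be sketched in a few lines. This assumes SQLite via Python's `sqlite3` and hashes each row's Python `repr` with SHA-256. Both are illustrative choices: a production check would typically compute checksums inside each database and in chunks. The `users_old`/`users_new` tables are hypothetical.

```python
import hashlib
import sqlite3

def fingerprint(conn, query):
    """Return (row_count, sha256 hex digest) over the rows `query` yields.
    The query must use ORDER BY so both sides hash rows in the same order."""
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(query):
        digest.update(repr(tuple(row)).encode("utf-8") + b"\n")
        count += 1
    return count, digest.hexdigest()

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users_old (id INTEGER PRIMARY KEY, fullname TEXT);
    CREATE TABLE users_new (id INTEGER PRIMARY KEY, display_name TEXT);
    INSERT INTO users_old VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO users_new VALUES (1, 'Ada'), (2, 'Grace');
""")

old = fingerprint(conn, "SELECT id, fullname FROM users_old ORDER BY id")
new = fingerprint(conn, "SELECT id, display_name FROM users_new ORDER BY id")
print(old == new)  # True

conn.execute("UPDATE users_new SET display_name = 'Grace H.' WHERE id = 2")  # simulated drift
drifted = fingerprint(conn, "SELECT id, display_name FROM users_new ORDER BY id")
print(drifted[0] == old[0], drifted[1] == old[1])  # True False
```

The row count alone would miss the drifted value; the checksum catches it.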

Monitor application performance, database resource utilization, and user experience metrics throughout the migration process. Continuous monitoring enables rapid response to performance degradation or functional issues.

Establish automated rollback triggers based on performance thresholds or error rates. These safety mechanisms protect business operations when migrations encounter unexpected problems.
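A rollback trigger is ultimately a decision rule over monitoring data. The sketch below is hypothetical throughout: the 1% error-rate and 500 ms p95 thresholds, the `Sample` shape, and the "three consecutive breaches" rule are all assumptions, chosen so that one noisy measurement does not roll back a healthy migration.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Thresholds:
    max_error_rate: float = 0.01       # hypothetical: more than 1% of requests failing
    max_p95_latency_ms: float = 500.0  # hypothetical latency budget

@dataclass
class Sample:
    requests: int
    errors: int
    p95_latency_ms: float

def should_roll_back(window: Sequence[Sample], limits: Thresholds, breaches_required: int = 3) -> bool:
    """Return True once `breaches_required` consecutive samples exceed any threshold."""
    streak = 0
    for s in window:
        error_rate = s.errors / s.requests if s.requests else 0.0
        breached = error_rate > limits.max_error_rate or s.p95_latency_ms > limits.max_p95_latency_ms
        streak = streak + 1 if breached else 0
        if streak >= breaches_required:
            return True
    return False

healthy = [Sample(1000, 2, 120.0)] * 5
spike = [Sample(1000, 50, 120.0), Sample(1000, 2, 120.0)]
degraded = [Sample(1000, 2, 120.0), Sample(1000, 40, 130.0), Sample(1000, 55, 140.0), Sample(1000, 60, 900.0)]
print(should_roll_back(healthy, Thresholds()))   # False
print(should_roll_back(spike, Thresholds()))     # False: a single breach is tolerated
print(should_roll_back(degraded, Thresholds()))  # True
```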

What Are AI-Powered Schema Migration Techniques?

AI-powered schema migration techniques automate complex transformations, optimize performance, and reduce the manual effort traditionally required for schema changes.

Generative AI for Code Conversion

AI tools analyze stored procedures, functions, and triggers to generate equivalent logic in target databases, such as Oracle to PostgreSQL or SQL Server to Snowflake conversions. These tools understand complex business logic and translate it accurately across different database platforms.

AWS DMS now leverages generative AI to automate schema conversions, handling proprietary functions, data-type conversions, and platform-specific optimizations. This automation reduces manual coding effort while improving conversion accuracy.

The technology continues advancing to handle increasingly complex scenarios, including custom data types, complex relationships, and performance-optimized structures. AI-powered conversion significantly accelerates database platform transitions.

Intelligent Schema Optimization

AI analyzes historical data patterns to recommend optimal data types, indexing strategies, and partitioning schemes that improve performance post-migration. This analysis considers query patterns, data distribution, and access frequencies.

Machine learning algorithms predict query performance impacts to enable proactive optimization decisions. These predictions help avoid performance regressions that traditional manual approaches might miss.

Advanced optimization tools can simulate different schema configurations to identify the most effective approach for specific workloads. This simulation capability enables data-driven optimization decisions.

Automated Error Detection and Validation

AI systems identify inconsistencies in data mapping, resolve incompatible types, and flag compliance violations before they cause production issues. These systems learn from previous migrations to improve accuracy over time.

Advanced tools can simulate migration outcomes using synthetic data, ensuring privacy while identifying potential problems. This simulation approach validates migration logic without exposing sensitive information.

Pattern recognition capabilities help identify subtle issues that manual review might overlook, such as data quality problems or referential integrity violations. These capabilities improve migration reliability and reduce post-migration debugging.

What Best Practices Should You Follow for Schema Migration?

Document Your Changes

Maintain a comprehensive log of schema changes, including business justification, technical details, and rollback procedures. Documentation ensures knowledge preservation and enables effective troubleshooting when issues arise.

Include performance impact assessments and validation criteria in your documentation. This information helps future team members understand the reasoning behind specific design decisions.

Use Version Control

Store schema and migration scripts in Git to track history, enable collaboration, and integrate with CI/CD pipelines. Version control provides audit trails and enables rollback to previous schema states when necessary.

Tag releases and maintain branching strategies that align with application deployment cycles. This alignment ensures schema changes coordinate properly with application code updates.
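Version-controlled migrations are usually applied by a runner that knows which ones have already run. This is a minimal sketch, assuming SQLite via Python's `sqlite3`, with SQLite's `PRAGMA user_version` used as the applied-version counter. Dedicated tools such as Liquibase keep a history table instead. The migration list is hypothetical.

```python
import sqlite3

# Hypothetical migration list; in a repository each entry might live in its own
# versioned file (e.g. migrations/0001_create_books.sql) reviewed through Git.
MIGRATIONS = [
    "CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT NOT NULL)",
    "ALTER TABLE books ADD COLUMN download_url TEXT",
    "CREATE INDEX idx_books_title ON books (title)",
]

def migrate(conn, migrations=MIGRATIONS):
    """Apply pending migrations in order and record progress in PRAGMA user_version."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, sql in enumerate(migrations[current:], start=current + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(sql)
            # PRAGMA values cannot be bound as parameters; version is an int we control.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.commit()
        except Exception:
            conn.rollback()
            raise
    return conn.execute("PRAGMA user_version").fetchone()[0]

conn = sqlite3.connect(":memory:")
print(migrate(conn))  # 3
print(migrate(conn))  # 3 (already up to date; nothing is re-applied)
```

Because each migration and its version bump commit together, a failed step leaves the database at the last fully applied version.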

Implement Rollback Procedures

Prepare and test rollback scripts before executing migrations. Automate rollback where possible and document triggers for rapid recovery.

Test rollback procedures in staging environments to ensure they work correctly under realistic conditions. Rollback testing often reveals edge cases that forward migration testing might miss.
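Testing a rollback script means checking that applying the forward migration and then its inverse returns both the schema and the data to their original state. The sketch assumes SQLite via Python's `sqlite3` and a hypothetical paired `UP`/`DOWN` migration. The down script uses a table rebuild because `ALTER TABLE ... DROP COLUMN` needs SQLite 3.35 or later.

```python
import sqlite3

# Hypothetical paired migration: the forward change ships together with its inverse.
UP = "ALTER TABLE books ADD COLUMN file_size_bytes INTEGER"
DOWN = """
    BEGIN;
    CREATE TABLE books_prev (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    INSERT INTO books_prev (id, title) SELECT id, title FROM books;
    DROP TABLE books;
    ALTER TABLE books_prev RENAME TO books;
    COMMIT;
"""

def columns(conn):
    """Column definitions (cid, name, type, notnull, default, pk) of the books table."""
    return conn.execute("PRAGMA table_info(books)").fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
conn.execute("INSERT INTO books (title) VALUES ('Dune')")
conn.commit()
before_columns = columns(conn)
before_rows = conn.execute("SELECT id, title FROM books ORDER BY id").fetchall()

conn.execute(UP)
forward_columns = columns(conn)
conn.executescript(DOWN)  # the rollback script under test

restored_ok = columns(conn) == before_columns
data_ok = conn.execute("SELECT id, title FROM books ORDER BY id").fetchall() == before_rows
print(len(forward_columns), restored_ok, data_ok)  # 3 True True
```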

Automate Wherever Possible

Automate validation, deployment, and monitoring to reduce human error and improve consistency. Automation ensures repeatable processes and reduces the risk of manual mistakes during critical operations.

Build automated pipelines that integrate schema migration with application deployment processes. This integration ensures coordinated releases and reduces deployment complexity.

Collaborate and Communicate

Keep stakeholders informed and align technical changes with business objectives. Coordinate schema changes with application development teams to ensure compatibility and minimize disruption.

Establish clear communication channels for migration status updates and issue reporting. Effective communication helps manage expectations and enables rapid response to problems.

What Are Common Use Cases for Database Schema Migration?

Healthcare

Updating schemas for electronic health records to enable interoperability with other healthcare systems. Healthcare organizations must adapt schemas to support new medical devices, treatment protocols, and regulatory requirements.

Modern healthcare systems require flexible schemas that accommodate diverse data types, including imaging data, genomic information, and IoT sensor readings. These requirements drive frequent schema evolution to support advancing medical technology.

Logistics

Adding real-time tracking capabilities via tables for GPS coordinates, IoT sensor data, and route optimization parameters. Logistics companies need schemas that support complex supply chain operations and real-time decision making.

Advanced logistics operations require schema modifications to support predictive analytics, automated routing, and customer communication systems. These capabilities demand sophisticated data structures that evolve with business requirements.

E-commerce

Frequent updates for product attributes, payment gateways, and customer experience enhancements while supporting high-volume transactions. E-commerce platforms must balance rapid feature development with system stability.

Modern e-commerce requires schemas that support personalization engines, recommendation systems, and complex pricing models. These features drive continuous schema evolution to maintain competitive advantage.

Finance

Reorganizing transaction logs, risk assessments, and fraud-detection parameters to launch new products or strengthen security. Financial institutions face strict regulatory requirements that influence schema design decisions.

Banking systems require schemas that support real-time fraud detection, regulatory reporting, and customer analytics. These requirements create complex migration scenarios that demand careful planning and execution.

What Tools Are Available for Database Schema Migration?

1. Airbyte

Airbyte offers an extensive catalog of 600+ pre-built connectors and automatic schema change propagation. The platform handles schema evolution automatically, detecting changes in source systems and propagating them to destinations while maintaining data integrity.

Airbyte's approach eliminates manual schema mapping and reduces migration complexity by automating the detection and handling of schema changes. This automation significantly reduces the operational overhead associated with maintaining data pipelines during schema evolution.
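To illustrate the general detect-and-propagate idea (this is not Airbyte's implementation or API), the sketch below compares a source and destination table in SQLite via Python's `sqlite3`. It applies only additive column changes and reports type changes and removals for review. The `customers` tables and the "additive only" policy are assumptions.

```python
import sqlite3

def column_types(conn, table):
    """Map column name -> declared type. `table` is assumed to be a trusted identifier."""
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}

def propagate_schema_changes(source, dest, table):
    """Add source columns missing from the destination; report other differences only."""
    src, dst = column_types(source, table), column_types(dest, table)
    added = [name for name in src if name not in dst]
    for name in added:
        # Additive and backward compatible: existing destination rows get NULL.
        dest.execute(f'ALTER TABLE {table} ADD COLUMN "{name}" {src[name]}')
    type_changes = sorted(name for name in src.keys() & dst.keys() if src[name] != dst[name])
    removed = sorted(dst.keys() - src.keys())  # left in place so history is not lost
    return added, type_changes, removed

source = sqlite3.connect(":memory:")
dest = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, phone TEXT)")
dest.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")

added, type_changes, removed = propagate_schema_changes(source, dest, "customers")
print(added, type_changes, removed)  # ['phone'] [] []
print(column_types(dest, "customers"))  # {'id': 'INTEGER', 'email': 'TEXT', 'phone': 'TEXT'}
```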

2. Bytebase

A robust migration platform supporting multiple databases, schema-drift detection, GitOps integration, GUI-based review, deployment, and rollback capabilities. Bytebase provides comprehensive collaboration features for database changes.

The platform offers visual tools for reviewing schema changes and coordinating team collaboration on database modifications. These features make complex migrations more manageable for distributed development teams.

3. Liquibase

Tracks, versions, and deploys schema changes defined in XML, YAML, JSON, or SQL formats with rollback support. Liquibase provides database-agnostic migration capabilities across multiple platforms.

The tool integrates with CI/CD pipelines to automate schema deployment as part of application release processes. This integration ensures schema changes coordinate properly with application code updates.

4. Atlas

An open-source, language-agnostic tool that uses declarative schema definitions and modern DevOps principles for safe, automated migrations. Atlas applies infrastructure-as-code principles to database schema management.

The platform provides advanced validation and planning capabilities that preview migration impacts before execution. These features help teams understand and prepare for complex schema changes.

What Common Mistakes Should You Avoid During Schema Migration?

Understanding and avoiding common migration mistakes prevents data loss, reduces downtime, and ensures successful database evolution that supports business objectives.

Conclusion

Database schema migration is crucial for keeping up with an evolving data model while maintaining business continuity and operational efficiency. By combining systematic planning, advanced tooling, zero-downtime strategies, and comprehensive validation, organizations can transform schema migration from a high-risk maintenance task into a competitive advantage.

Frequently Asked Questions

What is the difference between database migration and schema migration?

Database migration moves data from one database system to another, while schema migration changes the structure of an existing database (often within the same system).

How long does a typical schema migration take?

Duration depends on database size and complexity. Simple changes take minutes; complex migrations may require hours or days. Zero-downtime strategies can lengthen total execution time but avoid disrupting the business.

Can schema migrations cause data loss?

Yes, if not properly planned and executed. Prevent data loss with thorough testing, backups, and rollback procedures.

What happens if a schema migration fails?

Roll back to the previous schema state using prepared scripts or backups. Modern tools offer automated rollback capabilities for rapid recovery.

How do you handle schema migrations in microservice architectures?

Use backward-compatible changes, versioned APIs, and gradual deployments so services can migrate independently without disrupting the overall system.
