Enterprise Data Management Fresh Environment: How Does it Work?


✨ AI Generated Summary

Enterprise data management in a fresh environment modernizes legacy systems by leveraging cloud-native infrastructure, AI-driven automation, and scalable architectures such as data mesh, lakehouse, and data fabric to enhance data accessibility, governance, and real-time insights. Key steps include defining goals, establishing data quality and governance policies, testing, implementation, and employee training. Platforms like Airbyte facilitate data integration with extensive connectors, AI-powered tools, and real-time change data capture, helping address challenges such as migration, scalability, security, and integration complexity.

You have to handle high-volume datasets if you work in an enterprise that depends on data for most of its operations. The challenge isn't just managing data; it's breaking free from the data democratization paradox, in which organizations hold more data than ever yet business teams still struggle to access it. While 98% of IT teams now incorporate generative AI into their data strategies, most enterprises remain trapped in legacy systems that require 30-50 engineers just to maintain basic pipeline operations. These bottlenecks prevent business teams from accessing the insights they need when they need them.

To eliminate these issues, you can create a fresh data environment that facilitates successful enterprise data management. This approach transforms your data infrastructure from a reactive support function into a proactive driver of innovation and competitive advantage.

Here, you will learn about enterprise data management in a fresh environment and its essential components. With this information, you can set up a robust data management environment in your enterprise that simplifies data use in business workflows while leveraging technologies like AI-driven automation and modern architectural paradigms.

What Is the Concept of Enterprise Data Management Fresh Environment?

Data management is the practice of collecting and storing data in an organized way for effective analytics and decision-making. To manage data efficiently, you need a set of relevant infrastructure, software, databases, data warehouses, and computer systems. These elements form an ecosystem known as a data environment.

Enterprise data management in a fresh data environment refers to a newly established ecosystem for managing enterprise data. It facilitates the creation of a robust data management system that aligns with the current business landscape and embraces cloud-native solutions with hybrid and multi-cloud architectures. An example of this approach is Oracle's enterprise data management in a fresh environment that helps you easily adapt to changing market trends.

Modern fresh environments prioritize data democratization: empowering non-technical users to explore, analyze, and act on data without relying on centralized IT teams. This shift moves organizations from fragmented, departmental systems to integrated, organization-wide platforms that emphasize accessibility, governance, and real-time insights.

How Do You Establish Enterprise Data Management in a Fresh Environment?

Step 1: Define Goals and Objectives

First, clearly define the goals you want to achieve through enterprise data management (EDM). To do this, assess how efficiently different departments in your enterprise currently work with data, and gather suggestions from team members, employees, and other stakeholders. This will help you understand whether your organization's data management practices need improvement.

Consider implementing federated governance models that balance domain autonomy with global standards, especially when adopting modern architectures like data mesh that decentralize data ownership while maintaining interoperability.

Step 2: Establish Data Quality Rules

To maintain high-quality data, set measurable metrics such as the error rate, the percentage of data points outside the acceptable range, or the percentage of null values. You can also use AI tools to check and improve the accuracy and completeness of data records.

Automated quality checks during ingestion and transformation help detect anomalies in real time, while AI-powered tools can autonomously classify data assets and recommend corrections for inconsistencies.
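To make these metrics concrete, here is a minimal Python sketch that computes the null percentage, out-of-range percentage, and overall error rate for a batch of records. The `profile` function, the `QualityReport` fields, and the sample ranges are hypothetical illustrations rather than part of any specific tool; in practice you would run checks like these during ingestion or inside your transformation layer.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class QualityReport:
    total_rows: int
    null_pct: Dict[str, float]          # share of rows where the column is null
    out_of_range_pct: Dict[str, float]  # share of rows whose value is outside the accepted range
    error_rate: float                   # share of rows failing at least one rule


def profile(rows: List[Dict[str, Any]], ranges: Dict[str, Tuple[float, float]]) -> QualityReport:
    """Compute null, out-of-range, and overall error percentages for a batch of records."""
    total = len(rows)
    columns = sorted({col for row in rows for col in row} | set(ranges))
    nulls = {col: 0 for col in columns}
    out_of_range = {col: 0 for col in ranges}
    failing_rows = 0

    for row in rows:
        failed = False
        for col in columns:
            if row.get(col) is None:
                nulls[col] += 1
                failed = True
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not (low <= value <= high):
                out_of_range[col] += 1
                failed = True
        if failed:
            failing_rows += 1

    def pct(count: int) -> float:
        return round(100.0 * count / total, 2) if total else 0.0

    return QualityReport(
        total_rows=total,
        null_pct={col: pct(n) for col, n in nulls.items()},
        out_of_range_pct={col: pct(n) for col, n in out_of_range.items()},
        error_rate=pct(failing_rows),
    )


# Hypothetical sample batch and acceptable ranges
rows = [
    {"order_id": 1, "amount": 120.0, "qty": 2},
    {"order_id": 2, "amount": None, "qty": 1},
    {"order_id": 3, "amount": -5.0, "qty": 3},
    {"order_id": 4, "amount": 80.0, "qty": 500},
]
ranges = {"amount": (0.0, 10_000.0), "qty": (1, 100)}

report = profile(rows, ranges)
print(report)
```

A pipeline could compare these numbers against thresholds your team agrees on (for example, failing a load when `error_rate` exceeds a set limit) before publishing data downstream.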

Step 3: Deploy Data Governance Policies

Develop effective data governance policies for the responsible use and protection of data. Define rules for the data formats that are acceptable for enterprise tasks. Specifying the sources for extracting and loading data, as well as the tools to be used for data preprocessing, also helps ensure data integrity and consistency.

Modern governance frameworks should embed automated policy enforcement using active metadata to flag violations, while implementing role-based access controls and compliance automation for regulations like GDPR, CCPA, and industry-specific requirements.
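Role-based access control with masking of sensitive fields can be sketched in a few lines. This example assumes a hypothetical policy in which each role has an explicit column allowlist, anything not listed is denied by default, and columns classified as PII are masked unless the role is explicitly allowed to see them. Real platforms usually enforce such rules in the warehouse or catalog layer rather than in application code.

```python
from typing import Any, Dict, List, Set

# Hypothetical policy: columns each role may read (anything not listed is denied)
ROLE_COLUMNS: Dict[str, Set[str]] = {
    "analyst": {"customer_id", "country", "lifetime_value", "email"},
    "support": {"customer_id", "email", "phone"},
    "privacy_officer": {"customer_id", "email", "phone", "country", "lifetime_value"},
}
PII_COLUMNS = {"email", "phone"}              # columns classified as personal data
PII_READERS = {"support", "privacy_officer"}  # roles allowed to see PII unmasked


class AccessDenied(Exception):
    pass


def mask(value: str) -> str:
    """Keep the first character and hide the rest."""
    return value[:1] + "*" * (len(value) - 1)


def read_columns(role: str, record: Dict[str, Any], columns: List[str]) -> Dict[str, Any]:
    allowed = ROLE_COLUMNS.get(role)
    if allowed is None:
        raise AccessDenied(f"unknown role {role!r}")  # deny by default
    denied = [col for col in columns if col not in allowed]
    if denied:
        raise AccessDenied(f"role {role!r} may not read {denied}")
    result = {}
    for col in columns:
        value = record.get(col)
        if col in PII_COLUMNS and role not in PII_READERS and isinstance(value, str):
            value = mask(value)
        result[col] = value
    return result


record = {"customer_id": 42, "email": "ana@example.com", "phone": "555-0100",
          "country": "PT", "lifetime_value": 1830.5}

print(read_columns("analyst", record, ["customer_id", "email"]))  # e-mail is masked
print(read_columns("support", record, ["email", "phone"]))        # PII reader sees raw values
try:
    read_columns("analyst", record, ["phone"])
except AccessDenied as err:
    print("blocked:", err)
```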

Step 4: Test and Optimize

Before deployment, test the various processes involved in enterprise data management. This helps you identify areas for improvement, which you can then address to ensure that data-related tasks run smoothly.

Focus on testing scalability and performance under high-volume workloads, ensuring your fresh environment can handle real-time processing requirements and edge computing scenarios that process data near the point of generation.
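One simple way to start testing scalability is to run the same transformation over increasing input sizes and compare throughput. The sketch below assumes a hypothetical `transform` step and synthetic rows; it checks that no rows are dropped and reports rows per second for each size. It is a single-process micro-benchmark, not a substitute for load testing the deployed environment.

```python
import time
from typing import Callable, Dict, List


def transform(row: Dict) -> Dict:
    """Hypothetical transformation under test: normalize e-mail and compute a line total."""
    return {"email": row["email"].strip().lower(), "total": round(row["qty"] * row["price"], 2)}


def make_rows(n: int) -> List[Dict]:
    """Generate n synthetic input rows."""
    return [{"email": f" User{i}@Example.com ", "qty": i % 5 + 1, "price": 9.99} for i in range(n)]


def benchmark(fn: Callable[[Dict], Dict], sizes: List[int]) -> List[Dict]:
    results = []
    for n in sizes:
        rows = make_rows(n)
        start = time.perf_counter()
        output = [fn(row) for row in rows]
        elapsed = time.perf_counter() - start
        if len(output) != n:  # correctness check: no rows dropped
            raise AssertionError(f"expected {n} rows, got {len(output)}")
        results.append({"rows": n, "seconds": elapsed,
                        "rows_per_sec": n / elapsed if elapsed > 0 else float("inf")})
    return results


for r in benchmark(transform, [1_000, 10_000, 100_000]):
    print(f"{r['rows']:>7} rows  {r['rows_per_sec']:>12,.0f} rows/s")
```

If throughput falls sharply as volume grows, the step is a likely bottleneck worth optimizing before go-live.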

Step 5: Implement the Enterprise Data Management Framework

Finally, the enterprise data management framework can be deployed in a fresh environment. After implementation, you should regularly monitor the effectiveness in a new data environment. You can assess how the new data management approach has impacted the output of your enterprise by monitoring changes in workflow efficiency and customer experience.

Consider implementing continuous monitoring with behavioral anomaly detection to identify potential security threats and ensure your framework maintains enterprise-grade security across distributed environments.

Step 6: Train Your Employees

Properly training your employees before implementing data management policies can help them adopt the new data workflows. You can prepare a training curriculum based on different job roles in your organization. Then, you may hire a professional who can teach your employees about the importance of data management through practical tools and experimentation.

Training should emphasize self-service capabilities that enable business teams to access data independently while automatically enforcing security policies and compliance requirements.

What Are the Benefits of Enterprise Data Management Fresh Environment?

A fresh environment can help you in several ways to enhance your business performance. Some benefits of enterprise data management in a fresh environment are:

- By transitioning to a fresh environment, you can replace the legacy infrastructure with modern, cloud-based, or AI-driven infrastructure that supports serverless data warehouses and cross-cloud interoperability.

- You can establish a framework that is more helpful in fulfilling your current business needs than a system that was suitable for achieving older goals, particularly with cloud-native data lakes that store vast amounts of raw data while minimizing overhead costs.

- Through a fresh enterprise data management environment, you can comply with data regulatory frameworks like GDPR and HIPAA to ensure data security while implementing zero-trust models and data-centric security measures.

- You gain the flexibility to avoid vendor lock-in while optimizing performance through multi-cloud strategies that leverage the best capabilities from different cloud providers.

- Fresh environments enable real-time analytics and decision-making capabilities through streaming data architectures and distributed caching that stores frequently accessed data closer to users.

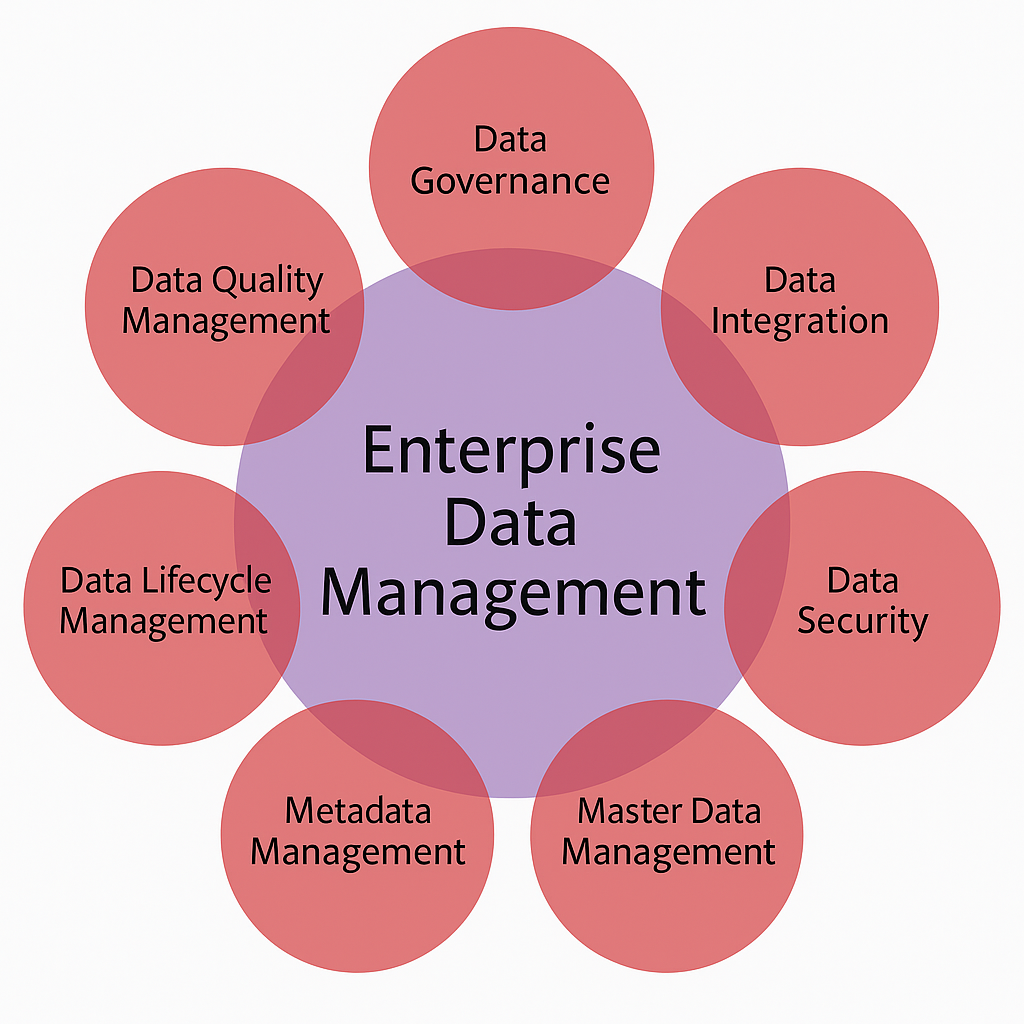

What Are the Essential Components of Enterprise Data Management Fresh Environment?

Enterprise data management helps you ensure that your data is accurate, reliable, and secure. To achieve this, you need the following components in your enterprise data management environment:

Data Governance

Data governance is a set of procedures and policies that help you to better manage and utilize data for your enterprise operations. This includes setting up data storage, retrieval, and usage rules to streamline data-related tasks. Establishing a strong enterprise data governance framework in a fresh environment allows you to promote responsible and secure use of data.

Modern governance frameworks should incorporate multi-domain governance approaches that manage interconnected customer, product, and supplier data under a unified strategy. This includes implementing automated metadata discovery that maps relationships between data assets and provides real-time lineage tracking for auditability and compliance.

Data Quality

Data quality is a critical aspect of data management. It reflects the accuracy, consistency, and completeness of your enterprise data. While setting up a fresh environment for data management, you should ensure that the datasets used in your organization are of high quality. You can do this with data cleaning and transformation techniques, including handling missing, duplicate, or outlier values.
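The three cleaning steps mentioned here (handling duplicate, missing, and outlier values) can be sketched in plain Python. This example assumes a hypothetical `id` key and `amount` column: it keeps the first row for each key, fills missing amounts with the median of the observed values, and flags values outside the common 1.5 × IQR fences rather than deleting them.

```python
import statistics
from typing import Any, Dict, List


def clean(rows: List[Dict[str, Any]], key: str, column: str) -> List[Dict[str, Any]]:
    # 1. Drop rows whose key was already seen, keeping the first occurrence
    seen, deduped = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            deduped.append(dict(row))  # copy so the input is not mutated

    # 2. Impute missing values with the median of the observed values
    median = statistics.median([r[column] for r in deduped if r[column] is not None])
    for r in deduped:
        r["imputed"] = r[column] is None
        if r["imputed"]:
            r[column] = median

    # 3. Flag (do not drop) values outside the 1.5 * IQR fences
    q1, _, q3 = statistics.quantiles([r[column] for r in deduped], n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    for r in deduped:
        r["is_outlier"] = not (low <= r[column] <= high)
    return deduped


rows = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 12.0},
    {"id": 2, "amount": 12.0},   # duplicate
    {"id": 3, "amount": None},   # missing
    {"id": 4, "amount": 11.0},
    {"id": 5, "amount": 13.0},
    {"id": 6, "amount": 500.0},  # suspicious value
]
for r in clean(rows, key="id", column="amount"):
    print(r)
```

Flagging instead of deleting outliers leaves the final decision to a data steward, and the median is used for imputation because, unlike the mean, it is barely affected by the extreme value.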

AI-driven automation now enables intelligent data quality assurance through pattern recognition that maps fields and normalizes schemas automatically. Machine learning models can predict and prevent quality issues by analyzing historical patterns and flagging potential inconsistencies before they impact downstream processes.

Data Warehouse Architecture

Data warehouse architecture is the structure that represents how data can be stored and managed within a data warehouse. To manage enterprise data in a new environment, you should select a suitable architecture that fulfills your business objectives and scalability needs.

Modern architectures should consider hybrid frameworks that balance flexibility, governance, and performance. Cloud-native architectures that automatically scale with workload demands provide significant advantages over traditional fixed-capacity systems, especially when handling diverse data types from structured databases to unstructured content.

Master Data Management (MDM)

Master data refers to data records that are critical for the operation of your enterprise. For example, data related to customers' personal information, such as phone numbers, addresses, and payment information, can be considered master data. MDM enables you to store all the business-critical data in a centralized location. This helps you create a unified dataset for enterprise data management in a fresh environment.

Advanced MDM systems now incorporate AI-powered data cataloging that autonomously enriches metadata and maintains data lineage across complex enterprise ecosystems. This reduces the manual effort required for data stewardship while improving data discovery and trust.
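To illustrate how MDM consolidates business-critical records into a single "golden record", here is a minimal sketch assuming three hypothetical source systems (`crm`, `erp`, `billing`). Records are matched on a normalized e-mail address, and each attribute is resolved by an assumed survivorship rule: `name` comes from the most trusted source, while `phone` comes from the most recently updated record. Production MDM tools typically use fuzzy or probabilistic matching rather than a single exact key.

```python
from datetime import date
from typing import Any, Dict, List

# Hypothetical trust ranking of source systems (lower number = more trusted)
SOURCE_PRIORITY = {"crm": 0, "erp": 1, "billing": 2}
# Hypothetical survivorship rule per attribute; unlisted attributes use "most_recent"
SURVIVORSHIP = {"name": "source_priority", "phone": "most_recent"}
META_FIELDS = {"source", "email", "updated"}


def match_key(record: Dict[str, Any]) -> str:
    """Naive matching: records with the same normalized e-mail describe the same customer."""
    return record["email"].strip().lower()


def ordered(records: List[Dict[str, Any]], rule: str) -> List[Dict[str, Any]]:
    if rule == "source_priority":
        return sorted(records, key=lambda r: SOURCE_PRIORITY[r["source"]])
    # "most_recent": newest first; on the same date, the more trusted source wins
    return sorted(records, key=lambda r: (r["updated"], -SOURCE_PRIORITY[r["source"]]), reverse=True)


def build_golden_records(records: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    groups: Dict[str, List[Dict[str, Any]]] = {}
    for record in records:
        groups.setdefault(match_key(record), []).append(record)

    golden = {}
    for key, group in groups.items():
        merged: Dict[str, Any] = {"email": key, "sources": sorted({r["source"] for r in group})}
        for attr in sorted({f for r in group for f in r} - META_FIELDS):
            rule = SURVIVORSHIP.get(attr, "most_recent")
            # The first non-null value in the rule's preference order survives
            merged[attr] = next((r[attr] for r in ordered(group, rule) if r.get(attr) is not None), None)
        golden[key] = merged
    return golden


records = [
    {"source": "crm", "email": "Ana@Example.com", "name": "Ana Silva", "phone": None, "updated": date(2024, 3, 1)},
    {"source": "billing", "email": "ana@example.com ", "name": "A. Silva", "phone": "555-0100", "updated": date(2024, 5, 2)},
    {"source": "erp", "email": "ana@example.com", "name": "Ana Silva", "phone": "555-0199", "updated": date(2024, 1, 15)},
]
print(build_golden_records(records))
```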

Data Integration

The data integration process involves collecting and consolidating data from various sources into a single data system. ERP, CRM systems, log files, and social media platforms are some of the sources from which you can extract enterprise data. Integrating data is essential during enterprise data management in a fresh environment as it provides quick access to standardized data. You can use this data for analytics, reporting, and operational tasks.

For effective data integration, you should opt for a powerful data movement tool such as Airbyte. It offers an extensive library of 600+ connectors. You can use any of these connectors to extract enterprise data and load it to a desired destination data system.

If the connector you want to use is not in the existing set of connectors, you can create it on your own using Airbyte's low-code Connector Development Kit (CDK) or Connector Builder.

When dealing with semi-structured or unstructured data, Airbyte allows you to load it directly into vector destinations like Pinecone or Milvus. This capability is useful for workflows involving GenAI. You can use GenAI workflows to automate processes like data transformation or MDM, reducing manual intervention and improving the accuracy of your EDM framework.

Airbyte's recent platform updates include True Data Sovereignty features that enable organizations to maintain control over their data across global regions while complying with regulations. The platform now supports multi-region deployments where you can isolate data planes across regions, ensuring compliance with residency requirements and reducing cross-region data transfer costs.

Some additional key features of Airbyte are:

- Build Developer-Friendly Pipelines: PyAirbyte is an open-source Python library that provides a set of utilities for using Airbyte connectors in the Python ecosystem. With PyAirbyte, you can extract data from numerous sources and load it into SQL caches such as Snowflake or BigQuery. You can then manipulate the cached data with Python libraries like Pandas for advanced analytics and business reporting.

- AI-powered Connector Development: While developing custom connectors with Airbyte's Connector Builder, you can use an AI assistant to speed up configuration. The assistant parses API documentation to pre-fill configuration fields and suggests schema mappings and other refinements to the connector configuration.

- Change Data Capture (CDC): The CDC feature helps you to incrementally capture changes made at the source data and replicate them in the destination data system. This enables you to keep source and target data systems in sync with each other with minimal source impact through log-based capture methods.

- Schema Management: Schema management is essential for accurate and efficient data synchronization. While using Airbyte, you can specify how the platform must handle schema changes in source data for each connection. Airbyte allows you to manually refresh schema whenever required during the data pipeline development process.

- Direct Loading Capabilities: Airbyte's new Direct Loading method streamlines data ingestion into warehouses like BigQuery, cutting compute costs by 50-70% and increasing sync speeds by up to 33% by eliminating intermediate storage steps like JSON staging.
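On the destination side, CDC comes down to applying an ordered stream of insert, update, and delete events while remembering how far you have read. The sketch below is a hypothetical illustration of that apply step, not Airbyte's implementation: each event carries an assumed log position (`lsn`), and events at or below the stored cursor are skipped so that a redelivered batch does not corrupt the replica.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ChangeEvent:
    lsn: int                                # position in the source change log (assumed to start at 1)
    op: str                                 # "insert", "update", or "delete"
    key: Any                                # primary key of the affected row
    after: Optional[Dict[str, Any]] = None  # full row image after the change; None for deletes


@dataclass
class Replica:
    rows: Dict[Any, Dict[str, Any]] = field(default_factory=dict)
    cursor: int = 0                         # highest log position already applied

    def apply(self, events: List[ChangeEvent]) -> int:
        """Apply events in log order and return how many were new."""
        applied = 0
        for event in sorted(events, key=lambda e: e.lsn):
            if event.lsn <= self.cursor:
                continue                    # already applied: replays are harmless
            if event.op in ("insert", "update"):
                self.rows[event.key] = dict(event.after or {})
            elif event.op == "delete":
                self.rows.pop(event.key, None)
            else:
                raise ValueError(f"unknown operation {event.op!r}")
            self.cursor = event.lsn
            applied += 1
        return applied


batch_1 = [
    ChangeEvent(1, "insert", 10, {"id": 10, "status": "new"}),
    ChangeEvent(2, "insert", 11, {"id": 11, "status": "new"}),
    ChangeEvent(3, "update", 10, {"id": 10, "status": "shipped"}),
]
# The source redelivers event 3 together with a new delete
batch_2 = [
    ChangeEvent(3, "update", 10, {"id": 10, "status": "shipped"}),
    ChangeEvent(4, "delete", 11),
]

replica = Replica()
print(replica.apply(batch_1), replica.apply(batch_2))
print(replica.rows, replica.cursor)
```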

What Role Do Modern Architectural Paradigms Play in Fresh Data Environments?

Fresh data environments benefit significantly from adopting modern architectural approaches that address the complexity of diverse data types and distributed systems. These paradigms represent a fundamental shift from traditional centralized architectures to more flexible, scalable solutions.

Data Lakehouse Architecture

The lakehouse architecture combines the scalability of data lakes with the reliability of data warehouses, offering organizations the best of both worlds. Unlike traditional data lakes that often become data swamps due to limited metadata management, modern lakehouses provide flexible schema-on-write capabilities with active, auto-generated lineage tracking.

This architecture supports ACID transactions for concurrent updates while maintaining real-time query capabilities that optimize performance for both batch processing and real-time analytics. By decoupling storage and compute, organizations can optimize costs while maintaining strict governance standards essential for enterprise environments.
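The idea of ACID commits on top of plain data files can be illustrated with a versioned transaction log and optimistic concurrency, loosely modeled on how table formats such as Delta Lake record changes. In this simplified, hypothetical sketch, each commit lists the data files it adds and removes, readers reconstruct a snapshot by replaying commits, and a writer may only commit if the table has not moved since it read it. Real table formats rely on atomic operations in object storage and finer-grained conflict checks, so they do not reject every concurrent write the way this sketch does.

```python
import threading
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Set, Tuple


class ConcurrentCommitError(Exception):
    """Raised when the table changed after the writer read it."""


@dataclass(frozen=True)
class Commit:
    version: int
    added: Tuple[str, ...]    # data files that become part of the table
    removed: Tuple[str, ...]  # data files logically deleted by this commit


@dataclass
class TransactionLog:
    commits: List[Commit] = field(default_factory=list)
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    @property
    def version(self) -> int:
        return len(self.commits) - 1  # -1 means the table has no commits yet

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Replay commits up to `version` to find the live data files (time travel)."""
        upto = self.version if version is None else version
        files: Set[str] = set()
        for commit in self.commits[: upto + 1]:
            files -= set(commit.removed)
            files |= set(commit.added)
        return files

    def commit(self, read_version: int, added: Iterable[str] = (), removed: Iterable[str] = ()) -> int:
        with self._lock:  # the version check and the append happen atomically
            if read_version != self.version:
                raise ConcurrentCommitError(f"read v{read_version}, table is now at v{self.version}")
            new = Commit(self.version + 1, tuple(added), tuple(removed))
            self.commits.append(new)
            return new.version


log = TransactionLog()
log.commit(read_version=-1, added=["part-000.parquet"])  # creates v0

# Two writers start from the same snapshot
writer_a_version = log.version
writer_b_version = log.version
log.commit(writer_a_version, added=["part-001.parquet"])  # v1 succeeds

try:
    log.commit(writer_b_version, added=["part-002.parquet"])  # stale read: rejected
    conflict = False
except ConcurrentCommitError as err:
    conflict = True
    print("conflict:", err)
    # Writer B re-reads the latest version and retries (here, rewriting part-000)
    log.commit(log.version, removed=["part-000.parquet"], added=["part-002.parquet"])  # v2

print(log.version, sorted(log.snapshot()), sorted(log.snapshot(0)))
```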

Data Fabric Implementation

Data fabric serves as a unified, intelligent integration layer that automates data ingestion, transformation, and governance across hybrid ecosystems. This approach leverages active metadata services and automation to unify data across on-premises, cloud, and edge environments without requiring physical data movement.

Key components include metadata discovery that maps relationships between data assets, comprehensive data lineage tracking for auditability, and cross-platform interoperability that enables queries across diverse technology stacks. Data fabric architectures use AI and machine learning to intelligently manage data diversity and scale integration tasks automatically.
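Lineage tracking is essentially a directed graph of data assets. The sketch below assumes a hypothetical set of edges (each asset maps to the assets it is built from) and uses breadth-first search to answer two common questions: what a dashboard ultimately depends on, and what would be affected if a raw source changed.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each asset maps to the assets it is derived from
LINEAGE: Dict[str, List[str]] = {
    "dashboard.revenue": ["mart.daily_revenue"],
    "mart.daily_revenue": ["staging.orders", "staging.fx_rates"],
    "mart.churn": ["staging.orders", "raw.crm.accounts"],
    "staging.orders": ["raw.postgres.orders"],
    "staging.fx_rates": ["raw.api.fx_rates"],
}


def reachable(start: str, edges: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search over `edges`, returning every node reachable from `start`."""
    seen: Set[str] = set()
    queue = deque(edges.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        queue.extend(edges.get(node, []))
    return seen


def upstream(asset: str) -> Set[str]:
    return reachable(asset, LINEAGE)


def downstream(asset: str) -> Set[str]:
    # Invert the edges so we can walk from sources toward their consumers
    consumers: Dict[str, List[str]] = {}
    for child, parents in LINEAGE.items():
        for parent in parents:
            consumers.setdefault(parent, []).append(child)
    return reachable(asset, consumers)


print(sorted(upstream("dashboard.revenue")))      # root-cause analysis
print(sorted(downstream("raw.postgres.orders")))  # impact analysis
```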

Data Mesh for Decentralized Ownership

Data mesh represents a paradigm shift toward decentralized data ownership where domain teams take responsibility for their specific data products. This approach addresses data silos by enabling cross-domain analysis through standardized product definitions and federated metadata management.

The four core principles include domain-oriented ownership where teams align with business capabilities, treating data as a product with clear service level agreements, self-serve infrastructure platforms that enable domain autonomy, and federated governance that ensures global standards while allowing domain-specific customizations. This approach reduces bottlenecks by eliminating dependencies on centralized data teams while maintaining data quality and governance standards.
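Treating data as a product usually means publishing an explicit contract. Here is a minimal sketch assuming a hypothetical contract with an owning domain, expected column types, and a freshness SLA; `check_contract` returns a list of violations that a domain team, or a federated governance check shared across domains, could act on.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List


@dataclass(frozen=True)
class DataProductContract:
    name: str
    owner_domain: str        # the domain team accountable for this product
    schema: Dict[str, type]  # expected column -> Python type
    max_staleness: timedelta # freshness SLA


@dataclass
class Snapshot:
    rows: List[dict]
    last_updated: datetime


def check_contract(contract: DataProductContract, snap: Snapshot, now: datetime) -> List[str]:
    violations = []
    age = now - snap.last_updated
    if age > contract.max_staleness:
        violations.append(f"freshness: data is {age} old, SLA is {contract.max_staleness}")
    for i, row in enumerate(snap.rows):
        missing = contract.schema.keys() - row.keys()
        if missing:
            violations.append(f"row {i}: missing {sorted(missing)}")
        for column, expected in contract.schema.items():
            if column in row and not isinstance(row[column], expected):
                violations.append(f"row {i}: {column} should be {expected.__name__}")
    return violations


orders = DataProductContract(
    name="orders",
    owner_domain="sales",
    schema={"order_id": int, "amount": float},
    max_staleness=timedelta(hours=1),
)
now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
snapshot = Snapshot(rows=[{"order_id": 1, "amount": 9.5}, {"order_id": "2", "amount": 3.0}],
                    last_updated=now - timedelta(hours=2))
violations = check_contract(orders, snapshot, now)
for v in violations:
    print(v)
```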

How Does AI-Driven Automation Transform Fresh Data Management Environments?

Artificial intelligence and machine learning have evolved from supplementary tools to foundational elements of modern data management environments. AI-driven automation addresses the scalability challenges that manual processes cannot solve while improving the accuracy and reliability of data operations.

Intelligent Pipeline Orchestration

AI-powered systems can automatically create and optimize data pipelines by analyzing patterns in data flow and usage. These systems use pattern recognition to map fields between different data sources and automatically normalize schemas without manual intervention. Machine learning algorithms can predict optimal processing times and resource allocation, ensuring pipelines run efficiently during peak business operations.

Generative AI tools are now capable of unifying siloed datasets by understanding data relationships and suggesting integration approaches that humans might overlook. This capability significantly reduces the time required to establish data connections in fresh environments while maintaining high quality standards.
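Automated field mapping is often framed as an ML problem, but a simple name-similarity baseline shows the idea. This sketch swaps in plain string similarity from Python's standard `difflib` module rather than a learned model: it normalizes field names, scores every source/target pair, and greedily accepts the best pairs above an assumed threshold of 0.6. Fields below the threshold are returned for human review instead of being guessed.

```python
import difflib
import re
from typing import Dict, List, Tuple


def normalize(name: str) -> str:
    """Lower-case and drop separators so 'CustomerID' and 'customer_id' compare equal."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def propose_mapping(source_fields: List[str], target_fields: List[str],
                    threshold: float = 0.6) -> Tuple[Dict[str, Tuple[str, float]], List[str]]:
    scored = []
    for s in source_fields:
        for t in target_fields:
            score = difflib.SequenceMatcher(None, normalize(s), normalize(t)).ratio()
            scored.append((score, s, t))
    scored.sort(key=lambda item: item[0], reverse=True)  # best candidates first

    mapping: Dict[str, Tuple[str, float]] = {}
    used_targets = set()
    for score, s, t in scored:
        if score < threshold:
            break  # remaining pairs are too weak to accept automatically
        if s in mapping or t in used_targets:
            continue  # each field is mapped at most once
        mapping[s] = (t, round(score, 2))
        used_targets.add(t)
    unmapped = [s for s in source_fields if s not in mapping]
    return mapping, unmapped


mapping, unmapped = propose_mapping(
    ["CustomerID", "E-mail", "first_name", "Zip Code", "created"],
    ["customer_id", "email", "first_name", "postal_code", "created_at"],
)
print(mapping)
print("needs review:", unmapped)
```

Here `Zip Code` is left for review because it shares few characters with `postal_code`; recognizing that kind of semantic match is where synonym lists or learned models add value.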

Automated Data Quality Assurance

Modern AI systems provide continuous monitoring of data quality through anomaly detection algorithms that learn normal patterns and flag deviations in real time. These systems can automatically classify data assets, enrich metadata, and maintain data lineage without human intervention.

Machine learning models enable predictive insights that anticipate data quality issues before they impact business operations. For example, algorithms can analyze historical patterns to predict when data sources might experience quality degradation and proactively implement corrective measures.
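A basic version of this kind of monitoring can be built with a rolling statistical baseline instead of a trained model. The sketch below assumes hypothetical daily row counts for one table and flags any day whose count is more than three standard deviations away from the previous seven days. It detects problems as they appear; predicting degradation ahead of time would require a forecasting model on top of it.

```python
import statistics
from typing import List, Tuple


def detect_anomalies(values: List[float], window: int = 7,
                     z_threshold: float = 3.0) -> List[Tuple[int, float, float]]:
    """Return (index, value, z-score) for points that deviate strongly from the trailing window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            continue  # a perfectly flat history gives no scale to compare against
        z = (values[i] - mean) / stdev
        if abs(z) > z_threshold:
            anomalies.append((i, values[i], round(z, 1)))
    return anomalies


# Hypothetical daily row counts for one table; day 8 looks like a partial load
daily_rows = [10_120, 10_340, 9_980, 10_205, 10_410, 10_090, 10_300, 10_260, 2_150, 10_150]
anomalies = detect_anomalies(daily_rows)
print(anomalies)
```

Note that the flagged day stays in the window for the following days and widens the baseline; a production version might exclude flagged points from future baselines.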

Adaptive Security and Governance

AI-driven security systems implement behavioral anomaly detection that identifies potential insider threats by monitoring unusual patterns in data access and usage. These systems learn normal user behaviors and automatically flag suspicious activities that could indicate security breaches or policy violations.
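Behavioral anomaly detection can start from simple per-user baselines. This sketch assumes hypothetical daily counts of rows each user has read and flags a user when today's volume exceeds their historical median by more than five median absolute deviations (a robust alternative to the standard deviation). Users with no history are flagged for review. It only looks at volume, whereas production systems usually combine several signals, such as time of day, location, and the sensitivity of the data accessed.

```python
import statistics
from typing import Dict, List, Tuple


def flag_unusual_access(history: Dict[str, List[int]], today: Dict[str, int],
                        k: float = 5.0, min_mad: float = 1.0) -> List[Tuple[str, str]]:
    flagged = []
    for user, count in today.items():
        past = history.get(user)
        if not past:
            flagged.append((user, "no baseline"))  # unknown principal: route to review
            continue
        median = statistics.median(past)
        mad = statistics.median([abs(x - median) for x in past])
        mad = max(mad, min_mad)  # avoid dividing by zero for perfectly regular users
        score = (count - median) / mad
        if score > k:  # one-sided: only unusually high volumes are flagged
            flagged.append((user, f"read {count} rows vs. typical {median}"))
    return flagged


# Hypothetical rows read per day over the last five days
history = {"ana": [120, 95, 130, 110, 105], "bo": [2000, 2200, 1900, 2100, 2050]}
today = {"ana": 48_000, "bo": 2150, "svc_new": 10}
flagged = flag_unusual_access(history, today)
print(flagged)
```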

Automated governance workflows use machine learning to enforce policy compliance in real-time, reducing the manual overhead associated with regulatory compliance while ensuring consistent application of governance rules across distributed data environments. This approach enables organizations to maintain strict security standards while supporting the self-service capabilities that business teams require for agility.

What Are the Key Challenges in Implementing Enterprise Data Management Fresh Environment?

Enterprise data management helps you overcome the limitations of legacy data environments. However, you may encounter some challenges while managing data in a fresh environment. Some of these challenges are as follows:

- Data Migration Challenges: Migrating existing datasets from an older environment to a fresh one can be challenging, as their format may not be compatible with the new ecosystem. In addition to this data, you also need to collect newly generated data or replicate updated data records, which can be a laborious process. Modern approaches like change data capture and automated schema mapping can help address these challenges by providing real-time synchronization and format translation capabilities.

- Scalability Issues: It can be complex to create a data environment that accommodates growing data volumes as your business expands. A poorly scalable data environment can delay the generation of business insights, affecting overall operational performance. Cloud-native architectures with auto-scaling capabilities and distributed processing frameworks can help address these concerns by automatically adjusting resources based on demand.

- Data Security Concerns: Implementing modern security mechanisms and complying with data regulation frameworks can be challenging in a newly deployed environment. If these are not implemented meticulously, the result can be cyberattacks and data breaches that erode customer trust. Zero-trust security models and encryption at rest and in transit provide comprehensive protection, while automated compliance monitoring helps maintain regulatory adherence.

- Integration Complexity: Managing integrations across hybrid and multi-cloud environments introduces additional complexity in maintaining consistent governance and security policies. Organizations must balance the flexibility of distributed architectures with the need for centralized oversight and control.

- Skills and Training Gaps: Fresh environments often require new technical skills and approaches that existing teams may not possess. Organizations need to invest in comprehensive training programs and potentially hire specialists to effectively manage modern data architectures and AI-driven tools.

Build a Robust Enterprise Data Management System with Airbyte

Airbyte is a comprehensive data integration platform that transforms how organizations approach managing enterprise data by providing flexible, scalable solutions that eliminate traditional trade-offs between cost, functionality, and control.

Key features

- Extensive Connector Library – 600+ pre-built connectors for seamless data synchronization across databases, APIs, SaaS applications, and cloud platforms, eliminating custom integration development overhead.

- Connector Development Kit – Build custom connectors in under 30 minutes using no-code, low-code, or full development approaches that match your technical requirements and expertise levels.

- AI-Powered Development Tools – Leverage artificial intelligence assistance for connector creation, including automatic API analysis, configuration generation, and optimization recommendations that accelerate integration development.

- Change Data Capture – Real-time CDC capabilities for incremental replication that ensure data freshness while minimizing system impact and network utilization.

- Flexible Deployment Options – Choose from cloud-managed services, self-hosted enterprise solutions, or hybrid architectures that meet your security, compliance, and operational requirements.

- Mixed Data Type Support – Handle both structured and unstructured data within single connections, enabling comprehensive AI and machine learning workflows that combine diverse information sources.

- Advanced Security and Governance – Enterprise-grade encryption, role-based access controls, audit logging, and compliance support for GDPR, HIPAA, and industry-specific requirements.

- Capacity-Based Pricing – Predictable cost structure based on infrastructure needs rather than data volumes, enabling cost-effective scaling and experimentation with new data sources.

Enterprise-Grade Capabilities

Airbyte's Self-Managed Enterprise edition provides sophisticated capabilities designed for large-scale deployments including multitenancy support, advanced monitoring and alerting, and specialized enterprise connectors optimized for high-volume database replication. The platform processes over 2 petabytes of data daily across customer deployments while maintaining high availability and disaster recovery capabilities.

The platform's open-source foundation ensures transparency and eliminates vendor lock-in while providing the flexibility to customize integrations according to specific business requirements. Organizations maintain complete control over their data sovereignty and can deploy Airbyte across cloud, hybrid, or on-premises environments without compromising functionality or performance.

AI and Machine Learning Integration

Airbyte provides native support for AI workflows including automatic data chunking, vector database integration, and direct connectivity to popular AI frameworks like LangChain and LlamaIndex. The platform enables organizations to build comprehensive AI data pipelines that combine structured and unstructured information sources, supporting retrieval-augmented generation systems and other advanced analytics applications.
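Chunking splits long documents into overlapping pieces before they are embedded and loaded into a vector database. The sketch below is a hypothetical character-based splitter, not Airbyte's implementation: `chunk_size`, `overlap`, and `source_id` are assumed parameters, and each chunk keeps its offsets so retrieved passages can be traced back to the source document. Many pipelines chunk by tokens or sentences instead of characters.

```python
from typing import Dict, List


def chunk_text(text: str, source_id: str, chunk_size: int = 200, overlap: int = 40) -> List[Dict]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    chunks: List[Dict] = []
    step = chunk_size - overlap
    start = 0
    while start < len(text):
        end = min(start + chunk_size, len(text))
        chunks.append({
            "source_id": source_id,  # lets a retrieved chunk be traced to its document
            "chunk_id": len(chunks),
            "start": start,
            "end": end,
            "text": text[start:end],
        })
        if end == len(text):
            break                    # the last window reached the end of the document
        start += step
    return chunks


document = "".join(str(i % 10) for i in range(500))  # stand-in for a 500-character document
chunks = chunk_text(document, source_id="doc-1")
for chunk in chunks:
    print(chunk["chunk_id"], chunk["start"], chunk["end"])
```

The overlap keeps sentences that straddle a boundary retrievable from at least one chunk, at the cost of storing some text twice.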

PyAirbyte, the platform's Python library, enables data scientists and machine learning engineers to incorporate Airbyte's connector ecosystem directly into their development workflows, providing programmatic access to data extraction capabilities that accelerate AI initiative development and deployment.

Frequently Asked Questions

What is the difference between a fresh data environment and traditional data management systems?

Fresh environments emphasize cloud-native architectures, real-time processing, AI integration, and data democratization, unlike rigid, centralized traditional systems. Traditional systems often require significant manual intervention and create bottlenecks that prevent business agility, while fresh environments enable self-service access with automated governance.

How long does it typically take to implement enterprise data management in a fresh environment?

Timelines vary, but cloud-native and automated approaches often reduce deployment from months to weeks, depending on data-migration complexity and team readiness. Organizations with simpler legacy environments and dedicated implementation teams can often achieve basic functionality within 4-6 weeks, while complex enterprise migrations may require 3-6 months for full deployment.

What are the key security considerations for fresh data environments?

Zero-trust architecture, end-to-end encryption, behavioral anomaly detection, automated compliance monitoring, and adherence to data-sovereignty requirements are essential. Fresh environments must also address security across multiple cloud providers and hybrid deployments, requiring consistent policy enforcement regardless of data location or processing environment.

What role does AI play in maintaining data quality in fresh environments?

AI delivers continuous quality monitoring, automatic metadata enrichment, predictive issue detection, and corrective suggestions, reducing manual oversight. These systems learn from historical patterns to identify anomalies, classify data assets automatically, and recommend improvements that maintain high quality standards without constant human intervention.

How do fresh data environments support regulatory compliance?

Fresh environments embed compliance capabilities into the architecture through automated policy enforcement, real-time monitoring, and comprehensive audit trails. This approach ensures consistent compliance across all data touchpoints while reducing the manual overhead typically associated with regulatory adherence.

Conclusion

To fully utilize enterprise data for business performance and revenue generation, setting up a fresh environment is transformative. By adopting AI-driven automation, cloud-native architectures, and advanced governance frameworks, organizations turn data infrastructure from a cost center into a competitive advantage. The key to success lies in embracing data democratization while maintaining security and governance. With proper planning, modern tools, and comprehensive training, enterprises can build fresh data environments that empower business teams and ensure data quality, security, and compliance.
