OLTP vs OLAP : Unveiling Crucial Data Processing Contrasts

✨ AI Generated Summary

Modern data architectures integrate OLTP and OLAP through hybrid HTAP systems, enabling real-time transactional processing alongside complex analytics with far less data duplication and latency. Key points:

- OLTP focuses on fast, reliable, ACID-compliant transactions for operational workloads.

- OLAP supports complex, multidimensional queries for deep business intelligence and analytics.

- HTAP platforms unify these workloads, reducing infrastructure costs, improving scalability, and enabling instant insights.

- Real-time OLAP and vector databases enhance analytics speed and AI-driven applications.

- Modern integration tools like Airbyte automate data synchronization, ensuring seamless OLTP-OLAP interoperability.

Data teams can no longer rely on outdated ETL platforms that drain engineering resources or fragile custom integrations that fail under load. The real challenge lies in bridging OLTP, which powers real-time transactional systems like banking and e-commerce, with OLAP, which enables deep analysis across large datasets for business intelligence.

Modern enterprises increasingly combine both into hybrid architectures or HTAP systems, giving them real-time visibility into transactions while also supporting complex analytics on historical data. This convergence eliminates costly bottlenecks, improves decision-making, and creates resilient data infrastructure that adapts to both immediate operational demands and long-term strategic needs.

In this article, we compare OLTP and OLAP, outline their defining characteristics, and explain how hybrid systems are reshaping modern data operations.

What Is OLTP and How Does It Power Real-Time Operations?

Online Transaction Processing (OLTP) handles large volumes of simple, concurrent database transactions that power day-to-day operations like bank transfers, online purchases, and patient admissions. Its focus is on speed, reliability, and absolute data integrity, ensuring every transaction fully succeeds or fails without partial execution. Modern OLTP systems leverage in-memory processing, cloud-native scaling, and distributed SQL architectures to achieve very low latency (sub-millisecond for in-memory operations) and to sustain hundreds of thousands of transactions per second or more.

Examples include Microsoft SQL Server with memory-optimized tables, Amazon Aurora's serverless scaling, and CockroachDB's globally distributed architecture. By combining normalized storage, backup strategies, and hardware-level encryption, OLTP remains the backbone of mission-critical systems in finance, healthcare, and beyond.

Key Features

- Real-time processing: OLTP systems process transactions in real time, ensuring data is always current and enabling immediate business operations.

- Concurrent processing: They must handle many transactions, often made by multiple users simultaneously, with modern systems supporting hundreds of thousands of transactions per second.

- Data consistency: OLTP solutions ensure accuracy through validation, concurrency control, and transaction management, with advanced systems implementing optimized locking mechanisms to improve concurrency while maintaining isolation guarantees.

- ACID properties: OLTP systems adhere to the ACID (Atomicity, Consistency, Isolation, Durability) properties to maintain data integrity across distributed environments.

- Simple transactions: OLTP transactions are typically simple and short-lived, involving small amounts of data, allowing for optimized performance and resource utilization.
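The atomicity property above can be illustrated with a small sketch using Python's built-in `sqlite3` module. The `accounts` table, the `CHECK` constraint, and the helper names (`make_bank`, `transfer`, `balances`) are assumptions made for this example and do not come from any particular OLTP product. The credit is applied first on purpose, so that a failed debit leaves a partial change for the rollback to undo.

```python
import sqlite3


def make_bank() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        "id INTEGER PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move `amount` from src to dst as one transaction; True if committed."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            # An overdraft violates the CHECK constraint and raises here,
            # which also undoes the credit applied just above.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
    except sqlite3.IntegrityError:
        return False
    return True


def balances(conn: sqlite3.Connection) -> dict:
    """Return {account_id: balance} for inspection."""
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
```

Calling `transfer(conn, 1, 2, 500)` on the sample data returns `False` and leaves both balances unchanged, which is the "fully succeeds or fails" behavior described earlier.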

What Is OLAP and How Does It Enable Advanced Analytics?

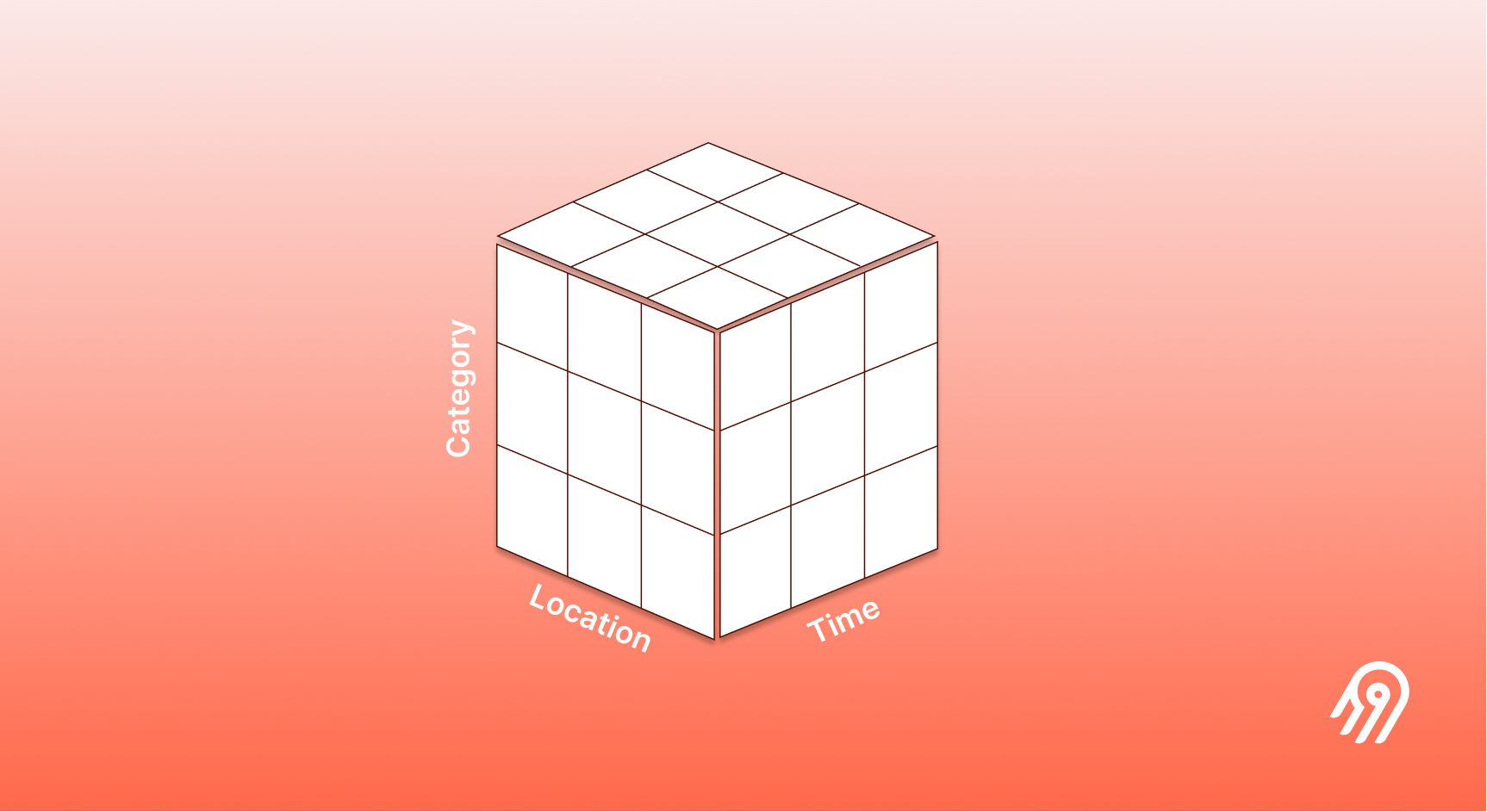

Online Analytical Processing (OLAP) is a data-processing system used to analyze large amounts of data from various perspectives, transforming raw transactional data into analytical insights through multidimensional aggregation. OLAP software is used in business intelligence and data analytics to support complex reporting, analysis, and decision-making processes that require sophisticated data exploration capabilities.

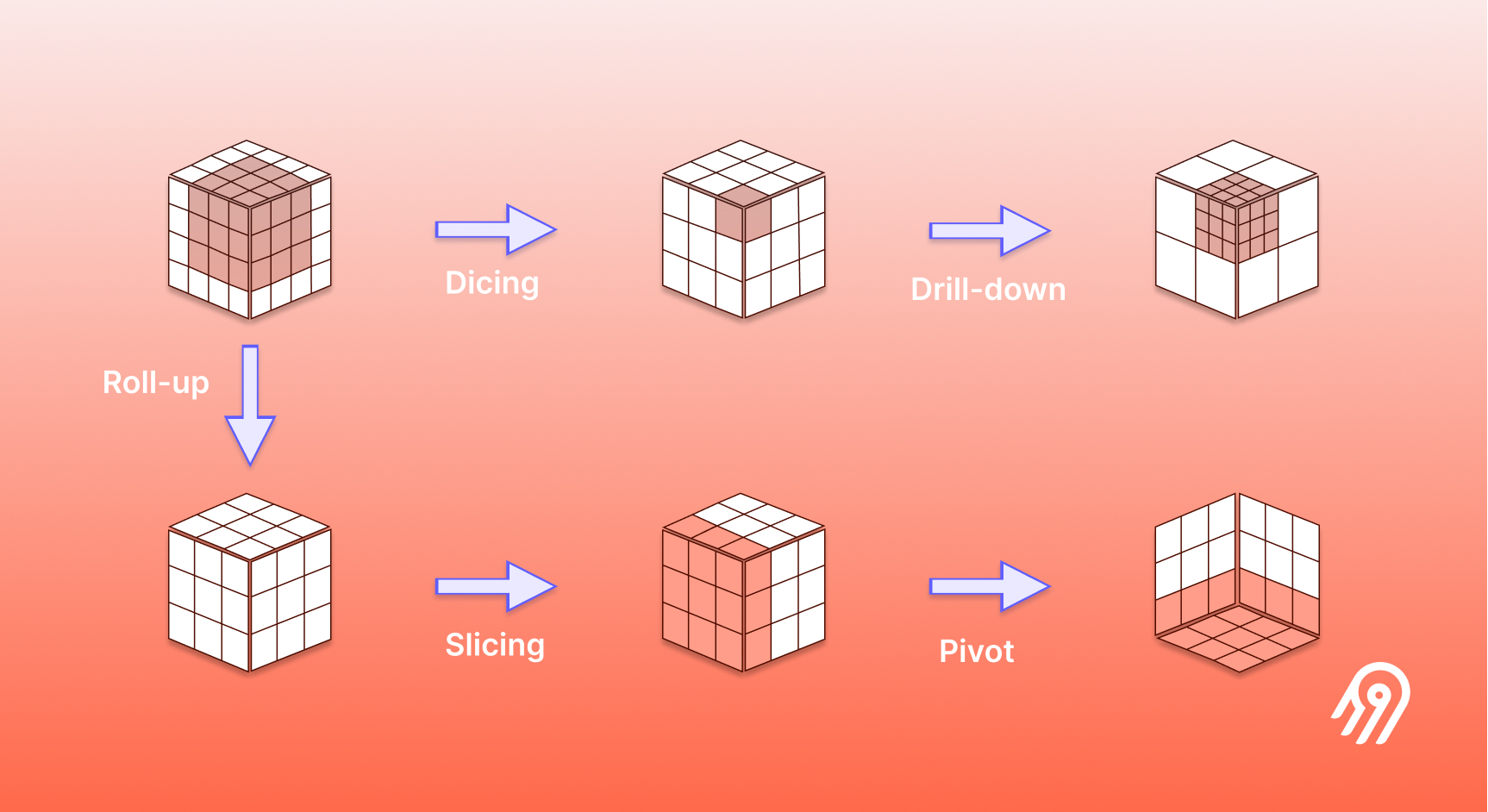

In practice, OLAP powers interactive exploration of large datasets, enabling operations like pivoting, drill-down, and slice-and-dice for advanced analytics and visualization. Modern cloud-native OLAP systems such as ClickHouse, StarRocks, and Apache Doris can achieve sub-second query latency on very large datasets by combining vectorized execution, decoupled storage and compute, real-time indexing, and intelligent caching.

Optimized for read-heavy workloads, they let analysts run complex queries efficiently, scale resources independently, and query cloud data lakes and warehouses directly through open formats like Apache Iceberg and Delta Lake, turning massive raw data into actionable insights at speed and scale.

Key Features

- Multidimensional data model: OLAP systems use an OLAP cube to represent data in multiple dimensions, enabling sophisticated hierarchical analysis and drill-down capabilities across complex business metrics.

- Complex queries: OLAP software delivers fast query performance even on large datasets through techniques like vectorized execution, parallel processing, and intelligent partial aggregation strategies.

- Data aggregation: Systems pre-calculate and store summary data for faster retrieval, with cost-based materialization automatically prioritizing high-impact dimensions and frequently accessed metrics.

- Analytical operations: Drill-down, slice-and-dice, pivot, and other advanced functions enable dynamic exploration of data patterns and trends with interactive response times.

- High data volume: Advanced caching, indexing, and partitioning optimize performance on massive datasets, with modern implementations supporting petabyte-scale analytics through columnar storage formats and compression techniques.
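To make the analytical operations in this list concrete, here is a minimal pure-Python sketch of roll-up, slice, and pivot over a tiny in-memory dataset. The `SALES` rows and the function names are invented for illustration; real OLAP engines implement these operations over columnar storage with far more machinery.

```python
from collections import defaultdict

# Hypothetical sample data: one row per sale.
SALES = [
    {"region": "EU", "product": "A", "quarter": "Q1", "amount": 100},
    {"region": "EU", "product": "B", "quarter": "Q1", "amount": 50},
    {"region": "US", "product": "A", "quarter": "Q1", "amount": 70},
    {"region": "EU", "product": "A", "quarter": "Q2", "amount": 30},
    {"region": "US", "product": "B", "quarter": "Q2", "amount": 20},
]


def roll_up(rows, dims):
    """Aggregate `amount` over the given dimensions (coarser dims = roll-up)."""
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[d] for d in dims)] += row["amount"]
    return dict(totals)


def slice_rows(rows, dim, value):
    """Slice: fix one dimension to a single value."""
    return [row for row in rows if row[dim] == value]


def pivot(rows, row_dim, col_dim):
    """Pivot: totals laid out as {row_value: {col_value: total}}."""
    table = defaultdict(dict)
    for (row_value, col_value), total in roll_up(rows, [row_dim, col_dim]).items():
        table[row_value][col_value] = total
    return dict(table)
```

Drilling down is the reverse of rolling up: calling `roll_up(SALES, ["region", "product"])` instead of `roll_up(SALES, ["region"])` breaks each regional total into its per-product parts.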

What Are the Key Differences Between OLAP and OLTP?

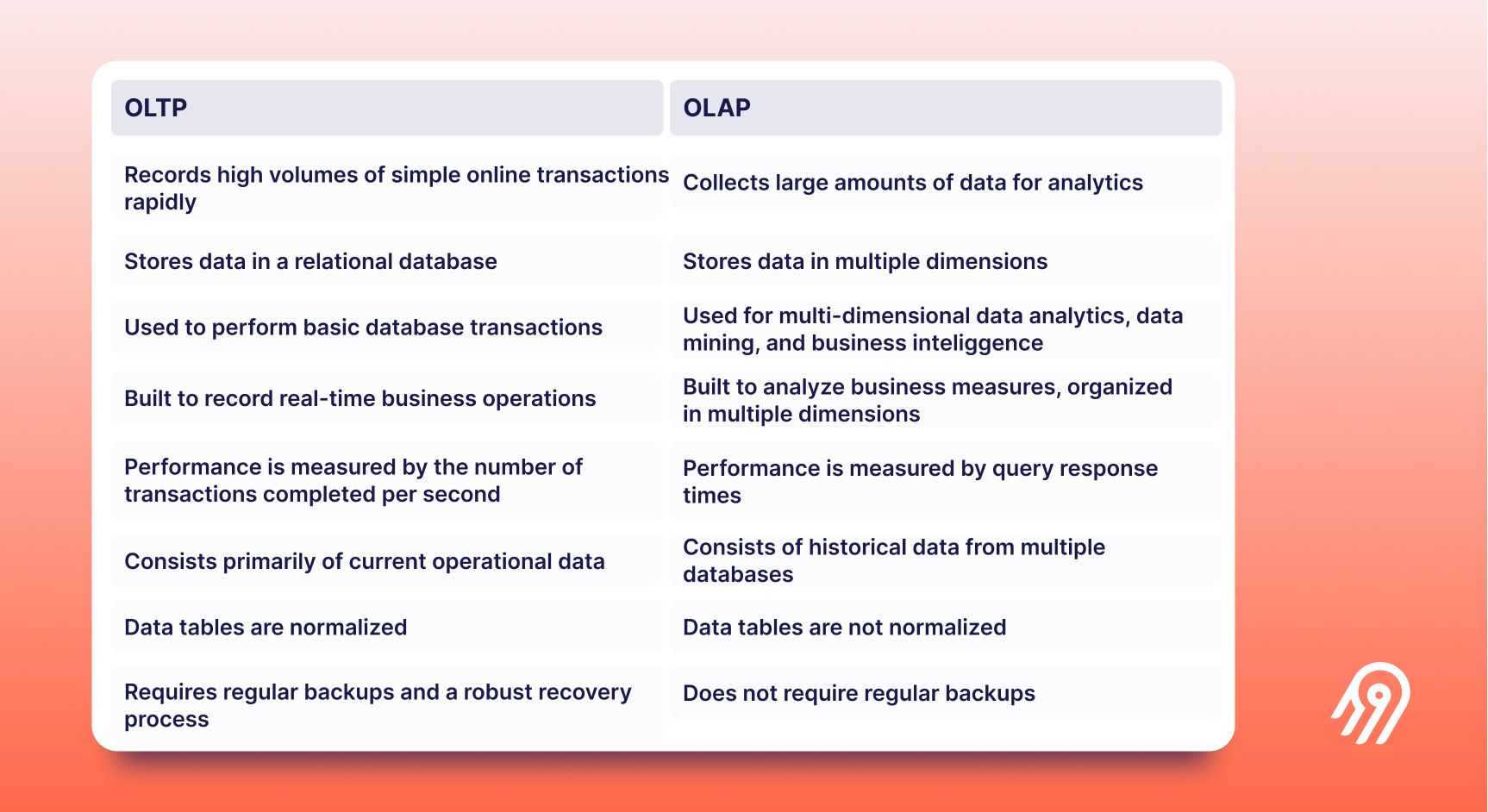

Main difference: OLAP is optimized for complex queries and data analysis on large datasets, while OLTP is designed for fast, real-time transactional operations and data integrity.

1. Data Structure and Schema Design

- OLTP: Uses a normalized data structure optimized for transaction processing, with third normal form reducing data redundancy and ensuring referential integrity across related tables.

- OLAP: Uses dimensional models optimized for analytical queries, such as the denormalized star schema or the snowflake schema (which partially normalizes its dimensions), with fact tables connected to dimension tables that enable rapid aggregation and multi-dimensional analysis.
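A star schema can be sketched in a few lines with Python's built-in `sqlite3` module. The table and column names below (`fact_sales`, `dim_product`, `dim_date`) and the sample rows are assumptions for illustration only; SQLite is a row store and serves here just to show the shape of the schema and of a typical aggregate query.

```python
import sqlite3


def build_star() -> sqlite3.Connection:
    """Create one fact table surrounded by two dimension tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, quarter TEXT);
        CREATE TABLE fact_sales (
            product_id INTEGER REFERENCES dim_product(product_id),
            date_id INTEGER REFERENCES dim_date(date_id),
            revenue INTEGER
        );
        INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
        INSERT INTO dim_date VALUES (10, 2024, 'Q1'), (11, 2024, 'Q2');
        INSERT INTO fact_sales VALUES (1, 10, 40), (1, 11, 60), (2, 10, 25), (2, 11, 5);
        """
    )
    return conn


def revenue_by_category(conn: sqlite3.Connection) -> list:
    """Join the fact table to one dimension and aggregate the measure."""
    return conn.execute(
        """
        SELECT p.category, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_product AS p USING (product_id)
        GROUP BY p.category
        ORDER BY p.category
        """
    ).fetchall()
```

The fact table holds only keys and measures, so adding a new way to group the data (by quarter, for example) means joining one more dimension rather than restructuring the facts.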

2. Query Types and Complexity

- OLTP: Simple, short-lived CRUD queries with millisecond response times, focusing on individual record operations like INSERT, UPDATE, DELETE, and point lookups.

- OLAP: Complex, aggregated queries involving roll-up, drill-down, slice-and-dice, and pivot operations that process millions of records to generate summary insights and trends.

3. Performance Focus and Optimization

- OLTP: Emphasizes low latency and high concurrency, optimized for handling thousands of simultaneous users performing individual transactions with consistently fast, typically millisecond-level, response times.

- OLAP: Focuses on efficient data retrieval for large-scale analytical workloads, optimized for scanning and aggregating massive datasets with columnar storage and parallel processing capabilities.
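The difference between row-oriented and column-oriented access can be shown with a toy sketch. The `ROWS` data and helper names are hypothetical; the point is only that a point lookup wants a whole record, while an aggregate touches a single column.

```python
# Hypothetical orders table in row-oriented form: one dict per record.
ROWS = [
    {"order_id": 1, "customer": "ann", "amount": 20},
    {"order_id": 2, "customer": "bob", "amount": 35},
    {"order_id": 3, "customer": "ann", "amount": 15},
]


def to_columns(rows):
    """Re-lay the same data in column-oriented form: one list per column."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def point_lookup(rows, order_id):
    """OLTP-style access: fetch one complete record by its key."""
    return next(row for row in rows if row["order_id"] == order_id)


def total_amount(columns):
    """OLAP-style access: scan only the single column the query needs."""
    return sum(columns["amount"])
```

In a real columnar engine the `amount` list would be stored contiguously and compressed, so an aggregate reads far less data than it would from full rows.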

4. Data Processing Methodology

- OLTP: Real-time, ACID-compliant processing of individual transactions as they arrive, ensuring immediate consistency and data integrity for mission-critical business operations.

- OLAP: Batch or pre-aggregated processing focused on insight generation, with increasing real-time capabilities through streaming analytics and continuous materialized view updates.

How Do Modern HTAP Architectures Bridge OLTP and OLAP Workloads?

Hybrid Transactional/Analytical Processing (HTAP) architectures bridge OLTP and OLAP by enabling both transactional integrity and analytical depth within the same platform. Instead of duplicating data across separate systems, HTAP platforms maintain unified data planes that support real-time operations and complex analytics simultaneously.

Unified Processing Models

TiDB pairs TiKV for transactional workloads with TiFlash for analytics, using Raft-based replication to keep both stores consistent so queries can run on fresh data without ETL delays. SingleStore takes a universal storage approach, automatically shifting data between row and column formats based on usage. Google Cloud Spanner demonstrates this convergence by combining global ACID transactions with SQL-based analytics for workloads like fraud detection and payment processing.
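The general idea of keeping a row store and a columnar replica in step through a shared change log can be sketched as follows. This is a deliberately simplified illustration, not how TiDB, SingleStore, or Spanner are implemented: the `HtapStore` class and its methods are hypothetical, and a plain Python list stands in for a consensus-replicated log.

```python
class HtapStore:
    """Toy store: writes hit a row store; analytics read a column replica."""

    def __init__(self):
        self.log = []        # ordered change log shared by both replicas
        self.rows = {}       # row store: key -> record (serves transactions)
        self.columns = {"key": [], "amount": []}  # column replica (serves analytics)
        self.applied = 0     # how many log entries the column replica has applied

    def write(self, key, amount):
        """Transactional path: append to the log and update the row store."""
        self.log.append((key, amount))
        self.rows[key] = {"amount": amount}

    def catch_up(self):
        """Apply any log entries the column replica has not yet seen."""
        for key, amount in self.log[self.applied:]:
            if key in self.columns["key"]:
                position = self.columns["key"].index(key)
                self.columns["amount"][position] = amount
            else:
                self.columns["key"].append(key)
                self.columns["amount"].append(amount)
        self.applied = len(self.log)

    def total(self):
        """Analytical path: catch up first so the scan sees fresh data."""
        self.catch_up()
        return sum(self.columns["amount"])
```

Because the analytical read waits for the replica to reach the end of the log, it sees every committed write without a separate ETL step, at the cost of a short catch-up delay.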

Performance and Scalability Benefits

HTAP architectures reduce latency, cut infrastructure costs, and reduce data movement bottlenecks by processing both workloads in one place. Resource isolation techniques, query scheduling, and dedicated memory pools help keep analytical queries from disrupting mission-critical transactions. By consolidating systems into one HTAP framework, organizations gain both scalability and agility, supporting operational workloads and advanced analytics in real time.

Industry Implementation Examples

- Financial services: Real-time fraud detection systems combine transactional processing with immediate analytical pattern recognition, analyzing spending behaviors and transaction anomalies as payments occur without introducing latency in authorization processes.

- Retail: Point-of-sale systems merge transaction processing with inventory analytics for real-time demand forecasting and dynamic pricing, enabling immediate stock adjustments and promotional responses based on current sales velocity and inventory levels.

- Telecommunications: Network management platforms process call detail records while simultaneously analyzing traffic patterns for capacity planning and quality optimization, enabling proactive network adjustments without separate analytical infrastructure.

What Role Does Real-Time OLAP Play in Modern Analytics?

Real-time OLAP delivers sub-second insights on streaming data, enabling organizations to react instantly to operational events and market changes. Unlike traditional batch analytics, it ingests data continuously through pipelines connected to brokers like Kafka, maintaining incremental indexes and materialized views that update as new data arrives.
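An incrementally maintained view can be sketched in a few lines. The `RunningRevenueView` class, the event shape (`ts` in seconds, `amount`), and the one-minute window are assumptions for this example; in a real deployment the events would arrive from a broker such as Kafka rather than a Python list.

```python
from collections import defaultdict


class RunningRevenueView:
    """Per-minute revenue totals, updated as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(int)

    def apply(self, event):
        """Fold one event into the view instead of recomputing from scratch."""
        minute = event["ts"] // 60  # tumbling one-minute window
        self.totals[minute] += event["amount"]

    def query(self, minute):
        """Read the current total for a window; 0 if no events were seen."""
        return self.totals.get(minute, 0)
```

Each event costs a constant amount of work, so the view stays current no matter how much history has accumulated, which is the property that separates this approach from periodic batch recomputation.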

Streaming Analytics Architecture

Systems such as Apache Druid, ClickHouse, and Pinot process millions of events per second with millisecond query latency, powering dashboards and time-series analytics on fresh data. Engines like RisingWave and Materialize extend OLAP to infinite streams through incremental computation, ensuring analytics remain current without ETL delays.

Performance Innovations

Real-time OLAP achieves speed through vectorized execution, advanced compression, and multi-level indexing optimized for streaming and time-series data. Machine learning–driven index tuning further reduces overhead while adapting to evolving query patterns.

Industry Applications

Financial firms use real-time OLAP for algorithmic trading and risk monitoring, manufacturers for predictive maintenance on sensor data, and e-commerce platforms for dynamic personalization and pricing. These applications highlight how real-time OLAP transforms raw event streams into immediate, actionable intelligence.

How Do Vector Databases Enable Advanced Analytics for AI Workloads?

Vector databases are built for AI applications that require semantic similarity search and high-dimensional data processing, capabilities that traditional OLAP systems struggle to deliver. They store and index vector embeddings from deep learning models, enabling fast, accurate retrieval at scale.

Specialized Architecture for High-Dimensional Data

Platforms like Pinecone and Weaviate use Approximate Nearest Neighbor algorithms such as HNSW to search across billion-scale vector collections efficiently. Techniques like product quantization compress vectors to reduce memory usage, and locality-sensitive hashing narrows the set of candidates to compare, both trading a small amount of accuracy for efficiency. GPU acceleration speeds up index building and query execution. Frameworks like LangChain connect these systems to large language models, powering retrieval-augmented generation that grounds AI outputs in organizational knowledge.
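For intuition, the sketch below performs exact (brute-force) nearest-neighbor search by cosine similarity. It is not HNSW or any other approximate index: it compares the query against every stored vector, which is the baseline that approximate indexes are designed to avoid at scale. The function names and the tiny two-dimensional embeddings are made up for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, vectors, k=2):
    """Return the names of the k stored vectors most similar to `query`."""
    ranked = sorted(
        vectors.items(),
        key=lambda item: cosine(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical embeddings; real ones have hundreds or thousands of dimensions.
EMBEDDINGS = {
    "cat": [1.0, 0.0],
    "kitten": [0.9, 0.1],
    "car": [0.0, 1.0],
}
```

With these vectors, `top_k([1.0, 0.05], EMBEDDINGS)` ranks "cat" and "kitten" ahead of "car". The scan is linear in the number of vectors, which is why large collections rely on approximate indexes instead.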

Performance and Scaling Benefits

With graph-based indexes such as HNSW, search cost grows roughly logarithmically with collection size, which keeps queries interactive even on billion-scale collections. Vector databases also support distributed clustering, multi-tenancy for SaaS deployments, and intelligent caching that keeps frequently queried embeddings in memory to cut latency.

Enterprise Applications

Organizations use vector databases for real-time recommendations, fraud detection, and semantic content search. E-commerce platforms update preference embeddings instantly to personalize shopping sessions, banks analyze transaction embeddings to detect anomalies, and content platforms combine OLAP queries with semantic search to surface related assets by meaning rather than keywords.

How Do Modern Data Integration Platforms Facilitate OLTP-OLAP Interoperability?

Modern platforms like Airbyte automate schema mapping, transformations, and real-time synchronization between OLTP and OLAP systems, removing the bottlenecks and delays of traditional ETL.

Addressing Integration Challenges

Change Data Capture (CDC) streams detect and propagate INSERT, UPDATE, and DELETE events with low latency, keeping analytical systems in sync with transactional sources. Airbyte's CDC connectors support log-based capture for minimal source impact and trigger-based replication for legacy systems. Streaming pipelines built on Kafka, Pulsar, and Flink provide sub-second data movement with exactly-once guarantees, enabling in-flight enrichment, validation, and format conversion. Schema evolution management automatically adapts to structural changes, ensuring downstream systems stay consistent without manual updates.
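The core of applying a CDC stream to an analytical replica can be sketched as below. The event format (`op`, `key`, `row`) is hypothetical and is not the wire format of Airbyte, Debezium, or any specific connector; the sketch only shows why treating inserts and updates as upserts makes replay safe.

```python
def apply_change(replica, event):
    """Apply one change event to a key -> row replica."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        replica[key] = event["row"]  # upsert, so replaying an event is harmless
    elif op == "delete":
        replica.pop(key, None)       # deleting a missing key is a no-op
    else:
        raise ValueError(f"unknown op: {op}")


def replay(replica, events):
    """Apply events in order and return the replica."""
    for event in events:
        apply_change(replica, event)
    return replica
```

Because every operation is idempotent, delivering the same ordered batch twice leaves the replica in the same state, which simplifies recovery after a pipeline restart.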

Connector-Centric Architecture

With 600+ pre-built connectors, Airbyte covers databases, APIs, file systems, and SaaS tools through standardized interfaces, while the Connector Development Kit allows custom builds. Incremental sync strategies reduce bandwidth and cost by transferring only changed data. Real-time connector health monitoring, retries, and detailed logging provide visibility, resilience, and auditability for production pipelines.
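Incremental sync is commonly built around a cursor: the pipeline remembers the highest change marker it has seen and asks only for newer rows next time. The sketch below assumes each source row carries an `updated_at` value that increases on every change; the function name and row shape are illustrative and not Airbyte's API.

```python
def incremental_sync(source_rows, cursor):
    """Return (rows changed after `cursor`, new cursor value)."""
    changed = [row for row in source_rows if row["updated_at"] > cursor]
    # If nothing changed, keep the old cursor so the next run starts from it.
    new_cursor = max((row["updated_at"] for row in changed), default=cursor)
    return changed, new_cursor
```

A first run with `cursor=0` transfers everything; later runs transfer only rows touched since the saved cursor, which is where the bandwidth and cost savings come from.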

Enterprise-Grade Capabilities

Airbyte supports enterprise deployments with role-based access control, PII masking, and data tokenization for compliance. Comprehensive audit logging integrates with SIEM systems for centralized security monitoring. High-availability configurations with automated failover and cross-region disaster recovery ensure continuous operations even during infrastructure disruptions.

What Are the Most Effective Data Governance and Compliance Strategies?

Governance strategies must reflect the unique requirements of OLTP and OLAP while ensuring unified policy enforcement across integrated data architectures.

OLTP Governance: Ensuring Transactional Integrity

OLTP systems prioritize real-time audit trails, row-level security, and precision logging to comply with regulations like SOX and GDPR. Retention policies archive historical transactions without breaking referential integrity, while encryption techniques such as column-level or format-preserving encryption secure sensitive fields like PII without degrading performance.

OLAP Governance: Maintaining Traceability and Accuracy

OLAP governance emphasizes data lineage and metadata management to preserve trust in analytical outputs. Systems track data sources, transformation logic, and definitions while applying automated profiling, anomaly detection, and quality checks across metrics and hierarchies. These controls ensure consistent, verifiable insights even as schemas evolve.

Unified Policy Enforcement

Modern governance platforms like Collibra and Alation centralize rules across OLTP and OLAP environments using policy-as-code. They provide automated discovery, classification, and lineage visualization, enabling organizations to manage sensitive data consistently, trace data flows end-to-end, and quickly assess the impact of upstream changes on downstream analytics.

How Do Disaster Recovery and High Availability Strategies Differ Between Systems?

OLTP and OLAP systems use different approaches to recovery and uptime, shaped by their workloads and tolerance for downtime.

OLTP High Availability Requirements

OLTP systems need near-zero RTO and RPO because even short outages impact revenue and customer trust. Synchronous replication across regions ensures no data loss, while consensus protocols like Raft and Paxos keep systems like CockroachDB and Google Spanner consistent during failures.
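Consensus protocols such as Raft and Paxos rest on majority quorums, and the arithmetic is simple enough to sketch. The helper names below are invented for illustration and ignore everything else these protocols do (leader election, log matching, and so on).

```python
def quorum(cluster_size):
    """Smallest number of replicas that forms a majority."""
    return cluster_size // 2 + 1


def is_committed(acks, cluster_size):
    """A write is durable once a majority has acknowledged it."""
    return acks >= quorum(cluster_size)


def tolerated_failures(cluster_size):
    """Replicas that can be lost while a majority remains reachable."""
    return cluster_size - quorum(cluster_size)
```

A three-node cluster tolerates one failure and a five-node cluster tolerates two, while a four-node cluster still tolerates only one, which is why odd cluster sizes are the usual choice.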

Active-active deployments allow simultaneous writes across regions, using conflict resolution to maintain integrity. Clustering, connection pooling, and retry logic add resilience by redistributing workloads and keeping sessions alive when nodes fail.
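Client-side retry logic is often implemented with exponential backoff. The sketch below is one minimal way to do it; the function name, the choice of `ConnectionError` as the retryable error, and the default delays are assumptions for the example. The `sleep` parameter exists so the delay can be replaced in tests.

```python
import time


def with_retries(operation, attempts=3, base_delay=0.01, sleep=time.sleep):
    """Call `operation`, retrying on ConnectionError with doubling delays."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            sleep(base_delay * 2 ** attempt)
```

Retries like this are only safe when the operation is idempotent or the database can deduplicate it; otherwise a write that succeeded but whose acknowledgement was lost could be applied twice.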

OLAP Recovery Strategies

OLAP workloads tolerate longer recovery times and focus on cost efficiency. Versioned storage formats such as Apache Iceberg and Delta Lake enable point-in-time recovery without continuous replication.
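The idea behind snapshot-based point-in-time recovery can be sketched with a toy versioned table. This is not the Apache Iceberg or Delta Lake API: the `VersionedTable` class is hypothetical, file names stand in for immutable data files, and commits are assumed to arrive in increasing time order.

```python
class VersionedTable:
    """Each commit records an immutable snapshot of the table's data files."""

    def __init__(self):
        self.snapshots = []  # list of (commit_time, frozenset of file names)

    def commit(self, commit_time, added=(), removed=()):
        """Create a new snapshot from the previous one plus the changes."""
        current = self.snapshots[-1][1] if self.snapshots else frozenset()
        self.snapshots.append((commit_time, (current | set(added)) - set(removed)))

    def as_of(self, ts):
        """Return the file set of the latest snapshot at or before `ts`."""
        candidates = [files for t, files in self.snapshots if t <= ts]
        if not candidates:
            raise LookupError("no snapshot at or before the requested time")
        return candidates[-1]
```

Recovery then amounts to pointing readers at an older snapshot. No data is copied, because the files each snapshot references are never modified in place.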

Decoupled compute and storage make it possible to re-provision engines quickly, while cloud OLAP systems rely on durable object storage and auto-scaling for compute redundancy. Incremental backups with deduplication and compression reduce costs while preserving recovery options.

Integrated Recovery for HTAP Architectures

HTAP systems must balance OLTP's strict uptime with OLAP's consistency needs. Recovery plans validate synchronization between row- and column-store replicas to avoid data mismatches.

Testing covers both transactional accuracy and analytical results, with clear priorities for which workloads restore first in hybrid environments.

Which Database Systems Are Popular for OLTP and OLAP Applications?

OLTP Database Systems and Their Modern Capabilities

- PostgreSQL continues to dominate enterprise OLTP deployments through its combination of SQL standards compliance, extensibility, and robust transaction handling capabilities. Recent versions include significant performance improvements for concurrent workloads and built-in logical replication for change data capture scenarios.

- MySQL remains prevalent in web applications and e-commerce platforms, with MySQL 8.0 introducing features like document store capabilities and improved JSON processing that bridge traditional relational functionality with modern application requirements.

- Microsoft SQL Server provides enterprise-grade OLTP capabilities with advanced features including in-memory OLTP processing, columnstore indexes for hybrid workloads, and comprehensive integration with Microsoft's cloud ecosystem through Azure SQL Database and SQL Managed Instance offerings.

- Oracle Database maintains its position in large enterprise environments through advanced features like Real Application Clusters (RAC) for high availability, Autonomous Database capabilities for self-managing deployments, and comprehensive security features required by regulated industries.

- MongoDB represents the NoSQL approach to OLTP processing, providing document-based storage with ACID transaction support, automatic sharding for horizontal scaling, and flexible schema evolution capabilities that support agile application development patterns.

OLAP Database Systems and Analytics Platforms

- ClickHouse has emerged as a leading real-time OLAP engine, providing exceptional performance for time-series analytics and real-time dashboards through its columnar storage architecture and distributed query processing capabilities. Recent versions include S3-native storage integration and user-defined functions for advanced analytics.

- Apache Druid specializes in sub-second queries on streaming data, making it ideal for real-time analytics dashboards and monitoring applications that require immediate visibility into operational metrics and business performance indicators.

- Snowflake revolutionized cloud data warehousing through its multi-cluster, shared data architecture that provides independent scaling of compute and storage resources while maintaining SQL compatibility and supporting diverse analytical workloads.

- Apache Kylin provides OLAP capabilities on big data platforms by pre-calculating aggregations on Hadoop clusters, enabling fast analytical queries on petabyte-scale datasets while integrating with business intelligence tools through standard interfaces.

- StarRocks offers vectorized execution and materialized view capabilities optimized for modern analytical workloads, with particular strength in real-time analytics and integration with data lake architectures through open table formats.

Primary Use Cases and Application Domains

OLTP Applications Across Industries

- E-commerce Transaction Processing: Online retailers utilize OLTP systems for order processing, inventory management, payment authorization, and customer account management, requiring millisecond response times and absolute data consistency to prevent overselling and ensure accurate financial records.

- Banking and Financial Services: Core banking systems rely on OLTP architectures for account transactions, loan processing, fraud detection, and regulatory reporting, where data integrity and audit trails are critical for financial accuracy and compliance requirements.

- Reservation and Booking Systems: Airlines, hotels, and entertainment venues use OLTP systems for inventory management and customer bookings, requiring real-time availability updates and conflict resolution to prevent double-booking scenarios while maintaining customer satisfaction.

- Customer Relationship Management: CRM applications depend on OLTP systems for contact management, sales pipeline tracking, and customer interaction history, enabling sales teams to access current customer information while maintaining data consistency across multiple touchpoints.

OLAP Applications for Strategic Decision Making

- Business Intelligence Dashboards: Executive reporting systems utilize OLAP capabilities to provide multidimensional views of business performance, enabling drill-down analysis from high-level KPIs to detailed operational metrics while maintaining interactive response times.

- Sales and Marketing Analytics: Marketing teams leverage OLAP systems for campaign performance analysis, customer segmentation, and market trend identification, enabling data-driven decisions about promotional strategies and budget allocation across diverse channels and customer demographics.

- Financial Planning and Budgeting: Finance departments utilize OLAP tools for variance analysis, forecasting, and what-if scenario modeling, enabling sophisticated financial planning processes that incorporate multiple dimensions including time periods, organizational units, and product categories.

- Supply Chain Optimization: Manufacturing and retail organizations implement OLAP systems for inventory optimization, demand forecasting, and supplier performance analysis, enabling strategic decisions about procurement, distribution, and capacity planning based on historical trends and predictive models.

Benefits of Hybrid Systems and Integrated Architectures

Modern hybrid architectures that combine OLTP and OLAP capabilities within unified platforms deliver significant operational and strategic advantages over traditional separated systems.

Operational Efficiency and Cost Reduction

- Real-time Analytics Capabilities: Hybrid systems eliminate the latency inherent in traditional ETL processes by enabling analytical queries directly on operational data, reducing time-to-insight from hours or days to seconds while maintaining transactional consistency and data accuracy.

- Faster Data Processing: Integrated architectures remove data movement overhead and transformation delays, enabling businesses to respond more quickly to market conditions, operational issues, and customer requirements while reducing the infrastructure complexity associated with maintaining separate systems.

- Improved Scalability: Cloud-native hybrid systems provide independent scaling of transactional and analytical workloads while sharing common data storage, optimizing resource utilization and costs based on actual demand patterns rather than peak capacity requirements.

- Better Decision-Making: The elimination of data staleness enables real-time operational intelligence, allowing businesses to make decisions based on current rather than historical information while maintaining the analytical depth required for strategic planning.

- Reduced Infrastructure Costs: Consolidating OLTP and OLAP functionality reduces licensing costs, operational overhead, and infrastructure complexity while improving data consistency and eliminating the integration challenges associated with maintaining separate systems.

Conclusion

Modern data processing no longer depends on choosing between OLTP for transactions and OLAP for analytics. Hybrid approaches like HTAP and real-time OLAP bring the two together, and vector databases extend analytics to AI workloads. Platforms such as Airbyte streamline integration by reducing ETL bottlenecks, while governance, security, and recovery strategies ensure resilience. Organizations that embrace these unified architectures gain faster insights, lower costs, and greater agility, turning data infrastructure into a true competitive advantage.

Frequently Asked Questions (FAQs)

What is the main difference between OLTP and OLAP?

OLTP supports real-time transactional operations with strict data integrity, while OLAP is optimized for complex queries and large-scale analytics. OLTP powers day-to-day business processes, and OLAP provides strategic insights through multidimensional analysis.

Why are hybrid systems becoming popular?

Hybrid systems like HTAP remove ETL delays by allowing analytics directly on live transactional data. This improves decision-making, lowers costs, and reduces infrastructure complexity compared to maintaining separate OLTP and OLAP systems.

Can OLTP and OLAP run in the cloud?

Yes. Cloud-native OLTP systems such as Amazon Aurora and CockroachDB deliver high availability and scalability, while OLAP engines like Snowflake, ClickHouse, and StarRocks provide fast, cost-efficient analytics at scale.

How does Airbyte support OLTP–OLAP integration?

Airbyte automates schema mapping, change data capture, and real-time synchronization between operational and analytical systems. Its 600+ connectors and enterprise features simplify interoperability while ensuring compliance and resilience.
