PCI DSS Requirements for Data Integration: A Unified Architecture

✨ AI Generated Summary

PCI DSS v4.0 extends compliance to all systems impacting cardholder data, requiring strict controls on data flows, encryption, segmentation, and continuous monitoring. A hybrid architecture with a cloud control plane for orchestration and customer-controlled data planes for processing minimizes PCI scope and enhances security by enforcing outbound-only connections, centralized logging, and automated vulnerability scanning. Key implementation steps include mapping data flows, enforcing network segmentation, managing encryption keys securely, centralizing access control, and maintaining unified logging to simplify audits and ensure ongoing compliance.

If your organization handles cardholder data (even just a SaaS checkout page), PCI DSS v4.0 applies to you. PCI DSS data integration means designing pipelines that move payment records across cloud and on-premises systems without breaking the standard's 300-plus controls. Every connector, queue, or script becomes a potential attack vector.

Version 4.0 expands scope to any system that "could impact the security of cardholder data," reaching beyond the Cardholder Data Environment (CDE) to include orchestration layers and auxiliary systems.

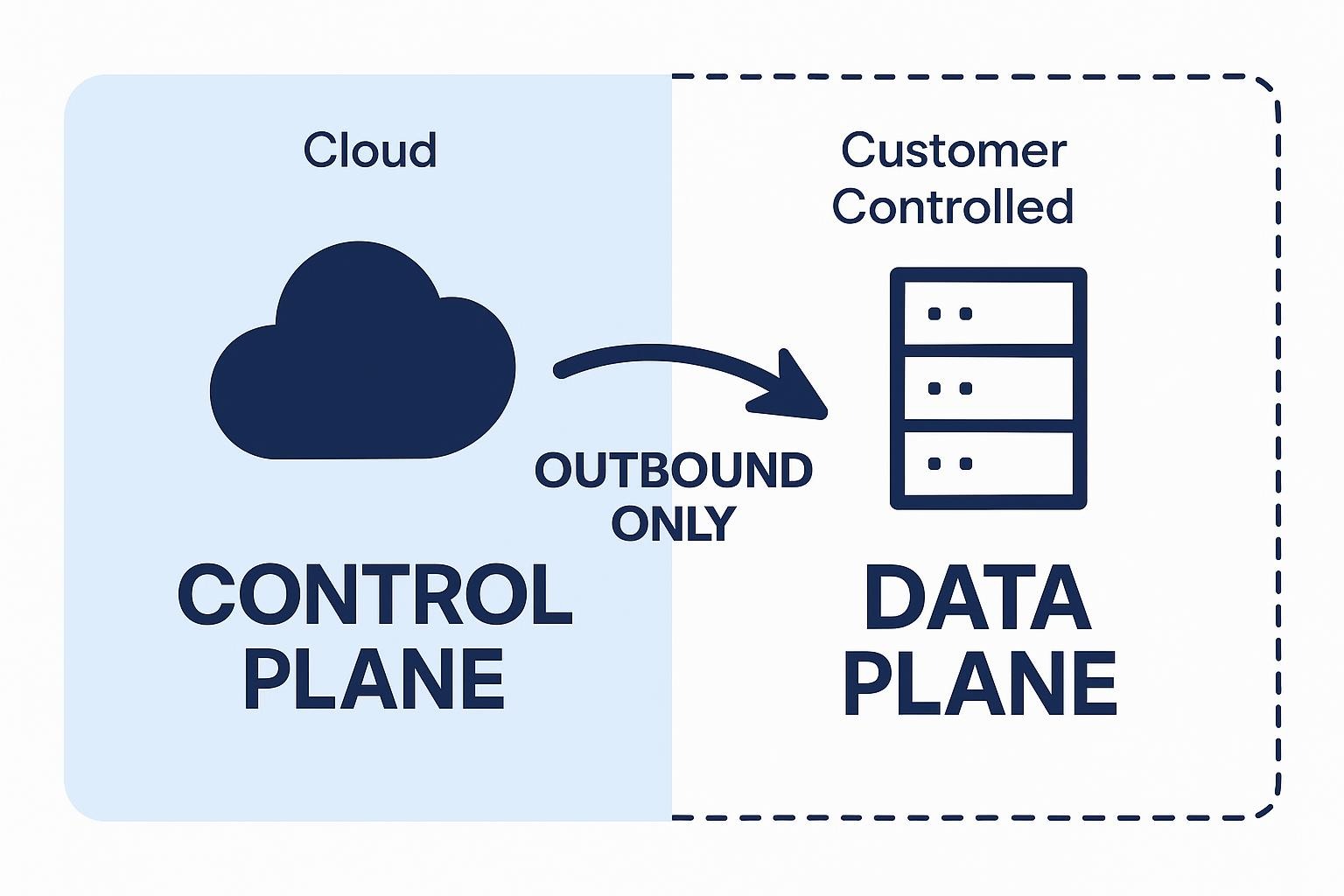

A hybrid architecture with a cloud control plane and customer-controlled data planes keeps compliance scope tight while enabling scale. Your card data stays where you control it; orchestration happens in the cloud.

What Are the Core PCI DSS v4.0 Requirements Relevant to Data Integration?

The 12 PCI DSS v4.0 requirements form a layered defense model: each layer tackles a different dimension of risk (network boundaries, asset inventories, access rights, vulnerability management, and continuous monitoring). Together, they set the baseline that any pipeline moving, storing, or processing cardholder data must meet. The framework is broad, but several controls directly shape how you orchestrate data flows across cloud and on-premises systems.

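Requirement 1.4 controls connections between trusted and untrusted networks and restricts inbound traffic into the CDE, while Requirement 1.2.5 requires every allowed service, protocol, and port to have an approved business need. In practice you enforce both with cloud security groups and tightly scoped VPNs. Requirement 6.4.2 raises the bar starting March 31, 2025: public-facing web applications must be protected by an automated technical solution, typically a web application firewall, that continually detects and prevents web-based attacks such as injection. Periodic manual application reviews no longer satisfy the requirement on their own.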

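Requirement 11.3 adds internal and external vulnerability scans at least once every three months and after significant changes, and Requirement 11.4 calls for penetration testing at least once every 12 months and after significant changes. Combined with encryption (Requirements 3 and 4) and year-long log retention (Requirement 10), these are the core safeguards auditors examine.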
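
To keep testing cadence from slipping, some teams track due dates in code. The sketch below assumes quarterly scans approximated as 90 days and annual penetration tests as 365 days, and treats any significant change after the last run as making a new run due immediately. These are simplifying assumptions, not the standard's exact wording.

```python
from datetime import date, timedelta
from typing import Optional

# Assumed intervals (approximations of "quarterly" and "annual").
SCAN_INTERVAL = timedelta(days=90)
PENTEST_INTERVAL = timedelta(days=365)


def next_due(last_run: date, interval: timedelta,
             last_significant_change: Optional[date] = None) -> date:
    """Return the date the next scan or test is due.

    A significant change recorded after the last run makes a new run
    due on the date of that change.
    """
    if last_significant_change is not None and last_significant_change > last_run:
        return last_significant_change
    return last_run + interval


def is_overdue(last_run: date, interval: timedelta, today: date,
               last_significant_change: Optional[date] = None) -> bool:
    """True once today is past the due date."""
    return today > next_due(last_run, interval, last_significant_change)
```

For example, `next_due(date(2025, 1, 1), SCAN_INTERVAL)` returns `date(2025, 4, 1)`.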

Master these controls early and every downstream design choice gets easier. Once your pipelines respect network boundaries, enforce encryption by default, and surface actionable audit logs, you're speaking your QSA's language.

What Does a PCI DSS-Compliant Data Integration Architecture Look Like?

You can meet compliance requirements without sacrificing cloud elasticity by adopting a hybrid integration model. This approach keeps cardholder data inside your infrastructure while letting a cloud control plane handle orchestration and upgrades.

A compliant deployment starts with two planes. The control plane lives in the cloud, storing only metadata and pipeline definitions. Your data planes run on-premises or in your VPC, process records locally, and initiate outbound-only HTTPS to the control plane. Because nothing outside can reach in, you avoid the inbound firewall exceptions that Requirement 1.4 warns against and shrink the CDE to the data plane itself.
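
The outbound-only pattern can be sketched as a data-plane loop that pulls job definitions and pushes back status. In this minimal sketch the `transport` function, the `"poll"`/`"report"` paths, and the job fields are all hypothetical; a real platform defines its own protocol. The point is the direction of traffic and what crosses the boundary: only metadata leaves the data plane.

```python
from typing import Callable, Dict, List

# Hypothetical transport: performs an outbound HTTPS request to the
# control plane (path, JSON body) and returns the decoded JSON response.
# The data plane never listens on a port; every exchange starts here.
Transport = Callable[[str, dict], dict]


def run_once(transport: Transport,
             run_job: Callable[[dict], Dict[str, int]]) -> List[dict]:
    """Pull pending job definitions, run them locally, report status.

    Only metadata (job id, state, counters, error type) goes back to the
    control plane; extracted records never leave the data plane.
    """
    jobs = transport("poll", {}).get("jobs", [])  # outbound request
    reports = []
    for job in jobs:
        try:
            # Extraction, transformation, and loading all happen locally.
            stats = run_job(job)
            report = {"job_id": job["id"], "state": "succeeded", **stats}
        except Exception as exc:
            # Send only the exception type: messages may echo record contents.
            report = {"job_id": job["id"], "state": "failed",
                      "error": type(exc).__name__}
        transport("report", report)  # outbound again, status metadata only
        reports.append(report)
    return reports
```

Reporting only the exception type is a deliberate choice: error strings from drivers sometimes include row values, which could leak PAN into a system you meant to keep out of scope.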

To make this architecture audit-ready, you need five tightly defined building blocks:

- Segregated Control Plane: Cloud service that schedules and monitors pipelines without touching card data

- Customer-Controlled Data Planes: Workers that extract, transform, and load data inside your trusted zone

- Outbound-Only Network Flow: Eliminates inbound openings and simplifies segmentation evidence for assessors

- External Secrets Management: Credentials live in your vault, not in pipeline configs, supporting Requirements 3 (key protection), 7, and 8

- Centralized Logging and Monitoring: Every job, query, and access event streams to your SIEM for one-year retention per Requirement 10

How to Implement PCI DSS Data Integration Across Cloud and On-Prem Systems?

Designing pipelines that move card data between your data center and the cloud starts with a clear, verifiable plan. Each step below maps directly to v4.0 controls so you can show auditors not just intent but proof.

1. Map And Classify Data Flows

Start by drawing every path your payment data travels, from the POS terminal that captures a PAN to the analytics store that aggregates settlements. Document systems, endpoints, data categories, and owners in a detailed inventory that you update regularly.

The v4.0 guidance stresses that even components that could "impact the security of cardholder data" fall in scope, so include DNS servers, load balancers, and container hosts as well. Pair the inventory with a data-flow diagram that auditors can read against the control list to quickly confirm nothing is missing.
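
An inventory is easier to keep current when scoping is computed rather than judged by hand each time. The sketch below uses hypothetical field names and a deliberately simplified rule: a component is in scope if it handles cardholder data, is connected to the CDE, or could affect its security. Your QSA's interpretation takes precedence.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Component:
    name: str
    kind: str                   # e.g. "pos-terminal", "dns", "load-balancer"
    owner: str
    handles_chd: bool           # stores, processes, or transmits cardholder data
    connected_to_cde: bool      # has network connectivity to CDE systems
    affects_cde_security: bool  # e.g. DNS, IAM, orchestration, logging


def in_scope(c: Component) -> bool:
    # Simplified scoping rule (an assumption, not the standard's full text).
    return c.handles_chd or c.connected_to_cde or c.affects_cde_security


def scope_report(inventory: List[Component]) -> Dict[str, List[str]]:
    """Split the inventory into in-scope and out-of-scope component names."""
    return {
        "in_scope": sorted(c.name for c in inventory if in_scope(c)),
        "out_of_scope": sorted(c.name for c in inventory if not in_scope(c)),
    }
```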

2. Apply Network Segmentation And Zoning

Requirement 1.4 expects a hard edge between trusted and untrusted networks. Place card-processing workloads in their own VPC or subnet, deny inbound traffic by default, and allow only connections with a documented business need.

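Cloud security groups make this explicit. With no inbound rules and a single egress rule allowing HTTPS (TCP 443) to approved destinations, your data plane can reach external APIs and the control plane without opening any inbound holes.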
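
Segmentation evidence is stronger when rules are checked automatically. This sketch audits a security-group description shaped loosely like the output of AWS `DescribeSecurityGroups` (`IpPermissions` for ingress, `IpPermissionsEgress` for egress). The approved CIDR set is a hypothetical placeholder from a documentation range, and rules that use prefix lists, IPv6 ranges, or security-group references are not checked here.

```python
from typing import List

# Hypothetical allowlist: control plane plus approved API endpoints.
APPROVED_EGRESS_CIDRS = {"203.0.113.0/24"}


def audit_security_group(sg: dict) -> List[str]:
    """Return findings for a data-plane security group; empty means clean."""
    findings = []
    # Outbound-only design: any inbound rule at all is a finding.
    for rule in sg.get("IpPermissions", []):
        findings.append(f"inbound rule present: {rule.get('IpProtocol')} "
                        f"{rule.get('FromPort')}-{rule.get('ToPort')}")
    for rule in sg.get("IpPermissionsEgress", []):
        is_https = (rule.get("IpProtocol") == "tcp"
                    and rule.get("FromPort") == 443
                    and rule.get("ToPort") == 443)
        cidrs = {r["CidrIp"] for r in rule.get("IpRanges", [])}
        if not is_https:
            findings.append(f"egress not limited to TCP 443: {rule.get('IpProtocol')}")
        elif not cidrs <= APPROVED_EGRESS_CIDRS:
            unapproved = sorted(cidrs - APPROVED_EGRESS_CIDRS)
            findings.append(f"egress to unapproved destinations: {unapproved}")
    return findings
```

Note that the AWS default egress rule (all protocols to `0.0.0.0/0`) is flagged, which is usually the first thing to tighten.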

3. Enforce Encryption And Key Management

Requirements 3.5 (rendering stored PAN unreadable) and 4.2 (protecting PAN in transit) require strong cryptography throughout. Encrypt stored data with industry-standard strong algorithms such as AES, and enforce secure protocols such as TLS 1.2 or higher for all connectors. Never store keys in code or pipeline configs; an HSM or managed KMS is the most robust way to protect and manage them.
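
For the in-transit half, Python's standard `ssl` module is enough to pin a connector's floor at TLS 1.2. This is a minimal client-side sketch; at-rest encryption would normally go through your KMS or a vetted cryptography library and is not shown.

```python
import ssl


def connector_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for an outbound connector."""
    # create_default_context() enables certificate verification and
    # hostname checking against the system trust store.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse to negotiate anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context can be passed to standard clients, for example `urllib.request.urlopen(url, context=connector_tls_context())` or `http.client.HTTPSConnection(host, context=connector_tls_context())`.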

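Requirement 3.7 adds key-management procedures, including changing keys at the end of their defined cryptoperiod and applying split knowledge and dual control to any manual cleartext key operations. For legacy systems that break when field lengths change, format-preserving encryption lets you render PAN unreadable without rewriting schemas.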
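
Cryptoperiod tracking reduces to date arithmetic over key metadata. In this sketch the `KeyRecord` fields are hypothetical stand-ins for whatever your KMS or HSM exposes, and the cryptoperiod comes from your own key-management policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass(frozen=True)
class KeyRecord:
    key_id: str             # identifier in your KMS/HSM (hypothetical)
    activated: date         # date the key version went into use
    cryptoperiod_days: int  # defined by your key-management policy


def keys_due_for_rotation(keys: List[KeyRecord], today: date) -> List[str]:
    """Return IDs of keys whose cryptoperiod has ended on or before today."""
    return [k.key_id for k in keys
            if today >= k.activated + timedelta(days=k.cryptoperiod_days)]
```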

4. Centralize Access Control And Secrets Management

Access to integration services must follow least privilege (Requirement 7) and MFA (Requirement 8). Use role-based IAM policies that grant each pipeline its own identity instead of shared service accounts.

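Store credentials in a customer-controlled vault so the cloud control plane never handles secrets, a pattern common to hybrid architectures. Review accounts and access privileges at least once every six months, as Requirement 7.2.4 requires (many teams review quarterly), and disable accounts after 90 days of inactivity, per Requirement 8.2.6.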
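
Part of an access review can be automated. This sketch flags identities shared by more than one pipeline and accounts unused beyond a 90-day threshold; the `Account` fields and the threshold are assumptions to adapt to your IAM export and policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional

INACTIVITY_LIMIT = timedelta(days=90)  # assumed policy threshold


@dataclass
class Account:
    name: str
    pipelines: List[str]           # pipelines authenticating with this identity
    last_used: Optional[datetime]  # None if the account was never used


def review(accounts: List[Account], now: datetime) -> Dict[str, List[str]]:
    """Flag shared identities and dormant accounts for follow-up."""
    shared = [a.name for a in accounts if len(a.pipelines) > 1]
    dormant = [a.name for a in accounts
               if a.last_used is None or now - a.last_used > INACTIVITY_LIMIT]
    return {"shared_identities": shared, "dormant_accounts": dormant}
```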

5. Monitor, Log, And Alert In One Central Location

Requirement 10 demands a full year of log retention, with the most recent three months immediately available. Stream connector executions, authentication events, and network flows to a centralized SIEM, then set alerts for anomalies across environments.
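
Retention usually maps to storage tiers: recent logs stay searchable, older ones move to cheaper archive storage until the minimum period ends. This sketch rounds "three months" up to 92 days and "one year" up to 366 days so nothing is demoted or dropped early; both values are assumptions.

```python
from datetime import date, timedelta

# Conservative approximations so logs are never demoted or dropped early.
HOT_WINDOW = timedelta(days=92)   # "most recent three months"
RETENTION = timedelta(days=366)   # "at least one year"


def storage_tier(log_date: date, today: date) -> str:
    """Decide where a day's logs should live."""
    age = today - log_date
    if age <= HOT_WINDOW:
        return "hot"       # immediately available for analysis
    if age <= RETENTION:
        return "archive"   # retained, restorable on request
    return "expired"       # past the minimum; delete per your own policy
```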

Because all logs live in one place, auditors can sample evidence without hopping between clouds and servers. This unified logging makes your hybrid model as transparent as a single stack, while continuous monitoring transforms integration from a compliance headache into a self-validating control.
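
Sampling is easier when every environment emits the same event shape. This sketch serializes one audit event as a JSON line; the field names are hypothetical, chosen to cover who acted, what happened, when, whether it succeeded, where it came from, and what it touched. Cardholder data itself should never appear in these fields.

```python
import json
from datetime import datetime, timezone


def audit_event(user: str, event_type: str, success: bool,
                origin: str, resource: str) -> str:
    """Serialize one audit event as a single JSON line for the SIEM."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,              # pipeline identity or human user
        "event_type": event_type,  # e.g. "connector_run", "login"
        "outcome": "success" if success else "failure",
        "origin": origin,          # data plane, host, or source IP
        "resource": resource,      # affected system, table, or service
    }, sort_keys=True)
```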

How Does Unified Architecture Simplify PCI DSS Auditing and Compliance?

Separating orchestration from data execution places cardholder data only inside systems you directly control. This design choice narrows the CDE and reduces audit complexity through several key advantages:

- Smaller scope means the cloud control plane never touches payment data, so only the local data plane falls within PCI scope. This reduces the number of hosts, firewalls, and configurations auditors must review.

- Simplified control validation emerges from clear boundaries that let you map each requirement (encryption, segmentation, access control) to one side of the architecture. This eliminates debates over responsibility during assessments.

- Enhanced visibility comes from outbound-only traffic that funnels every log, metric, and alert into a single SIEM. Auditors get one immutable trail instead of scattered traces across clouds and data centers.

- Faster remediation follows from network isolation that contains blast radius when vulnerabilities surface. You patch locally without pausing the entire platform.

Automated policy engines built into hybrid platforms can test encryption strength, key rotation, and MFA settings continuously, turning what used to be quarterly spot checks into live compliance dashboards. Auditors can then validate encryption keys, IAM roles, and log retention directly instead of chasing screenshots across teams.

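Centralized, append-only logs, protected from modification as Requirement 10 demands, provide verifiable lineage for audits and regional data sovereignty obligations; hash-chaining entries or writing them to write-once storage makes that lineage cryptographically checkable. Card data never leaves its legal boundary while you benefit from cloud-scale orchestration.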
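
One way to make log lineage cryptographically checkable is a hash chain, where each entry commits to the hash of the one before it. This is a minimal sketch of that technique, not any particular SIEM's format. A chain only proves tampering if its latest hash is also anchored somewhere an attacker cannot rewrite, such as a separate system or write-once storage.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev + payload).encode()).hexdigest()


def append(chain: List[dict], event: dict) -> None:
    """Append an event, linking it to the previous entry's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": dict(event), "prev": prev,
                  "hash": _digest(prev, event)})


def verify(chain: List[dict]) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```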

What Are Common Pitfalls in PCI DSS Data Integration (and How to Avoid Them)?

Even well-architected pipelines can fall out of compliance when daily shortcuts creep in. Below are five missteps that appear most often, and how you can fix them before an assessor flags them.

How Can Enterprises Transition to a PCI DSS-Compliant Hybrid Model?

Moving to a hybrid architecture doesn't require ripping out your entire stack. You can work through the change in five focused steps that tighten control around cardholder data without disrupting daily operations:

- Assess current data movement workflows: Trace every path where cardholder data enters, leaves, or moves through your stack, including auxiliary systems that "could impact the security of cardholder data." Data-flow diagrams and system-component inventory help you keep scope realistic while avoiding blind spots.

- Define integration zones: Segment the CDE from general workloads with dedicated VPCs, subnets, and firewalls.

- Deploy a hybrid integration layer: Set up a cloud control plane for orchestration while running data planes inside your own infrastructure. With a hybrid control plane architecture, data planes initiate outbound-only HTTPS, so you never punch inbound holes in your firewall.

- Implement continuous monitoring: Stream connector logs, network events, and IAM changes to your SIEM for near-real-time correlation. Centralized logging satisfies Requirement 10's "at least one year" retention while letting you spot anomalies early.

- Validate controls through quarterly internal audits: Re-run vulnerability scans, review access privileges, and update risk analyses each quarter so your next formal assessment is evidence-ready.

Each phase isolates new controls before expanding scope, so transaction processing stays live throughout the project. If your hybrid platform runs the same connectors as your on-premises deployment (Airbyte offers 600+), the pipelines you run today can migrate to the hybrid layer without code rewrites, feature gaps, or cardholder data leaving your environment.

How to Build Payment Data Integrations That Are Secure by Design?

Compliant integration starts with control, segmentation, and visibility, not just turning on encryption. A unified hybrid architecture lets you keep card data inside environments you own while scheduling pipelines from a cloud control plane. With this approach, the data plane runs in your VPC or other customer-controlled infrastructure, communicates outbound-only, and produces an immutable audit trail you can hand to assessors.

Airbyte Enterprise Flex delivers PCI DSS-compliant hybrid architecture, keeping cardholder data in your VPC while enabling cloud orchestration for 600+ connectors. Talk to Sales to discuss your compliance requirements.

Frequently Asked Questions

What is the difference between PCI DSS v3.2.1 and v4.0 for data integration?

Version 4.0 expands scope to include any system that could impact cardholder data security (DNS servers, load balancers, orchestration layers). From March 31, 2025, it requires an automated technical solution that continually detects and prevents attacks on public-facing web applications, and it emphasizes continuous monitoring over point-in-time assessments.

How does hybrid architecture reduce PCI DSS audit scope?

Hybrid architecture separates the control plane (orchestration metadata only) from data planes (actual cardholder data processing). By keeping payment information in customer-controlled infrastructure with outbound-only connections, you shrink the CDE to just data plane components, reducing systems auditors must review.

Can I use the same data integration platform for both PCI-compliant and non-compliant workloads?

Yes, but maintain strict network segmentation. Run PCI-compliant workloads on dedicated data planes within isolated VPCs or subnets with no inbound connectivity. Non-compliant workloads operate on separate data planes with different network rules.
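
Segmentation also has to hold at scheduling time, so a PCI job never lands on a general-purpose plane. In this sketch the plane registry and its `pci` flag are hypothetical; the rule runs both ways so general workloads also stay off PCI planes and do not drag extra systems into scope.

```python
from typing import Dict, List

# Hypothetical registry of data planes and whether each is PCI-dedicated.
DATA_PLANES: Dict[str, dict] = {
    "dp-pci-1": {"pci": True},
    "dp-general-1": {"pci": False},
}


def eligible_planes(workload_handles_chd: bool) -> List[str]:
    """PCI workloads run only on PCI planes, and non-PCI only on the rest."""
    return sorted(name for name, plane in DATA_PLANES.items()
                  if plane["pci"] == workload_handles_chd)
```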

What logging and monitoring capabilities are required for PCI DSS v4.0 compliance?

Requirement 10 requires audit logs to be retained for at least 12 months, with the most recent three months immediately available, and backed up promptly to a central log server or other media that is difficult to modify. Capture every connector execution, authentication event, and configuration change. Logs must be protected from modification, include enough detail to reconstruct access patterns, and be reviewed with automated mechanisms so security events trigger prompt alerts.
