Your organization generates and manages large volumes of data. Handling this data manually can lead to errors and delays that hinder productivity. Data automation tools can help you overcome the challenges of manual data entry, extraction, and transformation.

Using data automation tools can enhance data quality, reduce operational costs, and save valuable time. This article will discuss different types of data automation tools and key factors to help you choose the best one.

What are Data Automation Tools?

Data automation is the process of automatically gathering, processing, analyzing, and storing data. Data automation tools integrate with your existing systems to perform tasks such as data processing and reporting with little or no manual effort. Because they can handle massive amounts of data, these tools can save significant processing time.

12 Data Automation Tools

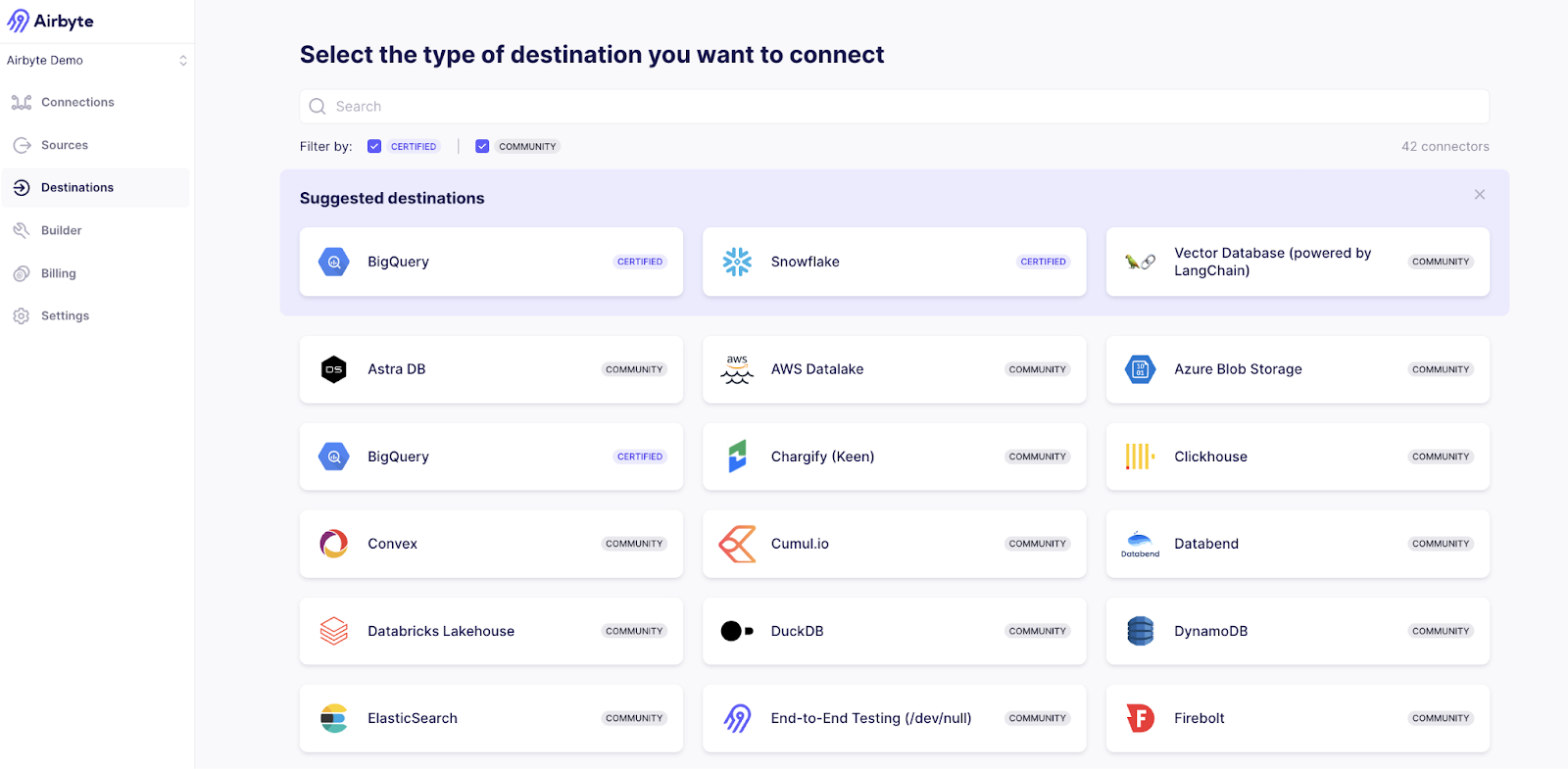

1. Airbyte

Airbyte is an integration platform that allows you to automate the process of building and managing data pipelines. It offers a user-friendly interface and a catalog of over 550 pre-built connectors that streamline data extraction, transformation, and loading. You can also leverage Airbyte’s AI capabilities to build LLM apps quickly and employ ML techniques for classification.

Key Features

- AI-Assistant: When creating custom connectors using the Connector Builder, you can leverage Airbyte’s AI assistant. It reads and understands the API documentation for you and prefills the configuration fields automatically, significantly reducing the development time.

- Streamline Your GenAI Workflows: You can load your unstructured data directly into vector databases such as Pinecone and Weaviate, which simplifies your GenAI workflows. You can also perform automatic chunking and indexing to make your data LLM-ready.

- Versatile Pipeline Management Options: In addition to an intuitive UI, Airbyte provides APIs, a Terraform Provider, and PyAirbyte for rapid pipeline deployment and data syncing.

- Refresh Syncs: This feature allows you to perform incremental and full data synchronizations without downtime. If the source and destination support checkpointing, you can resume an interrupted sync from where it left off.

- Schema Change Management: Based on your configured settings, Airbyte automatically propagates schema changes made at the source to your destination. This reduces sync errors and keeps your pipelines running smoothly.

- Change Data Capture: With Airbyte’s CDC feature, you can capture inserts, updates, and deletes at the source database and replicate them incrementally to the target database.

- Secured Data Movement: Airbyte supports several data security measures, such as encryption, auditing, monitoring, SSO, and role-based access control, to protect your data against data breaches and cyber-attacks.
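Incremental and resumable syncs both rest on a saved cursor that records how far a previous run got. The following is a minimal, library-free Python sketch of that idea, not Airbyte's implementation or API. It assumes each record has an `id` primary key and an `updated_at` cursor field; `incremental_sync` and the `state` dict are hypothetical names.

```python
from typing import Dict, List


def incremental_sync(source: List[dict], destination: Dict[int, dict],
                     state: dict, cursor_field: str = "updated_at") -> dict:
    """Copy only records newer than the saved cursor, then advance the cursor.

    Hypothetical helper for illustration; real connectors also handle
    deletes, late-arriving data, and per-stream state.
    """
    last_cursor = state.get("cursor")
    # First run (no cursor yet): take everything. Later runs: only newer records.
    new_records = [r for r in source
                   if last_cursor is None or r[cursor_field] > last_cursor]
    # Process in cursor order and record progress after each upsert.
    for record in sorted(new_records, key=lambda r: r[cursor_field]):
        destination[record["id"]] = record      # upsert by primary key
        state["cursor"] = record[cursor_field]  # checkpoint
    return state


source = [{"id": 1, "updated_at": 10, "v": "a"}, {"id": 2, "updated_at": 20, "v": "b"}]
destination: Dict[int, dict] = {}
state = incremental_sync(source, destination, {})
source.append({"id": 1, "updated_at": 30, "v": "c"})  # record 1 changes later
state = incremental_sync(source, destination, state)   # only record 1 is re-read
```

Persisting `state` between runs is what lets a later run skip records it has already copied.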

Pricing

Airbyte offers four plans: Open Source (free), Cloud, Team, and Enterprise. Pricing depends on your data sync and hosting requirements.

Ease of Use

- Airbyte provides flexible deployment with self-managed and cloud-based versions.

- Its no-code interface makes data exploration and pipeline building easier, even for users without technical expertise.

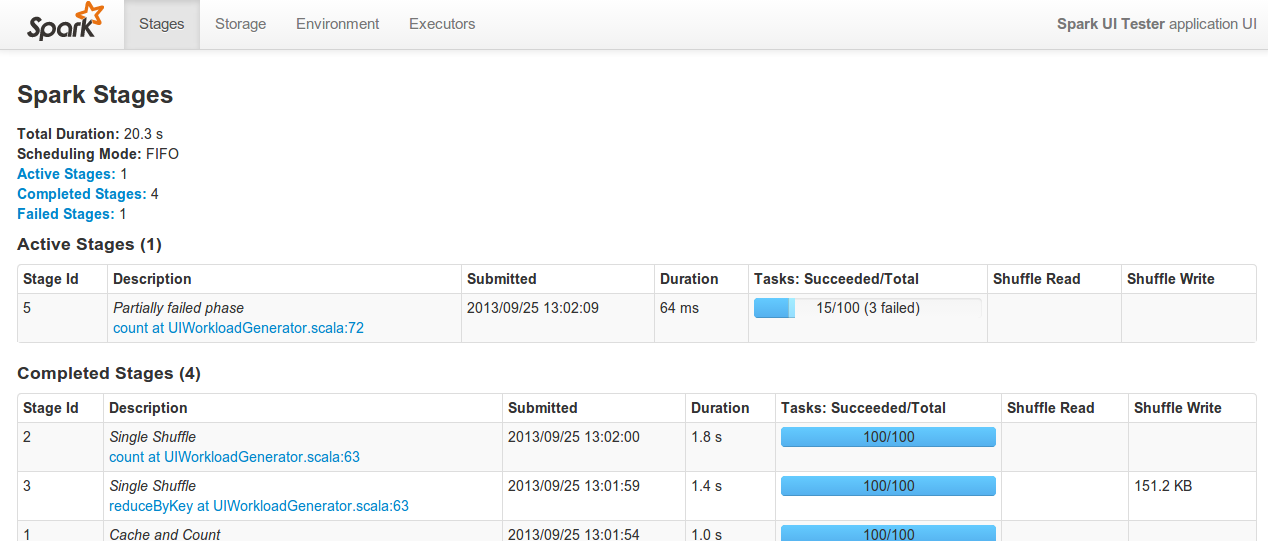

2. Apache Spark

Apache Spark is an open-source distributed computing engine for large-scale data processing and analytics. It provides APIs in Python, Scala, Java, and R, and ships with built-in libraries such as Spark SQL, MLlib, GraphX, and Structured Streaming. This versatility helps you automate many data processing tasks in code.

Key Features

- Code Generation: Spark's Tungsten execution engine uses whole-stage code generation to compile parts of a query plan into optimized JVM bytecode at run time. Together with the Catalyst query optimizer, this speeds up complex queries, such as those with multiple joins.

- Intuitive APIs: Spark provides intuitive APIs that streamline development, allowing for easy automation of data processing tasks. This feature enables smooth integration with machine learning and data analysis tools.

Pricing

It is free to use.

Ease of Use

- Apache Spark helps you scale seamlessly from a single machine to large clusters, which in turn helps with resource allocation and load balancing.

- This tool simplifies big data processing through a unified framework, which allows you to handle diverse data processing tasks without switching to other tools.

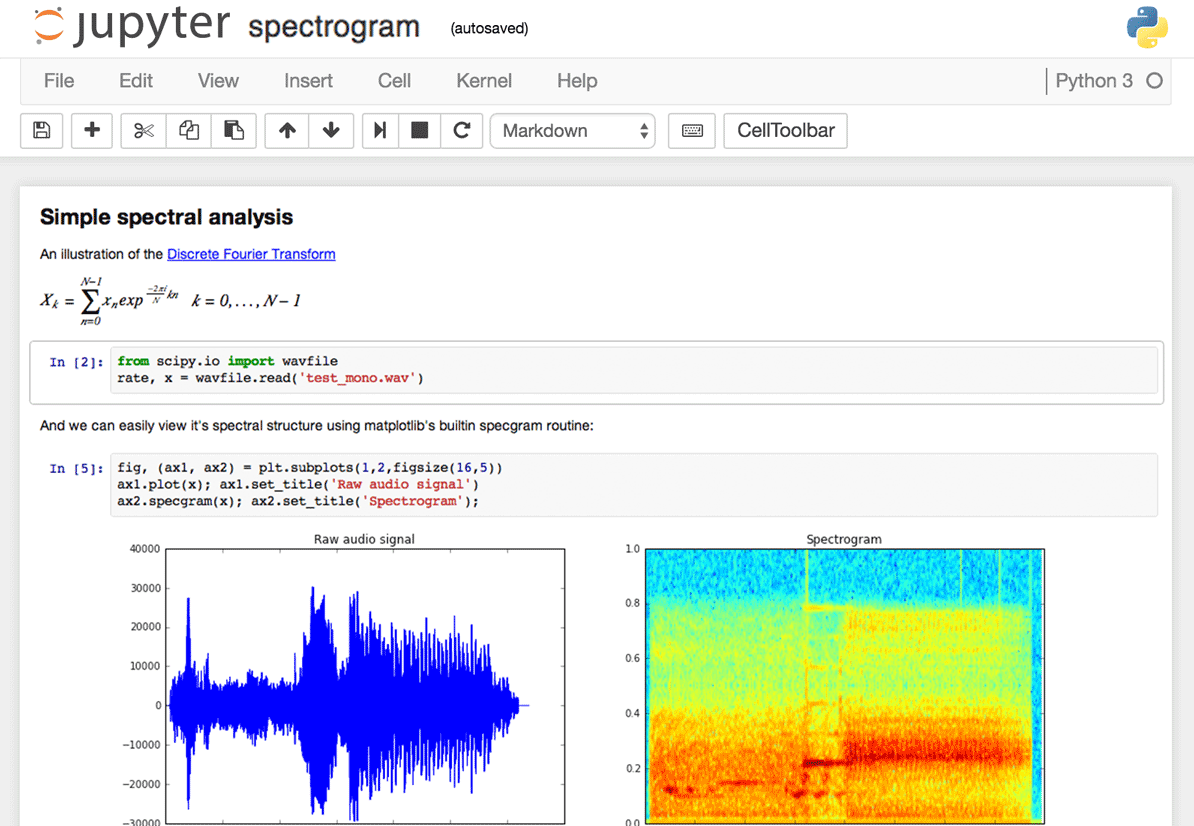

3. Jupyter Notebooks

Jupyter Notebook is an interactive computing environment that helps you automate iterative data exploration and modeling in languages such as Python, R, and Julia. It streamlines complex data analysis by letting you execute code, apply statistical models, and generate custom visualizations in one place, which saves time and makes effective use of resources.

Key Features

- Automated Notebook Execution: Jupyter supports automated execution of a notebook's cells through the command-line interface or dedicated libraries such as papermill. This ensures consistent, up-to-date results without manually executing each cell.

- Environment Variable Integration: Notebook code can read environment variables to adjust parameters at run time. This lets you automate the same analysis under different input conditions.

- Easy File Conversions: Jupyter Notebooks are highly shareable and can be exported to various formats, such as HTML and PDF. You can also use nbviewer to view publicly accessible notebooks directly in the browser.
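Reading run parameters from environment variables is one way to run the same notebook unattended under different inputs. The sketch below could serve as a notebook's first cell; the variable names `REPORT_DATE`, `SAMPLE_SIZE`, and `REGION` and their defaults are hypothetical, chosen only for illustration.

```python
import os
from datetime import date


def load_params(env=os.environ) -> dict:
    """Read run parameters from environment variables, falling back to defaults.

    The variable names below are hypothetical examples.
    """
    return {
        "report_date": date.fromisoformat(env.get("REPORT_DATE", "2024-01-01")),
        "sample_size": int(env.get("SAMPLE_SIZE", "1000")),
        "region": env.get("REGION", "all"),
    }


params = load_params()  # later cells use params["report_date"], etc.
```

A scheduler could then execute the notebook headlessly with, for example, `REPORT_DATE=2024-05-01 jupyter nbconvert --to notebook --execute report.ipynb`, where `report.ipynb` is a placeholder. papermill takes a different route: it injects values into a cell tagged `parameters`.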

Pricing

Jupyter Notebook is an open-source tool and is free to use.

Ease of Use

- Jupyter supports collaborative work and allows you to easily share notebooks using platforms like email, Dropbox, GitHub, and the Jupyter Notebook Viewer.

- Jupyter generates rich data reports with detailed outputs, including images, graphs, maps, plots, and narrative text, which makes understanding data analysis results much easier.

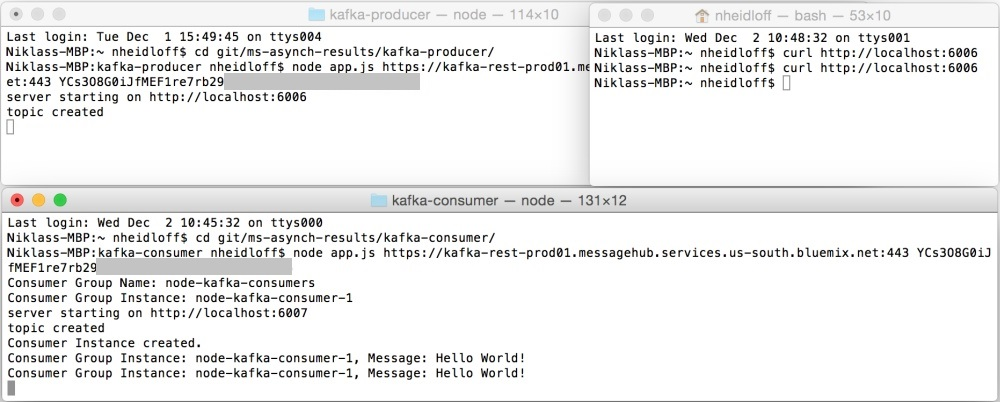

4. Apache Kafka

Apache Kafka is a distributed platform designed for real-time data streaming. It allows you to handle data from multiple sources and deliver it to various consumers, making it suitable for fault-tolerant data ingestion and processing.

Key Features

- Schema Registry Integration: Kafka can be paired with a schema registry, such as Confluent Schema Registry, to automate schema management for message formats and keep producers and consumers consistent.

- Scalable Messaging: Kafka’s publish-subscribe model supports scalable messaging, automating data distribution and integration across various systems and platforms.
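At the core of Kafka's publish-subscribe model is an append-only log per topic, which each consumer group reads at its own pace by tracking an offset. The toy in-memory broker below illustrates that idea using only the Python standard library. It is not the Kafka client API; it ignores partitions, replication, and durability, and `ToyBroker` with its methods is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class ToyBroker:
    """In-memory stand-in for a broker: one append-only log per topic."""

    def __init__(self) -> None:
        self.logs: Dict[str, List[str]] = defaultdict(list)
        # Committed offset per (consumer group, topic): the next position to read.
        self.offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, message: str) -> int:
        """Append a message and return its offset in the topic log."""
        self.logs[topic].append(message)
        return len(self.logs[topic]) - 1

    def poll(self, group: str, topic: str, max_messages: int = 10) -> List[str]:
        """Return the next batch for a group and commit its new offset."""
        start = self.offsets[(group, topic)]
        batch = self.logs[topic][start:start + max_messages]
        self.offsets[(group, topic)] = start + len(batch)
        return batch


broker = ToyBroker()
broker.publish("orders", "order-1")
broker.publish("orders", "order-2")
billing = broker.poll("billing", "orders")    # ['order-1', 'order-2']
shipping = broker.poll("shipping", "orders")  # same messages, independent offset
```

Because each group keeps its own offset, adding a new consumer does not affect existing ones. That independence is what lets one stream of events feed many downstream systems.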

Pricing

Apache Kafka is free to use.

Ease of Use

- Kafka offers robust APIs, such as the Producer API, Consumer API, Streams API, and Connect API, for seamless interaction between applications.

- Active community support and documentation make Kafka easily accessible.

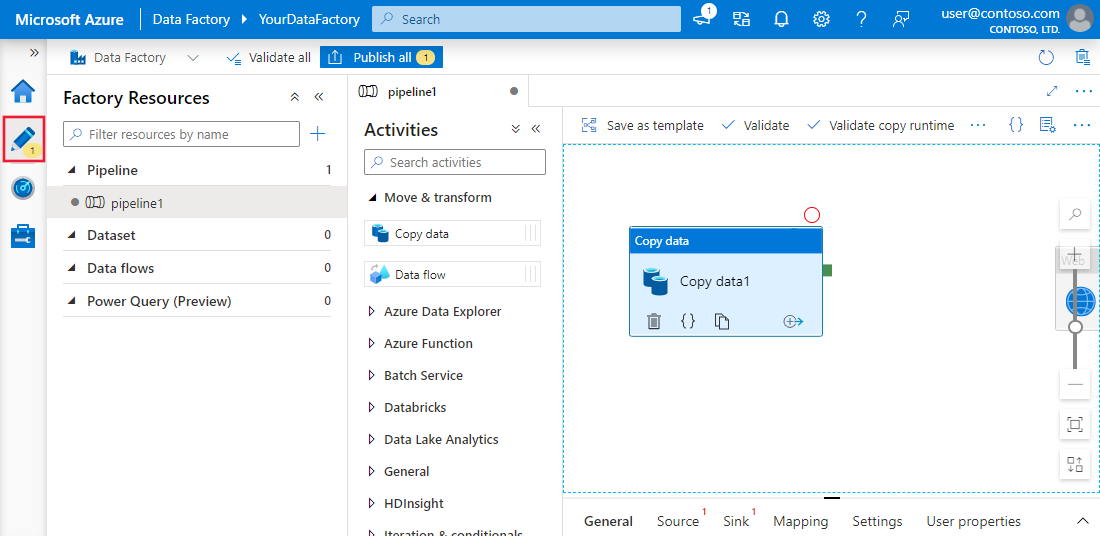

5. Azure Data Factory

Azure Data Factory (ADF) is a cloud-based service for creating and automating data-driven workflows. It allows you to orchestrate data movement between various data stores and can call external compute services such as Azure HDInsight and Azure Databricks for hand-coded transformations. ADF also lets you monitor workflows both programmatically and through its visual interface.

Key Features

- Integration Capabilities: ADF provides built-in connectors for a wide range of cloud and on-premises data sources, so you can build comprehensive data workflows.

- Visual Interface: ADF’s drag-and-drop interface simplifies creating and managing workflows without extensive coding.

Pricing

ADF’s pricing depends on factors such as pipeline orchestration and execution, data flow execution and debugging, and the number of Data Factory operations.

Ease of Use

- ADF allows you to integrate with Azure services like Power BI and Synapse Analytics for end-to-end data solutions.

- ADF’s streamlined features, such as CDC, data compression, and custom event triggers, enable faster data transfer and processing, reducing the time needed to analyze huge datasets.

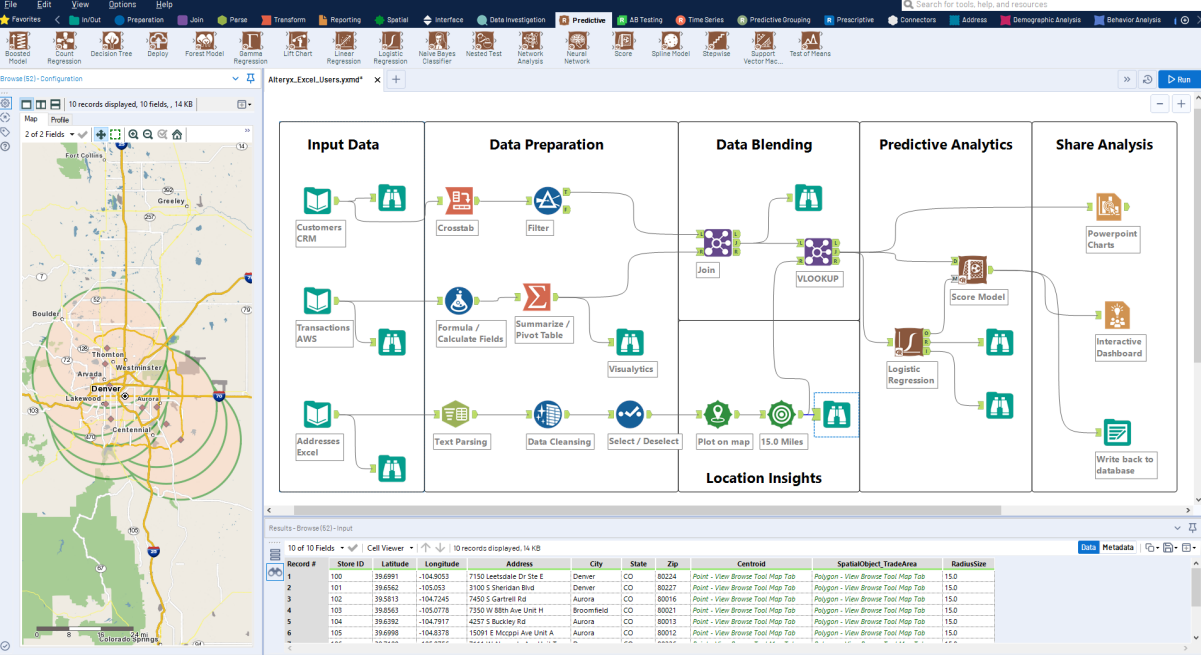

6. Alteryx

Alteryx is a data analytics and visualization platform that offers various tools to help you transform and analyze big data from multiple sources without technical expertise. You can also automate your analytics workflows and perform advanced tasks such as predictive analysis and geospatial analysis.

Key Features

- Automated Data Preparation: Alteryx can help simplify and automate data collection and preparation with more than 300 connectors. You can quickly filter rows, change data types, fill in missing values, and perform other data-cleaning tasks.

- Building Reports: You can integrate Alteryx with BI tools like Tableau to create interactive dashboards and easy-to-understand reports, which can also make root-cause analysis easier.

Pricing

Alteryx offers two editions: Designer Cloud, starting at $4,950, and Designer Desktop, at $5,195.

Ease of Use

- Alteryx provides a GUI with drag-and-drop functionality, which makes it easier to use, even for beginners.

- The platform has a large community and many educational resources that can ease your learning curve.

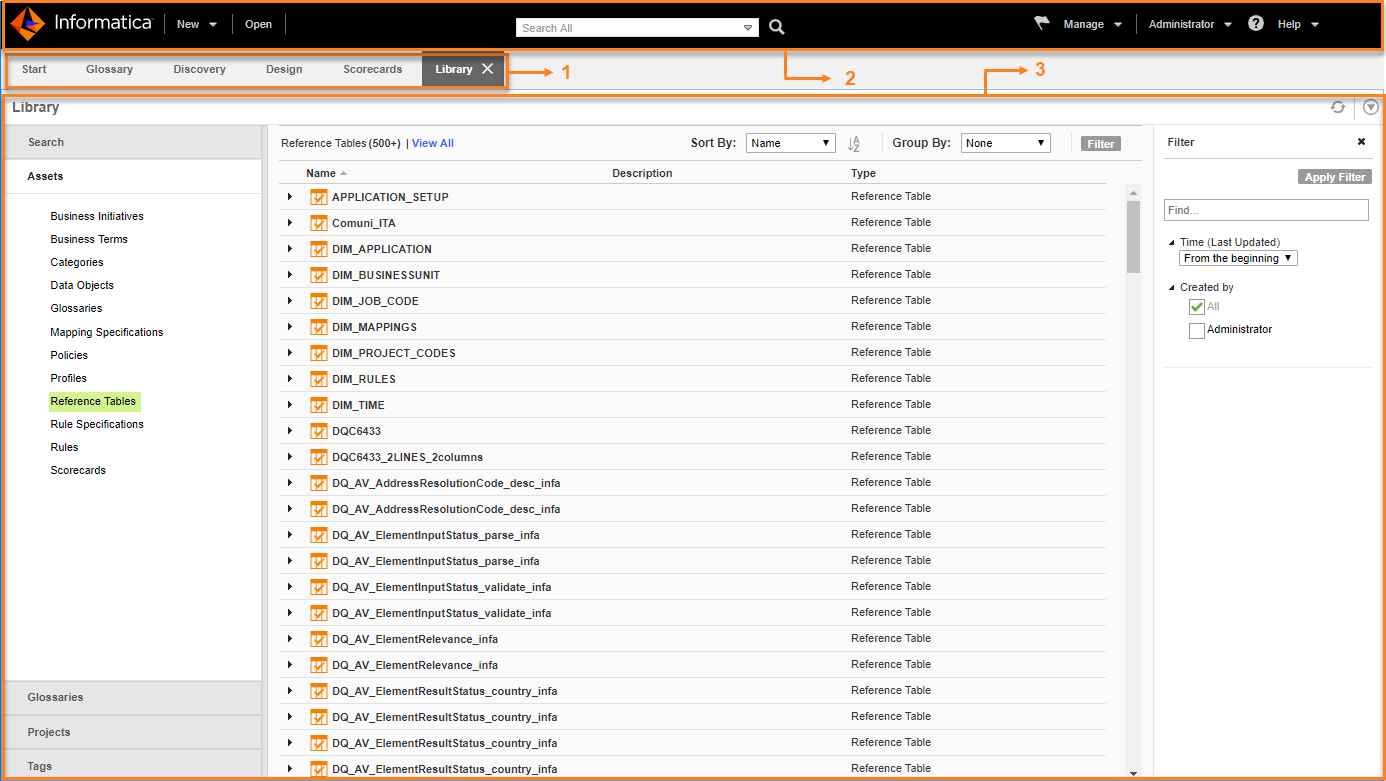

7. Informatica

Informatica is a cloud-based data management platform that uses AI and machine learning to process and manage high-volume data. As a no-code/low-code ETL tool, it helps you avoid the errors associated with custom coding. Informatica offers parallel processing for high performance and a centralized cloud architecture for security, easy accessibility, and data tracking.

Key Features

- Automated Workflows: Informatica provides a visual workflow designer that enables you to automate complex data pipelines by defining tasks, their dependencies, and execution schedules.

- Metadata Management: It includes a metadata repository that stores information about mappings, workflows, transformations, and sources. This facilitates improved data lineage, governance, impact analysis, transparency, and reusability.

- Versatile Integration Options: Informatica supports a vast array of data sources and targets, including databases, cloud services, XML, flat files, and more. It also allows you to seamlessly integrate with various enterprise applications and systems.

Pricing

Informatica charges you based on data volume and provides an option to only pay for the services you need.

Ease of Use

- Informatica offers a visual development interface that simplifies the design and management of data workflows, reducing the complexity of data integration tasks.

- It simplifies data maintenance, monitoring, and recovery.

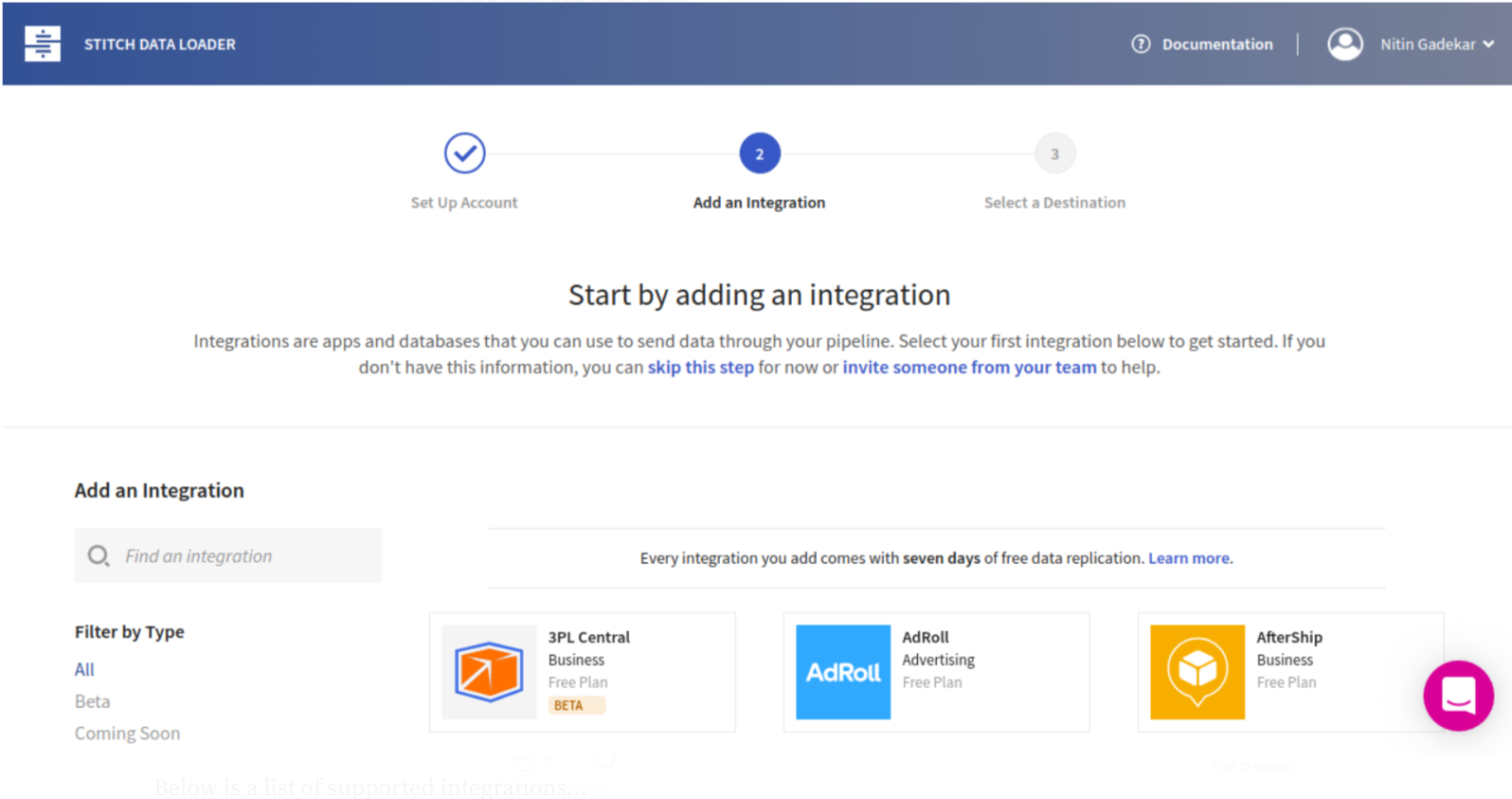

8. Stitch

Stitch, now part of Qlik through its acquisition of Talend, is a cloud-based platform that simplifies replicating data from various sources to a centralized data warehouse. With a library of 140 pre-built data connectors, Stitch reduces manual intervention and enables non-technical users to perform complex data integration tasks.

Key Features

- Advanced Security Features: Stitch supports SOC 2 compliance and GDPR compliance to protect sensitive data from misuse.

- Advanced Replication Scheduling: The Advanced Scheduler allows you to schedule the data extraction process. Using cron expressions, you can specify the exact starting time, day of the week, or month your data extraction process should begin.
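To make the cron idea concrete, the sketch below checks whether a given time satisfies a classic five-field Unix cron expression (minute, hour, day of month, month, day of week). It is a simplified, standard-library illustration, not Stitch's scheduler. Stitch may use a different cron dialect, so check its documentation for the exact syntax. `cron_matches` is a hypothetical helper, and it supports only `*`, single numbers, ranges, steps, and comma lists.

```python
from datetime import datetime


def _field_matches(field: str, value: int, lo: int, hi: int) -> bool:
    """Check one cron field. Supports '*', 'n', 'a-b', '*/n', 'a-b/n', and comma lists."""
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = end = int(base)
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """True if `when` (minute precision) satisfies a five-field cron expression."""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0 (7 unsupported here)
    dom_ok = _field_matches(dom, when.day, 1, 31)
    dow_ok = _field_matches(dow, cron_dow, 0, 6)
    # Classic cron ORs the two day fields when both are restricted.
    if not dom.startswith("*") and not dow.startswith("*"):
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return (_field_matches(minute, when.minute, 0, 59)
            and _field_matches(hour, when.hour, 0, 23)
            and _field_matches(month, when.month, 1, 12)
            and day_ok)


# "Every Monday at 06:00": 6 May 2024 is a Monday.
print(cron_matches("0 6 * * 1", datetime(2024, 5, 6, 6, 0)))  # True
```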

Pricing

It offers the following three types of plans:

- Standard: Starts at $100/month, depending on the number of rows replicated.

- Advanced: $1,250/month, for teams that want more control over and extensibility of their pipelines.

- Premium: $2,500/month, for organizations with heavy workloads and the strictest data security and compliance requirements.

Ease of Use

- It reduces manual intervention by orchestrating data pipelines.

- Stitch offers a user-friendly interface that simplifies data integration.

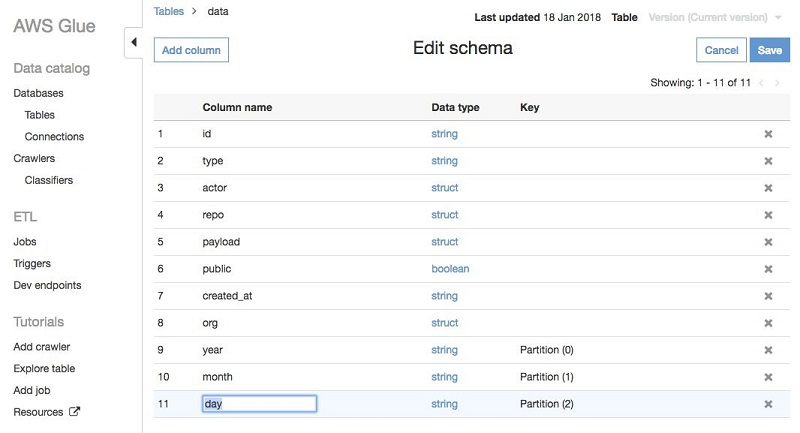

9. AWS Glue

AWS Glue is a fully managed ETL tool that simplifies data integration and preparation on AWS. In addition to integration, it helps you automate several tasks involved in data preparation, such as discovering, enriching, converting, and loading data.

Key Features

- ETL Jobs: It offers a visual interface for creating ETL jobs without writing code. This empowers both technical and non-technical users to build and manage data pipelines efficiently.

- Automatic Schema Discovery: AWS Glue uses crawlers to scan data sources and infer their schemas automatically. The crawlers create table definitions in the AWS Glue Data Catalog, which you can then use for querying and ETL operations.
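AWS Glue crawlers use classifiers to infer schemas. The sketch below is a simplified, standard-library illustration of type inference over a CSV sample, not how Glue crawlers are implemented. The type names `bigint`, `double`, and `string` loosely mirror the Hive-style types used in the Data Catalog, and `infer_schema` is a hypothetical helper.

```python
import csv
import io
from typing import Dict

# Wider types can represent every value of narrower ones.
_RANK = {"bigint": 0, "double": 1, "string": 2}


def _infer_type(value: str) -> str:
    """Return the narrowest type that can parse a single cell."""
    for caster, type_name in ((int, "bigint"), (float, "double")):
        try:
            caster(value)
            return type_name
        except ValueError:
            pass
    return "string"


def infer_schema(csv_text: str) -> Dict[str, str]:
    """Infer one type per column, widening bigint -> double -> string as needed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    schema: Dict[str, str] = {}
    for row in reader:
        for column, value in row.items():
            if value == "":
                continue  # empty cells carry no type information
            seen = _infer_type(value)
            current = schema.get(column, seen)
            schema[column] = max(current, seen, key=_RANK.__getitem__)
    # Keep header order; columns that were empty in every row default to string.
    return {col: schema.get(col, "string") for col in reader.fieldnames or []}


sample = "id,price,name,note\n1,9.99,apple,\n2,10,pear,\n"
print(infer_schema(sample))
# {'id': 'bigint', 'price': 'double', 'name': 'string', 'note': 'string'}
```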

Pricing

AWS Glue uses a pay-as-you-go pricing model, and prices vary by region.

Ease of Use

- Combining AWS Glue jobs with event-based triggers can enable you to design a chain of dependent jobs, automating most of your data processing tasks.

- It offers a drag-and-drop interface for defining your ETL process and automatically generates code for performing data extraction, transformation, and loading.

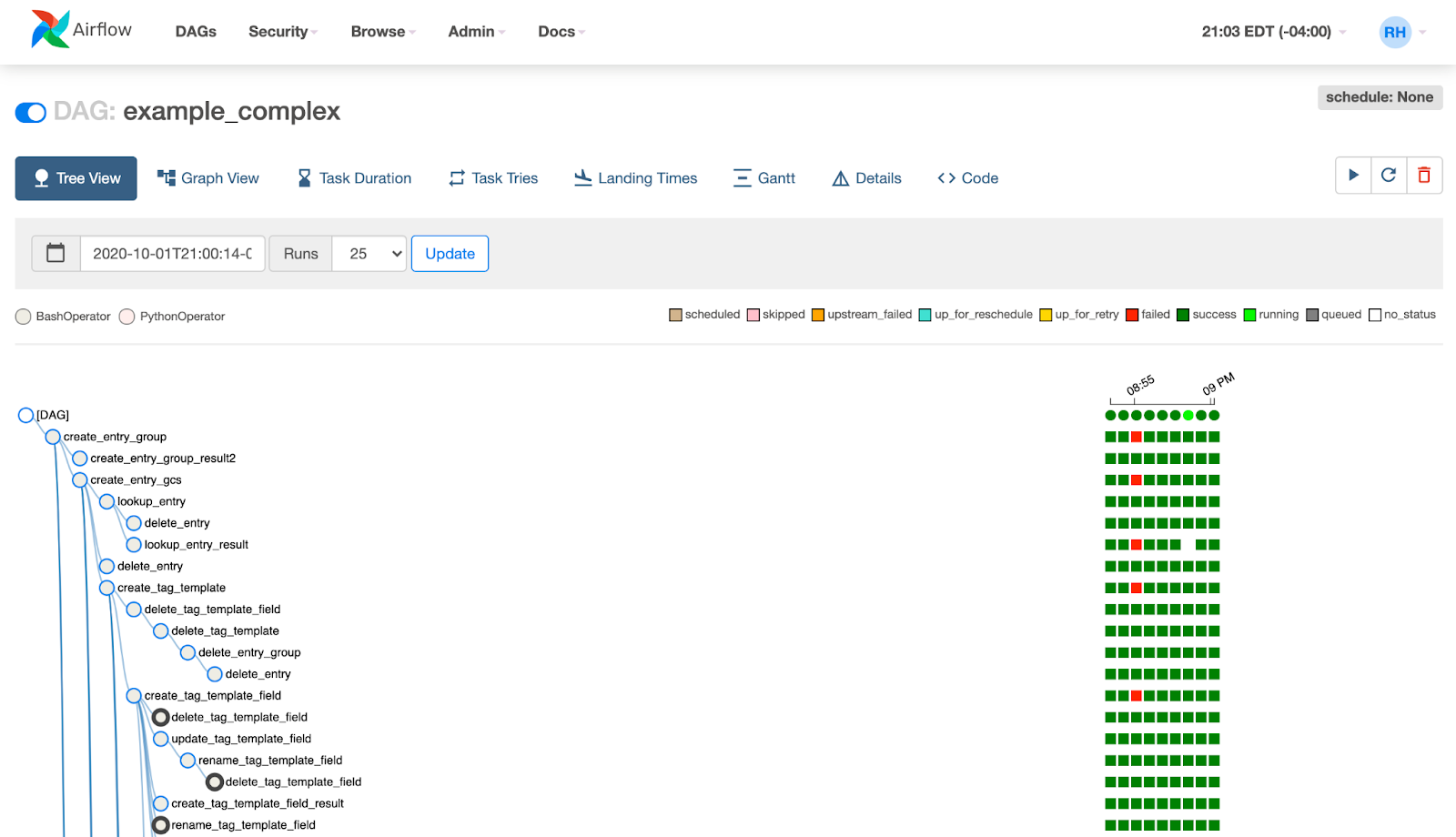

10. Apache Airflow

Apache Airflow is an open-source platform for orchestrating, scheduling, and monitoring workflows. You define pipelines in Python as directed acyclic graphs (DAGs), which describe a set of tasks and the dependencies between them. Airflow uses these dependencies to execute tasks in the correct order, and its UI displays each DAG as a graph.
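In Airflow itself, you would declare dependencies between operators, for example with `extract >> transform >> load`. To avoid depending on Airflow, the sketch below uses Python's standard `graphlib` module (Python 3.9+) to show how a scheduler can derive a valid execution order from declared dependencies. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
    "notify": {"load"},
}


def run_in_waves(graph):
    """Return batches of tasks. Tasks within a batch do not depend on each other
    and could run in parallel, the way a scheduler dispatches ready tasks."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark as finished so dependents become ready
    return waves


print(run_in_waves(dag))
# [['extract_customers', 'extract_orders'], ['transform'], ['load'], ['notify']]
```

The acyclic requirement is what guarantees an order exists: with a cycle, no task in the loop could ever become ready.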

Key Features

- Built-in Dependency Management: Airflow automatically manages task dependencies to ensure the correct execution order.

- Scalability: Apache Airflow’s modular architecture uses a message queue to orchestrate an arbitrary number of workers, so it can scale with your workloads.

Pricing

Airflow is free to use.

Ease of Use

- Airflow's web UI provides a centralized place to orchestrate, monitor, and manage pipelines.

11. Syncari

Syncari is a platform designed to streamline data management and improve data quality. It provides features for data cleaning, synchronization, and governance. Syncari’s intelligent API connector, Synapse, automatically understands the schema of each connection, ensuring accurate data integration.

Key Features

- Integrated Data Governance: Syncari's built-in data governance helps you enforce data quality and compliance across all connected systems. It lets you manage data policies, rules, and standards across multiple datasets.

- Unified Data Management: This tool consolidates data from various sources into a single, reliable destination for better data management.

Pricing

Pricing starts at $4,995/month. For details, see Syncari's pricing page.

Ease of Use

- Its user-friendly interface helps simplify the setup and management of data workflows.

- Syncari uses multi-directional sync to keep your connected systems, including your destination, consistent with one another.
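The article does not describe how Syncari resolves conflicting edits made in different systems. The sketch below therefore assumes a simple field-level last-write-wins rule to illustrate what multi-directional sync has to do: merge changes from every system and write the result back to all of them. The record layout and `sync_all` are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical representation: field name -> (value, last-modified timestamp).
Record = Dict[str, Tuple[str, int]]


def sync_all(*systems: Record) -> Record:
    """Field-level last-write-wins merge, written back to every system.

    On equal timestamps, the value from the earlier system argument is kept.
    """
    merged: Record = {}
    for system in systems:
        for field, (value, modified) in system.items():
            if field not in merged or modified > merged[field][1]:
                merged[field] = (value, modified)
    for system in systems:
        system.clear()
        system.update(merged)  # every system converges on the same record
    return merged


crm = {"email": ("ann@old.example", 1), "phone": ("555-0100", 5)}
billing = {"email": ("ann@new.example", 3)}
support = {"phone": ("555-0199", 2)}
sync_all(crm, billing, support)
print(crm == billing == support, crm["email"][0], crm["phone"][0])
# True ann@new.example 555-0100
```

Real platforms typically let you configure which system is authoritative for each field instead of relying only on timestamps.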

12. Fivetran

Fivetran is a cloud-based data movement platform that simplifies moving data from various sources to a centralized destination, such as a data warehouse. It offers over 500 pre-built connectors that help you automatically extract data from diverse sources, including databases, cloud applications, APIs, and more. This eliminates the need for manual data extraction and integration processes.

Key Features

- Schema Drift Handling: Fivetran automatically adapts to changes in the source schema, ensuring that data continues to flow seamlessly even when the structure of the source data changes.

- Orchestration and Scheduling: Fivetran’s integrated scheduling automatically triggers transformation model runs in your warehouse once connector syncs complete. This keeps your data up to date and ready for analysis.
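Fivetran handles schema drift internally. The sketch below only illustrates the general technique of widening the destination when new source columns appear, using Python's built-in `sqlite3` module as a stand-in warehouse. `load_with_drift` is hypothetical, and it assumes new columns are only ever added, never dropped or retyped.

```python
import sqlite3


def load_with_drift(conn: sqlite3.Connection, table: str, rows: list[dict]) -> list[str]:
    """Insert rows, first adding any source columns the destination lacks.

    Returns the names of added columns. Table and column names are assumed
    to be trusted identifiers because they are interpolated into SQL.
    """
    existing = {info[1] for info in conn.execute(f"PRAGMA table_info({table})")}
    added = []
    for row in rows:
        for column in row:
            if column not in existing:
                # New source column: widen the destination instead of failing the load.
                conn.execute(f"ALTER TABLE {table} ADD COLUMN {column}")
                existing.add(column)
                added.append(column)
        cols = ", ".join(row)
        marks = ", ".join("?" for _ in row)
        conn.execute(f"INSERT INTO {table} ({cols}) VALUES ({marks})", tuple(row.values()))
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
print(load_with_drift(conn, "users", [{"id": 1, "name": "Ann"},
                                      {"id": 2, "name": "Bo", "plan": "pro"}]))
# ['plan']
```

Rows loaded before the new column appeared simply hold NULL in it, so older data stays queryable.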

Pricing

Fivetran offers usage-based pricing that scales with your data needs. You only pay for what you use each month, with pricing based on Monthly Active Rows (MAR).
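As a worked example of the MAR idea, the sketch below counts distinct rows (table plus primary key) changed in each month. It assumes the simplified reading that a row counts once per month no matter how many times it changes. It is not Fivetran's billing logic, and the change-log format is hypothetical.

```python
from collections import defaultdict


def monthly_active_rows(change_log):
    """Count distinct (table, primary key) pairs changed in each month.

    change_log: iterable of (month, table, primary_key) tuples,
    for example ("2024-05", "orders", 42).
    """
    active = defaultdict(set)
    for month, table, pk in change_log:
        active[month].add((table, pk))
    return {month: len(keys) for month, keys in active.items()}


log = [
    ("2024-05", "orders", 1),  # inserted
    ("2024-05", "orders", 1),  # updated again: still one active row
    ("2024-05", "orders", 2),
    ("2024-05", "users", 1),   # same key, different table: counted separately
    ("2024-06", "orders", 1),  # active again in a new month
]
print(monthly_active_rows(log))
# {'2024-05': 3, '2024-06': 1}
```

Under this model, frequently updated rows cost the same as rows changed once, which is worth keeping in mind when estimating usage.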

Ease of Use

- Fivetran allows you to selectively block certain tables or columns from being replicated, helping you avoid exposing sensitive information.

- Fivetran offers pre-built, dbt Core-compatible data models for popular connectors, which transform raw data into analytics-ready datasets with just a few clicks.

Types of Data Automation Tools

Understanding the types of data automation tools available helps you manage your data efficiently. Let’s discuss each type in detail.

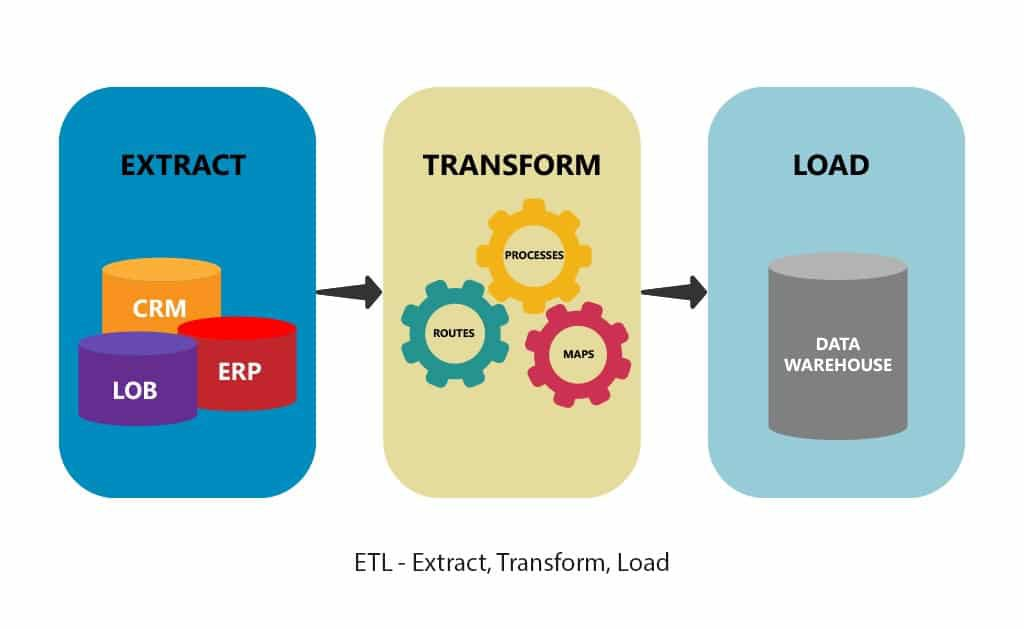

1. ETL Tools

ETL (Extract, Transform, Load) tools help you automate data integration from various sources, such as databases, APIs, and applications. These tools enable you to transform the data to meet specific requirements before loading it into target databases. Using ETL tools can reduce manual effort, ensure data consistency, and enhance data quality across platforms.
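The three ETL stages can be sketched with the Python standard library alone. In this minimal example, an in-memory CSV string stands in for the source and an in-memory SQLite database stands in for the target. The data, table, and function names are hypothetical.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,amount,currency
1, 19.99 ,usd
2,5,USD
3,,usd
"""


def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize types and casing; drop rows without an amount."""
    out = []
    for r in rows:
        amount = r["amount"].strip()
        if not amount:
            continue
        out.append((int(r["order_id"]), float(amount), r["currency"].strip().upper()))
    return out


def load(rows, conn):
    """Load: upsert into the target table, so re-running the job is idempotent."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)")
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 19.99, 'USD'), (2, 5.0, 'USD')]
```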

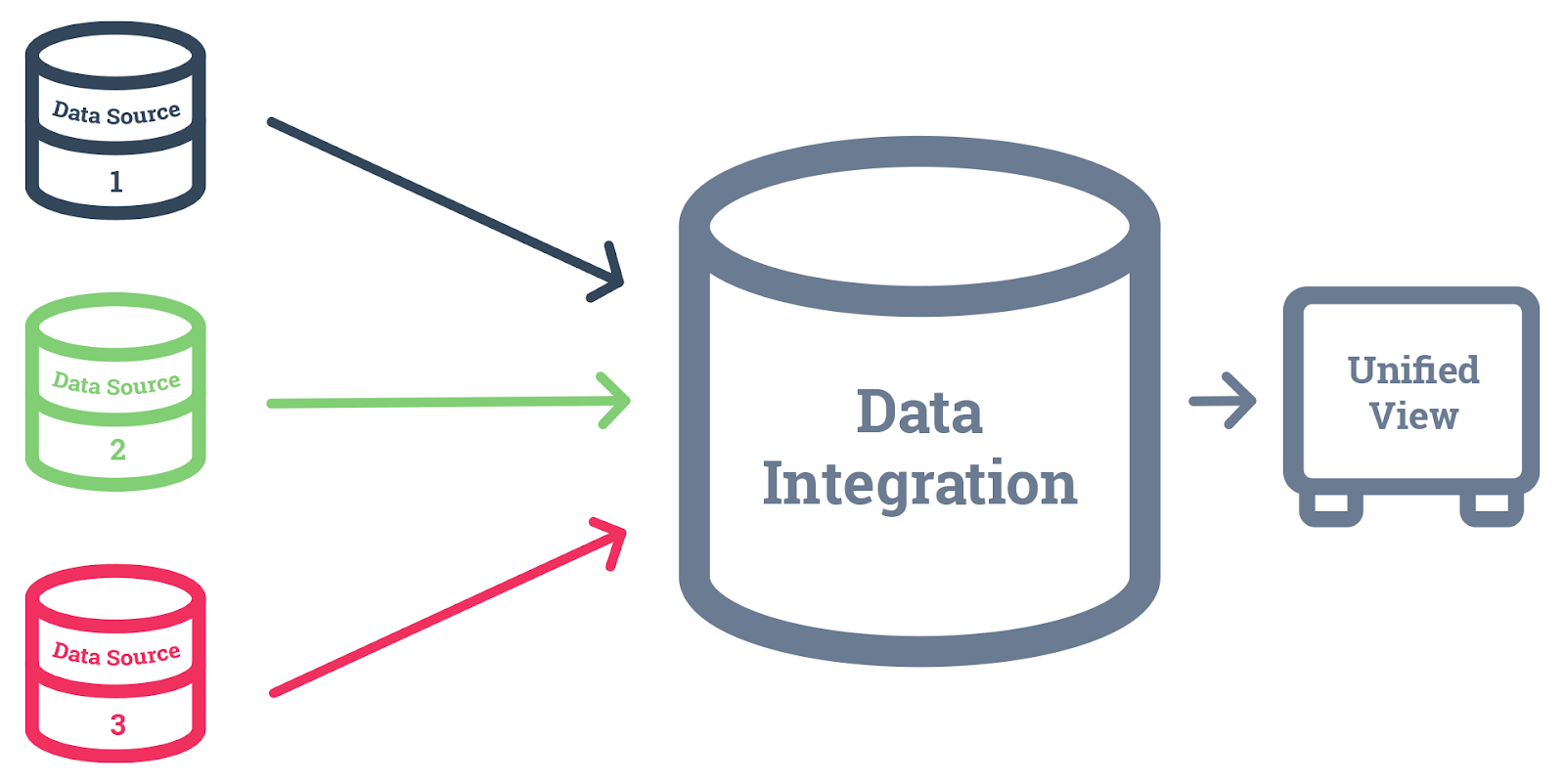

2. Data Integration Platforms

Data integration platforms are centralized solutions that help you gather, organize, and transform data from many sources. Their primary purpose is to combine datasets into a unified format, which improves data accessibility and management. This unified view makes it easier to extract insights through business intelligence (BI) processes.
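The core of combining datasets into a unified format is a per-source field mapping plus a shared key for matching records. The sketch below shows that idea in plain Python. The two sources, their field names, and the choice of email address as the matching key are all hypothetical.

```python
# Hypothetical source records with different field names and formats.
crm_contacts = [{"Email": "ANN@EXAMPLE.COM", "FullName": "Ann Lee"}]
shop_customers = [{"email_address": "ann@example.com", "orders": 3},
                  {"email_address": "bo@example.com", "orders": 1}]

# Per-source mapping from source field -> unified field.
MAPPINGS = {
    "crm": {"Email": "email", "FullName": "name"},
    "shop": {"email_address": "email", "orders": "order_count"},
}


def unify(source, records):
    """Rename each record's fields to the unified schema, dropping unmapped ones."""
    mapping = MAPPINGS[source]
    return [{mapping[k]: v for k, v in r.items() if k in mapping} for r in records]


def integrate(**sources):
    """Merge records from all sources into one profile per normalized email."""
    profiles = {}
    for source, records in sources.items():
        for record in unify(source, records):
            key = record["email"].lower()  # shared identifier across systems
            profile = profiles.setdefault(key, {"email": key})
            profile.update({k: v for k, v in record.items() if k != "email"})
    return profiles


unified = integrate(crm=crm_contacts, shop=shop_customers)
```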

3. Workflow Automation Tools

Workflow automation is the use of software to carry out specific tasks without human input. It increases consistency and speed, and it reduces manual, repetitive work.

4. Data Pipeline Tools

A data pipeline is a series of automated stages that prepare data for analysis. It collects data from different sources, such as IoT devices, applications, and digital channels, and then transforms it, for example by sorting, filtering, and aggregating it, so you can analyze it for insights. Some data pipelines also support big data and machine learning workloads.
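The successive stages of a pipeline can be modeled as chained Python generators, so each reading flows through every stage without the whole dataset being held in memory. The sketch below uses a hypothetical IoT feed of `device,celsius` lines and a hypothetical validity range for the readings.

```python
from statistics import mean
from typing import Iterable, Iterator


def collect(lines: Iterable[str]) -> Iterator[dict]:
    """Stage 1: parse raw 'device,celsius' lines from a hypothetical IoT feed."""
    for line in lines:
        device, value = line.split(",")
        yield {"device": device.strip(), "celsius": float(value)}


def clean(readings: Iterable[dict]) -> Iterator[dict]:
    """Stage 2: filter out physically implausible sensor values."""
    for r in readings:
        if -50 <= r["celsius"] <= 60:
            yield r


def summarize(readings: Iterable[dict]) -> dict:
    """Stage 3: average temperature per device, sorted by device name."""
    by_device: dict = {}
    for r in readings:
        by_device.setdefault(r["device"], []).append(r["celsius"])
    return {d: round(mean(v), 2) for d, v in sorted(by_device.items())}


raw = ["sensor-b, 21.5", "sensor-a, 19.0", "sensor-b, 999", "sensor-a, 20.0"]
print(summarize(clean(collect(raw))))
# {'sensor-a': 19.5, 'sensor-b': 21.5}
```

Because each stage only depends on the one before it, you can add, remove, or reorder stages without touching the others.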

5. Data Quality And Cleansing Tools

Data quality and cleansing tools are essential for providing you with correct and trustworthy data. Data cleansing, also known as data scrubbing, is the process of finding and fixing incomplete, inaccurate, and erroneous data using automated methods. These tools help you correct mistakes and remove incorrect entries. They also enable you to address common problems such as missing values, mistyped entries, and misplaced data.
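The sketch below shows what automated cleansing of missing values, mistyped entries, and duplicates can look like in plain Python. The field names, the country lookup table, and the valid age range are hypothetical assumptions for illustration.

```python
from typing import List, Optional

# Hypothetical lookup for common country misspellings and aliases.
COUNTRY_FIXES = {"usa": "US", "u.s.": "US", "united states": "US", "uk": "GB"}


def parse_age(raw: str) -> Optional[int]:
    """Return a plausible integer age, or None for missing or invalid entries."""
    try:
        age = int(raw.strip())
    except ValueError:
        return None  # empty or non-numeric entry, e.g. "forty"
    return age if 0 < age < 120 else None  # out-of-range values treated as invalid


def cleanse(records: List[dict]) -> List[dict]:
    """Normalize fields, flag bad values as None, and drop duplicate emails."""
    cleaned, seen = [], set()
    for r in records:
        email = r.get("email", "").strip().lower()
        if not email or email in seen:
            continue  # drop rows without an email and repeats of an email already kept
        seen.add(email)
        country = r.get("country", "").strip()
        cleaned.append({
            "email": email,
            "age": parse_age(r.get("age", "")),
            "country": COUNTRY_FIXES.get(country.lower(), country.upper() or "UNKNOWN"),
        })
    return cleaned


rows = [
    {"email": " Ann@Example.com ", "age": "34", "country": "usa"},
    {"email": "ann@example.com", "age": "35", "country": "US"},  # duplicate
    {"email": "bo@example.com", "age": "forty", "country": "uk"},
    {"email": "", "age": "20", "country": "FR"},                 # missing key
    {"email": "cy@example.com", "age": "250", "country": ""},
]
result = cleanse(rows)
```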

Choosing the Right Data Automation Tools

The right automation tool is essential for optimizing workflow efficiency and ensuring robust data integration. Here are some key factors to consider when choosing a data automation tool.

1. Ease of Use

The tool should have an intuitive, user-friendly interface with a minimal learning curve, allowing you to set up and manage automation workflows without extensive technical knowledge.

2. Data Security and Compliance

You must verify that the tool meets robust security and compliance standards. Look for security measures such as encryption and access controls, as well as compliance with the regulations that apply to your data.

3. Support and Documentation

Comprehensive support and documentation are essential for successful tool adoption. A detailed knowledge base, accessible tutorials, and responsive customer support can significantly improve productivity. Clear guidelines help you troubleshoot errors quickly and minimize disruptions.

4. Cost

Before finalizing the tool, analyze its pricing structure to determine whether it suits your budget. Consider initial setup and ongoing expenses to ensure the tool offers long-term value.

Summary

The landscape of data automation tools is diverse, offering different features and functionalities to simplify your analytics journey. The above-listed tools will help you automate and streamline your data management workflow. However, selecting the appropriate one depends on your business requirements.

FAQs

What are the Benefits of Using Data Automation Tools?

Data automation tools help you streamline repetitive tasks and accelerate data processing. They improve workflows, reduce manual errors, and ensure data consistency. These tools also enable scalable data integration, giving you more time for strategic decision-making.

Is ETL a Data Automation Tool?

ETL (Extract, Transform, Load) is a process rather than a single tool, but ETL tools are a type of data automation tool. They extract data from various sources, let you transform it, and load it into storage systems. By automating these tasks, ETL tools streamline data movement and reduce manual effort.
