Same Airbyte, Different Footprint: One Catalog Across Data Pipeline Deployments

✨ AI Generated Summary

Airbyte offers a unified data pipeline platform that delivers identical functionality and access to 600+ connectors across cloud, hybrid, and on-premises deployments, eliminating the typical trade-offs between compliance and capability. Its architecture separates the control plane (cloud-hosted) from the data plane (customer-managed), enabling consistent pipeline definitions, monitoring, RBAC, and upgrades regardless of environment.

- Single connector catalog and Docker-based integrations ensure uniform configuration and operation across all environments.

- Supports compliance needs like GDPR and HIPAA by keeping sensitive data local while maintaining centralized orchestration and monitoring.

- Reduces DevOps overhead by avoiding parallel stacks, enabling seamless migration, and simplifying audit and governance processes.

You've been forced into this choice before: keep sensitive data on-premises to satisfy compliance teams, or migrate everything to the cloud for the connectors and automation your team actually needs. Most data platform vendors make you pick sides. Their "enterprise" version runs in your data center but lacks half the connectors, while their "cloud" offering has every integration you want but won't touch your regulated workloads.

Airbyte works differently. Whether you deploy Airbyte Cloud, run a hybrid control plane, or operate a fully air-gapped environment, you get the same platform with access to the same 600+ connectors. Your deployment footprint changes based on compliance requirements, but the functionality stays identical. You don't lose CDC replication, monitoring capabilities, or connector quality just because an auditor requires on-premises data processing.

This consistency isn't accidental. It's built into Airbyte's architecture. Here's how that unified approach works and why it matters for teams managing data across multiple environments.

What Makes Data Pipeline Deployment So Complex in Enterprises?

You probably run data pipelines in more than one place: a public cloud account, a private Kubernetes cluster, maybe an air-gapped data center. Each environment has its own network rules, identity systems, and compliance checklists. When the control plane that schedules jobs sits in one network while the data plane that moves records lives elsewhere, even a simple pipeline becomes a maze of firewall rules and service accounts.

Most legacy vendors respond by splitting their roadmaps: "cloud-only" features in SaaS, pared-down agents on-prem. The result is two products that share a logo but not much code. That fragmentation forces teams to:

- Juggle different UIs, upgrade cycles, and support teams for the same logical pipeline

- Write custom scripts just to keep monitoring dashboards in sync across environments

- Rebuild and redeploy each hotfix in every environment, stretching DevOps bandwidth

- Maintain two divergent stacks with separate failure modes and integration versions

Enterprises tell us they want the same connectors, the same alerts, and the same role-based access controls everywhere. A single library of 600+ integrations available in all footprints eliminates debates over which team "owns" a source or whether a feature will ever arrive on-premises.

Consider a global manufacturer that runs factory systems in Germany but pushes analytics to a U.S. Snowflake warehouse. Local GDPR rules require processing on European soil, yet the business insists on near-real-time reporting. Without one pipeline definition that travels intact from on-premises to cloud, you end up maintaining two divergent stacks. Until deployment stops dictating capability, complexity remains the default tax on your data team.

How Airbyte Unifies Data Pipeline Deployments Across Environments

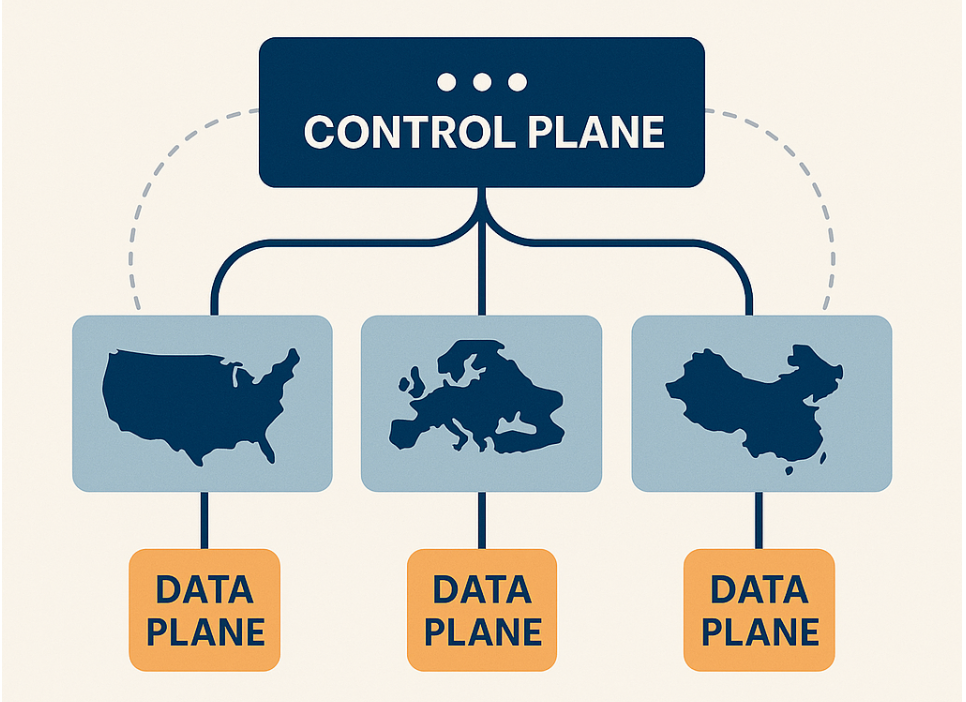

Moving from fragmented deployments to unified operations requires a fundamentally different architectural approach. Airbyte's architecture splits control from execution to deliver consistent functionality across all environments. A cloud-hosted control plane handles orchestration, scheduling, and metadata, while a customer-managed data plane runs sync jobs inside your network. This design enables Airbyte Cloud, Airbyte Enterprise Flex, or fully air-gapped deployments without feature limitations.

The single control plane defines pipelines once and runs them anywhere. Each data plane opens only outbound connections, ensuring data and credentials never cross inbound firewall boundaries. This is essential for GDPR or HIPAA environments. Container-based integrations use Docker images, so the same runtime executes in your Kubernetes cluster, private VPC, or Airbyte Cloud with identical binary execution.
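The outbound-only pattern described above can be illustrated with a small in-memory simulation. This is a minimal sketch, not Airbyte's implementation: `ControlPlane`, `DataPlane`, `claim_job`, and `report_status` are hypothetical names invented here, and a real deployment would use authenticated network calls rather than direct method calls. The point it shows is that the data plane always initiates the exchange and sends back only job metadata.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ControlPlane:
    """Hypothetical stand-in for a cloud-hosted scheduler (not Airbyte's API)."""
    pending: deque = field(default_factory=deque)
    statuses: dict = field(default_factory=dict)

    def schedule(self, job_id: str, region: str) -> None:
        self.pending.append({"job_id": job_id, "region": region})

    def claim_job(self, region: str):
        # Invoked by the data plane, modelling an outbound request from its side.
        for job in list(self.pending):
            if job["region"] == region:
                self.pending.remove(job)
                return job
        return None

    def report_status(self, job_id: str, status: str, records_synced: int) -> None:
        # Only job metadata is stored here, never the synced records.
        self.statuses[job_id] = {"status": status, "records_synced": records_synced}


@dataclass
class DataPlane:
    """Hypothetical customer-managed worker that polls for work in its region."""
    region: str
    control_plane: ControlPlane

    def poll_once(self) -> bool:
        job = self.control_plane.claim_job(self.region)
        if job is None:
            return False
        records = self._run_sync(job)
        # Report a count, not the records themselves.
        self.control_plane.report_status(job["job_id"], "succeeded", len(records))
        return True

    def _run_sync(self, job: dict) -> list:
        # Placeholder for the real sync; the records stay inside this process.
        return [{"id": 1}, {"id": 2}, {"id": 3}]


if __name__ == "__main__":
    cp = ControlPlane()
    cp.schedule("job-1", "eu")
    cp.schedule("job-2", "us")
    DataPlane("eu", cp).poll_once()
    print(cp.statuses)
```

Because the control plane never calls into the data plane, no inbound firewall rule is needed in this model; the EU worker simply ignores jobs tagged for other regions.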

All 600+ integrations in Airbyte's catalog ship with identical code and specifications. A Salesforce-to-Snowflake sync behaves consistently whether triggered from SaaS or on-premises deployments. The Airbyte Protocol and its specification standardize discovery, configuration, and state management across all environments.
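To make the role of the protocol more concrete, here is a rough sketch of how a consumer might read a connector's output. It assumes the connector emits newline-delimited JSON messages with a `type` field such as `RECORD`, `STATE`, or `LOG`, which matches the publicly documented Airbyte Protocol as far as I know; the exact field layout should be checked against the current specification. The function name `split_messages` and the sample lines are made up for illustration.

```python
import json


def split_messages(lines):
    """Separate connector output into records and the latest state checkpoint.

    Assumes one JSON message per line with a top-level "type" field.
    """
    records = []
    latest_state = None
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            message = json.loads(line)
        except json.JSONDecodeError:
            continue  # ignore anything that is not a JSON message
        if not isinstance(message, dict):
            continue
        kind = message.get("type")
        if kind == "RECORD":
            records.append(message["record"])
        elif kind == "STATE":
            # Later checkpoints replace earlier ones.
            latest_state = message["state"]
    return records, latest_state


if __name__ == "__main__":
    # Hypothetical sample output, not captured from a real connector.
    sample = [
        '{"type": "LOG", "log": {"level": "INFO", "message": "starting"}}',
        '{"type": "RECORD", "record": {"stream": "users", "data": {"id": 1}, "emitted_at": 0}}',
        '{"type": "STATE", "state": {"data": {"cursor": "2024-01-01"}}}',
    ]
    print(split_messages(sample))
```

Since the same message format comes out of the same image in every footprint, a reader like this would not need an environment-specific branch.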

Consider an enterprise running Flex: European customer data processes in an EU-only data plane within a private VPC, while U.S. workloads run in Airbyte Cloud. With this deployment model, you get:

- One UI to access and manage all pipelines regardless of location

- Identical RBAC policies applied across both environments

- Unified dashboard monitoring every job through a single pane of glass

- Version upgrades that deploy from the control plane while local clusters pull updated images and continue processing without manual intervention

Shared catalog definitions, configuration schemas, and images eliminate the need for parallel integration sets or pipeline rewrites when compliance requirements change. Airbyte's container-native, control-plane/data-plane architecture maintains operational consistency, letting you focus on data movement rather than environment-specific adaptations.

How a Single Connector Catalog Simplifies Governance and Scale

This architectural foundation becomes especially powerful when you consider how a unified catalog transforms day-to-day operations. Your day gets easier when every pipeline, no matter where it runs, pulls from the same library of 600+ Airbyte integrations. A unified catalog removes guesswork from deployment and gives you repeatable patterns you can trust.

Consistent Configuration Across Environments

Because each integration is packaged as a Docker image that follows the Airbyte Specification, you configure and run it the same way whether the data plane sits in Airbyte Cloud or an air-gapped Kubernetes cluster. The platform renders the UI dynamically from the integration's spec, so the form fields you see in a SaaS workspace are identical to those you see on-premises. That uniform experience streamlines pipeline creation and cuts onboarding time for new engineers.
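A small sketch may help show why spec-driven rendering yields identical forms everywhere. It assumes the integration's spec carries a JSON Schema under a `connectionSpecification` key and marks secrets with `airbyte_secret`, which is my understanding of the public connector spec format; the `form_fields` helper and the sample spec are hypothetical and are not Airbyte's rendering code.

```python
def form_fields(spec: dict) -> list:
    """Derive a flat list of form fields from a connector spec's JSON Schema."""
    schema = spec["connectionSpecification"]
    required = set(schema.get("required", []))
    fields = []
    for name, prop in schema.get("properties", {}).items():
        fields.append(
            {
                "name": name,
                "label": prop.get("title", name),
                "type": prop.get("type", "string"),
                "required": name in required,
                # Secret fields would be masked in the UI.
                "masked": bool(prop.get("airbyte_secret", False)),
            }
        )
    return fields


if __name__ == "__main__":
    # Hypothetical spec for illustration only.
    sample_spec = {
        "connectionSpecification": {
            "type": "object",
            "required": ["host", "password"],
            "properties": {
                "host": {"type": "string", "title": "Host"},
                "port": {"type": "integer", "title": "Port"},
                "password": {"type": "string", "title": "Password", "airbyte_secret": True},
            },
        }
    }
    for f in form_fields(sample_spec):
        print(f)
```

The function depends only on the spec, so any environment that loads the same spec version would produce the same field list.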

Simplified Audit and Compliance

Consistency also pays off during audits. Versioned specs and the metadata stored in the catalog file give risk teams a single place to verify schemas, sync modes, and lineage. Note that when an integration is updated, its new image tag and spec do not automatically propagate across environments; keeping them in sync requires additional orchestration or deployment automation tooling.

Reduced DevOps Overhead

From a DevOps perspective, a single catalog removes the need to build or validate environment-specific binaries. You no longer maintain parallel CI pipelines just to repackage the same integration for staging, prod, and on-premises clusters. The result is fewer moving parts and faster incident response.

This approach delivers a smaller review surface, with one set of specs and images to scan, sign, and store. Your RBAC policies map to the same integration IDs everywhere, creating uniform access controls across environments. Logs and metrics can be configured to flow into the control plane in a standardized format, although the exact process and format may vary with where data is processed and how monitoring is set up.

Example:

Consider a bank that runs CDC from an on-premises Oracle cluster into Snowflake hosted in the cloud. With Airbyte Enterprise Flex, the bank can manage that pipeline through a unified UI and consistent configuration across environments. Each distinct environment (such as a U.S. sandbox, EU production VPC, or disaster-recovery site) still requires its own deployment and configuration, including network and firewall settings.

Centralized monitoring completes the picture. Job status, error traces, and throughput metrics from every data plane roll up to the control plane. You keep full visibility while sensitive records stay local, satisfying governance teams and letting you scale pipelines, not process overhead.
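As an illustration of metadata-only roll-up, the sketch below aggregates job reports from several data planes into one summary. The report shape (`data_plane`, `status`, `records_synced`) and the `roll_up` function are assumptions made for this example; they are not Airbyte's metrics schema. Note that the reports contain counts and statuses only, never record contents.

```python
def roll_up(job_reports):
    """Aggregate per-job metadata into one summary per data plane."""
    summary = {}
    for report in job_reports:
        plane = summary.setdefault(
            report["data_plane"], {"jobs": 0, "records_synced": 0, "by_status": {}}
        )
        plane["jobs"] += 1
        plane["records_synced"] += report["records_synced"]
        status = report["status"]
        plane["by_status"][status] = plane["by_status"].get(status, 0) + 1
    return summary


if __name__ == "__main__":
    # Hypothetical reports from two data planes.
    reports = [
        {"data_plane": "eu-vpc", "status": "succeeded", "records_synced": 1200},
        {"data_plane": "eu-vpc", "status": "failed", "records_synced": 0},
        {"data_plane": "us-cloud", "status": "succeeded", "records_synced": 800},
    ]
    for name, stats in roll_up(reports).items():
        print(name, stats)
```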

Why Unified Deployment Architecture Future-Proofs Data Operations

Unified deployment architecture future-proofs your data operations by eliminating the rebuild tax that comes with changing business requirements. When compliance rules shift or new regions come online, you adapt infrastructure without touching pipeline logic.

A unified approach delivers:

- No vendor lock-in: Every deployment runs the same 600+ integrations, so migration never forces you to drop a source or destination

- Infrastructure portability: Move pipelines between Airbyte Cloud and private Kubernetes by changing deployment YAML, not business logic

- Compliance adaptability: Add regional data planes for GDPR or data residency requirements without rewriting code or retraining staff

- Operational consistency: Manage every environment through the same UI, API, and Terraform provider as your infrastructure footprint evolves
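The portability point in the list above can be sketched as a pipeline definition in which the deployment target is just one field. The `Pipeline` dataclass and its field names are hypothetical and do not reflect Airbyte's actual configuration schema; the sketch only shows that moving a pipeline changes where it runs while leaving the rest of the definition untouched.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class Pipeline:
    """Hypothetical pipeline definition; field names are illustrative only."""
    name: str
    source: str
    destination: str
    sync_mode: str
    data_plane: str


def move_pipeline(pipeline: Pipeline, target_data_plane: str) -> Pipeline:
    """Return a copy of the pipeline that runs on a different data plane."""
    return replace(pipeline, data_plane=target_data_plane)


def changed_fields(before: Pipeline, after: Pipeline) -> set:
    """List which fields differ between two pipeline definitions."""
    old, new = asdict(before), asdict(after)
    return {key for key in old if old[key] != new[key]}


if __name__ == "__main__":
    cloud = Pipeline("orders", "salesforce", "snowflake", "incremental", "airbyte-cloud")
    private = move_pipeline(cloud, "eu-private-k8s")
    print(changed_fields(cloud, private))
```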

The platform services that schedule jobs, store state, and expose the UI remain identical across environments. In a hybrid model, Airbyte hosts the control plane while your data plane stays in your VPC, so protected records never leave your network.

The result is that your data platform evolves with compliance rules and growth targets instead of forcing you to choose between capability and control.

Ready to Deploy Data Pipelines Without Compromise?

Airbyte's unified architecture proves that deployment flexibility doesn't require functional compromise. By maintaining identical capabilities across SaaS, hybrid, and air-gapped environments, teams can make infrastructure decisions based on compliance and business needs rather than feature availability. The same 600+ integrations, monitoring tools, and governance controls work consistently whether data stays on-premises or moves to the cloud.

Airbyte Enterprise Flex delivers cloud orchestration with customer-controlled data planes, keeping your data in your VPC or on-premises while eliminating Kubernetes management overhead. Same Airbyte, same connectors, same quality. No trade-offs between compliance and capability. Talk to Sales about hybrid deployment for your regulated environment.

Frequently Asked Questions

Can I migrate pipelines between deployment models without rewriting them?

Yes. Airbyte's unified architecture means pipelines defined in one environment work identically in another. You change deployment YAML, not business logic. The same connector images, specifications, and configuration schemas work across Airbyte Cloud, hybrid, and on-premises deployments.

How does Airbyte maintain the same connector quality across all deployments?

Every connector follows the Airbyte Protocol and ships as a Docker container. The same binary executes in your Kubernetes cluster, private VPC, or Airbyte Cloud. Version upgrades deploy from the control plane, ensuring consistent functionality regardless of where the data plane runs.

What happens when I need to add a new data plane in a different region?

You deploy a new data plane in the target region while the existing control plane continues orchestrating all jobs. Regional expansion doesn't require pipeline rewrites or staff retraining. One control plane manages multiple data planes globally while respecting data residency requirements.

Do I lose monitoring capabilities when data processing happens on-premises?

No. Job status, error traces, and throughput metrics from every data plane roll up to the control plane. You maintain full visibility through a unified dashboard while sensitive records stay in your network. The control plane never accesses your data, only metadata about job execution.
